PLAYING WITH ROBOTS AND AVATARS IN MIXED REALITY SPACES

V. Antunes, R. Wanderley, T. Tavares, P. Alsina, G. Lemos, L. Gonçalves

Universidade Federal do Rio Grande do Norte

DCA-CT-UFRN, Campus Universitário, Lagoa Nova, 59072-970, Natal, RN, Brasil

Keywords:

Robots, Avatars, Visual Positioning, Mixed Reality

Abstract:

We propose a hyperpresence system designed for broad manipulation of robots and avatars in mixed reality spaces. The idea is to let human users play with robots and avatars over the Internet through a mixed reality interface. We combine hardware and software platforms to control multi-user agents in a mixed reality environment. We introduce several new behaviors, such as “Remote Control”, “Augmented Reality” for robots, and robots “Incarnating” avatars and human users connected through the Internet. A human user can choose which role he or she wants to play. The proposed system involves a complex implementation of heterogeneous software and hardware platforms, including their integration into a real-time system working over the Internet. To validate the system, we performed several tests, including experiments using different computer architectures and different operating systems.

1 INTRODUCTION

According to the Computer-Supported Cooperative Work (CSCW) approach, Moran and Anderson (Moran et al., 1990) discuss three fundamental ideas: Shared Workspaces, Coordinated Communication and Informal Interaction. Shared Spaces can represent CSCW applications in terms of spatiality, transportation and artificiality (Milgram, 1994; Benford, 2000). Unlike usual applications, Virtual Reality replaces the physical world, whereas Augmented Reality explores applications that seamlessly mix virtual and real features. Ressler (Ressler, 2000) presents an environment for collaborative engineering. This environment integrates a multi-user synthetic environment with physical robotic devices. The goal is to provide a physical representation of the 3D environment, enabling the user to perform direct, tangible manipulations of the devices. The implementation uses robots built with LEGO kits, combined with VRML and Java technologies. It also uses a vision processing system for computing the orientation and position of the robots in the real environment. The Augmented Reality (AR) paradigm (Moran et al., 1990) enhances physical reality by integrating virtual objects into the physical world, so that they become, in the visual sense, an equal part of the natural environment. The Collaborative AR Games project is a good example (Moran et al., 1990). It was designed to demonstrate the use of AR technologies in a collaborative entertainment application. It is a multi-player game simulation in which the users play with cards. The goal of the game is to match objects that logically belong together, such as an alien and a flying saucer. When cards containing logical matches are placed side by side, an animation involving the objects on the cards is triggered.

We propose a system that is more general and closer to reality than the ones above, suitable for applications in remote working spaces where real-time interaction is a requirement. We use autonomous robots with their own perception and decision capabilities and provide a way for them to interact on-line with people and objects in the real and virtual worlds. In certain cases, the human, through an avatar, can interfere in the robot's autonomous, attentional behavior and guide its motion, for example to examine more closely an object detected at a certain place in the environment. In other cases, the robot can guide the human user through the real environment (as in a museum). Other humans can enter the environment and also interact with the robot or with other human users through their avatars. In this way, robots, avatars and human users can live in this environment, interacting with each other as necessary. The system's perception of the world is constantly updated to reflect the current world status.

Antunes V., Wanderley R., Tavares T., Alsina P., Lemos G. and Gonçalves L. (2004).

PLAYING WITH ROBOTS AND AVATARS IN MIXED REALITY SPACES.

In Proceedings of the First International Conference on Informatics in Control, Automation and Robotics, pages 318-321

DOI: 10.5220/0001133603180321

Copyright © SciTePress

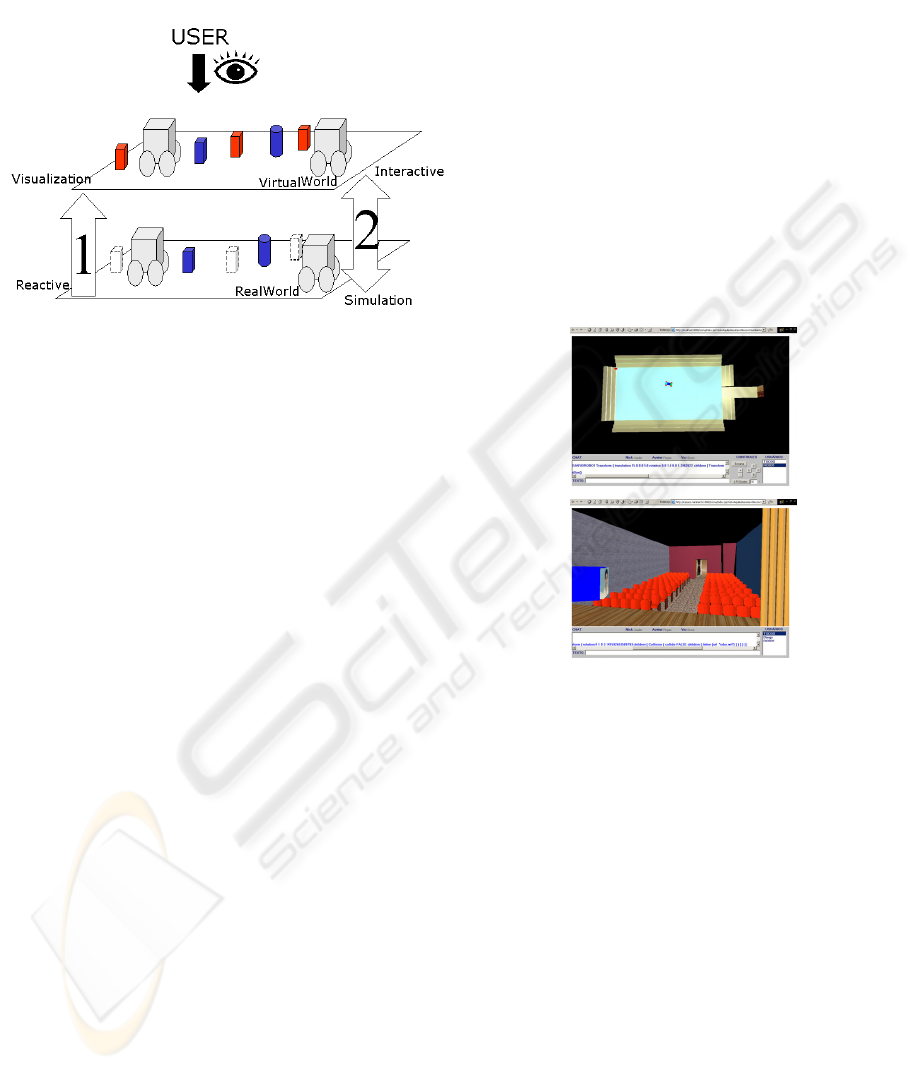

Figure 1: HYPERPRESENCE overview.

2 THE HYPERPRESENCE SYSTEM

Figure 1 illustrates the conceptual model of the HYPERPRESENCE system. It follows the basic idea of having real and virtual worlds representing the same situation. In phase 1 (arrow 1 on the left of the figure) we have a unidirectional flow from the real to the virtual environment. The virtual interface is used for visualization of reactive or intended actions performed by real objects or robots. The second phase promotes interaction between these two worlds. Arrow 2 (on the right) illustrates this phase, in which users have interactive options to create and manipulate virtual objects that are perceived in the real world and influence the actions of the agents. In this case, we have a platform for simulating the behavior of the agents in the real world.

Our robots operate in real environments, which could be any place that provides interaction with its virtual version. Here we adopted an arena, a robot soccer game, and a museum (Casa da Ribeira) located in the city of Natal, Brazil. All environments are closed places in the real world, limited by walls, in which we can have real objects such as robots and obstacles. The robots are autonomous vehicles built with hardware technology developed by the LEGO consortium. In this implementation, the obstacles are colored cubes or cylinders. We can have more than one robot in the environment, so a robot can be a dynamic obstacle for another one. With this hardware setup, we can simulate many kinds of tasks, such as: (1) moving around and avoiding obstacles; (2) looking for special objects (such as colored cubes or cylinders); (3) identifying and catching special objects; (4) manipulating objects.

To represent the real world and its objects, we implemented virtual versions of the above real environments using VRML (see Figure 2). The virtual version of Casa da Ribeira includes the whole gallery and the cultural works on exhibition. The resulting model is published on an Internet site where virtual users can visit, look around, and interact with the material (evaluating it and sending comments). Each real object is represented in the 3D interface by a corresponding avatar. Communication between the real version and any (restricted) number of virtual versions is provided through an Internet connection. Avoiding the transmission of images and other bulky information is therefore one of the key restrictions we must pay attention to.
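To illustrate why sending poses instead of images keeps the traffic small, the sketch below packs one object update into a fixed-size binary record. The wire format, the field names and the function names are assumptions made for this example; the paper does not specify the message layout used by the system.

```python
import struct

# Hypothetical wire format (not from the paper):
# object id (uint16) followed by x, y and heading (three float32 values),
# in network byte order with no padding.
POSE_FORMAT = "!Hfff"
POSE_SIZE = struct.calcsize(POSE_FORMAT)  # bytes per update


def encode_pose(object_id, x, y, theta):
    """Pack one pose update into a compact binary message."""
    return struct.pack(POSE_FORMAT, object_id, x, y, theta)


def decode_pose(payload):
    """Unpack a message produced by encode_pose."""
    object_id, x, y, theta = struct.unpack(POSE_FORMAT, payload)
    return object_id, x, y, theta


if __name__ == "__main__":
    message = encode_pose(3, 1.5, -2.25, 0.5)
    print(len(message), decode_pose(message))
```

Under this assumed layout each update takes 14 bytes, whereas a single uncompressed 320x240 RGB frame would take 230,400 bytes, which is the kind of saving the restriction above is after.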

Figure 2: Virtual versions of: robot soccer and arena (top), Casa da Ribeira cultural space (middle), and its theater (bottom).

2.1 The architectural model

Figure 3 illustrates the design of the Hyperpresence system, relating the main architectural components and connectors. Briefly, the 3D Interface component represents the 3D virtual interface and the 3D objects. Its major function is the real-time visualization of events in the real environment. The VRML Multi-User Client is the observer of events for the 3D Interface. For each event generated for an avatar in the virtual environment, the VRML Multi-User Client sends a message to the VRML Multi-User Server component, which controls the VRML Multi-User Client components. For each real object (robot or obstacle) it provides a geometric 3D representation, an avatar. Each avatar is treated by the VRML Multi-User Server as


a client connected to this server. The VRML Multi-User Server feeds each client with the corresponding information (position and orientation) from the Visual Server and Hardware Server components, so that it can keep both environments synchronized. Also, if a virtual avatar representing a user enters the scene, its position and orientation are constantly updated. The Hardware Server component is responsible for getting information from and sending information to the autonomous real entities, that is, the robots in the real world, in real time. Although the robots are implemented as reactive units, the Hardware Server can send messages to each robot and update its inputs according to application needs. This component also sends messages to the VRML Multi-User Server. The Visual Server component acquires or determines the position and orientation of the real objects and sends them to the other components. To do that, the Visual Server component has a video camera system and uses image processing and recognition algorithms (discussed later) to map the color marks carried by each real object to its position and orientation. The Real Environment component is the physical environment and the system of real objects: robots, cubes, cylinders and walls. Each robot can have its own implementation code, which depends on the task goal to be achieved. We can also run a different task on each robot; for example, one robot can move around and/or pick up a can. We note that all of the above components and connectors must operate in real time. Some of them operate synchronously, such as the Visual Server, and some of them asynchronously.
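The mapping from color marks to position and orientation performed by the Visual Server can be sketched as follows. This is only an illustration under stated assumptions: it supposes that each robot carries two distinct color marks (one at the front, one at the rear) and that the pixels of each mark have already been segmented. The paper does not give the actual algorithm, and the function names are hypothetical.

```python
import math


def centroid(pixels):
    """Mean (x, y) of a non-empty list of pixel coordinates."""
    n = len(pixels)
    return (sum(p[0] for p in pixels) / n, sum(p[1] for p in pixels) / n)


def pose_from_marks(front_pixels, rear_pixels):
    """Estimate (x, y, theta) of an object from two segmented color marks.

    Assumption: the position is the midpoint of the two mark centroids and
    the heading points from the rear mark to the front mark. Coordinates are
    in image space, so y grows downwards unless the caller flips it.
    """
    fx, fy = centroid(front_pixels)
    rx, ry = centroid(rear_pixels)
    x = (fx + rx) / 2
    y = (fy + ry) / 2
    theta = math.atan2(fy - ry, fx - rx)
    return x, y, theta


if __name__ == "__main__":
    front = [(10, 0), (12, 0)]
    rear = [(0, 0), (2, 0)]
    print(pose_from_marks(front, rear))
```

Only the resulting three numbers per object need to be forwarded to the other components, which fits the requirement of not sending images over the network.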

Figure 3: System Architecture.

3 EXPERIMENTS, DEMONSTRATIONS AND RESULTS

In the first experiment, a user operating a remote computer, located far away from the robot, runs the remote control behavior to control the robot through an open Internet connection to the robot hardware. The real and virtual robots performed the same remote-controlled motion, following the same path. In this case, the control was under the command of a human user. In the following experiment, a point in the environment (the red ball in the top-left part) is set as a goal, and the robot plans a trajectory and follows it in order to reach that point. Both the real and virtual versions of the robot could be seen moving in real time while the trajectory was traversed. Next, we ran an experiment in which the real robot incarnates the virtual one. In this case, the virtual robot moves from one point to another, commanded by the human user (this is different from remote control, since a virtual point is given to the virtual version). The real robot performed this task well on the real side. In the next experiment, we simulate remote visualization in the cultural space Casa da Ribeira. The aim of Casa da Ribeira is to promote cultural activities, especially using its gallery and theater. In its gallery people can see photos, pictures or sculptures. The multi-user server allows each user to notice the presence of other virtual users, in the same way as in the real cultural center. With this, virtual users can even communicate with each other during the visit.
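For the goal-point experiment described above, the paper does not state which controller drives the robot to the target. As a rough illustration only, the sketch below simulates a simple proportional go-to-goal rule for a differential-drive robot; the gains, the time step and the function names are assumptions chosen for the example, not values from the system.

```python
import math


def go_to_goal_step(x, y, theta, goal_x, goal_y, dt=0.1, k_lin=0.5, k_ang=2.0):
    """Advance a simulated robot pose by one control step towards the goal."""
    dx, dy = goal_x - x, goal_y - y
    distance = math.hypot(dx, dy)
    # Heading error wrapped to [-pi, pi].
    error = math.atan2(dy, dx) - theta
    error = math.atan2(math.sin(error), math.cos(error))
    # Move forward only when roughly facing the goal; always turn towards it.
    v = k_lin * distance * max(0.0, math.cos(error))
    w = k_ang * error
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta += w * dt
    return x, y, theta


def drive_to(goal_x, goal_y, pose=(0.0, 0.0, 0.0), tolerance=0.05, max_steps=2000):
    """Return the list of (x, y) points visited until the goal is reached."""
    x, y, theta = pose
    path = [(x, y)]
    for _ in range(max_steps):
        if math.hypot(goal_x - x, goal_y - y) < tolerance:
            break
        x, y, theta = go_to_goal_step(x, y, theta, goal_x, goal_y)
        path.append((x, y))
    return path


if __name__ == "__main__":
    path = drive_to(1.0, 1.0)
    print(len(path), path[-1])
```

Because the same pose sequence can be fed both to the real robot and to its avatar, a rule of this kind is compatible with the observation that both versions are seen moving along the same trajectory.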

Figure 4: Real robot performing on the lab floor.

The multi-user server is also used to control robots in the real environment. That is, an autonomous robot can be in the real Casa da Ribeira room, interacting with the real environment, while a synchronized version of this robot is on the virtual side. Figures 4 and 5 show the robots running this experiment. In this case, we used the robot located in our lab as a stand-in for the one in the real theater, with its avatar in the virtual theater room. We plan to run the other side of this experiment soon, using a robot located in the real Casa da Ribeira theater. Note that we can use the same infrastructure implemented for the theater to stage a mixed reality play, in which real actors (people) interact with virtual actors (robots). These virtual actors can be any person, located anywhere, incarnating a robot avatar. Another interesting application of this structure is to provide a real-virtual bridge, in other words, a communication tool for connecting people located in different spaces. In that way, the robots will be used as the connection channel.

ICINCO 2004 - ROBOTICS AND AUTOMATION

Figure 5: Virtual robot performing in the theater, in a synchronized way.

4 CONCLUSIONS AND PERSPECTIVES

We developed and tested several tools for controlling both virtual and real versions of robots and users connected through the Internet. The developed tools allow us to provide interactions among users, robots and avatars through the WWW in several ways. One application we devised that involves both the real and virtual sides is to have a robot in a real museum act as the avatar of a human connected through the Internet to a virtual museum. In this case, the robot could have an autonomous behavior (following a given museum tour route), learned through several trials in which the robot takes user preferences into account. Then, as a human enters the virtual museum, a robot is allocated to him or her in the real version of the museum. The robot starts following the traced route, according to the user's preferences. As the robot approaches a picture, the human user may use the remote control, for example, to examine that picture in more detail. Note that several robots could be present, representing several humans. When no more robots are available, a new user could be represented by a virtual avatar only (without a physical entity representing it). In this case, the robots must know that there is another user (avatar) close to them (augmented reality for the robots). We will shortly run experiments involving robots performing as actors in the Casa da Ribeira theater. Besides the above applications, several others involving a mix of real and virtual parts could take place. As an example of a future application, we plan to use this system to broadcast robot contests over the Internet. This will include robot soccer and other kinds of games for robots.
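The allocation policy described for the museum scenario (one robot per entering user while robots remain, a virtual-only avatar afterwards) can be sketched as a small pool. The class and method names are hypothetical and the first-come, first-served order is an assumption; the paper describes the policy only in prose.

```python
class RobotPool:
    """Assigns real robots to entering users; falls back to virtual-only avatars."""

    def __init__(self, robot_ids):
        self.free = list(robot_ids)   # robots not yet representing anyone
        self.assigned = {}            # user -> robot id, or None if virtual-only

    def enter(self, user):
        """Allocate a robot to the user if one is free, otherwise None."""
        robot = self.free.pop(0) if self.free else None
        self.assigned[user] = robot
        return robot

    def leave(self, user):
        """Release the user's robot, if any, back to the pool."""
        robot = self.assigned.pop(user)
        if robot is not None:
            self.free.append(robot)

    def virtual_only_users(self):
        """Users the robots must perceive only through the virtual side."""
        return [u for u, r in self.assigned.items() if r is None]


if __name__ == "__main__":
    pool = RobotPool(["robot-1", "robot-2"])
    for name in ["ana", "bruno", "carla"]:
        print(name, pool.enter(name))
    print(pool.virtual_only_users())
```

The list returned by `virtual_only_users` corresponds to the avatars that would have to be reported to the robots as nearby users, which is the "augmented reality for the robots" case mentioned above.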

REFERENCES

S. Ellis. What are Virtual Environments? IEEE Comp. Graphics & Applications, 14(1):17-22, Jan 1994.

T. P. Moran and R. J. Anderson. The Workaday World as a Paradigm for CSCW Design. In Proceedings of the Conf. on Computer-Supported Cooperative Work, CSCW '90 (Los Angeles, CA), pages 381-393. ACM Press, New York, 1990.

P. Milgram and F. Kishino. A Taxonomy of Mixed Reality Visual Displays. IEICE Transactions on Information & Systems, Vol. E77-D, No. 12, pp. 1321-1329, 1994.

S. Ressler, B. Antonishek, Q. Wang, and A. Godil. Integrating Active Tangible Devices with a Synthetic Environment for Collaborative Engineering. National Institute of Standards and Technology, 2000.

A. K. Jain. Fundamentals of Digital Image Processing. Prentice Hall, 1st edn, 1989.

K. R. T. Aires. Desenvolvimento de um sistema de visão global para uma frota de mini-robôs móveis. Master's thesis, Federal University of Rio Grande do Norte, Natal, RN, Brasil.

S. Benford, C. Brown, G. Reynard, and C. Greenhalgh. Shared Spaces: Transportation, Artificiality, and Spatiality. Department of Computer Science, University of Nottingham, NG7 2RD, UK. E-mail: sdb, ccb, gtr, cmg@cs.nott.ac.uk

BRICOS. BrickOS Operating System and Compiler "C" and "C++" for RCX. brickos.sourceforge.net.

LNP: LegOS Network Protocol. legos.sourceforge.net/HOWTO/x405.html
