MODELLING DIALOGUES WITH EMOTIONAL INTERACTIVE AGENTS

Cveta Martinovska, Stevo Bozinovski

Electric Engineering Faculty, Karpos II, 1000, Skopje, Macedonia

Keywords: Intelligent Social Agents, Emotions and Personality

Abstract: The paper describes a possible approach to the design of believable dialogs between users and emotionally intelligent interactive agents capable of conveying emotional, verbal and non-verbal signals. The user's affective profile is built according to the Emotions Profile Index, a standard test in psychiatry and clinical psychology. The agents have to predict and influence the behavior of users through communicative acts. This predictive power relies, to a certain degree, on expecting cooperation and on understanding the users' emotions, personality, interests and other mental states. Dialog automata are used to conceptualize the conversation between the users and the animated agents.

1 INTRODUCTION

There is general agreement that emotion and personality are essential for achieving believable behavior in AI applications that use animated agents as virtual characters for entertainment (Rousseau and Hayes-Roth 1998), as tutors in pedagogical software (Johnson, Rickel, and Lester 2000), or for presentation tasks (André et al. 2000).

Virtual characters may have an impact on users, motivating their responses or increasing their learning abilities in tutoring applications (Lester et al. 2000). Animated agents composed of multimedia elements can present relevant information in a more appealing way and convey gestures and emotional signals that may affect user attitudes.

Besides emotions and personality, verbal and nonverbal behaviors are among the key issues to be addressed in creating believable virtual characters (Cassell and Stone 1999). Coordinated verbal and nonverbal conversational behaviors convey the semantic and pragmatic content of the information through different modalities. Accentuation of certain words, intonation, and gestures synchronized with the spoken utterances of artificial agents serve to reinforce the meaning of the speech. The propositional goals that make up the content of the conversation can be realized in different linguistic styles, which, as Walker and colleagues argue (Walker, Cahn, and Whittaker 1997), may express the agent's character and personality. The agents should therefore be able to coordinate their communicative and expressive behavior.

Another important aspect for enhancing the believability of animated agents is social role awareness, which determines emotion expression and behavioral reactions according to the social context (Prendinger and Ishizuka 2001). For example, when interacting with a buyer, a seller agent has to suppress negative emotions and use a polite form. In a particular social setting, the social distance between the participants and the power that an agent's role has over other roles determine the appropriate behavioral and communicative conventions.
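As an illustration of the seller example above, the following Python sketch shows a simple rule-based display filter. It is not Prendinger and Ishizuka's model: the role pairs, the set of negative emotions, and the names `display_policy`, `CONSTRAINED_PAIRS` and `NEGATIVE_EMOTIONS` are hypothetical choices made only for this example.

```python
# Hypothetical sketch: a rule-based social-role filter for emotion display.
NEGATIVE_EMOTIONS = {"anger", "disgust", "sadness", "fear"}

# Hypothetical (speaker role, listener role) pairs in which the speaker
# must hide negative emotions and switch to a polite register.
CONSTRAINED_PAIRS = {("seller", "buyer")}


def display_policy(felt_emotion: str, speaker_role: str, listener_role: str) -> tuple[str, str]:
    """Return (displayed emotion, linguistic register) for one utterance."""
    if (speaker_role, listener_role) in CONSTRAINED_PAIRS:
        shown = "neutral" if felt_emotion in NEGATIVE_EMOTIONS else felt_emotion
        return shown, "polite"
    # Outside constrained role pairs the felt emotion is shown unchanged.
    return felt_emotion, "plain"


print(display_policy("anger", "seller", "buyer"))  # ('neutral', 'polite')
print(display_policy("joy", "seller", "buyer"))    # ('joy', 'polite')
```

Keeping the convention as a lookup on role pairs separates the social rule from the agent's emotion model, so the felt emotion remains available for internal reasoning even when it is not displayed.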

Recognizing user emotions and personality is one of the key issues in building emotionally intelligent interactive systems. Conati (2002) proposes a probabilistic model based on Dynamic Decision Networks to infer the user's emotions during interaction with an educational game. Other works focus on assessing a specific emotion, such as anxiety in pilots (Hudlicka and McNeese 2002) or stress in car drivers (Healy and Picard 2000).

This paper introduces our approach to the design of believable dialogs between users and animated presenters. An important property of the animated agents is their capability to engage in affective communication with users according to the users' personality. Our affective user-modeling component is based on a standard psychological test, the Emotions Profile Index (EPI) (Plutchik 1980). During the initial interaction with the system, user profiles are built from the users' interests and priorities.

Martinovska C. and Bozinovski S. (2004).
MODELLING DIALOGUES WITH EMOTIONAL INTERACTIVE AGENTS.
In Proceedings of the Sixth International Conference on Enterprise Information Systems, pages 246-249
DOI: 10.5220/0002630502460249
Copyright © SciTePress

For the audio-visual implementation of the virtual agents, we use the programmable interface of the Microsoft Agent package, which includes several predefined characters (Microsoft Agent 1999). The package includes a speech recognizer and a text-to-speech engine.

To illustrate our approach, we present ongoing work on developing animated agents as presenters in an accommodation-renting scenario. The agents' presentations are structured as informative dialogs in which users provide and seek specific types of information. Conversation, as a type of discourse, is used to communicate information, views and feelings in an ordered and structured way.

The same message can be conveyed by a variety of expressions, and the agents have to choose the right expression for a linguistic situation according to their character and personality. For example, an extrovert agent will use more direct speech and expansive gestures. To be considered conversationally competent, the agent has to cooperate with the users by sharing speaking turns with them and introducing topics of interest to them.
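The choice among alternative expressions of one message can be sketched as a lookup keyed by the agent's personality. In this Python sketch, the templates, the gesture assignments (drawn from the gesture set listed in Section 3.1), and the function name `realize` are hypothetical illustrations of the extrovert/introvert contrast described above, not the system's actual phrasing.

```python
# Hypothetical realizations of one communicative goal ("propose an accommodation")
# for two agent personalities. Wording and gestures are illustrative only.
REALIZATIONS = {
    "extrovert": {
        "template": "Take a look at {name} in {location}, you'll love it!",
        "gesture": "wave",  # expansive gesture
    },
    "introvert": {
        "template": "You might consider {name}, which is located in {location}.",
        "gesture": "look",  # restrained gesture
    },
}


def realize(personality: str, name: str, location: str) -> tuple[str, str]:
    """Return (utterance, gesture) expressing the same proposal in the agent's style."""
    style = REALIZATIONS[personality]
    return style["template"].format(name=name, location=location), style["gesture"]


print(realize("extrovert", "Apartment 12", "Karpos"))
print(realize("introvert", "Apartment 12", "Karpos"))
```

The propositional content (which accommodation, where) is fixed by the caller; only its surface form and accompanying gesture vary with personality.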

The application provides limited dialogs and explanations within constrained situations, and supports competent communication only about the values of the attributes and their impact on the user requirements.

2 AFFECTIVE USER PROFILE

The user-modeling component builds the affective profile of a user according to the Emotions Profile Index, a standard test in psychiatry and clinical psychology. This instrument is based on the idea that personality traits are mixtures of two or more primary emotions (Plutchik 1980). For example, the personality trait cautious has expectancy and fear as its two main emotional components, and affectionate has acceptance and joy.
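The idea that a trait is a mixture of primary emotions can be sketched as a table from traits to their main components. Only the two compositions given above are encoded. The assumption that a trait's strength is the minimum (fuzzy AND) of its components' normalized scores, and the name `trait_strengths`, are ours for illustration and not part of the EPI scoring procedure.

```python
# Trait compositions from the text: cautious = expectancy + fear,
# affectionate = acceptance + joy. Other EPI traits are omitted here.
TRAIT_COMPONENTS = {
    "cautious": ("expectancy", "fear"),
    "affectionate": ("acceptance", "joy"),
}


def trait_strengths(emotions: dict[str, float]) -> dict[str, float]:
    """Map normalized emotion scores in [0, 1] to trait strengths.

    Assumption for illustration: a trait is only as strong as its weakest
    component emotion, i.e. the fuzzy AND (minimum) of the component scores.
    """
    return {
        trait: min(emotions.get(e, 0.0) for e in components)
        for trait, components in TRAIT_COMPONENTS.items()
    }


print(trait_strengths({"expectancy": 0.8, "fear": 0.6, "acceptance": 0.3, "joy": 0.9}))
# {'cautious': 0.6, 'affectionate': 0.3}
```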

EPI assesses the user affective state based on a

partial ordering scheme of personality traits:

adventurous, affectionate, brooding, cautious,

gloomy, impulsive, obedient, quarrelsome, resentful,

self-conscious, shy, and sociable. The emotional

dispositions, such as fear, anger, joy, sadness,

acceptance, disgust, expectancy and surprise,

represent the user's affective state. We characterize

the user's affective state as a mixture of different

emotions and use fuzzy linguistic labels to express

the scores of the emotional scales measured with the

psychological test. The term set T(A)={high,

medium, low} is used for the linguistic variable A

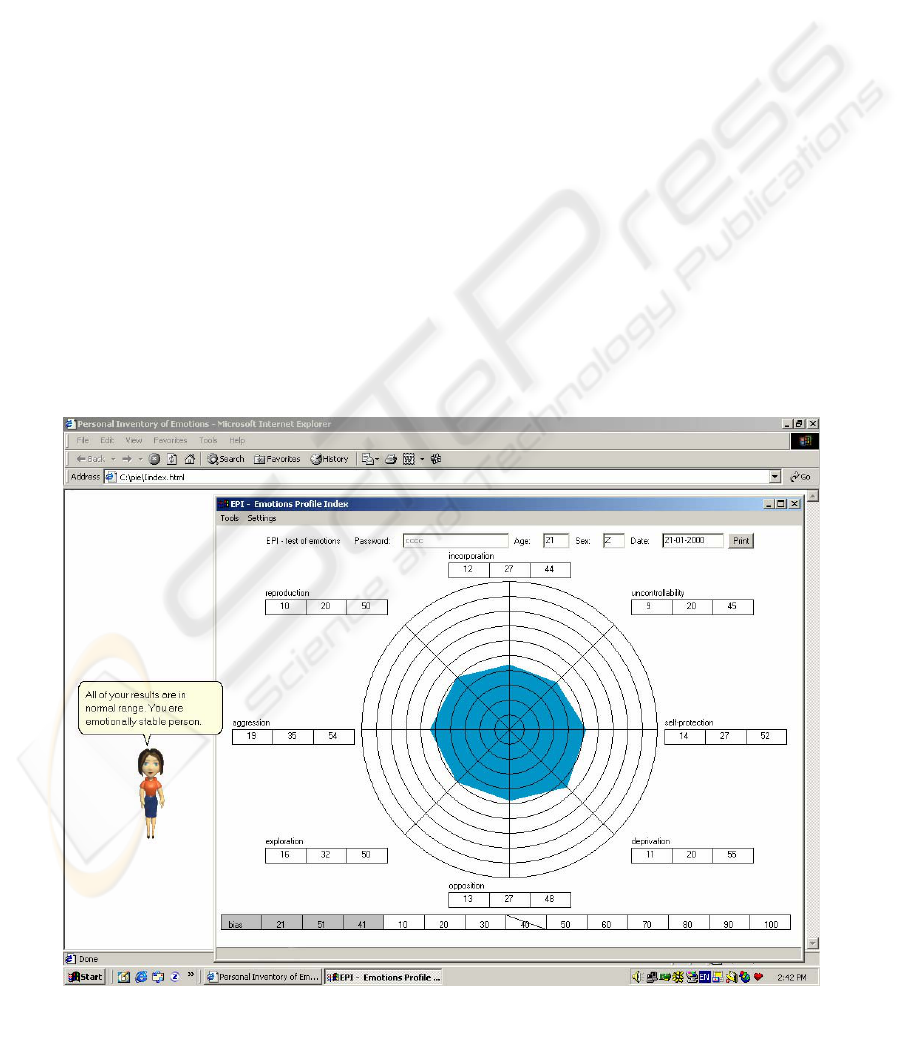

Figure 1: Representation of the user affective profile

MODELLING DIALOGUES WITH EMOTIONAL INTERACTIVE AGENTS

247

that represents the score of the emotional scale.
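The fuzzification step can be sketched as follows. The paper does not give the membership functions, so this Python sketch assumes scale scores normalized to the range 0-100 (for example, percentile scores) and uses illustrative breakpoints at 25, 50 and 75, chosen so that the three memberships of any score sum to one.

```python
# Assumed membership functions for T(A) = {low, medium, high} over a 0-100 score.
def low(x: float) -> float:
    return max(0.0, min(1.0, (50 - x) / 25))   # 1 up to 25, 0 from 50


def medium(x: float) -> float:
    return max(0.0, 1 - abs(x - 50) / 25)      # peak at 50, 0 outside (25, 75)


def high(x: float) -> float:
    return max(0.0, min(1.0, (x - 50) / 25))   # 0 up to 50, 1 from 75


TERMS = {"low": low, "medium": medium, "high": high}


def fuzzify(score: float) -> dict[str, float]:
    """Degree to which a scale score belongs to each linguistic label."""
    return {label: mu(score) for label, mu in TERMS.items()}


def best_label(score: float) -> str:
    """Linguistic label with the largest membership degree."""
    degrees = fuzzify(score)
    return max(degrees, key=degrees.get)


print(fuzzify(40))     # low 0.4, medium 0.6, high 0.0 (up to float rounding)
print(best_label(40))  # medium
```

Keeping the full membership vector, rather than only the best label, lets later rules fire with partial strength when a score lies between two labels.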

The user-modeling component infers the user's emotional state and presents the appropriate interpretation either as expert explanations or as a diagrammatic view of the results. One type of user profile representation is shown in Figure 1.

Expert explanations of the emotional profile are obtained using the affective modeling system and by consulting experts (Bozinovski et al. 1991). In our affective modeling system, they are formed by merging text fragments activated by fired fuzzy rules.
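Composing an explanation from fired fuzzy rules can be sketched as below. The two rules reuse the trait compositions given in this section; the rule texts, the firing threshold of 0.5, the minimum operator for AND, the ordering of fragments by firing strength, and the 0-100 membership function `high` are all assumptions for illustration, not the rules of the actual system.

```python
def high(x: float) -> float:
    """Assumed membership of a 0-100 score in the label 'high'."""
    return max(0.0, min(1.0, (x - 50) / 25))


# Hypothetical rules: antecedent (emotion -> membership function) and text fragment.
RULES = [
    ({"expectancy": high, "fear": high}, "The profile suggests a cautious person."),
    ({"acceptance": high, "joy": high}, "The profile suggests an affectionate person."),
]


def explain(scores: dict[str, float], threshold: float = 0.5) -> str:
    """Merge the text fragments of all rules whose firing strength reaches the threshold."""
    fired = []
    for antecedent, fragment in RULES:
        # Fuzzy AND of the antecedent conditions: minimum of the memberships.
        strength = min(mu(scores[emotion]) for emotion, mu in antecedent.items())
        if strength >= threshold:
            fired.append((strength, fragment))
    # Strongest rules first; the sort is stable, so ties keep rule order.
    fired.sort(key=lambda pair: pair[0], reverse=True)
    return " ".join(fragment for _, fragment in fired)


print(explain({"expectancy": 70, "fear": 80, "acceptance": 80, "joy": 100}))
```

Here the affectionate rule fires with strength 1.0 and the cautious rule with 0.8, so the affectionate fragment leads the merged explanation.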

Besides emotions, we consider personality essential for a virtual character that has to exhibit believable behavior and predict the user's future behavior. Personality influences a person's goals and behaviors and determines the person's adjustment to the environment. Personality traits predispose people to behave consistently across situations, and they remain stable over time. In psychology, emotions are characterized as focused on particular events, while personality is more diffuse and indirect.

In our approach, we follow Costa and McCrae's Five-Factor Model of personality (Costa and McCrae 1992), in which traits are structured along five dimensions: neuroticism, extroversion, openness, agreeableness and conscientiousness. For example, in terms of personality traits, an extrovert person might be described as affectionate, sociable, assertive and trusting, a person who likes excitement and carefully selects the right words when speaking. By contrast, an introvert person is reserved and skeptical, seldom seeks company, and tends to stay in the background rather than being assertive.

We use the partial ordering of the personality traits to infer the user's personality. For example, personality traits such as cautious, brooding, obedient or altruistic contribute to the evaluation of the person as agreeable or disagreeable.
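One way to read trait contributions to a personality dimension is as a signed weighted sum. In this Python sketch, the trait-to-dimension weights and the zero decision threshold are hypothetical (the paper does not list them), and the outputs are restricted to the poles later used for the input attribute UP in Section 3.1.

```python
# Hypothetical signed weights: positive values push toward the first pole,
# negative values toward the second. Trait strengths are assumed to lie in [0, 1].
DIMENSIONS = {
    ("extrovert", "introvert"): {"sociable": 1.0, "adventurous": 0.5, "shy": -1.0, "self-conscious": -0.5},
    ("agreeable", "disagreeable"): {"obedient": 1.0, "affectionate": 0.5, "quarrelsome": -1.0, "resentful": -0.5},
}


def infer_personality(traits: dict[str, float]) -> list[str]:
    """Return one pole per dimension from the weighted sum of trait strengths."""
    poles = []
    for (positive, negative), weights in DIMENSIONS.items():
        score = sum(w * traits.get(trait, 0.0) for trait, w in weights.items())
        poles.append(positive if score >= 0 else negative)
    return poles


print(infer_personality({"sociable": 0.9, "shy": 0.2, "quarrelsome": 0.7, "obedient": 0.1}))
# ['extrovert', 'disagreeable']
```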

Users perceive the same personality dimensions in virtual agents as in humans. For presentation tasks, they generally prefer cooperative and outgoing agents to competitive and withdrawn ones. Interestingly, agents whose personality is similar to the user's own are liked more than dissimilar ones (Isbister and Nass 1998).

3 CONCEPTUALIZATION OF THE INFORMATIVE DIALOGS

In this section we present the formal model of the conversation process in our application. First, we specify the models of the virtual agent and the user. Then we deal with the communication aspects.

The agent can be formalized as a 3-tuple (H, A, S), where $H=\{h_i \mid h_i=(p_i, f_i),\ f_i=(v_{i1}, \ldots, v_{im}),\ 0 \le i \le n\}$ is the domain knowledge of the agent (i.e., the set of accommodations the real estate agent holds). $p_i$ is the profit attached to the accommodation $h_i$, which the agent tries to maximize, and $f_i$ is the value vector for the attributes associated with the accommodation $h_i$.

The domain knowledge consists of information about the available accommodations and their attributes, such as rental rate, rental period, and location. The second component of the agent model is the set of conversational acts A. The third component is the behavior planner S, which specifies the rules the agent has to obey during the conversation. The behavior planner makes use of plan operators and defines the order of the conversational acts and the structure of the communication.
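The agent model (H, A, S) can be written down as plain data types. In this Python sketch, the attribute names, the example acts, and the representation of the behavior planner S as a table of acts allowed to follow each act are simplifying assumptions; the paper's planner uses plan operators, which are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Accommodation:
    """h_i = (p_i, f_i): profit p_i and attribute values f_i = (v_i1, ..., v_im)."""
    profit: float
    values: dict[str, object]  # attribute name -> value


@dataclass
class Agent:
    """Agent model (H, A, S)."""
    H: list[Accommodation]     # domain knowledge: the accommodations the agent holds
    A: set[str]                # conversational acts available to the agent
    S: dict[str, set[str]]     # simplified planner: act -> acts allowed to follow it

    def may_follow(self, previous: str, act: str) -> bool:
        """Check whether `act` is a legal next act after `previous`."""
        return act in self.A and act in self.S.get(previous, set())

    def most_profitable(self) -> Accommodation:
        """The accommodation whose profit p_i is highest."""
        return max(self.H, key=lambda h: h.profit)


# Hypothetical example data.
agent = Agent(
    H=[
        Accommodation(profit=40.0, values={"rental_rate": 350, "rental_period": 12, "location": "Karpos"}),
        Accommodation(profit=65.0, values={"rental_rate": 480, "rental_period": 6, "location": "Centar"}),
    ],
    A={"greet", "ask_requirements", "propose", "explain", "close"},
    S={"greet": {"ask_requirements"}, "ask_requirements": {"propose"}, "propose": {"explain", "close"}},
)
print(agent.may_follow("greet", "ask_requirements"), agent.most_profitable().profit)
```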

The user is represented as a 3-tuple (R, A, S), where $R=\{(r_j, w_j)\}$ is the model of the user's requirements, expressed as fuzzy constraints over the attributes of the accommodations. Each constraint $r_j$ has a priority $w_j$. A is the set of conversational acts the user is allowed to take during the conversation, and S is the behavior planner component.
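The user's fuzzy constraints can be sketched as membership functions over accommodation attributes, each paired with its priority. The constraint shapes (`at_most`, `one_of`), the example limits and locations, and the use of crisp priorities in [0, 1] are assumptions for illustration. Evaluating the constraints on one accommodation yields degrees of satisfaction of the kind the agent aggregates when ranking accommodations.

```python
from typing import Callable

Constraint = Callable[[object], float]


def at_most(limit: float, tolerance: float) -> Constraint:
    """Fuzzy 'value <= limit': fully satisfied up to limit, falling linearly to 0 at limit + tolerance."""
    def mu(value):
        if value <= limit:
            return 1.0
        if value >= limit + tolerance:
            return 0.0
        return (limit + tolerance - value) / tolerance
    return mu


def one_of(options: set) -> Constraint:
    """Crisp constraint on a categorical attribute, expressed as a 0/1 membership."""
    return lambda value: 1.0 if value in options else 0.0


# Hypothetical requirements R = {(r_j, w_j)}: (attribute, constraint r_j, priority w_j).
R = [
    ("rental_rate", at_most(400, 100), 1.0),
    ("location", one_of({"Centar", "Karpos"}), 0.6),
]


def satisfaction(values: dict, requirements) -> list[float]:
    """Degree to which one accommodation satisfies each constraint r_j."""
    return [r(values[attribute]) for attribute, r, _ in requirements]


print(satisfaction({"rental_rate": 450, "location": "Karpos"}, R))  # [0.5, 1.0]
```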

The information exchange between the user and the agent is organized as a sequence of conversational acts.

The agent evaluates the accommodations in the database according to the user's requirements and the specified priorities. Let $w_j$ be the priority of the decision criterion $c_j$, let $x_{ij}$ be the degree of appropriateness of the accommodation $A_i$ with respect to the criterion $c_j$, and let $A_i^*$ be the appropriateness of the accommodation $A_i$ with respect to all the criteria, obtained by aggregating the $x_{ij}$ and $w_j$. Using the mean operator to aggregate the assessments, $A_i^*$ is given by

$$A_i^* = \frac{1}{m} \otimes \left( (x_{i1} \otimes w_1) \oplus \cdots \oplus (x_{im} \otimes w_m) \right)$$

where $\oplus$ and $\otimes$ denote fuzzy addition and fuzzy multiplication.
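A numerical sketch of this mean operator follows. The paper does not specify the fuzzy number representation, so this Python sketch assumes triangular fuzzy numbers (l, m, u) on [0, 1], exact fuzzy addition, the common componentwise approximation of the product of non-negative triangular numbers, and centroid defuzzification for ranking. The linguistic values `LOW`, `MEDIUM` and `HIGH` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TFN:
    """Triangular fuzzy number (l, m, u) with 0 <= l <= m <= u."""
    l: float
    m: float
    u: float

    def __add__(self, other: "TFN") -> "TFN":
        # Fuzzy addition: exact and componentwise for triangular numbers.
        return TFN(self.l + other.l, self.m + other.m, self.u + other.u)

    def __mul__(self, other: "TFN") -> "TFN":
        # Fuzzy multiplication: componentwise approximation, valid for non-negative TFNs.
        return TFN(self.l * other.l, self.m * other.m, self.u * other.u)

    def scale(self, k: float) -> "TFN":
        # Product with the crisp number k >= 0, e.g. k = 1/m.
        return TFN(k * self.l, k * self.m, k * self.u)

    def centroid(self) -> float:
        # Defuzzified value used to rank accommodations.
        return (self.l + self.m + self.u) / 3


# Hypothetical linguistic values.
LOW, MEDIUM, HIGH = TFN(0.0, 0.1, 0.3), TFN(0.3, 0.5, 0.7), TFN(0.7, 0.9, 1.0)


def appropriateness(x_i: list[TFN], w: list[TFN]) -> TFN:
    """A_i* = 1/m (x) ((x_i1 (x) w_1) (+) ... (+) (x_im (x) w_m))."""
    total = sum((x * wj for x, wj in zip(x_i, w)), TFN(0.0, 0.0, 0.0))
    return total.scale(1 / len(w))


w = [HIGH, MEDIUM]                       # priorities of criteria c_1, c_2
a1 = appropriateness([HIGH, LOW], w)     # strong on c_1, weak on c_2
a2 = appropriateness([MEDIUM, HIGH], w)  # moderate on c_1, strong on c_2
ranking = sorted({"A1": a1, "A2": a2}.items(), key=lambda kv: kv[1].centroid(), reverse=True)
print([name for name, _ in ranking])
```

Under these assumed values, the second accommodation ranks first: its strong match on the medium-priority criterion outweighs the first accommodation's advantage on the high-priority one.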

3.1 Modelling Dialogs by Automata

The agents have to decide what information to provide to the users and how to provide it. How the information is presented is influenced by the affective states of the user and the agent, and by the user's tolerance of assistance. Which information is provided depends on the user's requirements and the agent's interests.

Mealy-type automata are used to model the dialog $DA=(S, X, Y, f, g)$, where $S$ is a set of states, $X$ and $Y$ are sets of input and output utterances respectively, $f: S \times X \to S$ is a transition function, and $g: S \times X \to Y$ is an output function.
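The dialog automaton can be prototyped directly from this definition, with f and g stored as lookup tables keyed by (state, input). The state names, input symbols and output utterances below are hypothetical placeholders for the accommodation-renting dialog; the explicit initial state corresponds to the greeting phase.

```python
from dataclasses import dataclass


@dataclass
class MealyDialog:
    """Dialog automaton DA = (S, X, Y, f, g) of Mealy type."""
    S: set[str]                    # dialog states
    X: set[str]                    # input (user) utterances
    Y: set[str]                    # output (agent) utterances
    f: dict[tuple[str, str], str]  # transition function S x X -> S
    g: dict[tuple[str, str], str]  # output function S x X -> Y
    initial: str

    def run(self, inputs: list[str]) -> tuple[str, list[str]]:
        """Feed user utterances in order; return the final state and the agent's replies."""
        state, outputs = self.initial, []
        for x in inputs:
            if (state, x) not in self.f:
                raise ValueError(f"no transition from {state!r} on {x!r}")
            outputs.append(self.g[(state, x)])  # Mealy: output depends on state and input
            state = self.f[(state, x)]
        return state, outputs


# Hypothetical fragment of the accommodation-renting dialog: (state, input) -> (next state, reply).
T = {
    ("greeting", "hello"): ("requirements", "Hello! What are you looking for?"),
    ("requirements", "state_requirements"): ("proposal", "I have a flat that may suit you."),
    ("proposal", "reject"): ("requirements", "Which requirement matters most to you?"),
    ("proposal", "accept"): ("closing", "Great, I will prepare the contract."),
}
dialog = MealyDialog(
    S={"greeting", "requirements", "proposal", "closing"},
    X={"hello", "state_requirements", "accept", "reject"},
    Y={reply for _, reply in T.values()},
    f={key: nxt for key, (nxt, _) in T.items()},
    g={key: reply for key, (_, reply) in T.items()},
    initial="greeting",
)
print(dialog.run(["hello", "state_requirements", "accept"]))
```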

ICEIS 2004 - HUMAN-COMPUTER INTERACTION


States of the automaton correspond to the states of the dialog, and the input and output symbols correspond to dialog utterances. The initial state of the automaton is the greeting phase of the dialog. The user's personality and emotions, as well as the user's utterance, are attributes of the input symbols, with the following values:

UE - user emotions: joy, fear, anger
UP - user personality: extrovert, introvert, agreeable, disagreeable
UU - user utterance: speech, buttons pressed, options chosen.

The attributes of the output utterances are the agent's gestures, emotions and presentations, with the following values:

AG - agent gestures: point, congratulate, blink, greet, look, wave
AE - agent emotions: sad, confused, pleased
AP - agent presentation: text, speech, video, pictures.

The dialog state is characterized by the following attributes and their values:

SIF - importance to the user of the feature under consideration: high, medium, low
SDA - degree to which the proposed accommodation satisfies the feature: high, medium, low
SAP - agent profit: high, medium, low.
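The attributes above can be given concrete types, and the output function g can then be written as rules over attributed states and inputs. The attribute names and value sets come from the lists above; the particular rules in `output_for` are hypothetical examples of how the user's emotion and personality and the dialog state might shape the agent's reply, not the system's actual policy.

```python
from typing import NamedTuple


class Input(NamedTuple):
    UE: str   # user emotion: joy, fear, anger
    UP: str   # user personality: extrovert, introvert, agreeable, disagreeable
    UU: str   # user utterance: speech, buttons pressed, options chosen


class DialogState(NamedTuple):
    SIF: str  # importance of the feature to the user: high, medium, low
    SDA: str  # degree of feature satisfaction: high, medium, low
    SAP: str  # agent profit: high, medium, low


class Output(NamedTuple):
    AG: str   # gesture: point, congratulate, blink, greet, look, wave
    AE: str   # emotion: sad, confused, pleased
    AP: str   # presentation: text, speech, video, pictures


def output_for(state: DialogState, x: Input) -> Output:
    """Hypothetical rules for the output attributes of g(state, input)."""
    # Agent emotion reflects how well the proposal satisfies the feature.
    emotion = "pleased" if state.SDA == "high" else "sad"
    # Gesture: stay restrained with an angry user, celebrate a good match otherwise.
    if x.UE == "anger":
        gesture = "look"
    elif state.SDA == "high":
        gesture = "congratulate"
    else:
        gesture = "point"
    # Presentation: richer media for profitable offers, spoken style for extroverts.
    if state.SAP == "high":
        presentation = "video"
    elif x.UP == "extrovert":
        presentation = "speech"
    else:
        presentation = "text"
    return Output(gesture, emotion, presentation)


print(output_for(DialogState("high", "high", "low"), Input("joy", "extrovert", "speech")))
```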

4 CONCLUSION

The presented work models emotions and personality to enhance the believability of human-agent informative dialogues.

In addition to creating more natural and intuitive interfaces, animated agents provide help and may have a positive, motivational effect on the user's experience with interactive technologies.

The proposed work aims to predict and influence the conversational dialog based on an assessment of the user's emotions and personality. Simulated sympathetic agents can have a positive impact on the user's affective state.

REFERENCES

André, E., Rist, T., van Mulken, S., Klesen, M., and Baldes, S. 2000. The Automated Design of Believable Dialogues for Animated Presentation Teams. In Embodied Conversational Agents, eds. Cassell, J., Sullivan, J., Prevost, S. and Churchill, E., 220-255. Cambridge, MA: MIT Press.

Bozinovski, S., Martinovska, C., Bozinovska, L., and Pop-Jordanova, N. 1991. MEXYS2: A Fuzzy Reasoning Expert System Based on the Subject Emotions Consideration. In Medical Informatics Europe, eds. Adlassnig, K., Grabner, G., Bengtsson, S., and Hansen, R. Springer Verlag.

Cassell, J. and Stone, M. 1999. Living Hand to Mouth: Psychological Theories about Speech and Gesture in Interactive Dialogue Systems. In Fall Symposium on Narrative Intelligence, 34-42. Menlo Park: AAAI Press.

Conati, C. 2002. Probabilistic Assessment of User's Emotions in Educational Games. Applied Artificial Intelligence, special issue on Merging Cognition and Affect in HCI, 16(7-8): 555-575.

Costa, P. T. and McCrae, R. 1992. Four Ways Five Factors are Basic. Personality and Individual Differences 13: 653-665.

Healy, J. and Picard, R. 2000. SmartCar: Detecting Driver Stress. In Proceedings of the 15th International Conference on Pattern Recognition. Barcelona, Spain.

Hudlicka, E. and McNeese, M. 2002. Assessment of User Affective and Belief States for Interface Adaptation: Application to an Air Force Pilot Task. User Modeling and User-Adapted Interaction 12(1): 1-47.

Isbister, K. and Nass, C. 1998. Personality in Conversational Characters: Building Better Digital Interaction Partners Using Knowledge About Human Personality Preferences and Perceptions. In Workshop on Embodied Conversational Characters, 103-111. CA.

Johnson, L., Rickel, J., and Lester, J. 2000. Animated Pedagogical Agents: Face-to-Face Interaction in Interactive Learning Environments. International Journal of Artificial Intelligence in Education 11: 47-78.

Lester, J., Towns, S., Callaway, C., and FitzGerald, P. 2000. Deictic and Emotive Communication in Animated Pedagogical Agents. In Embodied Conversational Agents, eds. Cassell, J., Sullivan, J., Prevost, S. and Churchill, E., 132-154. Cambridge, MA: MIT Press.

Microsoft Agent. 1999. Software Development Kit. Redmond, Washington: Microsoft Press. <http://microsoft.public.msagent>

Plutchik, R. 1980. Emotion: A Psychoevolutionary Synthesis. New York: Harper and Row.

Prendinger, H. and Ishizuka, M. 2001. Social Role Awareness in Animated Agents. In Proceedings of the 5th International Conference on Autonomous Agents, 270-277. New York: ACM Press.

Rousseau, D. and Hayes-Roth, B. 1998. A Social Psychological Model for Synthetic Actors. In Proceedings of the 2nd International Conference on Autonomous Agents, 165-172.

Walker, M., Cahn, J., and Whittaker, S. 1997. Improvising Linguistic Style: Social and Affective Bases for Agent Personality. In Proceedings of Autonomous Agents '97, 96-105. Marina del Rey, California: ACM Press.
