PREDICTING BLOCKING EFFECTS IN THE SPATIAL DOMAIN USING A LEARNING APPROACH

Aladine Chetouani, Ghiles Mostafaoui

Laboratory L2TI, Institute of Galilee, University Paris XIII, France

Azeddine Beghdadi

Laboratory L2TI, Institute of Galilee, University Paris XIII, France

Keywords: Degradations, blocking effect, prediction, learning phase, subjective test.

Abstract: A new method for predicting blocking effect in the spatial domain is proposed. This method aims at

estimating the appearance of blocking artefacts in the original image prior to compression for a given bit

rate and a given compression technique. The basic idea is to use a training process in order to compute a

visibility measure. A weighting function of the blocking effects is then derived from this training process

performed on a database. The proposed method is objectively and subjectively evaluated on various actual

images. The obtained results confirm the efficiency of the proposed method in predicting blocking effect.

1 INTRODUCTION

Block-based image processing approaches are
systematically applied to account for the
non-stationarity of the image signal and for
computational constraints. Another motivation
behind the development of block-based image
processing is to make it suitable for real-time
applications and for implementation on
parallel architectures. However, block-based
methods are prone to an artefact, called the blocking
effect, that may affect the image quality and limit

the efficiency of some image processing techniques.

This artefact is among the best-known annoying image
distortions. The limited efficiency of many
compression methods, such as the BTC (Block
Truncation Coding) technique (Delp, 1979) or VQ
(Vector Quantization) coding (Gray, 1984), is
essentially due to the blocking effect. Many
improvements have been proposed in order to reduce
this effect but, to our knowledge, formalized
procedures for controlling it
are still missing. Here, we focus our study on
block-based compression methods, especially those
using the Discrete Cosine Transform (DCT). This

method has been widely used in image and video

compression standards such as JPEG and MPEG2.

At low bit rates, these block-based coding methods
produce a noticeable blocking effect in the
reconstructed image. This is mainly due to the fact
that the blocks are transformed and quantized
independently. This annoying artefact appears at the
block boundaries as artificial horizontal and vertical
contours. The visibility of this blocking effect

depends highly on the spatial intensity distribution in

the image. Moreover, the Human Visual System

(HVS) increases the perceived contrast between two

adjacent regions.

The blocking effect has been widely studied and
many ad hoc methods for estimating and reducing
this artefact have been proposed in the literature. In
(Wang et al., 2000), a blind method for estimating
the blocking effect in the frequency domain is
proposed. It is worth noting that blind approaches
are more appealing than full-reference approaches,
because they do not require the original image.

In (Bovik et al., 2001; Coudoux et al., 1998), the

blocking artefacts are modelled as 2D signals in the

DCT-coded images. By taking into account some

HVS properties, the local contrast in the vicinity of

the inter-block boundary is used as an estimate of

the blocking effect. In (Jang et al., 2003), an
iterative algorithm is applied for reducing
blocking artefacts in block transform-coded
images by using a minimum mean square error filter
in the wavelet domain. Another similar method
based on an image restoration approach has been


Chetouani A., Mostafaoui G. and Beghdadi A. (2008).

PREDICTING BLOCKING EFFECTS IN THE SPATIAL DOMAIN USING A LEARNING APPROACH.

In Proceedings of the International Conference on Signal Processing and Multimedia Applications, pages 197-201

DOI: 10.5220/0001935401970201

Copyright © SciTePress

proposed (Luong et al., 2005). A blocking effect

visibility measure based on the local contrast is used

to control the iterative process. In (Singh et al.,

2007), a new technique based on a frequency

analysis is proposed for detecting blocking effects.

The artefacts are modelled as 2-D step functions
between neighbouring blocks. The presence of
blocking artefacts is detected by using a block
activity signal based on the HVS and block statistics.
Several other interesting methods (Castagno et
al., 1998; Lee et al., 1998; Zeng, 1999) dealing with
blocking effects have also been developed.

However, most of these studies aim to detect or

remove the blocking effects on the compressed

images.

Here, we propose a different approach that
predicts the visibility of blocking effects
in images prior to compression. The paper is
organized as follows. Section 2 presents the
motivations and describes in detail the weighting

procedure. Section 3 is dedicated to the results and

the performance evaluation of our method. The last

section contains the conclusion and perspectives.

2 MOTIVATION AND METHOD

The continuing development of high resolution

imagery technology leads to higher bit rates because

of the increase in both spatial resolution and

intensity range. Much research on block-based lossy

image compression is still needed. However, lossy
compression at low bit rates may produce
annoying artefacts, thus limiting its efficiency.

Here, we focus the study on blocking effects. One of

the main issues related to image compression is how

to control these artefacts. One way to achieve this

goal is first to predict this structured distortion and

then to propose a solution for reducing it. Inspired
by the fact that a human observer is able to detect and
recognize the blocking effect, even in the absence of the
original image, we propose a new approach based on
an offline learning process. The main idea is to
compute a weighting function that assigns to each
pixel a weight that can be interpreted as the
predicted probability that a local
blocking effect appears. To this end, we
study the relation between the appearance of
blocking effects and the pixel neighbourhoods in
the non-compressed image, and we run the offline
learning process on a database containing
various grey-level and color images. The whole
weighting process is summarized in fig. 1.

2.1 Learning Process

The learning process is applied to a database of 211
different real images (from the F. C. Donders Centre for
Cognitive Neuroimaging database). These images
contain various kinds of textures with different
roughness, regions with different intensity
distributions, and uniform regions.

Figure 1: Synoptic.

First, we analyze the spatial distribution of the

pixels before extracting some local characteristics

from these images. Indeed, the appearance of a

blocking effect in an image area depends highly on

the local descriptors such as color, homogeneity,

gradient etc. These local characteristics could be

expressed in the transformed domain such as

Wavelet Transform or DCT. To make the method
independent of the compression method, we use the
local variance as a local homogeneity measure. For
each image f taken from the learning database, we
compute the corresponding local variance image V
(see fig. 2.b). Once the local variance image is
computed, we analyse the compression effect in

terms of blocking appearance. To do this, we have to

detect the blocking effects on the compressed

images. Let us define:

• $f_q$: the compressed images of an original
image f of the database, where q represents
the different quality factors ($q \in [1,100]$ for
JPEG compression).
• $G_q$: the gradient absolute value images of
$f_q$.
Depending on the bit rate, the blocking effect tends
to create large uniform zones where the gradient is
null. The blocking effects on the compressed images
could then be detected by analyzing the gradient absolute value
images of the compressed images, $G_q$.

This first simple process gives a coarse detection of the
blocking effect (see fig. 2.c).
[Figure 1 diagram blocks: Original Image; Compressed Image; Local Variance; Gradient image; Image Fusion step; Accumulation matrix computation; Weighting process]
SIGMAP 2008 - International Conference on Signal Processing and Multimedia Applications
We will show that by
increasing the size of the database used in the
learning process, the blocking effect detection could
be improved.
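The two per-pixel measurements used above (local variance of the original image, and null gradient in the compressed image) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the 3x3 window, the border clipping, and the forward-difference gradient are assumptions, since the paper does not specify the window size or the gradient operator. The function names are hypothetical.

```python
def local_variance(img, radius=1):
    """Variance of the grey levels in a (2*radius+1)^2 window around each pixel.

    Assumption: windows are clipped at the image border.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - radius), min(h, y + radius + 1))
                    for i in range(max(0, x - radius), min(w, x + radius + 1))]
            mean = sum(vals) / len(vals)
            out[y][x] = sum((p - mean) ** 2 for p in vals) / len(vals)
    return out


def abs_gradient(img):
    """|dx| + |dy| with forward differences (assumed operator).

    The difference is taken as zero where the neighbour falls outside the image.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = img[y][x + 1] - img[y][x] if x + 1 < w else 0
            dy = img[y + 1][x] - img[y][x] if y + 1 < h else 0
            out[y][x] = abs(dx) + abs(dy)
    return out


def null_gradient_mask(compressed_img):
    """True where the gradient of the compressed image is exactly zero."""
    return [[g == 0 for g in row] for row in abs_gradient(compressed_img)]
```

In this sketch, `local_variance` would be applied to the original image f and `null_gradient_mask` to each compressed version of it; images are plain lists of rows of grey levels.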

Here, we use a cumulative learning scheme

based on a voting process. An accumulation table
representing the statistics of the appearance of
the blocking effect in the compressed images (at
different quality factors) is computed. This vote
table is a 2D array denoted H(v,q), where v ranges over the
possible values of the local variance and q is the
compression quality factor.

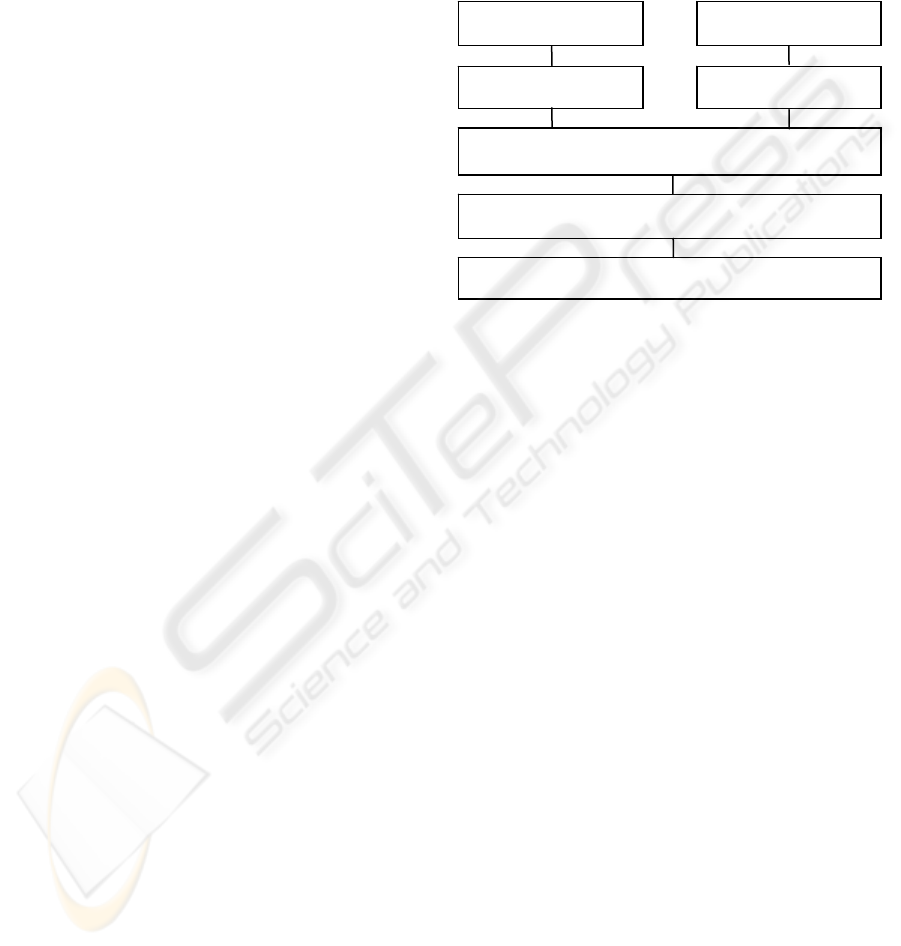

Figure 2: a) Original image, b) Local variances image, c)

Labelled regions with null gradients d) Local variances of

pixels with null gradients.

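Let $f^i$, $i \in [1, N]$, be the set of database
images, and $V^i$, $f^i_q$ and $G^i_q$, $q \in [1,100]$, the
corresponding images obtained as explained above
(local variance image, compressed image and gradient
absolute value image of the compressed image, respectively).
For each pixel $(x,y)$ of an image $f^i$ we
define an influence function for each couple (v,q).
This function can be written as:

$$I^i_{v,q}(x,y) = \begin{cases} 1 & \text{if } V^i(x,y) = v \text{ and } G^i_q(x,y) = 0 \\ 0 & \text{otherwise} \end{cases} \qquad (1)$$

This expression means that a pixel will have a
positive influence on a cell (v,q) of H(v,q) only if its
local variance corresponds to v and its gradient
absolute value on the compressed image ($f^i_q$) with q
as quality factor is null (pixel probably belonging to
a blocking effect on $f^i_q$). After computing the
influence functions for all the pixels $(x,y)$ of
all the images $f^i$ of the database, we can define the
value of a cell (v,q) of the accumulation table as
follows:

$$H(v,q) = \sum_{i=1}^{N} \sum_{(x,y)} I^i_{v,q}(x,y) \qquad (2)$$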

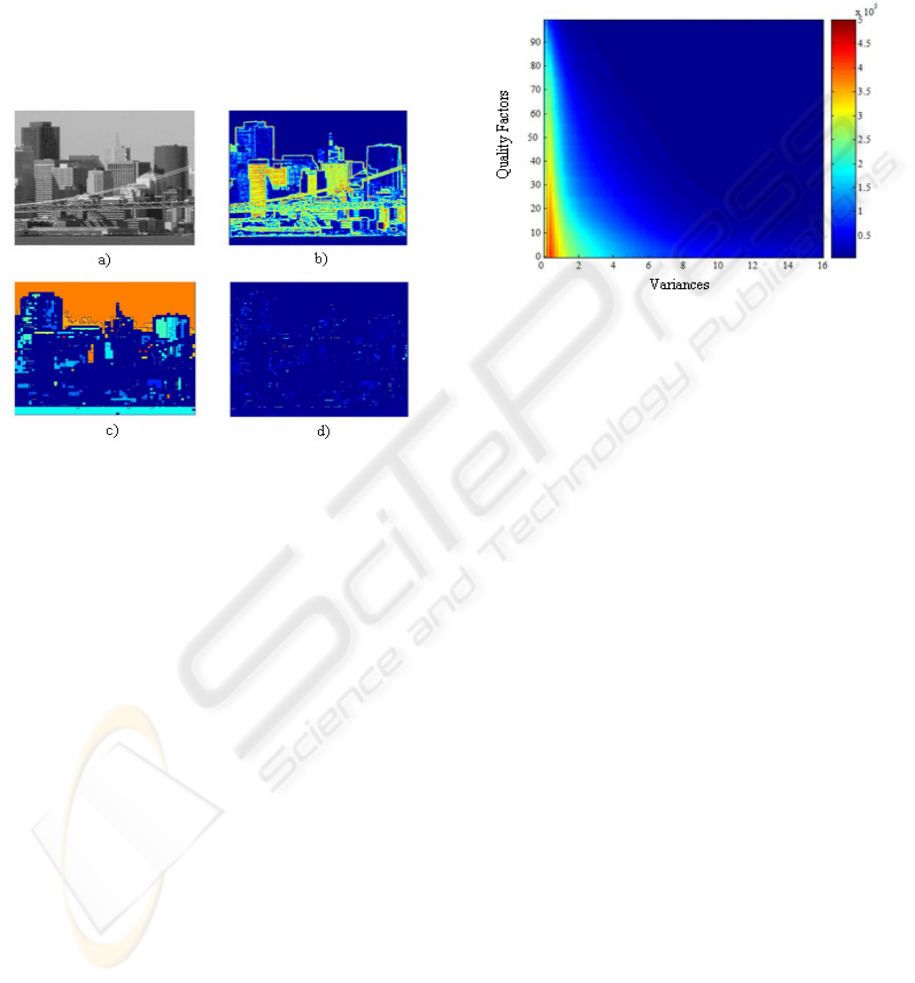

Fig. 3 displays the part of the accumulation matrix
corresponding to variances between 0 and 16, for
better visibility. This table contains the statistical

information about the pixel neighbourhood and the

corresponding degree of blocking effect appearance.

It contains all the relevant local characteristics and

compression factors.
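The voting scheme described above can be sketched as a counter keyed by (v, q). This is a minimal illustration under stated assumptions: the local variance is rounded to an integer and clipped to `v_max` to index the table (the paper does not describe the quantization), and `accumulate_votes` is a hypothetical name.

```python
from collections import Counter


def accumulate_votes(H, variance_img, gradients_by_q, v_max=255):
    """Add one database image's votes to the accumulation table H.

    H[(v, q)] counts the pixels whose quantized local variance is v and whose
    gradient is null in the image compressed with quality factor q.
    variance_img:   local variance of the ORIGINAL image (list of rows).
    gradients_by_q: dict mapping q to the absolute gradient image of the
                    version compressed at quality q (same size).
    Assumption: variance is rounded and clipped to [0, v_max].
    """
    for q, grad_img in gradients_by_q.items():
        for var_row, grad_row in zip(variance_img, grad_img):
            for var, g in zip(var_row, grad_row):
                if g == 0:  # pixel probably inside a blocking-effect zone
                    v = min(int(round(var)), v_max)
                    H[(v, q)] += 1
    return H
```

Calling `accumulate_votes` once per database image on the same `Counter` accumulates the votes over the whole database; cells that never receive a vote read as 0.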

Figure 3: The accumulation matrix.

Fig. 3 clearly shows the coherence of the
statistics. In fact, one can notice that the lower the
variance and the lower the quality factor (high
compression ratio), the higher the probability of
appearance of a blocking effect. The errors due to
approximations are outweighed by the
large mass of correct accumulations.

2.2 The Weighting Process

The obtained voting matrix is used for predicting

blocking effect. A weighting function, representing

the probability of appearance of a blocking effect on

a pixel neighbourhood, is then derived from this

table. For each quality factor value q, a simple
weighting function is the corresponding row of the
matrix H, given by:

$$W_q(v) = H(v,q), \quad v \in [0,255] \qquad (3)$$

The matrix H is constructed from the image

database. Due to the lack of regularity of the weight

function, a polynomial interpolation is applied in

order to obtain a well behaved function as shown in

fig. 4.
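One way to regularize a row of H is sketched below. The paper only says that a polynomial interpolation is applied; the least-squares fit, the choice of degree, and the rescaling of v to [0, 1] are assumptions made here for illustration, and the function names are hypothetical.

```python
def polyfit_least_squares(xs, ys, degree):
    """Coefficients c[0..degree] of the least-squares polynomial sum(c[k] * x**k).

    Solves the normal equations by Gaussian elimination with partial pivoting.
    Requires more distinct xs than the degree.
    """
    n = degree + 1
    A = [[sum(x ** (j + k) for x in xs) for k in range(n)] for j in range(n)]
    b = [sum(y * x ** j for x, y in zip(xs, ys)) for j in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for k in range(col, n):
                A[r][k] -= factor * A[col][k]
            b[r] -= factor * b[col]
    coeffs = [0.0] * n
    for j in range(n - 1, -1, -1):  # back substitution
        s = sum(A[j][k] * coeffs[k] for k in range(j + 1, n))
        coeffs[j] = (b[j] - s) / A[j][j]
    return coeffs


def evaluate(coeffs, x):
    """Evaluate the polynomial with Horner's rule."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result


def smooth_row(row, degree):
    """Replace an irregular row of weights by a fitted polynomial.

    Assumption: v is rescaled to [0, 1] to keep the normal equations
    reasonably conditioned.
    """
    scale = max(len(row) - 1, 1)
    xs = [v / scale for v in range(len(row))]
    coeffs = polyfit_least_squares(xs, row, degree)
    return [evaluate(coeffs, x) for x in xs]
```

A low degree keeps the fit well conditioned; for high degrees a library routine based on orthogonal decompositions would be a safer choice than the normal equations used in this sketch.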

Here, we can also notice the coherence of the
results: for a given local
variance value, the lower the quality factor (high
compression ratio), the higher the weights (increased
probability of appearance of blocking effects).


Figure 4: The weighting function for different quality

factors.

3 EXPERIMENTAL RESULTS

To evaluate the efficiency of the proposed method in

predicting blocking effect, we use both objective and

subjective assessment. In the following, we describe
the two strategies in detail. Since subjective
evaluation is the most accepted approach for
assessing perceptual distortion, we evaluate the objective
measure in terms of correlation with the MOS (Mean Opinion Score)
obtained through subjective tests.

3.1 Objective Test

To test the efficiency of the proposed measure,
various experimental tests are carried out on 200
natural images different from those of the learning
database and with variable bit rates. The experimental
procedure is quite simple and does not require the
compressed images: we use only the uncompressed
images. Let f be an original test image and V its
corresponding local variance image. The probability
that a pixel (x,y) belongs to a blocking effect area
for a given compression quality factor q (here
JPEG) is:

$$P_q(x,y) = W_q\big(V(x,y)\big) \qquad (4)$$

where $W_q$ represents the weight function

obtained from the learning process. This makes the

method very fast. In fact, for implementation, the

weight function (obtained with the offline learning)

could be considered as a simple look-up table. The
prediction procedure is then based only on the
computation of local variances. The proposed method
has been successfully evaluated on various images.
Here, due to limited space, only one typical case
is shown.
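The look-up-table view of the prediction step can be sketched as follows. It is only an illustration: `weights` stands for the learned weight function at one quality factor, stored as a list indexed by quantized variance, and the rounding and clipping of the variance are assumptions, as is the function name.

```python
def predict_blocking_map(variance_img, weights, v_max=255):
    """Map each pixel's local variance through the look-up table weights[v].

    variance_img: local variance image of the UNCOMPRESSED test image.
    weights:      list of length v_max + 1 for one quality factor q.
    Assumption: variance is rounded and clipped to [0, v_max] to index the table.
    """
    return [[weights[min(int(round(v)), v_max)] for v in row]
            for row in variance_img]
```

For example, with a hypothetical decreasing table `weights = [1.0 - v / 255 for v in range(256)]`, a perfectly uniform region (variance 0) receives the weight 1.0 and a highly textured one a weight near 0. No compressed image is needed at prediction time, which is what makes the procedure fast.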

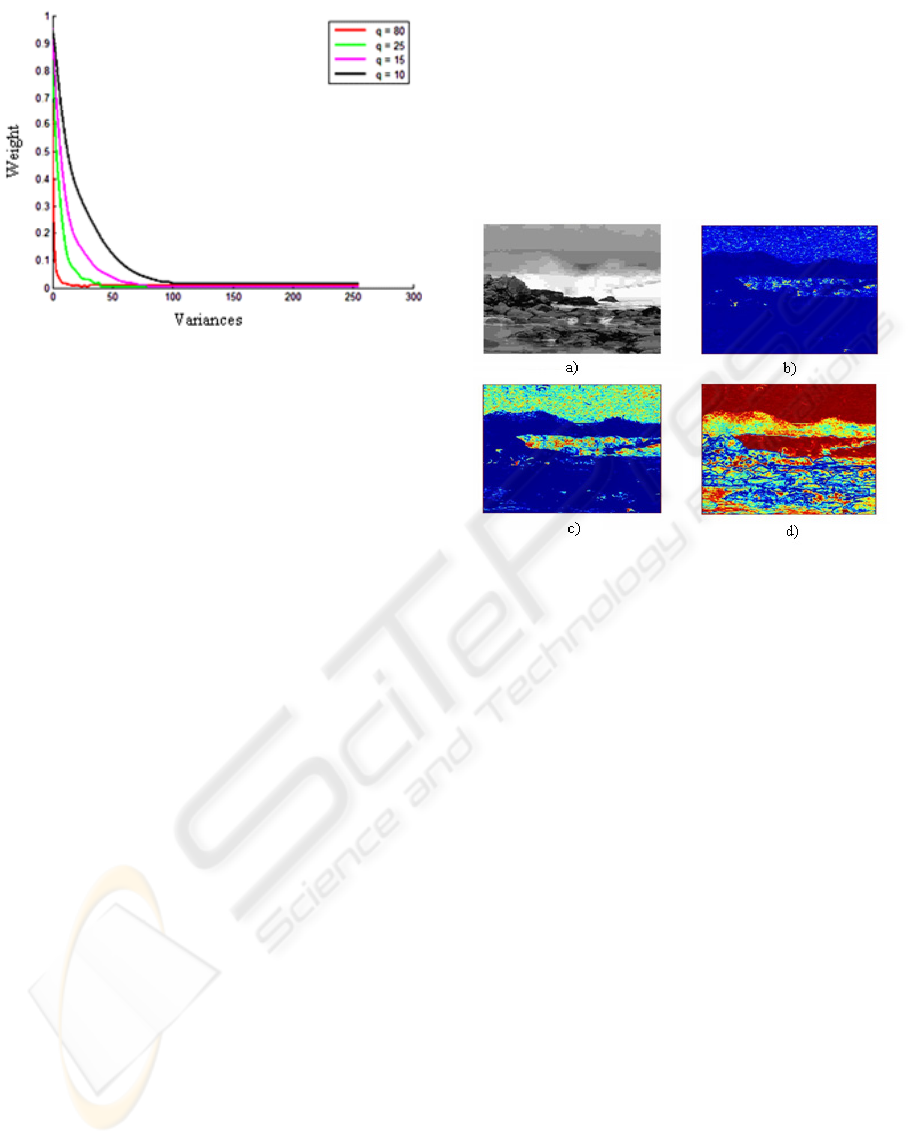

Fig. 5 gives an example of the predicted weight
images obtained for a natural test image. Fig. 5.a is
the original test image. We choose three quality
factors, q = 49, 17 and 4. The predicted weight images are

represented in figs. 5.b, 5.c and 5.d respectively. The

red and blue regions correspond to the high and low

weights respectively.

Figure 5: Blocking effect prediction. a) Original image, b)-

d): Blocking effect visibility map for q=49, q=17 and q=4,

respectively.

It can be noticed that the weights gradually
increase as the quality factor decreases. The maps also
express the fact that homogeneous regions are
more affected by the blocking effect than textured ones.

3.2 Subjective Test

Subjective evaluation of image quality is still the
most accepted solution. Unfortunately, it
requires several procedures, which
have been formalized by the ITU recommendation
(CCIR, 1990-1994). These procedures are complex,
time consuming, and nondeterministic. In our
experiments, we performed subjective tests with ten
observers. We present to each observer various
images with different quality factor values q. The
observers are asked to visually detect, for each
compression ratio (quality factor) and for each
image of the database, the appearance of blocking
effects.

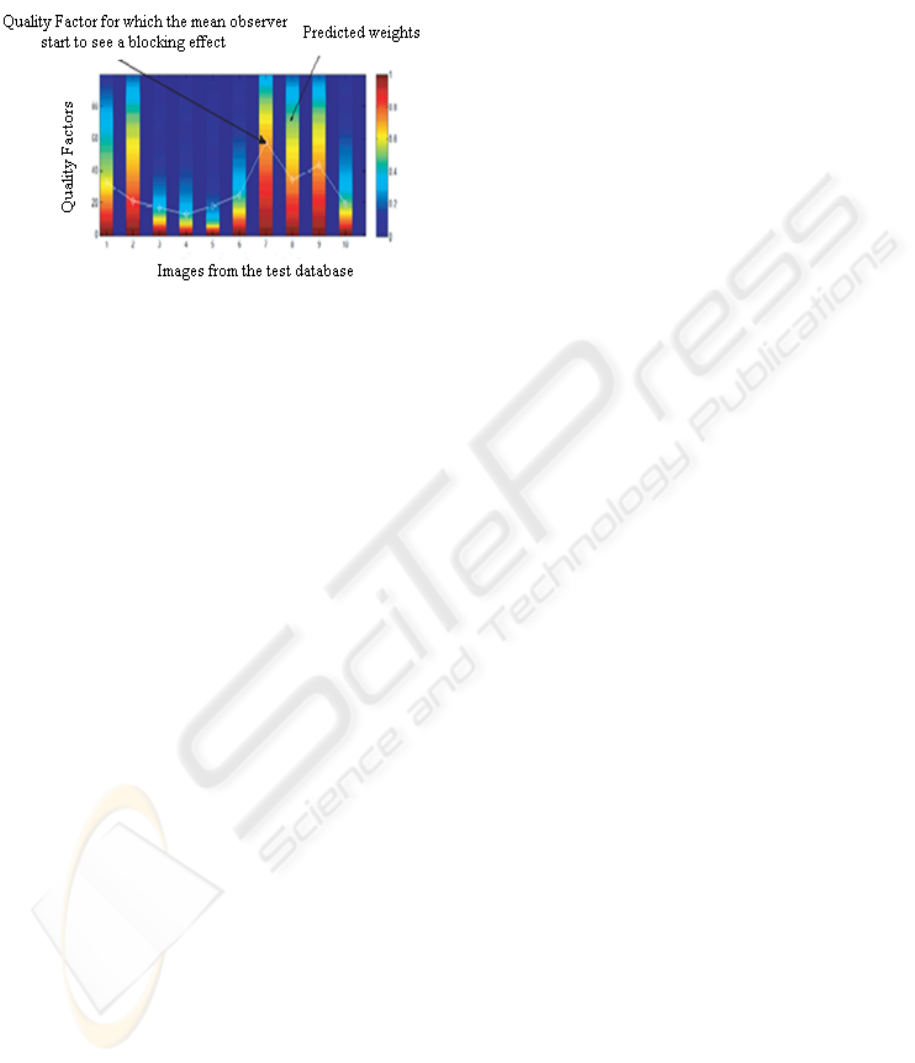

Fig. 6 shows the Mean Opinion Score (MOS) for

each image (white line) used in the subjective tests.

This MOS corresponds to the quality factor value at
which the blocking effect starts to be visible. For

each quality factor and for each test image, we

compute the corresponding weight. The obtained


results show that the mean observer starts to see
blocking effects on the compressed images at compression ratios
corresponding to prediction probabilities up to 0.4.

Figure 6: Objective vs subjective quality measure.

4 CONCLUSIONS AND PERSPECTIVES

A simple and efficient method for predicting

blocking effects on the original non-compressed

image based on a local image analysis and a training

scheme is proposed. The obtained results show that
the proposed method is efficient in predicting
the blocking effect and correlates well with
subjective evaluation. This predictive scheme could
be used as a blind image quality control system prior
to compression in order to achieve a trade-off
between bit rate and perceptual image quality. As
future work, we plan to introduce a masking
model into the method to make it more adaptive to
image signal activity and HVS limitations. This
predictive method could also be extended to other
block-based image compression techniques.

REFERENCES

Bovik, A.C., Liu, S., May 2001. “DCT-domain blind

measurement of blocking artefacts in DCT-coded

images”. Proc. IEEE International Conference on

Acoustics, Speech, and Signal Processing.

Castagno, R., Marsi, S., Ramponi, G., August 1998. “A

simple algorithm for the reduction of blocking artifacts

in images and its implementation”. IEEE Trans. on

Consumer Electronics, vol. 44, no.3.

CCIR, 1990-1994. “Method for the subjective assessment of
the quality of television pictures”, Recommendation
500-4.

Coudoux, F.X., Gazalet, M.G., Corlay, P., January 1998.

“Reduction of blocking effect in DCT-coded images

based on a visual perception criterion”. Signal

Processing: Image Communication, Volume 11, Issue

3, pp. 179-186.

Delp, E. J., Mitchell, O.R., 1979. “Image compression
using block truncation coding”. IEEE Transactions on
Communications, vol. 27, pp. 1135-1142.

Gray, R. M., 1984. “Vector Quantization”. IEEE ASSP

Magazine, 1, N°2, pp.4-29.

Jang, I. H., Kim, N.C., So, H.J., 2003. “Iterative blocking
artefact reduction using a minimum mean square error
filter in wavelet domain”. Signal Processing 83(12),
pp. 2607-2619.

Lee, Y.L., Kim, H.C., Park, H.W., February 1998.
“Blocking effect reduction of JPEG images by signal
adaptive filtering”. IEEE Transactions on Image
Processing, Volume 7, Issue 2, pp. 229-234.

Luong, M., Beghdadi, A., Souidene, W., Le Négrate, A.,

2005. “Coding artefact reduction using adaptive post-

treatment”. IEEE ISSPA, Volume 1, pp. 347-350.

Singh, S., Kumar, V., Verma, H.K., January 2007.

“Reduction of blocking artefacts in JPEG compressed

images”. Digital Signal Processing, Volume 17, Issue

1, pp. 225-243.

Wang, Z., Bovik, A.C., Evans, B.L., September 2000.

“Blind measurement of blocking artefacts in images”.

IEEE International Conference on Image Processing,

vol. 3, pp. 981-984.

Zeng, B., December 1999. “Reduction of blocking effect

in DCT-coded images using zero-masking

techniques”. Signal Processing, Volume 79, Issue 2,

pp. 205-211.
