A NEW PROBLEM IN FACE IMAGE ANALYSIS

Finding Kinship Clues for Siblings Pairs

A. Bottino

1

, M. De Simone

1

, A. Laurentini

1

and T. Vieira

1,2

1

Computer Graphics and Vision Group, Politecnico di Torino, C.so Duca degli Abruzzi, 24, Torino, Italy

2

Departamento de Eletrônica e Sistemas, Universidade Federal de Pernambuco, Recife, Brazil

Keywords: Kinship verification, Support Vector Machines, Principal Component Analysis, Feature Selection

Algorithm.

Abstract: Human face conveys to other human beings, and potentially to computers, much information such as

identity, emotional states, intentions, age and attractiveness. Among this information there are kinship clues.

Face kinship signals, as well as the human capabilities of capturing them, are studied by psychologist and

sociologists. In this paper we present a new research aimed at analyzing, with image processing/pattern

analysis techniques, facial images for detecting objective elements of similarity between siblings. To this

end, we have constructed a database of high quality pictures of pairs of siblings, shot in controlled

conditions, including frontal, profile, expressionless and smiling face images. A first analysis of the

database has been performed using a commercial identity recognition software. Then, for discriminating

siblings, we combined eigenfaces, SVM and a feature selection algorithm, obtaining a recognition accuracy

close to that of a human rating panel.

1 INTRODUCTION

Analyzing face images is a main research topic in

pattern analysis/image processing, since face is the

part of the body that supplies more information to

other humans and thus potentially to computer

systems. A traditional area of research is identity

recognition, but several other areas are emerging,

such as affective computing (Pantic and Rothkrantz,

2003), age estimation (Fu and Huang, 2010) and

analyzing attractiveness (Bottino and Laurentini,

2010).

In this paper we deal with the new problem of

analyzing facial kinship clues with objective pattern

analysis/ image processing techniques. The problem

of kin recognition has been much studied in human

sciences areas such as psychology and sociology.

According to the theory of inclusive fitness put

forward by Hamilton (1964), recognizing kinship

and also the degree of relatedness is very relevant to

social behavior of animals and humans. According

to Bailenson et al. (2009), facial similarity could

also affect voting decisions.

Detecting kinship from face images could have

applications in other areas, as historic and

genealogic research and forensic science.

Several human scientists have investigated the

ability of human raters of recognizing kinship from

human face images, and have attempted to locate the

facial features more significant as kinship clues. For

instance, Kaminski et al. (2009), using a data set of

face images shot in uncontrolled condition, reported

a correct classification of kinship of 66% for

siblings. For a comparison, the raters did not exceed

73% of kinship assessment when shown two images

of the same person. Maloney and Dal Martello

(2006), on the basis of a high quality data set of

children images, found that the upper part of the face

carries more kinship clues.

Very little research on recognizing kinship from

face images is reported in pattern analysis area. With

regard to facial similarity, a related problem, Holub

et al. (2007) described the construction of facial

similarity maps based on human ratings. The

relationship between human perception of similarity

and computer based scores has been investigated

also by Kalocsai et al. (1998), which found that the

Gabor filter model was closer to human judgment

than PCA.

The only research explicitly dealing with the

computer analysis of facial features for a set of

parent/child images has been presented in a paper by

Fang et al. (2010). A database containing 150 semi-

405

Bottino A., De Simone M., Laurentini A. and Vieira T. (2012).

A NEW PROBLEM IN FACE IMAGE ANALYSIS - Finding Kinship Clues for Siblings Pairs.

In Proceedings of the 1st International Conference on Pattern Recognition Applications and Methods, pages 405-410

DOI: 10.5220/0003771004050410

Copyright

c

SciTePress

frontal image pairs, collected from the Internet and

shot in uncontrolled lighting conditions, was

analyzed. 22 facial features and small windows

surrounding feature points were extracted according

to the Pictorial Structure Model. KNN and SVM

classifications provided accuracy of 70.67% and

68.60%, respectively. These data should be

compared with the average classification accuracy of

67.19% of a panel of human raters on the same

dataset.

In this paper we present a new investigation on

detecting couples of siblings with computer analysis

of facial similarity elements. To avoid problems due

to the heterogeneity of images collected on the

Internet, we have prepared a high quality database of

pairs of siblings, shot in exactly frontal and profile

positions, with and without expression and in the

same lighting conditions. The database, that will be

made available to other researchers, has been first

analyzed with a commercial face recognition

package. Then, for discriminating siblings, we used

PCA, SVM and a feature selection algorithm.

Finally, the results of the computer analysis have

been compared with the classification supplied by

human raters.

The content of the paper is as follows. In section

2 we describe the databases used. In section 3, we

report the results obtained using a commercial

identity recognizer software for discriminating pairs

of siblings. In section 4, the proposed method for

automatic siblings recognition is discussed. In

section 5 we compare the results of our classifier

with that obtained by human raters on the same

dataset. Finally, conclusions and future works are

presented in section 6.

2 DATABASES

Heterogeneous data sets, as those containing images

collected over the Internet, have been used several

times in face analysis. However, uncontrolled

imaging condition can introduce disturbing elements

which can seriously affect the result of the research.

In order to avoid these problems, we constructed for

our analysis a high quality database, called HQfaces,

containing images of 97 pairs of siblings. A subset

of 79 pairs contains profile images as well, and 56 of

them have also smiling frontal and profile pictures.

The images, with resolution 4256×2832, were shot

by a professional photographer with uniform

background and controlled lighting. The subjects are

voluntary students and workers of the Politecnico di

Torino and their siblings, in the age range between

13 and 50. All subjects are Caucasian and around

57% of them are male. As an example, some

cropped frontal expressionless images of siblings in

HQfaces are shown in Figure 1 (top row). Currently,

the DB is available on request contacting the

authors. In order to verify the advantages of using

high quality images, we also prepared a second

database, LQfaces, containing 98 pairs of siblings

found over the Internet, where most of the subjects

are celebrities. The low quality photographs have an

average size of 378x283, they are almost frontal, but

not always expressionless, and with various lighting

conditions. Profiles are not available in LQfaces.

The individuals are 45.5% male, 87.9% Caucasian,

9.1% Afro-descendants and 3% Asiatic. Examples

of siblings in LQfaces are shown in

Figure 1 (bottom

row).

Figure 1: Pairs of siblings from the HQfaces (top row) and

from the LQfaces (bottom row).

2.1 Databases Normalization

Images in the DBs have been normalized. This

process was first aimed at aligning them and

delimiting the same section for all frontal and profile

faces, including the most significant facial features.

Geometric normalization is based on the position of

two landmarks in the images. For frontal images,

those points are the eye centers. For profiles, the

repere points are Nasion (the depressed area directly

between the eyes, just superior to the bridge of the

nose) and Pogonion (the most anterior point on the

chin). Eye centers are detected using the Active

Shape Model (ASM) technique (Milborrow and

Nicolls, 2008) while the profile landmarks are

identified using an algorithm derived from that in

Bottino and Cumani (2008). Examples of extracted

keypoints are shown in Figure 2.

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

406

Figure 2: Expressionless/smiling frontal and profile, faces

from HQfaces (top row). Keypoints extracted from

corresponding photographs (second row).

The result is a normalized image enclosed within

a fixed area of interest (standard area) with the

selected landmarks aligned and coincident with two

predefined fixed points (reference positions). The

transformations involved are rotation and

translation, to align the line joining the landmarks

with the corresponding line in the standard area,

isotropic scaling to make the landmarks coincident,

and finally cropping. The dimension of the standard

area and the reference positions, in pixel units, for

frontal and profile HQfaces and frontal LQfaces are

shown in Table 1.

Table 1: Reference positions used for normalization.

Normalized

image

Standard area Reference

position 1

Reference

position 2

Frontal

HQ

1000x1000 Left eye:

(200,200)

Right eye:

(800,200)

Profile HQ

800x600 Nasion:

(400,100)

Pogonion:

(400,700)

Frontal

LQ

140x140 Left eye:

(20,20)

Right eye:

(120,20)

Finally, normalized images are converted to

grayscale and their histograms are equalized. For

profile images, the background is discarded using

simple chroma-keying techniques prior to colour and

intensity related processing, since part of it appears

in the standard area.

3 PREDICTING KINSHIP WITH A

COMMERCIAL FACE

RECOGNIZER

The concept of “similarity” of faces is much more

encompassing than the concept of “identity”.

However, we believe to be interesting attempting to

recognize pairs of siblings using an effective

commercial identity recognition software. For this

task, we selected the FaceVACS

®

Software

Development Kit (SDK), supplied by Cognitec

Systems (Cognitec, 2011). A previous version of this

software was tested in the Face Recognition Vendor

Test (FRVT) 2006, obtaining excellent results in

identity recognition (Phillips et al., 2010).

When the SDK analyses a pair of images, it

provides a score value s∈[0,1]. The higher the score,

the higher the probability they belong to the same

subject. Since siblings are likely to share facial

attributes, one can suppose that the score between

two siblings should be higher than the score between

two unrelated people.

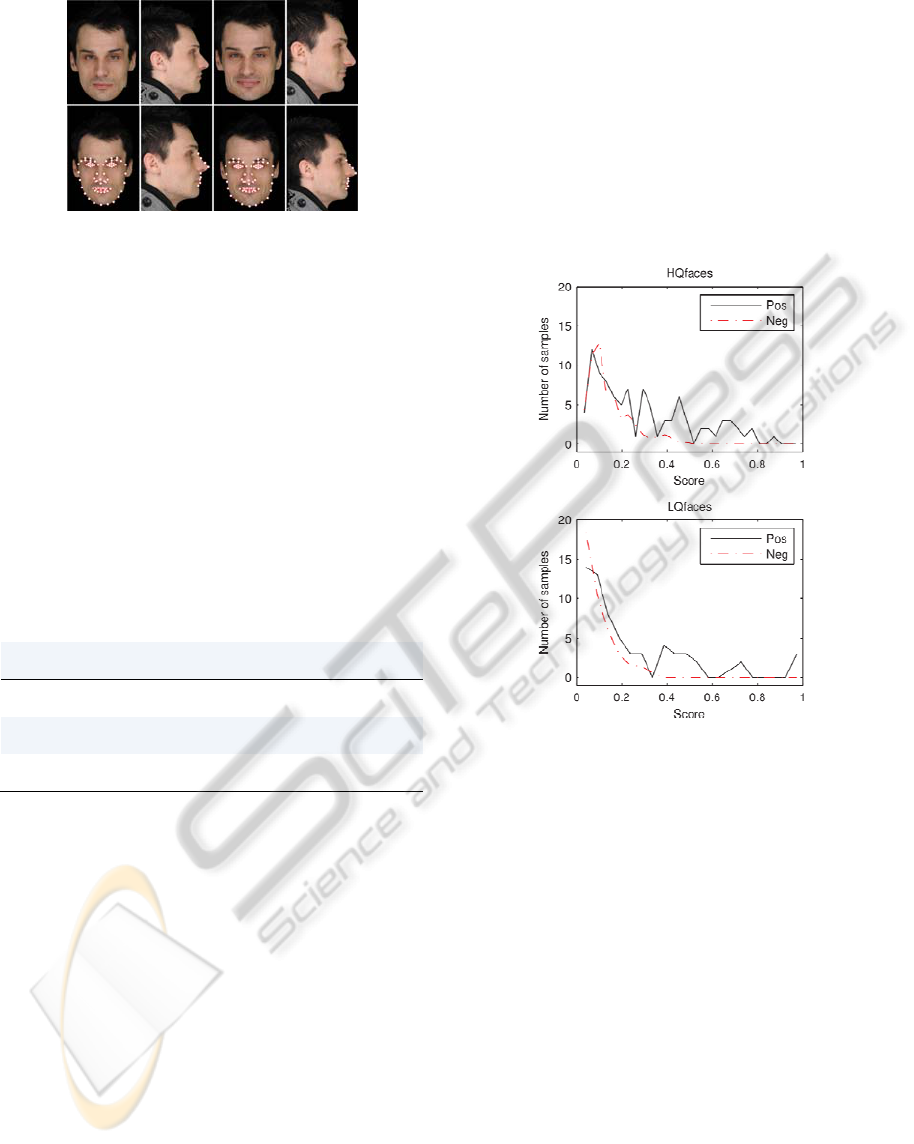

Figure 3: Scores histograms for HQfaces (top) and for

LQfaces (bottom).

Indeed, the FaceVACS can provide an initial

insight about the possibility of dealing with sibling

images. We experimented FaceVACS with all pairs

of siblings and with an equal number of randomly

selected pairs. The histograms of the scores are

shown in Figure 3, where Pos stands for pairs of

siblings, and Neg for random pairs of not siblings.

The figure shows that no negative pair scored higher

than 0.4 for LQfaces and 0.5 for HQfaces. In other

words, if the score of a couple of images is above

these thresholds, they are likely to belong to

siblings, otherwise, another algorithm must be used

to make the decision. Using a fixed threshold might

guarantee a null False Acceptance Ratio (FAR) but

strongly penalizes the False Rejection Ratio (FRR),

since there are many positive samples with scores

lower than this threshold. For instance, to obtain a

null FAR, the FRR is 78.12% for LQfaces, and

82.47% for HQfaces.

A NEW PROBLEM IN FACE IMAGE ANALYSIS - Finding Kinship Clues for Siblings Pairs

407

4 USING PCA, SVM AND

FEATURE SELECTION FOR

SIBLINGS VERIFICATION

Eigenfaces, first suggested by Sirovic and Kirby

(1987), have been extensively used for face image

analysis in reduced dimensionality spaces. The main

feature of the eigenfaces is that they capture both

facial texture and geometry. Since we do not know

yet which are the facial elements more significant

for detecting kinship clues for siblings, we decided

in this paper to perform a first analysis using this

popular catch-all technique for feature extraction.

The main datasets used for our experiments are

the following:

i. 196 frontal images of subjects from LQfaces

(98 siblings pairs);

ii. frontal expressionless images of 184 subjects

from HQfaces (92 siblings pairs);

iii. 158 individuals, represented by a set of a

frontal and a profile expressionless images from

HQfaces (79 siblings pairs);

iv. 112 individuals, represented by a set of

expressionless frontal and profile, smiling

frontal and profile images from HQfaces (56

siblings pairs).

The outline of the proposed automatic sibling

classification algorithm, irrespective of the dataset it

is applied to, is the following:

a) Extract the principal components vectors from

all the single images of the dataset and compute

the representative vector of each individual;

b) Compute the representative vector for pairs of

siblings and not siblings, where the

representative vector of a pair is given by the

absolute difference of the representative vectors

of its composing individuals;

c) Train a SVM classifier with the representative

vectors of a training set of pairs, composed by

an equal number of siblings and not siblings,

and apply the classifier to a test set. In order to

improve classification accuracy, a feature

selection (FS) algorithm has also been applied,

and the output of FaceVACS combined with

the SVM classification results.

In the following subsections, we will detail how

these steps have been implemented, and we will

describe and discuss the experimental results

obtained for the classification of image pairs.

4.1 Representative Vectors

For each dataset, we first compute its eigenfaces and

then the representative vector v of each face by

projecting it on these eigenfaces. For datasets

containing different type of images for each person,

(e.g. frontal and profile), eigenfaces are computed

separately for each type of image and the

representative vector of an individual is simply

obtained by concatenating the representative vectors

of each of its available images. In all datasets (and

for each type of image), the dimension

n of the

representative vector (or of the part of it related to an

image type) is the number of principal components

that account for 99% of the total variance of the data

(150 for frontal and 98 for profiles images in

Hqfaces, 119 for images in LQfaces). The

representative vector v

(ab)

of a pair of images I

(a)

and

I

(b)

or of a pair of image sets IS

(a)

and IS

(b)

in multi-

type datasets, is computed from their representative

vectors v

(a)

and v

(b)

as:

v

(ab)

= abs (v

(a)

- v

(b)

)

The representative vector of a pair is such that

v

(ab)

= v

(ba)

.

4.2 Building the Classifier

For each of the four main datasets (i-iv), 6 different

datasets of pairs have been composed, each

containing all the positive samples (pairs of siblings)

available and an equal number of randomly chosen

negative samples (pairs of not siblings). All pairs

datasets have been classified using Support Vector

Machines. Five-Fold cross-validation technique has

been used and a grid search has been done to

optimize parameters of the SVM radial basis kernel,

as suggested by Chang and Lin (2011). Other

classification techniques, as KNN and Classification

Trees, have been tested, but they provided worse

classification results, that we omit for brevity.

4.3 Improving the Classifier

For each pairs dataset, classification has been also

performed applying the Minimum Redundancy and

Maximum Relevance (mRMR) feature selection

(FS) algorithm. This algorithm has been shown to be

effective in building robust learning models,

increasing the classification accuracy under different

datasets and classification techniques (Peng, Long

and Ding, 2005).

The mRMR algorithm selects, for each dataset,

the more relevant features (eigenfaces) for

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

408

characterizing the classification variable by

assigning a score to each element of the

representative vector of an image pair. For each

main dataset, mRMR scores have been averaged

over its six data sets, and the 20 more effective (on

the average) eigenfaces have been used for final

classification. The number of chosen feature is the

one for which, on average, accuracies have a peak.

We have found that the eigenfaces more

significant for discriminating siblings are relatively

stable with respect to the pairs dataset used. To

support the thesis that the described technique is

sufficiently general to also work with other

databases of siblings, we performed further tests

subdividing each of the 6 pairs datasets into 4 not

intersecting subsets, composed by an equal number

of siblings and not siblings. For these subsets, we

obtained again similar eigenvectors from mRMR.

An example of the eigenfaces selected by mRMR is

shown in

Figure 4 for frontal HQfaces.

Figure 4: Best eigenfaces for dataset ii.

As further improvement, we combined SVM

classification, feature selection and the FaceVACS

SDK results. In section 2, we observed that

FaceVACS SDK was effective in detecting siblings

when scores are above a predefined threshold. Then

we corrected the SVM classification as follows. For

each sample classified as Not Siblings, we check the

FaceVACS SDK score, and, if greater than 0.5, we

change the classification to Siblings. This heuristic

provides slightly better, from 1% to 3.5%,

classification accuracies.

4.4 Classification Results

The classification results obtained in our

experiments are summarized in Table 2 and

organized by main dataset (i-iv) and by whether or

not feature selection has been applied (FS / No FS).

For each main dataset, results are reported as the

mean classification accuracy of its 6 pairs datasets.

Results obtained combining SVM and FaceVACS

are also reported.

The following remarks can be drawn:

As expected, accuracies provided for LQfaces

are significantly lower than those for HQfaces.

The more information is available, the higher is

the accuracy of the classifier. Profile and

expressions significantly improve classification

results, as it can be seen in Table 2, where

results for set iv outperform those for set iii,

which are in turn better than those for set ii.

FS always significantly increases accuracy, and

a further minor improvement is provided by

combining the output of our classifier and of

FaceVACS.

Table 2: Classification accuracies for sets i, ii and iii.

Set Feature Selection SVM SVM+SDK

i

No FS

52,39 55,75

FS

59,40 62,05

ii

No FS

61,27 63,19

FS

70,85 70,94

iii

No FS

62,77 65,78

FS

73,28 73,48

iv

No FS

69,68 70,94

FS

75,51 76,33

5 COMPARING AUTOMATIC

AND HUMAN

CLASSIFICATION

In this section we compare the ability of the

automatic system to correctly discriminate between

siblings and not siblings with that of human raters.

To this purpose, we presented on an Internet site the

pairs used for automatic classification, exception

made for the LQ data set, since it is composed

mainly by well known personages, and then likely to

produce biased ratings.

In particular for each main dataset ii-iv, only one

of the 6 pairs datasets was used to collect human

ratings. The pairs were presented in a random order,

and the raters were informed that some of the pairs

presented were siblings, but they were not told in

which percentage. In total, we collected 213.396

YES (the two individuals are siblings) or NO (they

are not) answers from 2.929 students and employees

of the Politecnico di Torino; an average of 444

answers for each pair were collected.

In order to perform a meaningful comparison

with the classifier, we transformed for each pair the

average ratings of the human panel (HP) into the

value that obtained the majority of votes. The

comparison is presented in Table 3, which shows

that the performance of our automatic classifier is

very close to that of the HP. Observe that the

classification accuracy is lower than that shown in

Table 2, which is an average value, while here the

classification results refers only to the same pairs

A NEW PROBLEM IN FACE IMAGE ANALYSIS - Finding Kinship Clues for Siblings Pairs

409

datasets rated by human observers.

Table 3: Comparison between automatic and human

classifications.

Set Feature

Selection

SVM SVM+SDK HP

ii

No FS 62.50 65.18

72.55

FS 65.18 68.75

iii

No FS 66.96 67.85

71.34

FS 67.86 70.53

iv

No FS 68.75 69.64

75.22

FS 71.43 74.10

6 SUMMARY AND FUTURE

WORK

In this paper, we have presented the first results of a

new research aimed at recognizing siblings pairs

with pattern analysis/image processing techniques.

To this purpose we have constructed a data base of

high quality images of pairs of siblings, also

containing profile and smiling images, which will be

used for further investigation on the subject. The

ability of human observers to discriminate pairs of

siblings and not siblings from images of this

database has been experimentally determined as

well. A first automatic analysis of the database has

been performed using a commercial identity

recognition package, which, although not aimed at

this specific problem, has provided some interesting

insight about the problem. Then, we experimented a

technique based on PCA features and a SVM

classifier. Combining them with a feature selection

technique, we obtained correct classification

percentages close to those of the human raters.

Although the PCA features are in principle database

dependent, the algorithm experimented appears

rather general, since it provides similar results using

different training and test sets extracted from our

database. The importance of using high quality

images for these studies has been proven by the

significantly lower percentages of correct

classification obtained for a low quality database,

collected over the Internet.

Future analysis of the database will experiment

other techniques likely to improve the percentage of

correct classification. Gabor filters and other feature

extraction techniques will be applied. In general, we

will focus on approaches able to enhance detailed

comparisons of particularly significant areas of

human faces which could be relevant to discriminate

pairs of siblings.

REFERENCES

Bailenson, J., Iyengar, S., N. Yee and Collins, N., 2009.

Facial similarity between voters and candidates causes

influence, Public Opinion Quarterly 72, pp. 935-961

Bottino, A. and Cumani, S., 2008. A fast and robust

method for the identification of face landmarks in

profile images, WSEAS Trans. on Computers, 7(8).

Bottino, A. and Laurentini A., 2010. The analysis of facial

beauty: an emerging area of research in pattern

analysis, LNCS Vol. 6111/2010, 425-435.

Chang, C.-C. and Lin, C.-J., 2011. LIBSVM: A library for

support vector machines, ACM Transactions on

Intelligent Systems and Technology 2: 27:1–27:27.

Cognitec, 2011. http://www.cognitec-systems.de/Face-

VACS-SDK.19.0.html.

Fang, R., Tang, K. D., Snavely, N. and Chen, T., 2010.

Towards computational models of kinship verification,

Proc. ICIP 2010: 1577-1580.

Fu, G. Y. and Huang, T., 2010. Age synthesis and

estimation via faces: A survey, IEEE Trans. PAMI 32:

1955 - 1976.

Hamilton, W. (1964). The genetic evolution of social

behaviour i and ii, Journal of Theor. Biology 7: 1–52.

Holub A., Liu, Y. and Perona, P., 2007. On constructing

facial similarity maps, IEEE Proc. CVPR 07, pp. 1–8.

Kalocsai, P., Zhao, W. and Elagin, E., 1998. Face

similarity space as perceived by humans and artificial

systems, IEEE Proc. FG’98, pp. 177–180.

Kaminski, G., Dridi, S., Graff, C. and Gentaz, E., 2009.

Human ability to detect kinship in stranger faces:

effects of the degree of relatedness, Proc. Biol. Sci.,

Vol. 276.

Martello, M. and Maloney, L., 2006. Where are kin

recognition signals in human face?, Journal of Vision

6: 1356–1366.

Milborrow, S. and Nicolls, F., 2008. Locating facial

features with an extended active shape model, Proc.

ECCV’08.

Pantic, M. and Rothkrantz, L. J. M., 2003. Toward an

affect-sensitive multimodal human-computer

interaction, Proceedings of the IEEE, pp. 1370–1390.

Peng, H., Long, F. and Ding, C., 2005. Feature selection

based on mutual information: criteria of max-

dependency, max-relevance, and min-redundancy,

IEEE Transactions PAMI 27: 1226–1238.

Phillips, P., Scrugg, T., Toole., A. O., Flinn, P., Bowyer,

K., Shott, C. and Sharpe, M., 2010. Frtv 2006 and ice

2006 large-scale experimental results, IEEE Trans.

PAMI, Vol. 32, pp. 831–846.

Sirovic, L. and Kirby, M., 1987. Low-dimensional

procedure for the characterization of human faces,

Journal of the Optical Society of America 4(3): 519–

524.

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

410