The NInA Framework
Using Gesture to Improve Interaction and Collaboration in Geographical Information Systems

Daniel Y. T. Chino¹, Luciana A. S. Romani², Letricia P. S. Avalhais¹, Willian D. Oliveira¹, Renata R. V. Gonçalves³, Caetano Traina Jr.¹ and Agma J. M. Traina¹

¹ Institute of Mathematics and Computer Science, University of São Paulo, São Carlos, Brazil
² Embrapa Agriculture Informatics, Campinas, Brazil
³ Cepagri-Unicamp, Campinas, Brazil

Keywords:

Natural User Interface (NUI), GIS, Kinect, NOAA-AVHRR images, Image-based Information Systems.

Abstract:

Geographical Information Systems (GIS) have expanded their functionalities to include the interactive display and exploration of spatiotemporal data on larger screens with several views. These systems maintain a traditional navigation method based on keyboard and mouse, interaction devices that are well suited neither for large screens nor for collaborative work. This paper aims at showing the applicability of new devices to fill the usability gap in the scenario of large-screen presentation, interaction and collaboration. New gesture-based devices have been proposed and adopted in games and medical applications, for example. This paper presents the NInA Framework, which allows the integration of a natural user interface (NUI) into GIS, with the advantage of being expandable as new demands are posed to those systems. The validation of our Kinect-based NInA framework was carried out through user experiments involving specialists and non-specialists in TerrainViewer, a geographical information system, as well as experts and non-experts in the Kinect technology. The results showed that a NUI approach demands a short learning time: with just a couple of interactions and instructions, the user is ready to go. Moreover, the users demonstrated greater satisfaction, leading to an improvement in their productivity.

1 INTRODUCTION

Advances in several knowledge areas, such as elec-

tronics, physics, computer science, mathematics, and

others have led to the increasing volume of data col-

lected and stored that are employed for analysis and

decision making. One of the areas that has benefited from those advances is sensor technology, which now obtains better images from satellites for Earth observation. Orbital sensors aboard polar and stationary

satellites capture images of the Earth’s surface every

day, generating a huge volume of data in different

spatial and temporal resolutions and in different light

spectra.

Data provided by these sensors can be used in dif-

ferent scientific areas, such as Geography, Meteorol-

ogy and more recently Agriculture. The large amount

of data and their higher resolution are better viewed

on large screens that allow specialists to spot fine details with less effort. Therefore, the interaction devices should offer easy and natural ways to display and manipulate the views. This is the main motivation of this paper: to present a framework that allows specialists to easily manipulate large amounts of data on big screens, with natural and intuitive interaction devices, as well as to do collaborative work and analysis.

This paper also presents a case study applied to

a remote sensing images system, which improves the

process of decision making in agrometeorological and

Geographical Information Systems (GIS). Specialists in remote sensing increasingly depend on computational systems to deal with satellite images. As a consequence, there is an increasing demand for technology (software and hardware) suitable to receive, distribute and manipulate data recorded by orbital sensors. However, the majority of such technology available today is intended for commercial use, such as Erdas¹, Idrisi² and ArcGIS³, which are not open source. These applications include functionalities to open images, extract values, visualize layers, create algebra of maps, and project images in 3D, among others.

Chino D. Y. T., Romani L. A. S., Avalhais L. P. S., Oliveira W. D., Gonçalves R. R. V., Traina Jr. C. and Traina A. J. M.
The NInA Framework - Using Gesture to Improve Interaction and Collaboration in Geographical Information Systems.
DOI: 10.5220/0004433700580066
In Proceedings of the 15th International Conference on Enterprise Information Systems (ICEIS-2013), pages 58-66
ISBN: 978-989-8565-61-7
Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

GIS have expanded their functionalities to include geovisualization, which studies the interactive display and exploration of spatiotemporal data (Stannus et al., 2011). Recently, GIS have become popular, especially due to software such as Google Earth. However, these systems still rely on the traditional navigation method based on keyboard and mouse. New gesture-based devices have recently been proposed and adopted in games and medical applications, for example, making the interaction much more practical and simple.

Basically, the interaction with a software tool is

provided by the keyboard and mouse, which is suit-

able for the majority of interface options. However,

layers in 3D view are not just for display, but are be-

ing used to describe surface aspects and can be ob-

served at oblique angles. Thus, images in 3D views

must be handled in a different manner from the tradi-

tional approach employed for 2D views.

Our focus is on how to take advantage of new

interaction possibilities provided by natural user in-

terfaces, such as gesture and body movement inter-

pretation to improve the user/computer interaction.

New devices, such as the Microsoft Kinect, have been

widely used in games to track the players’ move-

ments, enabling the game to become much more re-

alistic. Additionally, these games allow more than

one player acting together. Natural movements as-

sociated to the collaboration aspects can also strongly

contribute to improve the interaction in systems from

other areas such as geographical information systems

and satellite imagery systems.

In this context, this paper proposes an innovative interaction approach for satellite imagery systems based on gestures. Our framework uses the Kinect technology to improve the interaction when handling 3D visualizations. The validation of our Kinect-based framework was carried out through experiments including specialists and non-specialists in geographical information systems, as well as experts and non-experts in the Kinect device. The experimental results showed that, in the very first interactions, beginners not trained on this device found it more difficult to manipulate the interface, but after a while everyone reported this model of interaction to be much more intuitive and enjoyable than using the keyboard and mouse.

¹ http://www.erdas.com/
² http://www.clarklabs.org/
³ http://www.arcgis.com

This paper is organized as follows. In the next

section we present the background needed to follow

the ideas of this paper. The framework proposed is

detailed in Section 3. The user experiments are re-

ported in Section 4 and finally we conclude the work

in Section 5.

2 BACKGROUND

One of the most troublesome problems to develop a

computer system is the design of its user interface.

This is an important issue, because technologies with

complex interfaces can become useless. Interactive

graphical interfaces offer the advantage of allowing

users with different computer skills to easily interact

with the systems.

Researchers in Human Computer Interaction have

proposed innumerous methods and techniques in or-

der to contribute to the improvement of user interface

design processes. The majority of these methods con-

sider users as the center of the design process. In this

sense, several works have investigated different as-

pects related to human beings such as cognition, per-

ception, motor system, memory, among others.

All of those aspects must be considered, but one

of foremost importance is the time that users take to

complete a task, as shorter times imply more intuitive

designs. According to Fitts's law, the time to reach a target increases with the distance to the target and decreases with its size (Fitts, 1954). Thus, the designer must

know about the capacity of the human’s motor sys-

tem to accurately control movements in order to bet-

ter place the interface elements in the screen (Fitts,

1954). In addition, the learning curve should also be

considered when a new design is proposed to a sys-

tem, taking into account both the beginners and the

experts.
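As a rough illustration of Fitts's law, the sketch below uses the original 1954 formulation, MT = a + b * log2(2D / W), where D is the distance to the target and W is its width. The constants `a` and `b` and the function name are hypothetical placeholders: in practice they are fitted empirically for each input device, and this paper does not report them.

```python
import math


def fitts_movement_time(distance, width, a=0.1, b=0.15):
    """Predicted movement time in seconds (Fitts, 1954 formulation).

    a and b are illustrative, device-dependent constants (assumed values).
    """
    if distance <= 0 or width <= 0:
        raise ValueError("distance and width must be positive")
    index_of_difficulty = math.log2(2.0 * distance / width)  # in bits
    return a + b * index_of_difficulty


# A near, large target versus a far, small one (units only need to match).
near_large = fitts_movement_time(distance=100, width=50)
far_small = fitts_movement_time(distance=800, width=10)
```

Under this model the far, small target takes longer to reach, which is why frequently used interface elements are usually made large and placed close to the pointer's typical position.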

The time to perform a task and the learning curve

become more important when the user interface is

designed to be manipulated through new interaction

modalities such as touch and gesture. When users

employ their hands in three-dimensional spaces to in-

teract with the interface instead of moving a device

we call this type of interaction “gestural user inter-

face”. In gestural interfaces, the learning curve tends

to shrink, since the user reproduces the same move-

ments performed in the real world, thus they probably

spend less time learning new interaction metaphors

(Rizzo et al., 2005; Stannus et al., 2011). Although

gestures are a powerful mode of interaction, cultural


differences/conventions can make it difficult to inter-

act with such interfaces (Norman, 2010), leading to

an adaptation process.

Gestural user interfaces are called natural user

interfaces (NUI). They are defined as interfaces in

which the users are able to interact with computers

as they interact with the world (Jain et al., 2011). According to Malizia and Bellucci (Malizia and Bellucci, 2012),

the term natural in NUI is mainly used to differentiate

them from classical user interfaces, which use artifi-

cial devices whose operation has to be learned. How-

ever, the current gesture interfaces are based on a set

of gestures that also must be learned. According to

Norman (Norman, 2010), gestures are a valuable contribution as an interaction technique, but more research on their use in different systems is still required, so that we can better understand how to use them. Moreover, conventions for

standards should be developed, so that the same ges-

tures mean the same things in different systems. In

other words, researchers need to conduct research to

assess the feedback, guides, correction of errors and

other issues related to the use of gestures and the ben-

efits of this form of interaction.

Malizia and Bellucci (Malizia and Bellucci, 2012) affirm that there is still a long way to go for natural interfaces, since using natural gestures alone is not enough for computer systems. These interfaces remain artificial because the designer of the system still prescribes the set of gestures to be used.

The Microsoft Kinect is one of the recent devices that aim at supporting gestural interfaces. The Kinect sensor has been available to the community since November 2010. It contains a color sensor, an infrared emitter, an infrared depth sensor and a multi-array microphone. Combining these sensors, Kinect provides a stream of color images, depth images and audio. Through the infrared sensor, Kinect is also able to track people's actions, simultaneously recognizing up to six users. Of these, up to two users can be tracked in detail, yielding spatial information about the joints of the tracked users over time. The Kinect API⁴ enables the application to access and manipulate the data collected by the sensor (Microsoft, 2013).

Recently, several works have explored natural user interfaces in different contexts. Stannus et al. (Stannus et al., 2011) developed a gesture-based system for the Google Earth application. Boulos et al. (Boulos et al., 2011) applied depth sensors such as Microsoft Kinect and ASUS Xtion to control 3-D virtual globes such as Google Earth (including its Street View mode), Bing Maps 3D, and NASA World Wind. Richards-Rissetto et al. (Richards-Rissetto et al., 2012) explored Microsoft's Kinect to create a system to virtually navigate, through a prototype 3D GIS, a digitally reconstructed ancient Maya city.

⁴ Kinect for Windows. Available at http://www.microsoft.com/en-us/kinectforwindows. Accessed January 16, 2013.

3 OUR PROPOSED FRAMEWORK

In this section we present the NInA framework (Nat-

ural Interaction for Agrometeorological Data). This

framework provides a complete set of functionalities

for natural interactions on GIS. We also present a case

study named TerrainViewer, a tool to visualize remote

sensing images. After a brief introduction to TerrainViewer, the next sections highlight the NInA gesture module.

3.1 TerrainViewer

TerrainViewer is a tool capable of presenting agrome-

teorological data through three-dimensional rendered

models including altitude data. This tool combines topographic data with remote sensing images from different sources, such as AVHRR/NOAA⁵ and SRTM⁶ images. The rendered models assist agrometeorological specialists in finding relationships between remote

sensing data and their respective geographic position

and elevation. Thus, specialists can analyze huge

amounts of data in a short period of time and focus

on a region of interest.

The TerrainViewer tool was originally developed

using a simple and robust manipulation scheme,

based on just keyboard and mouse. With these devices it is possible to rotate, translate and scale the model, meeting the specialists' basic needs to perform image and data analyses. However, the sophisticated and highly detailed handling of 3D scenery called for a more flexible and natural interface. The answer to those needs was the NInA Framework.

3.2 The NInA Framework

Many studies regarding more natural computer in-

terfaces, as well as the increasing number of differ-

ent domain systems using these interfaces, show that

there are effective gains in allowing users to interact

with tools in the most intuitive way. Based on this

5

Advanced Very High Resolution Radiometer sensors

aboard National Oceanic and Atmospheric Administration

satellites.

6

NASA’s Shuttle Radar Topography Mission.

ICEIS2013-15thInternationalConferenceonEnterpriseInformationSystems

60

Input Stream

Video

Audio

TerrainViewer

NInA

Interaction

Recognition Layer

Streaming Processing

Core Layer

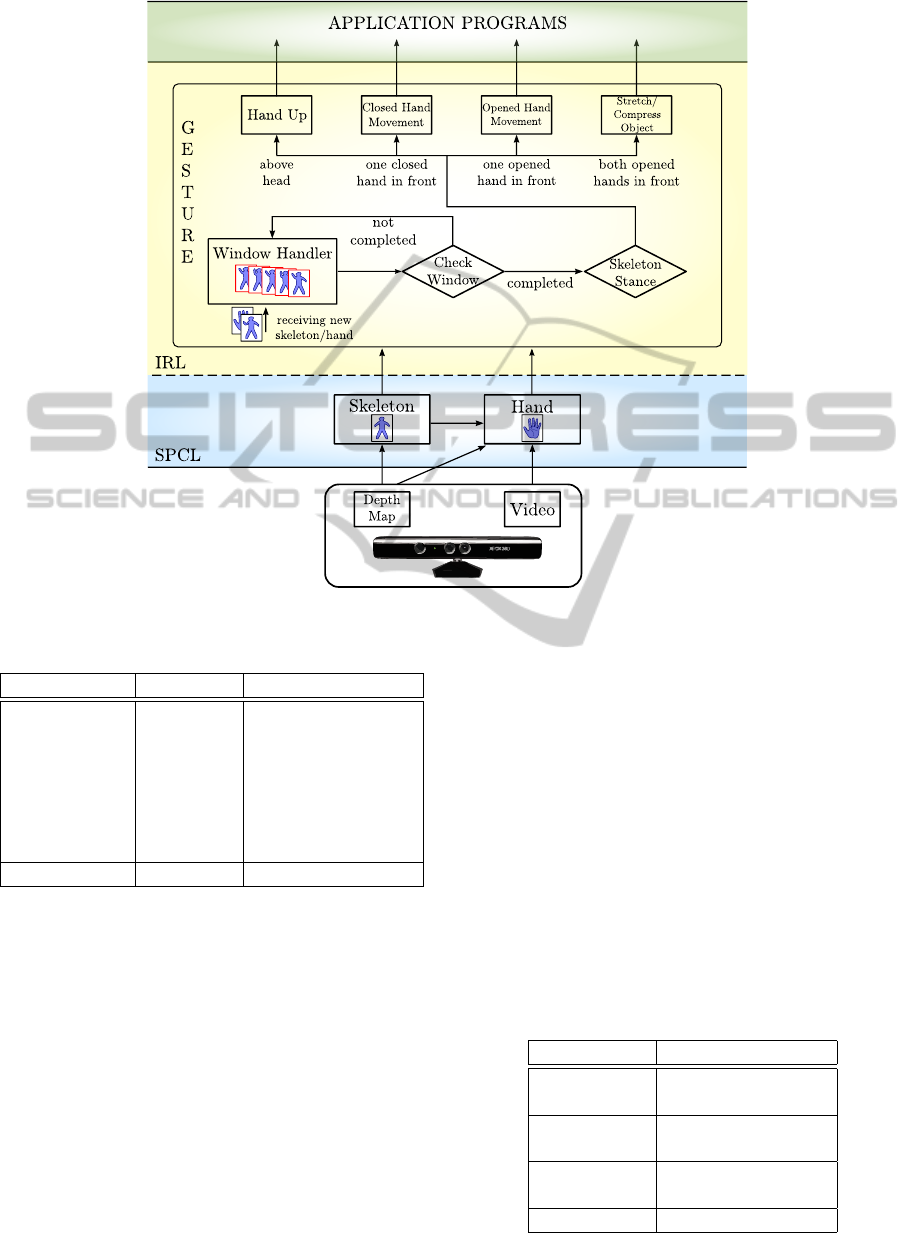

Figure 1: NInA framework architecture.

premise, the NInA Framework was developed aimed

at providing natural interactions for GIS.

The NInA framework is a novel approach that brings the advantages of natural interaction to the several visual analysis requirements of satellite imagery systems. It is structured as a two-layer architecture. The Streaming Processing Core Layer (SPCL) receives data from several devices. The data is processed and sent to the Interaction Recognition Layer (IRL), which is responsible for detecting the user actions. The actions generate events that are sent to the applications, which handle them by updating the visualizations. Figure 1 shows an overview of the NInA framework and its dataflow.

The SPCL receives data streams of video, audio

and depth maps that can be captured by several de-

vices, including video cameras, microphones, motion

sensing controllers and multi-touch gadgets. SPCL

was designed as a modular architecture. Each mod-

ule receives a set of raw input streams and outputs the

processed data stream required for the second layer.

For instance, the skeleton module receives raw depth

data and outputs a stream containing 3D positions of

body joints. In some cases, a module may receive the output of another module. A summary of all modules is shown in Table 1.
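The modular design described above, where a module consumes raw streams or the output of an earlier module, can be sketched as follows. This is only an illustrative structure: the `Module` class, the `run_layer` function, the stream names and the stub processing functions are all hypothetical and are not taken from the NInA implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Module:
    name: str
    inputs: List[str]    # names of the streams this module consumes
    outputs: List[str]   # names of the streams this module produces
    process: Callable[[Dict[str, object]], Dict[str, object]]


def run_layer(modules, raw_streams):
    """Run modules in order; each may read raw streams or earlier outputs."""
    streams = dict(raw_streams)
    for module in modules:
        missing = [s for s in module.inputs if s not in streams]
        if missing:
            raise KeyError(f"{module.name} is missing inputs: {missing}")
        selected = {key: streams[key] for key in module.inputs}
        streams.update(module.process(selected))
    return streams


# Stub modules: Depth turns IR signals into a depth matrix, and Skeleton
# consumes that depth matrix (the output of another module).
depth = Module("Depth", ["ir_signals"], ["depth_matrix"],
               lambda s: {"depth_matrix": s["ir_signals"]})
skeleton = Module("Skeleton", ["depth_matrix"], ["joints_3d"],
                  lambda s: {"joints_3d": {"head": (0.0, 0.0, 2.0)}})

result = run_layer([depth, skeleton], {"ir_signals": [[2.0]]})
```

Keeping each module's inputs and outputs explicit makes it straightforward to add a new module later, which matches the expandability goal stated for the framework.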

The first module, Audio, has been designed to pro-

cess input audio stream data, i.e., filtering noise and

detecting speech directly from the frequency domain.

In this module, text stream is also extracted for further

use on IRL. The Depth module is used to compute a

matrix with depth values from the signals provided by

the infrared (IR) sensor. In the Hand module, video

and depth data are used to segment user’s hands posi-

tion and contour. The segmentation is used to detect

the fingers’ positions and to determine the hand state

(opened/closed). With the depth values, the module

Skeleton is able to compute the 3D position of user’s

body joints.

The current version of NInA focuses on the Kinect device, because its range of sensors can provide a fairly complete natural interaction experience. The Kinect streams are received by the SPCL using the Microsoft Kinect for Windows API and drivers, and the SPCL modules process the streams aided by the Kinect API. However, the SPCL modules refine their outputs with a focus on GIS and produce other outputs that the Kinect API does not provide, such as finger positions, which are very useful for natural (gesture) interaction over maps.

Table 1: Requirements and outputs of the SPCL modules.

| Module | Input Data | Output Stream |
|---|---|---|
| Audio | Audio Stream | Audio; Text |
| Depth | IR signals | Depth Matrix |
| Hand | Depth Matrix; Video Stream | Fingers Position; Hand State (opened/closed) |
| Skeleton | Depth Matrix | 3D Position of Body Joints |

In the next step, the SPCL output is sent as an input stream to the IRL, where it is processed into an interactive control scheme. Table 2 shows the input and output events of each module in the IRL. In the current version there are two main modules in the IRL, Gesture and Voice Control. The Gesture module receives the output of the Hand and Skeleton modules of the previous layer and triggers the detected events. All detected events are processed as if the visualization target were a real object in front of the user. The Voice Control module receives only the Audio module output, and the triggered event carries the detected voice as audio and text to be used in the action decision.

In both modules we manipulate the input streams

using a time-based sliding window approach. The

capture rate is 30 frames per second and the window

size is 15 frames. For each window slide, the mod-

ules process their input and detect a specific action,

triggering their respective events.
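A minimal sketch of the sliding-window buffering described above is given below. The frame rate and window size come from the text; the class layout, the `detector` callback and the slide step of one frame are assumptions made for illustration only.

```python
from collections import deque

FRAME_RATE = 30    # frames per second (from the text)
WINDOW_SIZE = 15   # frames per window (from the text), i.e. 0.5 s of input


class WindowHandler:
    """Buffers frames and calls a detector once the window is full.

    The detector callback and the one-frame slide step are assumptions.
    """

    def __init__(self, detector, size=WINDOW_SIZE):
        self.frames = deque(maxlen=size)  # oldest frame is dropped first
        self.detector = detector

    def push(self, frame):
        """Add a frame; return the detector's result, or None while filling."""
        self.frames.append(frame)
        if len(self.frames) < self.frames.maxlen:
            return None
        return self.detector(list(self.frames))
```

With these values each window covers half a second of input, so a detector attached to the handler sees roughly the last 0.5 s of movement on every new frame.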

Figure 2: Processing streams and events in the Gesture module from NInA.

Table 2: Requirements and outputs of the IRL modules.

| Module | SPCL Output | Event |
|---|---|---|
| Gesture | Hand; Skeleton | Stretch/Compress Object; Closed Hand Movement; Opened Hand Movement; Hand Up |
| Voice Control | Audio | Voice Command |

Figure 2 presents the flowchart of how the Gesture module triggers events. The IRL receives video, depth and skeleton streams from the Kinect device, combining them to detect the main user by choosing the nearest one. With the main user chosen, the skeleton positions, the hand status and the finger positions are computed.
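Choosing the nearest user as the main user can be sketched as below. The data layout is an assumption: each skeleton is a dictionary with a `tracked` flag and a `position` tuple `(x, y, z)` whose `z` component is the distance from the sensor; the paper does not specify how NInA represents this information.

```python
def choose_main_user(skeletons):
    """Return the tracked skeleton closest to the sensor, or None.

    Assumes each skeleton is a dict with 'tracked' (bool) and
    'position' (x, y, z), where z is the distance from the sensor.
    """
    tracked = [s for s in skeletons if s.get("tracked")]
    if not tracked:
        return None
    return min(tracked, key=lambda s: s["position"][2])
```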

The Skeleton and Hand streams are sent as input to the Window Handler in the Gesture module of the IRL. The Window Handler buffers the stream until the sliding window is full. The window is then processed to generate the IRL output events; e.g., if the user moves both opened hands in front of his/her body, the Gesture module triggers a Stretch/Compress Object event.
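One plausible way to turn a full window into a Stretch/Compress Object event is to compare the distance between the hands at the start and at the end of the window. The sketch below is an assumption about how such a detector could work, not the authors' algorithm; the function name and the 0.10 m threshold are hypothetical.

```python
import math


def classify_two_hand_gesture(window, threshold=0.10):
    """Classify a window of hand positions as 'stretch', 'compress' or None.

    window: list of (left_xyz, right_xyz) tuples, oldest first.
    threshold: minimum change in hand separation, in meters (assumed value).
    """
    if len(window) < 2:
        return None
    first = math.dist(*window[0])   # hand separation at the window start
    last = math.dist(*window[-1])   # hand separation at the window end
    change = last - first
    if change > threshold:
        return "stretch"
    if change < -threshold:
        return "compress"
    return None
```

A threshold of this kind trades sensitivity against accidental triggers, so its value would need tuning with real users.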

3.3 TerrainViewer and NInA

Since the release of Kinect, TerrainViewer users have been enthusiastic about the new possibilities that this technology could provide, based on the latest results presented in the literature about NUI. The NInA framework has been developed with an incremental approach, based on the requests of specialists regarding the TerrainViewer functionalities. This section explains how NInA was built to meet the proposed requirements and these natural interactions.

The set of actions was defined by the specialists

and their natural gestures were chosen between the

proposed events for the NInA Framework. Table 3

presents the association between actions and gestures

that results in the NUI version of TerrainViewer.

Table 3: Gestures and the respectives actions on NInA

Framework + TerrainViewer.

Actions Gestures

Zoom In/Out

– Stretch/Compress

Object

Rotation

– Closed Hand

Movement

Translation

– Opened Hand

Movement

Initial State – Hand Up

ICEIS2013-15thInternationalConferenceonEnterpriseInformationSystems

62

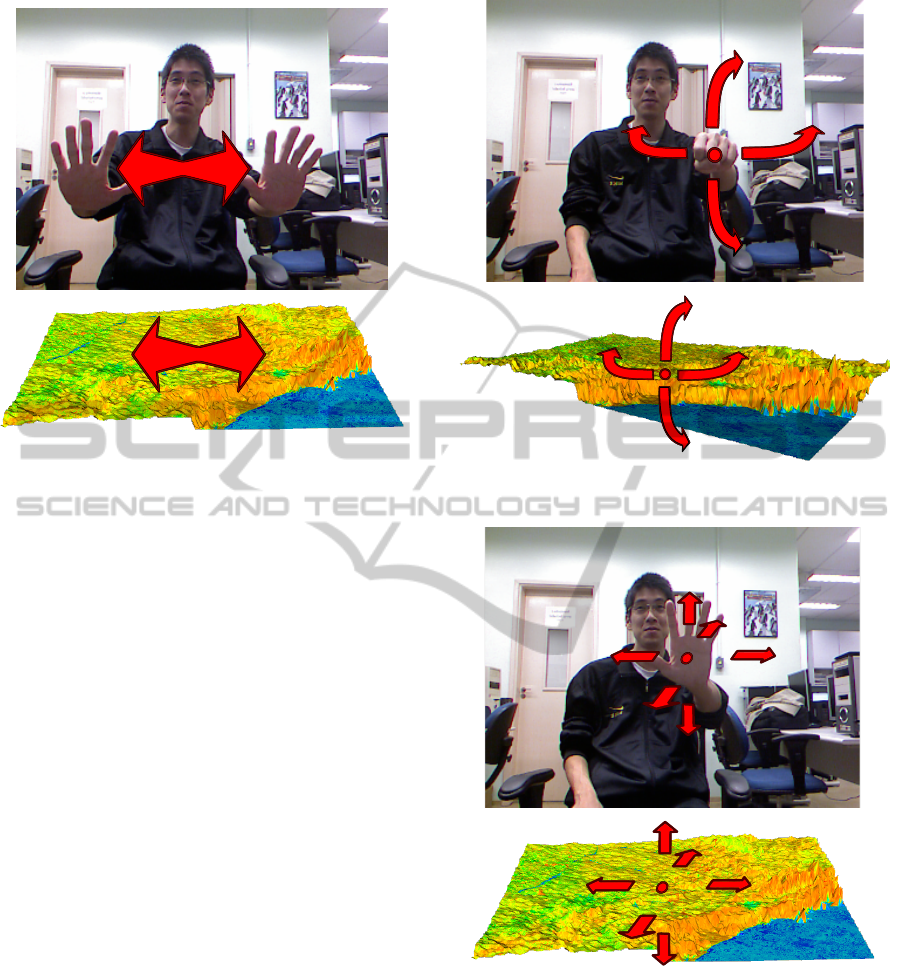

Figure 3: Scaling a map visualization using the NInA

Framework.

All gestures were implemented considering the rendered model as being shown in front of the user. For instance, if the user puts both hands in front of his/her body and moves one hand away from the other, which describes the stretching of an object, the Stretch Object gesture is triggered and the model view zooms in as the resulting action. As the model gets larger, the user feels like he/she is stretching the model, as shown in Figure 3. The zoom out action is analogous, and it is triggered by the Compress Object gesture, recognized when both hands move toward each other. Another gesture recognized by the NInA framework is when the user moves one closed hand in front of his/her body. With this gesture, the user feels like he/she is grabbing one part of the rendered model and changing its orientation, as shown in Figure 4. This results in the rotation action on the model being displayed. The same gesture executed with an opened hand results in the translation action, where the position of the model changes depending on the hand position (Figure 5). Finally, although it is not quite a natural interaction, by raising one or both hands above his/her head (Hand Up gesture) for at least two seconds the user resets the visualization to its initial state.
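The gesture-to-action association and the two-second Hand Up condition can be sketched as follows. The dictionary mirrors Table 3; the identifier names, the frame layout (vertical coordinates with y pointing up) and the way the hold is measured over consecutive frames are assumptions for illustration.

```python
# Mapping taken from Table 3; the identifier names are illustrative.
GESTURE_TO_ACTION = {
    "stretch_object": "zoom_in",
    "compress_object": "zoom_out",
    "closed_hand_movement": "rotation",
    "opened_hand_movement": "translation",
    "hand_up": "initial_state",
}

FRAME_RATE = 30     # frames per second (from the text)
HOLD_SECONDS = 2    # minimum Hand Up duration (from the text)


def hand_up_held(frames, frame_rate=FRAME_RATE, hold_seconds=HOLD_SECONDS):
    """True if at least one hand stayed above the head for the whole hold.

    frames: list of dicts with 'head_y', 'left_hand_y' and 'right_hand_y',
    oldest first, with y increasing upward (assumed layout).
    """
    needed = frame_rate * hold_seconds
    if len(frames) < needed:
        return False
    recent = frames[-needed:]
    return all(
        max(f["left_hand_y"], f["right_hand_y"]) > f["head_y"] for f in recent
    )
```

At 30 frames per second the hold corresponds to 60 consecutive frames, which is longer than one 15-frame window, so a detector of this kind would need to keep state across several windows.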

It is important to highlight that all the interaction movements provided by NInA are the ones a user makes when pointing to an image during discussion or analysis in collaborative work. Moreover, when the

user is standing up for a plenary presentation employ-

ing large screens, the use of this kind of interaction

makes the whole process natural, simple and easy.

Figure 4: Rotation of a map visualization using the NInA

Framework.

Figure 5: Translation of a map visualization using the NInA

Framework.

4 ANALYSIS WITH USERS

In order to evaluate the NInA framework incorpo-

rated in the TerrainViewer system, we conducted a

user experiment involving six subjects with different profiles. Although the number of users in the test was small, they were a representative sample of potential users. Furthermore, the GIS application involves a specific group of users, which makes it more difficult to find a significant number of testers to take part in the experiment. The main objectives of this user-based experiment were to assess:

1. facilities and difficulties of using Kinect-based in-

teraction;

2. users’ satisfaction;

3. robustness and performance of the system;

4. usability of the system.

The group of participants was composed of specialists in cartography, forest engineering, agricultural

engineering and computer engineering with different

knowledge about GIS (experts and non-experts). Ac-

cording to their levels of ability in the manipulation

of the Kinect device, the users were classified as:

a) beginners: two of them had never used it before;

b) intermediaries: two users had used it once or

twice;

c) upper-intermediary: one user had already used it

with games more than 10 hours;

d) expert: one of them had used it with games more

than 100 hours.

The user test was conducted in a usability laboratory, where the Kinect sensor was installed and the system was projected on a large screen, as shown in Figure 6. Although the best way of observing users would be in their own work environment, we were not able to install the Kinect sensor in the users' offices because of the distance required to perform the test (more than one meter from the device).

Figure 6: Devices used in the users’ test.

The experiment was registered by two cameras,

one positioned in front of the users and another by

their side to capture users’ facial expressions as well

as their gestures during each interaction (Figure 7).

Audio was also recorded to allow assessing expres-

sions, interjections and comments during the experi-

ence. Also, two observers took notes during the test.

Figure 7: User's interaction recorded by cameras, as well as the user's representation and the TerrainViewer system projected on the screen.

The whole test took around four hours and was

organized in four different steps:

1. Interaction through Kinect. First, the users were

invited to freely explore the system using NInA

for some minutes. After that, one observer

asked them to perform three tasks with the 3D

satellite image presented in the screen: rota-

tion/translation, zoom in/out and restart the map

to the initial position. No instruction was provided

to users during this step. In the end, one of the

observers explained all commands to perform the

suggested tasks. Then, all users redid the tasks.

2. Interaction using Mouse. After all users finished

the tests using Kinect with NInA, the observer in-

vited them to manipulate the TerrainViewer sys-

tem through mouse. First they could explore the

system without previously defined tasks. After a

few minutes, users should execute the same se-

quence of tasks performed with Kinect and NInA.

3. Answering a Survey. After their interaction with

the Kinect/NInA and keyboard/mouse, the users

reported their experience in a survey about

difficulties/facility met during the interaction,

satisfaction / frustration, and adoption of the

Kinect/NInA technology.

4. Brainstorm and Discussion. When all users had finished the test, they participated in a brainstorming session with two observers. They talked about their impressions, doubts and the commands to interact with the system.

As expected, during the exploratory phase, the beginners had more difficulty interacting with the Kinect sensor than the experts. They moved their arms and hands indiscriminately, with broad and quick movements. Moreover, they also moved their bodies around without facing the sensor. The hands were pointed to the screen instead of being directed to the sensor. Although they initially could not totally control the object, their expression was relaxed and they enjoyed the activity, regardless of whether they were making mistakes or not. They laughed and made funny comments, as when the map on the screen went out of focus and they joked saying “come on, come on!”.

Only one of them was frustrated when unable to zoom out the map, saying, “Why does it not listen to me? And now, what do I do?” (talking to the map that had disappeared from the screen with too much zoom). The less experienced users neither positioned their bodies in front of the sensor to be recognized nor looked at the windows with the skeleton of the body drawn. The focus of their attention was just on the display system.

In contrast, the experts positioned their hands

in front of their body, expecting to be recognized by

the sensor. They made slow and precise movements.

Their face demonstrated concentration attempting to

associate the system response to each gesture. In

general, they spoke little throughout the exploratory

phase. The focus of the experts was not just on the

system’s windows, but also on what the sensor was

picking up. Their looks went from one window to an-

other in the screen trying to capture every movement

as well as the system responses.

During the next phases, in which users should

perform a sequence of tasks, their attention became

greater. All of them tried to accomplish the tasks

in the best way, but they had difficulties with the

zoom task. All of them successfully rotated the object. However, only the most experienced managed to zoom in, and only two were able to zoom out the object. The last task, returning the map to its initial state, was not satisfactorily performed by any of the subjects. Several users commented on the difficulty with zooming out, attributing the problem to the distance from the sensor, the delay in response or the system calibration for closed hand movements.

After the first two steps (free exploration and sequence of tasks), the developer showed all subjects which movements were programmed for each activity. After the explanations, everyone wanted to repeat the script to make sure they

would be able to perform all tasks. Many of them

agreed that it seemed much easier after the tips, espe-

cially the command to return to the initial state, rais-

ing one or both hands. Several users commented that

this last task (back to initial state) was the most fun

and were raising their arms at various times laughing.

In the next step, the same sequence of tasks using the mouse was performed satisfactorily by all. Although the activity with the mouse seemed simple, the users showed no enthusiasm for it.

Apart from the cameras that captured users’ ges-

tures and speech, observers took notes and users an-

swered a questionnaire with the following questions:

• What is your academic background?
• What is your level of experience with Kinect?
• What was the difficulty level with the system employing Kinect/NInA?
• Is the use of the system intuitive?
• What was the level of satisfaction? Was the experience fun?
• Would you adopt this technology for interacting with a GIS?

The answers are summarized in Table 4. Accord-

ing to the answers, also confirmed by the recordings,

they had more initial difficulties by not knowing the

new interaction technology (Kinect). Although one of

the users is an expert in GIS, as this user was not fa-

miliar with the gestural interaction, the performance

of activities was impacted, which did not occur when

using the mouse, because of previous training in us-

ing it. However, after receiving instructions from the

system developer, the user interacted with the system

without difficulty. This indicates the need to present basic instructions on the screen (similar to those presented in various video games) and/or to train users before the interaction. However, it was confirmed that the learning time is very short, which is an advantage of this technique.

Despite some initial difficulties, only one user felt

that this form of interaction is non-intuitive. On the

other hand, all users stated that the hand movement

approaching or moving away to do the zoom com-

mand was the first movement that occurred to them.

Some of them commented that using the scroll wheel on the mouse to accomplish the same task is far less intuitive, for example.

The degree of satisfaction was high, as we could see from the expressions of each participant during the experiment. They smiled, made jokes, grimaced when they wondered or were unsure about what to do, made funny comments, and no one wanted to stop using the system. When asked about the possibility of adopting the technology in their work, all subjects were in favour of it.

Table 4: Information about the users and their answers to the questionnaire applied after the interaction through Kinect.

User   Profession                Kinect experience   Difficulty degree  Intuitive  Satisfaction  Adopt technology?
User1  Cartography               beginner            high               yes        high          yes
User2  Forest engineering        intermediate        medium             yes        high          yes
User3  Agricultural engineering  upper-intermediate  low                yes        high          yes
User4  Agricultural engineering  beginner            high               yes        high          yes
User5  Computer engineering      intermediate        medium             no         high          yes
User6  Computer engineering      expert              low                yes        high          yes

During the brainstorming session, each user had the opportunity to report their experiences and feelings in addition to making suggestions. They commented on the difficulty of making subtle movements to zoom and asked whether this command could be calibrated to be less rigid. Several of the subjects suggested different movements to rotate the object instead of making a fist. They requested a gesture to stop interacting, with the possibility of resuming later. They commented that it would be very interesting to use the tool collaboratively, which is hard to do with the mouse. Furthermore, they suggested that a system with this type of interaction would be appropriate for teaching and presentations, since the gestures are natural movements that they usually make.
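One way to address the request for a less rigid zoom command is to expose the sensitivity of the gesture as tunable parameters. The sketch below is only an illustration under stated assumptions: the names `ZoomCalibration` and `zoom_step`, the default values, and the convention that moving the hand toward the sensor zooms in are all hypothetical and do not describe how NInA implements zooming.

```python
from dataclasses import dataclass


@dataclass
class ZoomCalibration:
    """Hypothetical tuning parameters; none of these names come from NInA."""
    dead_zone_m: float = 0.03  # hand travel (metres) ignored as jitter
    gain: float = 2.0          # zoom change per metre of effective travel
    max_step: float = 0.25     # cap on the zoom change for a single frame


def zoom_step(prev_depth_m: float, depth_m: float, cal: ZoomCalibration) -> float:
    """Return a signed zoom change for one frame.

    Positive values zoom in (assumed: hand moved toward the sensor),
    negative values zoom out.
    """
    travel = prev_depth_m - depth_m  # > 0 when the hand approaches the sensor
    if abs(travel) <= cal.dead_zone_m:
        return 0.0  # movement too small: treat it as noise
    # Subtract the dead zone so the response grows smoothly from zero.
    effective = abs(travel) - cal.dead_zone_m
    step = min(cal.gain * effective, cal.max_step)
    return step if travel > 0 else -step
```

Under these assumptions, a larger `dead_zone_m` makes the command more tolerant of unintended hand motion, while a smaller `gain` makes each movement produce a gentler zoom, so both could be adjusted per user or per display size.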

5 CONCLUSIONS

This paper presented the NInA framework, which provides resources to easily add a natural user interface (NUI) based on the Kinect technology to geographical information systems. The modules of NInA make it a simple but powerful basis for building natural gesture-based interfaces, with the advantages of being expandable as new needs arise in the system and easily portable to other devices.

The need for NUI has become more urgent as the volume of data to be analyzed, as well as the size of the maps and presentations, grows, demanding very large displays to allow comfortable interaction. The mouse and keyboard are therefore no longer convenient, since they limit the users' movements. In this scenario, NInA is a natural choice, since the user is not limited by holding a device. Moreover, when the analysis involves collaborative work, the other analysts easily recognize the user's movements and can participate as a group.

The analyses performed with the users in the case study with the TerrainViewer system showed that the learning time is quite short: after just a couple of interactions and instructions, the user is ready to go. Beyond that, the users felt greater satisfaction when employing the Kinect/NInA framework to interact with the system, which can lead to improved productivity.

ACKNOWLEDGEMENTS

The authors are grateful for the financial support granted by CNPq, CAPES, FAPESP, Microsoft Research, Embrapa Agricultural Informatics, and Cepagri/Unicamp, and to Agritempo for the data.


ICEIS 2013 - 15th International Conference on Enterprise Information Systems