Hand Recognition using Texture Histograms

A Proposed Technique for Image Acquisition and Recognition of the Human Palm

Luiz Antônio Pereira Neves, Dionei José Müller, Fellipe Alexandre,

Pedro Machado Guillen Trevisani, Pedro Santos Brandi and Rafael Junqueira

Federal University of Paraná - UFPR, Department of Technological and Professional Education, Curitiba, Paraná, Brazil

Keywords:

Image Processing, Biometrics, Local Binary Pattern, Chi-square Comparison, Texture Histograms.

Abstract:

This paper presents a technique for biometric identification based on image acquisition of the palm of the human hand. A computer system called Palm Print Authentication System (PPAS) was implemented using the proposed technique. It identifies the human palm by processing image data through texture identification, employing the Local Binary Pattern (LBP) method, and through geometrical data. The proposed methodology has four steps: image acquisition, image pre-processing (normalization), segmentation for biometric extraction, and hand recognition. The technique was tested on 50 different images, and the tests showed promising results, indicating that the approach is not only robust but also quite efficient.

1 INTRODUCTION

Biometrics refers to individual recognition based on biological and behavioral traits. Recently, this technology has been widely adopted. Due to market expansion and to private and corporate investment, research in this technology no longer relies on government support, and its costs have decreased.

Today, research presents several interesting approaches to biometric identification using the human hand. For instance, (Khan and Khan, 2009) proposed user identification and authentication by mapping blood vessels captured with infrared techniques. Similarly, other studies employ hand geometry, applying geometric calculations to the hand shape as a source of biometric data. Additionally, several kinds of image acquisition devices, such as scanners, digital cameras and CCD cameras, are available today, simplifying the adoption of biometric identification from hand images. However, studies on palm prints make it clear that hand position is a crucial factor when acquiring an image suitable for biometric identification. For example, (Li et al., 2009) propose extracting the region of interest (ROI) from the image, showing that geometric calculation makes it possible to extract data from the palm regardless of finger placement or hand rotation.

In the same way, (Bakina, 2011) implements an application that uses a webcam for image acquisition and accepts images with the fingers together or apart, with no restriction on hand rotation. It uses key points as biometric data, extracting them from the fingertips to the end of the wrist, in a circular pattern on a binary image. This technique reaches 99% accuracy, but with a high false acceptance rate (FAR); the FAR drops to 0% when the system becomes bimodal by adding voice features.

In the study conducted by (Jemma and Hammami, 2011), the image is first converted to binary format and the hand contour is used as a reference. A Euclidean method is then employed to extract the ROI. The technique divides the palm into sub-regions to find those with the most discontinuous data. To extract the features, the LBP method is applied to the identified regions, which has shown satisfactory identification performance and saves storage space.

Furthermore, in the system proposed by (Ribarc, 2005), a scanner captures the hand image with no restriction on its position, making the system easier for its users. This implementation also increases the system's efficiency by turning it into a multimodal system that employs both the palm and the fingers as biometric data.

In addition, according to (P.K. and Swamy, 2010), single-mode systems for recognizing people are more cost-effective but less efficient. Multimodal systems (Ribarc, 2005) therefore have a higher accuracy rate, reaching up to 100%. However, (Kumar and Shen, 2003), using only the palm as biometric data, reached a success rate of 98.67%. Another notable study, by (Wu et al., 2004), proposes a method that performs no biometric recognition but classifies the lines of the palm, obtaining 96.03% accuracy.

Pereira Neves L., Müller D., Alexandre F., Trevisani P., Brandi P. and Junqueira R.

Hand Recognition using Texture Histograms - A Proposed Technique for Image Acquisition and Recognition of the Human Palm.

DOI: 10.5220/0004692601800185

In Proceedings of the 9th International Conference on Computer Vision Theory and Applications (VISAPP-2014), pages 180-185

ISBN: 978-989-758-009-3

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Likewise, this work proposes a tool for extracting biometric data from the palm of the hand, using image processing techniques and a protocol for acquiring the images. This protocol is explained in Section 2. Sections 3 and 4 then present the methodology, with its techniques, and the results obtained from the tests. Finally, conclusions are presented in Section 5.

2 IMAGE ACQUIRING PROTOCOL

To validate the proposed algorithms and techniques, this work employed a hand-made device designed to acquire images of hands.

Figure 1: Device diagram with camera and lamps.

Furthermore, the proposed algorithms were implemented in the C programming language using the Open Source Computer Vision Library (OPENCV, 2011), an open source computer vision and machine learning software library built to provide infrastructure for image processing applications. Additionally, the designed device accessed a camera connected to a personal computer.

The device case was assembled from medium-density fiberboard (MDF), an engineered wood product widely used in furniture. It consisted of a 240 mm x 440 mm box, 320 mm deep, containing a 5-megapixel webcam and two compact fluorescent lamps of 5 and 7 watts, which provide a stable light source and avoid shadows and variations in brightness and contrast. To capture an image, the hand must be placed on the delimited area on the upper side of the device (see Figure 1). A bitmap (.bmp) image is then captured in RGB with a resolution of 640 x 480 pixels.

The following rules were defined for capturing images:

a) The distance between the camera and the hand must not change, as empirical tests show that it affects texture recognition;

b) The device must be closed, so that its own light sources provide the illumination and external light does not cause variance in the image;

c) The camera needs predefined settings to avoid changes due to automatic adjustment;

d) Since every camera has distortion, it is fundamental to use a reference (such as the delimited area on the top of the device); and

e) The captured image must be oriented vertically and must show part of the fingers, which must be apart, as shown in Figure 2.

Figure 2: Original image obtained from the device.

3 METHODOLOGY

The methodology consists of the following steps. First, the image acquisition step captures the image through the device. Then, pre-processing techniques obtain the hand boundaries using mathematical morphology.

Afterwards, the reference points are located and used to normalize the image. Geometric data is then extracted, and the hand texture is read from the region of interest. Finally, the textures are compared by comparing their texture histograms.


3.1 Image Acquisition

To ensure the efficacy of the implemented algorithms, the image must be acquired following the image acquiring protocol presented in Section 2. The device took 0.001 second to capture each image.

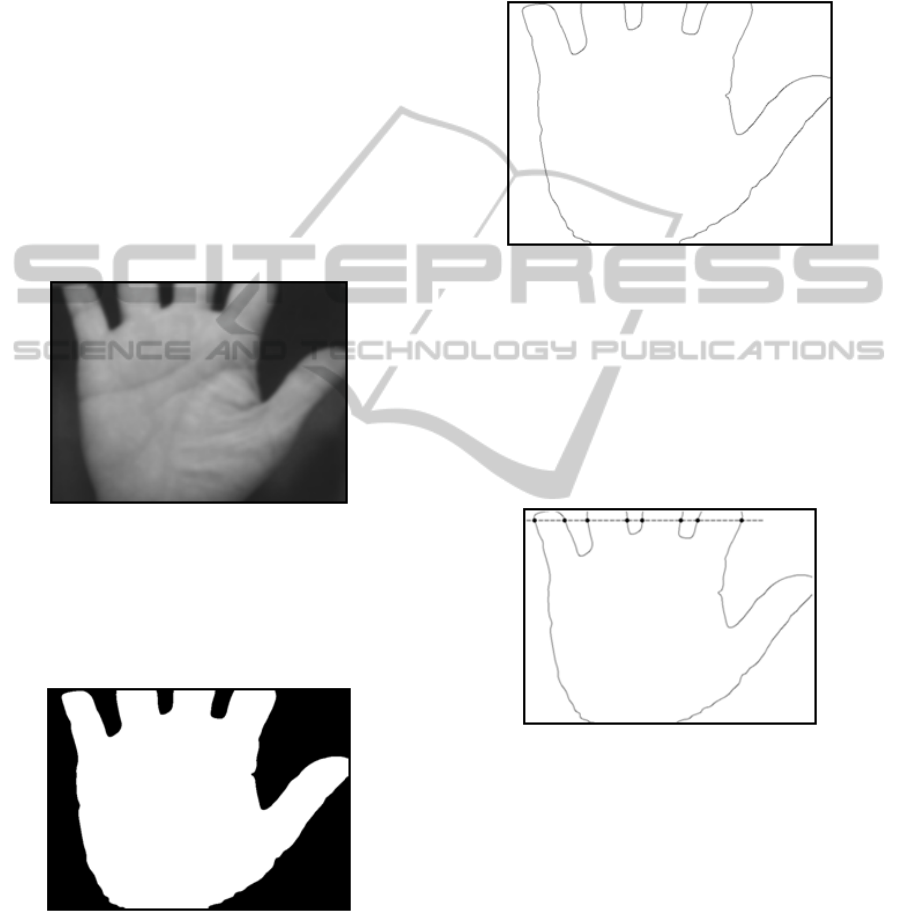

3.2 Pre-processing Techniques

This step presents methods to obtain the hand boundaries, which are used to locate the reference points, to normalize the image and to acquire geometric data. First, the image is converted to grey scale. Then a 19x19 Gaussian smoothing filter (Figure 3) is applied to improve the outline definition during binarization and to reduce noise that could prevent contour identification.

Figure 3: Grey scale image with Gaussian filter.
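The grey scale conversion and the 19x19 Gaussian smoothing can be sketched as follows. This is a minimal, dependency-free Python illustration, not the C/OpenCV code used in this work. The luminance weights, the border handling (edge replication) and the value of sigma are assumptions, since the text does not state them; the default sigma follows the rule OpenCV documents for deriving sigma from the kernel size.

```python
import math

def to_gray(rgb):
    """Convert rows of (R, G, B) tuples to luminance (assumed 0.299/0.587/0.114 weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]

def gaussian_kernel(ksize=19, sigma=None):
    """1-D Gaussian kernel of odd size, normalized to sum to 1."""
    if sigma is None:
        # Assumption: sigma derived from the kernel size, as OpenCV does by default.
        sigma = 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8
    half = ksize // 2
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_1d(line, kernel):
    """Convolve one line with the kernel, replicating the border pixels."""
    half = len(kernel) // 2
    n = len(line)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - half, 0), n - 1)
            acc += w * line[j]
        out.append(acc)
    return out

def gaussian_blur(gray, ksize=19, sigma=None):
    """Separable Gaussian blur: filter every row, then every column."""
    kernel = gaussian_kernel(ksize, sigma)
    rows = [blur_1d(row, kernel) for row in gray]
    cols = [blur_1d(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]
```

Filtering rows and then columns gives the same result as a full 19x19 convolution, because the Gaussian kernel is separable, and needs far fewer multiplications per pixel.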

Next, the image is converted to a binary image using Otsu’s method (Otsu, 1979), so that active pixels (white) represent the palm while inactive pixels (black) represent the background, as shown in Figure 4.

Figure 4: Otsu image.
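For illustration, Otsu's threshold selection can be sketched in plain Python as below. The function names are hypothetical, and the sketch assumes an 8-bit grey scale image in which the palm is brighter than the background; it chooses the threshold that maximizes the between-class variance of the grey-level histogram.

```python
def otsu_threshold(gray):
    """Return the grey level t that maximizes the between-class variance."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[min(255, max(0, int(round(v))))] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels with level <= t
    sum0 = 0    # sum of the levels of those pixels
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, the variance is undefined
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var = w0 * w1 * (mu0 - mu1) ** 2
        if var > best_var:
            best_t, best_var = t, var
    return best_t

def binarize(gray, t):
    """Active pixels (1) are brighter than the threshold, inactive pixels (0) are not."""
    return [[1 if v > t else 0 for v in row] for row in gray]
```

Calling `binarize(gray, otsu_threshold(gray))` on the smoothed image yields a mask of the kind shown in Figure 4.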

In Figure 4, the image edges are well defined; this effect is attributed to the low-pass filter applied beforehand. Next, mathematical morphology operations (Gonzalez and Woods, 2007) (Facon, 1996) are used to identify the image contour. First, a morphological dilation is applied to the image using a 3x3 cross-shaped structuring element. Second, a morphological erosion is applied, also using a 3x3 cross-shaped structuring element. Finally, to detect the contour, an XOR logical operator is applied between the resulting images, as shown in Figure 5.

Figure 5: Palm contour.
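The contour extraction can be sketched as follows, assuming that both the dilation and the erosion are applied to the same binary image and that pixels outside the image count as background. These two points are assumptions of this sketch, as are the function names.

```python
# 3x3 cross-shaped structuring element: the centre and its 4 direct neighbours.
CROSS = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]

def _get(img, y, x):
    """Pixel value, treating everything outside the image as background (0)."""
    if 0 <= y < len(img) and 0 <= x < len(img[0]):
        return img[y][x]
    return 0

def dilate(img):
    """A pixel becomes active if any pixel under the cross is active."""
    return [[1 if any(_get(img, y + dy, x + dx) for dy, dx in CROSS) else 0
             for x in range(len(img[0]))] for y in range(len(img))]

def erode(img):
    """A pixel stays active only if every pixel under the cross is active."""
    return [[1 if all(_get(img, y + dy, x + dx) for dy, dx in CROSS) else 0
             for x in range(len(img[0]))] for y in range(len(img))]

def contour(img):
    """XOR between the dilated and the eroded image keeps only the boundary band."""
    d, e = dilate(img), erode(img)
    return [[d[y][x] ^ e[y][x] for x in range(len(img[0]))] for y in range(len(img))]
```

With this element the resulting contour is a band about two pixels thick, one pixel outside and one pixel inside the original boundary.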

3.3 Reference Point Technique

This section presents a technique to identify statistically stable reference points for image normalization. An algorithm scans the hand contour image from the top until it finds the first line containing 8 intersection points with the fingers, as shown in Figure 6.

Figure 6: Identify points intersecting with fingers.

Next, it is necessary to identify the midpoints

(Ma, Mb, Mc) between the fingers, starting from the

initial line as reference as shown in Figure 7.
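The scan for the first line with 8 intersection points and the computation of the midpoints can be sketched as below. The sketch assumes a binary contour image given as rows of 0/1 values, counts each run of consecutive contour pixels in a row as one intersection, and assumes the 8 intersections are the left and right edges of four fingers ordered from left to right. The function names are hypothetical.

```python
def crossings(row):
    """Centre x of each run of consecutive contour pixels in one image row."""
    centres, start = [], None
    for x, v in enumerate(list(row) + [0]):  # trailing 0 closes a run at the border
        if v and start is None:
            start = x
        elif not v and start is not None:
            centres.append((start + x - 1) / 2.0)
            start = None
    return centres

def find_scan_line(contour_img, wanted=8):
    """First row from the top with exactly `wanted` intersections, or None."""
    for y, row in enumerate(contour_img):
        xs = crossings(row)
        if len(xs) == wanted:
            return y, xs
    return None

def finger_midpoints(y, xs):
    """Midpoints Ma, Mb, Mc of the three gaps between the four fingers."""
    return [((xs[i] + xs[i + 1]) / 2.0, y) for i in (1, 3, 5)]
```

Each midpoint lies on the scan line, halfway between the right edge of one finger and the left edge of the next.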

Afterwards, the reference points are found at the longest distance from the midpoints (Ma, Mb, Mc), as illustrated in Figure 8.

Figure 7: Midpoints identification.

Figure 8: Reference point identification.

However, this solution needs closer examination, since the reference points (A, B, C) do not always exist and can vary depending on the hand position or characteristics. In addition, stabilizing the reference points using only the longest distance criterion (Ojala et al., 2002) was not efficient in this work. Of the 50 tested images, 23 showed considerable variation in the position of these points. To circumvent this issue, an algorithm was used that measures the longest distance from the midpoint to the finger curve. It uses a 1x3 mask that scans the finger curve, moving down 1 pixel for each distance measurement, and stops when no contour pixel is detected in the mask. This ensures the stability of points (A, B, C). The mask action is shown in Figure 9.

Figure 9: Identification from point to curve using a mask.
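One possible reading of the mask procedure is sketched below; it is an interpretation for illustration, not the implementation used in this work. It assumes the trace starts at a contour pixel on the scan line next to the midpoint, that the 1x3 mask is placed on the next row and centred on the current column, and that ties inside the mask are resolved towards the midpoint column. The names `trace_reference_point` and `start_x` are hypothetical.

```python
import math

def trace_reference_point(contour_img, midpoint, start_x):
    """Follow the finger curve downwards and return the traced contour point
    that lies farthest from the midpoint."""
    mx, my = midpoint
    x, y = start_x, int(my)
    height, width = len(contour_img), len(contour_img[0])
    best, best_dist = (x, y), math.hypot(x - mx, y - my)
    while y + 1 < height:
        # 1x3 mask on the next row, centred on the current column.
        hits = [cx for cx in (x - 1, x, x + 1)
                if 0 <= cx < width and contour_img[y + 1][cx]]
        if not hits:
            break  # no contour pixel under the mask: the curve has ended
        # Assumption: when several pixels are active, move towards the midpoint.
        x = min(hits, key=lambda cx: abs(cx - mx))
        y += 1
        dist = math.hypot(x - mx, y - my)
        if dist > best_dist:
            best, best_dist = (x, y), dist
    return best
```

Because the trace moves exactly one row per measurement, it ends at the bottom of the valley between two fingers, where the row below contains no contour pixel under the mask.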

This method was stable in all images tested. It is also worth mentioning that the algorithm remained effective in the 10% of the images in which the photographed hand wore a ring.

3.4 Image Normalization Technique

Once the reference points are identified, the image

normalization can be performed. After image normal-

ization, it is possible to extract the geometric data.

To perform normalization, a right triangle is formed from points A, C and D. The lengths of its segments CD and AD determine the angle (a) as the arctangent of the ratio between CD and AD. Then the grey scale image (without the Gaussian filter) is inverse-rotated about point A to be normalized, as shown in Figure 10.

Figure 10: Identification of the inclination skew.

Figure 11: Normalized image.
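The angle computation and the inverse rotation about point A can be sketched as follows. The sketch assumes that D is the right-angle corner, with AD horizontal and CD vertical, and it only rotates individual points; rotating the whole image applies the same mapping to every pixel. `atan2` is used instead of a plain arctangent of the ratio so that the sign of the skew is kept and a zero-length AD does not cause a division by zero. The function names are hypothetical.

```python
import math

def skew_angle(a, c):
    """Angle of segment AC relative to the horizontal, from the legs AD and CD."""
    ad = c[0] - a[0]  # horizontal leg AD
    cd = c[1] - a[1]  # vertical leg CD
    return math.atan2(cd, ad)

def rotate_about(p, centre, angle):
    """Rotate point p around `centre` by `angle` radians."""
    dx, dy = p[0] - centre[0], p[1] - centre[1]
    cos_t, sin_t = math.cos(angle), math.sin(angle)
    return (centre[0] + dx * cos_t - dy * sin_t,
            centre[1] + dx * sin_t + dy * cos_t)

def normalize_point(p, a, c):
    """Inverse-rotate p about A so that segment AC becomes horizontal."""
    return rotate_about(p, a, -skew_angle(a, c))
```

After normalization, point C has the same vertical coordinate as point A, and the length of AC is unchanged.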

Next, the algorithm used to find the reference points must be re-applied. It is important to note that if point A varies by more than 3 pixels across images from the same source, the procedure will not work properly. Figure 11 shows the normalized image.

3.5 Geometric Data Acquisition Technique

This section presents statistically stable geometric

data, which can be used in a biometric recognition

system.

After acquiring the contour from the normalized image, three stable geometric measures are available: the hand width (segment EF), the distance from point A to point C, and the distance from point B to segment AC. Defining X as half the length of segment AC, the line EF must have a length of X pixels and be projected below segment AC (see Figure 12).
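Two of these measures, together with X, follow directly from the coordinates of the reference points, as sketched below. The sketch assumes that B lies between A and C horizontally, so that the distance from B to segment AC equals the perpendicular distance to the line through A and C. The hand width EF is omitted because it also depends on the contour. The function names and dictionary keys are hypothetical.

```python
import math

def distance(p, q):
    """Euclidean distance between two points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])

def point_to_line_distance(b, a, c):
    """Perpendicular distance from B to the line through A and C."""
    cross = (c[0] - a[0]) * (b[1] - a[1]) - (c[1] - a[1]) * (b[0] - a[0])
    return abs(cross) / distance(a, c)

def geometric_features(a, b, c):
    """Distance A-C, distance from B to AC, and X (half of the AC length)."""
    ac = distance(a, c)
    return {"AC": ac, "B_to_AC": point_to_line_distance(b, a, c), "X": ac / 2.0}
```

For example, with A = (0, 0), B = (50, -12) and C = (100, 0), the measures are AC = 100, a distance of 12 from B to AC, and X = 50.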

Moreover, the selected geometric measures proved to be efficient discrimination criteria on the 50 images used in the tests. A set of 10 different people was selected, and a test with 5 images per person showed great stability, with less than 5 pixels of variation. Note that finger width was not considered because of the wide variation found during the tests in images with rings, finger swelling or other changes in finger size.


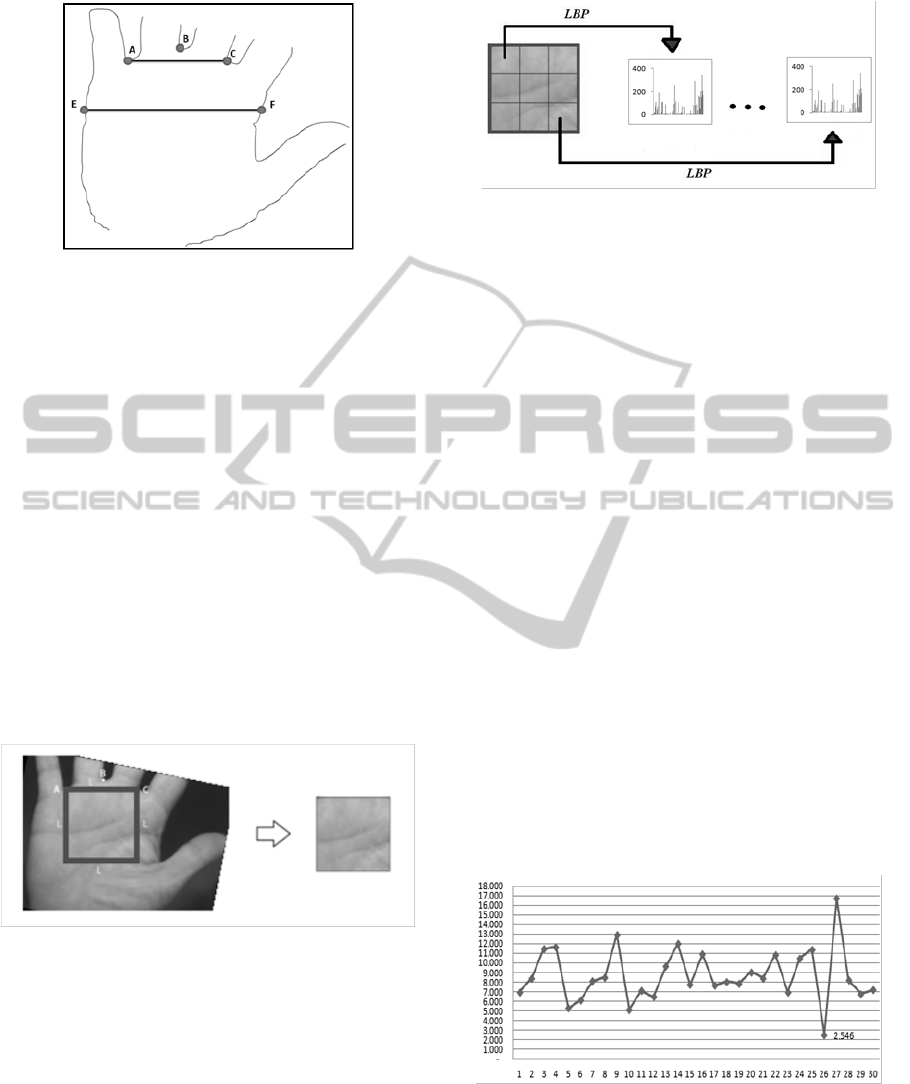

Figure 12: Geometric data.

3.6 ROI Acquisition and Texture Extraction Techniques

This section proposes a region of interest (ROI) from which hand texture characteristics are extracted as histograms produced by Local Binary Pattern (LBP) processing. LBP is an operator, proposed by (Ojala et al., 1996) (Ojala et al., 2002), that describes two-dimensional textures through their spatial patterns and greyscale contrast. The operator assigns a binary code to each pixel of the image by thresholding the neighbors of that pixel against its value. The resulting binary sequences are then used to build a histogram that can be used to classify or analyze the texture. To define the ROI for the LBP operator, a square with side L is drawn, where L is equal to the length of segment AC, as shown in Figure 13.

Figure 13: Extracting the ROI.

After the ROI is found, the hand texture data is extracted by applying the LBP descriptor. To apply the LBP, the ROI is divided into a 3x3 grid, as illustrated in Figure 14. The LBP produces one histogram for each cell of this grid, and the histograms are concatenated. The data is then stored in a vector with 2304 positions (256 for each of the 9 cells). A relevant characteristic of this procedure is that no feature selection is performed during LBP execution.

Figure 14: ROI divided for LBP application.
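The construction of the 2304-position vector can be sketched as follows. The sketch assumes the basic LBP variant with 8 neighbors at radius 1, a neighbor contributing a 1 bit when it is greater than or equal to the centre, and border pixels of each cell being skipped. The text does not specify these details, and the function names are hypothetical.

```python
# Neighbour offsets (dy, dx), clockwise from the top-left corner.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(img, y, x):
    """8-bit LBP code of pixel (y, x): one bit per neighbour >= centre."""
    centre = img[y][x]
    code = 0
    for bit, (dy, dx) in enumerate(OFFSETS):
        if img[y + dy][x + dx] >= centre:
            code |= 1 << bit
    return code

def lbp_histogram(cell):
    """256-bin histogram of the LBP codes of the interior pixels of one cell."""
    hist = [0] * 256
    for y in range(1, len(cell) - 1):
        for x in range(1, len(cell[0]) - 1):
            hist[lbp_code(cell, y, x)] += 1
    return hist

def roi_descriptor(roi, grid=3):
    """Concatenate the LBP histograms of the grid x grid cells of the ROI."""
    h, w = len(roi), len(roi[0])
    features = []
    for gy in range(grid):
        for gx in range(grid):
            y0, y1 = gy * h // grid, (gy + 1) * h // grid
            x0, x1 = gx * w // grid, (gx + 1) * w // grid
            cell = [row[x0:x1] for row in roi[y0:y1]]
            features.extend(lbp_histogram(cell))
    return features
```

With the default 3x3 grid the descriptor always has 9 x 256 = 2304 positions, regardless of the ROI size.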

3.7 Texture Comparing Techniques

This section explains how the texture histograms stored in the concatenated histogram vector described above are compared. First, images are selected from an image database for online recognition. Then, the LBP histograms of the selected images are compared using the Chi-square distribution method. Point-to-point comparison of the histogram vectors with this method needs a threshold. To define it, a considerable quantity of images, both from the same individual and from different individuals, is essential. This ensures that the FAR (false-positive recognition) is almost eliminated.

4 RESULT ANALYSIS

This experiment used 30 images from different individuals to test the recognition operation of the proposed methods, and a previously registered image was used to test the comparison. First, the images are compared using the Chi-square distribution. As Figure 15 shows, position 26 indicates that the system correctly identified the person, with a comparison value of 2546 in the LBP texture comparison.

Figure 15: Results from Chi-square identification method.

For the Chi-square comparison, the threshold was previously defined as 2900. In Figure 15, the value of 2546 at position 26 is below this threshold, and no other individual had a comparison value below 2900, indicating correct operation of the system.

The FAR and FRR measured in the tests are zero, since no false acceptance or false rejection was found.

5 CONCLUSIONS

In conclusion, the results presented in this paper show that it is possible to implement a biometric recognition system using human palm images acquired by simple low-cost devices. Although the analysis of the presented technique would certainly be improved by a larger image dataset, the initial analysis shows promising results with a reduced number of images. Thus, increasing the size of the test image collection is the next step in order to continue assessing the system's performance and accuracy, as well as to compare it with the methods adopted by similar systems.

REFERENCES

Bakina, I. (2011). Palm shape comparison for person recognition. In VISAPP’11 Proceedings of the International Conference on Computer Vision Theory and Applications 2011, pages 5–11.

Facon, J. (1996). Morfologia matemática: teoria e exemplos. Editor Jacques Facon.

Gonzalez, R. C. and Woods, R. E. (2007). Digital Image Processing. Prentice Hall.

Jemma, S. B. and Hammami, M. (2011). Palmprint recognition based on regions selection. In VISAPP’11 Proceedings of the International Conference on Computer Vision Theory and Applications 2011, pages 320–325.

Khan, M. and Khan, N. (2009). A new method to extract dorsal hand vein pattern using quadratic inference function. International Journal of Computer Science and Information Security, 6(3):26–30.

Kumar, A. and Shen, H. (2003). Recognition of palmprints using eigenpalm. In Proceedings of the International Conference on Computer Vision Pattern Recognition and Image Processing 2003.

Li, F., Leung, M., and Chiang, C. (2009). Making palm print matching mobile. International Journal of Computer Science and Information Security, 6(2):1–9.

Ojala, T., Pietikäinen, M., and Harwood, D. (1996). A comparative study of texture measures with classification based on feature distribution. Pattern Recognition (PR), 29(1):51–59.

Ojala, T., Pietikäinen, M., and Mäenpää, T. (2002). Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 24(7):971–987.

OPENCV (2011). Open Computer Vision Library. http://sourceforge.net/projects/opencvlibrary/.

Otsu, N. (1979). A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man and Cybernetics, 9(1):62–66.

P.K., M. and Swamy, M. S. (2010). An efficient process of human recognition fusing palmprint and speech features. International Journal of Computer Science and Information Security, 6(11):1–6.

Ribarc, S. (2005). A biometric identification system based on eigenpalm and eigenfinger features. IEEE Transactions on Pattern Analysis and Machine Intelligence, 27(11):1698–1709.

Wu, X., Zhang, D., Wang, K., and Huang, B. (2004). Palmprint classification using principal lines. Pattern Recognition, pages 1987–1998.
