A Sharp Fitness Function for the Problem of Finding Roots of

Polynomial Equations Systems

Cruz E. Borges

1

, Jose L. Monta

˜

na

2

and Luis M. Pardo

2

1

DeustoTech Labs, Universidad de Deusto, Deusto, Spain

2

Department of Mathematics, Statistics and Computing, Universidad de Cantabria, Santander, Spain

Keywords:

Non Linear Equations, Polynomial Equations, Evolutionary Algorithms.

Abstract:

We experiment with several evolutionary algorithms for solving systems of polynomial equations with real

coefficients. As a main difference with previous work, our algorithms can certify the correctness of the so-

lutions they provide. This achievement is made possible by incorporating results from the field of numerical

analysis to their fitness functions. We have performed an experimental comparison between the various pro-

posed algorithms. The results of this comparison show that evolutionary and other local search algorithms can

deal with the problem of solving systems of polynomial equations even for systems having many variables

and high degree. Our main contribution is a nontrivial fitness function adjusted to the problem to be solved.

This function is not based on any heuristics but on the fundamentals of numerical computation.

1 INTRODUCTION

Solving systems of non linear equations constitutes an

important challenge both in Mathematics and Com-

puter Science. Many problems in a variety of fields

such as engineering, mechanics, medicine, chemistry,

and robotics can be reduced to the problem of solving

a system of non linear equations. Several methods and

strategies have been proposed to address this problem,

but so far one of the simplest cases, solving systems of

polynomial equations with real coefficients, is still an

open problem. Namely, the following remains open:

Problem 1 (Root finding problem for Systems of

Polynomial Equations). Let f

1

= 0,..., f

n

= 0 be a

system of n polynomial functions of degree bounded

by d:

f

1

:=

∑

i

1

,...,i

n

a

i

1

,...,i

n

1

x

i

1

1

···x

i

n

n

.

.

.

f

n

:=

∑

i

1

,...,i

n

a

i

1

,...,i

n

n

x

i

1

1

···x

i

n

n

,

where

∑

n

j=1

i

j

≤ d and a

a

1

,...,i

n

j

∈ R for all j and

i

1

,...i

n

.

The Root finding problem for Systems of Poly-

nomial Equations is to find a point ζ ∈ R

n

such that

f

i

(ζ) = 0 for all i, 1 ≤i ≤ n.

The following is an example of this problem with

three equations and three variables:

Example 2.

f

1

= 5.38 ·10

8

x

6

2

+ 5.03 ·10

8

x

5

2

+ 8.95 ·10

7

x

4

2

−

− 6.25 ·10

13

x

2

1

x

3

+ 5.78 ·10

6

x

3

2

+ 1.07 ·10

5

x

2

2

+

+ 6.17 ·10

2

x

2

+ 1

f

2

= −5.03 ·10

8

x

5

2

−1.79 ·10

8

x

4

2

+ 6.25 ·10

13

x

2

1

x

2

+

+ 1.88 ·10

14

x

2

1

x

3

−1.73 ·10

7

x

3

2

+ 2.03 ·10

7

x

1

x

2

−

− 4.29 ·10

5

x

2

2

−3.09 ·10

3

x

2

−6

f

3

= −5.55 ·10

15

x

2

1

+ 1.11 ·10

16

x

1

x

3

+ 1.8 ·10

9

x

2

+ 1.

The case in which the system has the same number

of equations as variables is one of the most important

and widely studied, both theoretically and in practice

(see, for example, (Dieudonn

´

e, 1985)). In fact, solv-

ing the case in which there are more equations than

unknowns is rewarded with 1 million US dollars by

the Clay Mathematics Institute (P vs NP Millennium

Prize).

2 STATE OF THE ART

Traditionally there are, in addition to heuristics, two

ways of addressing the root finding problem for poly-

nomial systems of equations, the symbolic form (with

294

Borges C., Montaña J. and Pardo L..

A Sharp Fitness Function for the Problem of Finding Roots of Polynomial Equations Systems.

DOI: 10.5220/0005140002940301

In Proceedings of the International Conference on Evolutionary Computation Theory and Applications (ECTA-2014), pages 294-301

ISBN: 978-989-758-052-9

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

exact calculations) and the numerical approximation

(with approximate computations).

2.1 Symbolic and Numerical Methods

The standard way to solve the problem symboli-

cally is via Gr

¨

obner bases (see (Cox et al., 1997;

Becker and Weispfenning, 1993; Giusti et al., 2003)

for an extensive review of this technique). Indeed,

the algorithm described in the above mentioned pa-

pers, despite its doubly exponential complexity, is the

method commonly implemented in symbolic solvers

like Maple

TM

, Mathematica

TM

or Matlab

TM

. In

(Castro et al., 2003), a simply exponential lower

bound for this problem is given (see also (Castro et al.,

2001)). They also give a simple exponential algorithm

for the problem. An implementation can be found

in the symbolic solver Magma

TM

. See (Durvye and

Lecerf, 2008) for the details.

On the other hand, in the framework of numeri-

cal computation, the problem of root finding is solved

using the well known Newton’s method that can be

applied not only to polynomial systems of equations

but also to non linear systems with good derivability

properties. In (Blum et al., 1998) the authors initi-

ated a series of papers with the aim of formalizing

this technique and analyzing its inherent computa-

tional complexity when applied to polynomial sys-

tems. This analysis resulted in the so-called homo-

topy continuation methods. Recently, in another se-

ries of papers (see (Beltr

´

an and Pardo, 2011) for a

survey) the authors provided a probabilistic polyno-

mial time algorithm for the case of systems having

complex coefficients (i.e. looking for complex roots

of systems of polynomials with complex coefficients).

Unfortunately the same techniques presented several

difficulties when applied to the case of systems hav-

ing real coefficients (see (Borges and Pardo, 2008)).

Other alternatives to Newton’s method exist in the lit-

erature: Reduction Methods (see (Grapsa and Vra-

hatis, 2003)) and Interval Methods (see (Hentenryck

et al., 1997) are well studied examples.

2.2 Evolutionary Methods

As it is well known, evolutionary algorithms provide

a simple optimization method that mimics some pro-

cess of biological evolution and, over some particular

problems, can perform better than random or exhaus-

tive search. There are several ways to translate the

problem of root finding into an optimization problem

suitable for applying evolutionary techniques. As an

example, given the polynomial equation f (x) = 0, the

usual way of translating the corresponding root find-

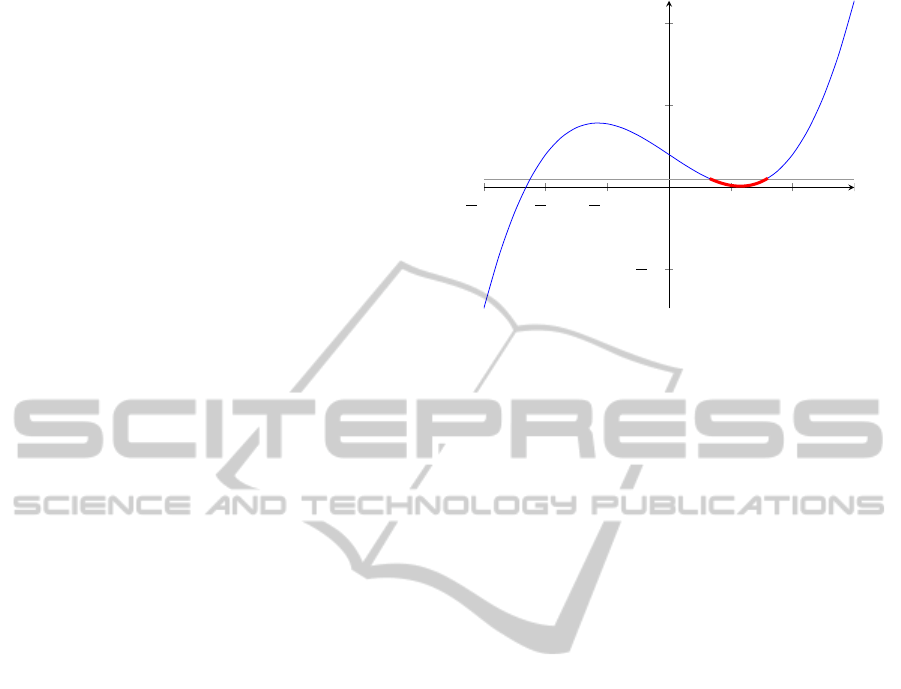

1.5 1 0.5 0.5 1 1.5

1

1

2

Figure 1: Example of a polynomial equation with probably

bad properties.

ing problem into a minimization problem is just to

minimize g(x) = ||f (x)||, where x can take values in

R

n

. Here ||·||denotes the Euclidean norm. This strat-

egy can be easily extended to a system of polynomial

equations. Given f

1

(x) = 0, . . . , f

n

(x) = 0, the corre-

sponding root finding problem is to minimize g(x) =

||f

1

(x)||+ . . . , ||f

n

(x)||. Another natural way of mak-

ing the translation of root finding into an optimiza-

tion problem is to minimize g(x) =

∑

1≤i≤n

[ f

i

(x)]

2

.

This method is described for instance in (Mastorakis,

2005), see also (Kuri-Morales, 2003), and is used in

many situations to solve many particular problems

from a variety of fields. See the works by (Benedetti

et al., 2006), (Tan et al., 2006), (K. Deb and Majum-

dar, 2004) as illustration of this technique. A remark-

able result using a slightly different idea is found in

(Grosan and Ajith Abraham, 2007) where a novel ap-

proach that transforms a system of nonlinear equa-

tions into a multiobjective optimization problem is

presented. The obtained problem is then solved using

the standard Pareto dominance relationship between

solutions and an iterative strategy that evolves some

random solutions in the search for optimal solutions.

The technique uses principles from the evolutionary

computation field and, apparently, is able to approx-

imate the solutions even for large-scale systems of

equations. The objection is that under these reduc-

tions and transformations the method can easily lead

to false roots although it might work in some particu-

lar cases. See, for example, Figure 1. In this case, we

can find a local minimum near 0.5 that is fundamen-

tally far from a real root. We remark that any opti-

mization method based on minimizing some suitable

combination of the original equations cannot certify,

in general, correctness of the provided solutions and

has the same limitations as those pointed out by the

example given in Figure 1 above.

ASharpFitnessFunctionfortheProblemofFindingRootsofPolynomialEquationsSystems

295

3 BACKGROUND AND

SUMMARY OF RESULTS

Because of the limitation for certifying correctness

of the solutions present in the existing evolutionary

methods, we propose –regardless of the evolution-

ary algorithm to be subsequently applied– a thorough

study of the characteristics of the function to be opti-

mized when trying to solve equations. The problem

in its full generality is very difficult to address how-

ever; in the case of systems of polynomial equations,

the theory of approximate zeroes may shed some light

on it.

We recall some elementary notions of numerical anal-

ysis. Given a system of n polynomial equations with

real coefficients and n variables as below,

f

1

(x

1

,...,x

n

) = 0

f

2

(x

1

,...,x

n

) = 0

...

f

n

(x

1

,...,x

n

) = 0

let f : R

n

−→ R

n

, f = ( f

1

,..., f

n

) be the polynomial

function corresponding to such system. In addition let

ζ be a root of f (i.e. f

i

(ζ) = 0, f or 1 ≤ i ≤ n). The

Newton operator is defined as follows.

N

f

(x) := x −D f (x)

−1

f (x),

where D f is the differential of the function f (also

called the Jacobian matrix of f ).

D f =

∂ f

1

∂x

1

...

∂ f

1

∂x

n

...

∂ f

n

∂x

1

...

∂ f

n

∂x

n

As usual D f

−1

denotes the inverse of the matrix D f .

Let x

k

be the point obtained by applying k iterations

of the Newton operator to some initial point x

0

∈ R

n

. We say that x

0

is an approximate zero of ζ if the

sequence x

k

converges quadratically to ζ, i. e. the

following holds for all values of k:

||x

k+1

−ζ|| ≤

1

2

2

k

−1

||x

0

−ζ||.

In the next Section, Theorems 8 and 9, we show that

there exists a function α

∗

( f ,x) having the following

properties:

• α

∗

( f ,x) depends only on the system of equations

f and requires essentially to compute the Jacobian

matrix of f .

• There is a constant α

0

:=

13−3

√

17

4

such that if

α

∗

( f ,x

0

) < α

0

then x

0

is an approximate zero of

f .

• There is a convex bounded region B

f

⊂ R

n

de-

pending only of the function f such that approx-

imate zeroes of f are guaranteed to belong to

B

f

⊂ R

n

.

As a main contribution, our approach avoids the prob-

lem of computing false solutions using α

∗

( f ,x) as fit-

ness function. The precise form in which α

∗

is de-

fined is described in next Section. The way in which

any evolutionary or local search algorithm can com-

pute one or more solutions of a polynomial equations

system is by driving the search trying to minimize the

function α

∗

( f ,x) and using as stopping criterion con-

dition α

∗

( f ,x) < α

0

. The individuals of the evolution-

ary or local search algorithm must belong to region

B

f

.

4 DEFINING THE FUNCTION α

∗

Next theorem in (Blum et al., 1998) characterizes the

points x

0

which are approximate zeroes of a polyno-

mial system of equations:

Theorem 3 (α and γ-Theorem). Let f : R

n

−→R

n

be

a system of polynomial equations, x ∈R

n

and

β( f ,x) := ||D f (x)

−1

f (x)||,

γ( f ,x) := sup

k>1

D f (x)

−1

D

k

f (x)

k!

1

k−1

,

α( f ,x) := β( f , x)γ( f ,x),

D

k

f (x) being the k-th differential map of f in x, ||·||

the Euclidean norm and sup the supreme value. Then,

the following holds:

• There is a constant α

0

:=

13−3

√

17

4

such that if

α( f ,x

0

) < α

0

then x

0

is an approximate zero of

f .

• Let ζ be a root of f , then there is a constant

γ

0

:=

3−

√

7

2

such that for all x

0

∈ R

n

verifying

||x

0

−ζ|| <

γ

0

γ( f ,ζ)

is an approximate zero of f .

The quantities previously defined do not exhibit good

mathematical properties. In addition they are difficult

to compute. In order to overcome these difficulties

Smale introduced the notion of non-linear condition

number µ

norm

(in the same spirit of Turing’s linear

condition numbers of matrices) and did it in the fol-

lowing terms:

Definition 4 (Non linear condition number). Let f be

a system of polynomial equations and x ∈ R

n

. The

non-linear condition number of f at x, µ

norm

( f ,x), is

defined as follows:

µ

norm

( f ,x) := ||(D f (x))

−1

∆||,

ECTA2014-InternationalConferenceonEvolutionaryComputationTheoryandApplications

296

where ∆ is a matrix that depends only on the degrees

of the system f and ||·|| is the usual matrix norm.

The non-linear condition number of f is:

µ( f ) := max

ζ∈V ( f )

µ

norm

( f ,ζ),

where V ( f ) denote the set of all roots of f .

The non linear condition number µ

norm

( f ,x) has bet-

ter mathematical properties than α and γ, mainly or-

thogonal invariance, see (Blum et al., 1998) and ref-

erences therein for more details, and in addition it is

easy to compute. The following theorem gives an es-

timation of its expected value:

Theorem 5. The expected value of the non linear

condition number of a system of polynomial equations

is bounded by:

E[µ( f )] ≤2

√

2C(n + 1)

3/2

ND

π

1/4

,

where N :=

∑

n

i=1

n+d

i

n

, d

i

denotes the degree of the

variable x

i

in the system, D :=

∏

n

i=1

d

i

is the B

´

ezout

number and C is a universal constant such that 5 <

C < 7.

The connection between the non linear condition

number and the previous quantities is summarized in

the following result:

Theorem 6 (µ-theorem). Using the previous nota-

tion:

γ( f ,x) <

D

3/2

2

µ

nomr

( f ,x),

where D is the maximum degree of the variables of the

system f .

Using this bound it is easy to prove the next corol-

lary.

Corollary 7 (Root distances). Using the previous no-

tation, the expected value of the distance d between

any two roots of the system of polynomial equations f

satisfies the following inequality

E(d) >

2u

0

π

1/4

CD

3/2

(ND)

1/4

(n + 1)

3/2

,

where u

0

≈0.05992 and 5 < C < 7 are universal con-

stants.

Proofs of the previously stated results can be found

in (Blum et al., 1998), (Beltr

´

an and Pardo, 2009) and

(Borges and Pardo, 2008).

As we have seen before it is easy to approximate a

true zero provided that we have found an approxi-

mate zero. Hence, using Theorem 3 it is enough to

find a point x in R

n

such that α( f , x) < α

0

. We can

transform this criterion into an optimization problem

in the evolutionary (or local search) sense: the func-

tion to be minimized would be α( f ,x) and the stop-

ping criterion would be “to find a point x such that the

previous bound holds”.

In this situation two problems arise:

1. To find a bounded set in which one can guarantee

that there is an approximate zero and

2. to provide an efficient algorithm to calculate, or at

least to give a bound on, α( f , x).

Solving the first item provides a finite region in

which one can generate the initial population as well

as a limit for all the other populations. Solving the

second item will provide a suitable fitness function.

For solving the first problem we use the following re-

sult.

Theorem 8. With probability 1 −ε the norm of any

root is bounded above by

Γ

1

4

2

D

ε

2

,

where D denotes the B

´

ezout number and Γ(·) is the

hyper-geometric Γ function (see (Gradshte

˘

ın et al.,

2007) for a comprehensive list of its properties).

Due of lack of space and to preserve readability

we omit the proof of this theorem. The main ideas

can be found in (Borges and Pardo, 2008). For the

second problem we show the following result. We

give a short proof in order to illustrate the technique.

Theorem 9 (α

∗

-theorem). Let f be a system of poly-

nomial equations, 0 < ε ≤

1

2

and x ∈ R

n

with norm

bounded by

Γ(1/4)

2

D

ε

2

. We define α

∗

( f ,x) as:

α

∗

( f ,x) := β( f , x)µ

norm

( f ,x) =

=

D

3/2

||x||

2

||D f (x)

−1

f (x)||||D f (x)

−1

∆||,

Then, x

0

is an approximate zero of f with probability

1 −ε if any of the following conditions holds:

α

∗

( f ,x

0

) ≤ α

0

or α

∗

c

( f ,x

0

) := α

∗

( f ,x

k

) ≤ α

0

,

x

k

denotes the k-th iteration of the Newton operator.

Here k has order

k ∈O

loglog

(Dn)

3/2

N

1/4

D

5/4

ε

5/2

!!

,

where D denotes the maximum degree of the system f ,

d

i

denotes the degree of the variable x

i

in the system,

N :=

∑

n

i=1

n+d

i

n

, D is the B

´

ezout number and α

0

is

the constant defined in Theorem 3.

ASharpFitnessFunctionfortheProblemofFindingRootsofPolynomialEquationsSystems

297

Proof. The first part is trivial. Let us suppose that

the first condition holds, i.e. α

∗

( f ,x

0

) ≤ α

0

. Then,

using the bounds given in Theorem 6, we conclude

that α( f ,x

0

) ≤ α

∗

( f ,x

0

) < α

0

; as a consequence we

realize that x

0

is an approximate zero.

Let us suppose now that α

∗

( f ,x

0

) > α

0

but

α( f ,x

0

) ≤ α

0

. In this case, x

0

is a true approxi-

mate zero hence the iteration of the Newton’s operator

N

k

f

(x

0

) converges quadratically to a root ζ. Hence we

have

α

∗

( f ,x

k

) ≤

D

3/2

2

1

2

2

k

−1

||x

0

−ζ||µ

norm

( f ,x

0

)

Let 0 < ε ≤

1

2

, using Corollary 5 and Corollary 8

we have:

• µ

norm

( f ,x

0

) is bounded, with probability 1−ε, by:

µ

norm

( f ,x

0

) ≤C(n + 1)

3/2

ND

4π

1/4

,

where C is the constant defined in Corollary 5.

• ||x

0

−ζ|| is bounded, with probability 1 −ε, by:

||x

0

−ζ|| ≤

Γ

1

4

2

D

ε

2

,

ζ is the root for which the iteration converges and

Γ is the usual Γ function.

After k ≥ loglog

L(D(n + 1))

3/2

N

1/4

D

5/4

ε

−5/2

we

have that α

∗

( f ,x

k

) < α

0

and as a consequence, x

k

is

an approximate zero. Note that here L is the universal

constant

L :=

α

0

Γ

1

4

2

C

(4π)

1/4

.

Observe that both functions α

∗

and α

∗

c

can be easily

computed. Moreover, the previous result gives an al-

ternative version of Theorem 3. Hence we can use α

∗

c

as fitness function.

5 EVOLUTIONARY

ALGORITHMS

In this section we present several evolutionary and lo-

cal search algorithms that use the results to approxi-

mate zeros of a system of polynomial equations with

real coeffuicients. Listing 1 presents in a homoge-

nized way some common evolutionary an local search

techniques. We choose the operators of the evolution-

ary/local search algorithm following four of the tradi-

tional evolutionary/local search methods: Evolution

Listing 1: Pseudocode of an Evolutionary Algorithm. Note

that t represents the current generation, T represents the

maximum number of generations, op

1

and op

2

represent

the probability of using Operator

1

and Operator

2

respec-

tively and finally m

1

, m

2

and m

r

represent the number of in-

dividuals needed to perform the Operator

1

, Operator

2

and

the Reproduction Operator respectively.

1 g e n e r a t e i n i t i a l p o p u l a t i o n p

0

compute f i t n e s s o f p

0

3 elite := b e s t i n d i v i d u a l o f p

0

t : = 1

5 w h i l e ( t < T ) or ( fo u n d s o l u t i o n ? )

p

aux

: = empty p o p u l a t i o n

7 f o r i i n 1 t o l e n g t h p

t−1

aux : = empty s e t of i n d i v i d u a l s

9 r := random number i n [ 0 , 1 ]

s w i t c h r

11 c a s e 0 ≤ r ≤ op

1

s e l e c t i n d i v i d u a l s a

1

,. .. , a

m

1

13 aux : = O p e r a t o r

1

o v e r

a

1

,. .. , a

m

1

15 end c a s e

c a s e op

1

< r ≤ op

2

17 s e l e c t i n d i v i d u a l s a

1

,. .. , a

m

2

aux : = O p e r a t o r

2

o v e r

19 a

1

,. .. , a

m

2

end c a s e

21 c a s e op

2

< r ≤ 1

aux : = s e l e c t a

1

,. .. , a

m

r

23 end c a s e

end s w i t c h

25 compute f i t n e s s o f aux

r e p r o d u c t i o n : =

27 s e l e c t i o n ( aux , i n d i v i d u a l s )

p

aux

: = p

aux

∪ r e p r o d u c t i o n

29 end f o r

p

aux

: = p

aux

∪ elite

31 elite : = b e s t i n d i v i d u a l o f p

aux

p

t

: = p

t−1

33 t : = t +1

end w h i l e

Strategy (Michalewicz and Schoenauer, 1996), Sim-

ulated Annealing (Laarhoven and Aarts, 1987), Dif-

ferential Evolution (Price et al., 2005) and Particle

Swarm Optimization (Poli, 2008). See (Koza, 1992)

and the following references for a more complete de-

scriptions of these algorithms. Next follows a more

detailed description.

ESα Evolution Strategy: Operator

1

and Operator

2

are respectively the arithmetic crossover and

non uniform mutation operator (see (Michalewicz

et al., 1994)). Given two parents p

1

, p

2

∈ R

n

the

arithmetic crossover produces the children c

1

and

c

2

according to the following expressions:

c

1

= λp

1

+ (1 −λ)p

2

c

2

= λp

2

+ (1 −λ)p

1

,

where λ is a random number in [0,1]. For the

non uniform mutation operator, given p ∈ R

n

and

ECTA2014-InternationalConferenceonEvolutionaryComputationTheoryandApplications

298

[a,b]

n

the bounds given by Lemma 8 below, this

operator produces a child c = (c

1

,...,c

n

) ran-

domly chosen among the following two cases:

c

k

= p

k

+ ∆(t, b − p

k

) c

k

= p

k

−∆(t, p

k

−a),

for all k = 1,...,n, where t is the actual generation

and ∆ is defined as:

∆(t,y) := yr

1 −

t

T

,

r is a random number in [0, 1] and T is the maxi-

mum number of generations.

The selection of the individuals is a 2-tournament

selection and the reproduction step is based in a

raking scheme where the best two (or one in the

case of the mutation operator) different individ-

uals between parents and children survive in the

next generation. This procedure preserves diver-

sity of the population. Finally the elitist operator

takes the best individual of the previous genera-

tion and places it in the current one. See (Koza,

1992) for a more detailed description of all these

operators.

SA Simulated Annealing: The principal difference

between this algorithm and the previous one lies

in the reproduction operator: when children are

better than the parents, the best one passes to the

next generation but when they are worse they can

still pass with hight probability (0.9). The rest of

the operators behave as in ESα.

DE Differential Evolution: The difference between

this algorithm and the Evolution Strategy lies

in its first operator. Instead of using the arith-

metic crossover it uses the so called differential

crossover: given three parents p

1

, p

2

and p

3

∈R

n

,

the differential crossover produces the following

child:

c = p

1

+ λ(p

2

−p

3

),

where λ is a random number in [0,1]. The remain-

ing operators behave in the same way a ESα.

PSO Particle Swarm Optimization: In this algorithm

the crossover an the elitist operators are as fol-

lows. Instead of preserving the best element of the

population (that we denote by p

e

) we preserve, for

every position i in the population, the best element

that has held this position. We denote this element

by p

e

i

. Given a parent p

i

∈R the child c

i

produced

by the swarm crossover is:

c

i

= λ

1

p

i

+ λ

2

(p

e

i

−p

i

) + λ

3

(p

e

−p

i

),

where λ

1

, λ

2

and λ

3

are random numbers in [0,1].

6 EXPERIMENTAL RESULTS

In order to experiment with the algorithm we have

carried an extensive test in a Core 2 Duo SU4100 with

4 GB of RAM using a Gentoo operating system up to

date.

• We have tested the algorithms with systems of

sparse and dense polynomials. We represent

sparse polynomials by means of Straight Line

Programs (see (Giusti et al., 1998) for an exhaus-

tive reference of this data structure and (Alonso

et al., 2009) for an introductory reference to their

use as an artificial intelligence tool). Dense en-

coding is as usual, i.e. the vector of coefficients.

• We have randomly generated 100 systems in ev-

ery case. For the dense encoding we have gener-

ated every coefficient as a normal random variable

with mean 0 and variance 1. In the sparse case we

have used the method described in (Alonso et al.,

2009).

• We have experimented with systems having the

same number n ∈ {3, 5, 7, 10} of variables as

equations. The degree was at least 2 in the sparse

case and at least 3 in the dense case. Note that in

the last case, the number of roots of the polynomi-

als could be {27, 243, 2187, 59049} and the num-

ber of coefficients would be {60, 280, 840, 2860}.

• The executions finish after 100 generations or

when an approximate zero was found.

• The probability of operator

1

has been set to 0.9

and the probability of operator

2

to 0.05 so that the

reproduction probability is 0.05. In the case of the

Simulated Annealing algorithm the probabilities

have been reversed.

• The population has been set to 100 individuals.

• All of our methods are elitist, so we add the best

individual of the previous generation to the next

one.

• We have also implemented an evolution strategy

using the Euclidean norm of the evaluated poly-

nomial as the fitness function. In the following

tables we have denoted this algorithm by ES||·||.

We also wanted to compare our algorithms with

the symbolic algorithm used in Matlab

TM

but in the

case n = 3, using dense encoding of polynomials it

takes more than 10 hours to solve only one of the sys-

tems of equations we have generated, even using a

very powerful machine.

The experimental results for both the sparse and

the dense encoding are quite similar although they

present some remarkable differences. In Table 1 we

present the results for the sparse encoding case. As

ASharpFitnessFunctionfortheProblemofFindingRootsofPolynomialEquationsSystems

299

Table 1: Results using sparse encoding.

(a) Successful runs (%).

n PSO DE SA ESα ES||·||

3 83% 83% 83% 79% 68%

5 95% 81% 78% 56% 31%

7 92% 68% 68% 21% 9%

10 90% 33% 41% 16% 3%

(b) Execution time (s).

n PSO DE SA ESα ES||·||

3 2.18 2.23 2.21 2.3 0.2

5 2.57 7.3 6.91 6.44 0.48

7 8.87 25.36 21.83 23.74 0.79

10 29.14 78.45 72.09 83.5 1.3

Table 2: Results using dense encoding.

(a) Successful runs (%).

n PSO DE SA ESα ES||·||

3 100% 100% 100% 100% 75%

5 100% 91% 88% 28% 26%

7 100% 0% 1% 0% 2%

10 100% 0% 0% 0% 0%

(b) Execution time (s).

n PSO DE SA ESα ES||·||

3 0.04 0.02 0.02 0.02 0.02

5 0.25 1.39 0.83 0.5 0.06

7 2.9 29.21 29.04 29.91 0.21

10 30.35 174.28 174.68 179.86 0.99

it can be seen all methods perform quite well in the

first two cases (n = 3 and n = 5) but their success rate

starts to decrease quickly with exception of the Parti-

cle Swarm Optimization method (PSO). Note that the

Evolution Strategy (ES||·||) is the fastest one but also

it presents the worst success rate and very often re-

ports false solutions. This good execution time is due

to the simplicity of its fitness function.

In Table 2 we display the results for the dense en-

coding case. In this case the particle swarm optimiza-

tion algorithm has exhibited a remarkable success rate

while for the other methods the performance is quite

week.

The differences in the execution time between

each group of problems (sparse and dense encodings)

are due to the different programming tools used in

each case. While for the dense encoding very fast and

specific classes were used, in the sparse case we have

used a general purpose and slow library. In all cases,

algorithms performed quite fast when compared with

the symbolic solution provided by Matlab

TM

. We also

want to remark that the traditional Evolution Strategy

had a better behavior than expected, however some

false solutions were detected.

7 CONCLUSIONS AND FUTURE

WORK

Our experimentation supports the idea that most com-

mon evolutionary algorithms and local search tech-

niques can deal quite well with the problem of solv-

ing randomly generated polynomial equation systems

if the α

∗

fitness functions is used. The two main fea-

tures of the α

∗

fitness function are:

• it seems to work well in practice with any reason-

able evolutionary or local search technique,

• it can certify the correctness of the provided solu-

tions just by checking an inequality which is eas-

ier and more robust, when performing numerical

computations, than checking zero equalities.

To our knowledge this last feature makes α

∗

differ-

ent from any other fitness function previously used to

solve polynomial equation systems. Experimentation

with systems coming from specific fields has been de-

liberately excluded since our goal was to show that

the α

∗

function ”generically” works. Application to

real world problems is part of a future development

that requires an extensive comparison with a variety

of heuristics designed ad hoc for the problems they

try to solve and is out of the scope of this paper.

A main future development will be to extend the pro-

posed method to analytic functions. There are several

extensions of the α-theorem (see for example (Dedieu

and Kim, 2002) or (Dedieu et al., 2003)) but the

equivalent to our α

∗

-theorem and bounds ensuring the

existence of at least one root of the system in certain

bounded regions is not clear in this more general con-

text.

ACKNOWLEDGEMENTS

This work is partially supported by spanish grant

TIN2011-27479-C04-04.

REFERENCES

Alonso, C., Monta

˜

na, J., Puente, J., and Borges, C. (2009).

A New Linear Genetic Programming Aproach Based

on Straight Line Programs: Some Theoretical and Ex-

eperimental Aspects. International Journal on Artifi-

cial Intelligence Tools, 18(5):757–781.

Becker, T. and Weispfenning, V. (1993). Gr

¨

obner Bases,

Graduate Texts in Mathematics. Springer-Verlag,

New York.

Beltr

´

an, C. and Pardo, L. (2009). On Smale’s 17 Problem:

Average Polynomial Solver for Affine and Proyective

Solutions. J.Amer. Math. Soc., 22:363–385.

ECTA2014-InternationalConferenceonEvolutionaryComputationTheoryandApplications

300

Beltr

´

an, C. and Pardo, L. (2011). Fast linear homotopy to

find approximate zeros of polynomial systems. Found.

Comput. Math., 11(1):95–129.

Benedetti, A., Farina, M., and Gobbi, M. (2006). Evolu-

tionary multiobjective industrial design: the case of a

racing car tire-suspension system. IEEE Trans. Evo-

lutionary Computation, 10(3):230–244.

Blum, L., Cucker, F., Shub, M., and Smale, S. (1998). Com-

plexity and Real Computation. Springer-Verlag, New

York.

Borges, C. and Pardo, L. (2008). On the Probability Distri-

bution of Data at Points in Real Complete Intersection

Varieties. Journal of Complexity, 24(4):492–523.

Castro, D., Giusti, M., Heintz, J., Matera, G., and Pardo,

L. M. (2003). The hardness of polynomial equation

solving. Foundations of Computational Mathematics,

3(4):347–420.

Castro, D., Pardo, L., H

¨

agele, K., and Morais, J. (2001).

Kronecker’s and newton’s approaches to solving: A

first comparison. J. Complexity, 17(1):212–303.

Cox, D., Little, J., and O’Shea, D. (1997). Ideals, Varieties,

and Algorithms. Undergraduate Texts in Mathemat-

ics. Springer-Verlag, New York.

Dedieu, J., Priouret, P., and Malajovich, G. (2003). New-

ton’s method on riemannian manifolds: covariant al-

pha theory. IMA Journal of Numerical Analysis,

23(3):395–419.

Dedieu, J.-P. and Kim, M.-H. (2002). Newton’s method

for analytic systems of equations with constant rank

derivatives. Journal of Complexity, 18(1):187 – 209.

Dieudonn

´

e, J. (1985). History of Algebraic Geometry: An

Outline of the History and Development of Algebraic

Geometry. Chapman & Hall.

Durvye, C. and Lecerf, G. (2008). A concise proof of

the Kronecker polynomial system solver from scratch.

Expositiones Mathematicae, 26(2):101–139.

Giusti, M., Heintz, J., Morais, J., Morgenstern, J., and

Pardo, L. (1998). Straight-line programs in geomet-

ric elimination theory. Journal of Pure and Applied

Algebra, 124(1–3):101–146.

Giusti, M., Pardo, L., and Weispfenning, V. (2003). Al-

gorithms of Commutative Algebra and Algebraic Ge-

ometry: Algorithms for Polynomial Ideals and Their

Varieties, Handbook of Computer Algebra. Springer

Verlag.

Gradshte

˘

ın, I., Ryzhik, I., Jeffrey, A., and Zwillinger, D.

(2007). Table of integrals, series and products. Aca-

demic Press. Academic.

Grapsa, T. N. and Vrahatis, M. N. (2003). Dimension reduc-

ing methods for systems of nonlinear equations and

unconstrained optimization: A review. A Review, Re-

cent Adv. Mech. Related Fields, pages 215–225.

Hentenryck, P. V., Mcallester, D., and Kapur, D. (1997).

Solving polynomial systems using a branch and prune

approach. SIAM Journal on Numerical Analysis,

34:797–827.

K. Deb, K. Mitra, R. D. and Majumdar, S. (2004). Towards

a better understanding of the epoxy-polymerization

process using multi-objective evolutionary computa-

tion. Chem. Eng. Sci., 59(20):4261–4277.

Koza, J. (1992). Genetic Programming: On the Program-

ming of Computers by Means of Natural Selection.

The MIT Press.

Kuri-Morales, A. (2003). Solution of simultaneous non-

linear equations using genetic algorithms. In WSEAS

Transactions on SYSTEMS, Issue 1, vol. 2, pages 44–

51.

Laarhoven, P. and Aarts, E., editors (1987). Simulated an-

nealing: theory and applications. Kluwer Academic

Publishers, Norwell, MA, USA.

Mastorakis, N. E. (2005). Solving non linear equations via

genetic algorithms. In Proceedings of the 6th WSEAS

Int. Conf. on EVOLUTIONARY COMPUTING, pages

24–28.

Michalewicz, Z., Logan, T., and Swaminathan, S. (1994).

Evolutionary operators for continuous convex param-

eter spaces. In Proceedings of the 3

rd

Annual Con-

ference on Evolutionary Programming, pages 84–97.

World Scientic.

Michalewicz, Z. and Schoenauer, M. (1996). Evolution-

ary algorithms for constrained parameter optimization

problems. Evolutionary Computation, 4:1–32.

Poli, R. (2008). Analysis of the publications on the appli-

cations of particle swarm optimisation. J. Artif. Evol.

App., 2008:1–10.

Price, K., Storn, R., and Lampinen, J. (2005). Differential

Evolution: A Practical Approach to Global Optimiza-

tion. Springer, 1 edition.

Tan, K. C., Yang, Y. J., and Goh, C. K. (2006). A distributed

cooperative coevolutionary algorithm for multiobjec-

tive optimization. IEEE Trans. Evolutionary Compu-

tation, 10(5):527–549.

ASharpFitnessFunctionfortheProblemofFindingRootsofPolynomialEquationsSystems

301