Using a Bilingual Context in Word-Based Statistical Machine Translation

Christoph Schmidt, David Vilar and Hermann Ney

Chair of Computer Science 6, RWTH Aachen University, Germany

Abstract. In statistical machine translation, phrase-based translation (PBT) models lead to significantly better translation quality than single-word-based (SWB) models. PBT models translate whole phrases, thus considering the context in which a word occurs. In this work, we propose a model which extends this context further, beyond phrase boundaries. The model is compared to a PBT model on the IWSLT 2007 corpus. To profit from the respective advantages of both models, we use a model combination, which results in an improvement in translation quality on the examined corpus.

1 Introduction

The goal of machine translation is to translate a text from one natural language into another using a computer. In statistical machine translation, the process of translating is modelled as a statistical decision process.

The IBM models proposed in the early 1990s were single-word-based models [1]. A characteristic of the single-word-based approach is that lexicon probabilities are modelled for single words. Consequently, the context in which a word is used does not influence its translation probability.

The phrase-based approach tries to overcome this disadvantage by learning the translation of whole phrases instead of single words. Here, “phrase” is not used in the linguistic sense but simply refers to a sequence of words. (A more detailed description of the phrase-based approach can be found in [2].) As the phrase-based approach translates phrases independently, words outside a phrase are not considered for its translation. Moreover, the PBT model makes some independence assumptions which seem arbitrary, e.g. the assumption that all segmentations of the source sentence into phrases are equally likely.

To overcome these deficiencies, the model proposed in this work considers a bilingual context beyond phrase boundaries. This approach is similar to the N-gram model presented in [3]. However, the model presented here remains at the target word level. As the model conditions its translation on both the words of the source and the target sentence, we will refer to it simply as the “conditional model”.

The remaining part of this work is organized as follows: in the next section, we briefly sketch the basics of statistical machine translation and describe the log-linear approach. In Section 3, the conditional model is introduced. Section 4 and Section 5 describe the search process and the feature functions used in the log-linear approach. In Section 6, we propose a model combination of the PBT model and the conditional model. Section 7 discusses the experimental results obtained on the IWSLT 2007 corpus. The last section gives a conclusion and an outlook on possible future research.

Schmidt C., Vilar D. and Ney H. (2008).

Using a Bilingual Context in Word-Based Statistical Machine Translation.

In Proceedings of the 8th International Workshop on Pattern Recognition in Information Systems, pages 144-153

Copyright © SciTePress

2 Statistical Machine Translation

In statistical machine translation, a given source language sentence $f_1^J = f_1 \ldots f_J$ has to be translated into a target language sentence $e_1^I = e_1 \ldots e_I$. According to Bayes’ decision rule, to minimize the sentence error rate we have to choose the sentence $\hat{e}_1^I$ which maximizes the posterior probability¹:

$$\hat{e}_1^I = \operatorname*{argmax}_{e_1^I} \left\{ Pr(e_1^I \mid f_1^J) \right\} . \tag{1}$$

The posterior probability $Pr(e_1^I \mid f_1^J)$ is modelled directly using a log-linear model [4]:

$$p(e_1^I \mid f_1^J) = \frac{\exp\left(\sum_{m=1}^{M} \lambda_m h_m(e_1^I, f_1^J)\right)}{\sum_{\tilde{e}} \exp\left(\sum_{m=1}^{M} \lambda_m h_m(\tilde{e}, f_1^J)\right)} . \tag{2}$$

Inserting Equation (2) into Bayes’ decision rule (1) and simplifying, we obtain:

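$$
\begin{aligned}
\hat{e}_1^I &= \operatorname*{argmax}_{e_1^I} \left\{ Pr(e_1^I \mid f_1^J) \right\} && (3)\\
&= \operatorname*{argmax}_{e_1^I} \left\{ \sum_{m=1}^{M} \lambda_m h_m(e_1^I, f_1^J) \right\} . && (4)
\end{aligned}
$$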

In the log-linear model, different knowledge sources can easily be combined using feature functions $h_m(e_1^I, f_1^J)$. Statistical translation systems typically use bilingual features such as translation probabilities and monolingual features such as the target language model. The conditional model, which models the posterior probability $Pr(e_1^I \mid f_1^J)$, can also be used as one feature function in the log-linear approach.

The model scaling factors $\lambda_m$ are estimated on a development corpus to optimize some performance measure, usually BLEU.
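A minimal Python sketch of Equations (2) and (4), assuming that the sum over all $\tilde{e}$ in the denominator of (2) is approximated by a small candidate list (for example an N-best list). The feature values and scaling factors below are invented for illustration. It shows that the normalized posterior and the unnormalized log-linear score select the same translation, which is why the denominator can be dropped in (4).

```python
import math
from typing import Dict, List, Sequence


def loglinear_score(features: Sequence[float], weights: Sequence[float]) -> float:
    """sum_m lambda_m * h_m(e, f): the quantity maximized in Eq. (4)."""
    if len(features) != len(weights):
        raise ValueError("expected one scaling factor per feature function")
    return sum(lam * h for lam, h in zip(weights, features))


def posterior(candidates: Dict[str, List[float]], weights: Sequence[float]) -> Dict[str, float]:
    """Eq. (2), with the normalization restricted to the given candidate set."""
    scores = {e: loglinear_score(h, weights) for e, h in candidates.items()}
    top = max(scores.values())  # subtract the maximum for numerical stability
    z = sum(math.exp(s - top) for s in scores.values())
    return {e: math.exp(s - top) / z for e, s in scores.items()}


def decide(candidates: Dict[str, List[float]], weights: Sequence[float]) -> str:
    """Eq. (4): the argmax needs no normalization."""
    return max(candidates, key=lambda e: loglinear_score(candidates[e], weights))


# Invented feature values [h_1, h_2] for three candidate translations.
candidates = {
    "i'll have one": [-2.0, -3.0],
    "i have one": [-2.5, -1.0],
    "one": [-4.0, -0.5],
}
weights = [1.0, 0.5]  # hypothetical scaling factors lambda_1, lambda_2
best = decide(candidates, weights)
probs = posterior(candidates, weights)
```

Here the scores are -3.5, -3.0 and -4.25, so both `decide` and the largest entry of `probs` pick "i have one".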

3 The Conditional Model

3.1 Motivation

The main improvement of the phrase-based translation (PBT) model over the single-word-based (SWB) translation model is the extension of the context taken into account when translating a word: while the SWB model translates words individually and uses context information only in the target language model, the PBT model translates whole sequences of words. Nonetheless, the PBT model does not take into account words outside the phrase being translated. In the following, we develop the conditional model, which considers a context beyond phrase boundaries.

¹ Note that in this work, $Pr$ is used to indicate true probabilities, while $p$ denotes probability models.


In Equation (8), the probabilities are decomposed using the chain rule $Pr(x_1^N) = \prod_n Pr(x_n \mid x_1^{n-1})$. Furthermore, the joint probability of target words and alignment is separated.

The conditional model restricts the dependency of a target word to the source and target words which are aligned by the last $m$ alignment points:

$$Pr(e_i \mid e_1^{i-1}, f_1^J, A) = p\left(e_i \mid e_{i(k_i-m)}^{i-1}, f_{j(k_i-m)}^{j(k_i)}, A\right) . \tag{9}$$

This Markov assumption of order $m$ is similar to that of $n$-gram models in language modelling, which restrict the dependency of a word to its $n-1$ predecessors. We call $m$ the model order.
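As a sketch of the history used in Equation (9), the following Python function extracts the target words $e_{i(k_i-m)}^{i-1}$ and the source window $f_{j(k_i-m)}^{j(k_i)}$ from a list of alignment points. It assumes a monotone alignment stored as 1-based $(j, i)$ pairs with `alignment[k - 1]` holding $A_k$, and it clamps $k_i - m$ to 1 at the sentence start; both conventions are assumptions for illustration. The toy alignment is invented so that, for $k_6 = 8$ and $m = 5$, it yields the same context $e_3^5$ and $f_2^6$ as the Fig. 2 example in the text.

```python
from typing import List, Sequence, Tuple

# Hypothetical representation: A_k = (j(k), i(k)), 1-based, stored at alignment[k - 1].
Alignment = List[Tuple[int, int]]


def conditional_context(
    alignment: Alignment,
    k_i: int,
    m: int,
    source: Sequence[str],
    target: Sequence[str],
) -> Tuple[List[str], List[str]]:
    """Return the history (e_{i(k_i-m)}^{i-1}, f_{j(k_i-m)}^{j(k_i)}) of Eq. (9)."""
    start = max(1, k_i - m)  # assumption: clamp at the first alignment point
    j_start, i_start = alignment[start - 1]
    j_cur, i_cur = alignment[k_i - 1]
    target_history = list(target[i_start - 1:i_cur - 1])  # e_{i(k_i-m)} .. e_{i-1}
    source_window = list(source[j_start - 1:j_cur])       # f_{j(k_i-m)} .. f_{j(k_i)}
    return target_history, source_window


# Invented monotone alignment with 8 points; A_8 = (6, 6) aligns e_6 to f_6.
source = [f"f{j}" for j in range(1, 7)]
target = [f"e{i}" for i in range(1, 7)]
alignment = [(1, 1), (1, 2), (2, 3), (3, 3), (4, 4), (4, 5), (5, 5), (6, 6)]
history, window = conditional_context(alignment, k_i=8, m=5, source=source, target=target)
```

The result is `history == ["e3", "e4", "e5"]` and `window == ["f2", ..., "f6"]`. As with an $n$-gram model, increasing $m$ lengthens the history at the cost of sparser statistics.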

Additionally, the dependency of the alignment probability $Pr(A_{k_i} \mid A_1^{k_{i-1}}, f_1^J)$ is restricted to the previous alignment point $A_{k_{i-1}}$ and the current source word $f_{j(k_i)}$. As only the differences between $j(k_i)$ and $j(k_{i-1})$ are considered, $A_{k_i} = (j(k_i), i)$ can be simplified to $j(k_i)$:

$$Pr(A_{k_i} \mid A_1^{k_{i-1}}, f_1^J) = p\left(j(k_i) \mid j(k_{i-1}), f_{j(k_i)}\right) . \tag{10}$$

In a second step, the conditional probability $p(j(k_i) \mid j(k_{i-1}))$ is replaced by the Bakis model known from speech recognition [5]:

$$\delta = \begin{cases} 0 & \text{if } j(k_i) = j(k_{i-1}) \\ 1 & \text{if } j(k_i) = j(k_{i-1}) + 1 \\ 2 & \text{if } j(k_i) > j(k_{i-1}) + 1 \end{cases} \tag{11}$$
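The three cases of Equation (11) translate directly into a small Python function. Equation (11) defines no class for backward jumps; since the alignment is assumed to be monotone, this sketch rejects them with an error, which is a choice made here for illustration.

```python
def bakis_delta(j_cur: int, j_prev: int) -> int:
    """Jump class delta of Eq. (11) between j(k_{i-1}) and j(k_i).

    0: stay on the same source position,
    1: advance by exactly one source position,
    2: skip one or more source positions.
    """
    if j_cur == j_prev:
        return 0
    if j_cur == j_prev + 1:
        return 1
    if j_cur > j_prev + 1:
        return 2
    # Backward jumps are undefined in Eq. (11); a monotone alignment is assumed.
    raise ValueError("backward jump: alignment is not monotone")


# Source positions j(k_1), j(k_2), ... of a toy monotone alignment.
positions = [1, 1, 2, 4, 5]
deltas = [bakis_delta(cur, prev) for prev, cur in zip(positions, positions[1:])]
```

For this toy sequence, `deltas` is `[0, 1, 2, 1]`: a stay, a single step, a skip over position 3, and another single step.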

Fig. 2 shows the different values $\delta$ can take. In this example, the target word to be translated is $e_6$. Consequently, $k_6$ is 8. For a model order of $m = 5$, the gray alignment points are the considered context, which corresponds to the words $e_3^5$ and $f_2^6$.

Applying the assumptions of Equations (9) and (10) leads to:

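$$
\begin{aligned}
Pr(e_1^I \mid f_1^J) &= \sum_{A} \prod_{i=1}^{I} p\left(e_i \mid e_{i(k_i-m)}^{i-1}, f_{j(k_i-m)}^{j(k_i)}, A\right) \cdot p\left(j(k_i) \mid j(k_{i-1}), f_{j(k_i)}\right) && (12)\\
&= \sum_{A} \prod_{i=1}^{I} p\left(e_i \mid \delta, e_{i(k_i-m)}^{i-1}, f_{j(k_i-m)}^{j(k_i)}\right) \cdot p\left(\delta \mid f_{j(k_i)}\right) && (13)
\end{aligned}
$$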

The alignment information is contained in the function $k_i$, and consequently $A$ is omitted in (13).

Instead of summing over all possible alignments, we use the maximum approximation, which considers only the alignment leading to the highest value. This approximation reduces the complexity of the search procedure:

$$Pr(e_1^I \mid f_1^J) \approx \max_{A} \prod_{i=1}^{I} p\left(e_i \mid \delta, e_{i(k_i-m)}^{i-1}, f_{j(k_i-m)}^{j(k_i)}\right) \cdot p\left(\delta \mid f_{j(k_i)}\right) . \tag{14}$$
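The difference between the sum in Equation (13) and the maximum approximation in Equation (14) can be illustrated with a Python sketch in which each candidate alignment is already reduced to its per-word factor pairs $(p(e_i \mid \delta, \ldots),\ p(\delta \mid f_{j(k_i)}))$. This representation and all probability values are invented for illustration; a real decoder would compute the factors from trained models during search.

```python
import math
from typing import Dict, List, Tuple

# Per target word: (p(e_i | delta, context), p(delta | f_{j(k_i)})). Values are invented.
Factors = List[Tuple[float, float]]


def alignment_log_prob(factors: Factors) -> float:
    """log of prod_i p(e_i | ...) * p(delta | f): one summand of Eq. (13)."""
    return sum(math.log(p_lex) + math.log(p_jump) for p_lex, p_jump in factors)


def sum_over_alignments(alignments: Dict[str, Factors]) -> float:
    """Eq. (13): total probability, summed over all candidate alignments."""
    return sum(math.exp(alignment_log_prob(f)) for f in alignments.values())


def max_approximation(alignments: Dict[str, Factors]) -> Tuple[str, float]:
    """Eq. (14): keep only the best alignment; returns (name, probability)."""
    best = max(alignments, key=lambda a: alignment_log_prob(alignments[a]))
    return best, math.exp(alignment_log_prob(alignments[best]))


alignments = {
    "A1": [(0.5, 0.8), (0.4, 0.9)],  # product 0.144
    "A2": [(0.2, 0.5), (0.3, 0.6)],  # product 0.018
}
best, p_max = max_approximation(alignments)
p_sum = sum_over_alignments(alignments)
```

Here `p_max` is 0.144 and `p_sum` is 0.162: the maximum always lower-bounds the sum, but it only requires tracking the single best alignment per hypothesis.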


[Figure, two panels. First panel, “Original alignment, source sentence $f$”: source “prendo un menu hamburger numero due”, target “i’ll have one number two hamburger lunch”. Second panel, “Monotone alignment $\breve{A}$, reordered source $\breve{f}$”: source “prendo un numero due hamburger menu”, same target.]

Fig. 3. A monotone alignment obtained by reordering the source sentence $f$.
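The effect shown in Fig. 3 can be reproduced with a hypothetical reordering rule: sort the source words by the first target position each one is aligned to. The word alignment below is an assumption chosen to fit the example sentences, since the links are not given in the text, and the rule is only one simple way to make an alignment monotone, not necessarily the procedure used by the authors. For simplicity, this sketch requires every source word to be aligned.

```python
from typing import Dict, List, Sequence


def reorder_source(source: Sequence[str], links: Dict[int, List[int]]) -> List[str]:
    """Sort source words by their first aligned target position (ties keep order).

    links maps a 1-based source position j to the 1-based target positions
    it is aligned to (hypothetical input format).
    """
    for j in range(1, len(source) + 1):
        if not links.get(j):
            raise ValueError(f"source word {j} is unaligned")
    order = sorted(range(1, len(source) + 1), key=lambda j: (min(links[j]), j))
    return [source[j - 1] for j in order]


source = "prendo un menu hamburger numero due".split()
# Assumed links to the target "i'll have one number two hamburger lunch".
links = {1: [1, 2], 2: [3], 3: [7], 4: [6], 5: [4], 6: [5]}
reordered = reorder_source(source, links)
```

With these links, `reordered` is “prendo un numero due hamburger menu”, the reordered source of the second panel, and the alignment to the target becomes monotone.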

5 Feature Functions

In the log-linear approach, six feature functions were used:

$$
\begin{aligned}
h_1(e_1^I, f_1^J) &= \log Pr(e_1^I \mid f_1^J) && \text{posterior probability}\\
h_2(e_1^I, f_1^J) &= \log Pr(f_1^J \mid e_1^I) && \text{inverse posterior probability}\\
h_3(e_1^I, f_1^J) &= I && \text{word penalty}\\
h_4(e_1^I, f_1^J) &= \log Pr(e_1^I) && \text{target language model}\\
h_5(e_1^I, f_1^J) &= \log Pr(\breve{f}_1^J) && \text{language model for reordered source}\\
h_6(e_1^I, f_1^J) &= \operatorname{reorder}(f_1^J, \breve{f}_1^J) && \text{reordering cost}
\end{aligned}
$$

Often, the translation system produces very short sentences $e_1^I$, because the translation probability decreases with each additional word. The word penalty feature function can counterbalance this tendency: with an appropriately signed weight $\lambda_3$, a bonus is added for each additional word (under the maximization in Equation (4), this corresponds to $\lambda_3 > 0$). The language model for $Pr(\breve{f}_1^J)$ is trained on the reordered source sentences of the training corpus. It can learn reordered word sequences which occur in the training corpus and thus complements the simple reordering cost $h_6$.
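A toy Python illustration of how the word penalty $h_3 = I$ interacts with the maximization in Equation (4). It uses only three of the six feature functions, and all feature values and weights are invented. Without the word-count term, the shorter candidate wins because its log probabilities are higher; a positive $\lambda_3$ rewards each word and reverses the decision.

```python
from typing import Dict, List, Sequence


def score(features: Sequence[float], weights: Sequence[float]) -> float:
    """Log-linear score sum_m lambda_m * h_m, maximized as in Eq. (4)."""
    return sum(lam * h for lam, h in zip(weights, features))


# Invented feature values [h_1 (log posterior), h_3 (word count I), h_4 (log LM)].
candidates: Dict[str, List[float]] = {
    "i'll have": [-3.0, 2.0, -4.0],
    "i'll have one number two": [-4.0, 5.0, -6.0],
}


def best(weights: Sequence[float]) -> str:
    return max(candidates, key=lambda e: score(candidates[e], weights))


without_word_term = best([1.0, 0.0, 1.0])  # lambda_3 = 0: scores -7.0 vs -10.0
with_word_bonus = best([1.0, 1.5, 1.0])    # lambda_3 = 1.5: scores -4.0 vs -2.5
```

In practice, $\lambda_3$ is tuned on the development corpus together with the other scaling factors rather than set by hand as here.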

6 Combining the PBT Model and the Conditional Model

Additionally, the PBT model and the conditional model can be combined to take advantage of their respective benefits:


– The PBT model is able to reorder whole phrases instead of single words. It can rule out many reorderings in which related words are separated.

– The conditional model considers a context beyond phrase boundaries.

A standard method for combining different models is N-best list rescoring: first, one model is used to translate the source text. For each sentence, the N best translations are stored along with their model costs. In a second step, the other model is used to score these translations: for each translation produced by the first model, its cost with respect to the second model is calculated. Finally, a log-linear combination of both costs is computed, whose weights are optimized on the development corpus.
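The rescoring steps above can be sketched in Python as follows. The N-best list, the second model’s costs (treated here as negative log scores, so lower is better) and the combination weights are all hypothetical; in the described setup, the second model would be the conditional model, and the weights would be optimized on the development corpus.

```python
from typing import Callable, List, Tuple


def rescore_nbest(
    nbest: List[Tuple[str, float]],
    second_model_cost: Callable[[str], float],
    w_first: float,
    w_second: float,
) -> str:
    """Pick the hypothesis with the lowest weighted sum of both model costs."""
    def combined_cost(entry: Tuple[str, float]) -> float:
        hypothesis, first_cost = entry
        return w_first * first_cost + w_second * second_model_cost(hypothesis)

    return min(nbest, key=combined_cost)[0]


# Invented 2-best list from the first model: (hypothesis, cost).
nbest = [
    ("i'll have a number two hamburger", 10.0),
    ("i'll have one number two hamburger lunch", 10.5),
]
# Invented costs that a second model might assign to the same hypotheses.
second_costs = {
    "i'll have a number two hamburger": 9.0,
    "i'll have one number two hamburger lunch": 6.0,
}
first_only = rescore_nbest(nbest, lambda h: second_costs[h], 1.0, 0.0)
combined = rescore_nbest(nbest, lambda h: second_costs[h], 1.0, 1.0)
```

With weight 0 for the second model, the first model’s best hypothesis is kept; with equal weights, the combined costs are 19.0 and 16.5, so the second hypothesis is selected.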

In the model combination, the reordering which was computed by the PBT model when translating a sentence is also used by the conditional model. The conditional model performs a monotone translation of the reordered source sentence produced by the PBT model. Thus, the model combination takes advantage of the better reordering capabilities of the PBT model. The model combination led to an improvement over the PBT model, as can be seen in the following section.

7 Translation Results

7.1 Evaluation Criteria

Evaluating the quality of a translation is in itself a difficult task. In the experiments presented here, we rely on two criteria, TER and BLEU.

- TER (Translation Edit Rate) [8]. The TER criterion is a recent refinement of the word error rate (WER) criterion. The WER criterion is defined as the edit distance (minimum number of insertions, deletions and substitutions) between the translation and a reference translation. In addition, TER allows a sequence of contiguous words to be moved to another place at the cost of a single edit. This enhancement is natural, as phrases can often be placed at different positions in the sentence without altering its meaning.

- BLEU (BiLingual Evaluation Understudy) [9]. BLEU is the current de facto standard in machine translation evaluation. When several reference translations are used, BLEU can capture the variability that translations can have. It evaluates the translated sentence by calculating an n-gram precision for n ∈ {1, 2, 3, 4}. In addition, a brevity penalty is calculated to penalise translations which are too short. Note that a good translation is indicated by a high BLEU score, whereas for TER lower values are better. BLEU has been reported to correlate well with human evaluation. The parameters of the model presented in this work were optimized with respect to BLEU.
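The two criteria can be sketched in Python as below. The first part computes WER as a word-level Levenshtein distance normalized by the reference length; it does not implement the phrase shifts that distinguish TER. The second part computes corpus-level BLEU with clipped n-gram precisions over multiple references, the geometric mean for n = 1 to 4, and the brevity penalty, choosing the reference length closest to each hypothesis (shorter on ties). Whitespace tokenization and these conventions are simplifying assumptions, not the official scoring tools used in the evaluation.

```python
import math
from collections import Counter
from typing import List, Sequence


def word_edit_distance(hyp: Sequence[str], ref: Sequence[str]) -> int:
    """Levenshtein distance on words: insertions, deletions, substitutions."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        cur = [i] + [0] * len(ref)
        for j, r in enumerate(ref, start=1):
            cur[j] = min(prev[j] + 1,                # delete hypothesis word h
                         cur[j - 1] + 1,             # insert reference word r
                         prev[j - 1] + int(h != r))  # substitution or match
        prev = cur
    return prev[-1]


def wer(hyp: str, ref: str) -> float:
    """WER against a single reference; TER would additionally allow shifts."""
    ref_words = ref.split()
    return word_edit_distance(hyp.split(), ref_words) / len(ref_words)


def ngram_counts(words: Sequence[str], n: int) -> Counter:
    return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))


def corpus_bleu(hyps: List[str], refs: List[List[str]], max_n: int = 4) -> float:
    """Corpus BLEU: clipped n-gram precisions, geometric mean, brevity penalty."""
    matches, totals = [0] * max_n, [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref_set in zip(hyps, refs):
        h = hyp.split()
        rs = [r.split() for r in ref_set]
        hyp_len += len(h)
        # Effective reference length: closest to the hypothesis (shorter on ties).
        ref_len += min((abs(len(r) - len(h)), len(r)) for r in rs)[1]
        for n in range(1, max_n + 1):
            h_counts = ngram_counts(h, n)
            max_ref: Counter = Counter()
            for r in rs:
                max_ref |= ngram_counts(r, n)  # element-wise maximum over references
            matches[n - 1] += sum(min(c, max_ref[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())
    if hyp_len == 0 or min(matches) == 0:
        return 0.0  # some n-gram order has no match: the geometric mean is zero
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return brevity_penalty * math.exp(log_precision)
```

For example, `wer("i have one hamburger", "i'll have one hamburger")` is 0.25, and a hypothesis identical to one of its references scores a BLEU of 1.0. Dropping the last word of a six-word reference keeps all precisions at 1 but applies a brevity penalty of exp(1 - 6/5).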

7.2 Corpus Statistics

For the following experiments, the two language pairs Chinese-English and Italian-

English were chosen from the corpus used in the “International Workshop on Spoken

Language Translation” IWSLT 2007 [11]. The corpus is a further development of the the

multilingual BTEC (“Basic Travel Expression Corpus”) corpus which contains typical

150

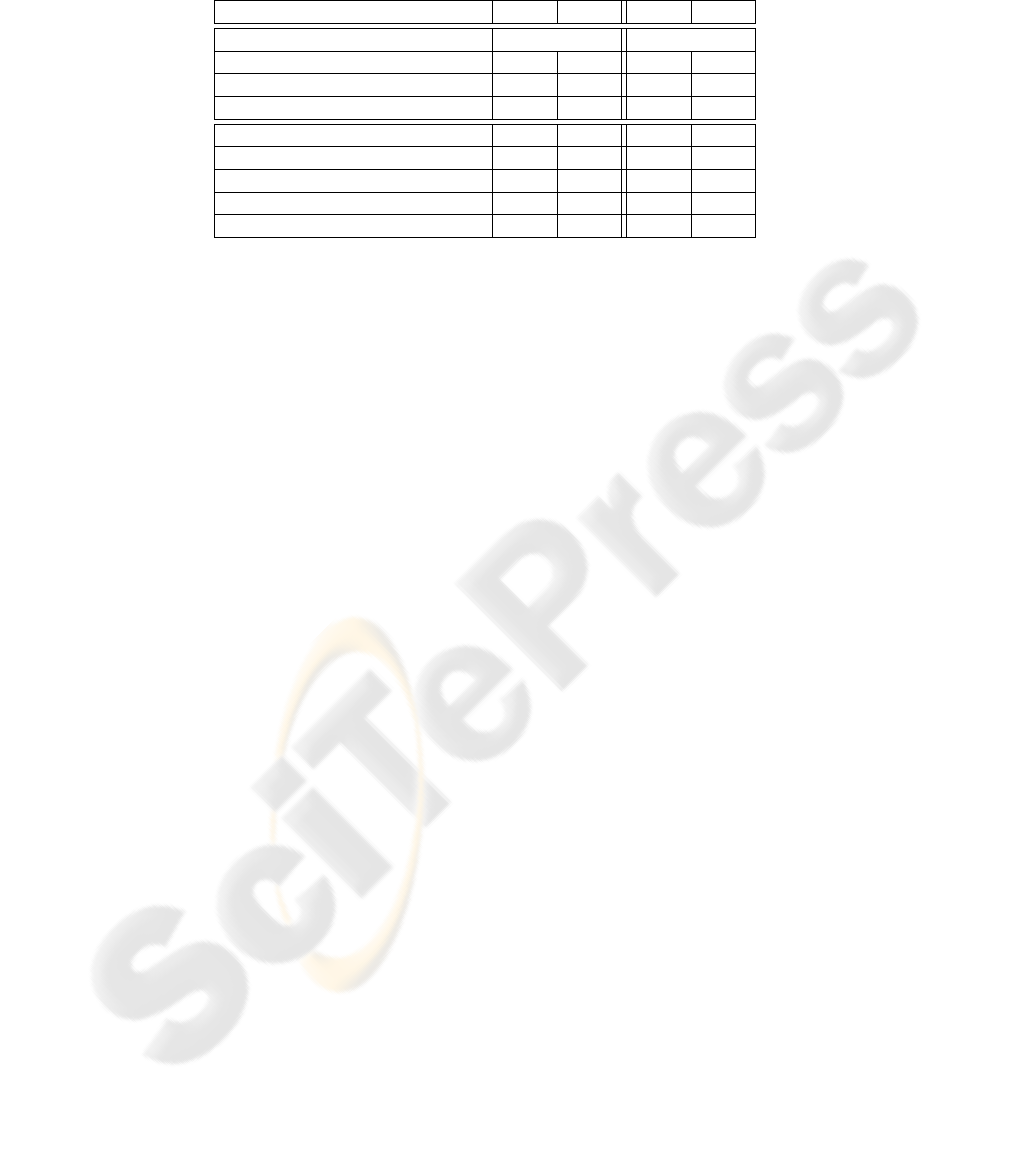

Table 1. Corpus Statistics IWSLT 2007 Chinese-English and Italian-English.

Chinese English Italian English

Training data: Sentences 42,942 22,995

Running Words 390,335 420,431 164,715 222,005

Vocabulary 10,385 9,933 10,329 7,794

Singletons 3,696 3,937 4,729 3,355

Test data: Sentences 489 6 · 489 724 4 · 724

Running Words 3,256 22,574 6,540 36,725

Vocabulary 885 1,527 735 940

OOVs (running words) 70 4,377 449 6,799

OOVs (in vocabulary) 69 394 110 288

phrases and sentences from the travelling domain. In Table 1, some corpus statistics

are summarized. In the Chinese case, six reference translations were given for each

sentence in the test set, in the Italian case, four reference translations were given.

To see whether the extension of the context beyond phrase boundaries leads to an improvement in translation quality, the conditional model is compared to the PBT system which was implemented at the Chair of Computer Science 6, RWTH Aachen University [12]. Moreover, a model combination of the PBT model and the conditional model is evaluated.

7.3 Chinese-English

To obtain the optimal window length of the reordering graph, we performed experiments on the development corpus with different window lengths. Fig. 4 shows the influence of the window length on the translation quality. It highlights the importance of reordering for the Chinese-English language pair: a non-monotone translation leads to significantly better results than a monotone translation (l = 1). For window lengths greater than l = 7, the search space becomes too large to be processed; heavy pruning had to be applied, which led to a decrease in translation quality.

The results obtained on the Chinese-English test data are summarized in Table 2. A model order of m = 8 was used.

The conditional model does not achieve the same translation quality as the PBT model. One reason is the cost function of the reordering graphs: the assumption that a monotone translation is more probable than a translation in which many words are reordered does not hold for the Chinese-English language pair, because the sentence structures of the two languages often differ considerably. The model combination of the PBT model and the conditional translation model led to an absolute improvement on the test corpus of 0.5 BLEU and 0.7 TER.

7.4 Italian-English

For the Italian-English language pair, the reordering problem is not as pronounced as for the Chinese-English case. Optimal results on the development corpus were obtained for local reorderings with a window length of l = 3 and a model order of m = 4.


In the future, advanced smoothing methods should be applied to the conditional model. Moreover, a better reordering model should be developed to take into account differences in word order.

References

1. Brown, P., Della Pietra, S., Della Pietra, V., Mercer, R.: The Mathematics of Statistical Machine Translation: Parameter Estimation. Computational Linguistics, Vol. 19, No. 2, pages 263–311, 1993.

2. Zens, R., Och, F., Ney, H.: Phrase-Based Statistical Machine Translation. In M. Jarke, J. Koehler, and G. Lakemeyer, editors, 25th German Conf. on Artificial Intelligence (KI2002), volume 2479 of Lecture Notes in Artificial Intelligence (LNAI), pages 18–32, Aachen, Germany, September 2002.

3. Casacuberta, F., Vidal, E.: Machine Translation with Inferred Stochastic Finite-State Transducers. Computational Linguistics, Vol. 30, No. 2, pages 205–225, Cambridge, MA, USA, 2004.

4. Och, F., Ney, H.: Discriminative Training and Maximum Entropy Models for Statistical Machine Translation. In: Proc. of the 40th Annual Meeting of the Association for Computational Linguistics (ACL), pages 295–302, Philadelphia, PA, July 2002.

5. Bakis, R.: Continuous Speech Word Recognition via Centisecond Acoustic States. In: Proc. ASA Meeting, Washington, DC, April 1976.

6. Ney, H., Martin, S., Wessel, F.: Statistical Language Modeling Using Leaving-One-Out. In: Corpus-Based Methods in Language and Speech Processing, pages 174–207, 1997.

7. Kanthak, S., Vilar, D., Matusov, E., Zens, R., Ney, H.: Novel Reordering Approaches in Phrase-Based Statistical Machine Translation. In: ACL Workshop on Building and Using Parallel Texts, pages 167–174, Association for Computational Linguistics, Ann Arbor, Michigan, June 2005.

8. Snover, M., Dorr, B., Schwartz, R., Makhoul, J., Micciulla, L., Weischedel, R.: A Study of Translation Error Rate with Targeted Human Annotation. University of Maryland, College Park and BBN Technologies, July 2005.

9. Papineni, K., Roukos, S., Ward, T., Zhu, W.: Bleu: a Method for Automatic Evaluation of Machine Translation. Technical Report RC22176 (W0109-022), IBM Research Division, Thomas J. Watson Research Center, 2001.

10. Takezawa, T., Sumita, E., Sugaya, F., Yamamoto, H., Yamamoto, S.: Toward a Broad-Coverage Bilingual Corpus for Speech Translation of Travel Conversations in the Real World. In: Proceedings of the Third International Conference on Language Resources and Evaluation (LREC 2002), pages 147–152, 2002.

11. Fordyce, C.: Overview of the IWSLT 2007 Evaluation Campaign. In: International Workshop on Spoken Language Translation (IWSLT), Trento, Italy, October 2007.

12. Mauser, A., Vilar, D., Leusch, G., Zhang, Y., Ney, H.: The RWTH Machine Translation System for IWSLT 2007. In: International Workshop on Spoken Language Translation (IWSLT), pages 161–168, Trento, Italy, October 2007.
