SCIENTIFIC

DOCUMENTS MANAGEMENT SYSTEM

Application of Kohonens Neural Networks with Reinforcement in Keywords

Extraction

Bła

˙

zej Zyglarski

Faculty of Mathematics And Computer Science, Nicolaus Copernicus University, Chopina 12/18, Toru

´

n, Poland

Piotr Bała

Faculty of Mathematics And Computer Science, Nicolaus Copernicus University, Chopina 12/18, Toru

´

n, Poland

ICM, Warsaw University, ul.Pawinskiego 5a, Warsaw, Poland

Keywords:

Keywords extraction.

Abstract:

Keywords choice is an important issue during the document analysis. We’ve developed the document man-

agement system which performs the keywords-oriented document comparison. In this article we presents our

approach to keywords generation, which uses self-organizing Kohonen neural networks with reinforcement.

This article shows the relevance of this method to the standard statistical method.

1 INTRODUCTION

This article contains complete description and com-

parison of two approaches of keywords choosing: the

statistical one and the neural network based one. Sec-

ond one consists of two types: the simple neural net-

work and the neural network with the reinforcement.

In both cases we are using as the analysis example

the same input string built over a two letters alpha-

bet, which is ”aab abb abaa abb bbba abb bbaa baa

bbaa bbbb bbba bbbb”. The last part of this docu-

ment shows effectiveness of the reinforced learning

and other algorithms. All presented approaches was

tested with example set of about 200 documents.

2 THE STATISTICAL APPROACH

The standard approach to selecting keywords is to

count their appearance in the input text. As it was

described in narimura, most of frequent words are ir-

relevant. The next step is deleting trash words with

use of specific word lists.

In order to speed up the trivial statistical algorithm

for keywords generation, weve developed the algo-

rithm which constructs the tree for text extracted from

a document. The tree is built letter by letter and each

letter is read exactly once, which guarantees that the

presented algorithm works in a linear time.

2.1 An Algorithm

Denote the plain text extracted from an i-th document

as T (i). Let

b

T (i) be a lowercase text devoid of punc-

tuation. In order to select appropriate keywords, we

need to build a tree (denoted as τ(

b

T (i))).

1. Let r be a root of τ(

b

T (i)). Let p be a pointer,

pointing at r

p = r (1)

2. Read a letter a from the source text.

a = ReadLetter(

b

T (i)) (2)

3. If there exists an edge labeled by a, leaving a ver-

tex pointed by p, and coming into some vertex s

then

p = s (3)

count(p) = count(p) + 1 (4)

else, create a new vertex s and a new edge labeled

by a leaving vertex p and coming into vertex s

p = s (5)

counter(p) = 1 (6)

55

Zyglarski B. and Bała P. (2009).

SCIENTIFIC DOCUMENTS MANAGEMENT SYSTEM - Application of Kohonens Neural Networks with Reinforcement in Keywords Extraction.

In Proceedings of the International Conference on Knowledge Management and Information Sharing, pages 55-62

DOI: 10.5220/0002298100550062

Copyright

c

SciTePress

4. If a was a white space character then go back with

the pointer to the root

p = r (7)

5. If

b

T (i) is not empty, then go to step 2.

After these steps every leaf l of τ(

b

T (i)) represents

some word found in the document and count(l) de-

notes appearance frequency of this word. In most

cases the most frequent words in every text are irrele-

vant. We need to subtract them from the result. This

goal is achieved by creating a tree ρ which contains

trash words.

1. For every document i

ρ = ρ + τ(

b

T (i)) (8)

Every time a new document is analyzed by the sys-

tem, ρ is extended. It means, that ρ contains most

frequent words all over files which implies that these

words are irrelevant (according to different subjects of

documents). The system is also learning new unim-

portant patterns. With increase of analyzed docu-

ments, accuracy of choosing trash words improves.

Let Θ(ρ) be a set of words represented by Θ(ρ).

We should denote as a trash word a word, which fre-

quency is higher than median m of frequencies of

words which belongs to Θ(ρ).

1. For every leaf l ∈ τ(

b

T (i)) If there exist z ∈ ρ,

such as z and l represents the same word and

count(z) > count(m) then

count(l) = 0 (9)

2.2 Limitations

Main limitation of this type of generating keywords

is loosing the context of keywords occurrences. It

means that words which are most frequent (which im-

plies that they are appearing together on the result list)

could be actually not related. The improvement for

this issue is to pay the attention at the context of key-

words. It could be achieved by using neural networks

for grouping words into locally closest sets. In our

approach we can select words which are less frequent,

but their placement indicates, that they are important.

Finally we can give them a better position at the result

list.

3 KOHONEN’S NEURAL

NETWORKS APPROACH

During implementation of the document management

system (Zyglarski et al., 2008) weve discovered previ-

ously mentioned limitations in using simple statistical

methods for keywords generation. Main goal of fur-

ther contemplations was to pay attention of the word

context. In other words we wanted to select most fre-

quent words, which occur in common neighborhood.

Table 1 presents an example text, with discovered

keywords. Presented algorithm selects the most fre-

quent words, which are close enough. It is achieved

with a words categorization (Frank et al., 2000).

This is simple text about keywords generation

(discovery). Of course all keywords are difficult

for automatic generation (or

discovery), but in

limited way we could achieve results, which will

fullfil our needs and retrieve proper

keywords.

During implementation of document management

system we’ve

discovered mentioned previously li-

mitations in using simple statistical methods for

keywords generation.

Main goal of further contemplations was to pay

attention of words context. In other words we

wanted to select most

frequent keywords, which

occur in common neighborhood. Using

neural ne-

tworks

we show disadvantages of statistical key-

words discovery.

Neural networks

based keywords generation is

way much reliable and gives better results, inc-

luding promotion of less frequent keywords.

Others, which can be more

frequent do not have to

be part of the results. Limitations were defe-

ated.

Figure 1: Example keywords selection.

Precise results are shown in the Table 1. Each key-

word is presented as a pair (a keyword, a count). Key-

words are divided into categories with use of Kohonen

neural networks. Each category has assigned rank,

which is related to number of gathered keywords and

their frequency).

Results were filtered using words from ρ defined

in first part of this article.

3.1 An Algorithm

Algorithm of neural networks based keyword discov-

ery consists of 3 parts. At the beginning we have

to compute distances between all words. Then we

have to discover categories and assign proper words

to them. At the end we need to compute the rank od

each category.

3.1.1 Counting Distances

Lets

b

T

f

=

b

T ( f ) be a text extracted from a document

f and

b

T

f

(i) be a word placed at the position i in this

text. Lets denote the distance between words A and B

KMIS 2009 - International Conference on Knowledge Management and Information Sharing

56

Table 1: Example keywords selection.

Category Rank Keyword Count

1 0,94 keywords 8

discovery 3

frequent 3

simple 2

text 1

automatic 1

2 0,58 neural 2

networks 2

discovered 1

neighborhood 1

3 0,50 fulfill 1

4 0,41 statistical 2

words 2

contemplations 1

promotion 1

disadvantages 1

5 0,36 retrieve 1

reliable 1

within the text

b

T

f

as δ

f

(A, B).

δ

f

(A, B) = (10)

= min

i, j∈

{

1,..,n

}

{ki − jk; A =

b

T

f

(i) ∧ B =

b

T

f

( j)} (11)

By the position i we need to understand a number of

white characters read so far during reading text. Ev-

ery sentence delimiter is treated like a certain amount

W of white characters in order to avoid combining

words from separate sequences (we empirically chose

W = 10). Weve modified previously mentioned tree

generation algorithm. Analogically we are construct-

ing the tree, but every time we reached the leaf (which

represents a word) we are updating the distances ma-

trix M (presented on figure 2), which is n × n upper-

triangle matrix and n ∈ N means number of distinct

words read so far.

ξ

f

1 2 ... k ... n

1 0 *

2 0 *

... ... *

k 0* * *

... ...

n 0

Figure 2: Distances matrix.

Generated tree (presented on figure 3) is used for

checking previous appearance of actually read word,

assigning the word identifier (denoted as ξ

f

( j) =

ξ(

b

T

f

( j)),ξ

f

( j) ∈ N ) and to decide whether update

existing matrix elements or increase matrix’s dimen-

sion by adding new row and new column. Figure 2

marks with dashed borders fields updateable in the

first case. For updating existing matrix elements and

counting new ones we also use the array with posi-

tions of last occurrences of all read words. Lets de-

note this array as λ(ξ

f

( j))

n=1

n=2

n=3

n=k

.

.

.

Figure 3: Word tree.

We need to consider two cases:

1. Lets assume that j − 1 words was read from input

text and a word with position j is read for the first

time.

∀

i< j

b

T

f

( j) 6=

b

T

f

(i) (12)

λ(ξ

f

( j)) = j (13)

∀

k∈{1,...,ξ

f

j}

M[k, ξ

f

( j)] = | j − λ(k)| (14)

This case is shown on figures 4 and 5, which con-

tains a visualization of the state of am algorithm

after reading three first words from example string

”aab abb abaa — abb bbba abb bbaa baa bbaa

bbbb bbba bbbb”. At the figure 5 there is also

presented the table of last occurrences.

1

1

1

1

1

2

3

3

n=1

n=2

n=3

a

a

a

a

b

b

b

Figure 4: The word tree generated after reading first three

words.

λ(ξ

f

) 1 2 3

ξ

f

1 2 3

aab 1 0 1 2

abb 2 0 1

abaa 3 0

Figure 5: The distance matrix generated after reading first

three words.

SCIENTIFIC DOCUMENTS MANAGEMENT SYSTEM - Application of Kohonens Neural Networks with Reinforcement

in Keywords Extraction

57

2. Lets assume that j − 1 words was read from input

text and a word with position j was read ealier and

already has given a worde identifier.

∃

i< j

b

T

f

( j) =

b

T

f

(i) (15)

We have to update the last occurrences table by

λ(ξ

f

( j)) = j (16)

and update appropriate row and column in exist-

ing matrix

∀

k∈{1,...,ξ

f

( j)−1}

M[k, ξ

f

( j)] = ∆(k, ξ

f

( j)) (17)

∀

k∈{ξ

f

( j)+1,...,

b

ξ

f

}

M[ξ

f

( j), k] = ∆(ξ

f

( j), k) (18)

where

b

ξ

f

= max

i={1,..., j}

{ξ

f

(i)} (19)

∆(k, l) = min(|λ(k) − λ(l)|, M[k, l]) (20)

This case is shown on figures 6 and 7, which con-

tains visualization of the state of the algorithm af-

ter reading four first words from an example string

”aab abb abaa abb — bbba abb bbaa baa bbaa

bbbb bbba bbbb”. In this case a word ”abb” was

read twice, so we need to update λ array and the

actual distance matrix M.

1

1

1

1

2

3

4

4

n=1

n=2

n=3

a

a

a

a

b

b

b

Figure 6: The word tree generated after reading first four

words.

λ(ξ

f

) 1 4 3

ξ

f

1 2 3

aab 1 0 1 2

abb 2 0 1

abaa 3 0

Figure 7: The distance matrix generated after reading first

four words.

Bold-faced fields indicates values, that could be

evaluated each time using λ array and dont have to

be memorized. According to that observation we

can omit the distance table, using instead of it only

specific lists (Figure 10), containing these elements,

which cannot be evaluated with λ array. Final results

of our example (after reading all words from string

”aab abb abaa abb bbba abb bbaa baa bbaa bbbb bbba

bbbb —”) are shown on figures 8 and 9.

1

1

1

1

1

1

1

1

2

2

3

4

4

5

6

5

11

n=1

n=2

n=3

n=7

n=4

n=5

n=6

a

a

a

a

a

a

a

a

a

b

b

b

b

b

b

b

Figure 8: The word tree generated after reading all words.

λ(ξ

f

) 1 6 3 11 9 8 12

ξ

f

1 2 3 4 5 6 7

aab 1 0 1 2 4 6 7 9

abb 2 0 1 1 1 2 4

abaa 3 0 2 4 5 7

bbba 4 0 2 3 1

bbaa 5 0 1 1

baa 6 0 2

bbbb 7 0

Figure 9: The distance matrix generated after reading all

words.

Presented algorithm guarantees that its result ma-

trix is a matrix of shortest distances between words.

δ

f

(A, B) = M[ξ(A), ξ(B)] (21)

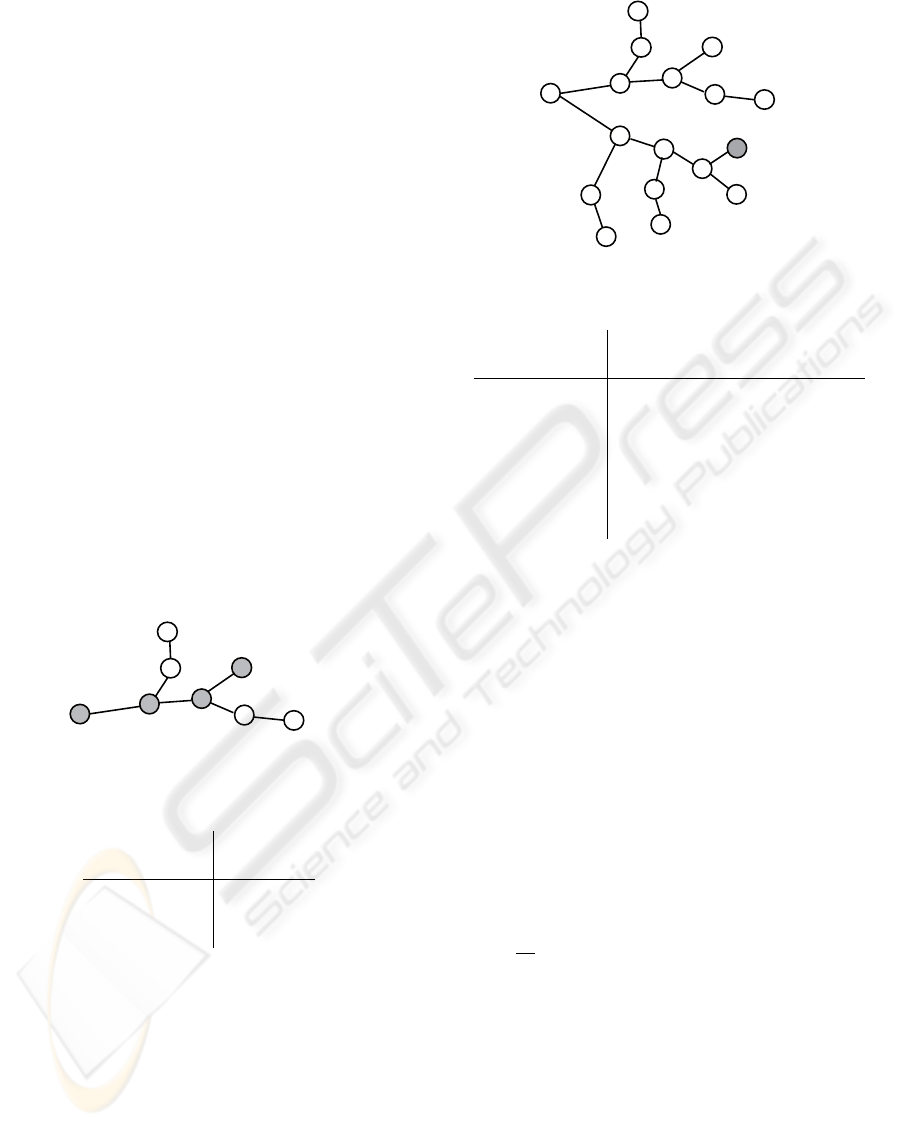

3.1.2 Generating Categories

The knowledge of distances between words in doc-

ument is used to categorize them with use of the

self-organizing Kohonen Neural Network (Figure 11)

(Kohonen, 1998).

This procedure takes 5 steps:

1. Create rectangular m × m network, where m =

b

4

q

b

ξ

f

c. Presented algorithm can distinguish max-

imally m

2

categories. Every node (denoted as

ω

x,y

) is connected with four neighbors and con-

tains a prototype word (denoted as

b

T

f

ω

(x, y)) and a

set (denoted as β

f

ω

(x, y)) of locally close words.

2. For each node choose random prototype of the

category p ∈ {1, 2, ...,

b

ξ

f

}.

3. For each word k ∈ {1, 2, ...,

b

ξ

f

} choose closest

prototype

b

T

f

ω

(x, y) in network and add it to list

β

f

ω

(x, y).

KMIS 2009 - International Conference on Knowledge Management and Information Sharing

58

λ(ξ

f

) λ(1) λ(2) ... λ(

b

ξ

f

)

ξ

f

1 2 ...

b

ξ

f

δ

f

(1, u

1,1

) δ

f

(1, u

2,1

) ... δ

f

(1, u

b

ξ

f

,1

)

δ

f

(1, u

1,2

) δ

f

(1, u

2,2

) ... δ

f

(1, u

b

ξ

f

,2

)

... ... ... ...

δ

f

(1, u

1,k

1

) δ

f

(1, u

2,k

2

) ... δ

f

(1, u

b

ξ

f

,k

b

ξ

f

)

Figure 10: The scheme of the distance table.

Figure 11: The scheme of the neural network for words cat-

egorization with selected element and its neighbors.

4. For each network node ω

x,y

compute a general-

ized median for words from β

f

ω

(x, y) and neigh-

bors lists (denoted as β). A generalized median is

defined as an element A which minimizes a func-

tion:

Σ

B∈β

δ

f 2

(A, B) (22)

Set

b

T

f

ω

(x, y) = A

5. Repeat step 4 until the network is stable. Stability

of the network is achieved, when in two follow-

ing iterations all word lists are unchanged (with-

out paying attention to iternal lists sturcture and

their position in nodes).

Such algorithm divides the set of all words found in

document into separate subsets, which contains only

locally close words. In other words it groups words

into related sets (see Figure 12).

3.1.3 Selecting Keywords

After execution of described procedure all words from

the analyzed document are divided into categories.

We need to choose most important categories and then

select most frequent words among them. Each cate-

gory contains list of words, which are locally close.

As a category rank we could take

ρ

x,y

=

Σ

w∈β

f

ω

(x,y)

count(w)

|β

f

ω

(x, y)|

(23)

Figure 12: The two dimensional example of Kohonen net-

work grouping locally closest words (small circles) into dif-

ferent categories.

Now ordering categories descending by ρ

x,y

we can

select from each a number of most frequent words.

This method allows selecting words, which appear-

ance isnt most frequent in the complete scope of a

document, but is frequent enough in some sub-scope.

The sample results are shown in table 1. The table

presents first eighteen results presented method.

4 THE NEURAL NETWORK

REINFORCEMENT

At this stage of work we’ve decided to extend an

idea of presented algorithm with use of a reinforce-

ment for improving it’s accuracy. The reinforcement

is performed with use of presented in (Zyglarski et al.,

2008) system, in which we have created huge repos-

itory of documents. Articles used for testing algo-

rithms were also put into this repository. It means that

all of them were compared using statistics of words,

statistics of n-grams (Cavnar and Trenkle, 1994) (in

this particular case 3-grams) and Kolmogorov Com-

plexity (Fortnow, 2004). Three kinds of distances be-

tween documents are computed.

Table 2: The comparison of results for the example text.

Statistical Kohonen Reinforced

words 40 words 40 keyword 25

keywords 25 keywords 25 distance 7

text 22 neural 13 neural 13

neural 13 distances 3 statistical 10

abb 13 text 22 context 4

b

T

f

11 simple 5 matrix 11

matrix 11 elements 2 denoted 4

tree 9 reading 8 vertex 3

frequent 9 extracted 3 web 2

b

T 9

b

T

f

11 relevant 2

bbbb 8 matrix 11 string 3

networks 8 distance 7 extracted 3

extracted 3 discovery 5 networks 8

SCIENTIFIC DOCUMENTS MANAGEMENT SYSTEM - Application of Kohonens Neural Networks with Reinforcement

in Keywords Extraction

59

4.1 An Idea of the Reinforcement

Main idea of the reinforcement (Sutton, 1996) is to

modify a behavior of the neural network depending

of a weight of a keywords candidate. At the begin-

ning we need to initiate the weight attribute (with val-

ues from interval [0,1]) of every word from a docu-

ment. Each word, which can be found in previously

mentioned trash word set has weight equal to 0, rest

of them have weights equal to 1. The neural net-

work algorithm is modified in such way, that words

with smaller weight are pushed away from words with

greater weight. It means that they are pushed aside the

main categories. Of course they will have also small

influence on category rank. Moreover we need to add

parent iteration, which will modify weights of words

and repeat neural network steps until proper words

will be selected. After performance of word catego-

rization a set of proposed keywords is generated. At

this stage we need to check every keyword for it’s ac-

curacy. This is performed by checking a number (in

our tests it was 10) of articles (containing tested key-

word) randomly selected from repository and compar-

ing normalized distances between them and the an-

alyzed document. If these documents are relatively

close (in the terms of counted distances) to initial one,

a keyword is prized with increasing it’s weight. If dis-

tances are relatively far, weight is decreased. In other

words, if an selected keyword is good, a network is re-

warded. With this improvement, algorithm continues

with steps of neural network learning.

4.2 The Results Propriety

Methodology of creating repository, which is de-

scribed in (Zyglarski et al., 2008) guarantees, that col-

lected documents has various subjects. They are gath-

ered with using of most frequent words appeared in

each document. Additional variety is an result of the

collection which initiates repository - containing ar-

ticles from various areas of interests. It means that

there is a big chance, for articles containing tested

keyword to be really connected with the same sub-

ject. If a keyword candidate isn’t really a keyword,

these documents will probably differ from tested one

and network will not be reinforced.

5 THE COMPARISON OF

RESULTS

Presented method gives better results than the sim-

ple statistical method. In table 2 we show keywords

found over this article, chosen with using all three

methods (with italic font there are marked actual (sub-

jectively selected by authors) keywords). It’s clear

that Kohonen Networks related methods gives better

results than statistical method and also reinforcement

has very good influence on final results.

0%

5%

10%

15%

20%

25%

30%

35%

40%

45%

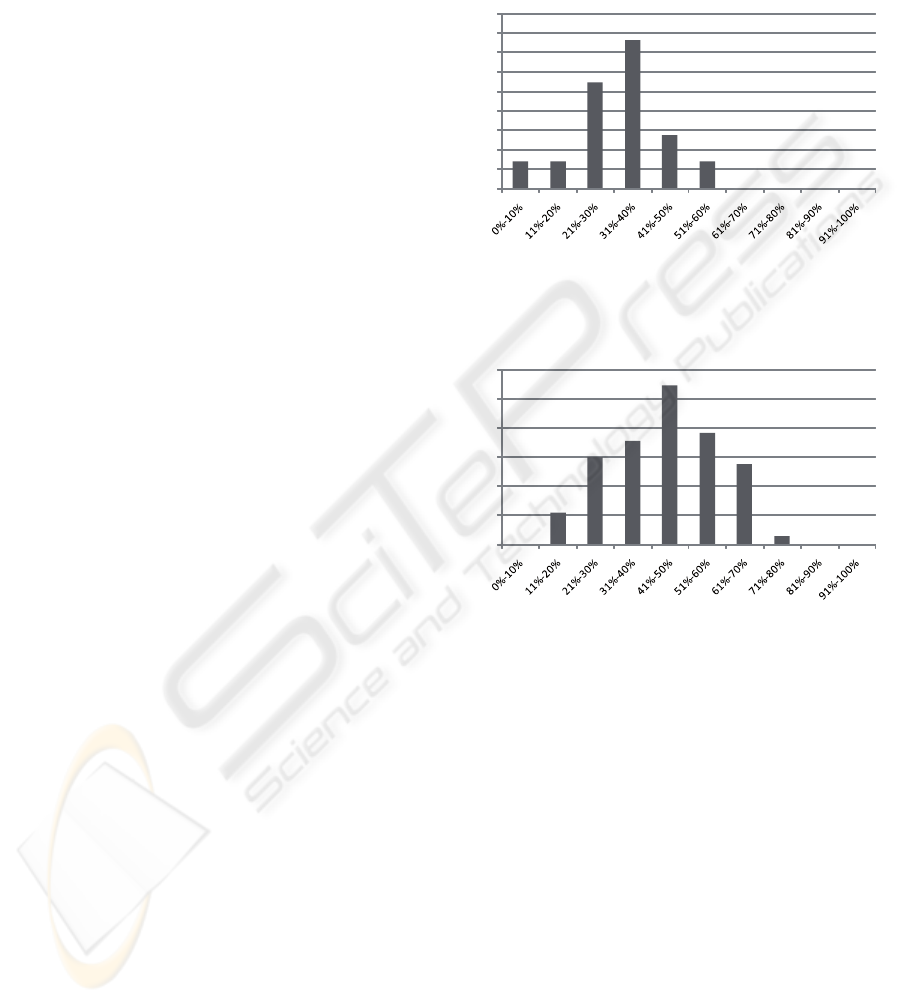

Figure 13: Effects of statistical method. X axis shows ef-

fectiveness, Y axis shows number of documents with pro-

cessed with this effectiveness.

0%

5%

10%

15%

20%

25%

30%

Figure 14: Effects of neural network method. X axis shows

effectiveness, Y axis shows number of documents with pro-

cessed with this effectiveness.

In our tests we’ve used about 200 various articles.

In most cases results given by our approach was more

accurate than other approaches. The accuracy was

checked manually and is subjective. Finally, accord-

ing to executed tests, statistical methods gave very

poor results (see Figure 13). In the best case list of

proposed keywords achieved 65% accuracy. In the

worst case it was about 5%. At the figure 13 there

are presented accuracies of results from tested arti-

cles (for example: in 66% of articles accuracy of key-

words was at level between 20% and 40%). Better

results archived with Kohonen Networks without the

reinforcement are presented at the figure 15). In the

best case, list of proposed keywords achieved almost

80% accuracy. 10%-40% accuracy was in this case

very less often.

The best result were generated with using Rein-

forced Kohonen Networks, where best results reached

KMIS 2009 - International Conference on Knowledge Management and Information Sharing

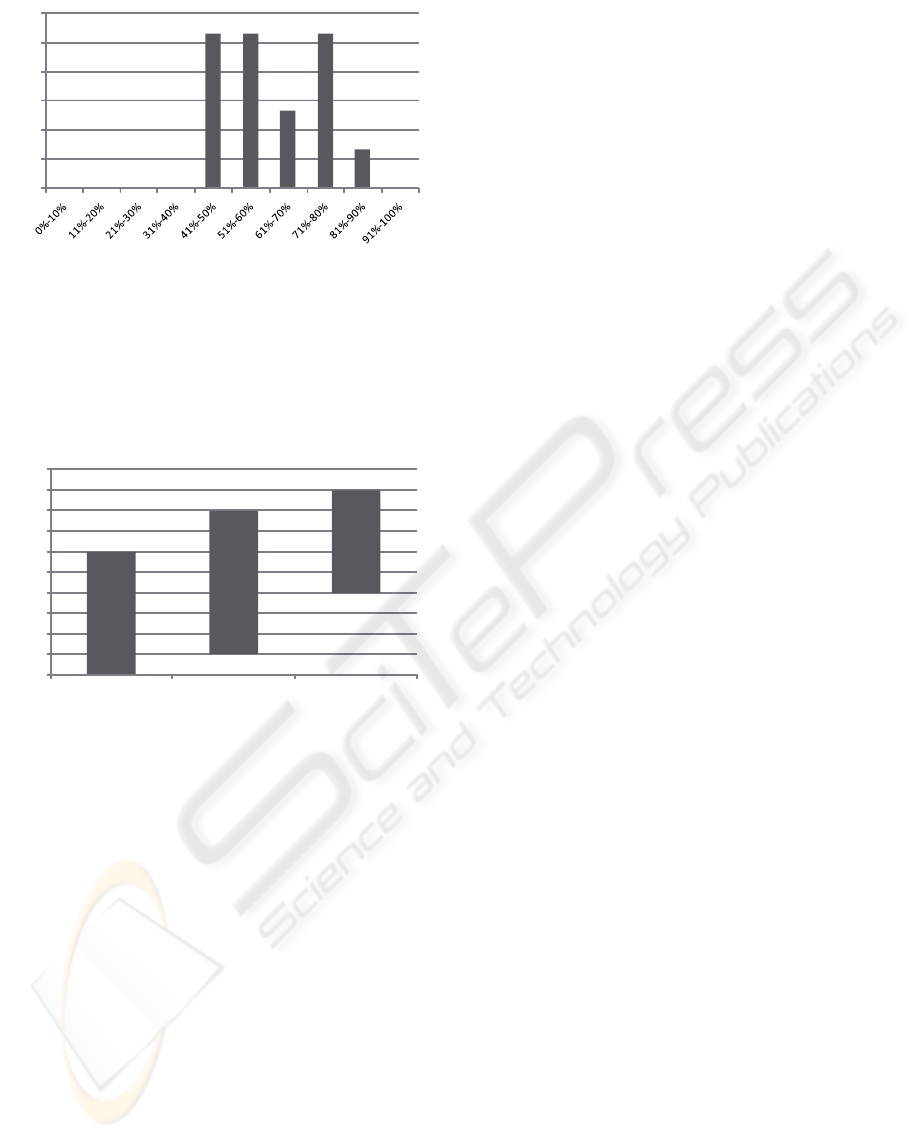

60

0%

5%

10%

15%

20%

25%

30%

Figure 15: Effects of neural network method with reinforce-

ment. X axis shows effectiveness, Y axis shows number of

documents with processed with this effectiveness.

level of 90% accuracy and results between 10%-40%

were completely eliminated. Comparison of effec-

tiveness of all presented methods is shown on fig. 16.

0%

10%

20%

30%

40%

50%

60%

70%

80%

90%

100%

Reinforced learning

Kohonen Networks

Sta!s!cal approach

Figure 16: Comparison of presented methods.

6 DOCUMENT MANAGEMENT

SYSTEM IMPLEMENTATION

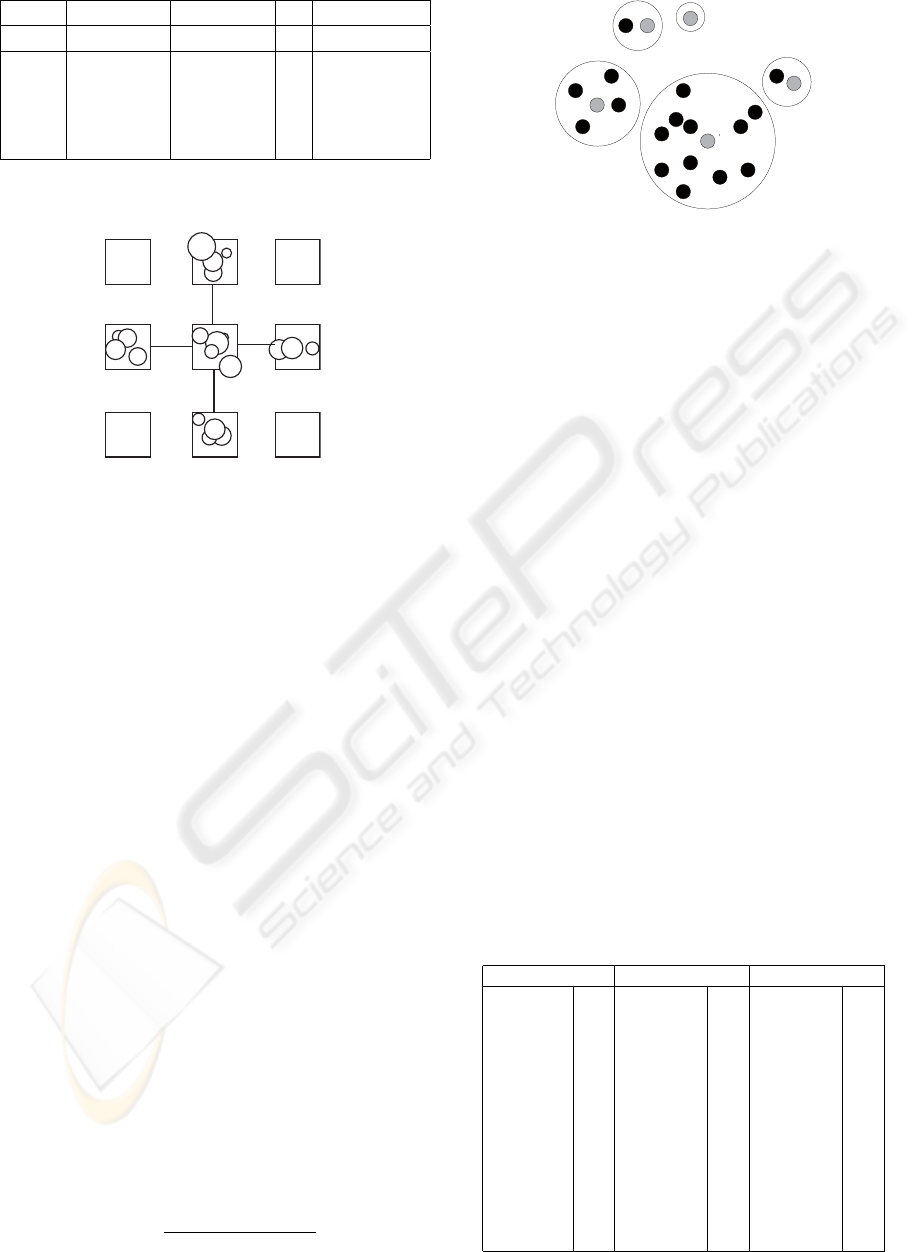

The Web Services based Scientific Article Manager

(Zyglarski et al., 2008) has been designed to provide

the knowledge management for the users using jour-

nal articles and internet documents as main sources

of information. The journal articles are usually re-

trieved in PDF format, however the content can be

processed and analyzed automatically (excluding rel-

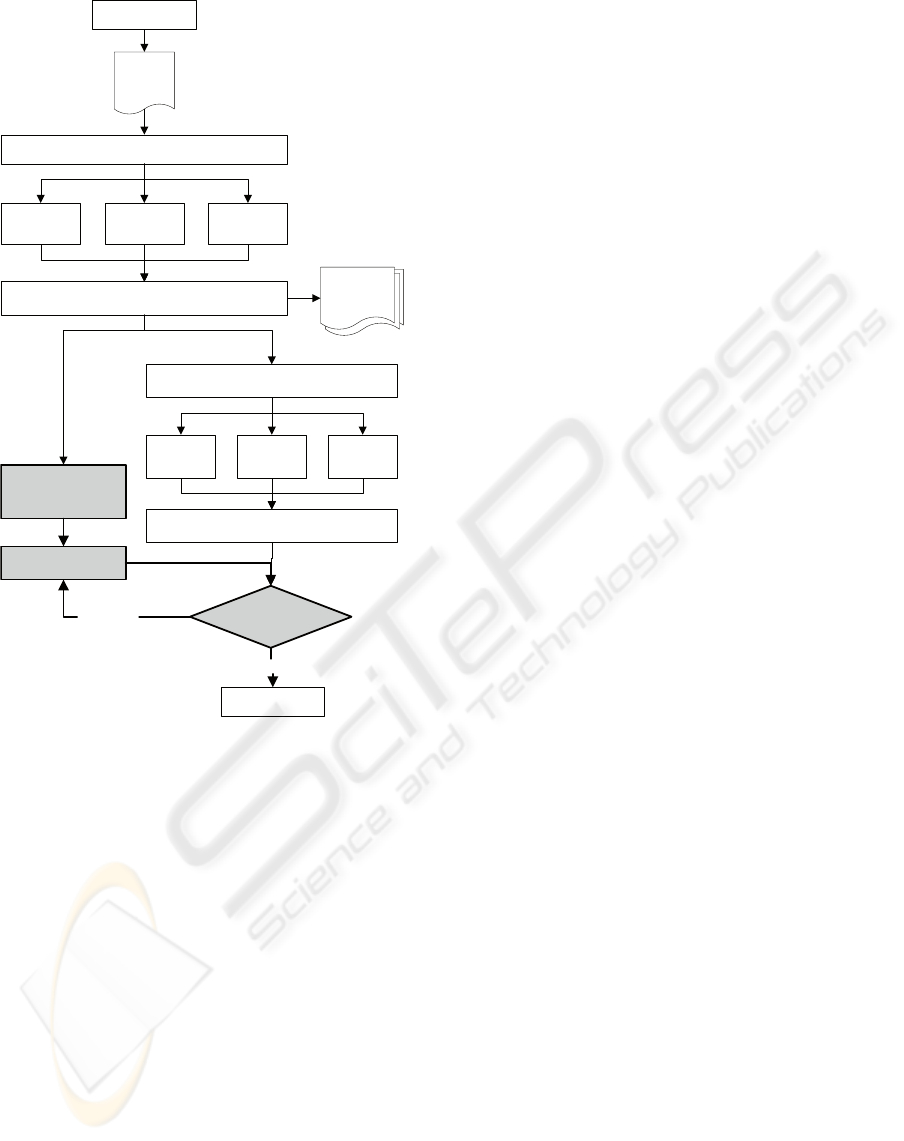

atively small number of documents which are stored

as direct scans). Figure 17 presents the architecture of

learning part of the system. This article shows details

of the gray shaded part of the figure.

During selection of documents connected to ex-

amined article, there are used three different methods

of comparison, which are finally combined. It guaran-

tees accuracy of obtained results, which pay attention

to content and structure of documents:

1. Statistical based, performed by checking apper-

ance of all words in two documents. Each word

is treated as a dimension and a taxicab metric is

used for counting distances betweeen documents.

In this case all punctuation and white characters

are excluded.

2. N-grams based, performed by checking apperance

of all n-grams in two documents. Each n-gram

is treated as a dimension, analogicaly to previous

method.

3. Kolmogorov distance based. Kolmogorov com-

plexity of text X (denoted as K(X)) is length of the

shortest compressed binary file X∗, from which

original text can be reconstructed. Formally Kol-

mogorov Complexity K(x) is defined as length of

the smallest program running on Turing Machine,

which returns word x . In our case Turing Ma-

chine is substituted by compression program and

compressed file responds to the Turing program.

Presented system is implemented with Java lan-

guage and provides data with use of the webservices

technology. We’ve also implemented three kinds of

user interfaces:

1. Servlet based;

2. Portlet based (used with Liferay portal);

3. .Net Windows client (used with Windows ex-

plorer, in future intended to work seamlessly

within Windows explorer)

The users are about to share, and access each other

documents, with apropriate permission subsystem.

7 CONCLUSIONS

Presented approach gives in most cases very good

results of the context aware keywords recognition.

Efectiveness is related with size of the repository of

available documents, which are used for reinforce-

ment of the neural network. It means, they are

more effective while working with large data col-

lections. Moreover continuously working kohonen

network can improve the keywords recognition ev-

ery time new documents are added to the reposi-

tory. If neural network is stable, any change of key-

words weights implies reorganisation of this network,

which (according to size of performed changes - only

weights in proposed keywords are changed) is in most

cases quite fast. Most of the processing time is spent

to check accuracy of proposed keywords. Fortunately,

results of this check are used also for categorization of

documents collected in our Document Management

System.

SCIENTIFIC DOCUMENTS MANAGEMENT SYSTEM - Application of Kohonens Neural Networks with Reinforcement

in Keywords Extraction

61

Document analysis

Scientific

Article

Scientific

Article

Scientific

Article

Repository

Statistical

N-grams

based

Kolmogorov

Compare with other documents from repository

Statistical

N-grams

based

Kolmogorov

Categorize documents

Analyze words

distances

Combine results and generate

documents ranking list

Categorize words

Check results and

prepare reinforcement

INPROPER

PROPER

END

START

Figure 17: The Document Management System implemen-

tation.

REFERENCES

Cavnar, W. B. and Trenkle, J. M. (1994). N-gram-based

text categorization. In Proceedings of SDAIR-94, 3rd

Annual Symposium on Document Analysis and Infor-

mation Retrieval, pages 161–175, Las Vegas, US.

Fortnow, L. (2004). Kolmogorov complexity and computa-

tional complexity.

Frank, E., Chui, C., and Witten, I. H. (2000). Text cate-

gorization using compression models. In In Proceed-

ings of DCC-00, IEEE Data Compression Conference,

Snowbird, US, pages 200–209. IEEE Computer Soci-

ety Press.

Kohonen, T. (1998). The self-organizing map. Neurocom-

puting, 21(1-3):1–6.

Sutton, R. S. (1996). Generalization in reinforcement learn-

ing: Successful examples using sparse coarse coding.

In Advances in Neural Information Processing Sys-

tems 8, volume 8, pages 1038–1044.

Zyglarski, B., Baa, P., and Schreiber, T. (2008). Web ser-

vices based scientific article manager. In Informa-

tion Systems Architecture and Technology, Web Infor-

mation Systems: Models, Concepts and Challenges,

pages 205–215. Wrocaw University of Technology.

APPENDIX

Scientific work cofunded from resources of Euro-

pean Social Fund and Budget within ZPORR (Zin-

tegrowany Program Operacyjny Rozwoju Regional-

nego), Operation 2.6 ”Regional Innovation Strate-

gies and knowledge transfer” of Kujawsko-Pomorskie

voivodship project ”Scholarship for PhD students

2008/2009 - ZPORR”.

KMIS 2009 - International Conference on Knowledge Management and Information Sharing

62