COMBINING TWO DECISION MAKING THEORIES FOR AFFECTIVE LEARNING IN PROGRAMMING COURSES

Efhimios Alepis, Maria Virvou

Department of Informatics, University of Piraeus, 80 Karaoli & Dimitriou St., 18534, Piraeus, Greece

Katerina Kabassi

Department of Ecology and the Environment, Technological Educational Institute of the Ionian Islands

2 Kalvou Sq., 29100, Zakynthos, Greece

Keywords: Affective learning, Bi-Modal Interaction, Multi-Criteria Decision Making.

Abstract: Recently it has been widely acknowledged that the recognition of emotions of computer users can provide

more user friendly systems and eventually increase the productivity of users. User friendly interfaces are

even more important for the design of educational software that is appropriate for young children. In

human-human interaction the expression of emotions of people can be evident in different modes of

interaction, such as in speech, in body language, and in facial expressions. In human-computer interaction

evidence about the users’ emotional states can be drawn by the input devices each user uses for his/her

interaction with a computer. In this paper we describe how two decision making theories have been

combined in order to provide emotional interaction in an educational application. The resulting educational

system is targeted to young children that are taught the basic principles of programming through our own

implementation of a programming language called AffectLOGO.

1 INTRODUCTION

Recent advances in human-computer interaction

indicate the need for user interfaces to recognise

emotions of users while they interact with the

computer. For example, Hudlicka (2003) points out

that an unprecedented growth in HCI has led to a

redefinition of requirements for effective user

interfaces and that a key component of these

requirements is the ability of systems to address

affect. This is especially the case for computer-based

educational applications that are targeted to children

that are in the process of learning. Learning is a

complex cognitive process and it is argued that how

people feel may play an important role in their

cognitive processes as well (Goleman, 1995). At the

same time, many researchers acknowledge that

affect has been overlooked by the computer

community in general (Picard and Klein, 2002).

A remedy for the problem of effectively teaching

children through educational applications may lie in

rendering student-computer interaction more human-

like and affective. To this end, the incorporation of

speaking, animated personas in the user interface of

the educational application can be quite important.

Indeed, the presence of animated, speaking personas

has been considered beneficial for educational

software (Johnson et al., 2000; Lester et al., 1997).

In view of the above, in this paper we present an

affective educational system for children where the

basic principles of programming are being taught. In

the past, one of the first attempts to teach

programming to children was made with the creation

of the well-known “Logo” programming language.

The first “Logo” programming language was

created in 1967 (Frazier, 1967). The objective was to

create a friendly programming language for the

education of children where they could learn

programming by playing with words and sentences.

A detailed study on the “Logo” programming

language from its early stages and also recent work

on Logo-derived languages and learning applications

can be found in (Feurzeig, 2010). For the purposes

of our research, the authors have created their own

implementation of the “Logo” programming

language by incorporating affective interaction into

the existing user interfaces.


Alepis E., Virvou M. and Kabassi K..

COMBINING TWO DECISION MAKING THEORIES FOR AFFECTIVE LEARNING IN PROGRAMMING COURSES.

DOI: 10.5220/0003343701030109

In Proceedings of the 3rd International Conference on Computer Supported Education (CSEDU-2011), pages 103-109

ISBN: 978-989-8425-49-2

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

The resulting educational system is called

AffectLOGO and is an affective educational

software application targeted to children between the

ages of 10 and 15. By using AffectLOGO, children

as students can learn basic principles of

programming while at the same time their

interaction with the computer can be accomplished

either orally (by using the computer’s microphone),

or traditionally by using the computer’s keyboard

and mouse. At the same time, an animated

interactive pedagogical agent is present in order to

make the interaction more human-like and thus more

affective and entertaining.

The system uses the Technique for Order

Preference by Similarity to Ideal Solution (TOPSIS)

(Hwang & Yoon, 1981), which is a decision-making

model. TOPSIS is based on the concept that “the

chosen alternative should have the shortest distance

from a positive-ideal solution and the longest

distance from a negative-ideal solution”. So, it

calculates the relative Euclidean distance of the

alternative from a fictitious ideal alternative. The

alternative closest to that ideal alternative and

furthest from the negative-ideal alternative is chosen

as the best.

In the system, we use TOPSIS in order to

identify the alternative actions that are closest to an

ideal alternative action. The selection of the best

alternative action is a multi-criteria decision making

problem as there are many criteria to be taken into

account.

The main body of this paper is organized as

follows: In section 2 we present decision making

aspects. In sections 3 and 4 we describe the overall

functionality and architecture of our system. In

section 5 we present our approach in combining

evidence from the two modes of interaction using a

multi-criteria decision making method in the context

of the educational application. Finally, in section 6

we give the conclusions drawn from this work.

2 DECISION MAKING ASPECTS

A multi-attribute decision problem is a situation in

which, having defined a set A of actions and a

consistent family F of n attributes $g_1, g_2, \ldots, g_n$

($n \geq 3$) on A, one wishes to rank the actions of A

from best to worst and determine a subset of actions

considered to be the best with respect to F (Vincke,

1992). In traditional methods such as the Simple

Additive Weighting (SAW) (Fishburn, 1967, Hwang

& Yoon, 1981), the alternative actions are ranked by

the values of a multi-attribute function that is

calculated for each alternative as a linear

combination of the values of the n attributes. Unlike

SAW, the Technique for Order Preference by

Similarity to Ideal Solution (TOPSIS) calculates the

relative Euclidean distance of the alternative from a

fictitious ideal alternative. The alternative closest to

that ideal alternative and furthest from the negative-ideal

alternative is chosen as the best. More specifically,

the steps that are needed in order to implement the

technique are:

1. Scale the values of the n attributes to make

them comparable.

2. Calculate Weighted Ratings. The weighted value is calculated as $v_{ij} = w_i \cdot r_{ij}$, where $w_i$ is the weight and $r_{ij}$ is the normalised value of the ith attribute.

3. Identify Positive-Ideal and Negative-Ideal Solutions. The positive-ideal solution is the composite of all best attribute ratings attainable, and is denoted $A^* = \{v_1^*, v_2^*, \ldots, v_i^*, \ldots, v_n^*\}$, where $v_i^*$ is the best value for the ith attribute among all alternatives. The negative-ideal solution is the composite of all worst attribute ratings attainable, and is denoted $A^- = \{v_1^-, v_2^-, \ldots, v_i^-, \ldots, v_n^-\}$, where $v_i^-$ is the worst value for the ith attribute among all alternatives.

4. Calculate the separation measure from the positive-ideal and negative-ideal alternative. The separation of each alternative from the positive-ideal solution $A^*$ is given by the n-dimensional Euclidean distance

$$S_j^* = \sqrt{\sum_{i=1}^{n} (v_{ij} - v_i^*)^2},$$

where $j$ is the index related to the alternatives and $i$ to one of the n attributes. Similarly, the separation from the negative-ideal solution $A^-$ is given by

$$S_j^- = \sqrt{\sum_{i=1}^{n} (v_{ij} - v_i^-)^2}.$$

5. Calculate Similarity Indexes. The similarity to the positive-ideal solution, for alternative j, is finally given by

$$C_j^* = \frac{S_j^-}{S_j^* + S_j^-}, \quad 0 \leq C_j^* \leq 1.$$

The alternatives can then be ranked according to $C_j^*$ in descending order.
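The five steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in AffectLOGO. It assumes that every attribute is a benefit attribute (higher is better) and that step 1 uses vector normalisation, which the text does not specify. The function name `topsis` and its parameters are hypothetical.

```python
import math


def topsis(ratings, weights):
    """Return the similarity index C*_j for every alternative j.

    ratings[j][i] is the raw value of attribute i for alternative j.
    Assumption: all attributes are benefit attributes (higher is better).
    """
    n_attrs = len(weights)

    # Step 1: scale the attributes (vector normalisation, an assumption).
    norms = [math.sqrt(sum(row[i] ** 2 for row in ratings)) or 1.0
             for i in range(n_attrs)]

    # Step 2: weighted ratings v_ij = w_i * r_ij.
    v = [[weights[i] * row[i] / norms[i] for i in range(n_attrs)]
         for row in ratings]

    # Step 3: positive-ideal and negative-ideal solutions.
    best = [max(row[i] for row in v) for i in range(n_attrs)]
    worst = [min(row[i] for row in v) for i in range(n_attrs)]

    # Steps 4 and 5: separation measures and similarity index.
    scores = []
    for row in v:
        s_pos = math.dist(row, best)    # distance to the positive ideal
        s_neg = math.dist(row, worst)   # distance to the negative ideal
        total = s_pos + s_neg
        # If all alternatives coincide, both distances are zero; return 0.0.
        scores.append(s_neg / total if total > 0 else 0.0)
    return scores


# Three alternatives rated on three equally weighted attributes.
scores = topsis([[1, 1, 1], [0, 0, 0], [0.5, 0.5, 0.5]], [1 / 3, 1 / 3, 1 / 3])
ranking = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
```

In this toy input the first alternative coincides with the positive-ideal solution and the second with the negative-ideal one, so they receive the highest and lowest similarity index respectively.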

CSEDU 2011 - 3rd International Conference on Computer Supported Education


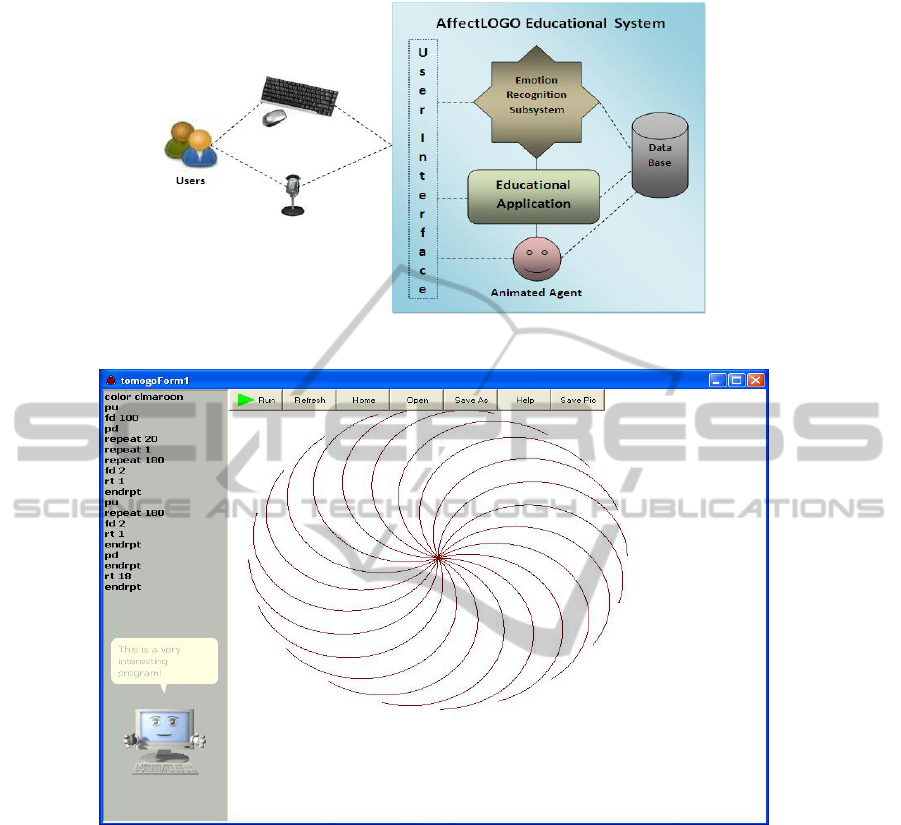

Figure 1: Architecture of the AffectLOGO Educational System.

Figure 2: A snapshot of the AffectLOGO educational system.

3 OVERVIEW OF THE SYSTEM

In this section, we describe the overall functionality

and emotion recognition features of AffectLOGO.

The architecture of AffectLOGO consists of the

main educational application, a user monitoring

component, emotion recognition inference

mechanisms and a database. Part of the database is

used to store educational data and data related to the

pedagogical agent. Another part of the database is

used to store and handle emotion recognition related

data. Finally, the database is also used to store user

models and user personal profiles for each individual

user that uses and interacts with the system. The

system’s architecture is illustrated in figure 1. As we

can see in figure 1, the students’ interaction can be

accomplished either orally through the microphone,

or through the keyboard/mouse modality. The

educational system consists of three subsystems,

namely the emotion recognition subsystem, the

educational application subsystem and the subsystem

that reasons about and handles the animated agent’s

behaviour.

While using the educational application from a

desktop computer, students are being taught a

particular programming course. The information is

given in text form while at the same time an

animated agent reads it out loud using a speech

engine. Students are prompted to write programming

commands and also programs in the AffectLOGO


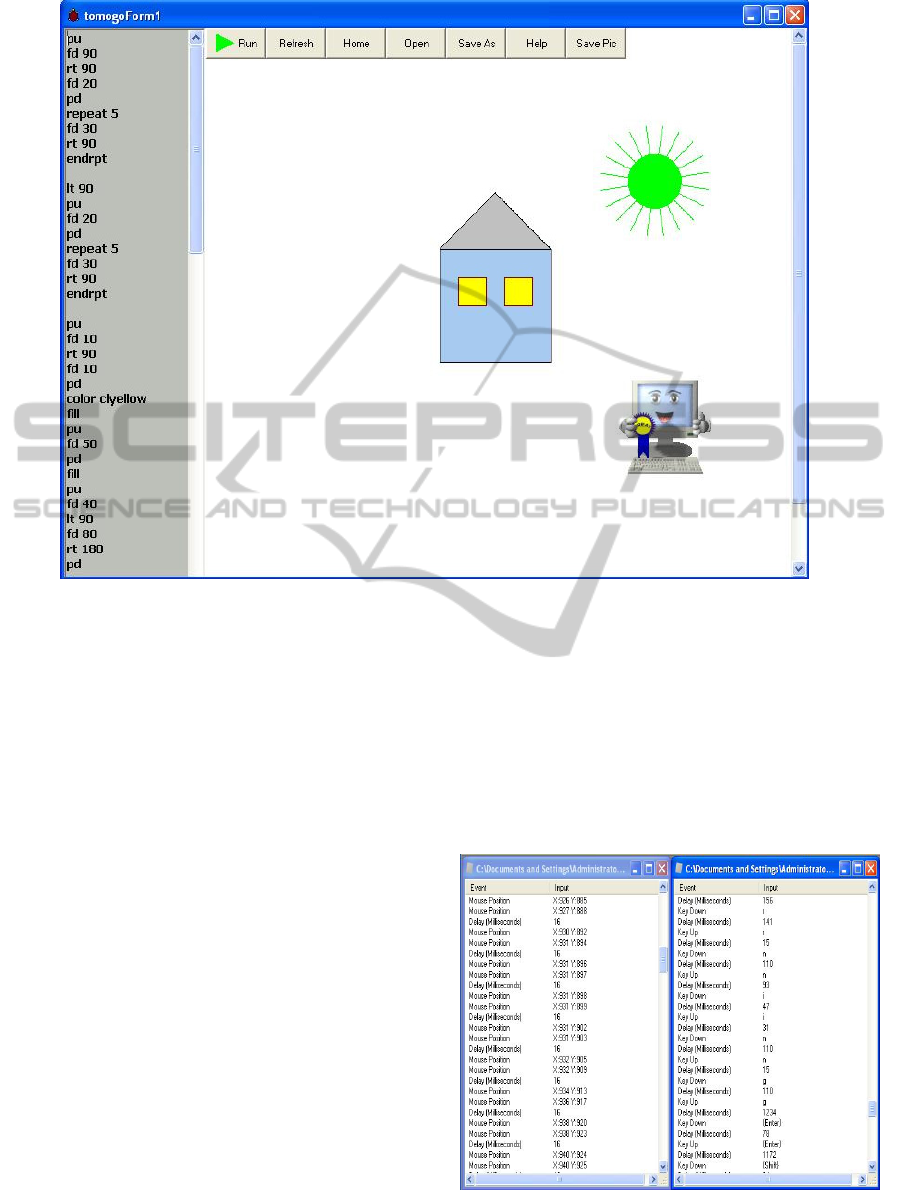

Figure 3: Successful completion of an exercise and reward by the animated agent.

language in order to produce drawings and particular

shapes. The main application is installed either on a

public computer where all students have access, or

alternatively each student may have a copy on

his/her own personal computer. An example of using

the main application is illustrated in figure 2. The

animated agent is present in these modes to make the

interaction more human-like.

As illustrated in figure 2, a user has written a fairly complicated program that uses nested loops in order to produce a specific drawing. Figure 3 shows a user who has drawn a house and a sun by giving the educational system the correct programming commands in the AffectLOGO language. The student’s achievement is rewarded with a characteristic animation of the agent, who also congratulates the student. In such cases the student, who is a child between the ages of 10 and 15, is also expected to react emotionally; in our example the student may express happiness at having correctly completed a programming exercise.

While the students interact with the main

educational application, a monitoring component

silently records in the background their actions from

the keyboard and the microphone and

interprets them in terms of possibly recognized

emotions. The basic function of this component is to

capture all the data inserted by the students either

orally or by using the keyboard and the mouse of the

computer. The data is recorded to a database and

then returned to the basic application the user

interacts with. Figure 4 illustrates the “monitoring”

component that records the user’s input and the

exact time of each event.

Figure 4: A user-monitoring component recording all user

input actions.


As a next step, all recorded user input actions are

translated in terms of discrete input actions related to

the microphone and to the keyboard. The human

experts of the empirical study (Alepis et al., 2007)

have identified which input actions from the

keyboard and the microphone could lead them to the

successful recognition of possible emotional states.

From the input actions that appeared in the

experiment we have used those that were proposed

by the majority of the human experts.

In particular, considering the keyboard we have:

a) a student types normally
b) a student types quickly (speed higher than the usual speed of the particular user)
c) a student types slowly (speed lower than the usual speed of the particular user)
d) a student uses the backspace key often
e) a student hits unrelated keys on the keyboard
f) a student does not use the keyboard.

Considering the students’ basic input actions through the microphone we have 7 cases:

a) a student speaks using strong language
b) a student uses exclamations
c) a student speaks with a high voice volume (higher than the average recorded level)
d) a student speaks with a low voice volume (lower than the average recorded level)
e) a student speaks in a normal voice volume
f) a student speaks words from a specific list of words revealing an emotion
g) a student does not say anything.
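The discrete input actions listed above can be represented as enumerations and turned into Boolean vectors. The following Python sketch is only an illustration: the identifier names, the characters-per-minute measure and the 20% tolerance band around the user's usual typing speed are all assumptions, not values taken from the system.

```python
from enum import Enum


class KeyboardAction(Enum):
    TYPES_NORMALLY = 1
    TYPES_QUICKLY = 2
    TYPES_SLOWLY = 3
    USES_BACKSPACE_OFTEN = 4
    HITS_UNRELATED_KEYS = 5
    NO_KEYBOARD_USE = 6


class MicrophoneAction(Enum):
    STRONG_LANGUAGE = 1
    EXCLAMATIONS = 2
    HIGH_VOLUME = 3
    LOW_VOLUME = 4
    NORMAL_VOLUME = 5
    EMOTION_WORDS = 6
    SILENCE = 7


def classify_typing_speed(current_cpm, usual_cpm, tolerance=0.2):
    """Compare the current speed with the user's usual speed.

    The tolerance band is a hypothetical parameter for illustration.
    """
    if current_cpm > usual_cpm * (1 + tolerance):
        return KeyboardAction.TYPES_QUICKLY
    if current_cpm < usual_cpm * (1 - tolerance):
        return KeyboardAction.TYPES_SLOWLY
    return KeyboardAction.TYPES_NORMALLY


def to_boolean_vector(observed, action_type):
    """Return 1 for each action of action_type that was observed, else 0."""
    return [1 if action in observed else 0 for action in action_type]


observed = {classify_typing_speed(300, 200), KeyboardAction.USES_BACKSPACE_OFTEN}
k = to_boolean_vector(observed, KeyboardAction)
```

Here `k` has one entry per keyboard action, in the order a) to f) of the list above.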

4 ANIMATED AGENTS

Elliott et al. (1999) suggest that animated

agents in an educational environment will be more

effective teachers if they display and understand

emotions. More specifically they point out that:

1. An animated agent should appear to care

about students and their progress

2. An animated agent should be sensitive to the

student’s emotions

3. An animated agent should foster enthusiasm

in the student for a subject matter

4. An animated agent may make learning more

fun

However, even if new multimodal capabilities

like 3D-video and speech synthesis have made

pedagogical personas more human-like, there is also

a great need in determining “how” (what exactly

should the pedagogical persona do) and “when” (in

which situation) an animated agent should

act/behave in each part of the tutoring process.

An expected contribution of our system is to

affect positively the educational process of students

who learn programming languages. More

specifically, the system should motivate the students

so that they learn more efficiently and also

more enjoyably. In (Soldato and Du Boulay, 1995) it

is suggested that a tutoring system must react with

the purpose of motivating distracted, less confident

or discontented students, or sustaining the

disposition of already motivated students.

At the same time, the system’s critical long-term

objective is to operate as an educational tool that

implements affective functionalities in order to assist

teachers in using user-friendlier, thus more

communicable, educational e-learning applications.

In view of the above our system also

incorporates an affective module that relies on

animated agents. The system models possible

emotional states of users-students and proposes

tactics for improving the interaction between the

animated agent and the student who uses the

educational application. The system may suggest

that the animated agent should express a specific

emotional state to the student for the purpose of

motivating her/him while s/he learns. Accordingly,

the agent becomes a more effective teacher. Table 1

illustrates event variables that are used as triggers

for the activation of the animated agents. Each time

a trigger condition takes place the animated agent

uses a certain tactic in order to communicate

emotionally with the user for pedagogical reasons.

Table 1: Event variables for the activation of animated

agents.

Event variables

• a mistake (the user may receive an error message by the application or navigate wrongly)
• many consecutive mistakes
• absence of user action for a period of time
• action unrelated to the main application
• correct interaction
• many consecutive correct answers (related to a specific test)
• many consecutive wrong answers (related to a specific test)
• user aborts an exercise
• user aborts reading the whole theory
• user requests help from the persona
• user takes a difficult test
• user takes an easy test
• user takes a test concerning a new part of the theory
• user takes a test from a well known part of the theory
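As an illustration of how one of the event variables in Table 1 might be detected, the following Python sketch fires a trigger when the most recent interactions were all mistakes. The class name and the threshold of three are hypothetical; the paper does not state how many mistakes count as "many".

```python
from collections import deque


class ConsecutiveMistakeTrigger:
    """Fires when the last `threshold` interactions were all mistakes."""

    def __init__(self, threshold=3):
        # The threshold value is an assumption made for illustration.
        self.threshold = threshold
        self.recent = deque(maxlen=threshold)

    def record(self, is_mistake):
        """Store one interaction and report whether the trigger fires."""
        self.recent.append(bool(is_mistake))
        return len(self.recent) == self.threshold and all(self.recent)


trigger = ConsecutiveMistakeTrigger(threshold=3)
fired = [trigger.record(m) for m in [True, True, False, True, True, True]]
```

A correct interaction resets the run, so the trigger fires only after three mistakes in a row.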


5 APPLICATION OF THE COMBINATION OF THE TWO DIFFERENT DECISION MAKING METHODS

For the evaluation of each alternative emotion the system uses as criteria the input actions that are related to the emotional states that may occur while a student interacts with our educational system. These input actions were described in section 3 and are considered as criteria for evaluating all different emotions and selecting the one that seems to be prevailing. More specifically, the system uses a novel combination of TOPSIS and SAW for a particular category of users. This category comprises young students (between the ages of 10 and 15) who are novices in programming courses.

In order to find out which emotion is more likely to have been felt by the user interacting with the system, we use TOPSIS. More specifically, we use this multi-criteria decision making theory for combining evidence from the two different modes and finding the best indication. In particular, we want to combine $em_{1e_11}$ and $em_{1e_12}$. $em_{1e_11}$ is the probability that an emotion has occurred based on the keyboard actions and $em_{1e_12}$ is the probability that refers to an emotional state using the users’ input from the microphone. These probabilities result from the application of the decision making model of SAW and are presented below. $em_{1e_11}$ and $em_{1e_12}$ take their values in [0,1].

$$em_{1e_11} = w_{1e_1k1}k_1 + w_{1e_1k2}k_2 + w_{1e_1k3}k_3 + w_{1e_1k4}k_4 + w_{1e_1k5}k_5 + w_{1e_1k6}k_6$$

Formula 1.

$$em_{1e_12} = w_{1e_1m1}m_1 + w_{1e_1m2}m_2 + w_{1e_1m3}m_3 + w_{1e_1m4}m_4 + w_{1e_1m5}m_5 + w_{1e_1m6}m_6 + w_{1e_1m7}m_7$$

Formula 2.

In formula 1 the k’s from k1 to k6 refer to the six

basic input actions that correspond to the keyboard.

In formula 2 the m’s from m1 to m7 refer to the

seven basic input actions that correspond to the

microphone. These variables are Boolean. At each

moment the system takes data from the bi-modal

interface and translates them in terms of keyboard

and microphone actions. If an action has occurred

the corresponding criterion takes the value 1,

otherwise its value is set to 0. The w’s represent the

weights. These weights correspond to a specific

emotion and to a specific input action and are

acquired from the stereotype database. More

specifically, the weights are derived from the

stereotypes about the emotions.
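Formulas 1 and 2 are weighted sums of Boolean input actions, which the following Python sketch evaluates for a single emotion. The weight values below are invented for illustration and do not come from the stereotype database. They are chosen to sum to 1 per mode, an assumption that keeps each result in [0,1].

```python
def saw_score(weights, actions):
    """Weighted sum of Boolean input actions, as in Formulas 1 and 2."""
    if len(weights) != len(actions):
        raise ValueError("one weight is needed per input action")
    return sum(w * a for w, a in zip(weights, actions))


# Hypothetical weights for one emotion (not taken from the paper).
keyboard_weights = [0.05, 0.30, 0.05, 0.35, 0.20, 0.05]          # k1..k6
microphone_weights = [0.25, 0.20, 0.20, 0.05, 0.05, 0.20, 0.05]  # m1..m7

# Observed actions: fast typing with frequent backspace use (k2, k4),
# exclamations spoken with a high voice volume (m2, m3).
k = [0, 1, 0, 1, 0, 0]
m = [0, 1, 1, 0, 0, 0, 0]

em_keyboard = saw_score(keyboard_weights, k)        # keyboard-mode estimate
em_microphone = saw_score(microphone_weights, m)    # microphone-mode estimate
```

Repeating this computation with the weights of each candidate emotion gives one pair of per-mode values per emotion.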

In a previous related work (Alepis et al. 2007),

the combination of the two modes was accomplished

by calculating the mean of the likelihood of every

emotion of the two modes. However, this way of

calculation was simple and did not combine

effectively the evidence from the two modes.

Therefore, in this paper we check the efficiency of

TOPSIS for combining effectively the evidence

from two modes.

TOPSIS is based on the concept that “the chosen alternative should have the shortest distance from a positive-ideal solution and the longest distance from a negative-ideal solution”. Therefore, the system first identifies the Positive-Ideal and the Negative-Ideal alternative actions taking into account the criteria that were presented in the previous section. The Positive-Ideal alternative action is the composite of all best criteria (in this case the mode plays the role of criteria) ratings attainable, and is denoted $A^* = \{em^{*}_{1e_11}, em^{*}_{1e_12}\}$, where $em^{*}_{1e_11}$ and $em^{*}_{1e_12}$ are the best values of the modes among all alternative emotions. The Negative-Ideal solution is the composite of all worst attribute ratings attainable, and is denoted $A^- = \{em^{-}_{1e_11}, em^{-}_{1e_12}\}$, where $em^{-}_{1e_11}$ and $em^{-}_{1e_12}$ are the worst values for the modes among all alternative emotions.

For every alternative action, the system calculates the Euclidean distance from the Positive-Ideal and Negative-Ideal alternative. For the j alternative emotion, the Euclidean distance from the Positive-Ideal alternative is given by:

$$S_j^* = \sqrt{(em^{j}_{1e_11} - em^{*}_{1e_11})^2 + (em^{j}_{1e_12} - em^{*}_{1e_12})^2}$$

The Euclidean distance from the Negative-Ideal alternative is given by the formula:

$$S_j^- = \sqrt{(em^{j}_{1e_11} - em^{-}_{1e_11})^2 + (em^{j}_{1e_12} - em^{-}_{1e_12})^2}$$

Finally, the value of the likelihood for the alternative emotion j is given by the formula

$$lem_j^* = \frac{S_j^-}{S_j^* + S_j^-}, \quad 0 \leq lem_j^* \leq 1,$$

and shows how similar the j alternative is to the ideal alternative action $A^*$. Therefore, the system selects the alternative emotion that has the highest likelihood (lem).
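The bi-modal combination described in this section can be sketched in Python as follows. The emotion names and the per-mode values are hypothetical inputs chosen for illustration, and the function name `select_emotion` is not part of the system. Each emotion is an alternative and the two modes play the role of criteria.

```python
import math


def select_emotion(mode_scores):
    """Pick the emotion with the highest likelihood lem.

    mode_scores maps each emotion to its (keyboard, microphone) pair of
    SAW values, both in [0,1].
    """
    pairs = list(mode_scores.values())
    # Positive-ideal and negative-ideal alternatives over the two modes.
    best = tuple(max(p[i] for p in pairs) for i in range(2))
    worst = tuple(min(p[i] for p in pairs) for i in range(2))

    lem = {}
    for emotion, pair in mode_scores.items():
        s_pos = math.dist(pair, best)    # distance to the positive ideal
        s_neg = math.dist(pair, worst)   # distance to the negative ideal
        total = s_pos + s_neg
        # If all emotions have identical values, define lem as 0.0.
        lem[emotion] = s_neg / total if total > 0 else 0.0
    return max(lem, key=lem.get), lem


# Hypothetical per-mode values for three candidate emotions.
chosen, lem = select_emotion({
    "happiness": (0.65, 0.40),
    "anger": (0.20, 0.70),
    "neutral": (0.10, 0.10),
})
```

With these inputs "happiness" is selected: it lies closer to the positive-ideal pair (0.65, 0.70) than "anger" does, while "neutral" coincides with the negative-ideal pair and receives a likelihood of zero.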

6 CONCLUSIONS

In this paper we have shown a novel combination of

two different decision making theories for emotion

recognition in a learning environment. More

specifically, the system uses SAW for estimating the

result of each mode and TOPSIS for combining the

results of the two modes and finding the emotion that is

most likely to have been felt by young children as

users of the resulting system.

It is in our future plans to evaluate AffectLOGO

in order to examine the degree of usefulness of the

educational tool for the teachers, as well as the

degree of usefulness and user-friendliness for the

students who are going to use the educational

system.

REFERENCES

Alepis, E., Virvou, M. & Kabassi, K. (2007).

Development process of an affective bi-modal

Intelligent Tutoring System. Intelligent Decision

Technologies, 1, pp. 117-126.

Elliott, C., Rickel, J., Lester, J., 1999. Lifelike pedagogical

agents and affective computing: An exploratory

synthesis, Lecture Notes in Computer Science, 1600,

pp. 195-212.

Feurzeig, W., Toward a Culture of Creativity: A Personal

Perspective on Logo's Early Years and Ongoing

Potential, International Journal of Computers for

Mathematical Learning, 2010, Pages 1-9, Article in

press

Fishburn, P. C.: Additive Utilities with Incomplete

Product Set: Applications to Priorities and

Assignments, Operations Research (1967)

Frazier, F. (1967). The logo system: Preliminary manual.

BBN Technical Report. Cambridge, MA: BBN

Technologies.

Goleman, D., Emotional Intelligence, Bantam Books, New

York, 1995.

Hudlicka, E. To feel or not to feel: The role of affect in

human-computer interaction, International Journal of

Human-Computer Studies, Elsevier Science, London,

July 2003, pp. 1-32.

Hwang, C. L., Yoon, K.: Multiple Attribute Decision

Making: Methods and Applications, Lecture Notes in

Economics and Mathematical Systems 186, Springer,

Berlin, 1981.

Johnson, W. L, J. Rickel, and Lester, J., 2000. Animated

Pedagogical Agents: Face-to-Face Interaction in

Interactive Learning Environments. International

Lester, J., Converse, S., Kahler, S., Barlow, S., Stone, B.,

and Bhogal, R. 1997. The Persona Effect: affective

impact of animated pedagogical agents. In Pemberton

S. (Ed.) Human Factors in Computing Systems, CHI’

97, Conference Proceedings, ACM Press, pp. 359-366.

Picard R. W. and Klein, J. Computers that recognise and

respond to user emotion: theoretical and practical

implications, Interacting with Computers 14, 2002, pp.

141-169.

Soldato, D, Boulay, D., 1995. Implementation of

motivational tactics in tutoring systems. Journal of

Artificial Intelligence in Education 6 (4), pp. 337-378.

Vincke, P.: Multicriteria Decision-Aid. Wiley (1992).
