DESCRIPTION PLAUSIBLE LOGIC PROGRAMS FOR STREAM REASONING

Ioan Alfred Letia and Adrian Groza

Department of Computer Science, Technical University of Cluj-Napoca, Cluj-Napoca, Romania

Keywords:

Stream reasoning, Description logic, Plausible logic, Lazy evaluation, Sensors.

Abstract:

Stream reasoning is defined as real-time logical reasoning on large, noisy, heterogeneous data streams, aiming to support the decision process of large numbers of concurrent querying agents. In this research we exploit nonmonotonic rule-based systems for handling inconsistent or incomplete information, and ontologies for dealing with heterogeneity. Data is aggregated from distributed streams in real time, and plausible rules fire when new data is available. This study also investigates the advantages of lazy evaluation on data streams.

1 INTRODUCTION

Sensor networks are estimated to drive the formation of a new Web by 2015 (Le-Phuoc et al., 2010). The value of the Sensor Web depends on the capacity to aggregate, analyse and interpret this new source of knowledge. Currently, there is a lack of systems designed to manage rapidly changing information at the semantic level (Valle et al., 2009). The solution offered by data-stream management systems (DSMS) is limited mainly by their inability to perform complex reasoning tasks.

Stream reasoning is defined as real-time logical reasoning on huge, possibly infinite, noisy data streams, aiming to support the decision process of large numbers of concurrent querying agents. In order to handle blocking operators on infinite streams (like min, mean, average, sort), the reasoning process is restricted to a certain window of concern within the stream, whilst the previous information is discarded (Barbieri et al., 2010). This strategy is applicable only to applications where recent data have higher relevance (e.g. the average water flow rate over the last 10 minutes). In some reasoning tasks, tuples from different streams that are arbitrarily far apart need to be joined.

Stream reasoning adopts the continuous processing model, where reasoning goals are continuously evaluated against a dynamic knowledge base. This leads to the concept of continuous queries, which remain registered and are re-evaluated as new data arrives, as opposed to the one-shot queries issued against a database. Typical applications of stream reasoning are traffic monitoring, urban computing, patient monitoring, weather monitoring from satellite data, and monitoring of financial transactions (Valle et al., 2009) or of the stock market. Real-time event analysis is conducted in domains such as seismic incidents, flu outbreaks, or tsunami alerts, based on a wide range of sensor networks ranging from RFID technology to the Twitter data flow (Savage, 2011). Decisions should be taken based on plausible events: waiting for complete confirmation of an event might be too risky.

Streams of sensor data are often characterised by heterogeneity, noise and contradictory data. In this research we exploit nonmonotonic rule-based systems for handling inconsistent or incomplete information, and ontologies for dealing with heterogeneity. Data is aggregated from distributed streams in real time, and plausible rules fire when new data is available. This study also investigates the advantages of lazy evaluation on data streams.

2 INTEGRATING PLAUSIBLE RULES WITH ONTOLOGIES

2.1 Plausible Logic

Plausible logic is an improvement of defeasible logic (Rock, 2010; Billington and Rock, 2001). A clause $\vee\{a_1, a_2, \ldots, a_n\}$ is the disjunction of positive or negative atoms $a_i$. If both an atom and its negation appear, the clause is a tautology. A contingent clause is a clause which is neither empty nor a tautology (Rock, 2010).
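To make these definitions concrete, the following Haskell sketch represents a clause as a list of literals and checks the tautology and contingency conditions. The names Literal, Clause, isTautology and isContingent are our own illustrative choices and are not taken from the Decisive Plausible Logic tool.

```haskell
-- A literal is an atom or its negation; a clause is read as their disjunction.
data Literal = Pos String | Neg String
  deriving (Eq, Show)

type Clause = [Literal]

-- The complementary literal: a <-> not a.
complement :: Literal -> Literal
complement (Pos a) = Neg a
complement (Neg a) = Pos a

-- A clause is a tautology if some atom occurs both positively and negatively.
isTautology :: Clause -> Bool
isTautology c = any (\l -> complement l `elem` c) c

-- A contingent clause is neither empty nor a tautology.
isContingent :: Clause -> Bool
isContingent c = not (null c) && not (isTautology c)
```

For example, isContingent [Pos "a", Neg "b"] is True, while both the empty clause and [Pos "a", Neg "a"] are rejected.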


Letia I. and Groza A.
DESCRIPTION PLAUSIBLE LOGIC PROGRAMS FOR STREAM REASONING.
DOI: 10.5220/0003887405600566
In Proceedings of the 4th International Conference on Agents and Artificial Intelligence (IWSI-2012), pages 560-566
ISBN: 978-989-8425-95-9
Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

Definition 1. A plausible description of a situation is a tuple $PD = (Ax, R_p, R_d, >)$, where $Ax$ is a set of contingent clauses, called axioms, characterising the aspects of the situation that are certain, $R_p$ is a set of plausible rules, $R_d$ is a set of defeater rules, and $>$ is a priority relation on $R_p \cup R_d$.

A plausible theory is computed from a plausible description by deriving the set $R_s$ of strict rules from the definite facts $Ax$. Thus, a plausible knowledge base consists of strict rules ($\rightarrow$), plausible rules ($\Rightarrow$), defeater (warning) rules ($\rightsquigarrow$), and a priority relation on the rules ($>$). Strict rules are rules in the classical sense: whenever the premises are indisputable, so is the conclusion. An atomic fact is represented by a strict rule with an empty antecedent. The plausible rule $a_1, \ldots, a_n \Rightarrow c$ means that if all the antecedents $a_i$ are proved and all the evidence against the consequent $c$ has been defeated, then $c$ can be deduced. The plausible conclusion $c$ can be defeated by contrary evidence.

The only use of defeaters is to prevent some conclusions, as in "if the buyer is a regular one and he has a short delay in paying, we might not ask for penalties". This rule does not provide sufficient evidence to support a "no penalty" conclusion, but it is strong enough to prevent the derivation of the penalty consequent. The priority relation allows the representation of preferences among non-strict rules.
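The interplay between plausible rules, defeaters and priorities can be illustrated with the buyer example. The Haskell sketch below is a deliberately simplified, single-step check written for illustration only: rule bodies are matched against a given set of facts (no chaining between rules), and every applicable attacker must be beaten by a supporting plausible rule of higher priority. It is not one of the proof algorithms of Decisive Plausible Logic, and all atom and rule names are hypothetical.

```haskell
-- Literals: an atom with a polarity (True = positive, False = negated).
data Lit = Lit Bool String
  deriving (Eq, Show)

neg :: Lit -> Lit
neg (Lit b a) = Lit (not b) a

-- Only non-strict rules are modelled; strict conclusions are passed in as facts.
data Kind = Plausible | Defeater
  deriving (Eq, Show)

data Rule = Rule { label :: String, kind :: Kind, body :: [Lit], conclusion :: Lit }

-- (r, s) in the list means that r has priority over s.
type Priority = [(String, String)]

applicable :: [Lit] -> Rule -> Bool
applicable facts r = all (`elem` facts) (body r)

-- Simplified check: c holds if it is a fact, or if some applicable plausible
-- rule supports c and every applicable rule for the complement of c is beaten
-- by a supporting rule of higher priority. Defeaters attack but never support.
concludes :: [Rule] -> Priority -> [Lit] -> Lit -> Bool
concludes rules prio facts c
  | c `elem` facts     = True
  | neg c `elem` facts = False
  | otherwise          = not (null supporters) && all beaten attackers
  where
    live       = filter (applicable facts) rules
    supporters = [r | r <- live, conclusion r == c, kind r == Plausible]
    attackers  = [r | r <- live, conclusion r == neg c]
    beaten a   = any (\s -> (label s, label a) `elem` prio) supporters

-- The buyer example (hypothetical atom names).
penalty :: Lit
penalty = Lit True "penalty"

buyerRules :: [Rule]
buyerRules =
  [ Rule "r1" Plausible [Lit True "latePayment"] penalty
  , Rule "r2" Defeater [Lit True "regular", Lit True "shortDelay"] (neg penalty)
  ]

buyerFacts :: [Lit]
buyerFacts = [Lit True "latePayment", Lit True "regular", Lit True "shortDelay"]
```

Without any priority, the defeater r2 blocks the penalty but cannot itself prove "no penalty"; declaring r1 > r2 lets the penalty through again.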

Decisive plausible logic consists of a plausible theory and a proof function P(λf, ) which, given a proof algorithm λ and a formula f in conjunctive normal form, returns +1 if λf was proved, −1 if there is no proof for λf, or 0 when λf is undecidable due to looping. Plausible logic has five proof algorithms {µ, α, π, β, γ}, one monotonic and four non-monotonic: µ is monotonic and strict, like classical logic; α = β ∧ π; π is plausible and propagates ambiguity; β is plausible and blocks ambiguity; and γ = π ∨ β.
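The three-valued result of the proof function and the two composite algorithms can be sketched in Haskell as follows. The encoding is ours, not the tool's: in particular, we assume that the undecided value 0 combines Kleene-style, so that α takes the minimum and γ the maximum of the β and π outcomes under the ordering −1 < 0 < +1.

```haskell
-- Outcome of the proof function: -1 (no proof), 0 (undecided, looping), +1 (proved).
-- Constructors are listed in the order -1 < 0 < +1, so min/max act as and/or.
data Outcome = NoProof | Undecided | Proved
  deriving (Eq, Ord, Show)

toInt :: Outcome -> Int
toInt NoProof   = -1
toInt Undecided = 0
toInt Proved    = 1

data Algorithm = Mu | Alpha | Pi | Beta | Gamma
  deriving (Eq, Show)

-- Outcomes of the three basic algorithms for one formula.
data Basic = Basic { muRes :: Outcome, piRes :: Outcome, betaRes :: Outcome }

-- Composite algorithms: alpha = beta AND pi, gamma = pi OR beta
-- (assumption: Kleene-style treatment of the undecided value).
outcome :: Basic -> Algorithm -> Outcome
outcome b Mu    = muRes b
outcome b Pi    = piRes b
outcome b Beta  = betaRes b
outcome b Alpha = min (betaRes b) (piRes b)
outcome b Gamma = max (piRes b) (betaRes b)
```

For instance, if β proves a formula while π loops on it, this sketch reports α as undecided (0) and γ as proved (+1).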

2.2 Translating from DL to Plausible Logic Programs

Facing the challenge of reasoning on huge amounts of noisy and heterogeneous data, the OWL fragment corresponding to Horn clauses, known as Description Logic Programs (DLP) (Grosof et al., 2003), can be a suitable choice. This section exploits the work in (Gomez et al., 2010) in order to translate description logic based ontologies into plausible logic axioms.

Conjunctions and universal restrictions in the right-hand side of inclusion axioms are converted into rule heads ($\mathcal{L}_h$ classes), whilst conjunction, disjunction and existential restriction appearing in the left-hand side are translated into rule bodies ($\mathcal{L}_b$ classes). Figure 1 presents the mapping function $T$ from DL to strict rules in a plausible knowledge base, where $A$, $C$, and $D$ are concepts such that $A, C \in \mathcal{L}_b$, $D \in \mathcal{L}_h$, $A$ is an atomic concept, $X$, $Y$, $Z$ are variables, and $P$, $Q$ are roles.

$T(C \sqsubseteq D) = T_b(C, X) \rightarrow T_h(D, X)$
$T(\top \sqsubseteq \forall P.D) = P(X, Y) \rightarrow T_h(D, Y)$
$T(\top \sqsubseteq \forall P^{-}.D) = P(X, Y) \rightarrow T_h(D, X)$
$T(a : D) = T_h(D, a)$
$T((a, b) : P) = P(a, b)$
$T(P \sqsubseteq Q) = P(X, Y) \rightarrow Q(X, Y)$
$T(P^{+} \sqsubseteq P) = P(X, Y) \wedge P(Y, Z) \rightarrow P(X, Z)$

where

$T_h(A, X) = A(X)$
$T_h(C \sqcap D, X) = T_h(C, X) \wedge T_h(D, X)$
$T_h(\forall R.C, X) = R(X, Y) \rightarrow T_h(C, Y)$
$T_b(A, X) = A(X)$
$T_b(C \sqcap D, X) = T_b(C, X) \wedge T_b(D, X)$
$T_b(C \sqcup D, X) = T_b(C, X) \vee T_b(D, X)$
$T_b(\exists R.C, X) = R(X, Y) \wedge T_b(C, Y)$

Figure 1: Mapping from DL ontologies into strict rules.
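A compact Haskell sketch of this mapping follows. It covers concept and role inclusions, transitivity and concept assertions; inverse roles and role assertions are omitted. A disjunction in the body yields one rule per disjunct, and a universal restriction in the head moves its role atom into the body, as prescribed for $T_h(\forall R.C, X)$. The data types and the fresh-variable scheme (Y0, Y1, ...) are our own assumptions, not the implementation used in the system.

```haskell
import Data.List (intercalate)

-- Concept expressions; where each constructor may appear follows Figure 1.
data Concept
  = Atomic String
  | Top
  | And Concept Concept
  | Or Concept Concept      -- body only (L_b)
  | Exists String Concept   -- body only (L_b)
  | Forall String Concept   -- head only (L_h)
  deriving (Eq, Show)

data Axiom
  = Sub Concept Concept     -- C ⊑ D
  | RoleSub String String   -- P ⊑ Q
  | Transitive String       -- P+ ⊑ P
  | Instance String Concept -- a : D

data Atom = Atom String [String] deriving Eq

instance Show Atom where
  show (Atom p args) = p ++ "(" ++ intercalate "," args ++ ")"

-- A strict rule: body -> head (an empty body is a fact).
data Rule = Rule [Atom] Atom deriving Eq

instance Show Rule where
  show (Rule [] h) = show h
  show (Rule b h)  = intercalate ", " (map show b) ++ " -> " ++ show h

-- T_b: alternative bodies (a disjunction gives several), threading a counter
-- used to build fresh variables Y0, Y1, ...
tb :: Concept -> String -> Int -> [([Atom], Int)]
tb (Atomic a) x n   = [([Atom a [x]], n)]
tb Top _ n          = [([], n)]
tb (And c d) x n    = [ (bc ++ bd, n2) | (bc, n1) <- tb c x n, (bd, n2) <- tb d x n1 ]
tb (Or c d) x n     = tb c x n ++ tb d x n
tb (Exists r c) x n = [ (Atom r [x, y] : bc, n') | (bc, n') <- tb c y (n + 1) ]
  where y = "Y" ++ show n
tb c _ _            = error ("not allowed in a rule body: " ++ show c)

-- T_h: each head atom, with the extra body atoms a universal restriction adds.
th :: Concept -> String -> Int -> ([([Atom], Atom)], Int)
th (Atomic a) x n   = ([([], Atom a [x])], n)
th (And c d) x n    = (hc ++ hd, n2)
  where
    (hc, n1) = th c x n
    (hd, n2) = th d x n1
th (Forall r c) x n = ([ (Atom r [x, y] : extra, h) | (extra, h) <- hc ], n')
  where
    y = "Y" ++ show n
    (hc, n') = th c y (n + 1)
th c _ _            = error ("not allowed in a rule head: " ++ show c)

translate :: Axiom -> [Rule]
translate (Sub c d)      = [ Rule (b ++ extra) h | (b, n) <- tb c "X" 0, (extra, h) <- fst (th d "X" n) ]
translate (RoleSub p q)  = [Rule [Atom p ["X", "Y"]] (Atom q ["X", "Y"])]
translate (Transitive p) = [Rule [Atom p ["X", "Y"], Atom p ["Y", "Z"]] (Atom p ["X", "Z"])]
translate (Instance a d) = [ Rule extra h | (extra, h) <- fst (th d a 0) ]
```

For instance, the axiom Sensor ⊑ ∀hasLatency.Time yields the rule Sensor(X), hasLatency(X,Y0) -> Time(Y0), and an assertion fm1 : WholeMilk yields the fact WholeMilk(fm1).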

3 DATA STREAM MANAGEMENT SYSTEM IN HASKELL

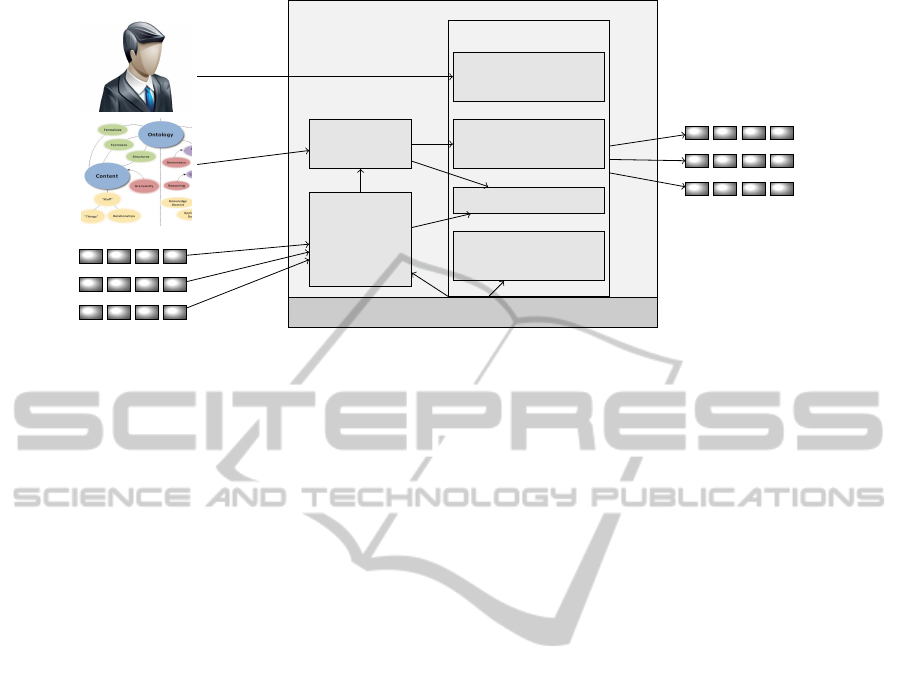

This section details the system architecture, as depicted in Figure 2. The user is responsible for defining the priorities and the plausible rules needed to handle contradictory data for the problem at hand.

3.1 The Haskell Platform

The advantages that Haskell brings to this landscape, namely lazy evaluation and the ease of evaluating pure code in parallel, are significant when dealing with huge data streams that are parallel in nature. Performing reasoning tasks in parallel is important for providing answers in due time (Valle et al., 2009). Haskell's polymorphism allows writing generic code to process streams, which is particularly useful since the same data stream can be exploited in different ways. The absence of side effects means that the order in which expressions are evaluated does not affect the result, which is highly desirable for data streams coming from different sources. One challenge when answering many continuous queries in real time is query optimisation. Haskell's support for equational reasoning can be exploited for automatic program and query optimisation. A premise specified as lazy is matched only when its variables are expected to participate in the answer to a pending conclusion or query.

Figure 2: System architecture (Framework: Streams Module, Mapping Module and Decisive Plausible Tool on the Haskell Platform; Plausible Theory: facts, strict rules, plausible rules and priorities).
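As an illustration of how equational reasoning supports query optimisation, the sketch below gives two polymorphic formulations of the same continuous query; the second follows from the first by the law map f . map g = map (f . g) and traverses the stream only once. The query steps calibrate and scale are hypothetical. GHC's optimiser performs list fusion based on rewrite rules of this kind, but the sketch makes no claim about how the authors' system optimises queries.

```haskell
-- Two equivalent, polymorphic formulations of a continuous query.
twoPass :: (b -> c) -> (a -> b) -> [a] -> [c]
twoPass f g = map f . map g

-- Rewritten with the law  map f . map g = map (f . g):
-- one traversal, no intermediate stream.
fused :: (b -> c) -> (a -> b) -> [a] -> [c]
fused f g = map (f . g)

-- Hypothetical query steps: calibrate raw readings, then scale them.
calibrate :: Int -> Int
calibrate = (+ 1)

scale :: Int -> Int
scale = (* 2)
```

Because both versions are lazy, take 5 (fused scale calibrate [1 ..]) works on the infinite input and yields [4, 6, 8, 10, 12], exactly as the two-pass version does.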

The continuous semantics of data streams assumes that: i) streams are volatile, i.e. they are consumed on the fly and not stored forever; and ii) processing is continuous, i.e. queries are registered and produce answers continuously (Barbieri et al., 2010). In our case, the rules are triggered continuously in order to produce streams of consequents. The statelessness of pure Haskell facilitates the conceptual model of networks of stream reasoners envisaged in (Stuckenschmidt et al., 2010), where data is processed on the fly, without being stored. Lazy evaluation lets queries executed against infinite streams provide answers perpetually, and one does not have to specify the timesteps at which a query should be executed. By default, tuples are consumed when they become available, and only if they contribute to a query answer.
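A minimal sketch of a continuous query over an infinite stream, using ordinary Haskell lists as streams. The stream contents and the alerts query are hypothetical; the point is that the consumer decides how many answers to pull, and only the tuples needed to produce those answers are evaluated.

```haskell
-- A hypothetical, never-ending sensor stream of (timestamp, reading) pairs.
sensorStream :: [(Int, Int)]
sensorStream = zip [0 ..] (cycle [18, 21, 25, 19, 30])

-- A continuous query: all readings above a threshold. It is never finished;
-- a consumer pulls as many answers as it needs.
alerts :: Int -> [(Int, Int)] -> [(Int, Int)]
alerts threshold = filter ((> threshold) . snd)
```

Here take 3 (alerts 20 sensorStream) returns [(1,21),(2,25),(4,30)]. If no tuple ever satisfied the query, take would keep waiting for the next answer, which matches the perpetual nature of a continuous query.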

Computational efficiency is supported by the fact that a function is not forced to wait for data to arrive: the computations that are already possible are executed instead. Moreover, one can use data that is about to arrive by borrowing it from the future, as long as no function tries to change its value. The non-strict semantics of Haskell means that a function does not fail because of a value it never needs to evaluate. Consequently, some noisy data can be ignored without disturbing the computations.
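Two small, hypothetical examples of non-strict evaluation: a corrupt field that is never inspected does not disturb an aggregate, and a circular definition uses a value (the total) before it has been computed, which is one way to read "borrowing data from the future". The circular version only works on finite lists, since an infinite stream has no total.

```haskell
-- Each tuple holds a reading and a diagnostic field; the third diagnostic is
-- corrupt (modelled with `error`) and would crash the program if inspected.
readings :: [(Int, String)]
readings = [(10, "ok"), (12, "ok"), (11, error "corrupt diagnostic"), (13, "ok")]

-- Only the readings are needed, so the corrupt field is never evaluated.
totalReading :: [(Int, String)] -> Int
totalReading = sum . map fst

-- A circular one-pass program: each share refers to `total`, which is only
-- known once the whole (finite) list has been traversed.
shares :: [Int] -> [Double]
shares xs = result
  where
    (result, total) = go xs
    go [] = ([], 0)
    go (y : ys) =
      let (rs, t) = go ys
      in (fromIntegral y / fromIntegral total : rs, t + y)
```

With these definitions, totalReading readings evaluates to 46 without touching the corrupt field, and shares [1, 1, 2] evaluates to [0.25, 0.25, 0.5].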

There is no constraint on the nature of the data fed by a stream. The functions can be applied to RDF streams as follows. A triple object is created by the triple function:

data Triple = Triple !Node !Node !Node
triple :: Subject -> Predicate -> Object -> Triple
triple = Triple  -- Subject, Predicate and Object are synonyms of Node

Definition 2. An RDF stream is an infinite list of tuples of the form $\langle subj, pred, obj \rangle$ annotated with their timestamps $\tau$.

type RDFStream = [((Subject, Predicate, Object), Timestamp)]

Example 1. An RDF stream of auction bids states the bidder agent, its action, and the bid value: [((:a1, :sell, :30€), 14.32), ((:a2, :sell, :28€), 14.34), ((:a3, :buy, :26€), 14.35), ((:a1, :sell, :27€), 14.36)]
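Example 1 can be written down directly as a Haskell value. In this sketch node names are plain strings and bid values are stored as numerals with the euro unit dropped; both are our own simplifications. A list comprehension extracts the sell offers, and scanl1 min keeps a running lowest asking price, which also works when the stream is infinite.

```haskell
-- Plain lists stand in for infinite streams; node names follow Example 1.
type Node = String
type Timestamp = Double
type RDFStream = [((Node, Node, Node), Timestamp)]

-- Example 1; bid values are encoded as plain numerals (in euro).
bids :: RDFStream
bids =
  [ ((":a1", ":sell", "30"), 14.32)
  , ((":a2", ":sell", "28"), 14.34)
  , ((":a3", ":buy",  "26"), 14.35)
  , ((":a1", ":sell", "27"), 14.36)
  ]

-- Prices of the sell offers, in arrival order.
sellPrices :: RDFStream -> [Int]
sellPrices s = [ read o | ((_, p, o), _) <- s, p == ":sell" ]

-- Lowest asking price seen so far, updated with every new sell offer.
lowestAsk :: RDFStream -> [Int]
lowestAsk = scanl1 min . sellPrices
```

On an endless replay of the bids, take 4 (lowestAsk (cycle bids)) yields [30, 28, 27, 27].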

3.2 Streams Module

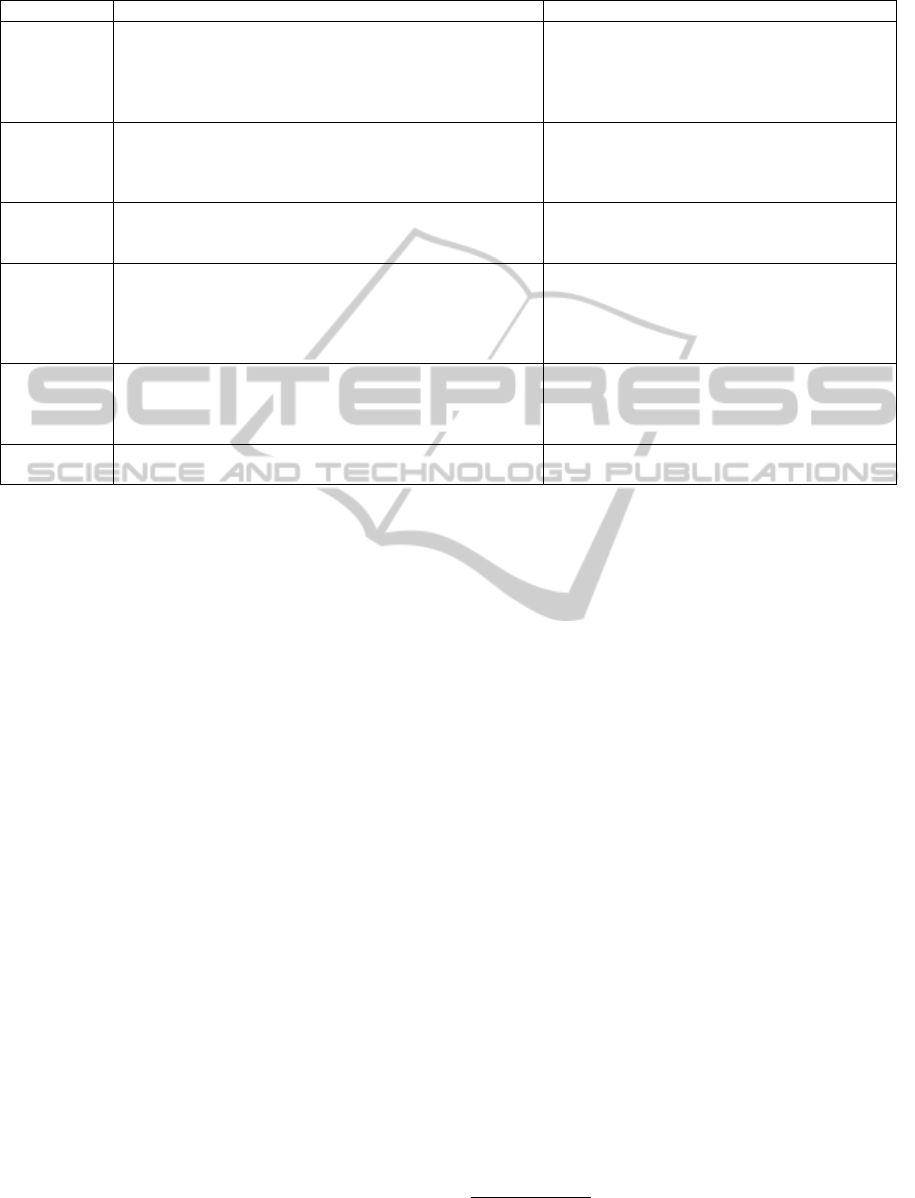

Table 1 illustrates the operators provided by Haskell to manipulate infinite streams. Suppose one wants to add the corresponding values from two financial data streams $s_1$ and $s_2$, expressed in two different currencies:

zipWith (+) s1 (map conversion s2)

where the conversion function is applied to each element of $s_2$. To compute at each step the sum of a stream of transactional data, the expression scan (+) 0 [2, 4, 5, 3, ...] can be used, providing as output the infinite stream [0, 2, 6, 11, 14, ...].
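The two expressions can be run with ordinary lists standing in for streams (scanl is the list counterpart of scan in Table 1). The exchange rate in conversion is a hypothetical constant chosen only for illustration.

```haskell
-- Hypothetical fixed exchange rate from the currency of s2 to that of s1.
conversion :: Double -> Double
conversion = (* 0.5)

-- Sum corresponding values from two (possibly infinite) financial streams.
combined :: [Double] -> [Double] -> [Double]
combined s1 s2 = zipWith (+) s1 (map conversion s2)

-- Running total of a stream of transactions.
runningTotal :: [Int] -> [Int]
runningTotal = scanl (+) 0
```

For example, take 5 (runningTotal (cycle [2, 4, 5, 3])) reproduces the prefix [0, 2, 6, 11, 14] given above.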

The aggregation of two streams takes place according to an aggregation policy, depending on the time or the configuration of the new tuples. Here, the policy is a function provided as an input argument to the higher-order function zipWith, as in zipWith policy stream1 stream2. Similarly, a new stream is generated based on a policy. The incoming streams can be dynamically split into two streams based on a predicate p.
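A sketch of both operations, again with lists as streams. The policy latest, which keeps the more recent of two corresponding tuples, is a hypothetical example of a time-based aggregation policy; splitBy is our own name for splitting a stream by a predicate.

```haskell
-- A tuple of a stream: (timestamp, value).
type Reading = (Int, Int)

-- Hypothetical policy: of two corresponding tuples, keep the more recent one.
latest :: Reading -> Reading -> Reading
latest a b = if fst a >= fst b then a else b

-- Aggregation: the policy is simply the function handed to zipWith.
aggregate :: (a -> b -> c) -> [a] -> [b] -> [c]
aggregate policy = zipWith policy

-- Splitting: each output keeps the tuples on one side of the predicate.
-- Both filters are lazy, so this also works on infinite streams.
splitBy :: (a -> Bool) -> [a] -> ([a], [a])
splitBy p s = (filter p s, filter (not . p) s)
```

Applying aggregate latest to [(1,10),(4,11),(5,12)] and [(2,20),(3,21),(6,22)] gives [(2,20),(4,11),(6,22)].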

3.3 The Mapping Module

The ontologies are translated based on the conceptual instrumentation introduced in Section 2.2. Two sources of knowledge are exploited to reason on data collected by the sensors. On the one hand, one needs detailed information about sensors, measurement domains and units, or accuracy (see Figure 3). On the other hand, domain-specific axioms are exploited when reasoning on a specific scenario.

ICAART 2012 - International Conference on Agents and Artificial Intelligence

Table 1: Stream operators in Haskell (S stands for the Stream datatype).

| Type | Function | Signature |
|---|---|---|
| Basic | constructor | (<:>) :: a -> S a -> S a |
| | extracts the first element | head :: S a -> a |
| | extracts the sequence following the stream's head | tail :: S a -> S a |
| | takes a stream and returns all its finite prefixes | inits :: S a -> S [a] |
| | takes a stream and returns all its suffixes | tails :: S a -> S (S a) |
| Transformation | applies a function over all elements | map :: (a -> b) -> S a -> S b |
| | interleaves two streams | interleave :: S a -> S a -> S a |
| | yields a stream of successive reduced values | scan :: (a -> b -> a) -> a -> S b -> S a |
| | computes the transposition of a stream of streams | transpose :: S (S a) -> S (S a) |
| Building streams | repeated applications of a function | iterate :: (a -> a) -> a -> S a |
| | constant streams | repeat :: a -> S a |
| | returns the infinite repetition of a set of values | cycle :: [a] -> S a |
| Extracting sublists | takes the first n elements | take :: Int -> S a -> [a] |
| | drops the first n elements | drop :: Int -> S a -> S a |
| | returns the longest prefix for which the predicate p holds | takeWhile :: (a -> Bool) -> S a -> [a] |
| | returns the suffix remaining after takeWhile | dropWhile :: (a -> Bool) -> S a -> S a |
| | removes elements that do not satisfy p | filter :: (a -> Bool) -> S a -> S a |
| Index | returns the element of the stream at index n | (!!) :: S a -> Int -> a |
| | returns the index of the first element equal to the query element | elemIndex :: Eq a => a -> S a -> Int |
| | returns the index of the first element satisfying p | findIndex :: (a -> Bool) -> S a -> Int |
| Aggregation | returns the stream of corresponding pairs from two streams | zip :: S a -> S b -> S (a, b) |
| | combines two streams based on a given function | zipWith :: (a -> b -> c) -> S a -> S b -> S c |

$Sensor \sqsubseteq \forall measure.PhysicalQuality$
$Sensor \sqsubseteq \forall hasLatency.Time$
$Sensor \sqsubseteq \forall hasLocation.Location$
$Sensor \sqsubseteq \forall hasFrequency.Frequency$
$Sensor \sqsubseteq \forall hasAccuracy.MeasureUnit$
$WirelessSensor \sqsubseteq Sensor$
$RFIDSensor \sqsubseteq WirelessSensor$
$ActiveRFID \sqsubseteq RFIDSensor$

$Sensor(X), Measures(X, Y) \rightarrow PhysicalQuality(Y)$
$Sensor(X), HasLatency(X, Y) \rightarrow Time(Y)$
$Sensor(X), HasLocation(X, Y) \rightarrow Location(Y)$
$Sensor(X), HasFrequency(X, Y) \rightarrow Frequency(Y)$
$Sensor(X), HasAccuracy(X, Y) \rightarrow MeasureUnit(Y)$
$WirelessSensor(X) \rightarrow Sensor(X)$
$RFIDSensor(X) \rightarrow WirelessSensor(X)$
$ActiveRFID(X) \rightarrow RFIDSensor(X)$

Figure 3: Translating the sensor ontology.

The rapid development of sensor technology raises the problem of continuously updating the sensor ontology. The system is able to handle this situation by treating the ontology as a stream of description logic axioms. By applying the higher-order function map to the transformation function $T$, each description logic axiom is converted into strict rules as soon as it appears:

$map\ T\ [A \sqsubseteq B,\ C \sqsubseteq \forall r.D,\ \ldots]$

outputs the infinite list:

$[r_1: A(X) \rightarrow B(X),\ r_2: C(X), r(X, Y) \rightarrow D(Y),\ \ldots]$

The main advantage consists in the possibility to dynamically include new background knowledge in the system.
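A sketch of this streaming translation, restricted to the two axiom shapes in the example above. The axiom type, the printed rule format and the simulated ontology stream (which keeps adding hypothetical sensor classes) are our own assumptions; because map and zipWith are lazy, each rule is available as soon as its axiom has arrived.

```haskell
-- A deliberately small axiom language covering the two forms used above.
data Axiom
  = SubClass String String          -- A ⊑ B
  | SubForall String String String  -- C ⊑ ∀r.D

-- Translation T of a single axiom into a strict rule (printed form).
translateAxiom :: Axiom -> String
translateAxiom (SubClass a b)    = a ++ "(X) -> " ++ b ++ "(X)"
translateAxiom (SubForall c r d) = c ++ "(X), " ++ r ++ "(X,Y) -> " ++ d ++ "(Y)"

-- Rules are produced lazily, one per incoming axiom, and numbered r1, r2, ...
rulesFrom :: [Axiom] -> [String]
rulesFrom axioms = zipWith label [1 :: Int ..] (map translateAxiom axioms)
  where
    label i r = "r" ++ show i ++ ": " ++ r

-- Hypothetical ontology stream: two initial axioms, then new sensor classes forever.
ontologyStream :: [Axiom]
ontologyStream =
  SubClass "A" "B" : SubForall "C" "r" "D"
    : [SubClass ("NewSensor" ++ show n) "Sensor" | n <- [1 :: Int ..]]
```

Taking the first three rules of rulesFrom ontologyStream gives r1: A(X) -> B(X), r2: C(X), r(X,Y) -> D(Y) and r3: NewSensor1(X) -> Sensor(X), even though the axiom stream never ends.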

3.4 Efficiency

The system incorporates the Decisive Plausible Logic tool¹. A Haskell glue module that exports functions requesting proofs (Rock, 2010) is used to connect it to the other modules. Efficiency is mandatory when one needs to reason on huge data in real time. The efficiency of the proposed solution rests on the following: i) the implementation of a family of defeasible logics is polynomial (Maher et al., 2001); plausible logic, being a particular case of defeasible reasoning, belongs to this efficiency class. The possibility to select the current inference algorithm among {µ, α, π, β, γ} can be exploited to adjust the reasoning task to the complexity of the problem at hand. ii) DLP are subfragments of Horn logic and their complexity is polynomial, as reported in (Krötzsch et al., 2007).

¹ Available at http://www.ict.griffith.edu.au/arock/DPL/


$Milk \sqsubseteq Item$
$Item \sqsubseteq \forall HasPeak.Time$
$WholeMilk \sqsubseteq Milk$
$LowFatMilk \sqsubseteq Milk$
$fm_1 : WholeMilk$
$sm_1 : LowFatMilk$
$sm_2 : LowFatMilk$

Figure 4: Domain knowledge for milk monitoring.

$r_1: Milk(X) \rightarrow Item(X)$
$r_2: Item(X), HasPeak(X, Y) \rightarrow Time(Y)$
$r_3: WholeMilk(X) \rightarrow Milk(X)$
$r_4: LowFatMilk(X) \rightarrow Milk(X)$
$f_1: WholeMilk(fm_1)$
$f_2: LowFatMilk(sm_1)$
$f_3: LowFatMilk(sm_2)$

$r_{10}: Milk(X), Stock(X, Y), Less(Y, c1) \Rightarrow NormalSupply(X, c2)$
$r_{11}: HasPeak(X, Y) \rightsquigarrow \neg NormalSupply(X, c2)$
$r_{12}: Milk(X), Stock(X, Y), Less(Y, c1), HasPeak(X, Z), now(Z) \Rightarrow PeakSupply(X, c3)$
$r_{13}: AlternativeItem(X, Z), Milk(X), Stock(Z, Y), Greater(Y, c4) \Rightarrow \neg PeakSupply(X, c3)$
$r_{14}: LastMeasurement(S, Y), HasLatency(S, Z), Greater(Y, Z) \Rightarrow BrokenSensor(S)$
$r_{15}: BrokenSensor(S), Measures(S, X) \rightsquigarrow \neg Stock(X, \_)$
$r_{13} > r_{12}$

Figure 5: Plausible knowledge base.

4 RUNNING SCENARIO

The scenario concerns supporting real-time supply chain decisions based on RFID streams. Consider the stock management of a retailer. RFID sensors are used to count the items entering the shelves from two locations. Clients leave the supermarket through three payment points, corresponding to three output streams. Monitoring an item like Milk implies monitoring several subcategories like WholeMilk and LowFatMilk. The retailer sells a specific item $fm_1$ of whole milk, and two types of low fat milk, $sm_1$ and $sm_2$. Some peak periods are associated with each commercialised item. This background knowledge is formalised in Figure 4. The corresponding strict rules are depicted in the upper part of Figure 5. During peak periods for an item, the usual supply action is blocked by the defeater $r_{11}$.

The plausible rule $r_{10}$ says that if the milk stock $Y$ is below the alert threshold c1, the NormalSupply action should be executed. NormalSupply ensures a stock value of c2. Instead, during peak periods, the PeakSupply action is derived by the rule $r_{12}$.

If there is an alternative item $Z$ for the Milk product and the stock of the alternative is larger than the threshold c4, the higher quantity c3 should not be supplied (rule $r_{13}$). Depending on the priority relation between the rules $r_{12}$ and $r_{13}$, the action is executed or not.
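The supply decision encoded by rules $r_{10}$ to $r_{13}$ can be approximated by a direct Haskell function. This is a hand-written sketch, not output of the plausible reasoner: the thresholds are hypothetical numbers, a single peakNow flag stands for both the peak condition of $r_{11}$ and the now(Z) condition of $r_{12}$, and when $r_{13}$ applies without a priority favouring $r_{12}$, neither PeakSupply nor its negation is concluded, so nothing is supplied.

```haskell
-- Which of r12 and r13 wins when both apply (Figure 5 states r13 > r12).
data Preference = R13OverR12 | R12OverR13 | NoPreference
  deriving Eq

-- Hypothetical numeric thresholds: c1 (alert level) and c4 (alternative stock).
data Thresholds = Thresholds { c1 :: Int, c4 :: Int }

data Situation = Situation
  { stock    :: Int        -- current stock Y of the milk item
  , peakNow  :: Bool       -- the item is in one of its peak periods now
  , altStock :: Maybe Int  -- stock of an alternative item, if there is one
  }

data Action = NormalSupply | PeakSupply | NoSupply
  deriving (Eq, Show)

decide :: Preference -> Thresholds -> Situation -> Action
decide pref th s
  | low && not (peakNow s)          = NormalSupply -- r10 fires, defeater r11 silent
  | low && peakNow s && not blocked = PeakSupply   -- r12 survives r13
  | otherwise                       = NoSupply
  where
    low      = stock s < c1 th
    r13Fires = maybe False (> c4 th) (altStock s)
    -- r13 blocks r12 unless r12 is explicitly preferred
    blocked  = r13Fires && pref /= R12OverR13
```

With thresholds Thresholds 10 50, a stock of 5 during a peak and an alternative item with 80 units, decide returns NoSupply under the Figure 5 priority and PeakSupply when r12 is preferred instead.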

Sensor-related information can also be integrated into the reasoning. If the sensor $S$ does not seem to function according to the specifications in the ontology, it is plausibly broken (rule $r_{14}$). A broken sensor defeats the stock information asserted in the knowledge base for the measured item (the defeater $r_{15}$).
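A sketch of $r_{14}$ and of the effect of $r_{15}$, under our own reading that $Y$ in $r_{14}$ is the time elapsed since the sensor's last measurement. The Sensor record and the reading format are hypothetical. Note that the sketch simply drops readings from broken sensors, which is stronger than a defeater: a defeater only blocks the stock conclusion and supports nothing.

```haskell
data Sensor = Sensor
  { sensorId :: String
  , latency  :: Int  -- maximal expected gap between measurements (from the ontology)
  , lastSeen :: Int  -- timestamp of the last measurement
  }

-- Sketch of r14: a sensor silent for longer than its latency is plausibly broken.
plausiblyBroken :: Int -> Sensor -> Bool
plausiblyBroken now s = now - lastSeen s > latency s

-- Sketch of the effect of r15: stock readings (sensor, item, stock) coming
-- from plausibly broken sensors are not used.
trustedReadings :: Int -> [Sensor] -> [(String, String, Int)] -> [(String, String, Int)]
trustedReadings now sensors = filter fromWorkingSensor
  where
    broken = [sensorId s | s <- sensors, plausiblyBroken now s]
    fromWorkingSensor (sid, _, _) = sid `notElem` broken
```

At time 100, a sensor with latency 10 that last reported at 70 is considered broken, so its readings are discarded while those of a sensor seen at 95 are kept.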

The merchandise flow is simulated by generating infinite input and output streams. Since a pure function always returns the same result for the same argument, a definition such as out1 = (randomItem l) : out1 would repeat a single item forever; a random generator has to be threaded through instead. Assuming a generator g (e.g. from System.Random), the output stream for the payment point out1 can be defined as:

out1 = map (l !!) (randomRs (0, length l - 1) g)

where l represents the available items in the simulation. Assume a stream $s_1$ of sold items and their times of measurement: [($sm_1$, 1), ($m_1$, 2), ($fm_1$, 3), ($m_2$, 4), ($m_3$, 5), ($sm_2$, 6), ($m_4$, 7), ...].
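The output stream can also be simulated without an external package. The sketch below uses a linear congruential generator with the well-known Numerical Recipes constants (assuming a 64-bit Int, so that the multiplication does not overflow); the generator, the seed and the item catalogue are illustrative assumptions, and a real simulation should rely on a proper random number generator.

```haskell
-- Hypothetical catalogue of available items.
catalogue :: [String]
catalogue = ["fm1", "sm1", "sm2", "m1", "m2"]

-- Linear congruential generator (Numerical Recipes constants, modulus 2^32).
lcg :: Int -> Int
lcg x = (1664525 * x + 1013904223) `mod` 4294967296

-- An infinite stream of items drawn pseudo-randomly from a non-empty list.
randomItems :: Int -> [a] -> [a]
randomItems seed items = map pick (drop 1 (iterate lcg seed))
  where
    -- use the higher bits, which are better distributed than the low ones
    pick r = items !! ((r `div` 65536) `mod` length items)

-- Output stream of payment point out1: (item, time of sale) pairs.
out1 :: [(String, Int)]
out1 = zip (randomItems 42 catalogue) [1 ..]
```

The stream is deterministic for a given seed, which is convenient for repeatable experiments; different payment points can use different seeds.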

The updateStock function continuously computes the current stocks based on the $s_1$ stream. Based on the fact $f_1$ and the rule $r_3$, one can conclude that $fm_1$ is a milk item. Similarly, based on the facts $f_2$ and $f_3$, the rule $r_4$ categorises the instances $sm_1$ and $sm_2$ as milk items. The filter function is used to monitor each milk item, either low fat or not:

milkItems = filter milk (map fst s1)

Here, the predicate milk returns true if the input is of type Milk according to the rules $r_3$ or $r_4$. The map function is used to select only the first element of the (item, time) tuples of the stream $s_1$. The stream milkItems collects all the items of type milk, and every time an item occurs, the updateStock :: Item -> Stream -> Int function is activated to compute the available stock for a specific category. Thus, by combining ontological knowledge with plausible rules, one can reason with generic products (Milk) even if the streams report data regarding instances of specific products (WholeMilk and LowFatMilk), minimising the number of business rules that have to be added to the system.
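A sketch of this pipeline with lists. The classification follows the facts $f_1$ to $f_3$ and the strict rules of Figure 5, chained by a small hand-written closure; milkStockLevels is a hypothetical stand-in for updateStock that simply decrements a starting stock for every milk item sold.

```haskell
-- Facts f1-f3: class membership of the item instances.
instanceOf :: [(String, String)]
instanceOf = [("fm1", "WholeMilk"), ("sm1", "LowFatMilk"), ("sm2", "LowFatMilk")]

-- Strict rules r1, r3, r4 as (subclass, superclass) pairs.
subClassOf :: [(String, String)]
subClassOf = [("Milk", "Item"), ("WholeMilk", "Milk"), ("LowFatMilk", "Milk")]

-- All classes of an instance, obtained by chaining the strict rules.
classesOf :: String -> [String]
classesOf i = go [c | (j, c) <- instanceOf, j == i]
  where
    go []       = []
    go (c : cs) = c : go (cs ++ [d | (c', d) <- subClassOf, c' == c])

milk :: String -> Bool
milk = elem "Milk" . classesOf

-- The sold-items stream s1 from the text (finite prefix).
s1 :: [(String, Int)]
s1 = [("sm1", 1), ("m1", 2), ("fm1", 3), ("m2", 4), ("m3", 5), ("sm2", 6), ("m4", 7)]

milkItems :: [String]
milkItems = filter milk (map fst s1)

-- Hypothetical stand-in for updateStock: Milk stock level after each sale.
milkStockLevels :: Int -> [String] -> [Int]
milkStockLevels initial = scanl (\level _ -> level - 1) initial
```

Here milkItems is ["sm1", "fm1", "sm2"], and starting from 20 units the Milk stock evolves as [20, 19, 18, 17]; the same definitions work unchanged on an infinite s1, as long as the consumer only demands a prefix.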

5 DISCUSSION AND RELATED WORK

Stream integration is considered an ongoing challenge for stream management systems (Valle et al., 2009; Calbimonte et al., 2010; Le-Phuoc et al., 2010; Palopoli et al., 2003). There are several tools available to perform stream reasoning.

DyKnow (Heintz et al., 2009) introduces the knowledge processing language KPL to specify knowledge processing applications on streams. We exploit the Haskell stream operators to handle streams and list comprehensions to query these streams. The SPARQL algebra is extended in (Bolles et al., 2008) with time windows and pattern matching for stream processing. In our approach we exploit the existing list comprehensions and pattern matching in Haskell, aiming at the same goal of RDF stream processing. Compared to C-SPARQL, Haskell provides capabilities to aggregate streams before querying them. The Etalis tool performs reasoning tasks over streaming events with respect to background knowledge (Anicic et al., 2010). In our case the background knowledge is obtained from ontologies, translated into strict rules in order to reason over a unified space.
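The combination of stream aggregation, list comprehensions and pattern matching can be sketched as follows, with the bids of Example 1 split across two hypothetical streams. The streams are first merged by timestamp and then queried; a tuple whose predicate is not :sell fails the pattern and is skipped by the comprehension. The type names are our own.

```haskell
type Triple = (String, String, String)
type Tagged = (Triple, Double)  -- a triple with its timestamp

-- Merge two timestamp-ordered streams into one ordered stream
-- (lazy, so it also works on infinite inputs).
mergeByTime :: [Tagged] -> [Tagged] -> [Tagged]
mergeByTime [] ys = ys
mergeByTime xs [] = xs
mergeByTime (x : xs) (y : ys)
  | snd x <= snd y = x : mergeByTime xs (y : ys)
  | otherwise      = y : mergeByTime (x : xs) ys

-- Query by pattern matching: only tuples with predicate ":sell" match.
sellers :: [Tagged] -> [(String, Double)]
sellers s = [ (agent, t) | ((agent, ":sell", _), t) <- s ]

streamA, streamB :: [Tagged]
streamA = [((":a1", ":sell", "30"), 14.32), ((":a3", ":buy", "26"), 14.35)]
streamB = [((":a2", ":sell", "28"), 14.34), ((":a1", ":sell", "27"), 14.36)]
```

Querying the merged stream, sellers (mergeByTime streamA streamB) returns the three sell offers in time order: :a1 at 14.32, :a2 at 14.34 and :a1 at 14.36.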

The research conducted here can be integrated into the larger context of the Semantic Sensor Web, where challenges like abstraction level, data fusion, and application development (Corcho and Garcia-Castro, 2010) are addressed by several research projects like Aspire² or Sensei³. By encapsulating domain knowledge as description logic programs, the level of abstraction can be adapted to the application at hand by importing a more refined ontology into DLP.

Since streams are approximate, omniscient rationality is not assumed when performing reasoning tasks on them. Consequently, we argue that plausible reasoning is adequate for real-time decision making. One particularity of our system consists in applying an efficient non-monotonic rule-based system (Maher et al., 2001) when reasoning on gradually arriving stream data. Inference is based on several algorithms, which is in line with the proof layer defined in the Semantic Web layer cake. Moreover, the full Haskell language is available to extend or adapt the existing code. The efficiency of data-driven computation in functional reactive programming is supported by the lazy evaluation mechanism, which allows values to be used before they have been computed.

The strength of plausibility of the consequents is given by the superiority relation among rules. One idea for computing the degree of plausibility is to exploit specific plausible reasoning patterns like epagoge: "If A is true, then B is true. B is true. Therefore, A becomes more plausible", "If A is true, then B is true. A is false. Therefore, B becomes less plausible", or "If A is true, then B becomes more plausible. B is true. Therefore, A becomes more plausible."

² http://www.fp7-aspire.eu/
³ http://www.ict-sensei.org/

6 CONCLUSIONS

Our semantics-based stream management system is characterised by: i) continuous situation awareness and the capability to handle theoretically infinite data streams, due to the lazy evaluation mechanism; ii) aggregation of heterogeneous sensors based on ontologies translated into strict rules; iii) inherent handling of noisy and contradictory information in the context of many sensors, due to the plausible reasoning mechanism. Ongoing work regards conducting experiments to test the efficiency and scalability of the proposed framework, based on the results reported in (Maher et al., 2001) and on the reduced complexity of description logic programs (Krötzsch et al., 2007).

ACKNOWLEDGEMENTS

We are grateful to the anonymous reviewers for their useful comments. The work has been co-funded by the Sectoral Operational Programme Human Resources Development 2007-2013 of the Romanian Ministry of Labour, Family and Social Protection through the Financial Agreement POSDRU/89/1.5/S/62557 and PN-II-Ideas-170.

REFERENCES

Anicic, D., Fodor, P., Rudolph, S., Stühmer, R., Stojanovic, N., and Studer, R. (2010). A rule-based language for complex event processing and reasoning. In Hitzler, P. and Lukasiewicz, T., editors, Web Reasoning and Rule Systems - Fourth International Conference, volume 6333 of LNCS, pages 42–57. Springer.

Barbieri, D., Braga, D., Ceri, S., Della Valle, E., and Grossniklaus, M. (2010). Incremental reasoning on streams and rich background knowledge. In Aroyo, L., Antoniou, G., Hyvönen, E., ten Teije, A., Stuckenschmidt, H., Cabral, L., and Tudorache, T., editors, The Semantic Web: Research and Applications, volume 6088 of Lecture Notes in Computer Science, pages 1–15. Springer Berlin / Heidelberg.

Billington, D. and Rock, A. (2001). Propositional plausible logic: Introduction and implementation. Studia Logica, 67(2):243–269.

Bolles, A., Grawunder, M., and Jacobi, J. (2008). Streaming SPARQL: extending SPARQL to process data streams. In Proceedings of the 5th European Semantic Web Conference on The Semantic Web: Research and Applications, ESWC'08, pages 448–462, Berlin, Heidelberg. Springer-Verlag.


Calbimonte, J.-P., Corcho, Ó., and Gray, A. J. G. (2010). Enabling ontology-based access to streaming data sources. In Patel-Schneider, P. F., Pan, Y., Hitzler, P., Mika, P., Zhang, L., Pan, J. Z., Horrocks, I., and Glimm, B., editors, International Semantic Web Conference (1), volume 6496 of Lecture Notes in Computer Science, pages 96–111. Springer.

Corcho, Ó. and Garcia-Castro, R. (2010). Five challenges for the semantic sensor web. Semantic Web, 1(1-2):121–125.

Gomez, S. A., Chesnevar, C. I., and Simari, G. R. (2010). A defeasible logic programming approach to the integration of rules and ontologies. Journal of Computer Science and Technology, 10(2):74–80.

Grosof, B. N., Horrocks, I., Volz, R., and Decker, S. (2003). Description logic programs: combining logic programs with description logic. In WWW, pages 48–57.

Heintz, F., Kvarnström, J., and Doherty, P. (2009). Stream reasoning in DyKnow: A knowledge processing middleware system. In Stream Reasoning Workshop, Heraklion, Crete.

Krötzsch, M., Rudolph, S., and Hitzler, P. (2007). Complexity boundaries for Horn description logics. In AAAI, pages 452–457. AAAI Press.

Le-Phuoc, D., Parreira, J. X., Hausenblas, M., and Hauswirth, M. (2010). Unifying stream data and linked open data. Technical report, DERI.

Maher, M. J., Rock, A., Antoniou, G., Billington, D., and Miller, T. (2001). Efficient defeasible reasoning systems. International Journal on Artificial Intelligence Tools, 10(4):483–501.

Palopoli, L., Terracina, G., and Ursino, D. (2003). A plausibility description logic for handling information sources with heterogeneous data representation formats. Annals of Mathematics and Artificial Intelligence, 39:385–430.

Rock, A. (2010). Implementation of decisive plausible logic. Technical report, School of Information and Communication Technology, Griffith University.

Savage, N. (2011). Twitter as medium and message. Commun. ACM, 54:18–20.

Stuckenschmidt, H., Ceri, S., Valle, E. D., and van Harmelen, F. (2010). Towards expressive stream reasoning. In Aberer, K., Gal, A., Hauswirth, M., Sattler, K.-U., and Sheth, A. P., editors, Semantic Challenges in Sensor Networks, number 10042 in Dagstuhl Seminar Proceedings, Dagstuhl, Germany. Schloss Dagstuhl - Leibniz-Zentrum fuer Informatik.

Valle, E. D., Ceri, S., van Harmelen, F., and Fensel, D. (2009). It's a streaming world! Reasoning upon rapidly changing information. IEEE Intelligent Systems, 24:83–89.
