An Online Passive Testing Approach for Communication Protocols

Jorge Lopez, Xiaoping Che and Stephane Maag

Institut Mines-Telecom/Telecom SudParis CNRS UMR 5157, Evry, France

Keywords: Online Testing, Passive Testing, Formal Methods.

Abstract:

Testing a protocol at runtime in an online way is a complex and challenging work. It requires the same precise-

ness in conformance testing and efficiency in performance testing, where conformance testing is a functional

test which verifies whether the behaviors of the protocol satisfy defined requirements, and performance testing

is a qualitative and quantitative test which checks whether the performance requirements of the protocol have

been satisfied under certain conditions. As a matter of course, it raises an interesting issue of converging these

two kinds of testing by using the same formal approach, and applying the approach online. In this paper, we

present a novel logic-based online testing approach to test the protocol conformance and performance through

formally specified properties. In order to evaluate and assess our methodology, we developed a prototype

and experimented it with a set of Session Initiation Protocol properties in a real IP Multimedia Subsystem

environment. Finally, the relevant verdicts and discussions are provided.

1 INTRODUCTION

Testing is a crucial activity in the evaluation pro-

cess of a system or an implementation under test

(IUT). Among the well known and commonly ap-

plied approaches, the passive testing techniques (also

called monitoring or run-time verification) are to-

day gaining efficiency and reliability (Bauer et al.,

2011). These techniques are divided in two main

groups: online and offline testing approaches. Of-

fline testing aims at collecting set of protocol traces

while running (through interfaces, ports or points of

observations (P.O)) and then checking some proper-

ties through these traces afterwards. Several model

based offline testing techniques have been studied by

the community in order to passively test systems or

protocol implementations (Veanes et al., 2005; Lee

and Miller, 2006; Raimondi et al., 2008; Cao et al.,

2010). Nevertheless, though offline testing still raises

many interesting issues (Lalanne and Maag, 2013),

online testing approaches bring out these same issues

plus the challenges that are inherent to online testing.

Among these inherent constraints, we shall cite the

non-collection of traces. Indeed, in passive online

testing, the traces are observed (through an eventual

sniffer), analyzed on-the-fly to provide test verdicts

and no trace sets are studied a posteriori to the testing

process. In our work, we focus on the online testing

of an IUT using passive testing technique.

Testing an implementation of a protocol is often

associated to the checking of its conformance and per-

formance. Conformance testing is a functional test

which verifies whether the behaviors of the proto-

col satisfy defined requirements. Performance test-

ing is a qualitative and quantitative test which checks

whether the performance requirements of the proto-

col have been satisfied under certain conditions. They

are mainly applied to validate or verify the scalabil-

ity and reliability of the system. Many benefits can

be brought to the testing process if conformance and

performance testing inherit from the same approach

and can be applied online.

Our main objective is then to propose a novel pas-

sive online testing approach based on formal confor-

mance and performance testing techniques (Che et al.,

2012) (Che and Maag, 2013). Although some crucial

works have been done in run-time conformance test-

ing area (Bauer et al., 2011), they study run-time ver-

ification of properties expressed either in linear-time

temporal logic (LTL) or timed linear-time temporal

logic (TLTL). Different from their work focusing on

testing functional properties based on formal models,

our work concentrates on formally testing functional

and non-functional properties without formal models,

through real protocols in an online manner.

In this work, we firstly extend one of our pre-

vious proposed methodologies to present a passive

testing approach for checking the conformance and

performance requirements of communicating proto-

cols. Furthermore, we design an online testing frame-

136

Lopez J., Che X. and Maag S..

An Online Passive Testing Approach for Communication Protocols.

DOI: 10.5220/0004885501360143

In Proceedings of the 9th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE-2014), pages 136-143

ISBN: 978-989-758-030-7

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

work to test these requirements in real-time, with new

verdicts definitions of ‘Pass’, ‘Fail’, ‘Time-Fail’ and

‘Inconclusive’. Finally, since several protocol con-

formance and performance requirements need to be

tested in order to verify the efficiency of our online

approach, we perform our approach in a real IP Multi-

media Subsystem (IMS) communicating environment

for assessing its preciseness and efficiency.

Our paper’s primary contributions are:

• A formal approach is proposed for formally ex-

pressing the conformance and performance re-

quirements, and data portions are taken into ac-

count.

• An online testing framework is designed for test-

ing conformance and performance requirements

in real-time, and new definition of testing verdicts

are introduced.

The reminder of the paper is organized as follows.

In Section 2, a short review of the related works are

provided. In Section 3, a brief description of the syn-

tax and semantics used to describe the tested proper-

ties is presented. In Section 4, we illustrate our on-

line testing framework and relevant algorithms. Our

approach has been implemented and experimented in

Section 5. It has been performed through a real IMS

framework to test Session Initiation Protocol (SIP)

properties. The real-time communications of the IMS

allow to evaluate our approach efficiently. Finally, we

conclude and provide interesting perspectives in Sec-

tion 6.

2 RELATED WORKS

While a huge number of papers are dedicated to on-

line testing. In this section we present the prior works

in these fields.

Model Based Online Testing. Model based test-

ing is a crucial technique in the testing research do-

main. In (Larsen et al., 2004), the authors present T-

UPPAAL– a tool for online black-box testing of real-

time embedded systems from non-deterministic timed

automata specifications. They describe a sound and

complete randomized online testing algorithm and

implement it by using symbolic state representation

and manipulation techniques. They propose the no-

tion of relativized timed input/output conformance as

the formal implementation relation. Likewise, some

other researchers describe a practical online testing al-

gorithm that is implemented in the model-based test-

ing tool called Spec Explorer (Veanes et al., 2005),

which is being used daily by several Microsoft prod-

uct groups. They formalize the model programs as

interface automata, and use the interface automata for

conformance testing. The conformance relation be-

tween a model and an implementation under test is

formalized in terms of refinement between interface

automata. Besides, in (Raimondi et al., 2008), the

authors describe how timed automata can be used as

a formalism to support efficient online monitoring of

timeliness, reliability and throughput constraints ex-

pressed in web service SLAs. And they present an im-

plementation to derive on-line monitors for web ser-

vices automatically form SLAs using an Eclipse plu-

gin and Apache AXIS handlers. The readers would

notice that all these works are based on modeling the

system by automata or timed automata, due to the

convenience that the constructed models can be han-

dled by existing verification tools. However, when the

system can not be accessed or modeled, our work will

be a complementary to these techniques since we do

not need to formalize the system by any automata.

Online Conformance Testing. In the online test-

ing area, there are lots of work focus on conformance

testing. In (Cao et al., 2010), the authors present a

framework that automatically generates and executes

tests ”online” for conformance testing of a compos-

ite of Web services described in BPEL. The proposed

framework considers unit testing and it is based on a

timed modeling of BPEL specification, and an online

testing algorithm that generates, executes and assigns

verdicts to every generated state in the test case. Nev-

ertheless, in (Nguyen et al., 2012), the authors defined

a formal model based on Symbolic Transition Graph

with Assignment (STGA) for both peers and choreog-

raphy with supporting complex data types. The local

and global conformance properties are formalized by

the Chor language in their works. The local properties

are used to test behaviors of one isolated peer with re-

spect to its specification model, while the global prop-

erties test the collaboration of a set of peers with re-

spect to its choreography model. Inspired from all

these works, our work does not require to model the

IUT and tackles not only conformance requirements,

but also the performance requirements.

indent Moreover, another similar work is provided by

the authors of (Hall

´

e and Villemaire, 2012). They

presented an algorithm for the runtime monitoring

of data-aware workflow constraints. Sample proper-

ties taken from runtime monitoring scenarios in exist-

ing literature were expressed using LTL-FO

+

, an ex-

tension of Linear Temporal Logic that includes first-

order quantification over message contents. Similarly

to our work, data are a more central part of the def-

inition of formulas, and formulas are defined with

quantifiers specific to the labels. Although the syntax

of the logic they used is flexible, it can quickly lose

AnOnlinePassiveTestingApproachforCommunicationProtocols

137

clarity as the number of variables required increases.

Our work improves on this by allowing to group con-

straints with clause definitions.

Online Performance Testing. Many studies have

investigated the performance of online systems. A

method for analyzing the functional behavior and

the performance of programs in distributed systems

is presented in (Hofmann et al., 1994). In the

paper, the authors discuss event-driven monitoring

and event-based modeling. However, no evaluation

of the methodology has been performed. In (Du-

mitrescu et al., 2004), the authors present a distributed

performance-testing framework, which aimed at sim-

plifying and automating service performance testing.

They applied Diperf to two GT3.2 job submission ser-

vices, and several metrics are tested, such as Service

response time, Service throughput, Offered load, Ser-

vice utilization and Service fairness.

Besides, in (Wei et al., 2009), the authors propose

two online algorithms to detect 802.11 traffic from

packet-header data collected passively at a monitor-

ing point. The algorithms have a number of appli-

cations in real-time wireless LAN management, they

differ in that one requires training sets while the other

does not. Moreover, in (Yuen and Chan, 2012), the

authors present a monitoring algorithm SMon, which

continuously reduces network diameter in real time in

a distributed manner. Nevertheless, most of these ap-

proaches are based on monitoring techniques, they do

not provide a formalism to test a specific performance

requirement. Our approach allows to formally spec-

ified protocol performance requirements in order to

check whether the real-time performance of the pro-

tocol remains as expected in its standard.

Although lots of works have been done in the on-

line testing area. Inspired from and based on their

works, our work is different from focusing on using

model-driven techniques, evaluating the performance

of the system. We concentrate on how to formally

and passively test the conformance and performance

requirements written in the standard. And also we are

trying to converge the online conformance and perfor-

mance testing by using the same formal approach.

3 FORMAL APPROACH

We will provide in this section basic definitions, syn-

tax and semantics of our formalism which are neces-

sary for the understanding of our approach.

3.1 Basics

A communication protocol message is a collection

of data fields of multiple domains. Data domains are

defined either as atomic or compound (Che et al.,

2012). An atomic domain is defined as a set of

numeric or string values. A compound domain is

defined as follows.

Definition 1. A compound value v of length

n > 0, is defined by the set of pairs {(l

i

,v

i

) | l

i

∈ L

∧v

i

∈ D

i

∪ {ε},i = 1...n}, where L = {l

1

,..., l

n

} is

a predefined set of labels and D

i

are sets of values,

meaningful from the application viewpoint, and

called data domains. Let D be a Cartesian product of

data domains, D = D

1

× D

2

× ... × D

n

. A compound

domain is the set of pairs (L,d), where d belongs to D.

Once given a network protocol P, a compound do-

main M

p

can generally be defined by the set of labels

and data domains derived from the message format

defined in the protocol specification/requirements. A

message m of a protocol P is any element m ∈ M

p

.

For each m ∈ M

p

, we add a real number t

m

∈ R

+

which represents the time when the message m is

received or sent by the monitored entity.

Example 1. A possible message for the SIP pro-

tocol, specified using the previous definition could be

m = {(method,‘INVITE’), (time,‘210.400123000’),

(status,ε),( f rom,‘alice@a.org’),(to,‘bob@b.org’),

(cseq,{(num,7), (method,‘INVITE’)})}

representing an INVITE request from alice@a.org

to bob@b.org. The value of time ‘210.400123000’

(t

0

+ 210.400123000) is a relative value since the

P.O started its timer (initial value t

0

) when capturing

traces.

A trace is a sequence of messages of the same do-

main containing the interactions of a monitored entity

in a network, through an interface (the P.O), with one

or more peers during an arbitrary period of time. The

P.O also provides the relative time set T ⊂ R

+

for all

messages m in each trace.

3.2 Syntax and Semantics of Our

Formalism

In our previous work, a syntax based on Horn clauses

is defined to express properties that are checked

on extracted traces. We briefly describe it in the

following. Formulas in this logic can be defined with

the introduction of terms and atoms, as it follows.

ENASE2014-9thInternationalConferenceonEvaluationofNovelSoftwareApproachestoSoftwareEngineering

138

Definition 2. A term is defined in BNF as

term ::= c | x | x.l.l...l where c is a constant in some

domain, x is a variable, l represents a label, and

x.l.l...l is called a selector variable.

Definition 3. A substitution is a finite set of

bindings θ = {x

1

/term

1

,..., x

k

/term

k

} where each

term

i

is a term and x

i

is a variable such that x

i

6= term

i

and x

i

6= x

j

if i 6= j.

Definition 4. An atom is defined as

A ::= p

k

z }| {

(term,...,term)

| term = term

| term 6= term

| term < term

| term + term = term

where p(term, ...,term) is a predicate of label p and

arity k. The timed atom is a particular atom defined as

p

k

z }| {

(term

t

,...,term

t

), where term

t

∈ T .

The relations between terms and atoms are stated

by the definition of clauses. A clause is an expression

of the form

A

0

← A

1

∧ ... ∧ A

n

where A

0

is the head of the clause and A

1

∧ ... ∧ A

n

its

body, A

i

being atoms.

A formula is defined by the following BNF:

φ ::= A

1

∧ ... ∧ A

n

| φ → φ | ∀

x

φ | ∀

y>x

φ

| ∀

y<x

φ | ∃

x

φ | ∃

y>x

φ | ∃

y<x

φ

where A

1

,..., A

n

(n ≥ 1) are atoms, x,y represent for

different messages of a trace and {<, >} indicate the

order relation of messages.

In our approach, while the variables x and y are

used to formally specify the messages of a trace, the

quantifiers commonly define “it exists” (∃) and “for

all” (∀). Therefore, the formula ∀

x

φ means “for all

messages x in the trace, φ holds”.

The semantics used in our work is related to the

traditional Apt–Van Emdem–Kowalsky semantics for

logic programs (Emden and Kowalski, 1976), from

which an extended version has been provided in order

to deal with messages and trace temporal quantifiers.

Based on the above described operators and quanti-

fiers, we provide an interpretation of the formulas to

evaluate them to > (‘Pass’), ⊥ (‘Fail’) or ‘?’ (‘Incon-

clusive’) (Che et al., 2012).

Then the truth values {>,⊥,?} are provided to the

interpretation of the obtained formulas on real proto-

col execution traces. However, different from offline

testing, definite verdicts should be immediately re-

turned in online testing. Which indicates that only >

(‘Pass’) and ⊥ (‘Fail’) should be emitted in the final

report, and the indefinite verdict ‘?’ (‘Inconclusive’)

will be used as temporary unknown status, but finally

must be transformed to one of the definite verdicts.

4 ONLINE TESTING

FRAMEWORK

In this section, we will introduce our novel online test-

ing framework and provide the relevant algorithm for

testers.

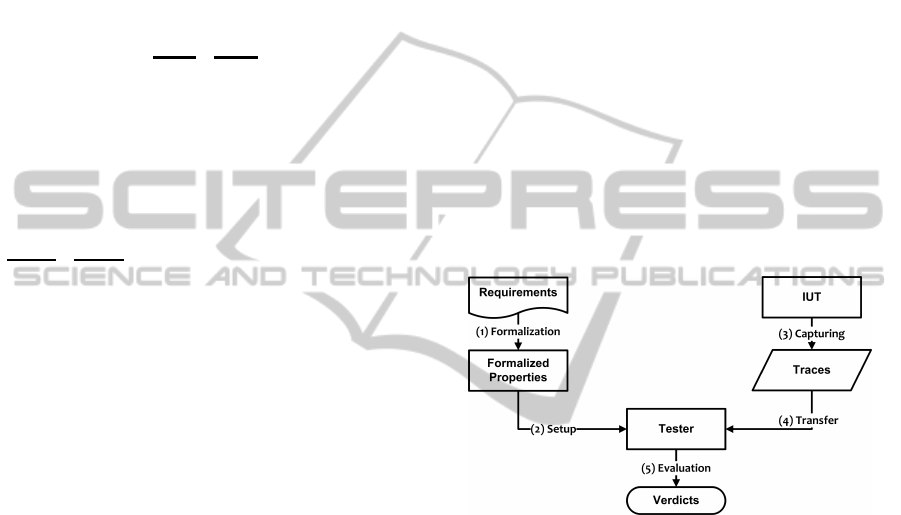

4.1 Framework

For the aim of testing conformance and performance

requirements in an online way, we design and use a

passive online testing architecture. As Figure 1 de-

picts, the testing process consists of five following

parts.

Figure 1: Core functions of our testing framework.

1. Formalization: Initially, the informal protocol

requirements are formalized to formulas by us-

ing Horn-logic based syntax and semantics men-

tioned in section 3. Due to the space limitation,

we will not go into details. The interested readers

may have a look at the papers (Che et al., 2012)

and (Lalanne and Maag, 2013).

2. Setup: When all the requirements are formalized

to formulas, they will be sent to the Tester with

the definition of verdicts.

• Pass: The message or trace satisfies the require-

ment.

• Fail: The message or trace does not satisfy the

requirement.

• Time-Fail: Since we are testing on-line, a time-

out is used to stop searching target message

in order to provide the real-time status. The

timeout value should be the maximum response

time written in the protocol. If we can not

AnOnlinePassiveTestingApproachforCommunicationProtocols

139

observe the target message within the timeout

time, then a Time-Fail verdict will be assigned

to this property. It has to be noticed that this

kind of verdict is only provided when no time

constraint is required in the requirement. If any

time constraint is required, the violation of re-

quirements will be concluded as Fail, not as a

Time-Fail verdict.

• Inconclusive: Uncertain status of the proper-

ties. It only exists at the beginning of the test

or at the end of the test.

3. Capturing: The monitor consecutively captures

the traces of protocols to be tested from the IUT.

When the messages have been captured, each

message will be tagged with a time-stamp in or-

der to test the properties with time requirements.

4. Transfer: The tagged messages are transferred to

the Tester when the Tester is capable for testing.

Since we optimize our algorithm in order to have

the best effort, the tester is always capable for test-

ing when dealing with less than 20 million pack-

ages per minute.

5. Evaluation: The Tester checks whether or not

the incoming traces satisfy the formalized require-

ments and provide the final verdicts. Based on

different results and the definition of verdicts, we

conclude the verdicts as: Pass, Fail or Time-Fail.

4.2 Testing Algorithm

The online testing algorithm is described in Algo 1.

Algorithm 1 describes the behaviors of an online

tester. Firstly, the tester will capture packets from the

predefined interface by using libpcap

1

, and it will tag

time stamps to all the captured packets at the same

time (Line 1-3).

Secondly, it will load all the properties (formal-

ized requirements) have to be tested, and match each

packet with the properties in chronological order. In

this step, only the packets needed for the current prop-

erty will be saved and tackled. The other irrelevant

packets will be discarded in order to accelerate the

testing process (Line 4-15). This process will keep

running until all the properties have been checked.

When finishing the checking process, it will report

the testing result and empty the buffer immediately in

order to make good use of the limited memory (Line

16-29).

1

http://www.tcpdump.org/

5 EXPERIMENTS

After introducing our novel framework, we will de-

scribe the testing environment and interpret the ex-

periments results in this section.

5.1 Environment

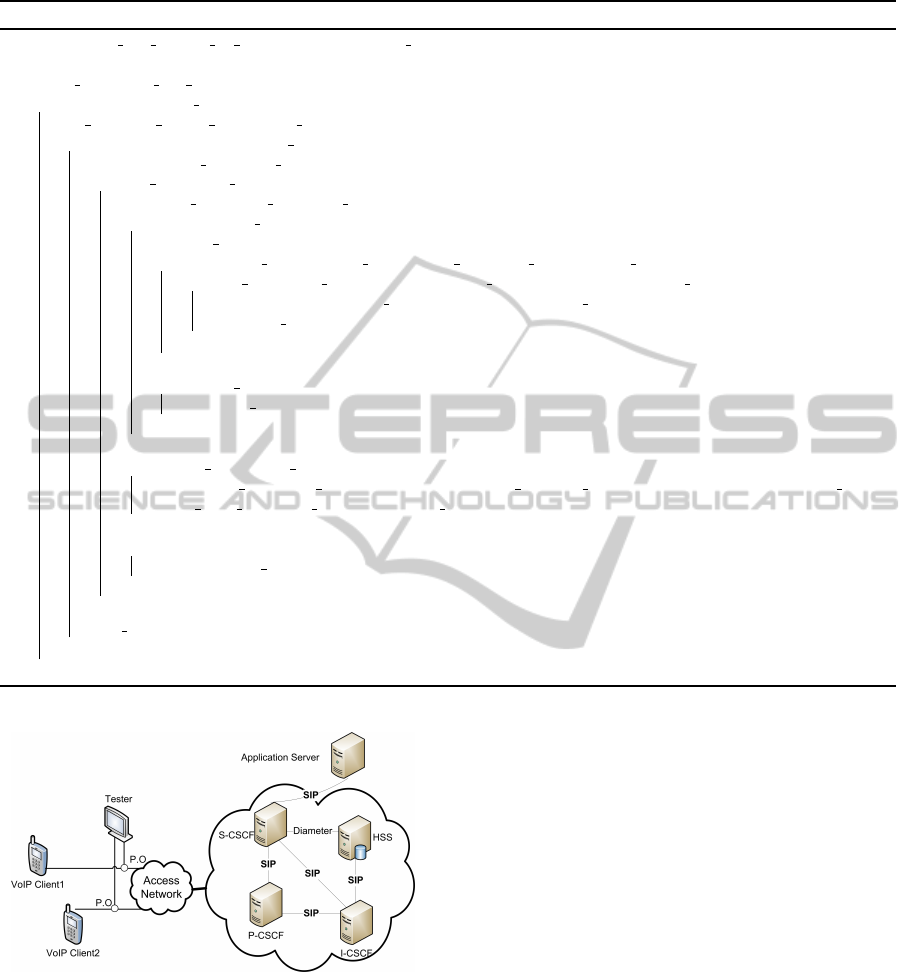

The IP Multimedia Subsystem is a standardized

framework for delivering IP multimedia services to

users in mobility. It aims at facilitating the access

to voice or multimedia services in an access indepen-

dent way, in order to develop the fixed-mobile conver-

gence. The core of the IMS network consists on the

Call Session Control Functions (CSCF) that redirect

requests depending on the type of service, the Home

Subscriber Server (HSS), a database for the provision-

ing of users, and the Application Server (AS) where

the different services run and interoperate. Most com-

munication with the core network and between the

services is done using the Session Initiation Proto-

col (Rosenberg et al., 2002).

The Session Initiation Protocol is an application-

layer protocol that relies on request and response mes-

sages for communication, and it is an essential part

for communication within the IMS framework. Mes-

sages contain a header which provides session, ser-

vice and routing information, as well as an body part

to complement or extend the header information. Sev-

eral RFCs have been defined to extend the protocol

to allow messaging, event publishing and notification.

These extensions are used by services of the IMS such

as the Presence service and the Push to-talk Over Cel-

lular (PoC) service.

For our experiments, communication traces were

obtained through ZOIPER

2

. ZOIPER softphone is a

VoIP soft client, meant to work with any IP-based

communications systems and infrastructure. It pro-

vides secure high-quality voice calls and conference,

fax sending and receiving functionality, and enhanced

IP-calling features wrapped in a compact interface

and small download size.

As Figure 2 shows, a simple environment is con-

structed for our experiments. We run two ZOIPER

VoIP clients on the virtual machines using Virtual-

Box for Mac version 4.2.16. The virtual machines

have 4GB of RAM, one processor Intel i5 @2.3GHz

and the software being used is Zoiper 3.0.19649. On

the other side, the server is provided by Fonality

3

,

which is running Asterisk PBX 1.6.0.28-samy-r115.

The P.Os are placed on the client side. Tests are per-

formed using a prototype implementation of the for-

2

http://www.zopier.com/softphone/

3

http://www.fonality.com

ENASE2014-9thInternationalConferenceonEvaluationofNovelSoftwareApproachestoSoftwareEngineering

140

Algorithm 1: Algorithm of online tester.

Input: open live capture on interface(INTERFACE NAME) //Using libpcap

Output: property verdicts report

1 thread init(report live status) //thread to report the live

2 for each packet on live capture do

3 last observed packet time ← get time(packet);

4 for each prototype on prototype packets do

5 property ← get prototype property(prototype);

6 if match properties of(prototype, packet) then

7 prototype list ← get prototype list(prototype);

8 for each prototype dependency on dependencies(prototype) do

9 matched dependency ← FALSE;

10 for each stored

packet on get dependency prototype list(prototype dependency) do

11 if match properties dependency(prototype dependency, packet, stored packet) then

12 associate(packet, stored packet, property), matched dependency ← TRUE;

13 goto next dependency;

14 end

15 end

16 if !matched dependency then

17 goto next prototype

18 end

19 end

20 if prototype determines property(prototype) then

21 associations list ← get associations(packet) report property pass(property, packet, associations list)

delete from prototype lists(associations list)

22 end

23 else

24 push(prototype list, packet)

25 end

26 end

27 next prototype;

28 end

29 end

Figure 2: Environment for experiments.

mal approach above mentioned, using the algorithm

introduced in the previous section.

5.2 Tests Results

In our approach, the conformance and performance

requirement properties are formalized to formulas.

These formulas will be tested through the testers in

real-time. Simultaneously, not only ‘Pass’, ‘Fail’,

‘Time-Fail’ and ‘Inconclusive’ verdicts are returned,

but also N

p

,N

f

,N

t f

and N

in

will be given to the tester,

which represent the accumulated number of ‘Pass’,

‘Fail’, ‘Time-Fail’ and ‘Inconclusive’ verdicts respec-

tively. We may write:

N

p

(φ) =

∑

[eval(φ,θ, ρ) = ‘>’]

N

f

(φ) =

∑

[eval(φ,θ, ρ) = ‘⊥’]

N

t f

(φ) =

∑

[

eval(φ,θ, ρ) = ‘⊥’

term

t

/∈ φ,timeout ∈ θ

]

N

in

(φ) =

∑

[eval(φ,θ, ρ) = ‘?’]

Properties: In order to formally design the proper-

ties to be passively tested online, we got inspired from

the TTCN-3 test suite (ETSI, 2004) and the RFC 3261

of SIP (Rosenberg et al., 2002). Several properties

relevant to session establishment are designed.

Conformance requirements φ

1

,φ

2

(“Every IN-

VITE request must be responded”, “Every success-

ful INVITE request must be responded with a suc-

cess response”) and a performance requirement ψ

1

AnOnlinePassiveTestingApproachforCommunicationProtocols

141

Table 1: Online Testing result for Client1 and Client2.

φ

1

φ

2

ψ

1

P.O Pass Fail Time-Fail Incon Pass Fail Time-Fail Incon Pass Fail Time-Fail Incon

Client1 50 0 0 0 43 0 7 0 645 240 0 0

Client2 36 0 0 0 34 0 2 0 473 6 0 0

(“The response time for each request should not ex-

ceed T

1

= 8s”) are tested. They can be formalized as

the following formulas:

φ

1

=

∀

x

(request(x) ∧ x.method = ‘INVITE’

→ ∃

y>x

(nonProvisional(y) ∧ responds(y,x)))

φ

2

=

∀

x

(request(x) ∧ x.method = ‘INVITE’

→ ∃

y>x

(success(y) ∧ responds(y, x)))

ψ

1

=

∀

x

(request(x) ∧ x.method! = ‘ACK’

→ ∃

y>x

(nonProvisional(y) ∧ responds(y,x)

∧withintime(y,x, T

1

)))

The two hours online testing results are illustrated

in Table 1. A number of ‘Fail’ and ‘Time-Fail’ ver-

dicts can be observed when testing φ

2

and ψ

1

. Let’s

have a look at the ‘Time-Fail’ verdicts in φ

2

. They

indicate that during the testing, the tester can not de-

tect some successful responses to ‘INVITE’ requests

within the maximum response time. However, there is

no time constraint required in φ

2

, we have to conclude

these verdicts as ‘Time-Fail’. Which means proba-

bly the server refused some ‘INVITE’ requests, the

responses are lost during the transmission or the re-

sponses will arrive later than the timeout time. Com-

bining the results of φ

1

, we can know that it was due

to the first reason.

Similarly, we can observe some ‘Fail’ verdicts

when testing ψ

1

, they are caused by the same reason

that the tester can not find the target message within

the required time. On the contrary, there is a specific

time constraint required in ψ

1

, which is 8 seconds. It

shows some responses exceeded the required time 8s,

they exactly violated the requirement and we have to

conclude them as ‘Fail’.

These testing results successfully show that our

approach can detect both the usual and unusual faults.

Moreover, by using the verdicts obtained from these

properties. We can also test the performance issues

of session establishment in real-time, which can be

defined as:

• Session Attempt Number: N

p

(φ

1

)

• Session Attempt Rate: N

p

(φ

1

) / t

slot

• Session Attempt Successful Rate: N

p

(φ

2

) / N

p

(φ

1

)

• Session establishment Number: N

p

(φ

2

)

• Session establishment Rate: N

p

(φ

2

) / t

slot

• Session Packets Delay: N

p

(ψ

1

).

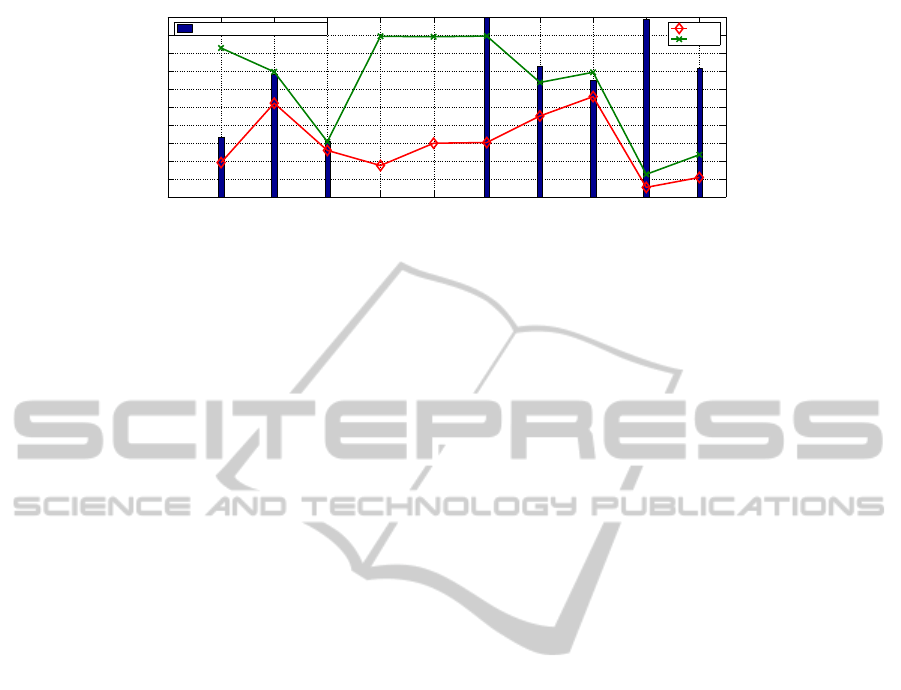

Figure 3 illustrates the testing results of Session At-

tempt Number, Rate and Successful Rate in each hour.

We can observe that in the 4th and 5th hour, the suc-

cessful attempt rates are zero while the attempts num-

bers/rates are not, which denotes that in those two pe-

riods, all the session attempts (‘INVITE’ requests)

were refused. And it returned to normal in the 6th

hour. In this way, we can have a clear view of the pro-

tocol performance during online testing. It has to be

noticed that when the tester receive an incoming trace,

it will apply the formalized requirements on the trace

and get the verdicts in a short time. During our exper-

iments, all the testing results for requirements without

time constraints are obtained in 1s, which proves the

efficiency of our approach.

6 PERSPECTIVES AND

CONCLUSION

This paper introduces a novel online approach to test

conformance and performance of network protocol

implementation. Our approach allows to define rela-

tions between messages and message data, and then to

use such relations in order to define the conformance

and performance properties that are evaluated on real

protocol traces. The evaluation of the property returns

a Pass, Fail, Time-Fail or Inconclusive result, derived

from the given trace.

The approach also includes an online testing

framework. To verify and test the approach, we de-

sign several SIP properties to be evaluated by our

approach. Our methodology has been implemented

into an environment which provides the real-time IMS

communications, and the results from testing several

properties online have been obtained successfully.

Furthermore, our approach can not only test re-

quirements and return relevant verdicts, but also it

can reflect current protocol performance status based

on these verdicts. We extended several performance

measuring indicators for SIP. As Figure 3 shows,

these indicators are used for testing the performance

of session establishment in SIP. The real time updated

results displayed in the screen can precisely reflect the

performance of the protocol in different time periods.

Consequently, extending more testers in a dis-

tributed environment based on the work (Che and

ENASE2014-9thInternationalConferenceonEvaluationofNovelSoftwareApproachestoSoftwareEngineering

142

1 2 3 4 5 6 7 8 9 10

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

Testing time slot

Percentage of the Successful Attempts

Percentage of Successful Attempts

Rate

Number

80

64

48

32

16

0

Figure 3: Session Attempt Number, Rate and Successful Rate.

Maag, 2013) and building an online testing system

for all the network protocols would be the work we

will focus on in the future. In that case, the efficiency

and processing capacity of the system would be the

crucial point to handle, leading to an optimization of

our algorithms to severe situations.

REFERENCES

Bauer, A., Leucker, M., and Schallhart, C. (2011). Run-

time verification for ltl and tltl. ACM Transactions on

Software Engineering and Methodology, 20(4):14.

Cao, T.-D., F

´

elix, P., Castanet, R., and Berrada, I. (2010).

Online testing framework for web services. In Third

International Conference on Software Testing, Verifi-

cation and Validation, pages 363–372.

Che, X., Lalanne, F., and Maag, S. (2012). A logic-based

passive testing approach for the validation of commu-

nicating protocols. In Proceedings of the 7th Interna-

tional Conference on Evaluation of Novel Approaches

to Software Engineering, Wroclaw, Poland, pages 53–

64.

Che, X. and Maag, S. (2013). A formal passive perfor-

mance testing approach for distributed communica-

tion systems. In ENASE 2013 - Proceedings of the

8th International Conference on Evaluation of Novel

Approaches to Software Engineering, Angers, France,

4-6 July, 2013, pages 74–84.

Dumitrescu, C., Raicu, I., Ripeanu, M., and Foster, I.

(2004). Diperf: An automated distributed perfor-

mance testing framework. In 5th International Work-

shop in Grid Computing, pages 289–296. IEEE Com-

puter Society.

Emden, M. V. and Kowalski, R. (1976). The semantics of

predicate logic as a programming language. Journal

of the ACM, pages 23(4):733–742.

ETSI (2004). Methods for testing and specification (mts);

conformance test specification for sip.

Hall

´

e, S. and Villemaire, R. (2012). Runtime enforcement

of web service message contracts with data. IEEE

Transactions on Services Computing, 5(2):192–206.

Hofmann, R., Klar, R., Mohr, B., Quick, A., and Siegle, M.

(1994). Distributed performance monitoring: Meth-

ods, tools and applications. IEEE Transactions on

Parallel and Distributed Systems, 5:585–597.

Lalanne, F. and Maag, S. (2013). A formal data-centric

approach for passive testing of communication pro-

tocols. In IEEE / ACM Transactions on Networking,

volume 21, pages 788–801.

Larsen, K. G., Mikucionis, M., and Nielsen, B. (2004). On-

line testing of real-time systems using uppaal. In For-

mal Approaches to Software Testing, 4th International

Workshop, pages 79–94.

Lee, D. and Miller, R. (2006). Network protocol sys-

tem monitoring-a formal approach with passive test-

ing. IEEE/ACM Transactions on Networking, pages

14(2):424–437.

Nguyen, H. N., Poizat, P., and Za

¨

ıdi, F. (2012). Online

verification of value-passing choreographies through

property-oriented passive testing. In 14th Interna-

tional IEEE Symposium on High-Assurance Systems

Engineering, pages 106–113.

Raimondi, F., Skene, J., and Emmerich, W. (2008). Efficient

online monitoring of web-service slas. In Proceedings

of the 16th ACM SIGSOFT International Symposium

on Foundations of Software Engineering, pages 170–

180.

Rosenberg, J., Schulzrinne, H., Camarillo, G., Johnston, A.,

and Peterson, J. (2002). Sip: Session initiation proto-

col.

Veanes, M., Campbell, C., Schulte, W., and Tillmann, N.

(2005). Online testing with model programs. In Pro-

ceedings of the 10th European Software Engineering

Conference held jointly with 13th ACM SIGSOFT In-

ternational Symposium on Foundations of Software

Engineering, pages 273–282.

Wei, W., Suh, K., Wang, B., Gu, Y., Kurose, J. F., Towsley,

D. F., and Jaiswal, S. (2009). Passive online detec-

tion of 802.11 traffic using sequential hypothesis test-

ing with tcp ack-pairs. IEEE Transactions on Mobile

Computing, 8(3):398–412.

Yuen, C.-H. and Chan, S.-H. (2012). Scalable real-

time monitoring for distributed applications. IEEE

Transactions on Parallel and Distributed Systems,

23(12):2330 –2337.

AnOnlinePassiveTestingApproachforCommunicationProtocols

143