Plateau in a Polar Variable Complex-valued Neuron

Tohru Nitta

National Institute of Advanced Industrial Science and Technology (AIST), Tsukuba, Japan

Keywords:

Complex-valued neuron, Coordinate, Learning, Unidentifiable model, Singularity.

Abstract:

This paper investigates the characteristics of a complex-valued neuron model whose parameters are represented in polar coordinates (called a polar variable complex-valued neuron). The main results are as follows. The polar variable complex-valued neuron is unidentifiable: there exists a parameter that does not affect the output value of the neuron, so its value cannot be identified. The plateau phenomenon can occur during learning of the polar variable complex-valued neuron: the learning error does not decrease for a period. Furthermore, computer simulations suggest that a single polar variable complex-valued neuron has the following characteristics: (a) unidentifiable parameters (singular points) degrade the learning speed; (b) a plateau can occur during learning. When the weight is attracted to the singular point, learning tends to get stuck.

1 INTRODUCTION

A complex-valued neural network is a neural network whose parameters, such as weights and threshold values, are extended from real to complex numbers. Complex-valued neural networks are suitable for processing complex-valued data or two-dimensional data (Hirose, 2006; Nitta, 2009; Hirose, 2013).

Conventionally, a real-valued approach must be applied to the real part and the imaginary part separately, whereas a complex-valued neural network processes complex-valued data directly. It is also advantageous because the natural behavior of complex numbers under rotation is exploited automatically. Some properties that are intrinsic to complex-valued neural networks have also been clarified (Nitta, 2008).

Learning models have recently been studied in relation to singular points (Amari et al., 2006; Wei et al., 2008; Cousseau et al., 2008; Nitta, 2013). For example, learning models with hierarchical structures or with symmetry under the exchange of weights, such as hierarchical neural networks and mixture models, mostly have singular points. It has been proved that singular points affect the learning dynamics of learning models and that they can cause a standstill in learning.

This paper investigates the properties of the singular points of a complex-valued neuron, the building block of a complex-valued neural network. A usual neuron with real-valued weights and a real-valued threshold is designated as a real-valued neuron. A neural network comprising real-valued neurons is designated as a real-valued neural network. Generally, a complex number can be expressed in two ways: using orthogonal coordinates or using polar coordinates. A complex-valued neuron whose parameters (weight and threshold) are expressed in orthogonal coordinates is designated as an orthogonal variable complex-valued neuron, whereas a complex-valued neuron whose parameters are expressed in polar coordinates is designated as a polar variable complex-valued neuron. The literature (Hirose et al., 2001; Kawata and Hirose, 2003; Hirose et al., 2004; Hirose, 2006) includes numerous explanations of complex-valued neural network models comprising polar variable complex-valued neurons and their applications.

This paper demonstrates that a polar variable complex-valued neuron is unidentifiable. Mathematical analysis indicates that a plateau phenomenon can occur during learning (Nitta, 2010). It is then suggested experimentally that (a) unidentifiable parameters (singular points) degrade the learning speed, and (b) a plateau can occur during learning.

Properties related to the singular points of a polar variable complex-valued neuron are investigated analytically in Section 2. Then, in Section 3, computer simulations are used to investigate what effect the singular point has on the learning dynamics of a polar variable complex-valued neuron. Finally, Section 4 concludes the paper and describes future topics.

Nitta, T. Plateau in a Polar Variable Complex-valued Neuron. DOI: 10.5220/0004887905260531. In Proceedings of the 6th International Conference on Agents and Artificial Intelligence (ICAART-2014), pages 526-531. ISBN: 978-989-758-015-4. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

2 ANALYSIS

The singularity of a polar variable complex-valued

neuron is investigated analytically in this section.

2.1 Unidentifiability of a Polar Variable Complex-valued Neuron

In this section, it is shown that a polar variable

complex-valued neuron is unidentifiable. A con-

nected set comprising parameter values for which po-

lar variable complex-valued neurons take an identical

output value is designated as a critical set, whereas

points on a critical set are regarded as singular points

in this paper. Only connected sets were employed as

analysis objects because an unconnected set is con-

sidered to have no bad effect on learning dynamics.

Consider the following polar variable complex-valued neuron with $N$ inputs. Its output value $v$ is defined as

$$v = f_C\left(\sum_{k=0}^{N} r_k \exp[i\theta_k] \cdot z_k\right) \in \mathbf{C}, \tag{1}$$

where $\mathbf{C}$ stands for the set of complex numbers, $z_k \in \mathbf{C}$ signifies the $k$-th input signal, $r_k \exp[i\theta_k] \in \mathbf{C}$ denotes the weight for the $k$-th input signal ($r_k \in \mathbf{R}$ represents the amplitude and $\theta_k \in \mathbf{R}$ the phase, where $\mathbf{R}$ is the set of real numbers) ($1 \le k \le N$), $i = \sqrt{-1}$, $z_0 \equiv 1$, and $r_0 \exp[i\theta_0] \in \mathbf{C}$ represents the threshold of the complex-valued neuron ($r_0 \in \mathbf{R}$ is the amplitude and $\theta_0 \in \mathbf{R}$ is the phase). In addition, $f_C : \mathbf{C} \to \mathbf{C}$ is an activation function.

In the polar variable complex-valued neuron described above, if the amplitude parameter $r_k$ is equal to zero for some $0 \le k \le N$, then [weight × input] $= r_k \exp[i\theta_k] \cdot z_k = 0$ holds, and no value of $\theta_k$ affects the output value $v$ of the complex-valued neuron. That is, one cannot identify the value of the phase parameter $\theta_k$ uniquely when the amplitude parameter $r_k$ is equal to zero. Therefore, the phase $\theta_k$ is an unidentifiable parameter, and a polar variable complex-valued neuron is unidentifiable.

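Next, the critical set of the polar variable complex-valued neuron described above is determined explicitly. First, let

$$M \overset{\mathrm{def}}{=} \{(\boldsymbol{r}, \Theta) \in \mathbf{R}^{N+1} \times \mathbf{R}^{N+1}\}, \tag{2}$$

$$\boldsymbol{r} \overset{\mathrm{def}}{=} \begin{pmatrix} r_0 \\ \vdots \\ r_N \end{pmatrix} \in \mathbf{R}^{N+1}, \tag{3}$$

$$\Theta \overset{\mathrm{def}}{=} \begin{pmatrix} \theta_0 \\ \vdots \\ \theta_N \end{pmatrix} \in \mathbf{R}^{N+1}, \tag{4}$$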

where $M$ is the parameter space that specifies the polar variable complex-valued neuron described above. Then, for any $(\boldsymbol{r}', \Theta') \in M$ and any $0 \le k \le N$, let

$$C_k(\boldsymbol{r}', \Theta') \overset{\mathrm{def}}{=} \{(\boldsymbol{r}, \Theta) \in M \mid r_0 = r'_0, \cdots, r_{k-1} = r'_{k-1},\ r_k = 0,\ r_{k+1} = r'_{k+1}, \cdots, r_N = r'_N,\ \theta_0 = \theta'_0, \cdots, \theta_{k-1} = \theta'_{k-1},\ \theta_{k+1} = \theta'_{k+1}, \cdots, \theta_N = \theta'_N\}. \tag{5}$$

Then the critical set $C(\boldsymbol{r}', \Theta')$ of the polar variable complex-valued neuron described above is given as

$$C(\boldsymbol{r}', \Theta') = \bigcup_{k=0}^{N} C_k(\boldsymbol{r}', \Theta'). \tag{6}$$

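Indeed, for any $(\boldsymbol{r}, \Theta) \in C(\boldsymbol{r}', \Theta')$, there exists some $k$ such that $(\boldsymbol{r}, \Theta) \in C_k(\boldsymbol{r}', \Theta')$. Therefore, considering $r_k = 0$,

$$\begin{aligned} v &= f_C\big(r'_0 \exp[i\theta'_0] z_0 + \cdots + r'_{k-1} \exp[i\theta'_{k-1}] z_{k-1} + r_k \exp[i\theta_k] z_k + r'_{k+1} \exp[i\theta'_{k+1}] z_{k+1} + \cdots + r'_N \exp[i\theta'_N] z_N\big) \\ &= f_C\big(r'_0 \exp[i\theta'_0] z_0 + \cdots + r'_{k-1} \exp[i\theta'_{k-1}] z_{k-1} + 0 + r'_{k+1} \exp[i\theta'_{k+1}] z_{k+1} + \cdots + r'_N \exp[i\theta'_N] z_N\big) \end{aligned} \tag{7}$$

holds, and $v$ remains constant irrespective of $\theta_k \in \mathbf{R}$.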
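The invariance stated by Eq. (7) can be checked numerically. The following is a minimal sketch, assuming a linear activation and arbitrary illustrative values for the inputs, amplitudes, and phases; the function name `neuron_output` is hypothetical and not part of the original text.

```python
import cmath

def neuron_output(r, theta, z, f=lambda u: u):
    """Output v = f(sum_k r_k * exp(i*theta_k) * z_k), following Eq. (1)."""
    u = sum(rk * cmath.exp(1j * tk) * zk for rk, tk, zk in zip(r, theta, z))
    return f(u)

# Illustrative values (assumptions): N = 2 inputs, z[0] = 1 multiplies the threshold.
z = [1.0, 0.3 + 0.4j, -0.2 + 0.1j]
r = [0.5, 0.0, 1.2]            # r_1 = 0, so the point lies on the critical set C_1
theta = [0.1, 0.7, -1.0]

# Vary only the phase theta_1 while every other parameter stays fixed.
outputs = []
for theta_1 in (0.0, 1.0, 2.5, -3.0):
    outputs.append(neuron_output(r, [theta[0], theta_1, theta[2]], z))

# The k = 1 term is multiplied by r_1 = 0, so theta_1 cannot change v.
print(all(abs(v - outputs[0]) < 1e-12 for v in outputs))
```

Setting `r[1]` to any nonzero value makes the outputs differ, which is the sense in which $\theta_1$ becomes identifiable away from the critical set.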

2.2 Learning Dynamics Near the Singular Point of a Polar Variable Complex-valued Neuron

The learning dynamics near the singular point of a polar variable complex-valued neuron are investigated using the analysis procedure of Amari et al. (2006).

The polar variable complex-valued neuron defined in Section 2.1 is adopted as the object of analysis. The activation function $f_C$ is taken to be linear for simplicity:

$$f_C(z) = z, \quad z = x + iy. \tag{8}$$

The error is defined as $E = (1/2)|t - v|^2$ ($t \in \mathbf{C}$ is the teacher signal and $v \in \mathbf{C}$ is the actual output value).


Strictly speaking, $E$ is a function of complex variables, but it takes only real values and is therefore not regular as a complex function. That is, $E$ is not complex differentiable. Nevertheless, a learning rule can be derived by considering partial derivatives with respect to real-valued parameters. In that case, the learning dynamics of a complex-valued neuron differ according to whether the parameters (weight and threshold) are expressed in an orthogonal coordinate system or in a polar coordinate system. Although the error function $E$ was discussed here, the same argument applies to the complex differentiability of the activation function $f_C$ (see (Hirose, 2006, pp.18-22) for details).

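A learning rule is derived as follows using the steepest descent method: for any $0 \le k \le N$,

$$\Delta r_k(n) \overset{\mathrm{def}}{=} r_k(n+1) - r_k(n) = -\varepsilon \cdot \frac{\partial E}{\partial r_k} = \varepsilon \cdot \mathrm{Re}\Big[\overline{\delta} \cdot z_k \cdot \exp[i\theta_k(n)]\Big], \tag{9}$$

$$\Delta \theta_k(n) \overset{\mathrm{def}}{=} \theta_k(n+1) - \theta_k(n) = -\varepsilon \cdot \frac{\partial E}{\partial \theta_k} = -\varepsilon \cdot r_k(n) \cdot \mathrm{Im}\Big[\overline{\delta} \cdot z_k \cdot \exp[i\theta_k(n)]\Big], \tag{10}$$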

where $\delta \overset{\mathrm{def}}{=} t - v$, $\overline{z}$ is the complex conjugate of a complex number $z$, and $n$ is a variable that represents the number of learning cycles. For example, $r_k(n)$ expresses the value of the parameter $r_k$ after $n$ learning cycles.
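The step from the partial derivatives to the right-hand sides of Eqs. (9) and (10) can be sketched as follows, assuming the linear activation of Eq. (8) and writing $E = \frac{1}{2}\delta\overline{\delta}$. For a real parameter $p$, the chain rule gives $\partial E / \partial p = -\mathrm{Re}\big[\overline{\delta} \cdot \partial v / \partial p\big]$, so that

$$\begin{aligned} \frac{\partial v}{\partial r_k} &= \exp[i\theta_k]\, z_k, & \frac{\partial E}{\partial r_k} &= -\mathrm{Re}\Big[\overline{\delta}\, z_k \exp[i\theta_k]\Big], \\ \frac{\partial v}{\partial \theta_k} &= i\, r_k \exp[i\theta_k]\, z_k, & \frac{\partial E}{\partial \theta_k} &= -\mathrm{Re}\Big[i\, r_k\, \overline{\delta}\, z_k \exp[i\theta_k]\Big] = r_k\, \mathrm{Im}\Big[\overline{\delta}\, z_k \exp[i\theta_k]\Big], \end{aligned}$$

where the last equality uses $\mathrm{Re}[iw] = -\mathrm{Im}[w]$ for any $w \in \mathbf{C}$. Multiplying by $-\varepsilon$ yields the two update rules. The factor $r_k$ in $\partial E / \partial \theta_k$ is what makes the phase update vanish at $r_k = 0$.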

A learning rule for a single complex-valued neuron whose weight is expressed in polar coordinates is derived using the steepest descent method in (Hirose, 2006, pp.59-64). That learning rule differs in form from the one derived in this paper because the following nonlinear function (amplitude-phase type activation function) is used there as the activation function of the complex-valued neuron:

$$f_{ap}(u) = \tanh(|u|) \cdot \exp[i \cdot \arg(u)], \quad u \in \mathbf{C}. \tag{11}$$
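For reference, the amplitude-phase activation of Eq. (11) can be written in a few lines. This is a minimal sketch using only the Python standard library; the function name `f_ap` mirrors the symbol in the text, and the sample value of `u` is an arbitrary assumption.

```python
import cmath
import math

def f_ap(u: complex) -> complex:
    """Amplitude-phase activation of Eq. (11): tanh(|u|) * exp(i * arg(u))."""
    return math.tanh(abs(u)) * cmath.exp(1j * cmath.phase(u))

u = 3.0 + 4.0j                     # illustrative input with |u| = 5
v = f_ap(u)
# The amplitude is squashed by tanh while the phase is passed through unchanged.
print(abs(v), cmath.phase(v) - cmath.phase(u))
```

The function saturates the amplitude and leaves the phase untouched, unlike the linear activation of Eq. (8) used in the analysis here.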

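For any $0 \le k \le N$, define

$$M_{r_k} \overset{\mathrm{def}}{=} \{(\boldsymbol{r}, \Theta) \in M \mid \Delta r_k = 0\}, \tag{12}$$

$$M_{\theta_k} \overset{\mathrm{def}}{=} \{(\boldsymbol{r}, \Theta) \in M \mid \Delta \theta_k = 0\}. \tag{13}$$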

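Then the learning rules (Eqs. (9) and (10)) yield

$$M_{r_k} = \Big\{(\boldsymbol{r}, \Theta) \in M \ \Big|\ \mathrm{Re}\Big[\overline{\delta}\, z_k \cdot \exp[i\theta_k]\Big] = 0\Big\}, \tag{14}$$

$$M_{\theta_k} = \Big\{(\boldsymbol{r}, \Theta) \in M \ \Big|\ r_k \cdot \mathrm{Im}\Big[\overline{\delta}\, z_k \cdot \exp[i\theta_k]\Big] = 0\Big\}. \tag{15}$$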

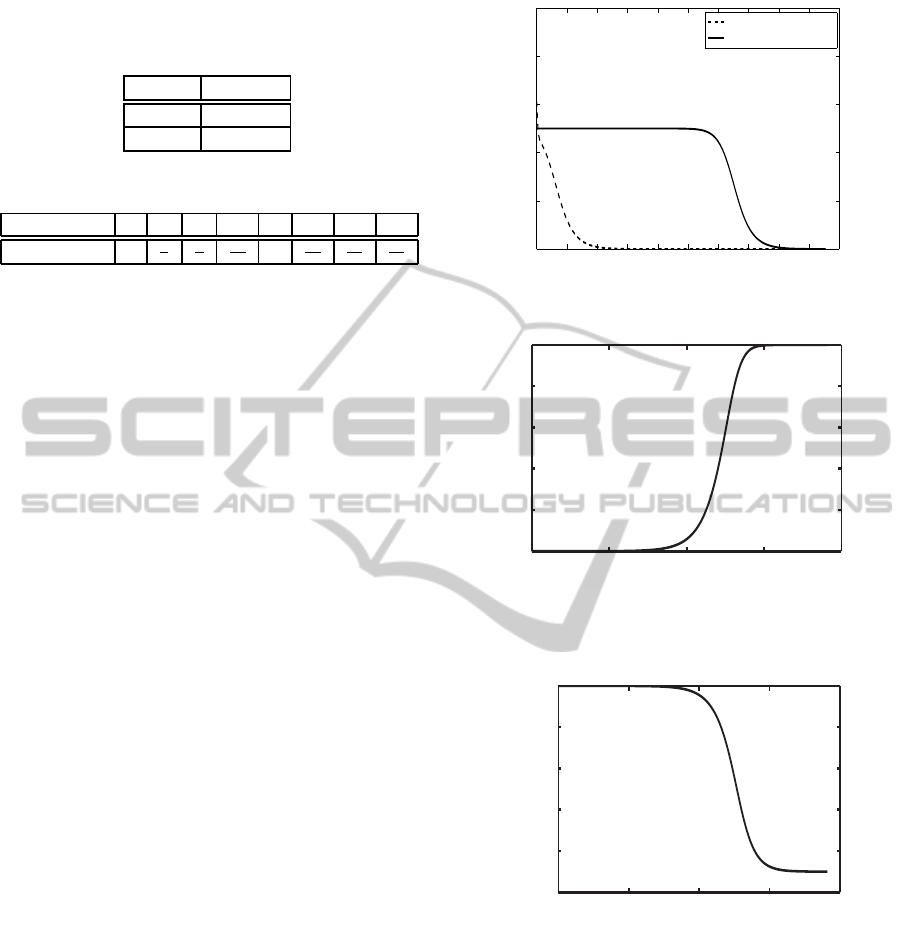

Table 1: Training patterns used in the experiment.

| | Input | Output |
|---|---|---|
| Pattern 1 | 1.0 | 0.5i |
| Pattern 2 | 0.5−0.5i | −0.5+0.5i |
| Pattern 3 | −0.5−0.5i | 1.0−0.5i |

Next, the behavior of learning near singular points is investigated. Near the singular point $r_k = 0$ ($k = 0, \cdots, N$), Eqs. (9) and (10) yield, for $k = 0, \cdots, N$,

$$\Delta r_k = \varepsilon \cdot \mathrm{Re}\Big[\overline{\delta}\, z_k \cdot \exp[i\theta_k]\Big], \tag{16}$$

$$\Delta \theta_k \simeq 0. \tag{17}$$

Therefore, the amplitude $r_k$ ($k = 0, \cdots, N$) changes faster than the phase $\theta_k$ ($k = 0, \cdots, N$), and the state is attracted to the submanifold $\bigcap_{k=0}^{N} M_{r_k}$ (the state $\Delta r_k \simeq 0$ ($k = 0, \cdots, N$) is approached). That is, an equilibrium state $\bigcap_{k=0}^{N} \{M_{r_k} \cap M_{\theta_k}\}$ is reached, and consequently the parameter $(\boldsymbol{r}, \Theta) \in M$ changes only slightly. This is a plateau phenomenon in the learning curve, the same as that in the learning dynamics near singular points of a real-valued neural network, as demonstrated in an earlier study (Amari et al., 2006).
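The difference in update speed between amplitude and phase near $r_k = 0$ can be illustrated numerically. The sketch below evaluates one step of Eqs. (9) and (10) for a single weight with a linear activation; the function name `updates`, the choice of phase $\pi/4$, and the input and teacher values are illustrative assumptions.

```python
import cmath
import math

def updates(r, theta, z, t, eps=0.5):
    """Return (delta_r, delta_theta) from Eqs. (9)-(10) for one weight, linear f_C."""
    delta = t - r * cmath.exp(1j * theta) * z
    g = delta.conjugate() * z * cmath.exp(1j * theta)
    return eps * g.real, -eps * r * g.imag

z, t = 1.0 + 0.0j, 0.5j        # illustrative input and teacher signal
theta = math.pi / 4            # illustrative phase
# Compare a point far from the singular point with one very close to it.
steps = {r: updates(r, theta, z, t) for r in (1.0, 1e-5)}
for r, (d_r, d_theta) in steps.items():
    print(f"r = {r:g}: delta_r = {d_r:.3g}, delta_theta = {d_theta:.3g}")
```

Because the phase update carries the factor $r_k$, it shrinks in proportion to the amplitude, while the amplitude update has no such factor.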

3 EXPERIMENT

In this section, behavior of learning near the singular

points of a polar variable complex-valued neuron is

investigated experimentally.

A polar variable complex-valued neuron with one input is used for simplicity. The activation function $f_C$ is assumed to be linear:

$$f_C(z) = z, \quad z = x + iy. \tag{18}$$

We assume that the threshold $w_0 = r_0 \cdot \exp[i\theta_0] \equiv 0$. That is, the only learnable parameter is the weight $w_1 = r_1 \cdot \exp[i\theta_1]$. The general steepest descent method (Eqs. (9), (10)) was used in learning. The learning rate was set to 0.5. Three training patterns were used, as shown in Table 1. Learning was judged to have converged, and was terminated, when the learning error $(1/2)|t - v|^2$ dropped to 0.0001 or less ($t$ is the teacher signal and $v$ is the actual output value of the polar variable complex-valued neuron).
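The setup described above can be sketched as a short simulation. This is a hedged illustration rather than the author's program: the function name `train`, the cap `max_cycles`, and the convention of counting a cycle as one parameter update are assumptions, so it is not claimed to reproduce the cycle counts of Table 4.

```python
import cmath
import math

def train(z, t, r, theta, eps=0.5, tol=1e-4, max_cycles=10_000):
    """Steepest descent (Eqs. (9)-(10)) for one weight w1 = r*exp(i*theta), threshold 0.

    Returns (cycles, errors); errors[n] is E after n updates, and cycles is
    None if the error never drops to tol within max_cycles updates.
    """
    errors = []
    for n in range(max_cycles + 1):
        delta = t - r * cmath.exp(1j * theta) * z
        err = 0.5 * abs(delta) ** 2
        errors.append(err)
        if err <= tol:
            return n, errors
        g = delta.conjugate() * z * cmath.exp(1j * theta)
        # Simultaneous update: the phase step uses the amplitude r(n), not r(n+1).
        r, theta = r + eps * g.real, theta - eps * r * g.imag
    return None, errors

z, t = 1.0 + 0.0j, 0.5j                           # Pattern 1 of Table 1
near, _ = train(z, t, r=1e-5, theta=math.pi)      # start near the singular point
far, _ = train(z, t, r=1.0, theta=math.pi)        # start away from it
print(near, far)
```

Plotting the returned `errors` list against the cycle index gives a learning curve of the kind discussed in this section.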

At the singular point of the polar variable

complex-valued neuron described above, r

1

= 0 (the

amplitude of the weight w

1

is zero). Therefore, the

initial value of r

1

was set to 0.00001, assuming the

case in which learning was started near singular point

r

1

= 0 (Case 1 of Table 2). Moreover, initial value

r

1

= 1.0 was adopted assuming that learning started

ICAART2014-InternationalConferenceonAgentsandArtificialIntelligence

528

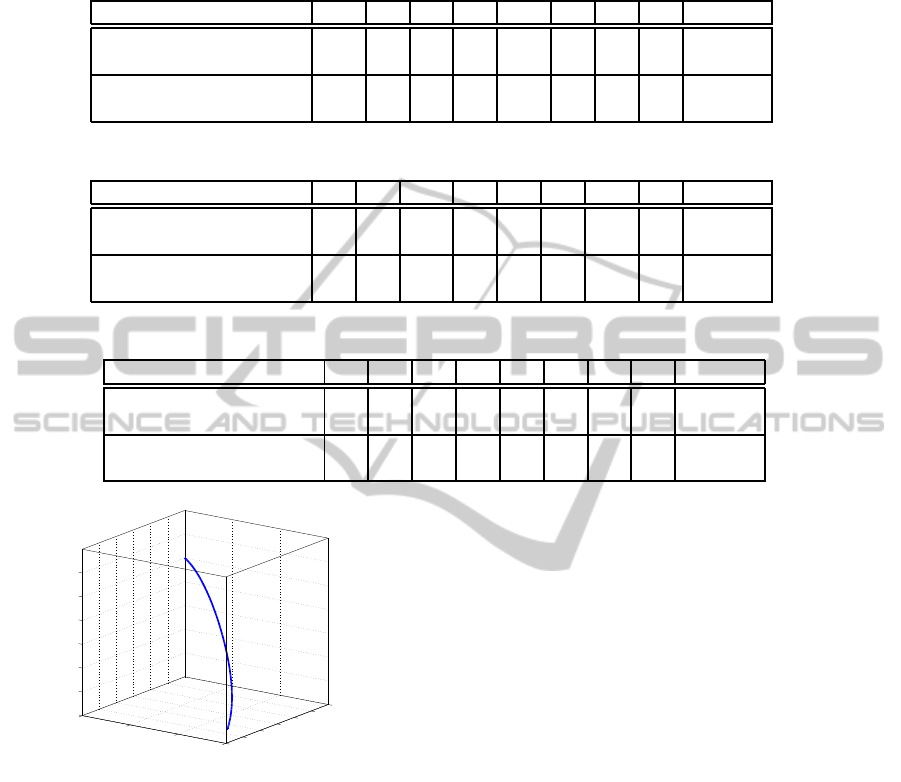

Table 2: Initial values of amplitude of weight. Case 1:

Learning is started from near the singular point. Case 2:

Learning is started from off the singular point.

r

1

Case 1 0.00001

Case 2 1.0

Table 3: Initial values of phase θ

1

of weight w

1

.

Case 1 2 3 4 5 6 7 8

Initial value 0

π

4

π

2

3π

4

π

5π

4

3π

2

7π

4

from a point distant from the singular point r

1

= 0

(Case 2 of Table 2). The initial value of the phase

θ

1

of the weight w

1

was chosen from the eight types

presented in Table 3.

The experimental results are presented in Table 4. For training patterns 1 and 2, the average numbers of training cycles when starting near the singular point were 1.52 times ($\simeq 83.88/55.13$) and 1.71 times ($\simeq 55.75/32.63$) those when starting away from the singular point, respectively. For training pattern 3, the average number of training cycles when starting near the singular point was 1.05 times ($\simeq 34.50/33.00$) that when starting away from the singular point. Thus, on average, learning of the polar variable complex-valued neuron starting near the singular point required about 1.5 to 1.7 times as many cycles as learning starting away from the singular point, or was comparable to it.
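The averages and ratios quoted above follow directly from the per-case cycle counts in Table 4, as the short check below shows. The dictionary layout and the labels `near` and `far` are illustrative choices; the numbers are copied from Table 4.

```python
# Cycle counts for the eight initial phases (Cases 1-8 of Table 3), from Table 4.
table4 = {
    "pattern 1": {"near": [191, 66, 12, 66, 192, 66, 12, 66],
                  "far":  [74, 61, 12, 61, 74, 73, 13, 73]},
    "pattern 2": {"near": [30, 37, 119, 37, 30, 37, 119, 37],
                  "far":  [33, 45, 38, 31, 0, 31, 38, 45]},
    "pattern 3": {"near": [37, 36, 32, 33, 37, 36, 32, 33],
                  "far":  [36, 31, 29, 29, 32, 38, 34, 35]},
}

ratios = {}
for name, rows in table4.items():
    mean_near = sum(rows["near"]) / len(rows["near"])   # start near the singular point
    mean_far = sum(rows["far"]) / len(rows["far"])      # start away from it
    ratios[name] = mean_near / mean_far
    print(f"{name}: near = {mean_near:.3f}, far = {mean_far:.3f}, "
          f"ratio = {ratios[name]:.2f}")
```

The ratios round to 1.52, 1.71, and 1.05, in agreement with the values stated in the text.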

When starting near the singular point, we observed a plateau phenomenon in Case 5 for training pattern 1 (Fig. 1). A standstill in learning occurred from the 1st to the 110th cycle. The transitions of the amplitude $r_1$ and the phase $\theta_1$ of the weight $w_1$ are shown in Figs. 2 and 3, respectively. As shown in Fig. 4, the amplitude changes faster than the phase: $\Delta r_1 > \Delta \theta_1$. In addition, the phase $\theta_1$ changed little up to around the 90th learning cycle: $\Delta \theta_1 \simeq 0$. These observations agree with the theoretical results presented in Section 2.2. The amplitude of the weight was attracted to the singular point 0 from the 1st to around the 100th cycle.

At a glance, Fig. 1 suggests that the error remains completely unchanged and that a plateau occurs from the 1st to the 110th learning cycle. However, the actual data show that this is not the case. The error remains completely unchanged only during learning cycles 1-40, 42-46, 48-49, and 54-55: a plateau occurs in each of these periods. In the other periods, the error decreases, albeit only slightly. Roughly speaking, however, a quasi-plateau occurs from the 1st to the 110th cycle.

The experimental results suggest the following about singular points and the learning dynamics of polar variable complex-valued neurons. (a) When learning is started near the singular point, more training cycles are required on average, in most cases, than when learning is started away from the singular point. (b) A plateau can occur during learning. When the weight is attracted to the singular point, learning tends to get stuck.

Figure 1: A learning curve (Training Pattern 1, Case 5). Horizontal axis: learning cycles (0 to 200); vertical axis: error (0 to 1). Curves: start apart from the singular point; start around the singular point.

Figure 2: Transition of the amplitude $r_1$ of the weight $w_1$ (Training Pattern 1, Case 5, starting from near the singular point). Horizontal axis: learning cycles; vertical axis: amplitude.

Figure 3: Transition of the phase $\theta_1$ of the weight $w_1$ (Training Pattern 1, Case 5, starting from near the singular point). Horizontal axis: learning cycles; vertical axis: phase.


Table 4: Number of training cycles necessary for convergence. Case numbers refer to those presented in Table 3 (the initial value of the phase $\theta_1$ of the weight $w_1$).

(a) Training pattern 1

| Case | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | Average |
|---|---|---|---|---|---|---|---|---|---|
| Start around the singular point ($r_1 = 0.00001$) | 191 | 66 | 12 | 66 | 192 | 66 | 12 | 66 | 83.88 |
| Start apart from the singular point ($r_1 = 1.0$) | 74 | 61 | 12 | 61 | 74 | 73 | 13 | 73 | 55.13 |

(b) Training pattern 2

| Case | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | Average |
|---|---|---|---|---|---|---|---|---|---|
| Start around the singular point ($r_1 = 0.00001$) | 30 | 37 | 119 | 37 | 30 | 37 | 119 | 37 | 55.75 |
| Start apart from the singular point ($r_1 = 1.0$) | 33 | 45 | 38 | 31 | 0 | 31 | 38 | 45 | 32.63 |

(c) Training pattern 3

| Case | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | Average |
|---|---|---|---|---|---|---|---|---|---|
| Start around the singular point ($r_1 = 0.00001$) | 37 | 36 | 32 | 33 | 37 | 36 | 32 | 33 | 34.50 |
| Start apart from the singular point ($r_1 = 1.0$) | 36 | 31 | 29 | 29 | 32 | 38 | 34 | 35 | 33.00 |

Figure 4: Transition of the error with respect to the amplitude $r_1$ and the phase $\theta_1$ of the weight $w_1$ (Training Pattern 1, Case 5, starting from near the singular point). Axes: phase, amplitude, error.

4 CONCLUSIONS

The singularity of a polar variable complex-valued

neuron is investigated in this paper. The following

results are obtained. (a) A polar variable complex-

valued neuron is unidentifiable. That is, there exists a

parameter that does not affect the output value of the

neuron, and as a result one cannot identify its value.

(b) A plateau can occur during learning of a polar

variable complex-valued neuron. In the plateau pe-

riod, the learning error does not decrease. (c) Singular

points (or critical points) degrade the learning speed.

When using polar variable complex-valued neurons,

one should pay attention to these properties.

It is reported that the expectation of generaliza-

tion error in cases where true parameters are uniden-

tifiable is greater than in cases where true parameters

are identifiable in a three-layer real-valued neural net-

work (Fukumizu, 1999). Properties peculiar to sin-

gular points, including whether the generalization er-

ror of a polar variable complex-valued neuron deteri-

orates, are interesting subjects for future study.

ACKNOWLEDGEMENTS

The author would like to give special thanks to the

anonymous reviewers for valuable comments.

REFERENCES

Amari, S., Park, H., and Ozeki, T. (2006). Singularities

affect dynamics of learning in neuromanifolds. Neural

Computation, 18(5):1007–1065.

Cousseau, F., Ozeki, T., and Amari, S. (2008). Dynamics of

learning in multilayer perceptrons near singularities.

IEEE Trans. Neural Networks, 19(8):1313–1328.

Fukumizu, K. (1999). Generalization error of the maximum

likelihood estimation of layered models. In Proc. 1999


Workshop on Information-Based Induction Sciences

(IBIS’99), pages 221–226. (In Japanese).

Hirose, A. (2006). Complex-valued neural networks, Series

on Studies in Computational Intelligence, volume 32.

Springer-Verlag.

Hirose, A. (2013). Complex-valued neural networks: ad-

vances and applications. IEEE Press-Wiley.

Hirose, A., Asano, Y., and Hamano, T. (2004). Mode-

utilizing developmental learning based on coherent

neural networks. In Proc. Int. Conf. on Neural In-

formation Processing (ICONIP2004), pages 116–121,

Berlin. Springer.

Hirose, A., Tabata, C., and Ishimaru, D. (2001). Coherent

neural network architecture realizing a self-organizing

activeness mechanism. In Baba, N., Jain, J. C., and

Howlett, R. J., editors, Knowledge-Based Intelligent

Information Engineering Systems & Allied Technolo-

gies, pages 576–580. IOS Press, Tokyo.

Kawata, S. and Hirose, A. (2003). A coherent optical neu-

ral network that learns desirable phase values in fre-

quency domain by using multiple optical-path differ-

ences. Opt. Lett., 28(24):2524–2526.

Nitta, T. (2008). Complex-valued neural network and

complex-valued back-propagation learning algorithm.

In Hawkes, P. W., editor, Advances in Imaging and

Electron Physics, volume 152, pages 153–221. Else-

vier, Amsterdam, The Netherlands.

Nitta, T. (2009). Complex-valued neural networks: utiliz-

ing high-dimensional parameters. Information Sci-

ence Reference, Pennsylvania.

Nitta, T. (2010). On the singularity of a single complex-

valued neuron. IEICE Trans. Inf. & Syst. D, J93-

D(8):1614–1621. (in Japanese).

Nitta, T. (2013). Local minima in hierarchical structures of

complex-valued neural networks. Neural Networks,

43:1–7.

Wei, H., Zhang, J., Cousseau, F., Ozeki, T., and Amari, S.

(2008). Dynamics of learning near singularities in lay-

ered networks. Neural Computation, 20:813–843.
