Evaluating the Effectiveness of Three Different Course Delivery Methods in Online and Distance Education

Barry Cartwright and Sheri Fabian

Simon Fraser University, Burnaby, Canada

Keywords: Online and Distance Education, Online Lectures, Asynchronous Online Discussion Groups, Educational

Video Games, Online Surveys.

Abstract: Students who completed at least one of three introductory Criminology courses offered through Simon

Fraser University’s Centre for Online and Distance Education (CODE) between May 2013 and April 2014

were invited to participate in an online survey regarding their perceptions of and experiences with these

three fully online courses. The three courses varied substantively from each other in their online format and

pedagogical approaches. The research indicates that students find interactive exercises, educational video

games, online audio-visual instruction (e.g., online lectures or Webcasts) and online quizzes helpful in

understanding course content and preparing for examinations. Results regarding participation in online

discussion groups were mixed, although students feel these groups give them an opportunity to interact with

their peers in the online environment. The survey results have already influenced the format of recently

revised and newly designed CODE courses at the university, and are expected to inform the design of future

courses.

1 INTRODUCTION

This paper reports on an online research survey

regarding student perceptions of and experiences

with three different (fully online) introductory

Criminology courses offered by the Centre for

Online and Distance Education (CODE) at Simon

Fraser University (SFU). All three online courses

were designed by different instructors, offering an

opportunity to investigate different pedagogical

approaches to online education. To illustrate, two of

the courses (CRIM 103 and CRIM 131) made use of

online discussion groups, whereas CRIM 104 did

not. CRIM 131 required an online (tutorial)

presentation, whereas CRIM 103 and 104 did not.

Only CRIM 104 had a series of fully-developed

online lectures, similar to those delivered in a

regular lecture theatre over the course of a semester.

This afforded the researchers the opportunity to

compare and contrast the effectiveness of

asynchronous discussion groups, interactive videos

and educational games, online audio-visual

instruction, and online quizzes.

The research questions addressed in this study

include why students take online and distance

education courses, whether students feel that they

benefit from participation in online discussion

groups, whether they find interactive videos and

educational games to be effective, what benefits they

derive from online audio-video instruction, and

which quiz formats they think provide the best

insight and exam preparation.

2 BACKGROUND

The Centre for Online and Distance Education at

SFU offers 187 online/distance education courses,

including 30 Criminology courses. At the time of the

study, there were 17,572 students enrolled in CODE

courses, 2,819 of whom were enrolled in

Criminology courses. The researchers surveyed the

students enrolled in the CODE versions of CRIM

103, 104 and 131.

CRIM 103, the oldest of the three courses, made

limited use of interactive/educational video games

and on-line audio-visual instruction. The course

consisted of 10 (short) online lectures, and three

interactive/educational video games. CRIM 103

emphasized participation in weekly online

discussions, requiring individual students to

Cartwright, B. and Fabian, S.

Evaluating the Effectiveness of Three Different Course Delivery Methods in Online and Distance Education.

DOI: 10.5220/0006270202680275

In Proceedings of the 9th International Conference on Computer Supported Education (CSEDU 2017) - Volume 1, pages 268-275

ISBN: 978-989-758-239-4

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

facilitate asynchronous class discussions for two out

of eleven weeks (SFU operates on a thirteen-week

semester system, not including the final exam

period). Participation in the weekly online

discussions, plus facilitation of two discussions, was

worth 20 percent of the grade for the course. The

CODE version of CRIM 103 had a weekly online

quiz, but unlike CRIM 104 and 131, had no points

allocated for performance on the quiz.

The CODE version of CRIM 104 consists of 11

online lectures and 10 online tutorials, plus online

modules on how to do the tutorials and how to

prepare for examinations. Each lecture is about an

hour in length and consists of presentation slides with a

voice-over (audio track). Each online tutorial for CRIM

104 consists of a 20-30 minute audio-visual

presentation, an interactive preliminary assessment

exercise (a video game designed to assist with

learning), a series of interactive flash cards that flip

from term to definition, and a timed, 10 minute (5

question) quiz at the end (cf. MacKenzie and

Ballard, 2015). Students can earn one point per

tutorial for attendance and participation, by going

through all four of the required elements and

spending at least 30 minutes doing so, and up to one

point for their performance on a five question quiz at

the end. The CRIM 104 tutorials are worth 20

percent of the overall grade for the course.
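
The tutorial scoring rule described above can be sketched in Python. This is a minimal illustration only: the function names and element labels are hypothetical, and the assumption that the quiz point is awarded in proportion to the number of correct answers is not stated in the course description.

```python
# Hypothetical sketch of the CRIM 104 tutorial scoring rule described above.
# Element labels and function names are illustrative, not taken from CODE.
REQUIRED_ELEMENTS = {"presentation", "assessment_exercise", "flash_cards", "quiz"}
MIN_MINUTES = 30      # minimum time that must be spent in the tutorial
QUIZ_QUESTIONS = 5    # each tutorial ends with a five-question quiz


def tutorial_points(completed, minutes_spent, quiz_correct):
    """Return (participation_point, quiz_point) for a single tutorial."""
    # The participation point requires all four elements and at least 30 minutes.
    participated = REQUIRED_ELEMENTS <= set(completed) and minutes_spent >= MIN_MINUTES
    participation_point = 1.0 if participated else 0.0
    # Assumption: quiz credit is proportional to correct answers (up to one point).
    quiz_point = quiz_correct / QUIZ_QUESTIONS
    return participation_point, quiz_point


def tutorial_grade(tutorials):
    """Sum the points over all tutorials (ten tutorials give at most 20 points)."""
    return sum(sum(tutorial_points(*t)) for t in tutorials)
```

Under these assumptions, a student who completes all ten tutorials in full and answers every quiz question correctly would earn the full 20 points.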

CRIM 131 is broken down at the beginning of

the semester into tutorial groups of 19 students. The

ten tutorials for CRIM 131 each involve two or three

online readings and a 20 minute (10 question) quiz

at the end. Over the course of the semester, each

CRIM 131 student is required to provide an online

presentation to their group, which relates to their

assigned reading, and includes two discussion

questions (cf. Huang, 2013). Four times per

semester, assigned discussants from the presenter’s

group respond to these questions, while the presenter

facilitates discussion (monitored by the teaching

assistants and instructor) (cf. Alammary, Sheard, and

Carbone, 2014). The CRIM 131 tutorials are worth

25 percent of the overall grade for the course—the

quizzes are worth 10 percent, the presentation, 10

percent, and discussion, 5 percent.

Research indicates that online discussion groups

such as those used in CRIM 103 and CRIM 131 can

foster more meaningful interaction, and thus

promote ‘reflective learning,’ as students have more

time to think about what they want to say than in

face-to-face classroom discussions (Comer and

Lenaghan, 2012). The type of interactive

preliminary assessment exercises (educational video

games) employed in CRIM 104 have proven to have

a positive effect on test results, and to be useful to

students when reviewing for mid-term and final

examinations (Grimley, Green, Nilsen, Thompson,

and Tomes, 2011; Means, Toyama, Murphy, Bakia,

and Jones, 2010). Online discussion groups,

interactive video games and online quizzes are all

consistent with ‘active learning,’ and the notion that

students learn better when they assume

responsibility for their own education through

participation, problem-solving and self-assessment

(Handelsman, Miller, and Pfund, 2007).

3 METHODOLOGY

Given that this study targeted three fully online

CODE courses, it was determined that the only way

to survey the students was through an online survey.

Apart from sampling students in the current

semester, the study sampled students enrolled in

these CODE courses in the two preceding semesters,

many of whom were no longer available to fill out a

‘pencil and paper survey’ in person. Also, many of

the CODE students were not regularly on campus, if

they were on campus at all.

3.1 Survey Design

The survey employed software from

fluidsurveys.com, which features ‘skip logic,’ where

required questions are answered by all participants,

while topic-specific questions are presented only to

those participants who trigger them through their

previous responses (Evans et al., 2009; Rademacher

and Lippke, 2007). Students who lacked experience

with a particular course would automatically be

redirected to the next set of questions regarding the

course or courses which they had taken.
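
The skip logic described above can be illustrated with a short Python sketch. It is not the fluidsurveys.com implementation; the block names, their ordering, and the function name are assumptions made purely for illustration.

```python
# Hypothetical sketch of survey skip logic; block names and ordering are invented.
COURSES = ("CRIM 103", "CRIM 104", "CRIM 131")


def questions_for(courses_taken):
    """Return the question blocks that a respondent would be shown."""
    blocks = ["general"]                 # required questions, shown to everyone
    for course in COURSES:
        if course in courses_taken:      # course block triggered by an earlier answer
            blocks.append(course)
    blocks.append("demographics")        # assumed to be shown to everyone as well
    return blocks
```

For example, `questions_for({"CRIM 104"})` skips the CRIM 103 and CRIM 131 blocks entirely, so that respondent never sees questions about courses they did not take.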

The online survey consisted of 96 ‘quantitative’

questions regarding student experiences with and

perceptions of the three online/distance education

courses. Eight of these questions asked why students

took CODE courses, how much effort they felt was

required for CODE courses, how many hours a week

they spent on CODE courses, and how they thought

CODE courses compared to on-campus courses.

Twenty-three were demographic questions

pertaining to age, gender, citizenship, fluency in the

English language, credit hours accumulated, and

grade point average. Only three students were

expected to answer all 96 of the questions, as they

were the only ones who had taken all three courses

(there would be no point in asking students to

answer the 33 course-specific questions on CRIM

131 if they had never taken the course). Similarly, it

was not possible to ask exactly the same set of

questions for each course, as the courses had

different designs (it would be pointless to ask CRIM

104 students to answer questions about online

discussion groups, as CRIM 104 had no such

discussion groups). That said, the questions were

standardized to the greatest extent possible, and

where applicable, were virtually identical for each

course. These ‘quantitative’ questions were

straightforward and simple to answer, by pointing

and clicking on the desired response (Maloshonok

and Terentev, 2016). The data generated by

responses to these questions were exported to and

analyzed in Excel and SPSS.

Advantages to online surveys include efficiency,

cost savings, and ease of data collection and analysis

(Anderson and Kanuka, 2003; Evans et al., 2009).

Reported problems with online surveys include low

response rates and survey abandonment (Adams and

Umbach, 2012; Webber, Lynch, and Oluku, 2013).

Measures were taken to encourage participation and

survey completion, including six modest cash prizes

(drawn randomly) for students who completed the

survey, and a series of three carefully timed

reminders (Best and Krueger, 2004; Joinson, 2005;

Pan, Woodside and Meng, 2013).

Participation in the survey was voluntary and

anonymous. This research was categorized as

“minimal risk” and approved by SFU’s Research

Ethics Board in January 2014.

3.2 Research Sample

Students enrolled in the Summer 2013, Fall 2013

and Spring 2014 offerings of the CODE versions of

CRIM 103, 104 and 131 were invited to participate

in this online survey through emails sent to 450

prospective participants. There were 161 surveys

completed, for a response rate of 35.7 percent, which

is considered reasonable for online surveys (Sax,

Gilmartin, and Bryant, 2003; Sue and Ritter, 2007).

Of the 147 participants who answered the

question on gender, 97 (66%) were female, and 50

(34%) were male. The slight overrepresentation of

females is consistent with known enrolment patterns

in the courses surveyed. Other researchers have also

reported that females are more likely than males to

respond to online surveys (Laguilles, Williams, and

Saunders, 2011; Sax et al., 2003).

The number of first and second year students

was much lower than in the on-campus offerings of

CRIM 103, 104 and 131. Of the 145 participants

who answered this question, there were only 8 first

year students (6%) and 30 second year students

(21%). On the other hand, 57 third year students

(39%) and 34 fourth year students (23%)

participated. Another 16 students (11%) were

unsure of what year they were in, or were in fifth

year, or had already graduated.

Of the 160 participants who responded to the

question, 42 (27%) reported that they were

Criminology majors or minors, and 61 (38%) that

they were intending Criminology majors or minors.

Those taking Criminology as a ‘breadth’ or ‘general

interest’ course (or for other reasons entirely) came

from a wide variety of disciplines, such as business

administration, psychology, economics, education,

communications, political science, and engineering

sciences, to name a few.

Of the 145 participants who elected to provide

information on their cumulative grade point average

(GPA), 23 (16%) reported a GPA of between 3.5

and 4.0 (A-/A), 51 (35%) reported a GPA of

between 3.0 and 3.49 (B/ B+), 50 (35%) a GPA of

between 2.5 and 2.99 (C+/B-), and 18 (12%) a GPA

of between 2 and 2.49 (a C). Only 3 had cumulative

GPAs lower than 2.0. These GPAs differ from those in

first-year on-campus courses, where one might expect to

see more C-s, Ds and Fs. These findings may reflect

a slight ‘selection bias,’ wherein better-than-average

students may have taken an interest in and

participated in the survey.

4 RESEARCH FINDINGS

As noted above, the survey questionnaire consisted

of 96 ‘quantitative’ questions covering student

experiences with and perceptions of the three

online/distance education courses, their experiences

with CODE courses in general, and demographic data.

4.1 Why Do Students Take Online and

Distance Education Courses?

The online survey asked why students took online

and distance education courses (as opposed to

regular on-campus courses), how much effort they

felt was required for online and distance education

courses (as opposed to regular on-campus courses),

how many hours a week they spent on online and

distance education courses, and whether they

thought that online and distance education courses

were the same, better or worse than on-campus

courses. The students who responded to this online

survey had evidently enjoyed a significant degree of

exposure to online and distance education courses

(see Table 1 below), and thus, were well-positioned

to address these questions.

Table 1: Number of CODE courses in which Students

were Currently or Previously Enrolled.

Some faculty members feel that students take

online and distance education courses because their

grades are not high enough to get them into the on-

campus courses, or because students think that

online and distance education courses are easier than

their on-campus equivalents (Driscoll, Jicha, Hunt,

Tichavsky, and Thompson, 2012; Otter et al., 2013).

In the present survey, when asked about their

reasons for taking online and distance education

courses rather than regularly scheduled on-campus

courses, only 21 out of 161 students (13%) indicated

that they did better in online and distance education

courses, while only 12 students (8%) responded that

online and distance education courses were easier

than on-campus courses. The predominant reasons

offered for taking online and distance education

courses were that the courses were more convenient,

or that the on-campus courses conflicted with their

work schedules or other courses that they wanted to

take. Furthermore, when asked to rate the effort

required for online and distance education courses as

compared to on-campus courses, the vast majority

said that online and distance education courses

required at least as much effort as on-campus

courses, if not more effort (see Table 2 below).

Table 2: Effort Required in CODE Courses.
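
The ‘vast majority’ claim can be checked against the counts reported in Table 2. The following Python sketch is offered only as an illustration (the variable names and shortened category labels are hypothetical); it sums the three response categories indicating equal or greater effort.

```python
# Counts as reported in Table 2; the category labels are shortened for illustration.
effort_counts = {
    "Much more effort": 21,
    "More effort": 48,
    "Same effort": 75,
    "Less effort": 16,
    "Much less effort": 1,
}

total = sum(effort_counts.values())
# Respondents who rated CODE courses as requiring at least as much effort.
at_least_as_much = sum(
    effort_counts[label]
    for label in ("Much more effort", "More effort", "Same effort")
)
share = 100 * at_least_as_much / total

print(f"{at_least_as_much} of {total} respondents ({share:.1f}%)")
```

Run as written, this reports 144 of 161 respondents (89.4%).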

4.2 Participation in Asynchronous

Online Discussion Groups

One of the research questions addressed whether

students felt that participation in online discussion

groups improved their learning outcomes, and if not,

whether online discussion at least increased their

enjoyment or sense of engagement in the learning

process. The researchers also asked whether students

felt that the online discussions were the same or

better than discussions in traditional (on-campus)

tutorials. This series of questions applied only to

CRIM 103 and 131, as CRIM 104 did not employ

online discussion groups.

From a purely quantitative perspective, this

could best be described as a ‘split decision.’ Of the

50 CRIM 103 students who responded to the

question, 34 (68%) felt that the asynchronous online

discussion groups were helpful or very helpful in

understanding the course materials, while 14

(28%) did not (two said they did not participate in

the discussion groups). However, only 23 (46%) of

the 50 CRIM 103 students who responded to the

question felt that the online discussions were the

same or better than discussions in traditional (on-

campus) tutorials, while 25 (50%) did not. Of the

49 CRIM 131 students who responded to the

question, 21 (43%) felt that the online discussion

groups were helpful or very helpful in understanding

the course materials, while 25 (51%) did not (three,

or 6%, said they didn’t look at the discussions unless

they were required to present). Also, 24 (49%) of the

49 CRIM 131 students who responded to the

question felt that the online discussions were the

same or better than discussions in traditional (on-

campus) tutorials, while 25 (51%) did not.

4.3 The Effectiveness of Interactive

Videos and Educational Games

Another research question addressed how students

felt about the effectiveness of interactive exercises

and educational video games. This series of

questions applied only to CRIM 103 and 104, as

CRIM 131 did not employ any interactive

preliminary exercises or educational video games.

There were only three online exercises or

‘games’ employed in CRIM 103. Of the 50 students

who responded to the question, 33 (66%) felt that

the CRIM 103 exercises or games were either

helpful or very helpful in understanding the course

materials, while 34 (68%) again felt that they were

either helpful or very helpful in preparing for

examinations. Remarkably, 11 out of the 50

respondents (22%) said that they did not do the

CRIM 103 exercises or games at all, perhaps

because doing the CRIM 103 exercises or games had

no influence on the students’ grades, whereas doing

the interactive preliminary assessment exercises and

Table 1

| Number of Courses | Currently Enrolled: Count | Currently Enrolled: Percentage | Previously Enrolled: Count | Previously Enrolled: Percentage |
| --- | --- | --- | --- | --- |
| None | 68 | 43% | 9 | 6% |
| 1 | 58 | 36% | 30 | 19% |
| 2 to 3 | 30 | 19% | 63 | 39% |
| 4 to 5 | 3 | 2% | 35 | 22% |
| 6 to 9 | 1 | 1% | 18 | 11% |
| 10 or more | 0 | 0% | 5 | 3% |
| Total | 160 | 100% | 160 | 100% |

Table 2

| Amount of Effort Required | Count | Percentage |
| --- | --- | --- |
| Much more effort required for CODE courses | 21 | 13% |
| More effort required for CODE courses | 48 | 30% |
| Same effort required for CODE/on campus courses | 75 | 47% |
| Less effort required for CODE courses | 16 | 10% |
| Much less effort required for CODE courses | 1 | 1% |
| Total | 161 | 100% |

interactive flash cards for CRIM 104 counted toward

the 10% participation grade.

Of the 59 CRIM 104 students who responded to

the question, 54 (92%) felt that the interactive

preliminary assessment exercises were either helpful

or very helpful in understanding the course

materials, while 50 (85%) felt that they were either

helpful or very helpful in preparing for

examinations. When asked about the interactive

flash cards used in CRIM 104, 50 (86%) of the 58

students who answered this question felt that the

interactive flash cards were either helpful or very

helpful in understanding the course materials, while

51 (88%) felt that they were either helpful or very

helpful in preparing for examinations.

4.4 The Benefits of Online Video

Instruction

The next research question addressed benefits that

students derived from online audio-visual instruction

designed specifically for the course (e.g., lectures,

mini-lectures, or Webcasts). This series of questions

applied only to CRIM 103 and 104, as at the time of

the survey, CRIM 131 did not employ any online

audio-visual instruction designed specifically for the

course.

Of the 51 students who responded to the

question, 35 (69%) said they watched/listened to all

10 of the CRIM 103 online lectures, while 8 (16%)

watched most of the lectures, and 7 (14%) watched

about half of the lectures. Of those, 37 (73%) felt

that the CRIM 103 online lectures were either

helpful or very helpful in understanding the course

materials, while 33 (65%) felt that they were either

helpful or very helpful in preparing for

examinations. That said, 13 (26%) felt that the

CRIM 103 online lectures were either not very

helpful or not helpful at all in understanding the

course materials, while 17 (34%) felt that they were

not very helpful or not helpful at all in preparing for

examinations.

In contrast, of the 53 students who responded to

the question, 40 (75.5%) said they watched/listened

to all 12 of the CRIM 104 online lectures, while 9

(17%) watched most of the lectures, and 4 (7.5%)

watched half of the lectures or less. Of those, 47

(88.7%) felt that the CRIM 104 online lectures were

either helpful or very helpful in understanding the

course materials, while 46 (86.8%) felt that they

were either helpful or very helpful in preparing for

examinations. Only 5 students (9.4%) felt that the

CRIM 104 online lectures were either not very

helpful or not helpful at all in understanding the

course materials, while 6 (11.3%) felt they were not

very helpful or not helpful at all in preparing for

examinations.
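
The contrast between the two courses can be expressed as a difference in percentage points. The Python sketch below uses the counts reported above for helpfulness in understanding the course materials; it is a descriptive comparison only, not a significance test, and the names used are illustrative.

```python
# Respondents rating the online lectures helpful or very helpful in
# understanding the course materials: (count, number of respondents).
lectures = {"CRIM 103": (37, 51), "CRIM 104": (47, 53)}


def pct(count, n):
    """Convert a count into a percentage of n respondents."""
    return 100 * count / n


shares = {course: pct(count, n) for course, (count, n) in lectures.items()}
# Difference in percentage points between the two courses.
gap = shares["CRIM 104"] - shares["CRIM 103"]

for course, share in shares.items():
    print(f"{course}: {share:.1f}%")
print(f"Difference: {gap:.1f} percentage points")
```

On these figures, the CRIM 104 lectures were rated helpful by a share roughly 16 percentage points higher than the CRIM 103 lectures.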

4.5 Which Quiz Formats Provide the

Best Insight and Exam

Preparation?

The final research question addressed the issue of

which quiz format (or formats) the students found

offered them the best insight into whether or not

they had met the learning objectives.

The online quizzes for CRIM 103 consisted of

five questions. Of the 49 students who responded to

the question about online quizzes, 42 (86%) felt that

the CRIM 103 quizzes were either helpful or very

helpful in understanding the course materials, while

40 (82%) felt that they were either helpful or very

helpful in preparing for examinations. The online

quizzes in CRIM 103 counted for nothing toward the

overall grade for the course, yet 40 out of 49

students who responded to the question (82%) felt

that the value of the quizzes was either reasonable or

very reasonable based on the amount of work

required. A handful of students complained about

the quiz not being worth anything, and/or about the

five minute time limit, saying that they felt rushed.

The quizzes for CRIM 104 consist of five

multiple choice or five true-false questions (or a

combination of five multiple choice and true-false

questions), with a ten minute time limit. Of the 50

CRIM 104 students who responded to the question,

46 (92%) felt that the quizzes were either helpful or

very helpful in understanding the course materials,

while 46 (92%) again felt that they were either

helpful or very helpful in preparing for

examinations. Moreover, 46 (92%) found that the

value of the quizzes was either reasonable or very

reasonable based on the amount of work required.

The quizzes for CRIM 131 consist of 10

questions, with a 20 minute time limit. CRIM 131

students were not asked about the role of quizzes in

exam preparation because the online readings

supplement the primary course textbook and

students are not re-tested on these readings in

examinations. Of the 49 CRIM 131 students who

responded to the question about online quizzes, 34

(69%) felt that the quizzes were either helpful or

very helpful in understanding the course materials,

while 15 (31%) felt that they were either not very

helpful or not helpful at all. That said, 35 out of the

49 students (92%) found that the value of the CRIM

131 quizzes was either reasonable or very reasonable

based on the amount of work required.

5 CONCLUSIONS

As predicted by the literature, this study found that

students take online and distance education courses

for a variety of reasons, and not necessarily with the

expectation that these courses will be easier or

require less effort than on-campus courses (cf.

Gaytan and McEwen, 2007; Nonis and Fenner,

2012; Pastore and Carr-Chellman, 2009). Most

participants said that they took online and distance

education courses because they were more

convenient, or because on-campus courses

conflicted with their work schedules or other courses

that they wanted to take. Moreover, the vast majority

said that online and distance education courses

required at least as much effort as on-campus

courses, if not more effort.

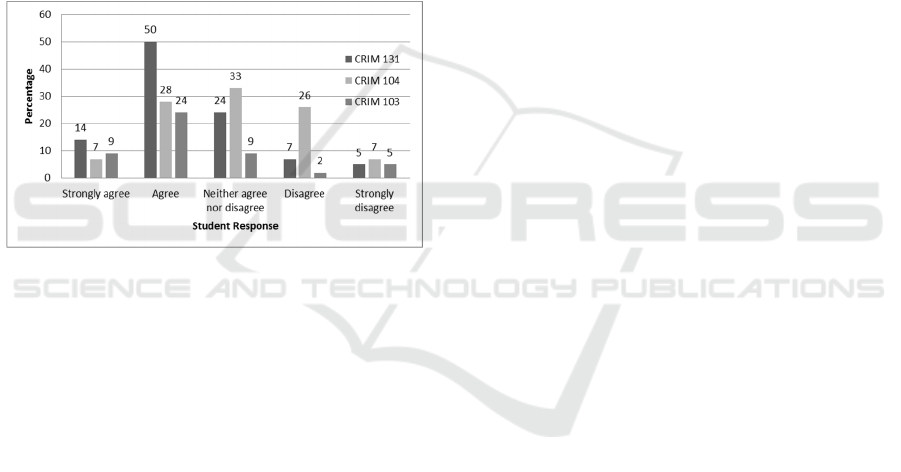

Figure 1: Opportunity for Interaction with Peers.

The results were mixed with respect to student

perceptions of the value of asynchronous online

discussion groups when it came to understanding

course materials and preparing for examinations.

However, one of the primary objectives of such

discussion groups is to give students the opportunity

to interact with their peers in the online environment

(cf. 2010; Webb Boyd, 2008). When presented with

the statement: ‘There were sufficient opportunities

to interact with peers in this CRIM 103 course,’ 33

out of 49 respondents (67.3%) agreed or strongly

agreed with this proposition, while only 16 (32.7%)

strongly disagreed, disagreed, or neither agreed nor

disagreed. When presented with the same statement,

31 out of 49 CRIM 131 respondents (61%) agreed or

strongly agreed with the proposition, while only 19

(39%) strongly disagreed, disagreed, or neither

agreed nor disagreed (see Figure 1 above). These

asynchronous online discussion groups therefore

served the purpose for which they were intended.

The research indicates that interactive exercises

and educational video games can be effective

teaching and learning tools. However, students

appear less inclined to use them if they do not

perceive an immediate advantage when it comes to

their grade for the course. This serves to illustrate

the importance of assigning a grade value (however

small) to online modules in which students are

expected to participate (Dennen, 2008; Leflay and

Groves, 2013; Rovai, 2003).

It is apparent that the type of online audio-visual

instruction employed in CRIM 103 and 104 has its

place in online and distance education. The higher

percentage of students who felt that the CRIM 104

online lectures were helpful or very helpful in

understanding the course materials and preparing for

examinations is possibly because the course

instructor/supervisor personally prepared and

recorded all of the lectures (Kim, Kwon, and Cho,

2011; Mandernach, 2009), whereas most of the

CRIM 103 design work was left to the teaching

assistants. It is almost axiomatic to say that the more

thought, time and effort invested by the designer, the

more likely it is that students will find online audio-

visual instruction interesting and helpful.

Evidently, students do not object to being tested

online. In fact, they find that it helps them to

understand the course content and prepare for formal

examinations. If there is a lesson to be learned in

this regard, it is that students prefer shorter (five

question) quizzes, with a longer (10 or 20 minute)

time limit. Also, students indicated (in their written

comments) that they preferred quiz questions that

closely addressed subject areas that were likely to be

tested on formal examinations.

Overall, when asked to rate the three different

CODE courses (see Figure 2 below), and asked

whether they would recommend the course in

question to someone else, students indicated a clear

preference for CRIM 104, with ratings for CRIM

103 and 131 being roughly the same. This suggests

that students have an appetite for online and distance

education courses that maximize the use of

interactive/educational video games and on-line

audio-visual instruction (cf. Kim et al., 2011;

MacKenzie and Ballard, 2015; Mandernach, 2009).

With the above in mind, a new CODE version of

CRIM 101 (designed in the aftermath of this study)

adopted a similar format to that employed in CRIM

104, with weekly online lectures plus a series of ten

online tutorials, each consisting of an audio-visual

presentation, an interactive preliminary assessment

exercise, a series of interactive flash cards, and a

timed, 10 minute (5 question) quiz at the end. One

major difference between the CODE version of

CRIM 104 and the new CODE version of CRIM 101

is that the audio-visual lectures were captured from

Figure 2: Student Ratings of Overall Experience.

an on-campus iteration of the course, thus giving the

online lectures more of a “live” feel. The CODE

version of CRIM 131 was also re-designed

following the completion of the study; now, it too

includes live audio-visual lectures from an on-

campus iteration of the course. Other recent

revisions to the CODE version of CRIM 131 include

alterations to the readings, instructions, and course

expectations. Future research will survey students in

the newly designed CODE version of CRIM 101 and

the recently re-designed CODE version of CRIM

131, to see whether insights gained from this present

study have translated into enhanced learning

experiences for students in these newer courses.

As always when dealing with student surveys of

this nature, we need to ask ourselves whether student

satisfaction should be conflated with student

learning. Students may simply express a preference

for courses that allow them to ‘participate’

anonymously, because they wish to avoid having to

engage in scholarly discourse, or to avoid having

their thinking challenged by their peers (Bolliger and

Erichsen, 2013). Indeed, as Kirkwood and Price

(2013) point out in their review of the extant

literature on technology-enhanced learning in higher

education, there is no clear or consistent evidence

that these new learning technologies actually

enhance student learning. That said, present-day

online and distance education courses by definition

have to be seen to be maximizing the use of these

online learning technologies, or in the alternative,

run the risk of being viewed as out-of-date, or worse

yet, obsolete. In any event, it is difficult to deny the

attractiveness of these online learning technologies,

especially in the face of such strong student

endorsement.

ACKNOWLEDGEMENTS

This study was funded in part by a Teaching and

Learning Development Grant from the Institute for

the Study of Teaching and Learning in the

Discipline at Simon Fraser University in Burnaby,

Canada. We would also like to thank the Centre for

Online and Distance Education at SFU for allowing

us to conduct this study. In addition, we value the

work of our research assistants, Aynsley Pescitelli

and Rahul Sharma, and the assistance of the

Teaching and Learning Centre at SFU.

REFERENCES

Adams, J. D., and Umbach, P. D. (2012). Nonresponse

and online student evaluations of teaching:

Understanding the influence of salience, fatigue and

academic environments. Research in Higher

Education, 53(5), 576–591.

https://doi.org/10.1007/s11162-011-9240-5.

Alammary, A., Sheard, J., and Carbone, A. (2014).

Blended learning in higher education: Three different

design approaches. Australasian Journal of

Educational Technology, 30(4), 440–454.

https://doi.org/10.14742/ajet.693.

Anderson, T., and Kanuka, H. (2003). e-Research:

Methods, Strategies, and Issues. Boston: Pearson

Education, Inc.

Best, S. J., and Krueger, B. S. (2004). Internet Data

Collection. Thousand Oaks, CA: Sage Publications.

Bolliger, D. U., and Erichsen, E. A. (2013). Student

Satisfaction with Blended and Online Courses Based

on Personality Type. Canadian Journal of Learning

and Technology, 39(1), 1–23.

Comer, D. R., and Lenaghan, J. A. (2012). Enhancing

Discussions in the Asynchronous Classroom: The

Lack of Face-to-Face Interaction Does not Lessen the

Lesson. Journal of Management Education, 37(2),

261–294. https://doi.org/10.1177/1052562912442384.

Dennen, V. P. (2008). Looking for evidence of learning:

Assessment and analysis methods for online discourse.

Computers in Human Behavior, 24(2), 205–219.

https://doi.org/10.1016/j.chb.2007.01.010.

Driscoll, A., Jicha, K., Hunt, A. N., Tichavsky, L., and

Thompson, G. (2012). Can online courses deliver in-

class results? A comparison of student performance

and satisfaction in an online versus a face-to-face

introductory sociology course. Teaching Sociology,

40(4), 312–331.

https://doi.org/10.1177/0092055X12446624.

Evans, R. R., Burnett, D. O., Kendrick, O. W., MacRina,

D. M., Synder, S. W., Roy, J. P. L., and Stephens, B.

C. (2009). Developing Valid and Reliable Online

Survey Instruments Using Commercial Software

Programs. Journal of Consumer Health on the

Internet, 13(1), 42–52.

https://doi.org/10.1080/15398280802674743.

Gaytan, J., and McEwen, B. C. (2007). Effective online

instructional and assessment strategies. American

Journal of Distance Education, 21(3), 117–132.

https://doi.org/10.1080/08923640701341653.

Grimley, M., Green, R., Nilsen, T., Thompson, D., and

Tomes, R. (2011). Using Computer Games for

Instruction: The Student Experience. Active Learning

in Higher Education, 12(1), 45–56.

https://doi.org/10.1177/1469787410387733.

Handelsman, J., Miller, S., and Pfund, C. (2007). Scientific

Teaching. Englewood, CO: Roberts and Company.

Huang, H. (2013). E-reader and e-discussion: EFL

learners’ perceptions of an e-book reading program.

Computer Assisted Language Learning, 26(3), 258–

281. https://doi.org/10.1080/09588221.2013.656313.

Joinson, A. N. (2005). Internet Behaviour and the Design

of Virtual Methods. In C. Hine (Ed.), Virtual Methods:

Issues in Social Research on the Internet (Vols. 1–2,

pp. 21–34). Oxford: Berg.

Kim, J., Kwon, Y., and Cho, D. (2011). Investigating

factors that influence social presence and learning

outcomes in distance higher education. Computers and

Education, 57(2), 1512–1520.

https://doi.org/10.1016/j.compedu.2011.02.005.

Kirkwood, A., and Price, L. (2013). Technology-enhanced

learning and teaching in higher education: what is

“enhanced” and how do we know? A critical literature

review. Learning, Media and Technology, 39(1), 6–36.

https://doi.org/10.1080/17439884.2013.770404.

Laguilles, J. S., Williams, E. A., and Saunders, D. B.

(2011). Can Lottery Incentives Boost Web Survey

Response Rates? Findings from Four Experiments.

Research in Higher Education, 52(2), 537–553.

https://doi.org/10.1007/s11162-010-9203-2.

Leflay, K., and Groves, M. (2013). Using online forums

for encouraging higher order thinking and “deep”

learning in an undergraduate Sports Sociology

module. Journal of Hospitality, Leisure, Sport and

Tourism Education, 13, 226–323.

https://doi.org/10.1016/j.jhlste.2012.06.001.

MacKenzie, L., and Ballard, K. (2015). Can Using

Individual Online Interactive Activities Enhance Exam

Results? MERLOT Journal of Online Learning and

Teaching, 11(2), 262–266.

Maloshonok, N., and Terentev, E. (2016). The impact of

visual design and response formats on data quality in a

web survey of MOOC students. Computers in Human

Behavior, 62, 506–515.

https://doi.org/10.1016/j.chb.2016.04.025.

Mandernach, B. J. (2009). Effect of instructor-

personalized multimedia in the online classroom. The

International Review of Research in Open and

Distance Learning, 10(3), 1–19.

Means, B., Toyama, Y., Murphy, R., Bakia, M., and Jones,

K. (2010). Evaluation of Evidence-Based Practices in

Online Learning: A Meta-Analysis and Review of

Online Learning Studies. Washington, D.C.: U.S.

Department of Education. Retrieved from

www.ed.gov/about/offices/list/opepd/ppss/reports.html.

Nguyen, T. (2015). The Effectiveness of Online Learning:

Beyond No Significant Difference and Future

Horizons. MERLOT Journal of Online Learning and

Teaching, 11(2), 309–319.

Nonis, S. A., and Fenner, G. H. (2012). An exploratory

study of student motivations for taking online courses

and learning outcomes. Journal of Instructional

Pedagogies, 7, 2–13.

Otter, R. R., Seipel, S., Graeff, T., Alexander, B., Boraiko,

C., Gray, J., and Sadler, K. (2013). Comparing student

and faculty perceptions of online and traditional

courses. The Internet and Higher Education, 19, 27–

35. https://doi.org/10.1016/j.iheduc.2013.08.001.

Pan, B., Woodside, A. G., and Meng, F. (2013). How

Contextual Cues Impact Response and Conversion

Rates of Online Surveys. Journal of Travel Research,

53(1), 58–68.

https://doi.org/10.1177/0047287513484195.

Pastore, R., and Carr-Chellman, A. (2009). Motivations

for residential students to participate in online courses.

Quarterly Review of Distance Education, 10(3), 263–

277.

Rademacher, J. D., and Lippke, S. (2007). Dynamic

Online Surveys and Experiments with the Free Open-

Source Software dynaQuest. Behavior Research

Methods, 39(3), 415–426.

https://doi.org/10.3758/BF03193011.

Rovai, A. P. (2003). Strategies for grading online

discussions: Effects on discussions and classroom

community in internet-based university courses.

Journal of Computing in Higher Education, 15(1), 89–

107. https://doi.org/10.1007/BF02940854.

Sax, L. J., Gilmartin, S. K., and Bryant, A. N. (2003).

Assessing Response Rates and Nonresponse Bias in

Web and Paper Surveys. Research in Higher

Education, 44(4), 409–432.

http://www.jstor.org/stable/40197313.

Sue, V. M., and Ritter, L. A. (2007). Conducting Online

Surveys. Thousand Oaks, CA: Sage Publications.

Webber, M., Lynch, S., and Oluku, J. (2013). Enhancing

Student Engagement in Student Experience Surveys.

Educational Research, 55(1), 71–86.

https://doi.org/10.1080/00131881.2013.767026.
