Agent-based Semantic Negotiation Protocol for Semantic Heterogeneity Solving in Multi-agent System

Dhouha Ben Noureddine¹,², Atef Gharbi¹ and Samir Ben Ahmed²

¹ LISI, National Institute of Applied Sciences and Technology, INSAT, University of Carthage, Tunis, Tunisia

² FST, University of El Manar, Tunis, Tunisia

Keywords:

Multi-agent System, Ontology, Semantic Heterogeneity, Semantic Negotiation Protocol, Ontology Alignment.

Abstract:

In this article, we propose an interactive agent model for open and heterogeneous multi-agent systems (MAS). Our model allows agents to communicate autonomously with each other despite semantic heterogeneity. The communication problem can be expressed as a calculation that compares the abilities acquired by the receiver agent with the message sent by the sender agent. Hence, semantic heterogeneity should be resolved during message processing. The agent can autonomously enrich its own ontology by using a semantic negotiation approach in several steps. First, we develop a model using an ontology alignment framework. Then, we enhance a similarity measure to select the most similar pairs by combining psychological knowledge about the relevance, the resemblance, and the non-symmetry of similarity. Finally, we suggest a protocol for supporting semantic negotiation. To illustrate our approach, we implement a simple benchmark production system on JADE.

1 INTRODUCTION

In a MAS, agents need to communicate in order to solve tasks and accomplish the goals assigned to them. The features and behavior of agents make the system comply with a set of external constraints. In an open MAS, new agents enter the system and bring new features with them, while other agents leave the system and take with them capabilities that the MAS requires; these events are not known a priori by the other agents of the system.

Therefore, in the communication phase, a receiver agent handling different data models should understand demands formulated by a semantically heterogeneous sender agent. The problem of managing heterogeneity among various information resources is growing in interactive MAS, which requires communication protocols to be adapted. A standard approach to this problem lies in the use of ontologies for data description, and various solutions have consequently been proposed to deal with this situation.

This leads us to propose a reflective agent model that solves the semantic heterogeneity problem using two techniques: the calculation of a similarity measure and a semantic negotiation protocol. Our agents communicate with each other: when an agent asks another agent about its capabilities, it is able to understand the answer from the definitions of the system. In our approach, each agent must have its own ontology in which it is defined.

In this paper we focus on one kind of semantic technology, namely ontology alignment, as presented by (Shvaiko and Euzenat, 2013), which is assumed to be accessible to agents. Since the alignment between ontologies is incomplete, agents must handle queries that include terms not defined in their respective ontologies, and the semantic heterogeneity should be resolved during message processing. Solving this problem is therefore no longer a matter of the alignment level alone: it is necessary to define a higher level of message interpretation and appropriate communication protocol mechanisms. Once the translation is done, the receiver agent evaluates the degree of understanding of the translated query using a system of thresholds such as those defined by (Maes, 1994). This assessment relies on the reflective capabilities of agents, which are able to analyse their own code and to be aware of the capabilities they have at a given time.

Moreover, our agent model is based on the semantic negotiation technique of (Morge and Routier, 2007), and our protocol can be seen as an extension of the Foundation for Intelligent Physical Agents FIPA-request¹ protocol. In a semantic negotiation context, we face situations similar to human discussions, in which human beings deal with terms that are not mutually understandable by negotiating the semantics of these terms (Comi et al., 2015).

Noureddine, D., Gharbi, A. and Ahmed, S.

Agent-based Semantic Negotiation Protocol for Semantic Heterogeneity Solving in Multi-agent System.

DOI: 10.5220/0006344602470254

In Proceedings of the 12th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE 2017), pages 247-254

ISBN: 978-989-758-250-9

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

This paper introduces a simple benchmark production system, developed as a robot-based application, which is used throughout the article to illustrate our contribution. We implement the benchmark production system on a free platform, the JADE (Java™ Agent DEvelopment) Framework² (Bellifemine et al., 2007). JADE is a platform for developing MAS in compliance with the FIPA specifications (Salvatore and Vincenzo, 2009), (Chuan-Jun, 2011), (Bordini, 2006).

The remainder of this paper is organized as follows. Section 2 presents our agent model and describes the ontology model, the alignment service used and our semantic similarity measure. Section 3 outlines the negotiation strategy, the speech acts in FIPA-ACL and the communication protocol. Section 4 uses a benchmark to explain the message exchange between agents and clarify our contribution. Section 5 provides the main conclusions.

2 AGENT REPRESENTATION

We propose a reflective agent model for open and heterogeneous MAS that allows dynamic interaction in an environment at run-time. Our model is able to modify messages at run-time during the communication phase, and the agent is able to produce the list of its capabilities at the current time. In this context, when an agent A wants to communicate with an agent B, it uses its own ontology to build its messages; B therefore receives a message formulated in the terms of A's ontology, which does not allow B to interpret it directly. After receiving the message from A, B compares the received request with its own capacities at the current time. As a technique, we use the similarity measure proposed by (Shvaiko and Euzenat, 2013) to determine whether two concepts are semantically similar, i.e., whether they share common properties and attributes. The interest of this measure is that it leverages all aspects of the ontologies and retains the maximum similarity. It therefore immediately offers a sound basis for a distance measure. We improve this measure by turning it into an asymmetric similarity in order to better capture human judgements and produce better nearest neighbors.

¹ http://www.fipa.org/specs/fipa00026/SC00026H.html

² http://jade.tilab.com/

To make an agent reflective, we need to represent the agent's state during its own execution and to manipulate it. To do so, we adopt the Alignment API³ of (David et al., 2011) to align ontologies. The API implementation itself carries little overhead: alignments of thousands of terms (from large thesauri) have been handled without slowdown. Furthermore, the API is used to deal with instances of larger ontologies; for this purpose, it may be used with a specific tool based directly on secondary memory storage and indexing for dealing with instances, dropping that support from the API.

We work on alignment in a semantically heterogeneous environment and deliberately set aside the problems of syntactical, terminological and lexical heterogeneity. We assume that all agents use the same syntax for messages.

In this section, we present our ontology model, then describe the Alignment API used and its role in our agent model.

2.1 Ontology Model

An ontology $O$ is described formally as a 6-tuple $\{C, P, H_c, H_p, A, I\}$, where $C$ is a set of concepts, $P$ is a set of properties, $H_c$ is a set of hierarchical relationships between concepts and sub-concepts, $H_p$ is a set of hierarchical relationships between properties and sub-properties, $A$ is a set of axioms and $I$ is a set of instances of the concepts $C$ and of the properties $P$.
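As a minimal sketch of how this 6-tuple might be held in memory, the following Python dataclass stores each component as a plain set or dictionary. The field names, the pair encoding of $H_c$ and $H_p$, and the `superconcepts` helper are illustrative assumptions, not part of the paper's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Ontology:
    """Illustrative container for the 6-tuple {C, P, H_c, H_p, A, I}."""
    concepts: set = field(default_factory=set)            # C
    properties: set = field(default_factory=set)          # P
    concept_hierarchy: set = field(default_factory=set)   # H_c as (sub, super) pairs
    property_hierarchy: set = field(default_factory=set)  # H_p as (sub, super) pairs
    axioms: set = field(default_factory=set)              # A
    instances: dict = field(default_factory=dict)         # I: instance -> concept or property

    def superconcepts(self, concept):
        """Return every ancestor of `concept`, following H_c transitively."""
        found, frontier = set(), {concept}
        while frontier:
            parents = {sup for (sub, sup) in self.concept_hierarchy if sub in frontier}
            frontier = parents - found   # only explore ancestors not seen yet
            found |= parents
        return found


# Hypothetical fragment of a robot ontology, for illustration only.
onto = Ontology(
    concepts={"Conveyor", "Actuator", "Device"},
    concept_hierarchy={("Conveyor", "Actuator"), ("Actuator", "Device")},
)
ancestors = onto.superconcepts("Conveyor")
```

Keeping the hierarchies as explicit pairs makes it straightforward to hand the same data to an alignment step later.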

2.2 Ontology Alignment API

Ontology alignment requires a semantic similarity measure over the ontologies' concepts and produces the alignments among them. It aims to identify concepts that can be considered similar, regardless of the type of alignment used; it can support tasks such as query interpretation, message translation or obtaining bridge axioms between two ontologies.

The ontology alignment problem can be described in one sentence, as defined by (David et al., 2011): “Given two ontologies $O_A$ and $O_B$, each describing a set of discrete entities (which can be classes, properties, rules, predicates, etc.), find the relationships (e.g., equivalence or subsumption) holding between these entities.” In the API description, (David et al., 2011) also define other parameters such as the alignment level, the arity and the set of correspondences.

³ The Alignment API is a Java API for manipulating alignments in the Alignment format and EDOAL (Expressive and Declarative Ontology Alignment Language).


We can define the ontology alignment between two concepts of two different ontologies $O_A$ and $O_B$ as a 4-tuple $align = \{e_1, e_2, n, R\}$:

• $e_1$ is an entity (class, relationship or instance) of the ontology $O_A$ that should be aligned ($e_1 \in O_A$);

• $e_2$ is an entity (class, relationship or instance) of the ontology $O_B$ that should be aligned ($e_2 \in O_B$);

• $R$ is the correspondence relationship (e.g. equivalence, etc.) between $e_1$ and $e_2$;

• $n \in [0, 1]$ is the validity degree of this correspondence.

We integrate the Alignment API (David et al., 2011) into our model to support our contribution. In the rest of this article, we denote by $P(S)$ the set of subsets of $S$. We define $M(O_A, O_B)$ as the set of mappings between the ontology $O_A$ and the ontology $O_B$. By extension, if $S$ is a set of entities (classes, relationships or instances) of the ontology $O_A$, then we define $M(S, O_B)$ as the set of mappings corresponding to the entities $S$ of ontology $O_A$ in ontology $O_B$.

2.3 Translation Data

To use one ontology in place of another, we must first find it. A translation program must allow an agent to locally transform a message expressed in terms of an ontology $O_A$ into a new message expressed in terms of an ontology $O_B$. That is, when an agent A sends a request to an agent B, B must first translate the request in terms of its own capacities as they exist in its ontology $O_B$. Following the work of (Laera et al., 2007), we consider that the MAS has access to an ontology alignment service (subsection 2.2), which helps to reformulate the propositional content of a message, i.e., to translate it into the terms of another ontology (that of the receiver agent). In this section, we explain how agent B uses this ontology alignment service to translate the content of the request received from agent A into the terms of its ontology $O_B$. Let $S_A$ be the message sent by A to B. By nature, $S_A \subset P(O_A)$ (i.e. $S_A$ is a set of concepts of ontology $O_A$). The alignment service then builds a set of mappings $M(S_A, O_B)$. The set $S_B$, which is the translation of $S_A$ in $O_B$, is defined as the set of concepts of $O_B$ for which there exists an alignment $align \in M(S_A, O_B)$ connecting them to one of the concepts of $S_A$.
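The translation step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Correspondence` class mirrors the 4-tuple $\{e_1, e_2, n, R\}$, the alignment is given as a plain list rather than obtained from the Alignment API, and the sender-side terms (`moveBelt3`, `beltLeft3`, `rotateArm`) are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Correspondence:
    """One alignment cell {e1, e2, n, R} between O_A and O_B."""
    e1: str        # entity of O_A
    e2: str        # entity of O_B
    n: float       # validity degree in [0, 1]
    relation: str  # R, e.g. "equivalence"


def mappings_for(s_a, alignment):
    """M(S_A, O_B): the correspondences whose source entity belongs to S_A."""
    return [c for c in alignment if c.e1 in s_a]


def translate(s_a, alignment):
    """S_B: concepts of O_B linked to at least one concept of S_A."""
    return {c.e2 for c in mappings_for(s_a, alignment)}


# Hypothetical alignment between the sender's and the receiver's vocabularies.
alignment = [
    Correspondence("moveBelt3", "act3", 0.9, "equivalence"),
    Correspondence("beltLeft3", "sens9", 0.8, "equivalence"),
    Correspondence("rotateArm", "act4", 0.7, "equivalence"),
]
s_a = {"moveBelt3", "beltLeft3", "unknownTerm"}
s_b = translate(s_a, alignment)
```

Terms of $S_A$ without any correspondence (here `unknownTerm`) simply disappear from $S_B$, which is the incompleteness the negotiation protocol is meant to compensate for.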

2.4 Semantic Similarity Measure

We speak of semantic similarity when the measure computed between two concepts indicates that they are semantically similar, i.e. that they share common properties and attributes. For example, “aircraft” and “car” are similar because they both have the attribute “transportation”. The semantic matching score specifies a similarity function in the form of a semantic relation (hypernym, hyponym, meronym, part-of, etc.) between the intention of the sender agent's message and the concepts of the receiver agent. This measure is a real number in $[0,1]$, where 0 (1) stands for completely different (similar) entities (Shvaiko and Euzenat, 2013). The approach followed here consists of assigning to each entity category (e.g. a class) a specific measure, defined as a function of the results computed in the related categories of the entity. We apply equation (1) to compute the similarity measure between the receiver agent's capabilities and the query received from the sender agent.

$$sim_c(A,B) = \frac{\sum_{(c_1,c_2) \in M(A,B)} sim_c(c_1,c_2)}{\max(|A|,|B|)} \qquad (1)$$

where $M(A,B)$ is a mapping from elements of $A$ to elements of $B$ which maximises $sim_c(A,B)$. The similarity between the sets is the average of the values of the matched pairs. $M(A,B)$ is a function returning the set of pairs of concepts resulting from the possible permutations between $A$ and $B$; for instance, $M(\{x,y\},\{1,2,3\})$ returns the set of permutations $\{\{x,1\},\{x,2\},\{x,3\},\{y,1\},\{y,2\},\{y,3\}\}$.
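To make equation (1) concrete, the sketch below adopts one possible reading, stated here as an assumption: the candidate pairs are the Cartesian product of the two sets (as in the $M(\{x,y\},\{1,2,3\})$ example), and the mapping used in the sum is the one-to-one subset of those pairs with the highest total. The search is brute force and only suitable for small sets; the similarity table is invented for illustration.

```python
from itertools import permutations, product


def candidate_pairs(a, b):
    """All (c1, c2) pairs between the two sets, as in the M({x,y},{1,2,3}) example."""
    return set(product(a, b))


def set_similarity(a, b, sim):
    """Equation (1): best one-to-one matching total divided by max(|A|, |B|)."""
    a, b = list(a), list(b)
    if not a or not b:
        return 0.0
    swapped = len(a) > len(b)
    small, large = (b, a) if swapped else (a, b)
    best = 0.0
    # Brute force: try every injective assignment of the smaller set into the larger one.
    for assignment in permutations(large, len(small)):
        total = sum(
            sim(y, x) if swapped else sim(x, y)   # always call sim(element of A, element of B)
            for x, y in zip(small, assignment)
        )
        best = max(best, total)
    return best / max(len(a), len(b))


# Hypothetical concept-to-concept similarities, for illustration only.
TABLE = {("x", 1): 0.9, ("x", 2): 0.2, ("x", 3): 0.1,
         ("y", 1): 0.8, ("y", 2): 0.7, ("y", 3): 0.3}


def table_sim(c1, c2):
    return TABLE[(c1, c2)]


pairs = candidate_pairs({"x", "y"}, {1, 2, 3})
result = set_similarity({"x", "y"}, {1, 2, 3}, table_sim)
```

With this table the best matching pairs x with 1 and y with 2, so the numerator is 0.9 + 0.7 and the denominator is 3. For larger sets an assignment solver would be a more reasonable choice than enumerating permutations.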

In the Alignment API (David et al., 2011), the authors do not take the cognitive dimension into account: the more attributes concepts have in common, the more similar they are, and the more differences there are, the less similar (or the more dissimilar) they are. An important psychological idea is that similarity is non-symmetric. Indeed, (Tversky, 1977) showed that the similarity measure between concepts need not be symmetrical, and neither are human judgements. For example, we say more easily “John looks like his father” than “His father looks like John”, or, in the is-a relation, “a borzoi resembles a carnivore” than “a carnivore resembles a borzoi”. Building on that, we apply the best average (BA) approach, which does not suffer from any of the aforementioned restrictions and takes both similar and dissimilar concepts into consideration, as would be expected.

For the above reasons, we introduce the psychological theory into our similarity measurement methods by refining the approach of (Shvaiko and Euzenat, 2013) through the addition of the non-symmetry property of similarity. To do so, we slightly adapt the formulas of (Euzenat, 2013) to serve our purposes. We combine the average (obtained through the API) with the BA one (our improvement), where each average confidence (the similarity measure calculated by (Shvaiko and Euzenat, 2013)) of the first ontology is paired only with the most similar concept of the second one and vice versa. We thus propose a new method for computing the similarity measure, which takes the non-symmetry of similarity into account and generates a best matching average.

Our approach focuses on the calculation of the average similarity between each term in $O_A$ and its most similar term in $O_B$, averaged with its reciprocal to obtain a symmetric score:

$$sim_c(S_1,S_2) = \frac{sim_c(A,B) + sim_c(B,A)}{2} \qquad (2)$$

We define $A(M) \in [0,1]$, the value of a set of mappings $M$, by the following formula:

$$A(M) = \frac{\sum_{(c_1,c_2,n,R) \in M} n}{|M|} \qquad (3)$$

In other words, $A(M)$ represents the average of the alignment scores involved in the mapping $M$. Then, we consider a measure (score) between two sets of concepts $S_1$ and $S_2$, computed as follows:

$$score(S_1,S_2) = A(M) \cdot sim_c(S_1,S_2) \qquad (4)$$
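Equations (2) to (4) can be chained as in the sketch below. It assumes, following the prose rather than equation (1), that each directed term in equation (2) is a best average: every element of one set is paired with its most similar element in the other set and the resulting values are averaged. The function names and the similarity values are illustrative assumptions.

```python
def directed_best_average(source, target, sim):
    """Mean, over `source`, of the similarity to the closest element of `target`."""
    return sum(max(sim(s, t) for t in target) for s in source) / len(source)


def symmetric_similarity(a, b, sim):
    """Equation (2): average of the A-to-B and B-to-A directed scores."""
    a_to_b = directed_best_average(a, b, sim)
    # Swap the arguments so that sim is always called as sim(element of A, element of B).
    b_to_a = directed_best_average(b, a, lambda b_term, a_term: sim(a_term, b_term))
    return (a_to_b + b_to_a) / 2


def mapping_confidence(mappings):
    """Equation (3): mean validity degree n over correspondences (c1, c2, n, R)."""
    return sum(n for (_, _, n, _) in mappings) / len(mappings)


def score(s1, s2, sim, mappings):
    """Equation (4): A(M) multiplied by the set similarity."""
    return mapping_confidence(mappings) * symmetric_similarity(s1, s2, sim)


# Hypothetical similarity values and correspondences, for illustration only.
TABLE = {("a1", "b1"): 1.0, ("a1", "b2"): 0.4, ("a1", "b3"): 0.2,
         ("a2", "b1"): 0.3, ("a2", "b2"): 0.6, ("a2", "b3"): 0.5}


def table_sim(c1, c2):
    return TABLE[(c1, c2)]


A, B = ["a1", "a2"], ["b1", "b2", "b3"]
M = [("a1", "b1", 0.9, "equivalence"), ("a2", "b2", 0.7, "equivalence")]
a_to_b = directed_best_average(A, B, table_sim)
b_to_a = directed_best_average(B, A, lambda b_term, a_term: table_sim(a_term, b_term))
total = score(A, B, table_sim, M)
```

In this example the two directed scores differ (about 0.8 from A to B and 0.7 from B to A), which is the asymmetry the measure is meant to expose before equation (2) averages it away.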

3 SEMANTIC NEGOTIATION APPROACH

This section describes the communication between two agents based on semantic negotiation. Once the translation is done, the receiver agent assesses the degree of understanding of the translated query using a system of thresholds. This assessment is based on the reflective capabilities of agents, which are able to analyze their own code, to be aware of the capabilities they have at a given time and to modify their own execution state or alter their own interpretation or meaning. Using this partially understood query and the list of its capabilities at a given time, the receiver agent chooses among our five proposed performatives how to describe its understanding of the received order to the sender agent, so that the sender can, if necessary, reconsider its request.

3.1 Speech Acts in FIPA-ACL

In the literature, most research on semantic heterogeneity computes the semantic measure without using a specific model for the content of the queries. Some authors measure the similarity between two concepts of the same ontology; others compute the similarity between two concepts of different ontologies. However, few authors calculate the similarity between two sets of concepts. The originality of our approach stems from this idea: we compute the similarity between sets of concepts (request, capacity) from concept-to-concept similarities, in particular to calculate the distance between two ontologies in order to optimize future alignment.

We consider that the message exchange described in subsection 3.2 uses and respects the message information specified by the FIPA-ACL control performatives. We make a few assumptions, such as specifying the response ID corresponding to the initial message, to avoid any problems linked to crossing messages. Our protocol can be seen as a FIPA-request extension that focuses more on not-understood messages. The content of the performative corresponds to the classical performatives request, agree, etc. In the next subsection, we define in detail the new performatives proposed for the application of our communication protocol.

3.2 Communication Model Proposed

Selecting a dynamic protocol for collaborative task execution in an open and heterogeneous MAS is an important step to structure the message exchange between agents and to ensure the consistency of agents' behavior in the system.

In order to solve possible understanding problems, two communicating agents may need the contribution of other agents in the system; this is the idea behind some work addressing semantic negotiation in the literature (De Meo et al., 2012) (Garruzzo et al., 2011) (Garruzzo and Rosaci, 2008) (Messina et al., 2014). We use the negotiation strategy to resolve conflicts between agents.

In this section, we define the role of the score calculation in selecting candidate capabilities and in the communication between agents, by determining the speech acts used in the response strategy, following the work of (Morge and Routier, 2007). We adopt this approach because its authors consider it unrealistic to rely systematically on ontology alignment. The main problem they see is that an alignment cannot be guaranteed to be correct or complete, and if the alignment is imperfect, communication is generally impossible. They therefore argue that the semantic heterogeneity problem should be dealt with directly during communication, using a protocol that handles semantic negotiation. The sender agent (customer) sends requests to the receiver agent (provider). Each agent can use a number of performatives (question, request, assert, propose, refuse, reject, unknown, concede, challenge and withdraw) in a certain order (Table 1) to argue for its perception of the world and its personal beliefs. We adopt this negotiation strategy because it takes into consideration the dynamicity of interactions and the cognition of agents.

Let $C_p$ be the set of capacities of an agent at a given time and $S$ a set of concepts representing the content of the message after translation. From $C_p$, it is possible to build the subset $C_p(S)$, which contains the capacities $c \in C_p$ maximizing $score(S,c)$. To sum up, if the capabilities of this maximal subset give a score close to 1, the capabilities of the subset are similar to the query; if the score is close to 0, the request and the current capabilities are different.

We define $c_p = c_p(S,c)$ as the maximum score value over the subset $C_p(S)$; $c_p$ is defined as follows:

$$c_p(S,c) = \max_{e \in C_p(S)} score(S,e) \mid c \in C_p(S) \qquad (5)$$

Reciprocally, we denote by $C_i$ the subset of impossible capabilities, where $c_i$ is the corresponding score.
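The selection of $C_p(S)$ and $c_p$ can be sketched as an arg-max over the agent's current capabilities. In the snippet below, the scoring function is a Jaccard overlap used purely as a stand-in for $score(S,c)$ of equation (4), and the capability names are hypothetical; both are assumptions made for illustration.

```python
def best_capabilities(query, capabilities, score, tol=1e-9):
    """Return (C_p(S), c_p): the capabilities with maximal score, and that score."""
    scored = {name: score(query, concepts) for name, concepts in capabilities.items()}
    c_p = max(scored.values())
    # Keep every capability tied (within a small tolerance) with the maximum.
    best = {name for name, value in scored.items() if abs(value - c_p) <= tol}
    return best, c_p


def jaccard(s1, s2):
    """Stand-in for score(S, c): shared concepts over all concepts."""
    return len(s1 & s2) / len(s1 | s2)


# Hypothetical capabilities of the receiver agent, each described by a set of concepts.
capabilities = {
    "move_c3": {"do", "act3"},
    "move_c1": {"do", "act1"},
    "check_c3": {"do", "sens9"},
}
query = {"do", "sens9", "act3"}
c_p_set, c_p = best_capabilities(query, capabilities, jaccard)
```

Here two capabilities tie for the best score, so $|C_p(S)| = 2$; the size of this set is one of the inputs of the response strategies. The same function applied to the impossible capabilities would give $C_i$ and $c_i$.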

Our protocol is an extension of FIPA-request; we consider here the requesting multi-response persuasion protocol (denoted ReqMultiResPersProto) using the following rules: $sr_{R/P}$, $sr_{A/W}$ and $sr_{A/R}$ (Morge and Routier, 2007).

This protocol is determined by a set of sequence rules (see Table 1). “Each rule specifies authorized replying moves. According to the “Request/Propose” rule ($sr_{R/P}$) is quite similar. The hearer of a request (request(ϕ(x))) is allowed to respond either by asserting an instantiation of this assumption (assert(ϕ(a))), or with a plea of ignorance (unknown(ϕ(x))). The respond can resist or surrender to the previous speech act.” For example, the “Assert/Welcome” rule (written $sr_{A/W}$) indicates that, in reply to an assert(Φ), surrendering acts close the dialogue line: a concession (concede(Φ)) surrenders to the previous proposition. Resisting acts allow the discussion to continue: a challenge (challenge(ϕ)) and a refusal (refuse(ϕ)) resist the previous proposition. In the “Assert/Reject” rule (written $sr_{A/R}$), the rejection of one of the previously asserted assumptions (reject(ϕ)) closes the dialogue line. As mentioned in Section 5 of their article, an assertion and a proposition share the same argumentative/public semantics. Furthermore, assert(¬ϕ), refuse(ϕ) and reject(ϕ) are identical. However, these speech acts occupy different places in the sequence of moves.
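The sequence rules of Table 1 lend themselves to a small lookup table. The sketch below encodes the three rules and classifies a reply as resisting, surrendering or unauthorized; the dictionary layout and the `classify_reply` helper are illustrative assumptions, and the propositional content (ϕ, Φ) is deliberately left out.

```python
# Table 1 as data: for each rule, the opening speech act and its authorized replies.
SEQUENCE_RULES = {
    "sr_R/P": {"act": "request", "resisting": {"propose"}, "surrendering": {"unknown"}},
    "sr_A/W": {"act": "assert", "resisting": {"challenge", "refuse"}, "surrendering": {"concede"}},
    "sr_A/R": {"act": "assert", "resisting": {"challenge"}, "surrendering": {"concede", "reject"}},
}


def classify_reply(rule, act, reply):
    """Return 'resisting', 'surrendering', or None when the reply is not authorized."""
    entry = SEQUENCE_RULES[rule]
    if entry["act"] != act:
        return None               # the rule does not apply to this speech act
    if reply in entry["resisting"]:
        return "resisting"        # the dialogue line stays open
    if reply in entry["surrendering"]:
        return "surrendering"     # the dialogue line is closed
    return None


reply_kind = classify_reply("sr_R/P", "request", "propose")
```

A dialogue manager could use the returned label to decide whether to wait for a further move or to close the conversation.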

Table 1: Set of speech acts and their potential answers (Morge and Routier, 2007).

| Sequence rules | Speech acts | Resisting replies | Surrendering replies |
|---|---|---|---|
| $sr_{R/P}$ | request(ϕ(x)) | propose(ϕ(a)) | unknown(ϕ) |
| $sr_{A/W}$ | assert(Φ) | challenge(ϕ), refuse(ϕ), ϕ ∈ Φ | concede(Φ) |
| $sr_{A/R}$ | assert(Φ) | challenge(ϕ), ϕ ∈ Φ | concede(Φ), reject(ϕ), ϕ ∈ Φ |

A strategy is applied to choose which communicative act to use according to a system of thresholds in $[0,1]$ (Maes, 1994). The answer given by our system depends on the values of $c_p$ and $c_i$ relative to $c_{min}$ and $c_{max}$⁴. We distinguish 5 response strategies for the message of the receiver agent according to the $sr_{R/P}$, $sr_{A/W}$ and $sr_{A/R}$ rules of (Morge and Routier, 2007).

1. If $c_p \geq c_{max}$ and $c_i \leq c_p$ and $|C_p(S)| = 1$, the request is considered properly understood by the agent. We respond by asserting an instantiation of this assumption (assert(ϕ(a))).

2. If $c_{min} < c_p < c_{max}$ and $c_p < c_i$, the receiver agent believes that the received query is not possible (i.e. $c_p < c_i$). So, it sends to the initial agent a list of the possible events closest to the received command. For this, we introduce the performative propose(ϕ(a)), indicating that 1) the initial message is not executable and 2) the content of the message is a set of commands (requests) that are acceptable and judged to be close to the original message.

3. If $c_i \leq c_p$ and either ($c_{max} \leq c_p$ but $|C_p(S)| > 1$) or ($c_{min} < c_p < c_{max}$), then impossible capabilities can be ignored, but either the agent is not sure that the request is understood ($c_p < c_{max}$) or there are too many candidate queries ($|C_p(S)| > 1$). In other words, the receiver agent has a list of possible candidate capabilities but cannot proceed with execution. Hence, agent B makes a clarification request to agent A, indicating the set of possible capabilities that best correspond to the received query (i.e. the receiver agent sends the set $C_p(S)$ to the sender agent). For this purpose, we introduce the speech act challenge(ϕ).

4. If $c_p \leq c_{min}$ and $c_{min} \leq c_i$, the receiver agent thinks that the order received is understood but impossible. So, the receiver agent must tell the sender agent that its command is understood but is not currently applicable. To signal this situation, we introduce the performative concede(ϕ).

5. If $c_p \leq c_{min}$ and $c_i \leq c_{min}$, the receiver agent is not able to correctly interpret the request of the sender agent. We then introduce the performative refuse.

⁴ Maes proposed, empirically, to use the values $c_{min} = 0.3$ and $c_{max} = 0.8$.
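The five response strategies amount to a threshold decision function, sketched below. Several points are assumptions of this sketch and not statements of the paper: strategy 3 is read as $c_i \leq c_p$ combined with either ($c_p \geq c_{max}$ and $|C_p(S)| > 1$) or ($c_{min} < c_p < c_{max}$); the strategies are tested in the order in which they are listed; and combinations of values covered by no strategy return `None`. The default thresholds are the values 0.3 and 0.8 attributed to Maes.

```python
C_MIN, C_MAX = 0.3, 0.8  # empirical thresholds attributed to Maes in the text


def choose_performative(c_p, c_i, n_candidates, c_min=C_MIN, c_max=C_MAX):
    """Map (c_p, c_i, |C_p(S)|) to one of the five performatives, or None if uncovered."""
    # Strategy 1: understood and unambiguous.
    if c_p >= c_max and c_i <= c_p and n_candidates == 1:
        return "assert"
    # Strategy 2: the closest match is an impossible capability; suggest alternatives.
    if c_min < c_p < c_max and c_p < c_i:
        return "propose"
    # Strategy 3 (assumed reading): possible capabilities dominate, but the request
    # is either ambiguous or only partially understood; ask for clarification.
    if c_i <= c_p and ((c_p >= c_max and n_candidates > 1) or c_min < c_p < c_max):
        return "challenge"
    # Strategy 4: understood but not currently applicable.
    if c_p <= c_min and c_min <= c_i:
        return "concede"
    # Strategy 5: not understood at all.
    if c_p <= c_min and c_i <= c_min:
        return "refuse"
    return None  # combination not covered by the five strategies as written


# Values of the case study: c_p = 0.8 with six candidate plans; c_i = 0.0 is assumed here.
answer = choose_performative(0.8, 0.0, 6)
```

With the case-study values the function selects the clarification request, which matches the use of the third strategy reported in the preliminary results.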

4 CASE STUDY

4.1 Benchmark Production System

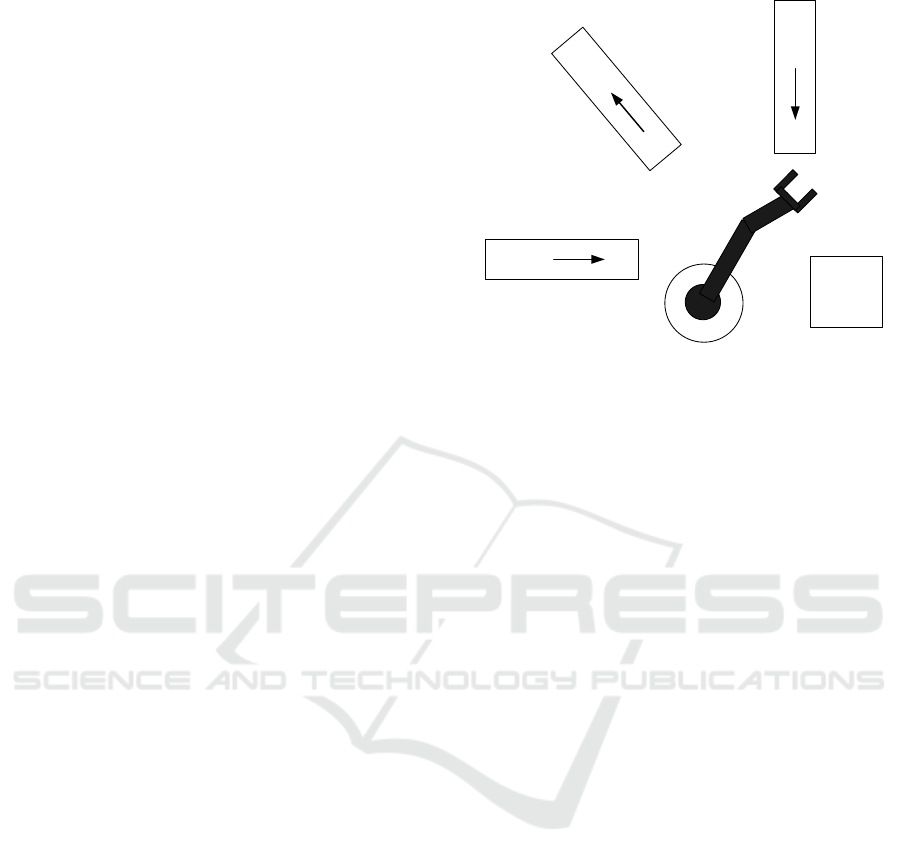

As briefly mentioned in (Ben Noureddine et al., 2016), we illustrate our contribution with a simple example called RARM (Hruz and Zhou, 2007), represented in Figure 1. It is composed of two input conveyors and one output conveyor, a servicing robot and a processing-assembling center. Workpieces to be treated arrive irregularly, one by one. Workpieces of type A are delivered via conveyor C1 and workpieces of type B via conveyor C2. Only one workpiece can be on an input conveyor at a time. A robot R transfers workpieces one after the other to the processing center. The next workpiece can be put on the input conveyor once it has been cleared by the robot. The production technology requires that an A-workpiece first be inserted into the center M and treated, then a B-workpiece be added to the center, and finally the two workpieces be assembled. Afterwards, the assembled product is taken by the robot and placed on the output conveyor C3. The assembled product can be transferred to C3 only when the output conveyor is empty and ready to receive the next product. We model individual robot systems as distributed agents that deal autonomously with both local task planning and the conflicts that occur due to the presence of other robotic agents. The overall behavior of the RARM is then an emergent functionality of the individual skills and of the interaction among the forklifts.

The robot-like agent connects directly to the environment through sensors.

4.1.1 Sensing Input

The robot-like agent receives information from the sensors as follows:

1. is there an object of type A at the extreme end of position p1? (sens1)

2. is the conveyor C1 in its extreme left position? (sens2)

3. is the conveyor C1 in its extreme right position? (sens3)

4. is there an object of type A at the treatment unit M? (sens4)

5. is the conveyor C2 in its extreme left position? (sens5)

6. is the conveyor C2 in its extreme right position? (sens6)

7. is there an object of type B at the extreme end of position p3? (sens7)

8. is there an object of type B at the treatment unit M? (sens8)

9. is the conveyor C3 in its extreme left position? (sens9)

10. is the conveyor C3 in its extreme right position? (sens10)

11. is there an object of type AB at the treatment unit M? (sens11)

12. is the agent's robot-like arm in its lower position? (sens12)

13. is the agent's robot-like arm in its highest position? (sens13)

Figure 1: The benchmark production system RARM (labels: conveyors C1, C2 and C3; positions p1 to p6; robot r; processing unit M).

4.1.2 Action Output

Once a suitable order, called the plan, is found, this high-level order must be converted into low-level orders to be sent to the actuators so that the robot-like agent can actually carry out the plan.

Running example

The system can be controlled using the following actuator commands:

1. move the conveyor C1 (act1);

2. move the conveyor C2 (act2);

3. move the conveyor C3 (act3);

4. rotate robotic agent (act4);


5. move the robotic agent arm vertically (act5);

6. pick up and drop a piece with the robotic agent arm (act6);

7. treat the workpiece (act7);

8. assemble two pieces (act8).

4.2 Preliminary Results

We prototype these ideas using the JADE agent platform. We consider two RARMs, each with its own ontology ($O_A$, resp. $O_B$) describing autonomous robots. We define ontologies for the sub-domains: sensors, perceptions, planning, actuators, decision making, etc. We assume that the agents' descriptions of the world are incomplete:

• $O_A$ has a complete description of the sensing input (e.g. sens1, sens2, sens3, sens4, etc.) and a representation of the action output (e.g. act1, act2, act3, act4, etc.), but an incomplete representation of the policy (a set of state-action pairs with at most one action for each state).

• $O_B$ has a complete description of the sensing input, an incomplete representation of the action output, and a complete representation of the plan (policy).

We consider 6 plans $\{\pi_i \mid i = 0..5\}$ built from the actions used in our example, listed in no particular order:

• $\pi_0$: ($C2\_left$, $take_2$, $load_2$, $process_2$)

• $\pi_1$: ($load_1$, $put_1$, $process_1$, $C1\_right$)

• $\pi_2$: ($C1\_left$, $take_1$, $load_1$, $put_1$, $process_1$, $C1\_right$)

• $\pi_3$: ($C2\_left$, $take_2$, $process_1$, $C2\_right$)

• $\pi_4$: ($take_1$, $load_1$, $put_2$, $C2\_right$)

• $\pi_5$: ($C1\_left$, $take_1$, $put_2$, $process_1$)

With this kind of modeling, some action outputs explicitly designated by the robot $RARM_a$ become ambiguous to $RARM_b$. The alignment between the ontologies does not solve the lack of representation of action outputs in the robot $RARM_b$; similarly, the classical request protocol does not resolve the ambiguity. We assume in this example that $A(M) = 1$ for every mapping $M$. We develop a scenario between $RARM_a$ and $RARM_b$. The goal is to exploit the similarity measure in order to simplify the interactions among heterogeneous agents with different sensors and different capabilities. In this scenario, $RARM_a$ asks $RARM_b$ to move the conveyor C3 (i.e., act3) with request(1, 1, {do, sens9, act3}), corresponding to request 1 of conversation 1. The system calls the alignment service to calculate the alignment for this query, transforms the concepts into the set of concepts corresponding to the capabilities in the ontology $O_B$ and sends a message to $RARM_b$. Then the robot-like agent $RARM_b$ calculates its capacities.

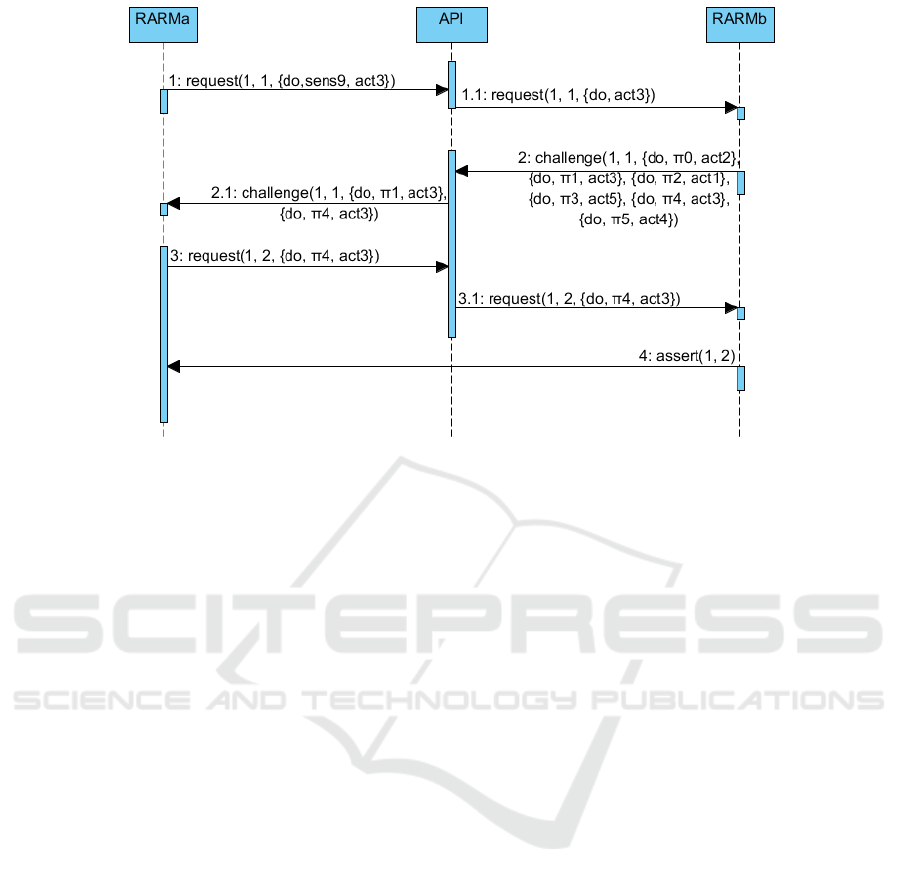

Figure 2 shows an interaction example based on the context of $RARM_b$ executing its capabilities. In our example, $RARM_b$ has 6 plans, so $|C_p| = 6$. Given that $Sim_c(sens9, act3) = 0.6$, we obtain $c_p = (1 + 0.6)/2 = 0.8$. $RARM_b$ therefore applies the third strategy: it uses the performative challenge to inform $RARM_a$ of the ambiguity. It sends the answer challenge(1, 1, {{do, $\pi_0$, act2}, {do, $\pi_1$, act3}, {do, $\pi_2$, act1}, {do, $\pi_3$, act5}, {do, $\pi_4$, act3}, {do, $\pi_5$, act4}}).
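The arithmetic and the challenge message in this step can be reproduced with a short Python sketch. It assumes, as the numbers in the example suggest, that the correspondence degree is the mean of the alignment confidence $A(M) = 1$ and the similarity $Sim_c(sens9, act3) = 0.6$. The function names and the tuple encoding of messages are hypothetical; the plan outputs are copied from the challenge message.

```python
# Action output of each plan, copied from the challenge message in the example.
PLAN_OUTPUTS = {
    "pi0": "act2", "pi1": "act3", "pi2": "act1",
    "pi3": "act5", "pi4": "act3", "pi5": "act4",
}


def correspondence_degree(alignment_confidence, similarity):
    """Assumed form: mean of the alignment confidence and the similarity."""
    return (alignment_confidence + similarity) / 2


def build_challenge(conversation, request, plan_outputs):
    """List every candidate (do, plan, output) triple known to the receiver."""
    triples = [("do", plan, out) for plan, out in plan_outputs.items()]
    return ("challenge", conversation, request, triples)


c_p = correspondence_degree(1.0, 0.6)            # A(M) = 1, Sim_c = 0.6
challenge = build_challenge(1, 1, PLAN_OUTPUTS)  # conversation 1, request 1
print(round(c_p, 2), len(challenge[3]))
```

The threshold that maps a degree of 0.8 to the third strategy is deliberately left out of the sketch, since it depends on the strategy definitions given earlier in the paper.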

Because the ontology of $RARM_a$ does not fully model action outputs, the answer of $RARM_b$ is translated into challenge(1, 1, {{do, $\pi_1$, act3}, {do, $\pi_4$, act3}}). Since the initial context is that of $RARM_a$, the next request from $RARM_a$ clarifies the plan: request(1, 2, {do, $\pi_4$, act3}). This request raises no further difficulty and is eventually accepted by $RARM_b$ (i.e., $RARM_b$ sends the confirmation performative assert(1, 2)).
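The translation and clarification steps can be sketched in Python as follows. This is one reading consistent with the example, not the JADE implementation: the tuple encoding of messages and both helper names are assumptions. The example does not state how $\pi_4$ is preferred over $\pi_1$, so the chosen plan is passed in explicitly.

```python
def translate_challenge(challenge, requested_output):
    """Keep only the triples whose action output matches the requested one."""
    kind, conversation, request, triples = challenge
    kept = [t for t in triples if t[2] == requested_output]
    return (kind, conversation, request, kept)


def clarify(challenge, chosen_plan):
    """Build the follow-up request that names one of the remaining plans."""
    _, conversation, request, triples = challenge
    for triple in triples:
        if triple[1] == chosen_plan:
            return ("request", conversation, request + 1, triple)
    raise ValueError(f"{chosen_plan} is not among the proposed plans")


# Challenge as sent by RARM_b in the example (conversation 1, request 1).
received = ("challenge", 1, 1, [
    ("do", "pi0", "act2"), ("do", "pi1", "act3"), ("do", "pi2", "act1"),
    ("do", "pi3", "act5"), ("do", "pi4", "act3"), ("do", "pi5", "act4"),
])

translated = translate_challenge(received, "act3")  # keeps pi1 and pi4
follow_up = clarify(translated, "pi4")              # request 2 of conversation 1
print(translated[3])
print(follow_up)
```

Filtering on the requested output reduces the six candidates to the two plans that end in act3, which is why a single extra request is enough to remove the ambiguity.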

5 CONCLUSION

In this paper, we propose a reflective agent model for negotiation in an open and heterogeneous MAS. We present a set of communicative acts that allow heterogeneous agents to disambiguate queries when the ontology alignment is incomplete. To do this, we use a similarity measure to compare each entity of one ontology with those of the other and select the most similar pairs. When an agent A sends a request to an agent B, B compares the information in the received message with its abilities, calculates the correspondence degree and, according to this degree, chooses the corresponding performative. This model introduces a process that overcomes some common problems encountered during MAS development. We are currently developing a benchmark production system as a case study on JADE to improve the quality of the outcome: we shift from a FIPA-request protocol that answers with not-understood to a multi-response protocol that clarifies the request. Finally, we provide an agent interaction model to reach an agreement over heterogeneous representations. Future work will deal with the implementation of the proposed model on a real multi-robot system with larger data sets in heterogeneous ontologies.


Figure 2: Interaction example between two agents of a multi-robot system. The center column does not refer to an agent but represents the ontology alignment service used for proof.


ENASE 2017 - 12th International Conference on Evaluation of Novel Approaches to Software Engineering
