EEG Data of Face Recognition in Case of Biological Compatible Changes: A Pilot Study on Healthy People

Aurora Saibene¹, Silvia Corchs¹, Roberta Daini², Alessio Facchin² and Francesca Gasparini¹

¹ Department of Informatics, Systems and Communication, University of Milano-Bicocca, Milano, Italy

² Department of Psychology, University of Milano-Bicocca, Milano, Italy

Keywords:

Face Recognition, EEG Data, Power Spectral Density.

Abstract:

Recognizing people from their faces has a strong impact on social interaction. In this paper we present a pilot study on healthy people in which brain activity during a face recognition task was recorded using the electroencephalogram (EEG). Target images, previously seen in a training phase, were presented in the recognition phase in two different conditions, either identical to those of the initial phase or modified with biologically plausible changes (such as feature enlargement or a changed expression), randomly interleaved with new faces. The raw EEG data were cleaned of both biological and non-physiological artifacts. Statistically significant differences in brain activation were registered between the two experimental conditions during the recognition process, especially in the frontal area. The results of the analysis on this database of healthy people can be useful as a baseline for further studies on people affected by congenital prosopagnosia or autism.

1 INTRODUCTION

Studying brain responses to a specific set of stimuli gives access to new knowledge on how cognitive processes are activated in a task-based environment, and on whether these responses differ between subjects affected or unaffected by cognitive impairments.

The electroencephalogram (EEG) is considered a useful means of obtaining such information. The EEG is a multichannel signal that measures cerebral bioelectric potentials through electrodes positioned on the scalp, and thus tracks brain activity and functions with high temporal resolution (Dickter and Kieffaber, 2013). In this work we collected multichannel EEG data recorded during a face recognition task. Face recognition has been studied intensively in recent decades, not only in cognitive research but also in computer vision and surveillance applications (Zhao et al., 2003; Cevikalp and Triggs, 2010).

Recognizing people from their faces has a strong impact on social interaction, e.g. to tell a friend from a foe, to precisely identify an individual and try to understand his/her behavior or mood, or to interpret an emotional state from facial gestures.

The brain of healthy people is generally able to recognize a specific face even when its characteristics have changed for various reasons, such as aging or simply the use of make-up. Several face recognition studies used images of well-known personalities (e.g. politicians, athletes, actors) as stimuli, i.e. as the faces to be recognized, and the task was generally to discriminate between familiar and unfamiliar faces (Sun et al., 2012; Li et al., 2015; Özbeyaz and Arıca, 2018). The present study was designed to trace the brain activity linked to the different mechanisms involved in face recognition. In particular, we recorded the EEG signal of a healthy population using a double task: recognizing a face as previously presented or new, and indicating whether an already seen face was identical or modified. The modified faces had biologically compatible changes of features (e.g. eye magnified, mouth reduced) or of facial expression (e.g. happiness, sadness). The paradigm was the same as that used in previous behavioral studies (Daini et al., 2014; Malaspina et al., 2014) investigating face recognition in individuals affected by congenital prosopagnosia (i.e. a developmental impairment of the ability to recognize people from their faces) or autism. Our aim was to create, on normal recognizers, a pilot scheme of brain activity analyzed through EEG data, to be


Saibene, A., Corchs, S., Daini, R., Facchin, A. and Gasparini, F.

EEG Data of Face Recognition in Case of Biological Compatible Changes: A Pilot Study on Healthy People.

DOI: 10.5220/0006909104140420

In Proceedings of the 15th International Joint Conference on e-Business and Telecommunications (ICETE 2018) - Volume 1: DCNET, ICE-B, OPTICS, SIGMAP and WINSYS, pages 414-420

ISBN: 978-989-758-319-3

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

used with atypically developing populations to verify the involvement of hypothesized psychophysiological mechanisms. EEG data are easily corrupted by noise (artifacts) of biological or non-physiological origin, and should therefore be processed to obtain a cleaner signal without losing useful experimental data. Instead of rejecting the noisy portions of the recording, numerous studies have proposed different artifact removal approaches: band-pass filtering the trials (Itier and Taylor, 2004), using online filters (Parketny et al., 2015), or combining high-pass and low-pass Butterworth filters and manually rejecting only the ocular artifacts, i.e. eye blinks and horizontal eye movements (Caharel et al., 2015). Other studies have defined multi-step pipelines that combine these techniques, applying low-pass filtering, down-sampling, band-pass filtering and manual pruning, together with Independent Component Analysis (ICA) for ocular artifact rejection and baseline correction of the subject epochs (Barragan-Jason et al., 2015).
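Baseline correction, one of the steps listed above, subtracts from every epoch the average of its pre-stimulus samples. The following Python/NumPy sketch illustrates the idea; it is a generic example, not the pipeline of (Barragan-Jason et al., 2015) nor one used in this work, and the function name and the array layout (epochs × channels × samples) are assumptions.

```python
import numpy as np


def baseline_correct(epochs: np.ndarray, n_baseline: int) -> np.ndarray:
    """Subtract, for each epoch and channel, the mean of the first
    n_baseline samples (assumed to be the pre-stimulus interval).

    epochs: array of shape (n_epochs, n_channels, n_times)
    """
    if not 0 < n_baseline <= epochs.shape[-1]:
        raise ValueError("n_baseline must be in 1..n_times")
    baseline = epochs[..., :n_baseline].mean(axis=-1, keepdims=True)
    return epochs - baseline  # broadcasting removes the offset from every sample


# Example: 2 epochs, 3 channels, 4 samples, the first 2 being pre-stimulus.
epochs = np.arange(24, dtype=float).reshape(2, 3, 4)
corrected = baseline_correct(epochs, n_baseline=2)
print(corrected[0, 0])  # [-0.5  0.5  1.5  2.5]
```

After correction, the pre-stimulus part of every epoch has zero mean, so post-stimulus amplitudes are expressed relative to it.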

In this work we present an artifact-suppression pipeline that does not distort the actual electrophysiological signal too much. On the cleaned data, the Power Spectral Density (PSD) was then estimated through Welch's method for each channel and each frequency rhythm (alpha, beta and gamma). The main contributions of this work are thus:

• A database of raw EEG data, acquired on 17 healthy subjects during a face recognition task, as described in Section 2.

• A semi-automatic procedure to clean the raw data, and the resulting clean EEG data (see Section 3).

• Statistically significant evidence of differences in brain activation during the recognition process, especially in the frontal area, which makes this database suitable as a pilot study for further analyses on people affected by congenital prosopagnosia or autism (see Section 4).
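The PSD estimation mentioned above can be sketched in Python with NumPy as follows. This is a from-scratch illustration of Welch's method (the average of windowed, overlapping periodograms), not the MATLAB/EEGLAB code used in this work: the segment length, the 50% overlap, the Hamming window, the sampling rate and the choice of summing PSD bins to obtain a band power are all assumptions. The gamma band is capped at 50 Hz because the data are band-pass filtered between 1 and 50 Hz (Section 3).

```python
import numpy as np

# Rhythm limits in Hz (Section 3). Gamma is capped at 50 Hz here because the
# recordings are band-pass filtered between 1 and 50 Hz.
BANDS = {"alpha": (8.0, 13.0), "beta": (13.0, 30.0), "gamma": (30.0, 50.0)}


def welch_psd(x, fs, nperseg=256, overlap=0.5):
    """One-sided PSD (units^2/Hz) of a 1-D signal by Welch's method:
    Hamming-windowed, mean-removed, overlapping segments whose periodograms
    are averaged."""
    x = np.asarray(x, dtype=float)
    nperseg = min(nperseg, x.size)            # short epochs (e.g. 500 ms) use one segment
    step = max(1, int(nperseg * (1.0 - overlap)))
    win = np.hamming(nperseg)
    scale = 1.0 / (fs * np.sum(win ** 2))     # density scaling
    periodograms = []
    for start in range(0, x.size - nperseg + 1, step):
        seg = x[start:start + nperseg]
        seg = seg - seg.mean()                # constant detrend of each segment
        spec = np.abs(np.fft.rfft(seg * win)) ** 2 * scale
        if nperseg % 2 == 0:
            spec[1:-1] *= 2                   # double all bins except DC and Nyquist
        else:
            spec[1:] *= 2                     # odd length: there is no Nyquist bin
        periodograms.append(spec)
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, np.mean(periodograms, axis=0)


def band_powers(x, fs, bands=BANDS, **welch_kwargs):
    """Power in each rhythm, approximated as the PSD summed over the band's
    bins times the frequency resolution (half-open intervals [low, high))."""
    freqs, psd = welch_psd(x, fs, **welch_kwargs)
    df = freqs[1] - freqs[0]
    return {name: float(psd[(freqs >= lo) & (freqs < hi)].sum() * df)
            for name, (lo, hi) in bands.items()}


fs = 256                                      # hypothetical sampling rate (Hz)
t = np.arange(8 * fs) / fs
powers = band_powers(np.sin(2 * np.pi * 10 * t), fs)
# A unit-amplitude 10 Hz sine has power 0.5, almost all of it in the alpha band.
```

Averaging several periodograms trades frequency resolution for a lower-variance estimate, which is the reason Welch's method is preferred over a single periodogram on noisy EEG.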

2 EXPERIMENTAL SETUP

2.1 Subjects

The experiment was conducted in accordance with the ethical standards of the 1964 Declaration of Helsinki and followed the standard ethical procedure recommended by the Italian Association of Psychology (AIP). The experimental protocols were approved by the ethical committee of the University of Milano-Bicocca. All the participants were volunteers and gave their informed consent to the study. Twenty subjects (eleven females), aged between 18 and 30 (mean = 24.6; SD = 1.7), participated in the experiment. None of them reported neurological or neuropsychological deficits, and all had normal or corrected-to-normal vision.

2.2 Stimuli and Tasks

Stimuli were selected from a database previously used in (Daini et al., 2014) and generated by (Comparetti et al., 2011). The neutral faces were created from digital photos of real faces by means of Adobe Photoshop and Poser 5.0 (Curious Labs, Inc., and e-frontier, Inc., Santa Cruz, CA). Starting from these neutral faces, Comparetti et al. (2011) applied different kinds of manipulations, modifying either facial features or facial expressions. For more details on how the faces were created, please refer to (Daini et al., 2014).

Figure 1 shows the two neutral faces used as target stimuli and some of their modified versions.

The experiment consisted of four main phases:

• An adaptation phase, in which ten repetitions of the two target stimuli (neutral faces corresponding to two identities) were presented to each participant.

• A trial phase, in which a random sequence of 20 faces was presented. This set consisted of the two target neutral faces together with their modified versions (eight stimuli in total) and twelve distractors (non-target faces and some of their modified versions). Before each stimulus, a fixation point was displayed on the screen for 500 ms; the stimulus then appeared for 500 ms. The subject had to answer (with a mouse click) whether she/he recognized the identity shown in the stimulus (identity task). In case of an affirmative response, the subject also had to answer whether the target was exactly the same as the one previously seen in the adaptation phase, i.e. without any facial modification (neutral task). The type of modification was not asked. The subjects also had to reject the non-target stimuli by answering negatively to the identity task (Table 1).

• Two experimental phases, each consisting of 320 stimuli presented with the same scheme as in the trial phase. These stimuli were randomly drawn from a pool of 640, composed of 320 repetitions of the two target faces and all their modified versions, and 320 distractors (non-target faces and their modifications). The answers to the identity and neutral tasks were collected and subsequently used to label the events. The time limit for each answer


Figure 1: Two neutral basic stimuli (first column) together with some of their modified versions.

Table 1: Case scenarios. The identity task corresponds to the question 'Is the face a target one?'. The neutral task appears only if the answer to the identity task is affirmative: 'Is the target identical to the one seen in the adaptation phase (Subsection 2.2)?'. In case of a non-target face, the correct answer to the identity task question is 'no' and the neutral task question does not appear.

Stimulus state        Identity task  Neutral task
neutral basic target  yes            yes
modified target       yes            no
non-target            no             not asked

was set to 2000 ms; after that time, the next trial started and the missed answer was considered wrong.

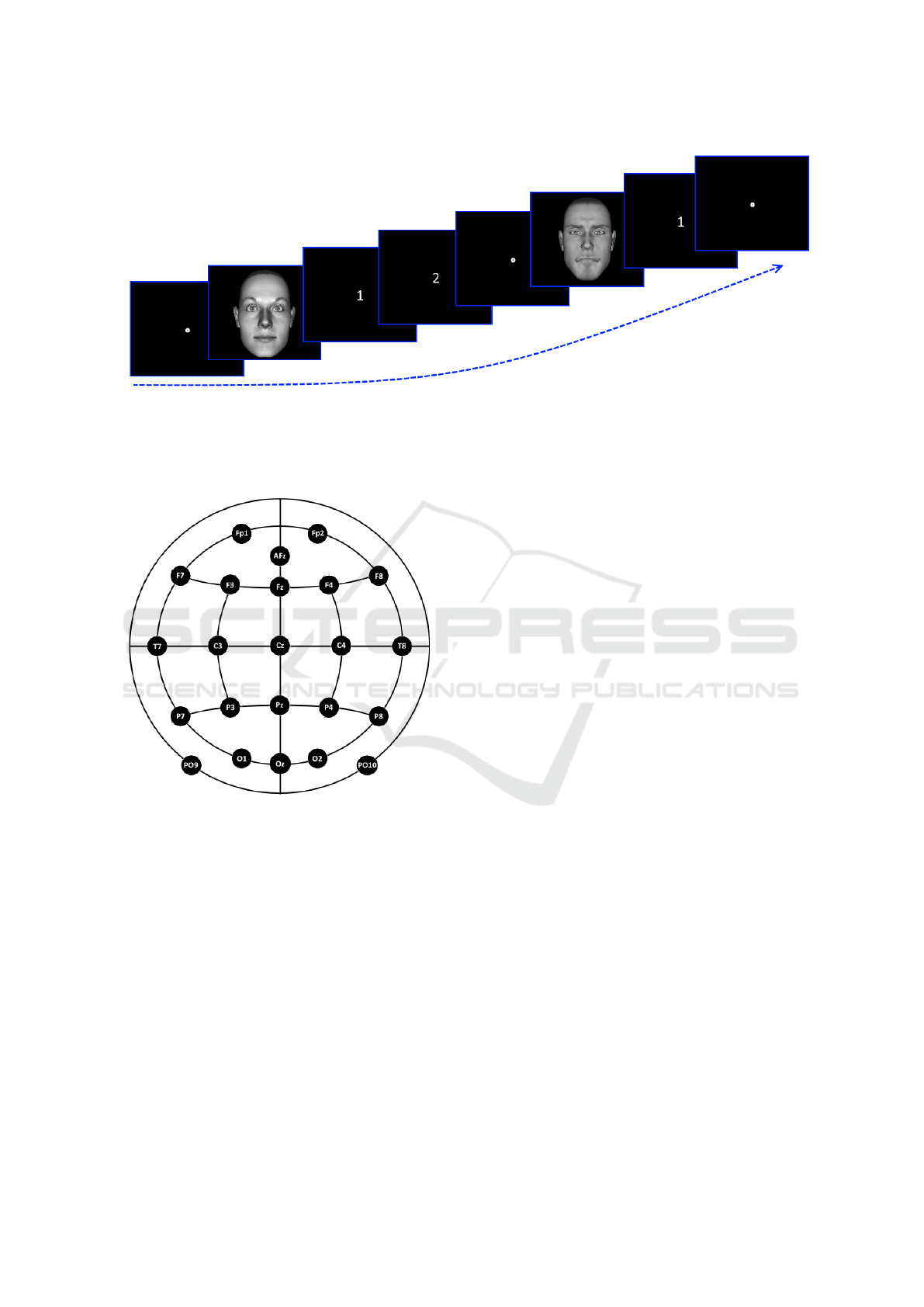

The stimulus presentation (Figure 2) was implemented in OpenSesame, and triggers were sent to the recording computer to mark the time of each event.
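The triggers make it possible to cut the continuous recording into trials. A minimal NumPy sketch, assuming the triggers are available as sample indices and the recording as a channels × samples array, pairs each event window with the 500-ms fixation interval that precedes it (used as baseline in Section 4). The function name and the post-stimulus window length are hypothetical, since the text does not state the latter.

```python
import numpy as np


def extract_epochs(data, trigger_samples, fs, pre_s=0.5, post_s=0.5):
    """Cut baseline and event windows around each stimulus trigger.

    data            : (n_channels, n_samples) continuous recording
    trigger_samples : sample index of each stimulus onset
    fs              : sampling rate in Hz
    pre_s           : pre-stimulus window in s (the 500-ms fixation used as baseline)
    post_s          : post-stimulus window in s (hypothetical value)
    Returns two arrays of shape (n_trials, n_channels, n_times). Triggers whose
    windows would fall outside the recording are skipped.
    """
    n_pre, n_post = int(round(pre_s * fs)), int(round(post_s * fs))
    baselines, events = [], []
    for s in trigger_samples:
        if s - n_pre < 0 or s + n_post > data.shape[1]:
            continue
        baselines.append(data[:, s - n_pre:s])
        events.append(data[:, s:s + n_post])
    if not events:
        raise ValueError("no trigger has complete windows inside the recording")
    return np.stack(baselines), np.stack(events)


# Toy recording: 3 channels whose value equals the sample index.
data = np.tile(np.arange(1000.0), (3, 1))
base, event = extract_epochs(data, [20, 300, 990], fs=100)
print(base.shape, event.shape)  # (1, 3, 50) (1, 3, 50)
```

Only the trigger at sample 300 yields complete windows; the other two fall too close to the edges of the toy recording.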

In this work we considered the following four possible events:

• Correct IDentification of the neutral basic Target (CIDT), i.e. one of the faces displayed in the adaptation phase (see Section 2.2);

• Correct perception of the MODified Target (CMODT);

• Correct Non-target stimulus Recognition (CNR), i.e. correctly answering 'no' to a face that was not displayed in the adaptation phase;

• ERRoneous response (ERR).

Each trigger was labeled according to the answer type, identifying the four events CIDT, CMODT, CNR and ERR.
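The labeling rule implied by Table 1 and by the 2000-ms time limit can be written as a small Python function. It is a sketch assuming one record per trial with the stimulus type, the two answers and their response times; the string encodings and function names are hypothetical and do not come from the OpenSesame experiment.

```python
from typing import Optional

ANSWER_LIMIT_MS = 2000  # time limit for each answer (Section 2.2)


def answer_in_time(answer: Optional[bool], rt_ms: Optional[float]) -> Optional[bool]:
    """Return the answer, or None if it was missing or given after the limit."""
    if answer is None or rt_ms is None or rt_ms > ANSWER_LIMIT_MS:
        return None
    return answer


def label_event(stimulus: str, identity: Optional[bool],
                neutral: Optional[bool] = None) -> str:
    """Label one trial as CIDT, CMODT, CNR or ERR following Table 1.

    stimulus : 'neutral_target', 'modified_target' or 'non_target'
               (hypothetical encoding of the Table 1 rows)
    identity : answer to 'Is the face a target one?' (None = missed)
    neutral  : answer to 'Is the target identical ...?' (None = missed or not asked)
    """
    if identity is None:
        return "ERR"                      # missed answers count as wrong
    if stimulus == "non_target":
        return "CNR" if identity is False else "ERR"
    if stimulus not in ("neutral_target", "modified_target"):
        raise ValueError(f"unknown stimulus type: {stimulus!r}")
    if identity is False or neutral is None:
        return "ERR"                      # target missed, or neutral answer missing
    if stimulus == "neutral_target":
        return "CIDT" if neutral else "ERR"
    return "CMODT" if not neutral else "ERR"


print(label_event("modified_target", True, answer_in_time(False, 850.0)))  # CMODT
print(label_event("neutral_target", answer_in_time(True, 2300.0)))        # ERR
```

Keeping the time-limit check separate from the Table 1 logic makes it easy to change the limit without touching the labeling rule.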

Based on the analysis of the answers, three subjects were excluded from the study because of technical faults and an accuracy lower than 50% in the recognition tasks. The EEG signals of the remaining seventeen subjects were preprocessed and analyzed.
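The accuracy criterion can be sketched as follows, assuming accuracy is the fraction of a subject's trials not labeled ERR (the text does not say exactly how it was computed); technical faults cannot be captured this way. Subject identifiers and labels below are toy data.

```python
from collections import Counter


def accuracy(labels):
    """Fraction of a subject's trials that are not ERR."""
    return sum(label != "ERR" for label in labels) / len(labels)


def included_subjects(labels_by_subject, min_accuracy=0.5):
    """Subjects whose accuracy reaches the threshold (50% in this study)."""
    return [s for s, labels in labels_by_subject.items()
            if accuracy(labels) >= min_accuracy]


labels_by_subject = {
    "s01": ["CIDT", "CNR", "CMODT", "ERR"],   # toy data, not from the study
    "s02": ["ERR", "ERR", "CNR"],
}
print(included_subjects(labels_by_subject))    # ['s01']
print(Counter(labels_by_subject["s01"]))       # per-event counts used to build the sets
```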

2.3 EEG Data Recording

Each subject was prepared for the recording by wearing an EEG cap (Brain Products GmbH EasyCap) with electrodes placed according to the standard 10-20 system, to which the AFz, PO9, PO10 and Oz electrodes were added. The electrode positions are reported in Figure 3. Four sensors were placed under and beside the right eye, to track ocular artifacts, and on the earlobes, as ground and reference channels.

Afterward, the subject sat in front of a monitor in a soundproof Faraday cage and performed the experiment.

3 DATA PREPROCESSING

All the noise-removal procedures were developed in MATLAB with EEGLAB (Delorme and Makeig, 2004), a MATLAB toolbox for EEG signal processing and analysis.

SIGMAP 2018 - International Conference on Signal Processing and Multimedia Applications


Figure 2: Stimulus presentation. The first face is a target one; thus, if correctly answered, the stimulus is followed by the white 1 screen, where the subject has to identify the face as seen/unseen in the adaptation phase, and then by the white 2 screen, corresponding to the 'Is it identical to the one seen before?' question. The second face is a non-target one; thus, if correctly answered, only the white 1 screen appears. Note that a fixation point, placed in the middle of the screen, is displayed between consecutive stimuli.

Figure 3: Electrode positions on the EasyCap used in the experiment.

Each raw recording was processed in three main steps. The first was dataset initialization: import of the .vhdr BrainVision Recorder file with the bva plug-in for EEGLAB, channel editing and re-referencing to the electrically neutral Cz sensor, removal of the uninteresting parts of the track corresponding to the adaptation and trial phases, and division of the whole recording into the two experimental parts. Afterward, the core signal processing took place: a Hamming-windowed sinc Finite Impulse Response (FIR) filter was applied as a combination of low-pass and band-pass filters, removing all frequencies below 1 Hz and above 50 Hz. In contrast to other works in the literature (Klados et al., 2011; Radüntz et al., 2015; Roy et al., 2013; Scharinger et al., 2015; Winkler et al., 2011), the upper bound was raised from 40 to 50 Hz to avoid an excessive suppression of the signal by the subsequent processing steps. The filter range was also justified by the fact that the recordings were performed on non-pathological subjects and that the rhythms (frequency bands) of interest in the present study were alpha (8-13 Hz), beta (13-30 Hz) and gamma (30-100 Hz); of the gamma band, only the 30-50 Hz portion is retained after filtering.
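The re-referencing and filtering steps can be sketched with NumPy as below. This illustrates the technique and is not EEGLAB's implementation, which chooses the filter order with its own heuristics; the sampling rate, the filter-length rule (about 3.3·fs/Δf taps for a Hamming window, with an assumed transition width Δf of 1 Hz) and all names are assumptions. The band-pass kernel is the difference of two windowed-sinc low-pass kernels, and a symmetric, odd-length kernel applied with centred ('same') convolution introduces no phase shift.

```python
import numpy as np


def rereference(data, ch_names, ref="Cz"):
    """Express every channel relative to one reference electrode."""
    return data - data[ch_names.index(ref)]   # broadcasting subtracts the reference row


def windowed_sinc_bandpass(fs, low=1.0, high=50.0, transition=1.0):
    """Hamming-windowed sinc band-pass FIR kernel.

    Built as the difference of two low-pass sinc kernels (cutoffs high and low).
    The length uses the common Hamming-window estimate N ~ 3.3 * fs / transition,
    an assumed design rule, forced to be odd so the kernel is symmetric.
    """
    numtaps = int(np.ceil(3.3 * fs / transition))
    numtaps += (numtaps + 1) % 2              # make the length odd
    n = np.arange(numtaps) - (numtaps - 1) / 2
    fl, fh = low / fs, high / fs              # cutoffs as fractions of the sampling rate
    h = 2 * fh * np.sinc(2 * fh * n) - 2 * fl * np.sinc(2 * fl * n)
    return h * np.hamming(numtaps)


def fir_filter(data, h):
    """Apply the kernel along time. With a symmetric odd-length kernel, centred
    ('same') convolution introduces no phase shift; edges are zero-padded.
    Signals must be at least as long as the kernel."""
    return np.apply_along_axis(lambda x: np.convolve(x, h, mode="same"), -1, data)


fs = 250                                      # hypothetical sampling rate (Hz)
t = np.arange(20 * fs) / fs
alpha = np.sin(2 * np.pi * 10 * t)
fz = alpha + 2.0 + 0.5 * np.sin(2 * np.pi * 80 * t)   # 10 Hz + DC offset + 80 Hz
cz = np.zeros_like(t)                         # toy flat reference channel
data = rereference(np.vstack([fz, cz]), ["Fz", "Cz"])
filtered = fir_filter(data, windowed_sinc_bandpass(fs))
# Away from the edges, filtered[0] should closely follow the 10 Hz component.
```

The long kernel needed for a 1-Hz high-pass edge is the price of a sharp transition at low frequencies; the first and last half-kernel of each recording are affected by zero padding.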

Having obtained a cleaner signal, Independent Component Analysis (ICA) was computed. The signal characteristics in fact satisfied the ICA model (Jung et al., 2000): the multichannel recording was a mixture of brain activity and artifacts, volume conduction was considered linear and instantaneous, the sources of the noisy components were generally not time-locked to the neural activity, and the number of sources was assumed equal to the number of sensors. The Independent Components (ICs) obtained were then inspected with the aid of the SASICA extension for EEGLAB (Chaumon et al., 2015), which provides topoplots and statistics based on power spectrum, kurtosis and correlation with the ocular electrodes to discriminate artifactual components. Once identified, the artifactual ICs were removed from the FIR-filtered signal. Any remaining artifacts (mostly spiky eye blinks) were pruned manually.
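Removing the identified ICs amounts to back-projecting only the retained components. In the Python/NumPy sketch below, the unmixing matrix W is assumed to be already estimated (e.g. by the ICA run in EEGLAB) and square, as in the model above. The two screening criteria are simplified stand-ins for two of the statistics SASICA reports (correlation with the ocular channels and kurtosis), with hypothetical thresholds, and do not reproduce SASICA's actual decision rules.

```python
import numpy as np


def excess_kurtosis(sources):
    """Excess kurtosis of each row (about 0 for Gaussian activity, large for spiky blinks)."""
    z = sources - sources.mean(axis=1, keepdims=True)
    z = z / z.std(axis=1, keepdims=True)
    return (z ** 4).mean(axis=1) - 3.0


def flag_artifactual_ics(sources, eog_channels, corr_thr=0.7, kurt_thr=5.0):
    """Indices of ICs strongly correlated with any ocular channel or very peaky.
    Both criteria and thresholds are hypothetical simplifications."""
    flagged = set()
    for k, s in enumerate(sources):
        r = max(abs(np.corrcoef(s, eog)[0, 1]) for eog in eog_channels)
        if r > corr_thr:
            flagged.add(k)
    flagged.update(int(k) for k in np.flatnonzero(excess_kurtosis(sources) > kurt_thr))
    return sorted(flagged)


def remove_ics(data, unmixing, reject):
    """Back-project all ICs except the rejected ones.

    data     : (n_channels, n_samples) filtered EEG, X
    unmixing : square (n_sources, n_channels) matrix W, so that S = W X
    reject   : indices of the ICs to drop
    """
    sources = unmixing @ data
    mixing = np.linalg.inv(unmixing)          # A = W^-1 (as many sources as sensors)
    rejected = set(reject)
    keep = [k for k in range(unmixing.shape[0]) if k not in rejected]
    return mixing[:, keep] @ sources[keep]


# Toy example: a 10 Hz "brain" source and a spiky "blink" source mixed into 2 channels.
fs, n = 250, 2000
t = np.arange(n) / fs
brain = np.sin(2 * np.pi * 10 * t)
blink = np.zeros(n)
blink[::fs] = 50.0                            # one spike per second
A = np.array([[1.0, 0.8], [0.5, 1.0]])
X = A @ np.vstack([brain, blink])
W = np.linalg.inv(A)                          # stands in for an estimated unmixing matrix
eog = blink + 0.01 * np.random.default_rng(0).standard_normal(n)
bad = flag_artifactual_ics(W @ X, [eog])      # expected to flag the blink component
X_clean = remove_ics(X, W, bad)
```

Because each IC contributes the outer product of its mixing column and its activation, dropping a component removes exactly its contribution from every channel and leaves the others untouched.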

4 RESULTS AND DISCUSSION

For each subject, the sets of CIDT, CMODT, CNR

and ERR events were collected. The signal portions

corresponding to the 500 ms preceding the stimulus

presentation were also collected and considered as


Table 2: For each channel and each rhythm, the percentage of subjects showing a significant variation in PSD between the baseline and the CIDT set (p-values < 0.05). The highest percentages are found for the alpha rhythm at parietal electrodes (58.82% at P3).

Channel  Alpha (%)  Beta (%)  Gamma (%)
Fp1      41.18      41.18     11.76
AFz      35.29      23.53     11.76
Fp2      41.18      29.41     23.53
F7       41.18      41.18     23.53
F3       47.06      35.29     11.76
Fz       41.18      35.29     11.76
F4       52.94      29.41     17.65
F8       41.18      29.41     23.53
T7       35.29      41.18     17.65
C3       47.06      47.06     23.53
C4       35.29      17.65     23.53
T8       47.06      17.65     11.76
P7       41.18      52.94     23.53
P3       58.82      52.94     23.53
Pz       52.94      47.06     23.53
P4       52.94      41.18     11.76
P8       47.06      17.65     11.76
PO9      41.18      29.41     5.88
O1       35.29      35.29     11.76
Oz       35.29      35.29     17.65
O2       41.18      29.41     11.76
PO10     41.18      29.41     11.76

Table 3: For each channel and each rhythm, the percentage of subjects showing a significant variation in PSD between the baseline and the CMODT set (p-values < 0.05). More than 70% of subjects show a significant variation in the alpha rhythm at most frontal electrodes (all except F8).

Channel  Alpha (%)  Beta (%)  Gamma (%)
Fp1      70.59      52.94     17.65
AFz      76.47      47.06     11.76
Fp2      70.59      52.94     11.76
F7       70.59      41.18     23.53
F3       70.59      52.94     11.76
Fz       70.59      58.82     5.88
F4       70.59      52.94     17.65
F8       58.82      41.18     17.65
T7       58.82      41.18     11.76
C3       58.82      58.82     17.65
C4       41.18      23.53     11.76
T8       41.18      47.06     11.76
P7       47.06      41.18     11.76
P3       52.94      52.94     35.29
Pz       64.71      64.71     29.41
P4       47.06      41.18     29.41
P8       41.18      23.53     17.65
PO9      47.06      47.06     17.65
O1       47.06      35.29     17.65
Oz       52.94      41.18     29.41
O2       58.82      35.29     23.53
PO10     47.06      35.29     29.41

Table 4: For each channel and each rhythm, the percentage of subjects showing a significant variation in PSD between the baseline and the CNR set (p-values < 0.05). About 90% of subjects (88.24-94.12%) show a significant variation in the alpha rhythm at frontal electrodes.

Channel  Alpha (%)  Beta (%)  Gamma (%)
Fp1      94.12      64.71     35.29
AFz      94.12      70.59     23.53
Fp2      94.12      70.59     29.41
F7       88.24      58.82     29.41
F3       88.24      52.94     17.65
Fz       88.24      64.71     23.53
F4       88.24      64.71     35.29
F8       94.12      70.59     29.41
T7       82.35      70.59     35.29
C3       94.12      88.24     41.18
C4       64.71      47.06     23.53
T8       88.24      64.71     23.53
P7       58.82      58.82     29.41
P3       76.47      82.35     41.18
Pz       88.24      88.24     29.41
P4       70.59      64.71     23.53
P8       82.35      52.94     23.53
PO9      70.59      52.94     29.41
O1       76.47      58.82     35.29
Oz       76.47      64.71     29.41
O2       64.71      58.82     35.29
PO10     88.24      58.82     29.41

Table 5: For each channel and each rhythm, the percentage of subjects showing a significant variation in PSD between the baseline and the ERR set (p-values < 0.05). About 90% of subjects (82.35-94.12%) show a significant variation in the alpha rhythm at frontal electrodes, with similar values at Pz and P8 (88.24%); in the beta rhythm the percentages at the same frontal electrodes range from 64.71% to 82.35%, reaching 88.24% at P3.

Channel  Alpha (%)  Beta (%)  Gamma (%)
Fp1      94.12      70.59     17.65
AFz      88.24      64.71     23.53
Fp2      94.12      70.59     23.53
F7       94.12      76.47     35.29
F3       94.12      76.47     17.65
Fz       88.24      70.59     23.53
F4       82.35      64.71     35.29
F8       88.24      82.35     35.29
T7       100.00     82.35     41.18
C3       82.35      76.47     41.18
C4       64.71      52.94     23.53
T8       82.35      58.82     17.65
P7       58.82      64.71     35.29
P3       76.47      88.24     47.06
Pz       88.24      76.47     29.41
P4       76.47      64.71     29.41
P8       88.24      52.94     17.65
PO9      70.59      64.71     35.29
O1       58.82      52.94     35.29
Oz       58.82      47.06     41.18
O2       70.59      52.94     29.41
PO10     70.59      58.82     17.65


baseline. During this time interval the fixation point was shown. For each subject and each electrode, the PSD of the events of each set was estimated using Welch's method, and the PSD of the corresponding baselines was evaluated as well. A one-way ANalysis Of Variance (ANOVA) was then applied separately to each of the three rhythms of interest, i.e. alpha, beta and gamma, to determine significant variations in brain activity between the baseline and each of the four event types. This analysis was performed for each subject and electrode. Tables 2-5 report, for each electrode and rhythm, the percentage of subjects showing a significant variation in PSD (p-value < 0.05) for CIDT, CMODT, CNR and ERR events, respectively. These percentages were generally higher for the alpha and beta rhythms, in particular for CNR events (correct identification of a non-target stimulus) and erroneous answers (ERR), and were more evident at frontal and parietal electrodes. For CIDT events (correct identification of the neutral basic target stimulus), the variation of brain activity with respect to the baseline appears less evident.

5 CONCLUSIONS

Differences were found in the EEG patterns when recognizing neutral target faces versus faces modified with biologically plausible changes. In particular, brain activity changes were mainly found for the alpha and beta rhythms in the frontal and parietal areas. Having identified the variation of brain activity in a healthy population, the analysis of this database can serve as a baseline for further studies on people affected by congenital prosopagnosia or autism performing the same experiment. This preliminary analysis can be strengthened to better distinguish between the four types of events by taking into account more features to describe the EEG patterns, besides the PSD considered here. Moreover, to better compare results across subjects, proper data normalization has to be addressed, such as subtracting the average power recorded on the scalp or standardizing the sensor voltages with the z-score.
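The two normalizations mentioned above can be illustrated with NumPy. This is a sketch of possible future work, not an analysis performed in this study; the array layouts (channels first) and the function names are assumptions.

```python
import numpy as np


def subtract_scalp_average(power: np.ndarray) -> np.ndarray:
    """Remove the across-channel mean from band powers.

    power: array of shape (n_channels, ...), e.g. (n_channels, n_bands)
    """
    return power - power.mean(axis=0, keepdims=True)


def zscore_channels(data: np.ndarray) -> np.ndarray:
    """Standardize each channel over time to zero mean and unit variance.

    data: array of shape (n_channels, n_samples)
    """
    mean = data.mean(axis=-1, keepdims=True)
    std = data.std(axis=-1, keepdims=True)
    return (data - mean) / std


voltages = np.array([[1.0, 2.0, 3.0], [10.0, 20.0, 30.0]])  # toy data
z = zscore_channels(voltages)   # both rows become identical after standardization
```

The first option keeps relative differences between electrodes of the same subject, while the z-score removes per-channel scale differences, e.g. those due to electrode impedance.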

REFERENCES

Barragan-Jason, G., Cauchoix, M., and Barbeau, E. (2015). The neural speed of familiar face recognition. Neuropsychologia, 75:390–401.

Caharel, S., Collet, K., and Rossion, B. (2015). The early visual encoding of a face (N170) is viewpoint-dependent: a parametric ERP-adaptation study. Biological Psychology, 106:18–27.

Cevikalp, H. and Triggs, B. (2010). Face recognition based on image sets. In Computer Vision and Pattern Recognition (CVPR), 2010 IEEE Conference on, pages 2567–2573. IEEE.

Chaumon, M., Bishop, D. V., and Busch, N. A. (2015). A practical guide to the selection of independent components of the electroencephalogram for artifact correction. Journal of Neuroscience Methods, 250:47–63.

Comparetti, C., Ricciardelli, P., and Daini, R. (2011). Caratteristiche invarianti di un volto, espressioni emotive ed espressioni non emotive: una tripla dissociazione? Giornale Italiano di Psicologia, (1):215–224.

Daini, R., Comparetti, C. M., and Ricciardelli, P. (2014). Behavioral dissociation between emotional and non-emotional facial expressions in congenital prosopagnosia. Frontiers in Human Neuroscience, 8.

Delorme, A. and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1):9–21.

Dickter, C. L. and Kieffaber, P. D. (2013). EEG methods for the psychological sciences. Sage.

Itier, R. J. and Taylor, M. J. (2004). Face recognition memory and configural processing: a developmental ERP study using upright, inverted, and contrast-reversed faces. Journal of Cognitive Neuroscience, 16(3):487–502.

Jung, T.-P., Makeig, S., Humphries, C., Lee, T.-W., McKeown, M. J., Iragui, V., and Sejnowski, T. J. (2000). Removing electroencephalographic artifacts by blind source separation. Psychophysiology, 37(2):163–178.

Klados, M. A., Papadelis, C., Braun, C., and Bamidis, P. D. (2011). REG-ICA: a hybrid methodology combining blind source separation and regression techniques for the rejection of ocular artifacts. Biomedical Signal Processing and Control, 6(3):291–300.

Li, Y., Ma, S., Hu, Z., Chen, J., Su, G., and Dou, W. (2015). Single trial EEG classification applied to a face recognition experiment using different feature extraction methods. In Engineering in Medicine and Biology Society (EMBC), 2015 37th Annual International Conference of the IEEE, pages 7246–7249. IEEE.

Malaspina, M., Albonico, A., Daini, R., and Ricciardelli, P. (2014). Elaborazione delle espressioni facciali, emotive e non, in soggetti affetti da sindrome di Asperger o autismo ad alto funzionamento. Giornale Italiano di Psicologia, 41(3):573–590.

Özbeyaz, A. and Arıca, S. (2018). Familiar/unfamiliar face classification from EEG signals by utilizing pairwise distant channels and distinctive time interval. Signal, Image and Video Processing, pages 1–8.

Parketny, J., Towler, J., and Eimer, M. (2015). The activation of visual face memory and explicit face recognition are delayed in developmental prosopagnosia. Neuropsychologia, 75:538–547.

Radüntz, T., Scouten, J., Hochmuth, O., and Meffert, B. (2015). EEG artifact elimination by extraction of ICA-component features using image processing algorithms. Journal of Neuroscience Methods, 243:84–93.


Roy, R. N., Bonnet, S., Charbonnier, S., and Campagne, A. (2013). Mental fatigue and working memory load estimation: interaction and implications for EEG-based passive BCI. In Engineering in Medicine and Biology Society (EMBC), 2013 35th Annual International Conference of the IEEE, pages 6607–6610. IEEE.

Scharinger, C., Kammerer, Y., and Gerjets, P. (2015). Pupil dilation and EEG alpha frequency band power reveal load on executive functions for link-selection processes during text reading. PLoS ONE, 10(6):e0130608.

Sun, D., Chan, C. C., and Lee, T. M. (2012). Identification and classification of facial familiarity in directed lying: an ERP study. PLoS ONE, 7(2):e31250.

Winkler, I., Haufe, S., and Tangermann, M. (2011). Automatic classification of artifactual ICA-components for artifact removal in EEG signals. Behavioral and Brain Functions, 7(1):30.

Zhao, W., Chellappa, R., Phillips, P. J., and Rosenfeld, A. (2003). Face recognition: A literature survey. ACM Computing Surveys (CSUR), 35(4):399–458.
