A New Approach to Probabilistic Knowledge-Based Decision Making

Thomas C. Henderson¹ᵃ, Tessa Nishida¹, Amelia C. Lessen¹, Nicola Wernecke¹, Kutay Eken¹ and David Sacharny²

¹School of Computing, University of Utah, Salt Lake City, Utah, U.S.A.

²Blyncsy Inc, Salt Lake City, UT, U.S.A.

Keywords: Probabilistic Logic, Decision Making.

Abstract:

Autonomous agents interact with the world by processing percepts and taking actions in order to achieve a goal. We consider agents which account for uncertainty when evaluating the state of the world, determine a high-level goal based on this analysis, and then select an appropriate plan to achieve that goal. Such knowledge-based agents must take into account facts which are always true (e.g., laws of nature or rules) and facts which have some amount of uncertainty. This leads to probabilistic logic agents which maintain a knowledge base of facts, each with an associated probability. We have previously described NILS, a nonlinear systems approach to solving for atom probabilities, and here we compare it to a hand-coded probability algorithm and to a Monte Carlo method based on sampling possible worlds. We provide experimental data comparing the performance of these approaches in terms of successful outcomes in playing Wumpus World. The major contribution is the demonstration that the NILS method performs better than the human-coded algorithm and is comparable to the Monte Carlo method. This advances the state of the art in that NILS has been shown to have super-quadratic convergence rates.

1 INTRODUCTION

Knowledge-based agents generally exhibit brittle behavior when propositions can only be true or false. For example, Casado et al. (Casado et al., 2011) used a knowledge-based approach to handle event recognition in multi-agent systems without considering uncertainty. More nuanced and informed decision making is possible when the uncertainty of a proposition can be characterized and included in the evaluation of the current state in order to select an action. Wang et al. (Wang et al., 2006) extend Hindriks et al.'s logic programming language for Belief, Desire, Intention (BDI) agents (Hindriks et al., 1997) by incorporating an interval-based uncertainty representation for the language. They define a probabilistic conjunction strategy, which satisfies the axioms of probability theory, to update the intervals based on the probabilities of random variables. However, capturing all necessary relations between the atoms requires an exponential number of constraints (i.e., $\sum_{k=1}^{n} \binom{n}{k} = 2^n - 1$, where $n$ is the number of logical atoms). Dix et al. (Dix et al., 2000) provide two broad classes of semantics for probabilistic agents. The drawback is that their analysis only applies to negation-free programs, which limits its usefulness here. As another example, consider Milch and Koller (Milch and Koller, 2000), whose probabilistic epistemic logic (PEL) provides a formal semantics for probabilistic beliefs. However, PEL is based on Bayesian networks, which require the definition of the full joint probability distribution. Other approaches to probabilistic logic have been proposed. Pearl (Pearl, 1988) developed Bayesian networks, which structure the full joint probability distribution as conditional relations between the logical variables. Reiter (Reiter, 2001) extended the situation calculus of McCarthy to include probabilities, and Domingos and Lowd (Domingos and Lowd, 2009) applied Markov Logic Networks to relational problems in artificial intelligence. All these methods have high computational complexity (e.g., expressing a Bayesian network requires representing the $2^n$ complete conjunctions in the network's conditional tables, and MLN inference is #P-complete). Moreover, none of these methods exploits the probabilistic logical framework advocated here, wherein the agent's decision-making processes are based on a novel probabilistic analysis of the world in terms of its laws (rules) and sensory data.

ᵃ https://orcid.org/0000-0002-0792-3882

Henderson, T., Nishida, T., Lessen, A., Wernecke, N., Eken, K. and Sacharny, D.
A New Approach to Probabilistic Knowledge-Based Decision Making.
DOI: 10.5220/0011606700003393
In Proceedings of the 15th International Conference on Agents and Artificial Intelligence (ICAART 2023) - Volume 3, pages 34-39
ISBN: 978-989-758-623-1; ISSN: 2184-433X
Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

The approach proposed here (called NILS: Nonlinear Logic Solver) solves the probabilistic sentence satisfiability problem (see (Henderson et al., 2020) for details). This allows the atom probabilities to be estimated from all a priori knowledge as well as from knowledge acquired from sensors during the execution of a task. The method is described in detail below, along with the results of its application to the Wumpus World problem (for more on Wumpus World, see (Russell and Norvig, 2009; Yob, 1975)).

1.1 Probabilistic Logic

The agents considered here use a probabilistic logic

representation of knowledge (Nilsson, 1986). The

agent’s knowledge base is a set of propositions (or

beliefs) expressed in conjunctive normal form (CNF);

i.e.:

$$KB \equiv C_1 \wedge C_2 \wedge \dots \wedge C_m$$

where

$$C_i \equiv L_{i,1} \vee L_{i,2} \vee \dots \vee L_{i,k_i}$$

and

$$L_{i,j} \equiv a_p \ \text{or} \ \neg a_p$$

where $a_p$ is a logical atom. In addition, a probability, $p_i$, is associated with each clause ($C_i$). The agent uses the knowledge by selecting a goal (i.e., a belief which is to be made true) based on the current assessment of the situation. This involves assigning probabilities to the beliefs, and then making a rational decision based on these belief probabilities (e.g., to avoid danger or to achieve a reward).
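As an illustration only, a CNF knowledge base with clause probabilities can be held as a list of clauses. Each clause is a tuple of signed integers paired with its probability $p_i$. This follows a DIMACS-style convention that we assume here: `+p` stands for $a_p$ and `-p` for $\neg a_p$. The names `Clause`, `clause_true` and `kb_true` are hypothetical and are not part of NILS.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Clause:
    """One CNF clause C_i = L_i1 ∨ ... ∨ L_ik together with its probability p_i."""
    literals: Tuple[int, ...]  # +p encodes atom a_p, -p encodes ¬a_p
    prob: float                # p_i = Pr(C_i)


def clause_true(clause: Clause, world: Dict[int, bool]) -> bool:
    """A disjunction is true if at least one of its literals is true in `world`."""
    return any(world[abs(lit)] == (lit > 0) for lit in clause.literals)


def kb_true(kb: List[Clause], world: Dict[int, bool]) -> bool:
    """A CNF knowledge base is true if every one of its clauses is true."""
    return all(clause_true(c, world) for c in kb)


# The example sentence S of this section: C1 = a1, C2 = ¬a1 ∨ a2, each with probability 0.7.
S = [Clause((1,), 0.7), Clause((-1, 2), 0.7)]
print(kb_true(S, {1: True, 2: True}))   # True
print(kb_true(S, {1: True, 2: False}))  # False: C2 is violated
```

Attaching the probability to the clause rather than to individual atoms mirrors the definition above. The atom probabilities are the unknowns to be solved for.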

In order to reason using probabilities, the probabilities must be determined within a valid framework. For this we must solve the probabilistic satisfiability problem (Georgakopoulos et al., 1988), which is NP-hard. Probabilistic satisfiability means that there is a function $\pi : \Omega \rightarrow [0, 1]$, where $\Omega$ is the set of complete conjunctions over $n$ variables, such that:

$$\pi(\omega) \in [0, 1], \quad \forall \omega \in \Omega$$

$$\sum_{\omega \in \Omega} \pi(\omega) = 1$$

$$p_i = \Pr(C_i) = \sum_{\omega \models C_i} \pi(\omega), \quad i = 1, \dots, m$$

where the complete conjunctions are the set of all truth value assignments over $n$ variables, and $\omega \models C_i$ means that the truth assignment $\omega$ makes $C_i$ true. The probabilistic satisfiability problem is to determine whether such a function $\pi$ exists.
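When $n$ is small, the three conditions can be checked directly by enumerating all $2^n$ complete conjunctions. The sketch below is a brute-force checker written for illustration; its function names are hypothetical. It verifies a given $\pi$ but does not search for one, and its cost grows exponentially in $n$, so it is only practical for very small examples.

```python
from itertools import product


def worlds(n):
    """All 2**n complete conjunctions, as tuples (truth of a_1, ..., truth of a_n)."""
    return list(product((False, True), repeat=n))


def satisfies(world, clause):
    """omega |= C_i: some literal is true (+p means a_p, -p means ¬a_p, 1-based)."""
    return any(world[abs(lit) - 1] == (lit > 0) for lit in clause)


def is_psat_solution(pi, clauses, probs, n, tol=1e-9):
    """Check the PSAT conditions for a candidate pi (dict: world -> probability mass)."""
    ws = worlds(n)
    masses = [pi.get(w, 0.0) for w in ws]
    if any(m < 0.0 or m > 1.0 for m in masses):   # pi(omega) in [0, 1]
        return False
    if abs(sum(masses) - 1.0) > tol:               # masses sum to 1
        return False
    for clause, p in zip(clauses, probs):          # Pr(C_i) = p_i
        mass = sum(pi.get(w, 0.0) for w in ws if satisfies(w, clause))
        if abs(mass - p) > tol:
            return False
    return True


# The distribution pi(a1, a2) from the example of sentence S given in this section.
example_pi = {(False, False): 0.2, (False, True): 0.1,
              (True, False): 0.3, (True, True): 0.4}
print(is_psat_solution(example_pi, [(1,), (-1, 2)], [0.7, 0.7], n=2))  # True
```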

We have provided an analysis of this problem and given the NILS method for its (approximate) solution (Henderson et al., 2020). This involves converting each clause to a nonlinear equation relating the probability of the clause to the probabilities of the atoms in the clause. A solution is then found for the atom probabilities that best satisfies the definition of the function $\pi$. Because a nonlinear solver is used, the method finds the best (local) solution and not necessarily an exact one. NILS works as follows:

• Convert each CNF clause, $C_i$, with probability $p_i$, to an equation using the general addition rule of probability: $\Pr(A \vee B) = \Pr(A) + \Pr(B) - \Pr(A \wedge B)$

• Solve the system. This is nonlinear if the variables are independent, or linear over new variables if they are not independent. Writing $x_1 = \Pr(A)$ and $x_2 = \Pr(B)$:

– independent: $\Pr(A \vee B) = \Pr(A) + \Pr(B) - \Pr(A)\Pr(B)$, which leads to $p_i = x_1 + x_2 - x_1 x_2$

– not independent: $\Pr(A \vee B) = \Pr(A) + \Pr(B) - \Pr(A \wedge B)$, which leads to $p_i = x_1 + x_2 - x_3$, where $x_3 = \Pr(A \wedge B)$ is a new variable
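Under the independence assumption, repeatedly applying the addition rule to a clause with $k$ literals gives $\Pr(L_1 \vee \dots \vee L_k) = 1 - \prod_j (1 - \Pr(L_j))$. For two literals this reduces to $x_1 + x_2 - x_1 x_2$. The sketch below builds one such clause equation as a residual $f_i(x) = \Pr(C_i) - p_i$. It assumes each atom occurs at most once per clause. The helper names are hypothetical, and we have not confirmed that NILS forms equations for longer clauses in exactly this way.

```python
from math import prod


def literal_prob(lit, x):
    """Pr of a literal: x[p] for a_p, 1 - x[p] for ¬a_p (x maps atom index -> Pr)."""
    return x[abs(lit)] if lit > 0 else 1.0 - x[abs(lit)]


def clause_prob_independent(literals, x):
    """Pr(L_1 ∨ ... ∨ L_k) for independent literals: 1 - prod(1 - Pr(L_j))."""
    return 1.0 - prod(1.0 - literal_prob(lit, x) for lit in literals)


def clause_residual(literals, p_i, x):
    """Equation for clause C_i written as f_i(x) = Pr(C_i) - p_i, to be driven to 0."""
    return clause_prob_independent(literals, x) - p_i


x = {1: 0.5, 2: 0.4}
print(clause_prob_independent((1, 2), x))  # approximately 0.7 = x1 + x2 - x1*x2
```

A solver then searches for atom probabilities $x$ that make all residuals (approximately) zero.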

For a simple example, consider the CNF sentence $S$ given by:

• $C_1 = a_1$ [$\Pr(C_1) = 0.7$]

• $C_2 = \neg a_1 \vee a_2$ [$\Pr(C_2) = 0.7$]

A solution for this, writing $\pi(a_1, a_2)$ for the probability of each truth assignment to $(a_1, a_2)$, is:

• π(0, 0) = 0.2

• π(0, 1) = 0.1

• π(1, 0) = 0.3

• π(1, 1) = 0.4

Note that $\Pr(a_1) = \pi(1,0) + \pi(1,1) = 0.3 + 0.4 = 0.7$, and $\Pr(\neg a_1 \vee a_2) = \pi(0,0) + \pi(0,1) + \pi(1,1) = 0.2 + 0.1 + 0.4 = 0.7$. Nilsson (Nilsson, 1986) shows that the solution for $\pi$ is not unique, and that $\Pr(a_2) \in [0.4, 0.7]$ for the PSAT solutions.
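For this small example, the quoted interval can be recovered by solving for the entries of $\pi$ directly. The derivation below is our own reconstruction of that step from the three PSAT conditions, not Nilsson's presentation:

```latex
\begin{aligned}
\Pr(C_2) = 0.7 \;&\Rightarrow\; \pi(1,0) = 1 - 0.7 = 0.3
  && \text{($C_2$ is false only when $a_1 = 1, a_2 = 0$)}\\
\Pr(a_1) = \pi(1,0) + \pi(1,1) = 0.7 \;&\Rightarrow\; \pi(1,1) = 0.4\\
\textstyle\sum_{\omega} \pi(\omega) = 1 \;&\Rightarrow\; \pi(0,0) + \pi(0,1) = 0.3\\
\Pr(a_2) = \pi(0,1) + \pi(1,1) = \pi(0,1) + 0.4,\;\; 0 \le \pi(0,1) \le 0.3
  \;&\Rightarrow\; \Pr(a_2) \in [0.4,\, 0.7]
\end{aligned}
```

The particular solution listed above corresponds to $\pi(0,1) = 0.1$, which gives $\Pr(a_2) = 0.5$.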

Solving as a nonlinear system:

$$0.7 = \Pr(a_1)$$

$$\begin{aligned}
0.7 = \Pr(\neg a_1 \vee a_2) &= \Pr(\neg a_1) + \Pr(a_2) - \Pr(\neg a_1 \wedge a_2)\\
&= (1 - \Pr(a_1)) + \Pr(a_2) - (1 - \Pr(a_1))\Pr(a_2)\\
&= 0.3 + \Pr(a_2) - 0.3\Pr(a_2)
\end{aligned}$$

So $0.7\Pr(a_2) = 0.4$, and $\Pr(a_2) = 4/7 \approx 0.571$. Note that the logical variables are assumed independent; that is, $\Pr(A \wedge B) = \Pr(A)\Pr(B)$. We have also described a method for the case when they are not independent (see (Henderson et al., 2020)).
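The solver used by NILS is not specified here. As an assumption, the sketch below applies plain Newton's method to the two clause equations of $S$, with $x_1 = \Pr(a_1)$ and $x_2 = \Pr(a_2)$, and solves each 2×2 linear step by Cramer's rule. It illustrates solving for atom probabilities; it is not the NILS implementation.

```python
def solve_example(x1=0.5, x2=0.5, tol=1e-12, max_iter=50):
    """Newton's method on the clause equations of S (independence assumed).

    f1(x) = x1 - 0.7                               (C1 = a1)
    f2(x) = (1 - x1) + x2 - (1 - x1) * x2 - 0.7    (C2 = ¬a1 ∨ a2)
    """
    for _ in range(max_iter):
        f1 = x1 - 0.7
        f2 = (1 - x1) + x2 - (1 - x1) * x2 - 0.7
        if abs(f1) < tol and abs(f2) < tol:
            break
        # Jacobian of (f1, f2) with respect to (x1, x2).
        j11, j12 = 1.0, 0.0
        j21, j22 = -1.0 + x2, x1
        det = j11 * j22 - j12 * j21
        # Solve J @ (d1, d2) = -(f1, f2) by Cramer's rule, then take the Newton step.
        d1 = (-f1 * j22 + f2 * j12) / det
        d2 = (-f2 * j11 + f1 * j21) / det
        x1, x2 = x1 + d1, x2 + d2
    return x1, x2


x1, x2 = solve_example()
print(round(x2, 3))  # 0.571
```

Starting from (0.5, 0.5), the iteration reaches the hand-derived solution $\Pr(a_2) = 4/7$ in a few steps.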


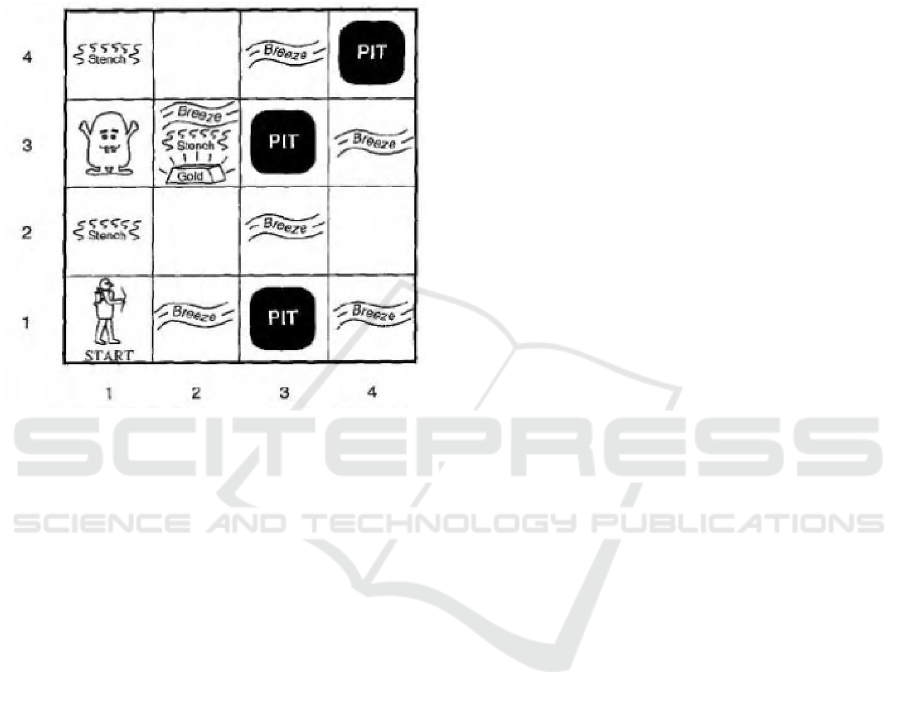

1.2 Test Domain: Wumpus World

Wumpus World is described in the AI text of Russell and Norvig (Russell and Norvig, 2009); however, it was originally developed by G. Yob (Yob, 1975). It is a game defined on a 4x4 board (see Figure 1). The cells are defined by their (x, y) centers, with the origin in the lower left; the x-axis is horizontal and the y-axis is vertical. The agent starts in cell (1, 1) and tries to find the gold (in this instance located in cell (2, 3)) while avoiding pits (cells (3, 1), (3, 3) and (4, 4)) and the Wumpus (cell (1, 3)).

Figure 1: Wumpus World Layout (Russell and Norvig, 2009).

The agent has the following percepts:

• Breeze: indicates there is a pit in a neighboring cell

• Stench: indicates there is a Wumpus in a neighboring cell

• Glitter: indicates gold in the current cell

• Bump: indicates running into a wall (after a Forward command; the agent stays in its current cell)

• Scream: indicates that the arrow (shot by the agent) killed the Wumpus

There are six actions available to the agent:

• Forward: move forward one cell (the agent has a direction)

• Rotate Right: rotate direction 90 degrees to the right

• Rotate Left: rotate direction 90 degrees to the left

• Grab: grab the gold (if in the current cell)

• Shoot arrow: shoot the arrow in the direction the agent is facing (there is only one arrow)

• Climb: climb out of the cave (only applies in cell (1, 1))

There are a number of rules in the game; for example:

• Cells neighboring a pit have a breeze

• Cells neighboring the Wumpus have a stench

• There is one and only one Wumpus

• There is gold in one and only one cell

• If the agent moves into a cell with a pit or the Wumpus, the agent dies.

• Pits occur in each cell (except (1, 1)) with a fixed probability; here we use 0.2.

The rules of Wumpus World must be formulated as a CNF sentence. As a starting point, the set of atoms is defined as follows; for each cell (x, y):

• Bxy indicates a breeze in (x, y)

• Gxy indicates gold in (x, y)

• Pxy indicates a pit in (x, y)

• Sxy indicates a stench in (x, y)

• Wxy indicates the Wumpus in (x, y).

Since there is only one Wumpus, there are rules stating that if the Wumpus is in a given cell, then it is not in any other; e.g., W23 → ¬W22. Since CNF does not use the implication operator, this is written as ¬W23 ∨ ¬W22. Since the Wumpus must be somewhere, there is a rule:

W21 ∨ W31 ∨ . . . ∨ W44

Note that the Wumpus is not allowed in cell (1, 1). There are also rules stating that a pit and the Wumpus may not be in the same cell. The number of atoms is then 80 (i.e., 5 × 16), and the rules give rise to 402 clauses in the CNF KB. Note that the state of neighboring cells (e.g., pit or no pit) requires probabilistic reasoning, since multiple models can satisfy the percepts.
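As a hypothetical sketch of how such rules become clauses, the code below numbers the 80 atoms and encodes only the Wumpus placement rules quoted in this paragraph: no Wumpus in (1, 1), at least one Wumpus, at most one Wumpus, and no pit and Wumpus in the same cell. It omits the breeze, stench and gold rules, and it does not attempt to reproduce the authors' 402-clause KB. The names `ATOM`, `neg` and `wumpus_clauses` are our own.

```python
from itertools import combinations

CELLS = [(x, y) for x in range(1, 5) for y in range(1, 5)]
KINDS = ("B", "G", "P", "S", "W")

# Number the 5 x 16 = 80 atoms (e.g. "W23") as 1..80 so clauses can use signed integers.
ATOM = {f"{k}{x}{y}": i + 1
        for i, (k, (x, y)) in enumerate((k, c) for k in KINDS for c in CELLS)}


def neg(name):
    """Literal ¬name as a negative integer."""
    return -ATOM[name]


def wumpus_clauses():
    """Hypothetical CNF encoding of the Wumpus placement rules only."""
    allowed = [f"W{x}{y}" for (x, y) in CELLS if (x, y) != (1, 1)]
    clauses = [(neg("W11"),)]                          # no Wumpus in the start cell
    clauses.append(tuple(ATOM[w] for w in allowed))    # W21 ∨ W31 ∨ ... ∨ W44
    for w1, w2 in combinations(allowed, 2):            # Wa -> ¬Wb written as ¬Wa ∨ ¬Wb
        clauses.append((neg(w1), neg(w2)))
    for x, y in CELLS:                                 # no pit and Wumpus in one cell
        clauses.append((neg(f"P{x}{y}"), neg(f"W{x}{y}")))
    return clauses


print(len(ATOM), len(wumpus_clauses()))  # 80 123
```

The 105 pairwise "at most one" clauses show why even a small board produces hundreds of clauses.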

To show the power of the NILS method, consider estimating the a priori atom probabilities given just the rules of the game. These probabilities can be estimated using Monte Carlo by sampling a large number of boards and finding the likelihood of each atom. NILS can also provide an estimate. Figure 2 shows the two sets of probabilities, and it can be seen that NILS provides a very good estimate. Moreover, what matters is that safer cells be distinguished from less safe ones, and even with the differences in exact values, the order relations of the probabilities are preserved.
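The Monte Carlo estimate can be sketched as follows, under a board model that is our own assumption. Pits are placed independently with probability 0.2 outside (1, 1). One Wumpus is placed uniformly outside (1, 1), and boards that put it in a pit are redrawn. Gold is ignored. The optional `consistent` filter is a hypothetical hook showing how boards could also be restricted to those agreeing with observed percepts, as in the Monte Carlo agent described later. A very selective filter makes the rejection loop slow.

```python
import random

CELLS = [(x, y) for x in range(1, 5) for y in range(1, 5)]
NON_START = [c for c in CELLS if c != (1, 1)]


def neighbors(cell):
    """Orthogonally adjacent cells on the 4x4 board."""
    x, y = cell
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    return [(x + dx, y + dy) for dx, dy in steps
            if 1 <= x + dx <= 4 and 1 <= y + dy <= 4]


def sample_board(rng, pit_prob=0.2):
    """Pits i.i.d. outside (1,1); one Wumpus uniformly outside (1,1).

    Assumption: boards with the Wumpus in a pit are rejected and redrawn.
    """
    while True:
        pits = {c for c in NON_START if rng.random() < pit_prob}
        wumpus = rng.choice(NON_START)
        if wumpus not in pits:
            return pits, wumpus


def estimate(num_samples=20000, seed=0, consistent=lambda pits, wumpus: True):
    """Monte Carlo estimates of Pr(Pxy), Pr(Wxy) and Pr(Bxy) for every cell.

    `consistent` keeps only boards agreeing with observations; the default
    accepts every board, which gives a priori estimates.
    """
    rng = random.Random(seed)
    counts = {(kind, c): 0 for kind in "PWB" for c in CELLS}
    kept = 0
    while kept < num_samples:
        pits, wumpus = sample_board(rng)
        if not consistent(pits, wumpus):
            continue
        kept += 1
        for c in CELLS:
            counts[("P", c)] += c in pits
            counts[("W", c)] += c == wumpus
            counts[("B", c)] += any(n in pits for n in neighbors(c))
    return {key: n / kept for key, n in counts.items()}


probs = estimate()
print(round(probs[("P", (4, 4))], 2), round(probs[("W", (4, 4))], 2))
```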

ICAART 2023 - 15th International Conference on Agents and Artificial Intelligence


Figure 2: The A Priori Atom Probabilities found by Monte Carlo sampling and the NILS method.

Performance in Wumpus World is measured by a point system wherein:

• Taking an action costs 1 point (-1), except shooting the arrow, which costs 10 points (-10)

• Dying costs 1000 points (-1000)

• Escaping with the gold earns a reward of 1000 points

The higher the score, the better the performance. A score greater than zero is deemed a success.
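The point system translates directly into a small scoring function. In the sketch below the action names are hypothetical string labels, and the function only totals points for a finished game.

```python
# Action names are hypothetical labels; only "Shoot" is priced differently.
ACTION_COST = {"Shoot": -10}
DEFAULT_ACTION_COST = -1


def score(actions, died=False, escaped_with_gold=False):
    """Total points for one game under the point system above."""
    total = sum(ACTION_COST.get(a, DEFAULT_ACTION_COST) for a in actions)
    if died:
        total -= 1000
    if escaped_with_gold:
        total += 1000
    return total


def is_success(total):
    """A game counts as a success when its score is greater than zero."""
    return total > 0


game = ["Forward"] * 4 + ["Grab", "RotateLeft", "RotateLeft"] + ["Forward"] * 4 + ["Climb"]
print(score(game, escaped_with_gold=True))  # 1000 - 12 = 988
```

Because death costs 1000 and escaping with the gold earns 1000, any game with a positive score is one in which the agent escaped with the gold.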

1.3 Problem Statement and Experimental Methodology

The hypothesis is that it is possible to develop autonomous agents that:

• represent knowledge of the world as logical propositions, both universal laws and temporal variables (fluents),

• assign probabilities to those propositions,

• use a consistent formal framework to make inferences which allow informed rational actions to be taken, and

• achieve a strong level of performance.

Cognitive-level knowledge forms the core of the knowledge base, and goals are formulated as beliefs to be made true. The agent's task is to organize the goals in a reasonable manner and select appropriate plans which, when executed, will make the goal belief true.

To restrict the study to comparing only the way in which probabilities are produced, a common agent algorithm was developed; its logic is shown in Figure 3. This allows alternative methods to be used to provide the atom probabilities used by the agent in its decision-making process. That is, differences in behavior can arise only from differences in atom probabilities. Note that the most important probabilities concern whether the Wumpus or a pit is present in a cell.

Figure 3: The Agent Behavior Algorithm.

Three mechanisms for computing atom probabilities are considered: (1) a hand-coded method based on a human understanding of the game, (2) a Monte Carlo method which samples a set of boards that satisfy the known conditions and computes probabilities from those boards, and (3) the NILS method. The Monte Carlo method serves as an approximation to the ground truth. It would produce the exact probabilities if the samples included all satisfying boards; since there are 1,105,920 possible boards to filter at each move, this option is not exploited. The comparison therefore shows how well the NILS method performs relative to a human-based method as well as relative to the best possible result.

2 EXPERIMENTS

Ten sets of 1000 random solvable boards were produced; that is, for each board there exists a pit-free path from the start cell to the gold. The agent's overall strategy is to move to the closest cell with the lowest probability of danger. The agent then goes there and either dies, finds the gold and escapes, or continues searching. The number of successful games is used as the measure of success. Table 1 gives the number of boards solved by each probability method for each of the ten sets of 1000 boards.
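The cell-selection step of this strategy can be sketched under assumptions of our own. Danger is measured as Pr(Pxy) + Pr(Wxy); this is exact when a pit and the Wumpus cannot share a cell, because the two events are then disjoint. Ties in danger are broken by Manhattan distance from the agent. The function names are hypothetical, and planning a safe path to the chosen cell is not shown.

```python
def manhattan(a, b):
    """Grid distance between two (x, y) cells."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def choose_next_cell(agent, candidates, p_pit, p_wumpus):
    """Lowest Pr(danger) first, then the cell closest to the agent."""
    def key(cell):
        # A cell cannot hold both a pit and the Wumpus, so the probabilities add.
        danger = p_pit[cell] + p_wumpus[cell]
        return (danger, manhattan(agent, cell))
    return min(candidates, key=key)


p_pit = {(2, 1): 0.3, (1, 2): 0.1, (3, 2): 0.1}
p_wumpus = {(2, 1): 0.0, (1, 2): 0.0, (3, 2): 0.0}
print(choose_next_cell((1, 1), [(2, 1), (1, 2), (3, 2)], p_pit, p_wumpus))  # (1, 2)
```

The atom probabilities fed into `p_pit` and `p_wumpus` are exactly what differs between the hand-coded, Monte Carlo and NILS agents.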

2.1 Discussion

The question posed here is whether the probabilities produced by NILS lead to a higher rate of success than the human-defined probability algorithm, and how well NILS performs compared to the Monte Carlo approximation. As Table 1 shows, NILS averaged about twelve more successes per 1000 boards than the human algorithm, and was outperformed by it (by three successes) in only one of the test sets. Moreover, the 95% confidence intervals of the two methods do not overlap. With respect to the Monte Carlo method, NILS averaged 7.7 fewer successes per thousand (617.2 versus 624.9), but outperformed it in two of the trial sets. The confidence intervals of these two methods do overlap.

Table 1: Results of Performance Test of Agents. There are 10 trial sets, each consisting of 1000 solvable boards. The mean number of successes over these 10 sets is given, as well as the variance. The 95% confidence intervals are [599.7, 610.2], [612.0, 622.4], and [612.0, 628.8] for Human, NILS, and Monte Carlo, respectively. [Note that the Monte Carlo success counts are averages over 10 independent trials on each board test set.]

Board Set   Human   NILS    Monte Carlo
1           590     609     613.3
2           610     620     626.1
3           590     608     617.9
4           610     619     628.5
5           607     604     619.3
6           597     620     619.7
7           611     630     627.6
8           614     626     631.0
9           611     626     638.2
10          609     610     623.4
Mean        604.9   617.2   624.9
Var         81.9    79.5    44.8
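The Human and NILS summary rows of Table 1 can be recomputed from the per-set counts with Python's standard `statistics` module. The reported variances match the sample variance, which uses an n - 1 denominator. The sketch also provides a hypothetical helper for an interval m ± t·√(v/n). It leaves the critical value `t_crit` as a parameter, since the text does not state which value was used.

```python
from math import sqrt
from statistics import mean, variance

HUMAN = [590, 610, 590, 610, 607, 597, 611, 614, 611, 609]
NILS = [609, 620, 608, 619, 604, 620, 630, 626, 626, 610]


def interval(m, v, n, t_crit):
    """Interval m ± t_crit * sqrt(v / n); choose t_crit for the intended coverage."""
    half_width = t_crit * sqrt(v / n)
    return m - half_width, m + half_width


for name, counts in (("Human", HUMAN), ("NILS", NILS)):
    # statistics.variance is the sample variance (denominator n - 1).
    print(name, round(mean(counts), 1), round(variance(counts), 1))
# Human 604.9 81.9
# NILS 617.2 79.5
```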

The results support the claim that NILS is better than the human probability algorithm and comparable to the Monte Carlo method. Examination of specific cases showed that the success of NILS over the human algorithm was mainly due to the encoding of the Wumpus World rules into the knowledge base. This encoding provided an implicit influence on the probabilities (i.e., implicit conditional probabilities) which the human-designed algorithm failed to capture. When NILS outperformed Monte Carlo, this was seen to be related to the selection of sample boards by the Monte Carlo method. To control for this, Monte Carlo performance is given in terms of statistical measurements (mean and variance) over a set of ten independent trials per board set test case. It may be possible to improve Monte Carlo performance by increasing the number of samples, but computational costs rise rapidly. Each sample board must fit the current sensed data constraints, so a larger set of random boards must be examined to obtain the desired sample set.

3 CONCLUSIONS

We have demonstrated the viability of the nonlinear logic solver (NILS) system as the basis for probabilistic logic agents. Moreover, the method is superior to hand-coded probability functions for the same application domain, and comparable to the Monte Carlo agent, which operates with more detailed information about the game.

In future work, we intend to investigate the application of probabilistic decision making in terms of:

• deeper cognitive representations for the agent using a Belief, Desire, Intention (BDI) architecture,

• larger problem domains with multiple agents,

• knowledge compilation for individual agents cooperating in a team effort in order to provide them with just the information they need, and

• application to large-scale unmanned aircraft systems traffic management (UTM).

ACKNOWLEDGEMENTS

This work was supported in part by National Science

Foundation award 2152454.

REFERENCES

Casado, A., Martinez-Tomas, R., and Fernandez-Caballero, A. (2011). Multi-agent System for Knowledge-Based Event Recognition and Composition. Expert Systems, 28(5):488–501.

Dix, J., Nanni, M., and Subrahmanian, V. (2000). Probabilistic Agent Programs. ACM Transactions on Computational Logic, 1(2):208–246.

Domingos, P. and Lowd, D. (2009). Markov Logic Networks. Morgan and Claypool Publishers, Williston, VT.

Georgakopoulos, G., Kavvadias, D., and Papadimitriou, C. (1988). Probabilistic Satisfiability. Journal of Complexity, 4:1–11.

Henderson, T., Simmons, R., Serbinowski, B., Cline, M., Sacharny, D., Fan, X., and Mitiche, A. (2020). Probabilistic Sentence Satisfiability: An Approach to PSAT. Artificial Intelligence, 278:71–87.

Hindriks, K., de Boer, F., van der Hoek, W., and Meyer, J. (1997). Formal Semantics of an Abstract Agent Programming Language. In Proceedings of the International Workshop on Agent Theories, Architectures and Languages, pages 215–229. Springer Verlag.

Milch, B. and Koller, D. (2000). Probabilistic Models for Agents' Beliefs and Decisions. In Uncertainty in Artificial Intelligence Proceedings, pages 389–396. Association for Computing Machinery.

Nilsson, N. (1986). Probabilistic Logic. Artificial Intelligence Journal, 28:71–87.

Pearl, J. (1988). Probabilistic Reasoning in Intelligent Systems. Morgan Kaufmann, San Mateo, CA.

Reiter, R. (2001). Knowledge in Action: Logical Foundations for Specifying and Implementing Dynamical Systems. MIT Press, Cambridge, MA.


Russell, S. and Norvig, P. (2009). Artificial Intelligence: A Modern Approach. Prentice Hall Press, Upper Saddle River, NJ, 3rd edition.

Wang, J., Ju, S.-E., and Liu, C.-H. (2006). Agent-Oriented Probabilistic Logic Programming. Journal of Computational Science and Technology, 21(3):412–417.

Yob, G. (1975). Hunt the Wumpus? Creative Computing.
