HMMs Recursive Parameters Estimation for Semi-Bounded Data

Modeling: Application to Occupancy Estimation in Smart Buildings

Fatemeh Rezapoor Nikroo, Manar Amayri and Nizar Bouguila

CIISE, Concordia University, Montreal, Canada

Keywords:

Hidden Markov Models, Recursive Estimation, Semi-Bounded Data, Smart Buildings, Occupancy Estimation.

Abstract:

Optimizing energy consumption is one of the key factors in smart buildings developments. It is crucial to

estimate the number of occupants and detect their presence when it comes to energy saving in smart buildings.

In this paper, we propose a Hidden Markov Models (HMM)-based approach to estimate and detect the occu-

pancy status in smart buildings. In order to dynamically estimate the occupancy level, we develop a recursive

estimation algorithm. The developed models are evaluated using two different real data sets.

1 INTRODUCTION

The daily increase in energy consumption has led to

global warming. Global energy demand has continu-

ously increased while building sector has had a ma-

jor effect in this rapid growth in energy consump-

tion (Kim et al., 2022). In this context, smart build-

ings promise automated systems to control energy

consumption. Environmental control systems have

been proved as a crucial factor in smart buildings.

Another decisive factor in energy consumption con-

cerns the occupants themselves (Gaetani et al., 2016).

Automatic occupancy detection and estimation ap-

proaches allow building energy systems to manage

the energy consumed. While 35% of USA’s en-

ergy consumption is attributed to the heating, cooling

and ventilation (HVAC) systems (Ali and Bouguila,

2022; Erickson et al., 2014), occupants’ behavior has

also a major influence on building energy consump-

tion (Soltanaghaei and Whitehouse, 2016). Studies

have shown that machine learning algorithms are cru-

cial for smart buildings applications (Alawadi et al.,

2020). For instance, machine learning models have

been used to measure HVAC actuation levels (Eba-

dat et al., 2013). Furthermore, a supervised learning

model has been developed in (Amayri et al., 2016) to

estimate the number of occupants based on sensorial

data (e.g. motion detection, power consumption, CO2

concentration sensors, microphone, or door/window

positions).

Machine learning approaches can be grouped into

3 main categories: 1) generative models such as

mixture models and HMMs, 2) Discriminative mod-

els such as support vector machine (SVM) and 3)

Heuristic-based models which combine the 2 previ-

ous families with heuristic information. In this pa-

per we propose HMM-based occupancy models. A

first crucial factor when deploying a HMM model is

the choice of probability density function (Nguyen

et al., 2019). Thus, we investigate 3 distributions

dedicated for semi-bounded data (i.e. positive vec-

tors) which are detailed in section 2. The second

important factor is to estimate the unknown param-

eters which is generally done using maximum likeli-

hood estimation (MLE) within the expectation maxi-

mization (EM) framework. Handling real-time data of

smart buildings requires continuous processing which

is challenging. Therefore, one of the motivations of

this paper is to propose a novel architecture to cope

with real-time data. Online learning techniques pro-

vide solutions addressing real-time occupancy esti-

mation to build models that can be continuously up-

dated (Amayri et al., 2020). We introduce a recursive

algorithm with linear time complexity to detect and/or

estimate the number of occupants.

2 BACKGROUND

2.1 Hidden Markov Models

HMMs are powerful statistical models. The idea of

HMM comes from a limit of Markov model in mod-

eling problems which output is the probabilistic func-

tion of the states (Epaillard and Bouguila, 2016). This

Nikroo, F., Amayri, M. and Bouguila, N.

HMMs Recursive Parameters Estimation for Semi-Bounded Data Modeling: Application to Occupancy Estimation in Smart Buildings.

DOI: 10.5220/0011715200003491

In Proceedings of the 12th International Conference on Smart Cities and Green ICT Systems (SMARTGREENS 2023), pages 81-88

ISBN: 978-989-758-651-4; ISSN: 2184-4968

Copyright

c

2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

81

function called emission probability is described in

details in the following sections. Indeed, we assume

more general distributions dedicated to positive vec-

tors to model the output observable data from HMM

to have more flexible models. It is noteworthy to men-

tion the denomination of Hidden in HMM refers to the

states not the parameters of the model. Markov chain

is referred to a time-varying random phenomenon

meeting the Markov property which indicates that the

conditional probability of the forthcoming state is just

based on the current state and not on historical infor-

mation, which can be mathematically formulated as:

p(X

t+1

|X

t

,X

t−1

,...,X

1

) = p(X

t+1

|X

t

) (1)

HMM elements are completely defined as follow,

however, it is mainly represented by three parameters

λ = (π,A, B) (Vai

ˇ

ciulyt

˙

e and Sakalauskas, 2020).

1) The number of states N, each state is defined by S

i

such that S = 1,2,...,N.

2) Vector π = π

1

,π

2

,..., π

N

indicate the probability of

being in each state.

3) The number of possible observations M for each

state as V = V

1

,V

2

,...,V

M

. In case of our work that

observations come from the distributions that we will

define later, they are continuous thus, M is infinite.

4) A is the state transition probability matrix such that

a

i, j

, 1 ≤ i, j ≤ N is the probability of moving to state

j at time t +1 while the model was in state i at time t.

The constraints for transition matrix should be met:

a

i, j

>= 0,

N

∑

j=1

a

i, j

= 1, 1 ≤ i, j ≤ N

5) B is the matrix showing the observation probability

where b

j

(k) is the probability of observing V

k

in state

S

j

.

b

j

(k) = p(V

t

k

|S

t

j

), 1 ≤ i, j ≤ N

The constraints for continuous observations are de-

fined based on the specific probability distribution:

b

j

(k) =

M

∑

m=1

c

jm

p(x|θ)

where θ represents the parameters of the de-

fined distribution, c

jm

is weighting coefficient with

∑

M

m=1

c

jm

= 1.

b

j

(k) >= 0, 1 ≤ j ≤ N, 1 ≤ k ≤ M,

M

∑

j=1

b

j

(k) = 1

2.2 Inverted Dirichlet Distribution

Consider X = (x

1

,x

2

,..., x

K

) as a vector following the

inverted Dirichlet distribution ID(α) with parame-

ter α = (α

1

,α

2

,..., α

K

,α

K+1

), the probability density

function has the following form (Bdiri and Bouguila,

2013):

p(X) =

Γ(α

0

)

∏

K+1

i=1

Γ(α

i

)

K

∏

i=1

x

α

i

−1

i

(1 +

K

∑

i=1

X

i

)

−α

0

where α

0

=

∑

K+1

i=1

α

i

, x

i

> 0, i = 1...K. Many prop-

erties of the inverted Dirichlet distribution are given in

(Tiao and Cuttman, 1965; Bdiri and Bouguila, 2012).

2.3 Generalized Inverted Dirichlet

Distribution

Inverted Dirichlet distribution assumes a positive

correlation, therefore a generalization of it is in-

troduced to cope with this limitation in order to

have the capability of modeling wider range of real-

life data (Bourouis et al., 2014a). Consider X =

(x

1

,x

2

,..., x

K

) as a vector following the Generalized

Inverted Dirichlet distribution GID(α;β) with param-

eter α = (α

1

,α

2

,..., α

K

,α

K

) and β = (β

1

,β

2

,..., β

K

).

The probability density function has the following

form (lingmnwah, 1976; Bourouis et al., 2014b):

p(x

1

,x

2

,..., x

K

) =

K

∏

i=1

Γ(α

i

+ β

i

)

Γ(α

i

)Γ (β

i

)

x

α

i

−1

i

(1 +

∑

i

m=1

x

m

)

η

i

where η

i

= β

i

+ α

i

− β

i+1

i = 1,2, ...,K, β

K+1

=

0, x

i

> 0, i = 1...K. By substituting β

1

= β

2

= ... =

β

K−1

= 0 , inverted Dirichlet distribution with param-

eter α = (α

1

,α

2

,..., α

K

,α

K

,β

K

) is obtained (lingmn-

wah, 1976; Bourouis et al., 2014b).

2.4 Inverted Beta-Liouville Distribution

Inverted Beta-Liouville distribution (IBL) has been

proved as an efficient way of modeling positive vec-

tors (Bouguila et al., 2022; Bourouis et al., 2021). It

overcomes the limit of the Inverted Dirichlet in the as-

pect of positive covariance and presents less parame-

ters as compared with generalized Inverted Dirichlet

(Bouguila et al., 2022; Bourouis et al., 2021). IBL is

in the family of the Beta-Liouville distribution which

is a natural model for analyzing compositional data

(Fang et al., 2018). Consider r = λw/(1 − w) which

w is a Beta distribution with parameters α and β as

the generating variate, thus r follows an inverted Beta

distribution with parameters β and λ. The generating

density function is (Fang et al., 2018):

f (u|θ) =

1

B(α,β)

λ

β

u

α−1

(λ + u)

α+β

(2)

where u > 0 and θ = (α, β,λ). The Liouville distri-

bution with positive parameters (α

1

,α

2

,..., α

K

) and

SMARTGREENS 2023 - 12th International Conference on Smart Cities and Green ICT Systems

82

generating density of f (.) with parameter δ is defined

as below (Bouguila, 2012; Fang et al., 2018):

p(

~

X|α

1

,..., α

K

,δ) = f (u|δ)

Γ(

∑

K

i=1

α

i

)

u

∑

K

i=1

α

i

− 1

K

∏

i=1

x

α

i

−1

i

Γ(α

i

)

We have the probability density function for

~

X as fol-

lows:

p(x

1

,x

2

,..., x

K

) =

Γ(α

0

)Γ(α + β)

Γ(α)Γ(β)

λ

β

(

∑

K

i=1

x

i

)

α−α

0

(λ +

∑

K

i=1

x

i

)

α+β

K

∏

i=1

x

α

i

−1

i

Γ(α

i

)

where θ = (α

1

,α

2

,..., α

K

,α, β,λ), α

0

=

∑

K

i=1

α

i

, x

i

> 0, i = 1...K. This distribution

can be converted to inverted Dirichlet distribution

by equalizing α = α

0

(Fang et al., 2018). Many

properties of the distribution are given in (Hu et al.,

2019; Koochemeshkian et al., 2020).

3 RECURSIVE MODEL

To learn the HMM parameters, we need to estimate

the distribution parameters of the HMM (Vai

ˇ

ciulyt

˙

e

and Sakalauskas, 2020). To achieve this goal we cal-

culate the log likelihood of each probability density

function then maximize it. Each probability density

function is explained in a different sub-section. How-

ever, the whole process is the same. It is noteworthy

that ψ used in equations below is the Digamma func-

tion which is the logarithmic derivative of the Gamma

function. The probability that sequence x is observed

while the system is at specific time t considering state

q, which can be any in range of q = 1, ,2, ...,N, is:

π

<q>

t

logdistribution(x

t

|α< q >)

∑

N

j=1

π

< j>

t

logdistribution(x

t

|α< j >)

(3)

Now the summation over t indicates the probability of

the model being in specific state considering specific

output sequence x is observed:

T

∑

t=1

π

<q>

t

logdistribution(x

t

|α< q >)

∑

N

j=1

π

< j>

t

logdistribution(x

t

|α< j >)

(4)

where “distribution” refers to each distribution we

have used in this paper. Here, we define some same

variables which are used in the rest of the paper for all

the 3 distributions.

θ

q

t

= π

q

t

logdistribution(x

t

|

~

θ

<q>

) (5)

ω

<q,s>

t

= ω

<q,s>

t−1

+

1

t

(

log(x

s

t

)θ

q

t

∑

N

j=1

θ

j

t

− ω

<q,s>

t−1

)

(6)

γ

q

t

= γ

q

t−1

+

1

t

(

θ

q

t

∑

N

j=1

θ

j

t

− γ

q

t−1

) (7)

where 1 ≤ q ≤ N , 1 ≤ s, i ≤ K.

3.1 Inverted Dirichlet Distribution

The log likelihood function of inverted Dirichlet (ID)

distribution is as follows:

logID(

~

X|

~

θ) = log Γ(

K+1

∑

i=1

α

i

) +

K

∑

i=1

(α

i

− 1)logx

i

−

(

K+1

∑

i=1

α

i

)log(1 +

K

∑

i=1

x

i

) −

K+1

∑

i=1

logΓ(α

i

)

To maximize the above equation, derivative of it with

respect to each parameter is shown below:

∂log ID(

~

X|

~

θ)

∂α

j

=

ψ(

∑

K+1

i=1

α

i

) + logx

j

− log(1 +

∑

K

i=1

x

j

)

−ψ(α

j

) if j = 1,2,..., K

ψ(

∑

K+1

i=1

α

i

) − log(1 +

∑

K

i=1

x

j

) − ψ(α

j

)

if j = K + 1

Now the batch formula to derive α

i

is as below:

α

i

= ψ

−1

[ψ(

K+1

∑

i=1

(α

q

i

))

+

1

T

∑

T

t=1

log(x

s

t

)

π

<q>

t

logID(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logID(x

t

|

~

θ

< j>

)

1

T

∑

T

t=1

π

<q>

t

logID(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logID(x

t

|

~

θ

< j>

)

−

1

T

T

∑

t=1

π

<q>

t

logID(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logID(x

t

|

~

θ

< j>

)

] i = 1, 2,...K

α

K+1

= ψ

−1

[ψ(

K+1

∑

i=1

(α

q

i

))

−

1

T

T

∑

t=1

π

<q>

t

logID(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logID(x

t

|

~

θ

< j>

)

] (8)

where 1 ≤ q ≤ N , 1 ≤ s ≤ K. We assume each ob-

servation occurs in specific time step t. Hence, in our

recursive model, the parameters are updated as time

goes until they meet the condition of |θ

new

−θ

old

| <=

ε. The middle variables and the main parameters are:

α

<q,s>

t

= ψ

−1

[ψ(

K+1

∑

i=1

(α

q

i

)) +

ω

<q>

t

γ

q

t

− γ

q

t

]

(9)

α

<q,K+1>

t

= ψ

−1

[ψ(

K+1

∑

i=1

(α

q

i

)) +

ω

<q>

t

γ

q

t

− γ

q

t

] (10)

where 1 ≤ q ≤ N , 1 ≤ s, i ≤ K.

HMMs Recursive Parameters Estimation for Semi-Bounded Data Modeling: Application to Occupancy Estimation in Smart Buildings

83

3.2 GID Distribution

The log likelihood function GID distribution is:

logGID(

~

X|

~

θ) =

K

∑

i=1

log(α

i

+ β

i

) + (α

i

− 1)logx

i

−log Γ(α

i

) − log Γ(β

i

) − η log(1 +

i

∑

m=1

x

m

)

To maximize the above equation, derivative of it with

respect to each parameter is shown below:

∂log GID(

~

X|

~

θ)

∂α

j

= ψ(α

j

+ β

j

) + log(x

j

) − ψ(α

j

)

−log(1 +

j

∑

m=1

x

m

)

∂log GID(

~

X|

~

θ)

∂β

j

= ψ(α

j

+ β

j

) − ψ(β

j

)

−log(1 +

j

∑

m=1

x

m

)

(11)

Now the batch formula to derive α

i

and β

i

are:

α

i

= ψ

−1

[ψ(α

i

+ β

i

)

+

1

T

∑

T

t=1

log(x

s

t

)

π

<q>

t

logGID(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logGID(x

t

|

~

θ

< j>

)

1

T

∑

T

t=1

π

<q>

t

logGID(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logGID(x

t

|

~

θ

< j>

)

−

1

T

T

∑

t=1

π

<q>

t

logGID(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logGID(x

t

|

~

θ

< j>

)

] (12)

β

i

= ψ

−1

[ψ(α

i

+ β

i

)

−

1

T

T

∑

t=1

π

<q>

t

logGID(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logGID(x

t

|

~

θ

< j>

)

] (13)

α

<q,s>

t

= ψ

−1

[ψ(α + β) +

ω

<q>

t

γ

q

t

− γ

q

t

] (14)

β

<q,s>

t

= ψ

−1

[ψ(α + β) − γ

q

t

] , 1 ≤ q ≤ N (15)

3.3 IBL Distribution

The log likelihood function IBL is as follows:

logIBL(

~

X|

~

θ) = log Γ(

K

∑

i=1

α

i

) + logΓ(α + β) − log Γ(α)−

logΓ(β) + βlog λ + (α −

K

∑

i=1

α

i

)log(

K

∑

i=1

x

i

)−

(α + β)log(λ +

K

∑

i=1

x

i

) +

K

∑

i=1

(α

i

− 1)log x

i

−

K

∑

i=1

logΓ(α

i

)

To maximize the above equation:

∂log IBL(

~

X|

~

θ)

∂α

j

= ψ(

K

∑

i=1

α

i

) + logx

j

− ψ(α

j

) − log(

K

∑

i=1

x

i

)

∂log IBL(

~

X|

~

θ)

∂α

= ψ(α + β) − ψ(α) + log(

K

∑

i=1

x

i

)−

log(λ +

K

∑

i=1

x

i

)

∂log IBL(

~

X|

~

θ)

∂β

= ψ(α + β) − ψ(β) + logλ−

log(λ +

K

∑

i=1

x

i

)

(16)

∂log IBL(

~

X|

~

θ)

∂λ

=

β

λ

−

α + β

λ +

∑

K

i=1

x

i

(17)

The batch formula to derive α

i

, α, β, λ are as below:

α

i

= ψ

−1

[ψ(

K

∑

i=1

(α

q

i

))

+

1

T

∑

T

t=1

log(x

s

t

)

π

<q>

t

logIBL(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logIBL(x

t

|

~

θ

< j>

)

1

T

∑

T

t=1

π

<q>

t

logIBL(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logIBL(x

t

|

~

θ

< j>

)

−

1

T

T

∑

t=1

π

<q>

t

logIBL(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logIBL(x

t

|

~

θ

< j>

)

] (18)

α = ψ

−1

[ψ(α + β)

+

1

T

T

∑

t=1

π

<q>

t

logIBL(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logIBL(x

t

|

~

θ

< j>

)

]

β = ψ

−1

[ψ(α + β) + log λ−

1

T

T

∑

t=1

π

<q>

t

logIBL(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logIBL(x

t

|

~

θ

< j>

)

] (19)

λ =

β

α

1

T

T

∑

t=1

π

<q>

t

logIBL(x

t

|

~

θ

<q>

)

∑

N

j=1

π

< j>

t

logIBL(x

t

|

~

θ

< j>

)

(20)

where 1 ≤ q ≤ N , 1 ≤ s,i ≤ K. Based on the mid-

dle variables defined earlier, above explanation of re-

cursive logic and the batch formula of IBL, the main

parameters are described as below:

α

<q,s>

t

= ψ

−1

[ψ(

K+1

∑

i=1

(α

q

i

)) +

ω

<q>

t

γ

q

t

− γ

q

t

]

(21)

SMARTGREENS 2023 - 12th International Conference on Smart Cities and Green ICT Systems

84

α

<q,s>

t

= ψ

−1

[ψ(α + β) + γ

q

t

] (22)

β

<q,s>

t

= ψ

−1

[ψ(α + β) + log γ − γ

q

t

]

(23)

λ

<q,s>

t

=

β

α

γ (24)

where 1 ≤ q ≤ N , 1 ≤ s ≤ K. We present the recur-

sive proof of the model equations:

ω

<q,s>

t

= ω

<q,s>

t−1

+

1

t

(

log(x

s

t

)θ

q

t

∑

N

j=1

θ

j

i

− ω

<q,s>

t−1

)

=

1

t − 1

t−1

∑

i=1

log(x

s

t

)θ

q

i

∑

N

j=1

θ

j

i

+

1

t

(

log(x

s

t

)θ

q

t

∑

N

j=1

θ

j

t

−

1

t − 1

t−1

∑

i=1

log(x

s

t

)θ

q

i

∑

N

j=1

θ

j

i

) =

1

t

t

∑

i=1

log(x

s

i

)θ

q

i

∑

N

j=1

θ

j

i

γ

q

t

= γ

q

t−1

+

1

t

(

θ

q

t

∑

N

j=1

(θ

j

t

)

− γ

q

t−1

)

=

1

t − 1

t−1

∑

i=1

θ

q

i

∑

N

j=1

θ

j

i

+

1

t

(

θ

q

t

∑

N

j=1

θ

j

t

−

1

t − 1

t−1

∑

i=1

θ

q

i

∑

N

j=1

θ

j

i

)

=

1

t

t

∑

i=1

θ

q

i

∑

N

j=1

θ

j

i

We present the algorithm for all of the models

in Algorithm 1 which is based on the EM frame-

work. The variables ~µ

1

=(α

1

,α

2

,..., α

K

,α

K+1

),

~µ

2

=(α

1

,α

2

,..., α

K

,α

K

,β

1

,β

2

,..., β

K

),

~µ

3

=(α

1

,α

2

,..., α

K

,α, β,λ) are representative of

ID-HMM, GID-HMM and IBL-HMM parameters,

respectively.

Algorithm 1: Recursive expectation maximization algo-

rithm for ID-HMM, GID-HMM and IBL-HMM parameter

estimation.

Output: ~µ

1

, ~µ

2

and ~µ

3

with respect to conditions,

1 ≤ t ≤ T,1 ≤ s ≤ K, 1 ≤ q ≤ N

Initialization: ~µ

1

,~µ

2

,~µ

3

, initial-probability of each

state and transition-probability between states

Input: each row of dataset; x

t

, 1 ≤ t ≤ T

While |~µ

1

t

−~µ

1

t−1

| < ε, |~µ

2

t

−~µ

2

t−1

| < ε, |~µ

3

t

−

~µ

3

t−1

| < ε

E-step

Calculate values of θ

q

t

,ω

<q,s>

t

,γ

q

t

;

M-step:

Update values of~µ

1

,~µ

2

,~µ

3

The algorithm starts with random initialization of

the parameters of both HMM and distributions we

consider for data. Then, the algorithm goes through

the loop for E-step and M-step until the termination

criterion is met which is based on monitoring the dif-

ference between the previous value and update one of

each parameter after each loop. This difference value

is shown as ε and set to 0.6. As we have mentioned

before, in each loop the values are updated based on

the new rows of data feed to the model. We assume

that each row of dataset occurs in a specific time, so

that each time the new data is obtained, parameters

are updated.

4 EXPERIMENTAL RESULTS

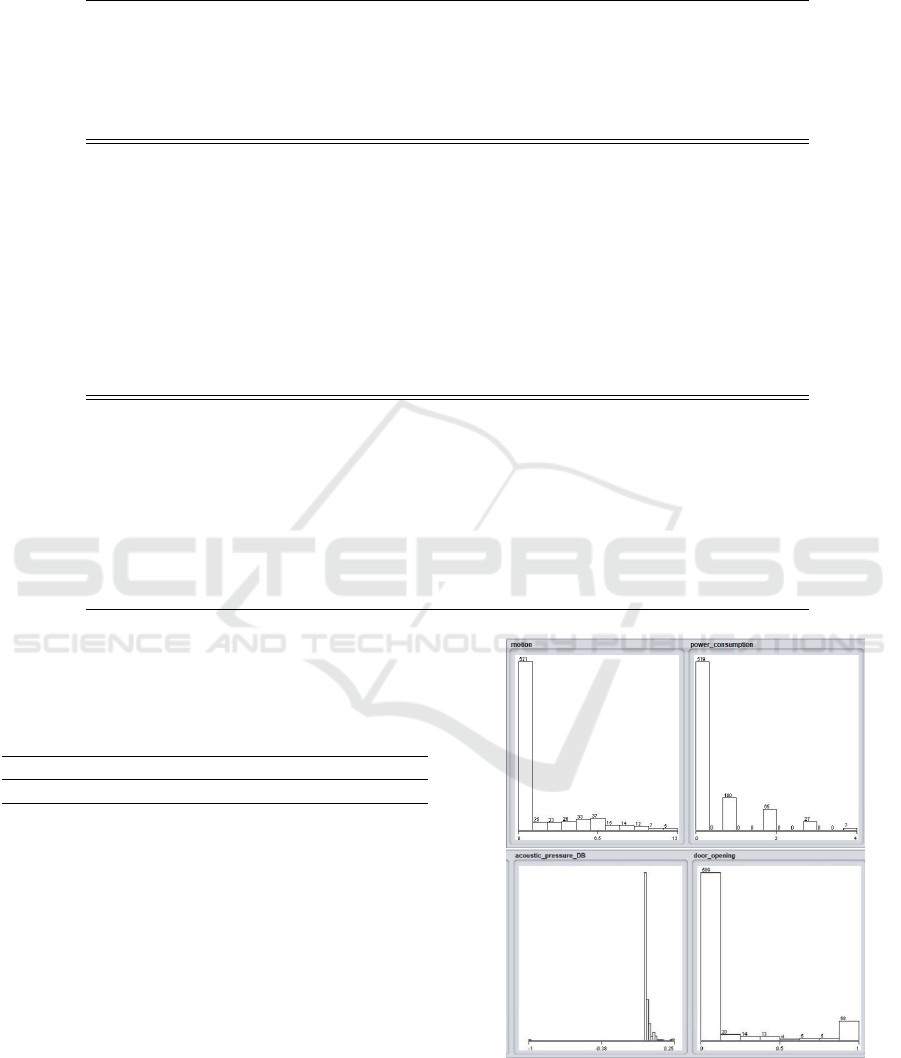

Figure 1: Visualization of occupancy detection data accord-

ing to different features.

In this section we present the validation of our pro-

posed models using 2 different real datasets for both

binary and multiclass classification of occupants in

smart buildings. Data that we have investigated, is

collected during time. Thus, based on the logic of

its application to estimate occupants in a room, each

row of data shows the conditions of the room with

the number of people in specific time, so we were

able to train the model as each row of the data comes,

to update the parameters. In that case we have suc-

cessfully overcame the problem of batch learning for

intensive data. The main motivation to evaluate our

model on occupancy datasets is the fact that in smart

buildings the goal is to automate the systems related

to HVAC which offers less energy wasting along with

better comfort of residents for facilities management

strategic decisions. In smart buildings the sensors col-

lecting environmental factors like the amount of CO

2

HMMs Recursive Parameters Estimation for Semi-Bounded Data Modeling: Application to Occupancy Estimation in Smart Buildings

85

Table 1: Estimated parameters for ID-HMM, GID-HMM and IBL-HMM for occupancy detection dataset.

Model Number of States Parameters

ID-HMM 2 α =

0.2389 0.2414 0.4244 12.47 0.2266 13.03

0.2346 0.2369 1.196 12.42 0.2227 13.34

GID-HMM 2 α =

8.723 8.706 8.708 8.876 8.633

8.786 8.603 8.610 8.778 8.586

β =

8.281 8.149 8.164 9.564 7.607

9.329 9.339 9.329 9.330 9.328

IBL-HMM 2

~

α =

0.9105 0.5656 0.0679 0.0322 0.5916

0.3517 0.3470 0.1467 0.1037 0.3807

α =

0.7223 2.478

β =

0.6733 0.5181

λ =

2.89 2.03

concentration, temperature, relative humidity, etc are

integrated in automation settings. Thus, we have the

collected information of sensors in hand allowing us

to estimate the number of occupants. This is the idea

in HMM, by mapping the collected data as our ob-

servation and the number of occupants as the hidden

states. In all of the experiments, we define the initial

probability of each states (matrix π explained earlier)

as

1

n

assuming n is the total number of states. Transi-

tion matrix along with each distributions’ parameters

are assigned randomly considering their limit accord-

ing to the distribution definition. The termination cri-

terion of the algorithm to avoid endless recursion is

set to ε = 0.6. To analyze our datasets collected over

time, we assume each record occurred in new time

step. Therefore, in our recursive model, the parame-

ters are updated as new record of data feed into the

model.

Binary Classification Dataset (Occupancy Detec-

tion Dataset): The first data used to evaluate our

models is related to occupancy detection. This dataset

is obtained from Machine Learning Repository of

University of California Irvine (UCI). Fig. 1 describes

the distribution of the features. Dataset consists of 5

different features as below which are indicated in a

time series: 1) Temperature, in Celsius, 2) Relative

Humidity, 3) Light, in Lux, 4) CO2, in ppm, 5) Hu-

midity Ratio, Derived quantity from temperature and

relative humidity, in kgwater-vapor/kg-air.

This dataset has two different parts for training

and testing. We have used the training data to train

the model to estimate its parameters, then using the

test data to evaluate the models. Table 1 shows the

number of hidden states for the occupancy detection

dataset along with the values of the model parame-

ters. The values computed through the EM algorithm

Table 2: Accuracy, F-score, precision and recall in percent

for Inverted Dirichlet HMM, Generalized Inverted Dirichlet

HMM and Inverted Beta-Liouville HMM applied for occu-

pancy detection dataset.

Model Accuracy F-score precision recall

ID-HMM 86.81 85.65 82.86 85.65

GID-HMM 86.90 87.55 89.22 86.90

IBL-HMM 84.00 79.85 86.58 84.00

are explained earlier. Model parameters, based on

the distribution considered for emission probability in

HMM, follow different dimensions. Table 2 indicates

the evaluation results of our model based on the 3 dis-

tributions we discussed which are computed on the

testing dataset.

Multiclass Classification Dataset (Occupancy Esti-

mation Dataset): This dataset is used for occupancy

estimation in smart building as the goal was to esti-

mate the number of occupants from 0 to 4. Thus,

the number of hidden states in this sample will be

5. This dataset is obtained from an experiment which

testbed was an office in Grenoble Institute of Technol-

ogy in France (Nasfi et al., 2020). This data was ob-

tained from 30 sensors of motions, power consump-

tion, acoustic pressure, and door position (Amayri

et al., 2020). In order to investigate the model we split

the dataset into training and testing data. We use the

training data to estimate the parameters of our model

then apply the trained model on testing data for clas-

sification to estimate the number of occupants. Table

3 represents the values of parameters for each model

learned with occupancy estimation dataset, while the

evaluation metrics are shown in Table 4. The visuali-

sation of the data is presented in Fig. 2.

SMARTGREENS 2023 - 12th International Conference on Smart Cities and Green ICT Systems

86

Table 3: Estimated parameters for ID-HMM, GID-HMM and IBL-HMM for occupancy estimation dataset.

Model Number of States Parameters

ID-HMM 5 α =

0.5860 0.0859 0.9971 0.5545 0.2836

0.5054 0.9837 0.2110 0.7645 0.9573

0.3307 0.1928 0.3457 0.2385 0.7199

0.4826 0.3005 0.1633 0.8344 0.9434

0.5469 0.4305 0.3090 0.0707 0.6270

GID-HMM 5 α =

7.361 6.149 4.917 4.447

2.146 2.493 2.591 2.592

4.498 5.912 6.943 6.747

1.057 1.062 1.369 1.134

8.013 6.171 1.003 5.850

β =

5.294 1.663 1.329 1.200

2.720 3.288 3.456 3.457

1.365 1.426 1.543 1.490

2.365 4.393 3.264 2.781

1.211 9.174 4.215 2.368

IBL-HMM 5

~

α =

15.25 40.16 39.95 39.33

0.1666 0.4702 0.7526 0.6555

0.3187 0.4525 0.718 0.6868

0.1185 0.1837 0.3916 0.2882

0.1212 0.1379 0.3014 0.2345

α =

0.6309 15.27 13.84 18.46 8.989

β =

0.1706 2.971 73.36 33.32 38.40

λ =

6.469 2.001 0.3127 2.343 1.790

Table 4: Accuracy, F-score, precision and recall in percent

for Inverted Dirichlet HMM, Generalized Inverted Dirichlet

HMM and Inverted Beta-Liouville HMM applied for occu-

pancy detection dataset.

Model Accuracy F-score precision recall

ID-HMM 80.59 79.25 79.36 84.51

GID-HMM 78.24 85.27 82.87 88.02

IBL-HMM 74.53 86.86 87.93 89.94

5 CONCLUSION

In this paper, we introduce a novel approach for occu-

pancy estimation in smart buildings through HMMs.

Considering the inverted Dirichlet, GID and IBL dis-

tributions as underlying distributions describing the

observation data. The models have been successfully

evaluated on real-data. The goal was to reach an ac-

curate prediction of number of occupants in a room

which plays a key role in smart buildings to reduce

energy consumption. One of the main motivations to

apply these distributions is their flexibility. In addi-

tion, the developed models are based on a recursive

approach, thus the time complexity of the algorithm

Figure 2: Visualization of occupancy estimation data ac-

cording to different features.

reduces to linear time as compared with batch pro-

cessing which in turn causes a substantial decrease in

memory overload and computational resources. Fu-

HMMs Recursive Parameters Estimation for Semi-Bounded Data Modeling: Application to Occupancy Estimation in Smart Buildings

87

ture aspirations could be devoted to improve the ini-

tialization methods. Additionally, due to the impor-

tance of prepossessing data in machine learning mod-

els, to further enhance the model accuracy, feature se-

lection and data quality assessment methods could be

investigated.

REFERENCES

Alawadi, S., Mera, D., Fernandez-Delgado, M., Alkhab-

bas, F., Olsson, C. M., and Davidsson, P. (2020). A

comparison of machine learning algorithms for fore-

casting indoor temperature in smart buildings. Energy

Systems, pages 1–17.

Ali, S. and Bouguila, N. (2022). Towards scalable de-

ployment of hidden markov models in occupancy es-

timation: A novel methodology applied to the study

case of occupancy detection. Energy and Buildings,

254:111594.

Amayri, M., Arora, A., Ploix, S., Bandhyopadyay, S., Ngo,

Q.-D., and Badarla, V. R. (2016). Estimating occu-

pancy in heterogeneous sensor environment. Energy

and Buildings, 129:46–58.

Amayri, M., Ploix, S., Bouguila, N., and Wurtz, F. (2020).

Database quality assessment for interactive learning:

Application to occupancy estimation. Energy and

Buildings, 209:109578.

Bdiri, T. and Bouguila, N. (2012). Positive vectors clus-

tering using inverted dirichlet finite mixture models.

Expert Systems with Applications, 39(2):1869–1882.

Bdiri, T. and Bouguila, N. (2013). Bayesian learning of in-

verted dirichlet mixtures for svm kernels generation.

Neural Computing and Applications, 23(5):1443–

1458.

Bouguila, N. (2012). Infinite liouville mixture models with

application to text and texture categorization. Pattern

Recognition Letters, 33(2):103–110.

Bouguila, N., Fan, W., and Amayri, M. (2022). Hidden

Markov Models and Applications. Springer.

Bourouis, S., Alroobaea, R., Rubaiee, S., Andejany, M.,

M.Almansour, F., and Bouguila, N. (2021). Markov

chain monte carlo-based bayesian inference for learn-

ing finite and infinite inverted beta-liouville mixture

models. IEEE Access, 9:71170–71183.

Bourouis, S., Mashrgy, M. A., and Bouguila, N. (2014a).

Bayesian learning of finite generalized inverted

dirichlet mixtures: Application to object classifi-

cation and forgery detection. Expert Syst. Appl.,

41(5):2329–2336.

Bourouis, S., Mashrgy, M. A., and Bouguila, N. (2014b).

Bayesian learning of finite generalized inverted dirich-

let mixtures: Application to object classification and

forgery detection. Expert Systems with Applications,

41(5):2329–2336.

Ebadat, A., Bottegal, G., Varagnolo, D., Wahlberg, B., and

Johansson, K. H. (2013). Estimation of building oc-

cupancy levels through environmental signals decon-

volution. In Proceedings of the 5th ACM Workshop

on Embedded Systems For Energy-Efficient Buildings,

pages 1–8.

Epaillard, E. and Bouguila, N. (2016). Proportional data

modeling with hidden markov models based on gen-

eralized dirichlet and beta-liouville mixtures applied

to anomaly detection in public areas. Pattern Recog-

nit., 55:125–136.

Erickson, V. L., Carreira-Perpi, M. A., and Cerpa, A. E.

(2014). Occupancy modeling and prediction for build-

ing energy management. ACM Transactions on Sensor

Networks (TOSN), 10(3):1–28.

Fang, K. T., Kotz, S., and Wangng, K. (2018). Symmetric

Multivariate and Related Distributions. Chapman and

Hall/CRC.

Gaetani, I., Hoes, P.-J., and Hensen, J. L. (2016). Occupant

behavior in building energy simulation: Towards a fit-

for-purpose modeling strategy. Energy and Buildings,

121:188–204.

Hu, C., Fan, W., Du, J.-X., and Bouguila, N. (2019). A

novel statistical approach for clustering positive data

based on finite inverted beta-liouville mixture models.

Neurocomputing, 333:110–123.

Kim, D., Yoon, Y., Lee, J., Mago, P., Lee, K., and Cho, H.

(2022). Design and implementation of smart build-

ings: A review of current research trend. Energies,

15:4278.

Koochemeshkian, P., Zamzami, N., and Bouguila, N.

(2020). Distribution-based regression models for

semi-bounded data analysis. In 2020 IEEE Interna-

tional Conference on Systems, Man, and Cybernetics

(SMC), pages 4073–4080. IEEE.

lingmnwah, G. (1976). On the generalised inverted dirichlet

distribution. Demonstratio Mathematica, 9(3):119–

130.

Nasfi, R., Amayri, M., and Bouguila, N. (2020). A novel

approach for modeling positive vectors with inverted

dirichlet-based hidden markov models. Knowledge-

Based Systems, 192:105335.

Nguyen, H., Rahmanpour, M., Manouchehri, N., Maanic-

shah, K., Amayri, M., and Bouguila, N. (2019). A

statistical approach for unsupervised occupancy de-

tection and estimation in smart buildings. In 2019

IEEE International Smart Cities Conference (ISC2),

pages 414–419. IEEE.

Soltanaghaei, E. and Whitehouse, K. (2016). Walksense:

Classifying home occupancy states using walkway

sensing. BuildSys ’16, page 167–176, New York, NY,

USA. Association for Computing Machinery.

Tiao, G. G. and Cuttman, I. (1965). The inverted dirichlet

distribution with applications. Journal of the Ameri-

can Statistical Association, 60(311):793–805.

Vai

ˇ

ciulyt

˙

e, J. and Sakalauskas, L. (2020). Recursive pa-

rameter estimation algorithm of the dirichlet hidden

markov model. Journal of Statistical Computation

and Simulation, 90(2):306–323.

SMARTGREENS 2023 - 12th International Conference on Smart Cities and Green ICT Systems

88