A Predictive Model for Assessing Satisfaction with Online Learning for Higher Education Students During and After COVID-19 Using Data Mining and Machine Learning Techniques: A Case of Jordanian Institutions

Hadeel Kakish and Dalia Al-Eisawi

a

Department of Business Information Technology and Analytics, Princess Sumaya University for Technology, Khalil Al Saket Street, Amman, Jordan

Keywords: Machine Learning, Decision Trees, Covid-19, Artificial Intelligence, Online Learning.

Abstract: Higher education institutions faced escalating and unexpected pressure to transform rapidly during and after the COVID-19 pandemic by replacing most traditional teaching practices with online education. This transformation required institutions to strive for a quality that meets the conceptual requirements of traditional education while retaining the agility and flexibility of online delivery. Such electronic learning styles bring many challenges along with the advantages they offer to educational systems and students alike. This research sheds light on several factors, presented as a predictive model, that are proposed to contribute to the success or failure of online learning in terms of students’ satisfaction. The study took the Kingdom of Jordan as a case example of a country that experienced intensive implementation of online education during and after COVID-19. It used a dataset collected from a sample of over 300 students through online questionnaires. The questionnaire included 25 attributes, which were mined in the KNIME Analytics Platform. The data were used to train and evaluate both the Decision Tree and Naive Bayes algorithms. The results revealed that the decision tree classifier outperformed naïve Bayes in predicting student satisfaction. Additionally, the existence of a sense of community while learning electronically was, among other reasons, the largest contributor to satisfaction.

1 INTRODUCTION

Ever since the World Health Organization (WHO) announced coronavirus disease (Covid-19) to be a pandemic, higher educational institutions have encountered many challenges in coping with the drastic change from traditional (face-to-face), on-campus learning (Harangi-Rákos et al., 2022).

Mobility restrictions within countries significantly

impacted the educational sector, among many sectors

all over the world (Agyeiwaah et al., 2022). All

educational institutions had to switch to full remote

processes, ranging from the registration process to

conducting classrooms (Azizan et al., 2022), to

ensure the continuity of education and avoid a gap of

knowledge that could impact many generations to

come (Looi et al., 2022). However, it was evident that

a

https://orcid.org/0000-0002-9364-2648

not all students were comfortable with this rapid change. Given that the situation was unprecedented, educational institutions were challenged to achieve students’ satisfaction with the process while lacking the capacity and adequate technologies to go online (Agyeiwaah et al., 2022). Furthermore, those institutions that had the available technologies and were able to commence online education encountered various challenges on the users’ side, especially among students in their first years (Spencer & Temple, 2021).

Education in Jordan, as in many countries, transitioned to online learning as an emergency response to the mobility restrictions after a period of shutdown (Basheti et al., 2022). Online learning is a learning procedure in which certain online technologies are used to create a virtual


Kakish, H. and Al-Eisawi, D.

A Predictive Model for Assessing Satisfaction with Online Learning for Higher Education Students During and After COVID-19 Using Data Mining and Machine Learning Techniques: A Case

of Jordanian Institutions.

DOI: 10.5220/0011844100003467

In Proceedings of the 25th International Conference on Enterprise Information Systems (ICEIS 2023) - Volume 1, pages 156-163

ISBN: 978-989-758-648-4; ISSN: 2184-4992

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

classroom simulating traditional on-campus teaching (Pagani-Núñez et al., 2022). This type of educational process is unfortunately linked with many discipline, commitment, and network connection issues, among other challenges, on both the students’ and lecturers’ sides, which ultimately prevent the student from having a suitable surrounding environment that guarantees that knowledge and skills are communicated (Augustine et al., 2022).

Evaluating the satisfaction of students and lecturers with online learning has been one of the focal areas of attention for many researchers since the beginning of the pandemic. This matter has been addressed using different statistical and Artificial Intelligence (AI) methods and techniques (Ho et al., 2021). Some researchers relied on descriptive analysis using quantitative methods to quantify students’ satisfaction with numerous factors impacting the online class. These factors include student attendance of online classes, online evaluations and exams, and relationships with lecturers (Basheti et al., 2022). Other researchers employed AI techniques to predict students’ satisfaction with online learning, using different machine learning algorithms including Support Vector Machines, K-Nearest Neighbour, and Multiple Linear Regression.

In addition, researchers selected features including student readiness, accessibility of online classes and sources, instructor-related variables, online assessment, and learning-related features to construct the predictive models used for satisfaction prediction (Ho et al., 2021). In this regard, one concept widely used in studying satisfaction is Educational Data Mining (EDM), which aims to explore different education-related matters using data mining (Alsammak et al., 2022).

Investigating students’ satisfaction with online

learning in Jordan during the pandemic was done

using descriptive analysis, in which researchers

focused on characterizing the experience of online

learning during the specific period of the pandemic.

Researchers focused on statistical methods to describe students’ experience in various universities in Jordan (Basheti et al., 2022; Alsoud & Harasis, 2021).

2 RESEARCH QUESTIONS AND STRUCTURE

This section sets the scene for the paper by introducing its key research questions and briefly outlining its content. This study aims to contribute to some facets associated with online learning challenges and students’ satisfaction by answering the following key questions:

RQ1: What are the potential factors that might affect

student satisfaction within their online learning

experience?

RQ2: When comparing the Decision Tree and Naive

Bayes performance in predicting students’

satisfaction based on the potential factors, which

predictive model performs better?

The main sections of the study provide insights into the relevant literature and background, followed by an evaluation of the implemented algorithms and their results.

2.1 Online Learning in Higher Education

Online learning is a method of teaching that utilizes well-known platforms and applications (such as Zoom, Microsoft Teams, and Google Meet) to connect students and teachers online across multiple technologies, in a way that simulates an actual classroom (Ab Hamid et al., 2022). Online learning, Distance Education (DE), and E-learning refer to the same concept of a virtual classroom, initially created to keep up with the increased number of enrolments and the inability of some students to be physically present for all classes (Spencer & Temple, 2021).

The aim of online learning is not exclusively to give lectures and provide education; rather, e-learning providers aspire to match the effectiveness of face-to-face learning in terms of extracurricular, social, and community practices, which together contribute to the success of the education process (Tuan & Tram, 2022).

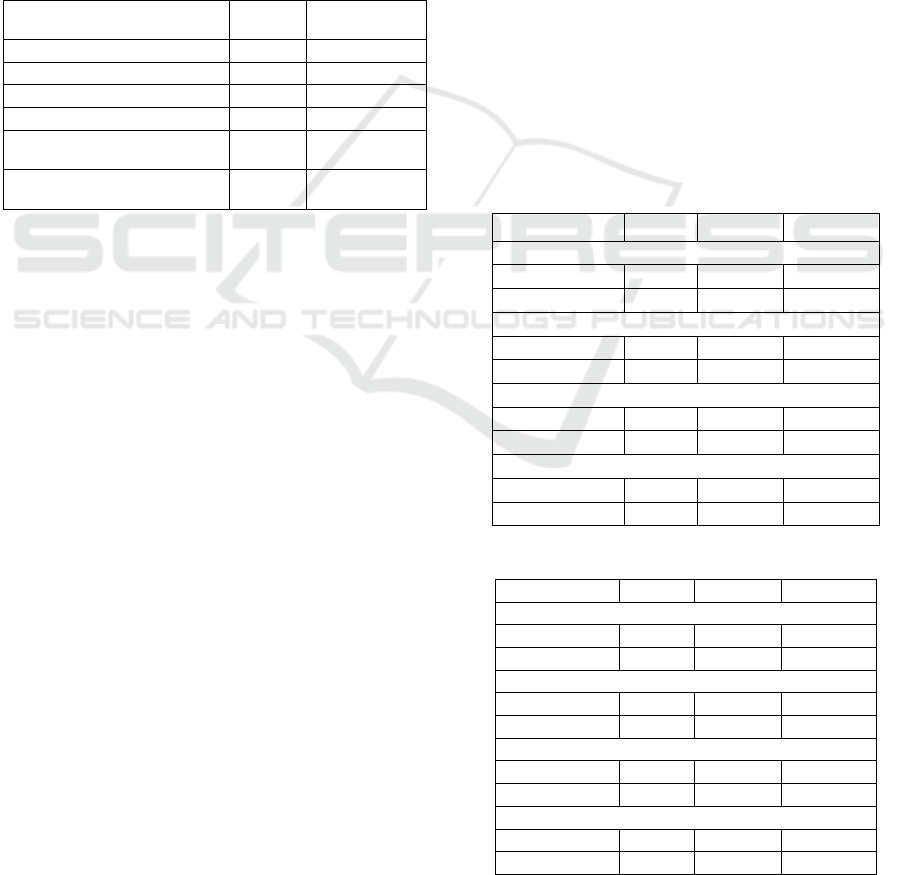

Table 1: Key factors affecting online learning.

| Factors | Key Source |
| --- | --- |
| Lecturer Performance, Student Interaction, Course Content | (Ab Hamid et al., 2022) |
| Individual Factors, Course Factors | (Spencer & Temple, 2021) |
| Facilities, Education Program, Lectures, Interactivity, Tuition Fees | (Tuan & Tram, 2022) |
| Teaching Effectiveness, Teaching Style, Pedagogy | (Vikas & Mathur, 2022) |
| Motivation, Autonomy, Digital Pedagogy | (Díaz-Noguera et al., 2022) |


Most researchers focused their studies of online education on multiple factors that may affect the effectiveness and success of this process. Table 1 summarises some key studies and the factors they considered.

Ab Hamid et al. (2022) adopted a survey constructed to measure students’ satisfaction based on lecturer performance, student interaction, and course content, among other elements. Their study showed that these three elements contributed significantly to students’ satisfaction, and that the students’ ability to interact was the most significant factor. Furthermore, Spencer and Temple (2021) designed their survey to include four areas: course format, instructional technology, distance education experience, and course instructor technology use. Among many results, the study showed that students thought the technology used in online learning was both dependable and easy to use and, as a result, all surveyed students were satisfied with online learning.

However, a drawback of online learning was that students appreciated the interaction with their instructor and classmates in the traditional classroom, which they did not experience to the same degree in the online classroom. Based on Table 1, the factors most often considered in the literature fall into three main groups: those related to the student, the lecturers, and the courses or programs. These factors have a significant effect on students’ satisfaction with online learning, and educational institutions may consider them to achieve the desired educational result.

2.2 Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) technologies are computer systems used to mimic human intelligence and reasoning abilities, often drawing on massive processing capabilities. AI technologies consist of agents that function by perceiving their surrounding environment and acting on these perceptions, while increasing the likelihood of success in these actions (Yousaf et al., 2022). Many researchers have used AI and machine learning (ML) methods and techniques to study students' satisfaction with online learning (Leo et al., 2021). The use of these technologies in studying students’ satisfaction provides additional benefits compared to statistical tools, as AI-based technologies enable the collection of vast amounts of data from multiple sources in the institution, which can be analyzed to enhance teaching methods and other institutional processes (Karo et al., 2022). AI also has great potential in education and learning (Limna et al., 2022).

ML is defined as a branch of AI and computer science that focuses on the use of data and algorithms to imitate the way that humans learn, gradually improving in accuracy (Leo et al., 2021). In a classification task, ML is used to predict a class or category: data are observed and collected, and each record in the dataset is then assigned a category or "class", using either supervised or unsupervised ML algorithms. One supervised algorithm widely used in educational research is the decision tree. This algorithm is presented graphically as a tree, with branches indicating the possible outcomes of a certain decision under a set of conditions (Looi et al., 2022).

The decision tree is one of the most common algorithms used to create classifiers, which are trained and then used to predict a class or value. It is known to be simple, quick, and able to classify both numerical and categorical attributes (Yousaf et al., 2022). Another supervised algorithm is naïve Bayes, a probabilistic technique for solving classification problems (Mengash, 2020).
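For reference, the decision rule behind the naïve Bayes classifier can be written as follows. This is the standard textbook formulation rather than anything specific to this study; the symbols $c$ (class), $x_i$ (the value of attribute $i$), and $n$ (the number of attributes) are notation introduced here for illustration.

```latex
\hat{c} = \arg\max_{c \in \{\text{Satisfied},\ \text{Unsatisfied}\}} \; P(c) \prod_{i=1}^{n} P(x_i \mid c)
```

The product reflects the "naïve" assumption that the attributes are conditionally independent given the class.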

The current research focused on a sample of over 300 students in higher education in Jordan who have experienced both online and traditional classrooms. Survey elements were carefully selected from the literature and tailored to suit the sample and the study. After collection, the data were further processed and engineered to fit the prediction model, in which the decision tree and naïve Bayes algorithms were used to predict the class attribute.

3 RESEARCH METHODS

3.1 Research Design

The research design was employed to predict students’ satisfaction with online learning during and after Covid-19, using EDM to study students’ performance and satisfaction. The comprehensive research design is illustrated in Figure 1 below.


Figure 1: Research Design and Phases.

3.2 Data Collection

A total of 309 responses were collected for the study, and no records were eliminated. The sample’s demographics indicated an almost equal female-to-male distribution of 51.1% to 48.9%, respectively. Most of the respondents were bachelor students (89.6% bachelor to 10.4% master students) who studied in private universities (89.6% private to 14.6% public university). Most respondents did not have any type of scholarship and paid their tuition fees in full (89.3% self-paying students to 10.7% with partial or full scholarships). Moreover, most of these students were residents of Amman (93.9%) and owned a personal computer for their studies (97.1% who owned a personal computer to 2.9% who did not). With regard to the responses, the split between agreement and disagreement with overall satisfaction with online learning was almost equal, at 50.2% to 49.8%.

The current study developed a questionnaire of 25 items, including demographic information. The questionnaire consisted of 6 main indicators: lecturer’s performance, student interaction, intention, internet connection, academic integrity and misconduct, and satisfaction with online learning. All questions related to these indicators were measured using a 5-point Likert scale. The indicators were inspired by two studies (Ab Hamid et al., 2022; Klijn et al., 2022) and modified to fit the characteristics of the sample and study. The sampling method used was purposive sampling: respondents were selected to be current higher education students or graduates who had experienced both online and offline education. Each of the 6 indicators is introduced below with reference to the study’s main purposes:

Lecturer Performance: The lecturer’s role in online education goes beyond explaining material; it includes ensuring the engagement of the whole class, triggering students’ critical thinking, and creating an interactive session that allows instant feedback (Ab Hamid et al., 2022).

Student Interaction: Students’ interaction with their colleagues and instructors during the online classroom was reported to be of immense importance in ensuring that online classes are as effective as traditional classes (Yousaf et al., 2022).

Intention: Intention was studied not only in terms of attending classes but also in terms of making use of the technology at hand for educational purposes (Ab Hamid et al., 2022). Behavioural intention towards online learning was found to have a significant impact on satisfaction with online learning (Azizan et al., 2022).

Internet Connection: Having a stable internet connection was directly related to increased costs for students and their parents or guardians. Additionally, having a proper internet connection with sufficient data for online classes and assessments has been a challenge for many students and was found to affect their satisfaction with online learning (Leo et al., 2021).

Academic Integrity and Misconduct: Misconduct in online assessment is facilitated by the limited ability of instructors to control the environment in which the student takes assessments. Since the ability to copy and cheat seems to be high in online exams (Tweissi et al., 2022), the results of these assessments will not be as fair as those of traditional exam rooms, eventually affecting students’ satisfaction with the process (Basheti et al., 2022).

Satisfaction with Online Learning: It has been evident that the success of online learning is related to students’ needs, expectations, and feedback, which contribute directly to their satisfaction with their education (Augustine et al., 2022).

3.3 Data Analysis - Preprocessing, Feature Engineering, and Prediction Model Training

Once the responses were collected, they were exported to an Excel sheet for data cleaning and pre-processing. Both manual and digital pre-processing techniques were applied to prepare the dataset for machine learning. First, all demographic questions with nominal or ordinal values were transformed into binary form (0, 1), except for the location, which was discretized and assigned values from 1 to 12 corresponding to the main cities of Jordan.
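The encoding step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the column names, the answer labels, and the three city codes shown are hypothetical, since the paper does not publish its schema or the order in which cities were numbered.

```python
# Hypothetical mapping: the paper assigns codes 1-12 to the main cities,
# but does not list which city received which code.
CITY_CODES = {"Amman": 1, "Irbid": 2, "Zarqa": 3}


def encode_binary(value, positive):
    """Map a two-valued demographic answer to 1 (positive) or 0 (otherwise)."""
    return 1 if value == positive else 0


def encode_record(record):
    """Encode one survey response; field names are assumed for illustration."""
    return {
        "gender": encode_binary(record["gender"], "Female"),
        "university_type": encode_binary(record["university_type"], "Private"),
        "owns_pc": encode_binary(record["owns_pc"], "Yes"),
        "location": CITY_CODES[record["location"]],
    }


sample = {
    "gender": "Female",
    "university_type": "Private",
    "owns_pc": "Yes",
    "location": "Amman",
}
encoded = encode_record(sample)
print(encoded)
```

Which answer is treated as the "positive" value is arbitrary for a binary attribute; what matters is that the same convention is applied to every record.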

After the data had been cleaned and prepared for the model, they were imported into a KNIME project. The workflow included further pre-processing in KNIME, including missing value detection and treatment. Afterwards, the dataset was partitioned into training and testing sets using the cross-validation partitioning node, which was executed four times with four different numbers of folds (5, 7, 10, and 15) to discover which setting provided the best performance.
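To make the partitioning step concrete, the following plain-Python sketch shows how k-fold cross-validation splits a dataset for the four fold counts used in the study. It is not the KNIME node's implementation; the function names and the fixed random seed are assumptions made for illustration.

```python
import random


def k_fold_indices(n_rows, k, seed=0):
    """Shuffle row indices and deal them into k nearly equal folds."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def train_test_splits(n_rows, k, seed=0):
    """Yield (train, test) index lists; each fold serves as the test set once."""
    folds = k_fold_indices(n_rows, k, seed)
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


N_ROWS = 309  # number of responses reported in Section 3.2

for k in (5, 7, 10, 15):
    sizes = [len(test) for _, test in train_test_splits(N_ROWS, k)]
    print(f"{k}-fold: test-set sizes between {min(sizes)} and {max(sizes)}")
```

A larger number of folds leaves more rows for training in each iteration but makes each test set smaller, which is one reason performance measures on a dataset of this size can vary noticeably between runs.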

The predictive model training included a feature reduction step to reduce dimensionality and keep the most relevant attributes for training the model. Table 2 shows the number of items initially included in the survey for each construct and the number of items remaining for each construct after elimination. In addition, two of the 6 demographic items were retained.

Table 2: Attributes before and after reduction.

| Construct | Initial # of Items | # of Items after reduction |
| --- | --- | --- |
| Lecturers Performance | 3 | 0 |
| Student interaction | 3 | 1 |
| Intention | 2 | 1 |
| Internet Connection | 3 | 3 |
| Academic integrity and misconduct | 4 | 2 |
| Satisfaction with online learning | 4 | 2 |

4 RESULTS

4.1 Model Performance Evaluation and Results

After both classifiers had been trained and assessed, a scorer node was executed to evaluate the performance of each model in predicting the true and false values of the class attribute (True = Satisfied, False = Unsatisfied). Initially, the naïve Bayes classifier outperformed the decision tree in each run. However, using the decision tree classifier allowed the researchers to identify the most relevant attributes, and thus to use feature selection to update the dataset and train the models again for enhanced performance. Tables 3 and 4 show the performance measures for both classifiers in each run after feature reduction.

The measures included in the performance evaluation are interpreted as follows. Recall shows how many items were correctly classified as “Satisfied” out of the total actual “Satisfied” items. Precision shows how many items were correctly predicted as “Satisfied” out of the total “Satisfied” predictions. F-measure combines recall and precision into a single score (their harmonic mean). Overall accuracy shows how successful the model was in identifying the correct values (both Satisfied and Unsatisfied).
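The four measures can be computed directly from the counts in a confusion matrix, as in the sketch below. The counts used in the example are hypothetical and are not taken from the study's results; the function name is likewise introduced here for illustration.

```python
def class_metrics(tp, fp, fn, tn):
    """Compute recall, precision, F-measure and accuracy for the positive class.

    tp/fp/fn/tn are the true-positive, false-positive, false-negative and
    true-negative counts, with "Satisfied" treated as the positive class.
    """
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    if precision + recall:
        f_measure = 2 * precision * recall / (precision + recall)
    else:
        f_measure = 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {
        "recall": recall,
        "precision": precision,
        "f_measure": f_measure,
        "accuracy": accuracy,
    }


# Hypothetical counts for a single test fold (not from the paper).
metrics = class_metrics(tp=9, fp=3, fn=1, tn=7)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Swapping the roles of the two classes (treating "Unsatisfied" as positive) gives the corresponding false-class measures.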

4.1.1 Decision Tree Classifier

The decision tree classifier uses a predefined quality measure to construct the tree, which lays out the possible outcomes of each decision. In this model, the decision tree used “Gain Ratio” as the quality measure for the branching step. Results showed that the highest gain ratio was that of the attribute measuring the student’s intention to recommend online learning to others. This item indicated that satisfied students were satisfied enough to talk about online learning and encourage others to use it.

Using the decision tree classifier with gain ratio as a quality measure enabled the researchers to evolve the model. This was done by taking the items in the decision tree that provided the highest gain ratio and performing further feature selection on the dataset imported into KNIME. The model subsequently performed better, as the confusion matrix and accuracy measures were notably enhanced for both predictors.
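As an illustration of the quality measure discussed above, the sketch below computes the gain ratio (information gain divided by split information) for categorical attributes and ranks them. It follows the textbook definition and is not extracted from KNIME; the attribute names and the six toy records are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(items):
    """Shannon entropy (in bits) of a list of categorical values."""
    total = len(items)
    return -sum((n / total) * log2(n / total) for n in Counter(items).values())


def gain_ratio(values, labels):
    """Information gain of splitting on `values`, normalised by split information."""
    total = len(labels)
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(value, []).append(label)
    conditional = sum(len(g) / total * entropy(g) for g in groups.values())
    info_gain = entropy(labels) - conditional
    split_info = entropy(values)
    return info_gain / split_info if split_info > 0 else 0.0


# Toy data: True = Satisfied, False = Unsatisfied (hypothetical records).
labels = [True, True, True, False, False, False]
features = {
    "would_recommend": ["agree", "agree", "agree", "disagree", "disagree", "disagree"],
    "owns_pc": ["yes", "yes", "no", "yes", "yes", "no"],
}

ranking = sorted(features, key=lambda name: gain_ratio(features[name], labels), reverse=True)
for name in ranking:
    print(name, round(gain_ratio(features[name], labels), 3))
```

In this toy data the recommendation attribute separates the two classes perfectly, so it ranks first, while the computer-ownership attribute carries no information about the class. Keeping only the top-ranked attributes is one simple way to perform the kind of feature selection described in the text.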

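Table 3: True Class Performance Measures.

| Run | Classifier (True Class) | Recall | Precision | F-measure |
| --- | --- | --- | --- | --- |
| Run 1: 5-Fold | Decision Tree | 0.80 | 0.89 | 0.85 |
| Run 1: 5-Fold | Naïve Bayes | 0.90 | 1.00 | 0.95 |
| Run 2: 7-Fold | Decision Tree | 1.00 | 0.80 | 0.89 |
| Run 2: 7-Fold | Naïve Bayes | 0.75 | 0.60 | 0.67 |
| Run 3: 10-Fold | Decision Tree | 1.00 | 1.00 | 1.00 |
| Run 3: 10-Fold | Naïve Bayes | 1.00 | 0.67 | 0.80 |
| Run 4: 15-Fold | Decision Tree | 1.00 | 1.00 | 1.00 |
| Run 4: 15-Fold | Naïve Bayes | 0.75 | 1.00 | 0.87 |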

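Table 4: False Class Performance Measures.

| Run | Classifier (False Class) | Recall | Precision | F-measure |
| --- | --- | --- | --- | --- |
| Run 1: 5-Fold | Decision Tree | 0.75 | 0.60 | 0.67 |
| Run 1: 5-Fold | Naïve Bayes | 1.00 | 0.80 | 0.89 |
| Run 2: 7-Fold | Decision Tree | 0.83 | 1.00 | 0.91 |
| Run 2: 7-Fold | Naïve Bayes | 0.67 | 0.80 | 0.73 |
| Run 3: 10-Fold | Decision Tree | 1.00 | 1.00 | 1.00 |
| Run 3: 10-Fold | Naïve Bayes | 0.80 | 1.00 | 0.89 |
| Run 4: 15-Fold | Decision Tree | 1.00 | 1.00 | 1.00 |
| Run 4: 15-Fold | Naïve Bayes | 1.00 | 0.50 | 0.67 |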


4.1.2 Naive Bayes Classifier

This classifier works by calculating a set of probabilities from the frequencies and combinations of values in the dataset. The class with the higher probability is considered the most likely class (Karo et al., 2022) and is assigned to the record. Based on the performance measures in Tables 3 and 4, we note that the naïve Bayes algorithm is a suitable classifier for students’ satisfaction with online learning, as in other EDM-related models (Karo et al., 2022), when the dataset is highly dimensional, since naïve Bayes is comparatively robust to high dimensionality.
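The frequency-counting idea can be sketched for categorical attributes as follows. This is a minimal illustration, not the KNIME learner: the add-one (Laplace) smoothing is an assumption introduced here to avoid zero probabilities, and the attribute values and six training records are hypothetical.

```python
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    """Count class frequencies and per-class value frequencies for each attribute."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (attribute index, class) -> value counts
    attribute_values = defaultdict(set)  # attribute index -> distinct values seen
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(i, label)][value] += 1
            attribute_values[i].add(value)
    return class_counts, value_counts, attribute_values


def predict(model, row):
    """Return the most probable class and the unnormalised score of each class."""
    class_counts, value_counts, attribute_values = model
    total = sum(class_counts.values())
    scores = {}
    for label, n_label in class_counts.items():
        score = n_label / total  # prior P(class)
        for i, value in enumerate(row):
            # Add-one smoothing (an assumption of this sketch).
            numerator = value_counts[(i, label)][value] + 1
            denominator = n_label + len(attribute_values[i])
            score *= numerator / denominator
        scores[label] = score
    return max(scores, key=scores.get), scores


# Hypothetical records: (would recommend online learning, internet connection).
rows = [
    ("agree", "stable"), ("agree", "stable"), ("agree", "unstable"),
    ("disagree", "unstable"), ("disagree", "unstable"), ("disagree", "stable"),
]
labels = [True, True, True, False, False, False]  # True = Satisfied

model = train_naive_bayes(rows, labels)
predicted, scores = predict(model, ("agree", "stable"))
print(predicted, scores)
```

Because each attribute contributes an independent factor to the score, adding attributes only adds counting tables rather than combinations of attributes, which is why the method scales gracefully with the number of attributes.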

5 DISCUSSION

The Covid-19 pandemic created many challenges in every aspect of the educational sector, and transitioning to online education was the riskiest. Researchers started studying the aftermath of the transition by measuring the satisfaction and performance of students and faculty members, using both statistical and machine learning methods. However, the use of machine learning to predict satisfaction is limited in Jordan. The authors therefore focused in this study on the satisfaction indicators applicable to the characteristics of higher education in Jordan, building on the insights provided by similar statistical research.

The model used in this research included the decision tree algorithm, which took part in feature selection, helping with dimensionality reduction and enhancing prediction performance. It was also used as a predictor of satisfaction alongside the naïve Bayes algorithm. Originally, all 25 features from the survey remained in the dataset on which both classifiers were trained and tested. However, after running the model, results showed that only 11 of the features were relevant and significantly affected the model. Naïve Bayes performed better than the decision tree at first, owing to the existence of redundant and irrelevant features. After feature reduction, both classifiers performed better, and the decision tree outperformed naïve Bayes in the satisfaction prediction.

Results showed that students who were satisfied were likely to recommend online learning to others. Those satisfied students agreed that group projects were satisfactory, indicating that students were highly affected by their ability to work with colleagues; previous research showed similar results. On the other hand, students who were not satisfied mostly indicated having issues accessing the internet for their studies due to an unstable internet connection in some areas. Similarly, studies showed that the internet connection was amongst the most important features contributing to the dissatisfaction of students, as having a stable internet connection is costly in some countries (Leo et al., 2021). Furthermore, the researchers noted that the academic integrity and misconduct indicator made the least contribution to satisfaction, indicating either minimal misconduct within the selected sample or a common behaviour among students that they do not wish to declare.

6 IMPLICATIONS TO HIGHER EDUCATION

Meticulous technological innovations in the sciences related to how people learn in different practices, and to how such learning is measured in terms of success and satisfaction, bring optimism about developing new approaches that might help students succeed and adapt in higher education by making the nature of the learning practice used, and the progress of their learning, as clear as possible. Since online learning has carried on in Jordan after the pandemic, and bearing in mind that this online learning experience is still at an early stage in the country’s higher educational institutions, this study is proposed as a valuable contribution to the student satisfaction studies conducted in Jordan. It helps researchers and institutions identify the most relevant indicators affecting students’ satisfaction and hence draw a clear picture of how online education should be planned and executed.

The machine learning techniques used in this research can similarly be used to predict faculty satisfaction, as well as student and faculty performance in online learning, thus providing a novel area for artificial intelligence studies in Jordan. Lastly, the methodology used in this research highlights the importance of feature engineering and pre-processing suited to each type of machine learning classifier. It indicates the importance of treating high dimensionality and model overfitting before proceeding with the processing: even if the total number of dataset items is not relatively large, the model in hand may still overfit.


7 CONCLUSION AND FUTURE RESEARCH

The findings of this study revealed several indicators significantly affecting student satisfaction. Using the decision tree in the prediction allowed feature dimensionality to be reduced, thus decreasing the computational cost of the final model and suggesting that decision trees can perform better in satisfaction studies in the EDM field when the data are well fitted to the model. Lastly, the study demonstrated that the naïve Bayes classifier, which also provided relatively strong performance, is suitable for such studies when the dimensionality of the dataset is high and the number of instances is sufficient for the study.

Future research will enlarge the study sample to include more individuals from public universities in order to obtain a more balanced distribution of private to public university students. This could alter the results, as many indicators are affected by the fact that the technological capabilities of private universities may not be equivalent to those of public universities. Additionally, future studies may consider students from neighbouring countries rather than Jordan alone, as engaging more countries and institutions would lend higher validity to the proposed model in terms of factors and constructs.

REFERENCES

Ab Hamid, S. N., Omar Zaki, H., & Che Senik, Z. (2022). Students’ satisfaction and intention to continue online learning during the Covid-19 pandemic. Malaysian Journal of Society and Space, 18(3). https://doi.org/10.17576/geo-2022-1803-09

Abdelkader, H. E., Gad, A. G., Abohany, A. A., & Sorour, S. E. (2022). An Efficient Data Mining Technique for Assessing Satisfaction Level with Online Learning for Higher Education Students during the COVID-19. IEEE Access, 10, 6286–6303. https://doi.org/10.1109/ACCESS.2022.3143035

Agyeiwaah, E., Badu Baiden, F., Gamor, E., & Hsu, F. C. (2022). Determining the attributes that influence students’ online learning satisfaction during COVID-19 pandemic. Journal of Hospitality, Leisure, Sport, and Tourism Education, 30. https://doi.org/10.1016/j.jhlste.2021.100364

Alsammak, I. L. H., Mohammed, A. H., & Nasir, ntedhar

S. (2022). E-learning and COVID-Performance Using

Data Mining Algorithms. Webology, 19(1), 3419–3432.

https://doi.org/10.14704/web/v19i1/web19225

Alsoud, A. R., & Harasis, A. A. (2021). The impact of

covid-19 pandemic on student’s e-learning experience

in Jordan. Journal of Theoretical and Applied

Electronic Commerce Research, 16(5), 1404–1414.

https://doi.org/10.3390/jtaer16050079

Azizan, S. N., Lee, A. S. H., Crosling, G., Atherton, G.,

Arulanandam, B. V., Lee, C. E., & Rahim, R. B. A.

(2022). Online Learning and COVID-19 in Higher

Education: The Value of IT Models in Assessing

Students’ Satisfaction. International Journal of

Emerging Technologies in Learning, 17(3), 245–278.

https://doi.org/10.3991/ijet.v17i03.24871

Basheti, I. A., Nassar, R. I., & Halalşah, İ. (2022). The

Impact of the Coronavirus Pandemic on the Learning

Process among Students: A Comparison between

Jordan and Turkey. Education Sciences, 12(5). https://doi.org/10.3390/educsci12050365

Harangi-Rákos, M., Ștefănescu, D., Zsidó, K. E., &

Fenyves, V. (2022). Thrown into Deep Water:

Feedback on Student Satisfaction— A Case Study in

Hungarian and Romanian Universities. Education

Sciences, 12(1). https://doi.org/10.3390/EDUCSCI12010036

Jarab, F., Al-Qerem, W., Jarab, A. S., & Banyhani, M.

(2022). Faculties’ Satisfaction with Distance Education

During COVID-19 Outbreak in Jordan. Frontiers in Education, 7. https://doi.org/10.3389/feduc.2022.789648

Karo, I. M. K., Fajari, M. Y., Fadhilah, N. U., & Wardani, W. Y. (2022). Benchmarking Naïve Bayes and ID3 Algorithm for Prediction Student Scholarship. IOP Conference Series: Materials Science and Engineering, 1232(1), 012002. https://doi.org/10.1088/1757-899x/1232/1/012002

Klijn, F., Alaoui, M. M., & Vorsatz, M. (2022). Academic integrity in on-line exams: Evidence from a randomized field experiment. https://doi.org/10.13039/501100011033

Leo, S., Alsharari, N. M., Abbas, J., & Alshurideh, M. T. (2021). From Offline to Online Learning: A Qualitative Study of Challenges and Opportunities as a Response to the COVID-19 Pandemic in the UAE Higher Education Context. In Studies in Systems, Decision and Control (Vol. 334, pp. 203–217). Springer Science and Business Media Deutschland. https://doi.org/10.1007/978-3-030-67151-8_12

Limna, P., Jakwatanatham, S., Siripipattanakul, S., Kaewpuang, P., & Sriboonruang, P. (2022). A Review of Artificial Intelligence (AI) in Education during the Digital Era. https://ssrn.com/abstract=4160798

Looi, K., Wye, C. K., & Bahri, E. N. A. (2022). Achieving Learning Outcomes of Emergency Remote Learning to Sustain Higher Education during Crises: An Empirical Study of Malaysian Undergraduates. Sustainability (Switzerland), 14(3). https://doi.org/10.3390/su14031598

Mengash, H. A. (2020). Using data mining techniques to predict student performance to support decision making in university admission systems. IEEE Access, 8, 55462–55470. https://doi.org/10.1109/ACCESS.2020.2981905

Pagani-Núñez, E., Yan, M., Hong, Y., Zeng, Y., Chen, S.,

Zhao, P., & Zou, Y. (2022). Undergraduates’

perceptions on emergency remote learning in ecology


in the post-pandemic era. Ecology and Evolution, 12(3).

https://doi.org/10.1002/ece3.8659

Spencer, D., & Temple, T. (2021). Examining students’

online course perceptions and comparing student

performance outcomes in online and face-to-face

classrooms. Online Learning Journal, 25(2), 233–261.

https://doi.org/10.24059/olj.v25i2.2227

Szopiński, T., & Bachnik, K. (2022). Student evaluation of

online learning during the COVID-19 pandemic.

Technological Forecasting and Social Change, 174.

https://doi.org/10.1016/j.techfore.2021.121203

Tuan, L. A., & Tram, N. T. H. (2022). Examining student satisfaction with online learning. International Journal of Data and Network Science, 6(1), 273–280. https://doi.org/10.5267/j.ijdns.2021.9.001

Tweissi, A., Al Etaiwi, W., & Al Eisawi, D. (2022). The accuracy of AI-based automatic proctoring in online exams. Electronic Journal of e-Learning, 20(4), 419–435. https://doi.org/10.34190/ejel.20.4.2600

Yousaf, H. Q., Rehman, S., Ahmed, M., & Munawar, S. (2022). Investigating students’ satisfaction in online learning: the role of students’ interaction and engagement in universities. Interactive Learning Environments, 1-18. https://doi.org/10.1080/10494820.2022.2061009
