Climbing with Virtual Mentor by Means of Video-Based Motion Analysis

Julia Richter (1, a), Raul Beltrán Beltrán (1, b), Guido Köstermeyer (2, c) and Ulrich Heinkel (1, d)

(1) Professorship Circuit and System Design, Chemnitz University of Technology, Reichenhainer Straße 70, Chemnitz, Germany

(2) Department Sportwissenschaft und Sport, Friedrich-Alexander-Universität Erlangen-Nürnberg, Schlossplatz 4, 91054 Erlangen, Germany

Keywords:

Computer Vision, Human Pose Estimation, Climbing Motion Analysis, Feedback Systems.

Abstract:

Due to the growing popularity of climbing, research on non-invasive, camera-based motion analysis has received increasing attention. While existing work uses invasive technologies, such as wearables or modified walls and holds, or focuses on competitive sports, we propose, for the first time, a system that uses video analysis to automatically detect motion errors that are typical for beginners with little climbing experience. In our work, we imitate a virtual mentor that provides an analysis directly after the climber has completed a route. We employed a fourth-generation iPad Pro with LiDAR to record climbing sequences, from which the climber's skeleton is extracted using the Vision framework provided by Apple. We adapted an existing method to detect joint movements and introduced a finite state machine that represents the repetitive phases that occur in climbing. From the detected movements, the current phase can be determined. Based on the phase, individual errors that are only relevant in specific phases are extracted from the video sequence and presented to the climber. Initial empirical tests with 14 participants demonstrated the working principle. We are currently collecting data from climbing beginners for a quantitative evaluation of the proposed system.

1 INTRODUCTION

In recent years, climbing has become a mainstream sport. Owing to this popularity, but also to the increasing computing power of mobile devices and improved sensor technologies, climbing motion analysis has become an increasingly investigated research topic. To date, existing work has investigated sensors integrated into the wall and the holds, body-worn sensors, and camera-based, non-contact sensor technology, among others (see Section 2). So far, however, there is no application that analyses a climber's pose on the basis of video analysis to automatically detect the motion errors of beginners. With climbing becoming a mainstream sport, such a system would help to teach people with little or no climbing experience the correct techniques that would normally be introduced by a trainer.

(a) https://orcid.org/0000-0001-7313-3013

(b) https://orcid.org/0000-0001-6612-3212

(c) https://orcid.org/0000-0002-2681-5801

(d) https://orcid.org/0000-0002-0729-6030

In our study, which is still a work in progress, we propose such a system, i. e. a virtual mentor, that uses a skeleton model to segment a climber's motion into the typical repetitive climbing phases and subsequently analyses the motion automatically for the technique errors that beginners typically make in these phases.

The paper is structured as follows: Section 2 reviews previous work, followed by an introduction to climbing theory in Section 3. Section 4 then introduces the sensor setup, reviews existing skeleton detection algorithms with respect to their suitability for climbing applications, and explains our system components. The developed application, which was tested in initial empirical evaluations with 14 participants, is presented in Section 5. A summary and an outlook are given in Section 6.

2 RELATED WORK

The sensors employed in previous work on climbing systems can be grouped into three main categories:

Richter, J., Beltrán, R., Köstermeyer, G. and Heinkel, U.

Climbing with Virtual Mentor by Means of Video-Based Motion Analysis.

DOI: 10.5220/0011959300003497

In Proceedings of the 3rd International Conference on Image Processing and Vision Engineering (IMPROVE 2023), pages 126-133

ISBN: 978-989-758-642-2; ISSN: 2795-4943

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

• Instrumented climbing walls, in which sensors are integrated into the wall or the holds, such as strain gauges, e. g. (Quaine et al., 1997a), (Quaine et al., 1997b), (Quaine and Martin, 1999), force-torque sensors, e. g. (Aladdin and Kry, 2012), (Pandurevic et al., 2018), and capacitive sensors, e. g. (Parsons et al., 2014).

• Body-worn sensor technology, such as body-mounted acceleration sensors, e. g. (Ebert et al., 2017), (Kosmalla et al., 2016), (Kosmalla et al., 2015), and visual markers, e. g. (iROZHLAS, 2019), (Cordier et al., 1994), (Sibella et al., 2007), (Reveret et al., 2018).

• Camera-based, non-contact sensor technology, e. g. (Pandurevic et al., 2022).

Camera-based approaches offer the advantage that they are easy to integrate into a climbing setup, for example without having to change the holds or the wall itself. Furthermore, they are contactless, so that the climber does not have to wear additional equipment, which would have to be attached to the body and may restrict movement or even lead to injuries (e. g. wristbands). Moreover, the increasingly high-quality cameras integrated into mobile devices offer new possibilities for climbing applications.

In the following, a selection of very early as well as very recent camera-based approaches for climbing motion analysis and feedback systems is presented.

A very early work is the marker-based approach by (Sibella et al., 2007). They demonstrated that it is possible to compare the entropy, velocity, and induced force of climbers by tracking markers taped to the climber's body with a camera and calculating the body's centre of gravity from these markers.

In lead climbing, Adam Ondra and Štěpán Stráník were equipped with reflective markers and analysed via a commercial motion capture system (iROZHLAS, 2019). The centre of gravity was determined for both climbers, and their distances from the wall were compared. This showed that Adam Ondra keeps his centre of gravity significantly closer to the wall during a difficult move than Štěpán Stráník does.

In speed climbing, a very recent work (Pandurevic et al., 2022) demonstrated the application of OpenPose (Cao et al., 2018), (OpenPose, 2019). By measuring body joint angles, velocities, contact times and path lengths, they compared the speed climbing techniques of top athletes and identified potential for improvement.

Reveret et al. investigated energy losses in speed climbing caused by non-upward climbing movements, i. e. lateral and horizontal components (Reveret et al., 2018). For this purpose, they used two camera-equipped drones to analyse speed climbing movements in terms of the energy expended, expressed through velocities. The climber wore a harness with a visual marker, which was localised in three dimensions by the two drone cameras. The marker trajectories were recorded so that the velocity components could subsequently be measured in the vertical, lateral and horizontal directions.

Kosmalla et al. presented a system for visualising reference motions on a bouldering wall (Kosmalla et al., 2017). They calculated the climber's centre of gravity from the 3-D skeleton provided by the Kinect v2 in order to determine the location of the projection onto the wall. They refer to the combination of sensor and projection unit as the betaCube. Their work resulted in the ClimbTrack assistive technologies for sports climbing, which are available with the betaCube (ClimbTrack, 2019).

A more detailed overview of sensors, motion capture, and climbing motion analysis algorithms is provided by (Richter et al., 2020).

3 CLIMBING THEORY

While a climber ascends a route, the three phases reaching, stabilisation and preparation occur repeatedly. An ideal climbing motion sequence is characterised by the following procedure: Reaching means that the climber shifts his weight to one of his legs and stands up over this leg while reaching for the next hold with one hand. This reaching hand becomes the holding hand. After the climber has gripped the hold, he is in the stabilisation phase, in which he ideally lowers his body. He is then able to look for the next holds in a rather comfortable position. From the stabilisation phase, the climber either re-sets the feet to prepare the next reach, thereby transitioning to the preparation phase, or reaches directly for the next hold without re-setting the feet, resulting in a transition back to the reaching phase. From the preparation phase, the climber goes to the reaching phase once the feet are finally set; at this point, the hip and one hand start moving. The phases and their characteristics are summarised in the green bubbles in Figure 1. Additionally, the items in the gray boxes indicate the techniques a climber should pay attention to during the respective phase.

Motion errors are common, especially among beginners. Each error can be attributed to a specific phase and should only be detected in that phase. This is explained using an example in Section 4.5. Table 1 describes the correct techniques and the errors that occur when a specific technique is not performed correctly. Additionally, Figure 2 presents a visual summary of the typical errors that we detect while a climber is on a route.

Figure 1: Climbing phases with their characteristics (green bubbles), associated techniques (gray boxes), and transitions between the phases that are based on hand, foot and hip movement. [Diagram: Start (both hands on holds); Reaching (weight shifting, standing up, reaching; techniques: weight shift, hip close to wall, reaching hand supports, both feet set); Stabilisation (lowering of body; technique: shoulder relaxing); Preparation (re-setting of feet; technique: decoupling). Transition labels: "hand movement", "foot movement", "reaching hand stops, no hip movement in vertical direction", and "no foot movement and hip movement or hand movement".]

Figure 2: Principles of error detection. (a), (b), (c), (d), (e) and (f) correspond to the errors described in Table 1.

Figure 3: System setup with iPad Pro.

4 METHODS

The following sections present the components of the

developed feedback system.

4.1 Setup and Sensor

In our study, we employed the fourth-generation iPad Pro, which provides a light detection and ranging (LiDAR) sensor that, in our case, delivers depth measurements at 60 frames per second. From this depth information, a 3-D point cloud with a dense grid can be calculated. Besides providing the sensor data, the iPad also serves as the computation and visualisation unit on which the sensor data is processed and the feedback is prepared for the climber. Figure 3 illustrates the setup of our system. The iPad can be installed at an arbitrary location in front of the wall, ideally at a distance of four to six metres. Within that range, the whole wall is visible in our setup, while the LiDAR depth information is still reliable. During a single route recording, the position should remain fixed.

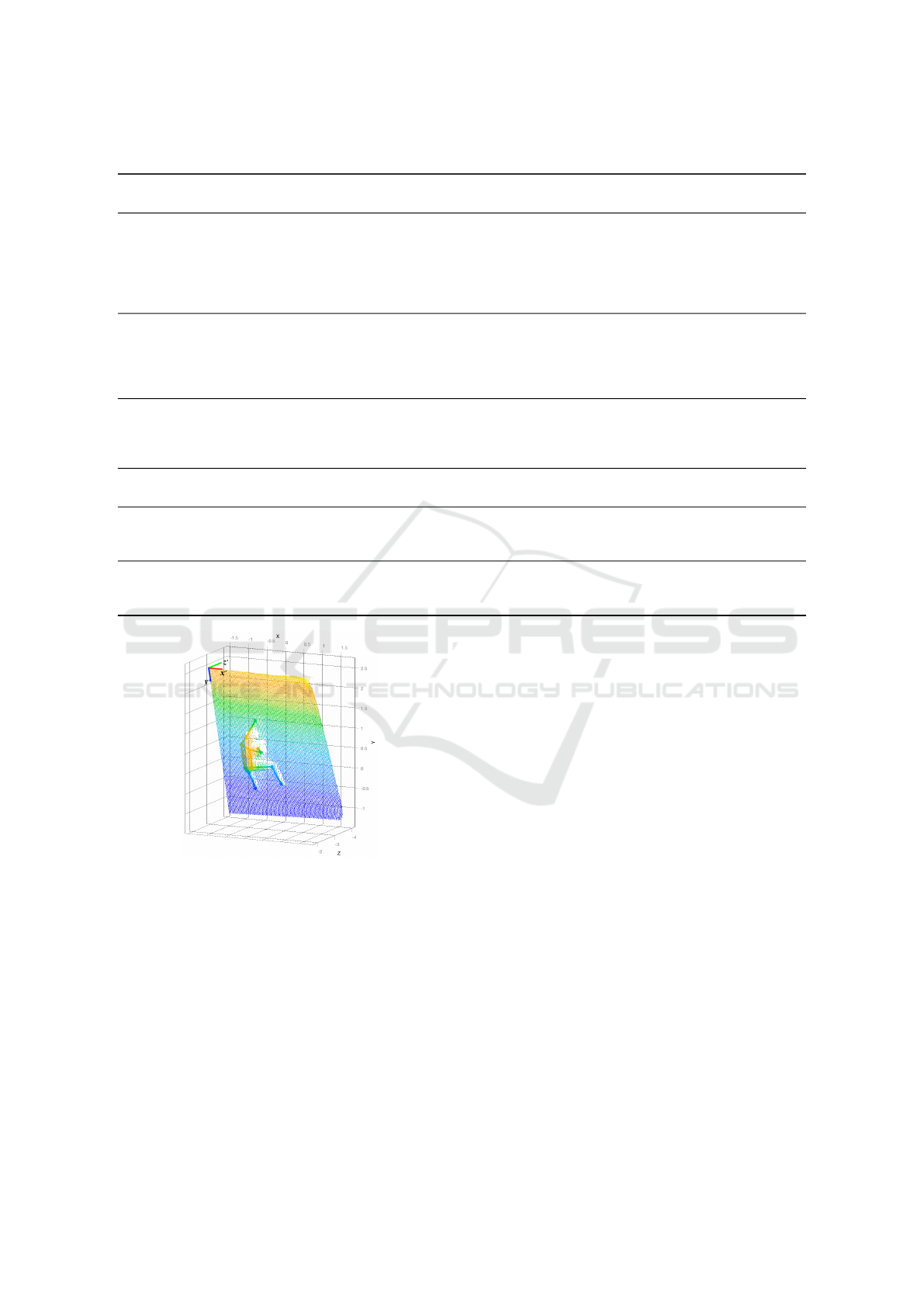

Figure 4 shows an example of the 3-D point cloud provided by the iPad. This point cloud is used for 3-D skeleton extraction and extrinsic camera calibration, which are explained in the following sections.
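How a depth map becomes a point cloud can be illustrated with the standard pinhole camera model. The following is a minimal sketch under stated assumptions, not the app's implementation: it assumes a metric depth map pixel-aligned with the image and known camera intrinsics `fx`, `fy`, `cx`, `cy` (hypothetical parameter names; on the iPad they would come from the device's camera calibration, which is not shown).

```python
import numpy as np


def depth_to_point_cloud(depth: np.ndarray, fx: float, fy: float,
                         cx: float, cy: float) -> np.ndarray:
    """Back-project an HxW depth map (metres) into an HxWx3 organised point cloud.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, Z = depth.
    Pixels without a valid measurement (depth <= 0) are set to NaN.
    """
    h, w = depth.shape
    # u: column index, v: row index of every pixel (both arrays have shape HxW)
    u, v = np.meshgrid(np.arange(w, dtype=np.float64),
                       np.arange(h, dtype=np.float64))
    z = depth.astype(np.float64)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    cloud = np.stack((x, y, z), axis=-1)
    cloud[z <= 0] = np.nan  # mark invalid depth samples
    return cloud
```

Keeping the cloud organised (one 3-D point per pixel) is a design choice that makes the later lookup of points around a detected 2-D joint a simple array slice.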

IMPROVE 2023 - 3rd International Conference on Image Processing and Vision Engineering


Table 1: Description of correct execution and climbing errors.

| Name of correct technique | Correct execution description | Error description |
|---|---|---|
| (a) Weight shift | While standing up, the weight is shifted over one leg (normally the leg opposite the holding hand), the knee moves vertically in front of the toe of that leg, and the hip moves first over the leg and then upwards. In this way, the main power originates from the large leg muscles and not from the smaller arm muscles. | The climber does not shift the weight over the supporting leg and stands up while pulling on the arm. |
| (b) Hip close to the wall | When the climber moves his or her weight upwards, the main weight should remain on the supporting leg. This can be achieved by keeping the hip close to the wall. In this way, the main weight rests on the legs and does not pull on the arms. | The hip is far away from the wall, resulting in the body weight pulling on the holds gripped by the hands. |
| (c) Reaching hand supports | The reaching hand should support the process of standing up as long as possible to stabilise the body and to save energy. The reaching process should not take longer than one second. | The reaching hand leaves the hold too early. |
| (d) Both feet set | While standing up, both feet should be in contact with the wall, because this stabilises the body. | Only one foot is in contact with the wall. |
| (e) Shoulder relaxing | After reaching, the climber should lower his or her position again so that the arms are as straight as possible. This position saves energy. | After reaching, the climber remains in the position in which the arms are probably bent. |
| (f) Decoupling | When placing the feet, the climber should keep the arm of the holding hand as straight as possible to save energy. | The climber bends the arm of the holding hand, resulting in an unfavourable load on the hold, which requires more strength. |

Figure 4: 3-D point cloud with wall coordinate system at

the top left corner of the wall and extracted 3-D skeleton.

4.2 Skeleton

In a pre-study, together with a partner who may use the developed system in the future, we reviewed several skeleton extraction algorithms, including NUITRACK (NUITRACK, 2022), OpenPose (OpenPose, 2019), PoseNet (GitHub, 2021), (Papandreou et al., 2018), Apple Vision (Apple, 2022b) and Apple ARKit (Apple, 2022a). We found that NUITRACK, PoseNet and Apple ARKit are unsuitable for climbing applications, since they are inaccurate when the person is viewed from behind and when occlusions and unconventional poses are present. PoseNet could, however, be retrained with climbing poses. OpenPose was found to be suitable, but it is licence-free only for academic use, so companies face licensing problems when they want to use it commercially. That is why we decided to use the Vision framework by Apple (Apple, 2022b), which provides a 2-D skeleton suitable for climbing pose detection. A comprehensive review of 3-D human pose estimation is provided by (Desmarais et al., 2021).

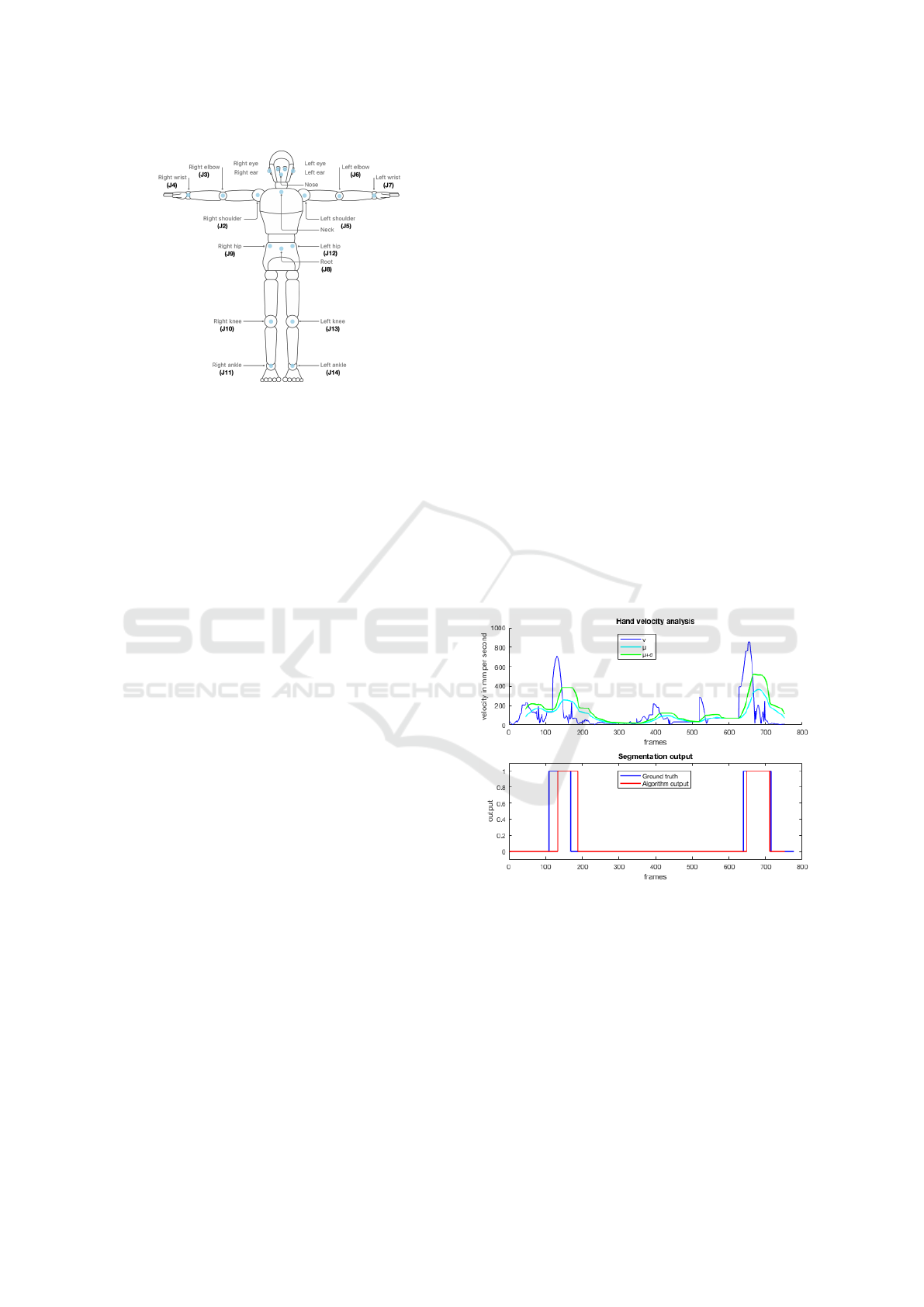

The iPad makes it possible to extract a 2-D skeleton from the RGB image data. By means of the Vision framework, 19 body features can be detected, as illustrated in Figure 5. To obtain a 3-D skeleton for view-invariant motion analysis, we calculated the 3-D coordinates of the relevant joints by means of the 3-D point cloud: a 2-D joint is converted into 3-D wall coordinates, and its neighbouring points are averaged to calculate the final joint coordinate. For the transformation of 2-D joints detected in the RGB image into 3-D joints in world coordinates, a calibration is performed. An example of a calculated 3-D skeleton is shown in Figure 4. The joints relevant for our climbing evaluation are the ankle, knee, elbow, wrist, shoulder and hip joints as well as the root. In the following, the wrist is denoted as hand and the root as hip.
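The neighbourhood averaging described above can be sketched as follows. This is a minimal illustration, assuming an organised point cloud (HxWx3, NaN where depth is missing) that is pixel-aligned with the RGB image and a joint already converted to pixel coordinates (Vision reports normalised coordinates, so that conversion is assumed to have happened beforehand). The window half-width `radius` is a hypothetical choice, since the neighbourhood size is not stated. Because the transformation into wall coordinates is rigid, averaging in the camera frame and converting afterwards gives the same result as converting first.

```python
import numpy as np


def lift_joint_to_3d(cloud: np.ndarray, u: float, v: float,
                     radius: int = 2) -> np.ndarray:
    """Return the mean 3-D point of the valid cloud points around pixel (u, v).

    cloud:  HxWx3 organised point cloud, NaN where no depth is available.
    (u, v): 2-D joint position in pixels (column, row).
    radius: half-width of the square neighbourhood (hypothetical value).
    Returns a 3-vector, or NaNs if the neighbourhood has no valid point.
    """
    h, w, _ = cloud.shape
    col, row = int(round(u)), int(round(v))
    # Clip the square window to the image borders
    r0, r1 = max(row - radius, 0), min(row + radius + 1, h)
    c0, c1 = max(col - radius, 0), min(col + radius + 1, w)
    patch = cloud[r0:r1, c0:c1].reshape(-1, 3)
    # Keep only points without NaN components
    valid = patch[~np.isnan(patch).any(axis=1)]
    if len(valid) == 0:
        return np.full(3, np.nan)
    return valid.mean(axis=0)
```

Averaging over a small window, rather than reading a single pixel, makes the joint estimate less sensitive to isolated depth dropouts at body edges.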


Figure 5: Vision skeleton (Apple, 2022b).

4.3 Camera Calibration

The aim of the calibration is to express the 3-D joint coordinates in a wall coordinate system. This wall coordinate system has its origin at the top left corner of the wall, while the x-y plane represents the wall surface and the z component the distance from the wall, see Figure 4. Moreover, the calibration is necessary to align the user recording with the reference recording that is available for every route, so that both can be compared with respect to motion errors. Furthermore, the representation of the hand joints in wall coordinates is used to automatically detect when the climber is on the start holds.
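Once the origin and the wall axes are known, expressing a point in wall coordinates is a rigid change of basis. A minimal sketch, assuming the calibration yields the origin (top left corner), the wall-plane normal (z axis) and a direction along the top edge of the wall (x axis); the names `x_dir` and `normal` are hypothetical, and the sign conventions (e.g. whether y points up or down the wall) are not stated in the text and are an assumption here.

```python
import numpy as np


def wall_frame(origin, x_dir, normal):
    """Build the wall frame: returns (origin, R) where the rows of R are the x, y, z axes."""
    z = np.asarray(normal, dtype=float)
    z = z / np.linalg.norm(z)
    x = np.asarray(x_dir, dtype=float)
    x = x - np.dot(x, z) * z       # remove any out-of-plane component
    x = x / np.linalg.norm(x)
    y = np.cross(z, x)             # completes a right-handed frame
    return np.asarray(origin, dtype=float), np.vstack((x, y, z))


def to_wall_coords(points, origin, rotation):
    """Express Nx3 camera-frame points in the wall coordinate system."""
    return (np.asarray(points, dtype=float) - origin) @ rotation.T
```

Re-orthogonalising `x_dir` against the normal guards against small errors in the detected edge direction, so the resulting rotation stays orthonormal.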

The orientation of the coordinate system is determined by first detecting the largest plane in the point cloud using the RANSAC algorithm (Fischler and Bolles, 1981). This plane defines the x and y axes, and its normal vector defines the z axis. The top left corner, i. e. the origin, is found by segmenting the climbing wall in the point cloud by means of a 2-D polygonal approximation (Ramer, 1972). This calibration process is performed at the beginning of every route recording, so that the recorded data remains view-invariant if the sensor is relocated. The calibration method works for any rectangular wall, such as the Kilter (Kletterkultur, 2019), Moon (Moon Climbing, 2019) and Tension (Tension Climbing, 2019) boards.
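The plane detection step can be illustrated with a basic RANSAC loop: repeatedly fit a plane through three random points and keep the candidate with the most inliers. This is a generic sketch of RANSAC (Fischler and Bolles, 1981) with assumed parameters (iteration count, inlier tolerance in metres), not the authors' implementation; libraries such as Open3D offer a ready-made equivalent (`PointCloud.segment_plane`). The corner detection by polygonal approximation is not shown.

```python
import numpy as np


def ransac_plane(points: np.ndarray, n_iters: int = 500, tol: float = 0.02,
                 seed: int = 0):
    """Fit the dominant plane n . p + d = 0 to an Nx3 point array with RANSAC.

    Returns (normal, d, inlier_mask); the normal has unit length.
    tol is the maximum point-to-plane distance of an inlier (assumed metres).
    """
    rng = np.random.default_rng(seed)
    best_mask, best_count = None, -1
    best_normal, best_d = None, None
    for _ in range(n_iters):
        # Minimal sample: three distinct points define a candidate plane
        p0, p1, p2 = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-12:           # collinear sample, no unique plane
            continue
        normal /= norm
        d = -np.dot(normal, p0)
        mask = np.abs(points @ normal + d) < tol
        count = int(mask.sum())
        if count > best_count:     # keep the plane with the largest consensus set
            best_mask, best_count = mask, count
            best_normal, best_d = normal, d
    return best_normal, best_d, best_mask
```

In practice, the inliers of the winning plane are often refitted by least squares for a more accurate normal; that refinement is omitted here for brevity.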

4.4 Movement Detection

Joint movement detection is a pre-processing step for the subsequent phase detection. To determine the climber's current phase, we have to know which joints are moving, because the phase transitions depend on joint movements, see Figure 1. In particular, the hands, the feet and the hip are relevant here. Joint movement detection is performed by projecting the 3-D points of these joints onto the x-y wall plane. Then, the joint velocity v on this plane, in mm per second, is evaluated.

Here, we used an adapted version of (Beltrán B. et al., 2022): instead of an accumulated acceleration, we calculate the mean velocity µ and the standard deviation σ of the joint velocity within a sliding window. For every joint and frame, the sum µ + σ is then compared against a route-dependent threshold thr, which is set to 40 % of the maximum velocity of the respective joint within the route. If the sum exceeds this threshold, the joint is detected to be moving (mov = 1); otherwise, it is assumed not to be in movement (mov = 0), as represented in Equation 1.

$$
\mathit{mov} =
\begin{cases}
1, & \text{if } \mu + \sigma > 0.4 \cdot \max(v), \\
0, & \text{else.}
\end{cases}
\tag{1}
$$
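Equation 1 can be sketched for a single joint as follows. The sketch assumes a trajectory of x-y wall-plane positions in mm sampled at a fixed frame rate, velocities obtained by finite differences, and a centred sliding window of hypothetical length `win` frames (the window length and alignment are not stated in the text); the factor 0.4 is taken from the text.

```python
import numpy as np


def detect_movement(xy: np.ndarray, fps: float = 60.0, win: int = 15,
                    ratio: float = 0.4) -> np.ndarray:
    """Label each frame as moving (1) or at rest (0) following Equation 1.

    xy:    Tx2 joint positions projected onto the wall plane, in mm (T >= 2).
    fps:   frame rate used to convert per-frame displacement into mm/s.
    win:   sliding-window length in frames (assumed value, centred window).
    ratio: threshold factor, thr = ratio * max(v) over the whole route.
    """
    # Speed in mm/s between consecutive frames; the first value is repeated
    # so that v has exactly one entry per frame.
    step = np.linalg.norm(np.diff(xy, axis=0), axis=1) * fps
    v = np.concatenate(([step[0]], step))
    thr = ratio * v.max()
    half = win // 2
    mov = np.zeros(len(v), dtype=int)
    for t in range(len(v)):
        window = v[max(t - half, 0): t + half + 1]
        mu, sigma = window.mean(), window.std()
        mov[t] = int(mu + sigma > thr)
    return mov
```

Adding σ to µ makes the detector react earlier at the start of a movement, when the window contains only a few high-velocity samples, so moving segments tend to be flagged slightly before and after the actual motion.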

Figure 6 presents the principle of movement detection for an example joint. The upper graph shows the velocity, the sliding-window mean velocity and the standard deviation along the route. The lower graph illustrates the labelled ground truth and the obtained movement output mov.

Figure 6: Principle of joint movement detection.

4.5 Phase Detection

Based on the movement information of the hands, feet and hip, the current climbing phase can be determined. In this study, we propose a finite state machine that represents the process of climbing movements along a route. Knowing the climber's current phase is necessary because, depending on the phase, only certain errors have to be checked. In the stabilisation and preparation phases, for example, it is important that the holding arm is straight, whereas in the reaching phase it is only natural that it is bent. Consequently, we should check for a straight arm only in the stabilisation and preparation phases. Figure 1 illustrates how the detected movements of the specific joints determine the transitions between the phases.

At the beginning of each route, the climber has to start with both hands on the start holds. To detect this, we evaluate whether both hands are at rest and close to the wall by checking whether the z components of the 3-D wrist joints are within a defined range around the wall plane. Next, we wait for either a hand or a foot movement to enter the reaching or the preparation phase, respectively. The moving hand is then denoted as the next holding hand. From the reaching phase, the climber transitions to the stabilisation phase when the hand stops on the hold and no vertical hip movement is detected any more. From the stabilisation phase, it is possible to return to the reaching phase in case of new hand movements. Otherwise, once a foot movement occurs, the climber is considered to be in the preparation phase, in which he or she re-organises the feet. Once no foot movement is measured because the climber has finished re-setting the feet, or once a hand movement to the next hold is detected, we switch to the reaching phase. For the foot criterion, we additionally check whether there is a hip movement. Checking only whether the feet are at rest would not be a sufficient indicator for the transition to the reaching phase, because the climber might re-set the feet again and thus remain in the preparation phase.
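The transitions described above can be encoded as a small finite state machine. This is a minimal sketch, assuming per-frame boolean movement flags from the movement detection step (the `Frame` fields are hypothetical names) and simplifying "the reaching hand stops" to "no hand is moving". Where the text leaves open which of a simultaneous hand and foot movement takes precedence in the start state, the sketch assumes the hand movement wins, as it does from the stabilisation phase.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Phase(Enum):
    WAIT_START = auto()     # waiting for both hands on the start holds
    START = auto()
    REACHING = auto()
    STABILISATION = auto()
    PREPARATION = auto()


@dataclass
class Frame:
    """Per-frame movement flags (hypothetical input format)."""
    hand_mov: bool                # any hand moving
    foot_mov: bool                # any foot moving
    hip_mov: bool                 # hip moving in the wall plane
    hip_vertical_mov: bool        # hip moving in vertical direction
    hands_at_wall: bool = False   # both wrists within the z range around the wall


def step(phase: Phase, f: Frame) -> Phase:
    """Return the phase for the current frame given the previous phase."""
    if phase is Phase.WAIT_START:
        # Start condition: both hands at rest and close to the wall
        if f.hands_at_wall and not f.hand_mov:
            return Phase.START
    elif phase in (Phase.START, Phase.STABILISATION):
        # Hand movement is checked first, then foot movement
        if f.hand_mov:
            return Phase.REACHING
        if f.foot_mov:
            return Phase.PREPARATION
    elif phase is Phase.REACHING:
        # Hand has stopped on the hold and no vertical hip movement any more
        if not f.hand_mov and not f.hip_vertical_mov:
            return Phase.STABILISATION
    elif phase is Phase.PREPARATION:
        # Feet at rest AND hip moving, or a hand reaching for the next hold
        if f.hand_mov or (not f.foot_mov and f.hip_mov):
            return Phase.REACHING
    return phase


def run(frames):
    """Apply the state machine to a sequence of frames and return all phases."""
    phase, phases = Phase.WAIT_START, []
    for f in frames:
        phase = step(phase, f)
        phases.append(phase)
    return phases
```

The hip condition in the preparation state reflects the reasoning in the text: feet at rest alone would also be observed between two foot re-settings, so it is not treated as sufficient for entering the reaching phase.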

4.6 Error Detection

Figure 2 visualises the error detection metrics, in which $d_{fk}$ denotes the horizontal component of the distance between the foot of the supporting leg and the knee, $d_{hip}$ the distance between the hip positions of the user and the reference climber, $t_{hand}$ the reaching time of the reaching hand, $d_{fw}$ the distance between one foot and the wall, $\phi_e$ the elbow angle and $\phi_s$ the shoulder angle.
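Most of these metrics reduce to simple vector geometry on the 3-D skeleton in wall coordinates. A minimal sketch, assuming joints given as 3-vectors in wall coordinates (x along the wall, y vertical, z distance from the wall). The exact definitions are not given in the text, so the following choices are assumptions: the elbow angle is measured at the elbow between upper arm and forearm (180 degrees for a straight arm), the shoulder angle at the shoulder between trunk (towards the hip) and upper arm, and the "horizontal component" of the foot-knee distance combines the x and z differences.

```python
import numpy as np


def joint_angle(a, b, c) -> float:
    """Angle at joint b in degrees between the segments b->a and b->c."""
    u = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    w = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    cos = np.dot(u, w) / (np.linalg.norm(u) * np.linalg.norm(w))
    # Clip to guard against rounding slightly outside [-1, 1]
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def elbow_angle(shoulder, elbow, wrist) -> float:
    """phi_e: 180 degrees corresponds to a fully straight arm."""
    return joint_angle(shoulder, elbow, wrist)


def shoulder_angle(hip, shoulder, elbow) -> float:
    """phi_s: angle between trunk (shoulder->hip) and upper arm (assumed definition)."""
    return joint_angle(hip, shoulder, elbow)


def d_fk(foot, knee) -> float:
    """Horizontal foot-knee offset from the x and z components (y is ignored)."""
    dx = float(knee[0]) - float(foot[0])
    dz = float(knee[2]) - float(foot[2])
    return float(np.hypot(dx, dz))


def d_fw(foot) -> float:
    """Distance of a foot from the wall plane, i.e. |z| in wall coordinates."""
    return abs(float(foot[2]))
```

With these definitions, the checks for errors (e) and (f) amount to comparing `elbow_angle` and `shoulder_angle` against thresholds, whose values are not given in the text.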

To analyse whether error (a) has occurred during the reaching phase, we check for the supporting leg, which is defined to be on the same body side as the reaching hand, whether $d_{fk}$ exceeds a certain threshold for a certain number of frames within this phase. If so, we assume that the climber has shifted the weight over the supporting leg and that the error has not occurred; otherwise, we presume that the error has occurred. Error (b) is determined in the reaching phase by comparing $d_{hip}$, i. e. the difference between the hip-to-wall distances of the reference climber and the current climber (user), which is calculated from the z components, against a threshold. If $d_{hip}$ is higher than the threshold, the frame is marked as having error (b). To find the reference frame that corresponds to the current user frame, the user sequence has to be aligned with the reference sequence. For this alignment, we apply Dynamic Time Warping (DTW) to the vectors containing the x and y components of the reference's and the user's hip in wall coordinates, with the Euclidean distance as the distance measure. Error (c) is detected in the reaching phase by measuring the time $t_{hand}$ during which the reaching hand is in motion. If this time exceeds one second, the error is detected. Error (d) has occurred if, in the reaching phase, the distance $d_{fw}$ of one of the feet from the wall, measured in z direction, exceeds a certain threshold. The errors for shoulder relaxing (e) and decoupling (f) show the same features but are checked in different phases, i. e. in the stabilisation and the preparation phase, respectively. Both errors are detected if the elbow angle $\phi_e$ and the shoulder angle $\phi_s$ are lower than a certain threshold.
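The alignment step can be illustrated with textbook dynamic time warping on the 2-D hip trajectories, using the Euclidean distance as the local cost as described above. This O(N·M) implementation is a minimal sketch for illustration only; the text does not state which DTW variant or library is used, and common refinements such as a warping-window constraint are omitted.

```python
import numpy as np


def dtw_path(ref: np.ndarray, usr: np.ndarray):
    """Align two trajectories (Nx2 reference, Mx2 user hip x-y in wall coordinates).

    Returns (total_cost, path), where path is a list of (ref_idx, usr_idx) pairs.
    """
    n, m = len(ref), len(usr)
    # Pairwise Euclidean distances between all reference and user frames
    cost = np.linalg.norm(ref[:, None, :] - usr[None, :, :], axis=2)
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i, j] = cost[i - 1, j - 1] + min(acc[i - 1, j],      # reference advances
                                                 acc[i, j - 1],      # user advances
                                                 acc[i - 1, j - 1])  # both advance
    # Backtrack from the end to recover the optimal warping path
    i, j, path = n, m, []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        k = int(np.argmin((acc[i - 1, j - 1], acc[i - 1, j], acc[i, j - 1])))
        if k == 0:
            i, j = i - 1, j - 1
        elif k == 1:
            i -= 1
        else:
            j -= 1
    return float(acc[n, m]), path[::-1]
```

The returned path maps every user frame to one or more reference frames; under this sketch, the matched reference frame would then supply the reference hip position for the $d_{hip}$ comparison of error (b).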

5 RESULTS

In initial empirical evaluations, the system was tested by 14 participants, most of whom were beginners. They climbed a reference route and used the developed app to obtain feedback. The statistical evaluation against ground truth data in the form of precision-recall curves is still in progress, but the application and its visual feedback are presented here.

Figure 7 shows the developed feedback app. (a) On the start screen, one can choose between trainer mode and user mode. (b) In trainer mode, an experienced climber can record new reference routes. (c) In user mode, the user can choose from the recorded routes. (d) When a route is selected, the user can record a new trial of his or her own and also review previous trials of this route. Before the recording starts, the setup is extrinsically calibrated. The system automatically detects when the user has put both hands on the start holds and records the sequence from then on. Moreover, the reference solution is presented as a video. (e) When reviewing his or her own recorded trials, the user sees the detected errors in his or her recording at the bottom and can compare it against the DTW-aligned reference at the top.

Examples of the generated feedback for the errors occurring in the different phases are presented in Figure 8. On a separate screen that is not shown here, the user can then obtain detailed hints on what can be improved, so that he or she can take this information into account in the next trial.


Figure 7: Feedback application, panels (a) to (e).

Figure 8: Comparison of the user (bottom video) against the reference (top video) and feedback given to the user (coloured feedback bubbles). (a) Errors in reaching phase: The user's hip should be closer to the wall. Moreover, the user should shift the weight to the left leg and hold on longer with the left hand before it reaches for the new hold. Both feet are set, so no feedback bubble appears for this technique. (b) Error in stabilisation phase: The user should lower his or her body after reaching, so that the shoulder angle is open and the arm is straight. (c) Error in preparation phase: The user keeps the arm bent while re-setting the feet; he or she should straighten the arm as the reference does.

6 CONCLUSIONS

This study presented an approach to detect motion errors that commonly occur among climbing beginners. We examined skeleton extraction algorithms and found a suitable 2-D algorithm, which, together with the obtained 3-D point cloud, formed the basis for the 3-D skeleton calculation. Based on movement segmentation by analysing joint velocities, this study proposes for the first time a method that maps climbing theory onto a finite state machine representing the climbing phases. In this way, our work enables the detection of errors that typically occur in those specific phases. The result is an application that provides valuable feedback to beginners. Such an approach did not exist before.

Our next step is the quantitative evaluation of the climbing motion error detection. For this, we already have labelled ground truth data and are currently collecting more data from climbers on boulder walls in various climbing halls. Regarding skeleton extraction, it is worthwhile to investigate further skeleton extraction algorithms, which are continuously appearing on the market. A possible 3-D skeleton extraction solution that would also enable an implementation for Android might be the pose estimation framework MediaPipe provided by Google (Google, 2022).

REFERENCES

Aladdin, R. and Kry, P. (2012). Static pose reconstruction with an instrumented bouldering wall. In Proceedings of the 18th ACM Symposium on Virtual Reality Software and Technology, pages 177–184. ACM.

Apple (2022a). Apple developer homepage ARKit. https://developer.apple.com/documentation/arkit/arbodyanchor/3229909-skeleton. Accessed: 2022-11-10.

Apple (2022b). Apple developer homepage Vision. https://developer.apple.com/documentation/vision/detecting_human_body_poses_in_images. Accessed: 2022-11-10.

Beltrán B., R., Richter, J., and Heinkel, U. (2022). Automated human movement segmentation by means of human pose estimation in RGB-D videos for climbing motion analysis. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications - Volume 5: VISAPP, pages 366–373. INSTICC, SciTePress.

Cao, Z., Hidalgo, G., Simon, T., Wei, S.-E., and Sheikh, Y. (2018). OpenPose: realtime multi-person 2D pose estimation using Part Affinity Fields. arXiv preprint arXiv:1812.08008.

ClimbTrack (2019). ClimbTrack. https://climbtrack.com/. Accessed: 2022-11-10.

Cordier, P., France, M. M., Bolon, P., and Pailhous, J. (1994). Thermodynamic study of motor behaviour optimization.

Desmarais, Y., Mottet, D., Slangen, P., and Montesinos, P. (2021). A review of 3D human pose estimation algorithms for markerless motion capture. Computer Vision and Image Understanding, 212:103275.

Ebert, A., Schmid, K., Marouane, C., and Linnhoff-Popien, C. (2017). Automated recognition and difficulty assessment of boulder routes. In International Conference on IoT Technologies for HealthCare, pages 62–68. Springer.

Fischler, M. A. and Bolles, R. C. (1981). Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Communications of the ACM, 24(6):381–395.

GitHub (2021). TensorFlow Lite PoseNet - iOS example application. https://github.com/tensorflow/examples/tree/master/lite/examples/posenet/ios. Accessed: 2021-09-24.

Google (2022). Google MediaPipe Pose Estimation. https://google.github.io/mediapipe/solutions/pose. Accessed: 2022-11-10.

iROZHLAS (2019). Adam Ondra hung with sensors. https://www.irozhlas.cz/sport/ostatni-sporty/czech-climber-adam-ondra-climbing-data-sensors_1809140930_jab. Accessed: 2022-11-10.

Kletterkultur (2019). Kilter Board. https://climbing-culture.com/de/Marken/Kilter/Kilterboard/?net=1. Accessed: 2022-11-10.

Kosmalla, F., Daiber, F., and Krüger, A. (2015). Climbsense: Automatic climbing route recognition using wrist-worn inertia measurement units. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, pages 2033–2042. ACM.

Kosmalla, F., Daiber, F., Wiehr, F., and Krüger, A. (2017). ClimbVis - Investigating In-situ Visualizations for Understanding Climbing Movements by Demonstration. In Interactive Surfaces and Spaces - ISS '17, pages 270–279, Brighton, United Kingdom. ACM Press.

Kosmalla, F., Wiehr, F., Daiber, F., Krüger, A., and Löchtefeld, M. (2016). Climbaware: Investigating perception and acceptance of wearables in rock climbing. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, pages 1097–1108. ACM.

Moon Climbing (2019). Moon Board. https://www.moonboard.com/. Accessed: 2022-11-10.

NUITRACK (2022). NUITRACK Full Body Tracking. https://nuitrack.com/#api. Accessed: 2022-11-10.

OpenPose (2019). OpenPose homepage. https://github.com/CMU-Perceptual-Computing-Lab/openpose. Accessed: 2022-11-10.

Pandurevic, D., Draga, P., Sutor, A., and Hochradel, K. (2022). Analysis of competition and training videos of speed climbing athletes using feature and human body keypoint detection algorithms. Sensors, 22(6):2251.

Pandurevic, D., Sutor, A., and Hochradel, K. (2018). Methods for quantitative evaluation of force and technique in competitive sport climbing.

Papandreou, G., Zhu, T., Chen, L.-C., Gidaris, S., Tompson, J., and Murphy, K. (2018). Personlab: Person pose estimation and instance segmentation with a bottom-up, part-based, geometric embedding model.

Parsons, C. P., Parsons, I. C., and Parsons, N. H. (2014). Interactive climbing wall system using touch sensitive, illuminating, climbing hold bolts and controller. US Patent 8,808,145.

Quaine, F. and Martin, L. (1999). A biomechanical study of equilibrium in sport rock climbing. Gait & Posture, 10(3):233–239.

Quaine, F., Martin, L., and Blanchi, J. (1997a). Effect of a leg movement on the organisation of the forces at the holds in a climbing position 3-D kinetic analysis. Human Movement Science, 16(2-3):337–346.

Quaine, F., Martin, L., and Blanchi, J.-P. (1997b). The effect of body position and number of supports on wall reaction forces in rock climbing. Journal of Applied Biomechanics, 13(1):14–23.

Ramer, U. (1972). An iterative procedure for the polygonal approximation of plane curves. Computer Graphics and Image Processing, 1(3):244–256.

Reveret, L., Chapelle, S., Quaine, F., and Legreneur, P. (2018). 3D Motion Analysis of Speed Climbing Performance. In 4th International Rock Climbing Research Association (IRCRA) Congress, pages 1–5.

Richter, J., Beltrán, R., Köstermeyer, G., and Heinkel, U. (2020). Human climbing and bouldering motion analysis: A survey on sensors, motion capture, analysis algorithms, recent advances and applications. In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications - Volume 5: VISAPP, pages 751–758. INSTICC, SciTePress.

Sibella, F., Frosio, I., Schena, F., and Borghese, N. (2007). 3D analysis of the body center of mass in rock climbing. Human Movement Science, 26(6):841–852.

Tension Climbing (2019). Tension Board. https://tensionclimbing.com/product/tension-board-sets/. Accessed: 2022-11-10.
