Authoring Tools: The Road to Democratizing Augmented Reality for Education

Mohamed Ez-zaouia¹ᵃ, Iza Marfisi-Schottman¹ᵇ and Cendrine Mercier²ᶜ

¹ Le Mans Université, LIUM, Le Mans, France

² Nantes Université, CREN UR 2661, Nantes, France


Keywords:

Augmented Reality, Authoring Tools, AR for Education, AR Activities, Design Study, User Study.

Abstract:

Augmented Reality (AR) has great potential to facilitate multisensorial and experiential learning. However,

creating activities in AR for everyday classroom use is far from an easy task for non-expert users, such as

teachers and learners. To examine if and how an authoring approach for AR can be beneficial for educational

contexts, we first designed MIXAP through a participatory design with 19 pilot teachers. MIXAP enables

non-expert users to create AR activities using interactive and visual authoring workflows. To evaluate our

approach with a wider audience of teachers, we conducted a study with 39 teachers examining the usability,

utility, acceptability, and transfer between pilot and non-pilot teachers. We found that this approach can help

teachers create quality educational AR activities. For both groups, the effect sizes were significantly large for

ease of use, emotional experience, and low cognitive load. Additionally, we found that there is no significant

difference between the pilot and non-pilot teachers in terms of ease of use, learnability, emotional experience,

and cognitive load, highlighting the transfer of our approach to a wider audience. Ultimately, we discuss our

results and propose perspectives.

1 INTRODUCTION

Augmented reality (AR) is becoming an important medium for formal and non-formal learning and training (Dengel et al., 2022). Because AR creates a multimodal playground for representing and interacting with content in an immersive way (Roopa et al., 2021), users can better interact with and enact concepts through sound, sight, motion, and haptics (Xiao et al., 2020). Such immersive modalities can support multisensorial and experiential learning (Shams and Seitz, 2008) in many disciplines, including art, design, science, technology, engineering, mathematics, and medicine (Ibáñez and Delgado-Kloos, 2018; Arici et al., 2019). Research advocates that schools should integrate AR in their curricula to enable immersive learning that engages learners and facilitates comprehension of content and phenomena (Billinghurst and Duenser, 2012).

However, authoring AR activities that support pedagogical objectives is still far from easy for teachers. Authoring AR content still requires advanced programming knowledge and skills in specialized toolkits, such as Unity¹, Vuforia², and ARCore³. This makes authoring AR accessible only to a small group of people with advanced programming skills. Furthermore, because existing AR toolkits are designed for general purposes, they lack support for educational AR content. Currently, it remains hard for educators to harness this emerging learning medium in everyday classrooms.

ᵃ https://orcid.org/0000-0002-3853-0061

ᵇ https://orcid.org/0000-0002-2046-6698

ᶜ https://orcid.org/0000-0002-3921-583X

Authoring tools (Lieberman et al., 2006) offer a new approach that can democratize AR for education by lowering the barriers to creating AR content. AR authoring tools enable users to create or modify AR artifacts without programming (Ez-Zaouia et al., 2022). This is promising because people who are not professional software developers might be able to create AR activities using user-friendly, easy-to-use interactions. For instance, educators can take a photo of an object, a poster, or a book and add multimodal resources as virtual augmentations, such as texts, audio, videos, images, or 3D models. While AR authoring approaches seem very promising for teachers, they have not been studied extensively, especially not by involving end-users in the design process. Systematic reviews have raised several challenges in educational AR, such as the complexity of the technology, difficulties in usability, and a lack of ways to customize AR content (Akçayır and Akçayır, 2017; Yang et al., 2020; Ibáñez and Delgado-Kloos, 2018). Other reviews have found that existing AR authoring tools have limited support for educational content (Ez-Zaouia et al., 2022; Dengel et al., 2022), suggesting that more work is needed.

¹ https://unity.com/

² https://www.ptc.com/en/products/vuforia

³ https://developers.google.com/ar

Ez-zaouia, M., Marfisi-Schottman, I. and Mercier, C.

Authoring Tools: The Road to Democratizing Augmented Reality for Education.

DOI: 10.5220/0011984200003470

In Proceedings of the 15th International Conference on Computer Supported Education (CSEDU 2023) - Volume 1, pages 115-127

ISBN: 978-989-758-641-5; ISSN: 2184-5026

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

To examine if and how AR authoring approaches can benefit teachers, we first designed MIXAP, a prototype that enables teachers to create educational AR activities through simple authoring workflows (Figure 2). We designed MIXAP through an iterative and participatory design with 19 pilot teachers. To evaluate our approach with a wider audience of teachers, we designed and conducted a study with 39 teachers in two groups, focusing on usability, utility, acceptability, and transfer. More specifically, we compared the group of pilot teachers to a second group of non-pilot teachers, who used MIXAP for the first time. We found that MIXAP can support both groups of teachers in creating quality educational AR activities. For both groups, the effect sizes for ease of use, emotional experience, and low cognitive load of MIXAP were significantly large. In addition, we found no significant difference between the pilot and non-pilot teachers in terms of ease of use, learnability, emotional experience, and cognitive load, highlighting the transfer of our approach to a wider audience.

2 BACKGROUND

Numerous systematic reviews have revealed trends, benefits, and challenges of educational AR applications (Radu, 2014; Akçayır and Akçayır, 2017; Arici et al., 2019; Dengel et al., 2022; Hincapie et al., 2021; Ibáñez and Delgado-Kloos, 2018; Garzón et al., 2020). For example, Radu (2014) analyzed 26 studies that compared AR learning to non-AR learning and found that AR had several benefits for learners, such as fostering motivation, collaboration, retention, and learning spatial structures. Focusing on the pedagogical approaches taken in AR, Garzón et al. (2020) reviewed the impact of AR factors, namely collaborative learning, project-based learning, situated learning, multimedia learning, intervention duration, and the environment of use (e.g., classrooms, outdoors, field trips, museums). The authors found that collaborative AR showed the highest impact on learners. Ibáñez and Delgado-Kloos (2018) reviewed AR literature concerning science, technology, engineering, and mathematics (STEM) fields and characterized AR applications, instructional processes, research approaches, and problems reported.

Given the availability of the above-mentioned studies, in this paper, we instead focus on the creation of AR content by the teachers themselves using authoring tools. Such tools attempt to make creating, modifying, or extending software artifacts less technical, easier, and accessible to people who are not professional developers. They also provide end-users the means to adapt the content to their needs and not be limited to what pre-made artifacts offer. This is particularly important for education in three main ways. First, teachers can automate the creation of AR artifacts. Second, they can customize and personalize artifacts to suit their teaching needs. And finally, they can appropriate and take ownership of tools and artifacts in their unique ways.

While authoring approaches are extremely important for the wider adoption of AR in education, they have not been studied extensively. Very few studies have reviewed the design aspects underlying AR authoring tools. Nebeling and Speicher (2018) classified existing authoring tools relevant to the rapid prototyping of AR/VR experiences in terms of four main categories: screen types, interaction (use of the camera), 3D content, and 3D games. Mota et al. (2015) discussed authoring tools under the lens of two main themes: the authoring paradigms (stand-alone, plug-in) and deployment strategies (platform-specific, platform-independent). Dengel et al. (2022) reviewed 26 AR toolkits cited in scientific research. They characterized toolkits by their level of required programming skills (high, medium, or low), level of interactivity (static, i.e., without user interaction, or dynamic), affordability (free or commercial), device compatibility (mobile, desktop, HMDs, or web), and collaboration capacity (yes or no). However, the aforementioned research focused mainly on authoring tools that require some level of programming, and mostly on the ones cited in scientific research, including non-educational tools. Ez-Zaouia et al. (2022) recently analyzed 21 educational authoring tools that do not require programming, from both industry and academia. They formulated a design space of four design dimensions of AR authoring tools, namely, (1) authoring workflow (production style, content sources, collaboration, and platform), (2) AR modality (object tracking, object augmentation, interaction, and navigation), (3) AR use (device type, usage, content collection, connectivity, and language), and (4) content and user management (sharing, administration, and licensing). In addition, these reviews raised several design challenges of educational AR, such as usability, lack of customization, expensive technology, and lack of holistic models and design principles for AR (Ez-Zaouia et al., 2022; Dengel et al., 2022; Akçayır and Akçayır, 2017; Yang et al., 2020; Ibáñez and Delgado-Kloos, 2018; Nebeling and Speicher, 2018).

While these studies provided insights into the design and use of AR, there is still a lack of design-based research into AR authoring tools for education. To the best of our knowledge, studies that involve teachers in the design process of AR authoring tools are very limited in number. We build upon previous studies (e.g., Ez-Zaouia et al., 2022; Dengel et al., 2022) to better understand if and how authoring approaches can benefit the design and use of AR activities in educational settings. We engaged with teachers in an iterative and participatory design process. We designed an authoring tool to make educational AR accessible to non-expert users, taking into account design considerations of the authoring workflows, customization, multimodality, and interactivity.

3 TEACHER-CENTERED ITERATIVE DESIGN PROCESS

We conducted our work in the context of a design-based research project that involves end-users, namely teachers, learners, and educational managers (who train teachers). We followed an iterative teacher-centered design process (Ez-Zaouia, 2020) and went through four main iterations, which Figure 1 summarizes.

3.1 Understanding AR Use in Classrooms

To understand AR use, teachers’ practices, and challenges related to integrating AR in everyday classrooms, we created a partnership with CANOPE, a public network that offers professional training for teachers in France. One of the main focuses of CANOPE is to help teachers integrate innovative technologies in their classrooms. This partnership allowed us to identify challenges that CANOPE’s educational designers/managers experienced first-hand in their recent work with teachers on educational AR. Over several weeks, we held several meetings with the educational managers, where they shared with us (i) their work with teachers, (ii) existing AR technologies used by teachers, and (iii) challenges that teachers face with existing AR technologies.

The recurrent challenges raised about existing AR technologies were: usability difficulties, lack of ways to customize the experiences and the contents, inadequacy of existing technologies for teachers, and difficulty designing effective AR activities. These findings corroborate previous results from systematic reviews (Dengel et al., 2022; Akçayır and Akçayır, 2017; Yang et al., 2020; Ibáñez and Delgado-Kloos, 2018; Nebeling and Speicher, 2018), suggesting that these challenges have not yet been addressed.

We traced most of these challenges to the authoring approaches of existing AR technologies for education (see the review by Ez-Zaouia et al., 2022). On the one hand, many of the existing AR authoring technologies offer either off-the-shelf content or editable templates for AR. However, off-the-shelf content and editable templates provide pre-made activities (one-size-fits-all) that teachers cannot customize and personalize to best suit their needs. This is a major barrier for teachers because it prevents them from creating personalized content for more situated AR learning, which is shown to have the highest impact on learners (Garzón et al., 2020). On the other hand, some tools allow users to create AR experiences using their own content. However, most of these tools do not enable the creation of pedagogical activities that can address classroom needs. Ez-Zaouia et al. (2022) found that existing AR authoring tools lack “built-in” support for helping teachers and learners use AR to its full pedagogical capacity.

In sum, our domain exploration revealed three main gaps in existing research and practice around educational AR: (1) lack of pedagogical approaches in existing authoring tools, (2) lack of authoring approaches that are suitable for teachers, and (3) lack of guidance and principles on how to design effective educational AR activities.

3.2 Understanding Teachers’ Needs for AR Activities

After our domain exploration, we felt it was important to formally engage with teachers to understand their needs for AR. Through CANOPE, we recruited 19 pilot teachers from various disciplines: [Gender: (women = 8, men = 11), Teaching Years: (min = 2, max = 40), School Level: (elementary = 5, middle = 11, high = 2, university = 1)]. The teachers had varying levels of technology-use expertise in classrooms (AR Use: 26.3%; Smartphone Use: 63.2%). We conducted two 3-hour co-design sessions where teachers paper-prototyped AR activities they wanted to create for their classrooms.

Figure 1: Teacher-centered design process we followed in this work, including (1) understanding AR use in classrooms, (2) understanding teachers’ needs for AR, (3) iterative prototyping and preliminary user studies, and (4) formal study with teachers.

AR Elicitation and Ideation. For each session, we started with a 30-minute elicitation phase, during which teachers explored 11 AR educational applications⁴. We then asked them to paper prototype one or more AR activities for their course. We opted for paper prototyping to remove technical constraints. We provided teachers with a toolkit (i.e., large paper worksheets, markers, tablet screens printed out on paper, and guidelines) to help them describe (i) the context in which the AR activity is used, (ii) the objects they wanted to augment, (iii) the augmentations they wanted to add, and (iv) the interactions they wanted their students to have access to via the tablet’s screen. After the paper prototyping, we asked the participants to present their prototypes to the group. We videotaped the workshops. We collected the recordings and 24 worksheets. Three authors analyzed the recordings and the worksheets to identify teachers’ needs. The collected data is open online (Author, 2022).

Pedagogical AR Activities That Teachers Want. In our analysis, we identified five types of pedagogical AR activities and two ways of combining AR activities together to create sequences. The most common type of activity is image augmentation. In this activity, teachers would like to add multimodal resources (texts, images, videos, audio, 3D models, and interactive menus) to images, such as posters, books, and exercise sheets. For example, a primary school teacher wanted to augment the pages of a book with audio recordings of her narrating textual vocabulary (e.g., “The reindeer has four hooves and two antlers”). She also wanted to augment imaginary illustrations of animals in a book with real images so that children could associate real and imaginary illustrations. Another teacher wanted to augment the photos of his high-school students with 3D models they created in technology class. It is not surprising that most teachers thought of this type of activity, since it is the one most commonly available in authoring tools, although it is quite limited in the number and type of resources that can be created. The second type of activity is image annotation, in which teachers wanted to associate information (e.g., legends) with specific points of an object. For example, a teacher wanted to add specific legends to different areas on a map.

⁴ Foxar, SpacecraftAR, Voyage AR, DEVAR, ARLOOPA, AnatomyAR, ARC, Le Chaudron Magique, SPART, Mountain Peak AR, SkyView Free

The third type of activity is image validation, in which teachers wanted to create activities that learners can complete on their own by using AR to validate automatically whether the chosen image is correct. For example, a middle school science teacher wanted to ask students to assemble the pieces of a map correctly. Another teacher wanted students to identify a specific part of a machine (e.g., motors) by scanning image markers attached to the machine. The fourth type of activity is image association, in which teachers wanted to display multimodal resources when two image markers are visible in the scene at the same time. For example, a primary school teacher wanted children to practice recognizing the same letter written in capital and small letters. Another teacher wanted to use this type of activity for learning concepts by associating different modalities, for example, learning fruit vocabulary using words (e.g., banana) and imagery. The fifth type of activity is image superposition, in which teachers wanted to display layers of information on top of an image. For example, a university geology teacher wanted students to be able to activate or deactivate layers of information showing various types of rocks and tectonic plates on geographic maps. Finally, teachers wanted to create activity clusters and activity paths by combining activities without or with a predefined order. Clusters and paths are essential for teachers who design their activities in the form of a pedagogical sequence. Apart from image augmentation, the activities that the teachers wanted are rarely supported by existing authoring tools.
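The five activity types and the two ways of combining them can be summarized as a small data model. The TypeScript sketch below is illustrative only: the type names, the fields, and the `nextAvailable` helper are assumptions made for exposition and are not taken from the MIXAP code base. It shows one possible reading of the difference between a cluster (no imposed order) and a path (predefined order).

```typescript
// Hypothetical data model; names and fields are assumptions, not MIXAP's schema.
type ActivityKind =
  | "augmentation"   // multimodal resources added to an image
  | "annotation"     // information attached to specific points of an image
  | "validation"     // automatic check that the scanned image is the right one
  | "association"    // resources shown when two markers are visible together
  | "superposition"; // layers of information toggled on top of an image

interface Activity {
  id: string;
  kind: ActivityKind;
  title: string;
}

// A cluster groups activities without order; a path imposes a predefined order.
interface Sequence {
  mode: "cluster" | "path";
  activityIds: string[];
}

// Returns the activities a learner may open next, given those already completed.
function nextAvailable(seq: Sequence, completed: Set<string>): string[] {
  const remaining = seq.activityIds.filter((id) => !completed.has(id));
  if (seq.mode === "cluster") {
    return remaining; // any remaining activity, in any order
  }
  return remaining.slice(0, 1); // path: only the first unfinished activity
}
```

Under this reading, a path with activities a, b, c and a completed unlocks only b, whereas a cluster with the same content offers both b and c.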

3.3 Iterative Prototyping and Preliminary User-Studies

To address teachers’ needs, we iteratively designed, over a period of five months, three main prototypes leading to a working prototype. We decided to use web technologies for AR, which we knew would facilitate rapid prototyping and also support teachers’ various needs.

In the first month, we designed the first prototype with the image augmentation activity. This was important for us to rapidly gather user feedback using a concrete tool. We conducted a first preliminary user study with more than 20 teachers at a local seminar about serious games. We prepared a worksheet with 5 activities. In each activity, we asked the participants to augment an image with different media resources, namely, text, image, audio, video, and 3D object. We had several issues with the software during this user study. In particular, the image, audio, video, and 3D object resources did not load correctly in the AR view, so we ended up using only text augmentations with the participants. Other issues related to teachers adding multiple augmentations to a marker: the prototype had trouble handling the positions of multiple augmentations. Teachers also wanted to take a photo of their objects (e.g., books, flyers) and add them as markers, but the first prototype only allowed uploading existing images from the user’s device. Even though this first prototype had many issues, most participants reacted positively to the visual authoring approach of the tool (see Section 4). They highlighted that the interface is simple and user-friendly. Also, the steps we designed in the editor guided the user in incrementally creating an AR activity.

In the following month, we designed a second prototype that addressed all the issues that we faced during the first preliminary user study. Similar to the first prototype, we focused on the image augmentation activity. We then conducted a second user study with the help of CANOPE, for which we invited 12 of our partner teachers to evaluate the new prototype. We asked teachers to bring their educational materials (e.g., books, flyers, images) so that they could use the prototype to create their AR activity. We again had some minor issues. One issue was that markers that were photos taken by the camera took too long to process for an AR view, partly because the teachers’ tablets have limited memory and processing power. However, apart from minor issues, the prototype worked well. In this study, we validated the design choices of the visual authoring approach. All the participants reacted positively to using user-friendly interactions (click, drag, resize) and configuration menus with smart-default parameters to personalize the look and feel of the content (e.g., text and image styles). In addition, the participants suggested some improvements, such as adding the possibility to record audio or video rather than uploading a file from the user’s device.

We decided to combine image annotation and augmentation, since the positioning of augmentations is precise enough to annotate a specific part of an image marker. In the following two months, we refined the augmentation/annotation workflow and designed authoring workflows for the other types of activities, namely the association, validation, cluster, and path activities.

3.4 Formal Study with Teachers

To evaluate whether an authoring approach can support teachers in creating various AR activities, we designed a formal study with two groups of teachers. One group was composed of pilot teachers who partnered with the project through CANOPE. The second group was composed of teachers who were unfamiliar with the project. We describe the study in Section 5 and report its results in the results section.

4 MIXAP: AN AUTHORING TOOL FOR EDUCATIONAL AR

We designed MIXAP to make creating educational AR activities easier and less technical for non-expert users, such as teachers and learners. Based on our domain exploration with teachers and literature review, we derived three design goals to support teachers’ needs.

4.1 Design Goals

DG1: Incorporate Authoring Workflows to Facilitate Creating Pedagogical AR Activities. A major challenge in authoring tools for AR is the lack of support and guidelines to help users create AR content for classroom use (Ez-Zaouia et al., 2022; Dengel et al., 2022). To alleviate this challenge, we designed five authoring workflows in MIXAP to guide end-users in creating the AR activities that we identified during the participatory design with teachers (i.e., augmentation, validation, association, superposition, cluster, and path). Each workflow has a specific set of incremental authoring steps.

Figure 2: Main views of the user interface of MIXAP. (a) is the dashboard view, which displays all the AR activities of the user. (b) is the list of AR activities that teachers can create. (c, d, e, f) are views of the editor. Each AR activity has a set of steps (workflow) to guide the user in creating the activity; the steps are placed at the top of the editor: (c) naming, (d) marker, (e) augmentation, and (f) try the activity. The marker is placed in the center of the editor. The palette of tools for adding multimodal resources as augmentations to an activity (text, image, video, audio, modal, and 3D object) is on the left of the editor. Users add resources by dragging and dropping them onto the canvas. MIXAP allows users to customize all aspects of augmentations in terms of content and appearance (rotate 3D assets, change sizes and styles of assets).

DG2: Provide a Visual Authoring Approach to Reduce the Cognitive Burden of Creating Educational AR Content. A major challenge hindering the wider adoption of AR in education is, in part, related to the technical complexity of authoring AR activities. A visual authoring approach is more suitable for teachers because it leverages user familiarity with widely used tools in education, such as Google Docs and PowerPoint. Such an approach enables users to author content using user-friendly interactions, such as drag-and-drop and configuration menus.

DG3: Support Users in Personalizing the Look and Feel of an AR Activity Content. Another major limitation in existing AR applications for education is that teachers cannot customize the content. Because personalization is crucial for learning (Mayer, 2005), MIXAP enables users to personalize all aspects of an AR activity. In terms of content, users can create or import different media resources. For example, users can import files from their devices, such as images, 3D models, audio, and videos. They can take photos and record audio and videos. They can also fine-tune the styles, such as size, color, shape, and fonts, of the resources of an AR activity.

4.2 User Interface

The user interface of MIXAP (Figure 2) consists of

four main views: a library, dashboard, editor, and

player.

Library View. Lists all the AR activities that all users

created and shared publicly. Users can create an AR

activity by clicking on the button “add activity” and

then selecting the type of activity they want to create

(DG1, Figure 2-b).

Dashboard View. This view is similar to the library view; however, it only lists the AR activities of the user (Figure 2-a). Similarly, users can also create their AR activities by clicking on the button “add activity”.

Editor View. The editor provides specific authoring workflows for each of the five AR activities (DG1). Each authoring workflow provides a few steps to create an AR activity using visual and user-friendly interactions (DG2). For example, one step is naming an activity by providing a title and a description (Figure 2-c). Another step is adding a marker image of the activity, which consists of either uploading an image or taking a photo of an object (Figure 2-d). In the case of an association activity, we prompt the user to add two marker images. Another step is adding multimodal augmentations on top of a marker (Figure 2-e). The augmentations are the media resources that the user can add to an AR activity, namely, text, audio, video, modal, and 3D objects. Another step enables trying the activity in AR view (Figure 2-f). When the user launches an activity in AR view, we recognize the marker object through the user’s device camera, and we project the digital (virtual) augmentations on the tangible (real) marker object. In addition, users can customize the size, color, shape, and fonts of the resources of an AR activity. Finally, users create clusters or paths from existing activities by selecting two or more activities and grouping them with or without order.

Player. Launches an activity in AR view (similar to Figure 2-f but in full screen). The player opens the user’s device camera and loads the marker as well as all the augmentations of the activity. The player then (i) scans the scene to detect the marker of the activity and (ii) projects the augmentations on the scene.
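The incremental steps of the editor (naming, marker, augmentation, try) can be pictured as a function that decides which step a draft activity should be on. The following TypeScript sketch is a simplification written for illustration: the `Draft` shape and the `currentStep` and `requiredMarkers` helpers are hypothetical, and it assumes that every activity type shares the same four steps, which the text does not guarantee. The only type-specific rule it encodes is the one stated above, namely that an association activity needs two marker images.

```typescript
// Hypothetical sketch of the editor's step logic; not MIXAP's actual code.
type Step = "naming" | "marker" | "augmentation" | "try";
type Kind = "augmentation" | "validation" | "association" | "superposition";

interface Draft {
  kind: Kind;
  title: string;
  markers: string[];        // marker images (uploaded or photographed)
  augmentations: string[];  // media resources placed on the marker
}

// Association activities prompt the user for two marker images; others need one.
function requiredMarkers(kind: Kind): number {
  return kind === "association" ? 2 : 1;
}

// Returns the first step that is still incomplete for the given draft.
function currentStep(draft: Draft): Step {
  if (draft.title.trim() === "") return "naming";
  if (draft.markers.length < requiredMarkers(draft.kind)) return "marker";
  if (draft.augmentations.length === 0) return "augmentation";
  return "try";
}
```

For instance, an association draft with a title and a single marker would stay on the marker step until a second marker image is added.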

4.3 User Interaction and Customization Modalities

All the resources in MIXAP have built-in smart-default parameters (DG1). In addition, MIXAP provides user-friendly modalities so that users can customize the look and feel of an AR activity (DG2, DG3). Users can upload resources from their local devices or create and edit resources using built-in media-resource tools (images, audio, videos, and 3D models). In terms of interaction, users can rotate resources in three-dimensional space, scale the size of the resources up or down, and drag resources to arrange them in unique ways on the canvas. Further, MIXAP offers options to change text size, font, color, and background.

4.4 Implementation

We implemented the user interface of MIXAP using TypeScript⁵ and React⁶. We use MindAR⁷ for image tracking. We use RxDB⁸ to store the resources of the AR activities on the user’s device. We use Supabase⁹ to enable sharing AR activities between different devices.

⁵ https://www.typescriptlang.org/

⁶ https://reactjs.org/

⁷ https://github.com/hiukim/mind-ar-js

⁸ https://rxdb.info/

⁹ https://supabase.com/

5 TEACHER STUDY

We conducted a user study with 39 teachers to examine whether they can easily use MIXAP to create high-quality educational activities in augmented reality. We used mixed-methods analysis and followed an overall factorial design with the following factors and levels:

• Group of participants: Pilot teachers (P) and non-pilot teachers (NP).
• Category of evaluation: Usability, Learnability, Utility, Emotion, and Cognitive Load.
• Demographics: Participants’ Age and Discipline (STEM, Non-STEM, Computer Science, Other).

5.1 Hypotheses

We examine seven main hypotheses:

• Usability:
– H1: The usability of MIXAP will be higher than the median value (Likert = 3).
– H2: The ease of use of MIXAP will be higher than the median value (Likert = 3).
• Utility:
– H3: The utility of MIXAP in creating AR activities for teachers will be higher than the median value (Likert = 3).
• Acceptability:
– H4: The emotional experience of MIXAP will be higher than the median value (Likert = 3).
– H5: The cognitive load emanating from using MIXAP will be lower than the median value (Likert = 3).
• Transfer:
– H6: There will be no differences between the pilot and non-pilot teachers in terms of usability, ease of use, emotional experience, and cognitive load.
– H7: There will be no differences across participants’ age and discipline in usability, learnability, utility, emotional experience, and cognitive load.
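Hypotheses H1 to H5 compare ratings against a reference value of 3 on the Likert scale. This excerpt does not state which statistical test or effect-size measure was used, so the TypeScript sketch below is only an assumption-laden illustration: it computes a one-sample standardized mean difference (Cohen's d) of a set of ratings against a reference value. The function name `cohensD` and the ratings in the usage note are made up for the example and are not study data.

```typescript
// Hypothetical helper (not from the paper): one-sample Cohen's d of ratings
// against a reference value, using the sample standard deviation (n - 1).
function cohensD(ratings: number[], reference: number = 3): number {
  const n = ratings.length;
  if (n < 2) {
    throw new Error("at least two ratings are required");
  }
  const mean = ratings.reduce((sum, x) => sum + x, 0) / n;
  const variance =
    ratings.reduce((sum, x) => sum + (x - mean) * (x - mean), 0) / (n - 1);
  if (variance === 0) {
    throw new Error("ratings have zero variance; d is undefined");
  }
  return (mean - reference) / Math.sqrt(variance);
}
```

With the made-up ratings `[4, 5, 4, 5]`, the mean is 4.5 and the result is positive, which is the direction H1 to H4 predict; for H5 (cognitive load), a negative value would be the expected direction.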

5.2 Participants

The participants were 39 teachers (N = 39) [Age: 26-30 = 1, 31-35 = 1, 36-40 = 3, 41-45 = 15, 46-50 = 12, > 50 = 7; Discipline: STEM = 22, Non-STEM = 7, Computer Science = 1, Other = 9]. We divided the teachers into two groups: the pilot group (P) with 12 teachers and the non-pilot group (NP) with 27 teachers. The pilot teachers were familiar with MIXAP. We recruited the non-pilot teachers during a local workshop, and they were not familiar with MIXAP prior to the study. All teachers participated in the study voluntarily, without compensation, and signed a consent form for the use and analysis of their data for research.

5.3 Educational AR Activities

We prepared four different AR activities¹⁰ for teachers to create using MIXAP (see the example in Figure 3). One activity involved augmenting a book with multimodal resources. Another involved associating images. The third involved validating an image. The final activity involved creating a learning cluster, or path, from the first three activities.
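To make the association activity more concrete, the following TypeScript sketch shows one way its underlying rule could be expressed: an augmentation is revealed only when both associated images are visible in the scene at the same time, as described in the caption of Figure 3. The `AssociationActivity` type, the marker identifiers, and the `augmentationsToShow` function are hypothetical names introduced for illustration. They are not taken from the MIXAP code base, and the image tracking that produces the set of visible markers is assumed to happen elsewhere.

```typescript
// Hypothetical model of an association activity (illustration only):
// two image markers and the augmentation to reveal when both are visible.
interface AssociationActivity {
  markerA: string;      // assumed identifier of the first image marker
  markerB: string;      // assumed identifier of the second image marker
  augmentation: string; // content to display, e.g. a feedback message
}

// Returns the augmentations whose two markers are both currently visible.
// `visibleMarkers` is assumed to be supplied by an image-tracking layer.
function augmentationsToShow(
  activities: AssociationActivity[],
  visibleMarkers: ReadonlySet<string>
): string[] {
  return activities
    .filter((a) => visibleMarkers.has(a.markerA) && visibleMarkers.has(a.markerB))
    .map((a) => a.augmentation);
}
```

Under this rule, a learner who places a picture card next to its matching word card would see the feedback, while showing only one of the two cards would display nothing, which is what gives the activity its automatic-correction behavior.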

5.4 Apparatus

We used three apparatuses: MIXAP, paper sheets of the four activities, and different props. We installed MIXAP on 12 tablets (Samsung SM-X200, SM-T500, 11 inches, 2 GB RAM, 32 GB storage). We printed out the steps to create the four activities on paper. We selected a set of props (cards from the Dixie game, books, and posters) and provided them to the participants to create resources for AR activities, such as markers, images, and videos.

5.5 Procedure

The procedure consisted of three steps. We first introduced the study and the four AR activities to the teachers. Then, we asked the teachers to create the four activities (see the example in Figure 3). Finally, we asked the teachers to answer a questionnaire¹¹.

5.6 Data Collection and Analysis

We collected 39 responses to the questionnaire from the participants: 12 responses from the pilot group and 27 responses from the non-pilot group. For usability (H1-2), we used the 10 SUS questions [Q1-10] to assess the total SUS score as well as ease of use [Q1,2,3,5,6,7,8,9] and learnability [Q4,10] (Brooke et al., 1996; Lewis and Sauro, 2009). We formulated the question [Q15] along with an open-ended question [Q16] to assess the utility (H3). For acceptability (H4-5), we formulated [Q12,14] to assess emotional experience and [Q11,13] to assess cognitive load. For transfer (H6-7), we analyzed the variance of responses with respect to the factors Group and Demographics (Discipline and Age). Questions were on a 5-point Likert scale, except for [Q16].

¹⁰ https://drive.google.com/file/d/11I4vJTB-GgW1Xtmb-sAL0gWPiWVyksUL/view

¹¹ https://forms.gle/GP1NCaEpAtihzqbY6

CSEDU 2023 - 15th International Conference on Computer Supported Education

Figure 3: An example of the AR activity that the teachers created to associate two cards. We introduced this activity to the teachers as: “This type of activity allows you to visualize augmentations if two images are visible in the scene at the same time (e.g., side by side). You can thus create an automatic correction for your association activities (e.g., an association of a picture with a word, an association of a word in French with a word in another language, etc.).”

6 RESULTS

We report and interpret our results using both p-values for statistical significance and effect sizes for quantifying the magnitude of the difference (small: < .3, moderate: .3 - .5, large: > .5), with 95% confidence intervals (Robertson and Kaptein, 2016). We used a nonparametric one-sample Wilcoxon test on participants’ Likert responses. We used a nonparametric factorial analysis of the variance of participants’ Likert responses using the Aligned Rank Transform (ART) (Wobbrock et al., 2011).
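As an illustration of how an effect size r can be derived from a one-sample Wilcoxon signed-rank test, the sketch below computes the signed-rank statistic against a reference value (for example, the Likert value 3), its normal approximation z, and r = z / √N. Several choices here are assumptions, since the text does not specify them: zero differences are discarded, ties receive average ranks with the usual tie correction of the variance, no continuity correction is applied, and N is taken to be the total number of observations. The function name `wilcoxonOneSample` and the result fields are hypothetical. The sketch does not compute p-values or confidence intervals, which a statistical package would normally provide.

```typescript
interface WilcoxonResult {
  n: number;     // number of non-zero differences that were ranked
  wPlus: number; // sum of the ranks of the positive differences
  z: number;     // normal approximation of the test statistic
  r: number;     // effect size, z / sqrt(N), N = all observations (assumed convention)
}

function wilcoxonOneSample(values: number[], mu: number): WilcoxonResult {
  // Differences from the reference value; zeros are discarded.
  const diffs = values.map((v) => v - mu).filter((d) => d !== 0);
  const n = diffs.length;
  if (n === 0) throw new Error("All observations equal the reference value");

  // Sort by absolute difference so that ranks can be assigned in order.
  const sorted = diffs
    .map((d) => ({ abs: Math.abs(d), sign: Math.sign(d) }))
    .sort((a, b) => a.abs - b.abs);

  let wPlus = 0;
  let tieTerm = 0; // accumulates t^3 - t over groups of tied values
  let i = 0;
  while (i < n) {
    let j = i;
    while (j + 1 < n && sorted[j + 1].abs === sorted[i].abs) j++;
    const t = j - i + 1;
    const avgRank = (i + 1 + (j + 1)) / 2; // average of the 1-based ranks i+1..j+1
    for (let k = i; k <= j; k++) {
      if (sorted[k].sign > 0) wPlus += avgRank;
    }
    tieTerm += t * t * t - t;
    i = j + 1;
  }

  // Mean and tie-corrected variance of W+ under the null hypothesis.
  const mean = (n * (n + 1)) / 4;
  const variance = (n * (n + 1) * (2 * n + 1)) / 24 - tieTerm / 48;
  const z = (wPlus - mean) / Math.sqrt(variance);
  const r = z / Math.sqrt(values.length);
  return { n, wPlus, z, r };
}
```

With the thresholds stated above, an absolute r below .3 would be read as small, between .3 and .5 as moderate, and above .5 as large. A negative r indicates responses below the reference value, as is desirable for cognitive load.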

6.1 Overview

Overall, almost all participants completed the activities in about the same amount of time. Figure 6 summarizes the responses to the questionnaire, highlighting participants’ positive reactions to the tool.

Figure 4: Usability: on the left is a boxplot with median, min-max, and outliers; on the right are the effect sizes with 95% confidence intervals.

6.2 Usability

Following Brooke et al. (1996) and Lewis and Sauro (2009), we calculated scores (on a scale of 0 to 100) for SUS [Q1-10], ease of use [Q1,2,3,5,6,7,8,9], and learnability [Q4,10].
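For readers unfamiliar with SUS scoring, the following sketch shows the standard computation of the three 0-to-100 scores from ten 5-point answers: odd-numbered items contribute (answer − 1), even-numbered items contribute (5 − answer), and the sums are rescaled by 2.5 for the overall score, by 3.125 for the eight ease-of-use items, and by 12.5 for the two learnability items, following the subscale scaling described by Lewis and Sauro (2009). The function names are illustrative, and the sketch assumes the answers are given in questionnaire order Q1 to Q10.

```typescript
// Ten Likert answers (1..5) in questionnaire order Q1..Q10.
type SusResponses = number[];

// Contribution of one item on a 0..4 scale: odd items are positively
// worded (answer - 1), even items are negatively worded (5 - answer).
function itemContribution(answer: number, itemNumber: number): number {
  return itemNumber % 2 === 1 ? answer - 1 : 5 - answer;
}

// Sum of contributions over a subset of item numbers (1-based).
function sumContributions(responses: SusResponses, items: number[]): number {
  if (responses.length !== 10) throw new Error("SUS needs exactly 10 answers");
  return items.reduce((sum, q) => sum + itemContribution(responses[q - 1], q), 0);
}

// Overall SUS: ten contributions (0..40) rescaled to 0..100.
function susScore(responses: SusResponses): number {
  return sumContributions(responses, [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]) * 2.5;
}

// Ease of use: eight contributions (0..32) rescaled to 0..100.
function easeOfUseScore(responses: SusResponses): number {
  return sumContributions(responses, [1, 2, 3, 5, 6, 7, 8, 9]) * 3.125;
}

// Learnability: two contributions (0..8) rescaled to 0..100.
function learnabilityScore(responses: SusResponses): number {
  return sumContributions(responses, [4, 10]) * 12.5;
}
```

For example, the answers [4, 2, 4, 1, 5, 2, 4, 2, 4, 3] yield an overall score of 77.5, an ease-of-use score of 78.125, and a learnability score of 75. Rescaling all three scores to the same 0-to-100 range is what allows them to be compared against the same 68-point benchmark.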

As illustrated in Figure 4 (legend P NP), the mean SUS score for all participants was 73.91, which is above the standard SUS average of 68 points and is classified as “good.” A one-sample Wilcoxon test showed that teachers’ scores were significantly higher than 68 points, with a medium effect size for total SUS (p = .015, r = .389), a medium effect size for ease of use (p = .049, r = .315), and a large effect size for learnability (p = .009, r = .756).

Analysis by Group showed that the difference was not significant for SUS, with a medium effect size for the pilot (P) group (p = 0.107, r = 0.476) and a trend toward significance for the non-pilot (NP) group (p = 0.062, r = 0.360). The difference in ease of use was not significant, with a medium effect size for both groups: P (p = 0.288, r = 0.318) and NP (p = 0.104, r = 0.314). In contrast, the difference in learnability was significant for both groups, with a large effect size for the P group (p = 0.022, r = 0.756) and a medium effect size for the NP group (p = 0.022, r = 0.443).


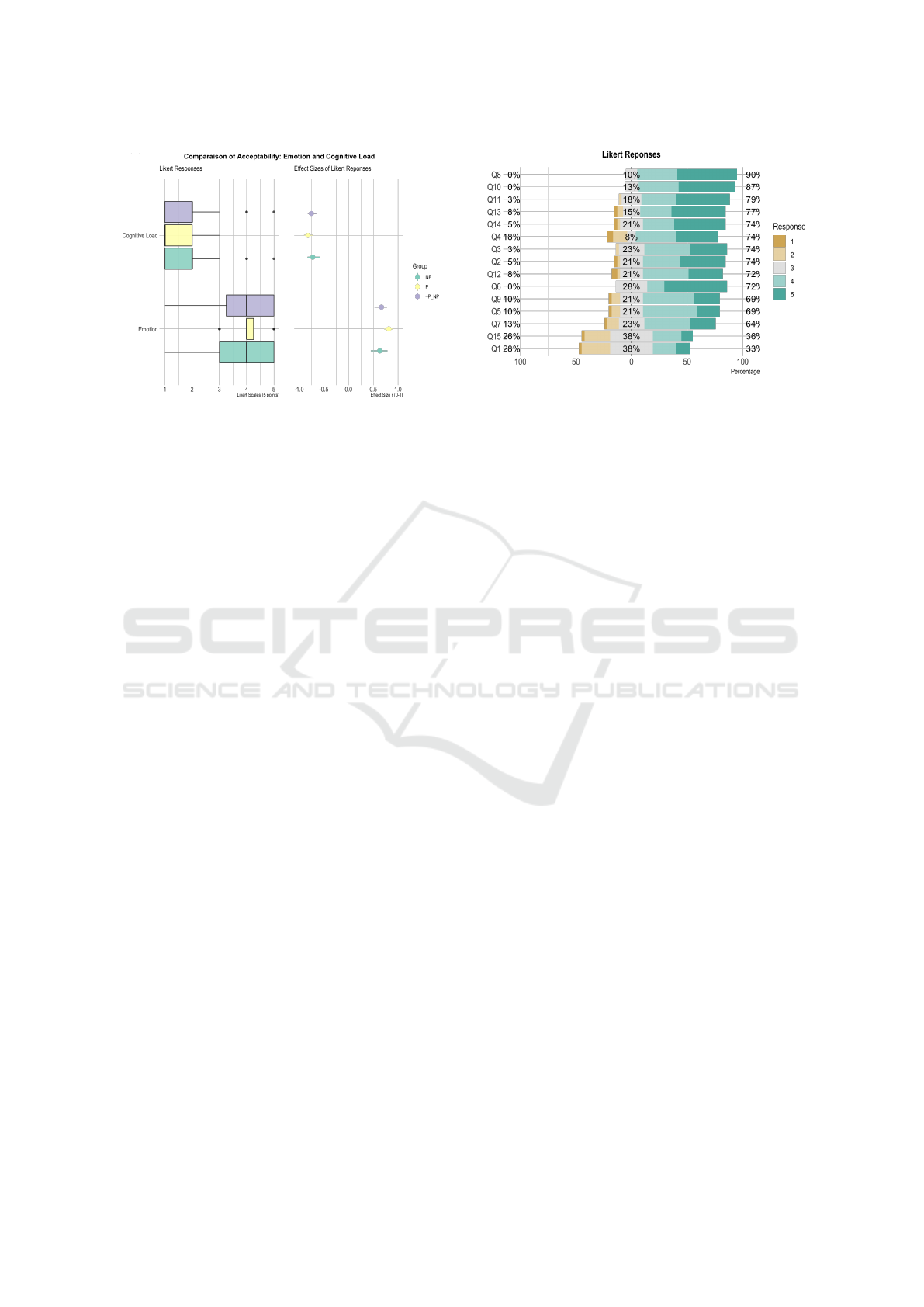

Figure 5: Acceptability: on the left is a boxplot with median, min-max, and outliers; on the right are the effect sizes with 95% confidence intervals.

6.3 Utility

We performed a one-sample Wilcoxon test to assess whether the utility [Q15] is greater than the median value of 3. For the data combined from both groups, the utility was not significant, with a small effect size (p = 0.25, r = 0.186). Analysis by Group showed that the difference in utility was significant for the pilot group (P) with a large effect size (p = 0.043, r = 0.598) and not significant for the non-pilot group (NP) (p = 0.684, r = -0.084). Some teachers elaborated on new features they would like to have in the tool, such as an AR activity capable of recognizing multiple markers automatically and displaying augmentations; adding videos to sheets and interactive menus; or exploring details in large image markers. Others elaborated on technical issues such as the accuracy, stability, or responsiveness of the augmentations. These elements may have affected the participants’ perceived utility.

6.4 Acceptability

As illustrated in Figure 5, a one-sample Wilcoxon test showed that Likert responses were significantly different from the median, with a large effect size for emotional experience [Q12,14] (p < 0.001, r = 0.666) and a large (negative) effect size for cognitive load [Q11,13] (p < 0.001, r = -0.827). Analysis by Group showed that the difference in emotional experience was significant, with a large effect size for both the pilot (P) (p < 0.0001, r = 0.814) and non-pilot (NP) groups (p < 0.0001, r = 0.631). Similarly, the cognitive load was significantly lower than the median value of 3, with a large effect size for both the pilot (P) (p < 0.0001, r = -0.898) and non-pilot (NP) groups (p < 0.0001, r = -0.825).

Figure 6: A summary of Likert responses of all the participants.

6.5 Transfer

We conducted a nonparametric factorial analysis of the variance of Likert responses using the ART procedure (Wobbrock et al., 2011). The analysis showed that there was no significant difference in responses between the levels of the Category (usability, learnability, utility, emotional experience, and cognitive load) (p = n.s.) with respect to the participants’ Group (p = .180), Age (p = .889), and Discipline (p = .520).

7 DISCUSSION

We first summarize our results on the main effects of

the authoring approach. We then discuss our results

concerning the design and use of AR authoring tools

in classrooms.

7.1 Impact of AR Authoring Approach

The analysis of SUS scores validates H1. The ease of use and the learnability were significant, validating H2. Thus, the usability of MIXAP is quite reasonable and comparable between pilot and non-pilot teachers. Both groups were able to create all four activities in about the same amount of time. The acceptability of the tool is important: participants showed positive reactions (also observed during the experiments). The emotional experience was significantly positive, validating H4. Since we propose authoring workflows with incremental steps and intuitive interactions, the cognitive load was significantly low, validating H5. Regarding the transfer, the factorial analysis of variance showed no significant difference between the pilot and non-pilot teachers, nor across participants’ age and discipline, validating H6 and H7, which shows that this approach may be beneficial for a wider audience of teachers. In contrast, the perceived utility was significantly high only for the pilot group, partially validating H3. In our next iterations, we will further examine the utility aspects, particularly the questions of (1) the appropriation and (2) the longitudinal impact of our approach. We believe teachers will better appropriate the tool as we plan to provide them with video tutorials and resources to support them in creating educational AR content.

7.2 Participatory Design of AR Authoring Tools

An authoring tool aims to provide a wide range of functionalities to support a wide audience of users with different levels of technological familiarity, while allowing them to (1) create artifacts without programming and (2) appropriate the tool easily (Lieberman et al., 2006; Ez-Zaouia et al., 2022). In this sense, a participatory design with teachers allowed us to identify pedagogical needs, which we were able to transpose into small building blocks that enable users to create AR activities using user-friendly interactions. The transfer analysis showed that this approach is viable. Even though the tool was designed with only 19 pilot teachers, the evaluation with 27 non-pilot teachers showed that there was no difference between the pilot and non-pilot teachers in terms of ease of use, learnability, usability, emotional experience, and cognitive load. Similarly, there were no differences with respect to the age and discipline of the participants.

That said, the participatory approach also poses some challenges. Combining various users’ needs without making an authoring tool too complex can be a difficult task. This constraint requires making design choices such as selecting, validating, and even rejecting some needs (e.g., needs that are specific to a context of use, that do not meet common needs, or that risk making the tool more complex for the majority of teachers). The constraint is even more severe when the needs have been identified in the form of complete pedagogical scenarios or activities. Some teachers may have difficulty recognizing their needs in the tool as they had expressed them during the participatory design, which could negatively impact their perception of the tool’s utility. Our analysis of utility supports this point: some teachers did not explicitly identify their needs in the tool. An authoring tool requires an appropriation effort from end-users to adapt its functionalities to meet their unique needs.

7.3 Integration of AR Authoring Tools in the Classroom

Beyond the technical aspects, creating AR activities poses a cognitive difficulty for end-users. This difficulty stems from the fact that end-users have little familiarity with AR content creation. This type of production has not yet become as convenient a task as, for example, multimedia production using Google Docs or PowerPoint. Teachers may find it difficult to develop instructional activities in AR or to adapt their traditional activities to immersive AR learning. In addition, teachers may not have training in multisensory and experiential learning. Even if an authoring tool removes the technical barrier, creating this type of content requires skills, such as applying the principles of multimedia, coherence, multimodality, and personalization (Yang et al., 2020).

Potentially, a library of educational activities where teachers can find, create, and share AR activities can help address the above challenges. Our approach goes in this direction and aims to propose small building blocks that teachers can easily use and combine to create more advanced activities, or even new activities that they had not thought of. For example, in the context of learning to associate concepts in a collaborative mode, a teacher was able to create an original activity where learners combine AR with tangible artifacts: books, post-its, images, and cards to complete. Learners, in pairs, visualize multimodal augmentations of a book, identify associations related to the augmentations, and paste post-its and images on the card. The teacher was able to appropriate the tool while using everyday classroom artifacts and to create an immersive environment for learning associations of concepts. Sharing these types of activities among teachers can help in the successful integration of AR authoring tools in classrooms.

Another important aspect that can improve the appropriation of AR authoring tools by teachers is learning analytics (e.g., dashboards). We believe that providing teachers with feedback about learners’ experiences, such as emotional state (Ez-Zaouia et al., 2020; Ez-zaouia and Lavoué, 2017), progression, and engagement (Ez-zaouia et al., 2020), can support them in fine-tuning AR activities for their classrooms.

8 CONCLUSION

AR offers an interesting environment to facilitate multisensorial, immersive, and engaging learning. However, creating AR activities is far from an easy task. We examined an “authoring tool” approach to making AR less technical and more accessible to non-expert teachers. We presented our process for designing MIXAP in an iterative, participatory design approach with pilot teachers. We evaluated our approach with 39 teachers. The results encourage further exploration of this approach, especially the analysis of usage, appropriation, and activities created by teachers in their classrooms. We hope that our work provides researchers and designers with ideas for the design and use of AR authoring tools to democratize AR for education.

ACKNOWLEDGMENT

We thank the Rising Star program of Pays de la Loire, France, for funding the MIXAP research project. Special thanks to the teachers, including the pilot teachers: Annabel Le GOFF, Camille POQUET, Damien DUMOUSSET, Delphine DESHAYES, Elisabeth PLANTE, Frederic LLANTE, Laurent HUET, Nicolas JOUDIN, Tony NEVEU, Vanessa FROC, Yannick GOURDIN, Régis MOURGUES, Morgane ACOU-LE NOAN, and Adeline JAN.

REFERENCES

Akçayır, M. and Akçayır, G. (2017). Advantages and challenges associated with augmented reality for education: A systematic review of the literature. Educational Research Review, 20:1–11.

Arici, F., Yildirim, P., Caliklar, Ş., and Yilmaz, R. M. (2019). Research trends in the use of augmented reality in science education: Content and bibliometric mapping analysis. Computers & Education, 142:103647.

Author (2022). Anonymized. Mendeley Data.

Billinghurst, M. and Duenser, A. (2012). Augmented Reality in the Classroom. Computer, 45(7):56–63.

Brooke, J. et al. (1996). SUS - a quick and dirty usability scale. Usability Evaluation in Industry, 189(194):4–7.

Dengel, A., Iqbal, M. Z., Grafe, S., and Mangina, E. (2022). A review on augmented reality authoring toolkits for education. Frontiers in Virtual Reality, 3.

Ez-Zaouia, M. (2020). Teacher-centered dashboards design process. In Companion Proceedings of the 10th International Conference on Learning Analytics & Knowledge LAK20, pages 511–528.

Ez-zaouia, M. and Lavoué, E. (2017). Emoda: A tutor oriented multimodal and contextual emotional dashboard. In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, LAK ’17, pages 429–438, New York, NY, USA. Association for Computing Machinery.

Ez-Zaouia, M., Marfisi-Schottman, I., Oueslati, M., Mercier, C., Karoui, A., and George, S. (2022). A design space of educational authoring tools for augmented reality. In International Conference on Games and Learning Alliance, pages 258–268. Springer.

Ez-Zaouia, M., Tabard, A., and Lavoué, E. (2020). Emodash: A dashboard supporting retrospective awareness of emotions in online learning. International Journal of Human-Computer Studies, 139:102411.

Ez-zaouia, M., Tabard, A., and Lavoué, E. (2020). Progdash: Lessons learned from a learning dashboard in-the-wild. In Proceedings of the 12th International Conference on Computer Supported Education - Volume 2: CSEDU, pages 105–117. INSTICC, SciTePress.

Garzón, J., Kinshuk, Baldiris, S., Gutiérrez, J., and Pavón, J. (2020). How do pedagogical approaches affect the impact of augmented reality on education? A meta-analysis and research synthesis. Educational Research Review, 31:100334.

Hincapie, M., Diaz, C., Valencia, A., Contero, M., and Güemes-Castorena, D. (2021). Educational applications of augmented reality: A bibliometric study. Computers & Electrical Engineering, 93:107289.

Ibáñez, M.-B. and Delgado-Kloos, C. (2018). Augmented reality for STEM learning: A systematic review. Computers & Education, 123:109–123.

Lewis, J. R. and Sauro, J. (2009). The factor structure of the system usability scale. In Human Centered Design: First International Conference, HCD 2009, Held as Part of HCI International 2009, San Diego, CA, USA, July 19-24, 2009 Proceedings 1, pages 94–103. Springer.

Lieberman, H., Paternò, F., Klann, M., and Wulf, V. (2006). End-user development: An emerging paradigm. pages 1–8.

Mayer, R. E. (2005). The Cambridge Handbook of Multimedia Learning. Cambridge University Press.

Mota, R. C., Roberto, R. A., and Teichrieb, V. (2015). [POSTER] Authoring Tools in Augmented Reality: An Analysis and Classification of Content Design Tools. In 2015 IEEE International Symposium on Mixed and Augmented Reality, pages 164–167, Fukuoka, Japan. IEEE.

Nebeling, M. and Speicher, M. (2018). The Trouble with Augmented Reality/Virtual Reality Authoring Tools. In 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), pages 333–337, Munich, Germany. IEEE.

Radu, I. (2014). Augmented reality in education: a meta-review and cross-media analysis. Personal and Ubiquitous Computing, 18(6):1533–1543.

Robertson, J. and Kaptein, M. (2016). An introduction to modern statistical methods in HCI. In Modern Statistical Methods for HCI, pages 1–14. Springer.

Roopa, D., Prabha, R., and Senthil, G. (2021). Revolutionizing education system with interactive augmented reality for quality education. Materials Today: Proceedings, 46:3860–3863.


Shams, L. and Seitz, A. R. (2008). Benefits of multisensory learning. Trends in Cognitive Sciences, 12(11):411–417.

Wobbrock, J. O., Findlater, L., Gergle, D., and Higgins, J. J. (2011). The aligned rank transform for nonparametric factorial analyses using only ANOVA procedures. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’11, pages 143–146, New York, NY, USA. Association for Computing Machinery.

Xiao, M., Feng, Z., Yang, X., Xu, T., and Guo, Q. (2020). Multimodal interaction design and application in augmented reality for chemical experiment. Virtual Reality & Intelligent Hardware, 2(4):291–304.

Yang, K., Zhou, X., and Radu, I. (2020). XR-Ed Framework: Designing Instruction-driven and Learner-centered Extended Reality Systems for Education. arXiv:2010.13779 [cs].
