Human-AI Collaboration Types and Standard Tasks for Decision Support: Production System Configuration Use Case

Alexander Smirnov (a), Tatiana Levashova (b) and Nikolay Shilov (c)

SPC RAS, 39, 14 Line, St. Petersburg, Russia

Keywords: Collaborative Decision Support, Human-AI Collaboration, Collaboration Types, Production System Configuration.

Abstract: Production systems can be considered as variable systems with dynamic structures, and their efficient configuration requires AI support. Human-AI collaborative systems seem to be a reasonable way of organizing such support. The paper studies collaborative decision support systems that can be considered as an implementation of human-AI collaboration. It specifies collaboration and interaction types in decision support systems. Two collaboration types (namely, hybrid intelligence and operational collaboration) are considered in detail as applied to structural and dynamic production system configuration scenarios, respectively. Based on these scenarios, standard tasks for collaborative decision support that have to be solved in human-AI collaboration systems are defined.

1 INTRODUCTION

Configuration of production systems is a complex task that cannot be performed manually alone and requires dedicated support (Järvenpää et al., 2019; Mykoniatis & Harris, 2021). Such systems can be considered as sets of resources that have some functionality and provide certain services. Services provided by one resource are consumed by other resources. Since the resources are numerous and their composition changes, production systems belong to the class of variable systems with dynamic structures. In some cases, such systems can be controlled in a centralized way.

Traditionally, production system configuration has been addressed in two stages: (i) the structure of the system is formed at the strategic level (structural configuration), and (ii) its behavior is optimized at the operational level based on actual and forecast demand (dynamic configuration).

Information technology has been employed to support configuration tasks for many years. A decision support system (DSS) incorporates information from the organization's database into an analytical network with the objective of easing and improving decision making.

(a) https://orcid.org/0000-0001-8364-073X

(b) https://orcid.org/0000-0002-1962-7044

(c) https://orcid.org/0000-0002-9264-9127

Methods of collective intelligence (understood primarily as methods of humans working together to solve problems) and methods of artificial intelligence are two complementary (and, in some areas, competing) ways of decision support (Malone & Bernstein, 2022; Peeters et al., 2021; Suran et al., 2021). Usually, these approaches are seen as alternatives (with some tasks being by their nature better suited to methods of artificial intelligence, and others to those of collective intelligence). The research presented here aims to develop methods for the convergence of these two approaches and to build human-AI collaborative systems that can flexibly adapt to changes in the environment and to the uncertainty and imprecision of the problem statement (through intensive collaboration between humans and AI agents), and that are also highly scalable due to the use of software tools implementing AI models.

Potential (and existing) ways of convergence of collective intelligence and AI can be conditionally divided into two levels: basic and problem-oriented. The basic level includes processes that take place in a wide variety of systems across a wide range of applications, e.g., (Gavriushenko et al., 2020; Peeters et al., 2021). The problem-oriented level includes processes that require taking into account the specifics of a particular application domain, e.g., (Bragilevsky & Bajic, 2020; Paschen et al., 2020). The paper focuses on the definition of collaboration and interaction types in DSS and specifies standard tasks for decision support, using production system configuration scenarios as use cases. Thus, it aims to address the basic level by generalizing conclusions obtained at the problem-oriented level. The contribution of the paper is the specification of collaboration and interaction types, as well as the definition of standard tasks that have to be solved in human-AI collaboration systems.

Smirnov, A., Levashova, T. and Shilov, N. Human-AI Collaboration Types and Standard Tasks for Decision Support: Production System Configuration Use Case. DOI: 10.5220/0011987400003467. In Proceedings of the 25th International Conference on Enterprise Information Systems (ICEIS 2023) - Volume 1, pages 599-606. ISBN: 978-989-758-648-4; ISSN: 2184-4992.

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

The paper is structured as follows. Section 2 presents collaboration and interaction types derived from the literature analysis. Section 3 presents production system configuration case studies used for extracting standard tasks arising during human-AI collaboration. The main results are summarized in the Conclusions section.

2 COLLABORATION IN HUMAN-AI DSSs

In this work, a collaborative DSS refers to a system in which multiple AI agents and humans collaborate to make a decision; the AI agents support humans and facilitate effective decision making, and the agents and humans learn from each other (Lee et al., 2021). Human-AI collaboration can be of various types. These collaboration types and the types of interactions supporting the collaboration processes are outlined below.

2.1 Collaboration Types

The collaboration types are identified based on an analysis of research describing different collaboration levels between AI agents and between AI agents and humans. In the present research, these levels are investigated from the perspective of the partners' goals, the strategy for achieving the collaboration goal, the principles of the division of labour between the partners, and the learning opportunities.

Collaboration presupposes that partners interact to achieve a shared common goal. In the present research, this goal is the goal of the collaboration, or a decision. At the same time, each partner can pursue individual goals. The research considers individual goals as kinds of activities for which a partner is responsible in the process of achieving the general goal. Different collaboration types treat the relationship between the general and individual goals differently. This paper distinguishes two kinds of relation to the goal: individual (for activities contributing to achieving the common goal) and general (for activities prevailing over individual interests).

The strategy for achieving the collaboration goal is the manner of the partners' behavior (actions). This can be a reaction to events after they occur, or behavior in which the partner initiates the actions, anticipates their effects on themselves, and elicits the necessary reactions from the other partners. The former manner is referred to as a reactive strategy, the latter as a proactive one.

The division of labour is the way the activities that have to be carried out to achieve the collaboration goal are assigned to the partners. Fixed and situational divisions are distinguished. The fixed division is based on the competencies required by the assigned activities: each partner focuses on a narrow set of activities at which they are distinctively good, or in which they are specialists. The situational division suggests that the partners, in response to the situation, agree among themselves who performs which activities. This way assumes that there may not be specialists for all the required activities.

The learning opportunities dimension indicates whether AI and humans can learn in the collaboration process and, if so, what form of learning is supported. Three forms of learning are considered:

1) unidirectional, when a human trains AI;
2) unidirectional, when a human teaches a human;
3) bidirectional, when human and AI learn from each other.

Table 1 summarizes the collaboration types identified from the perspectives of the partners' relation to the goal, the manner of the partners' actions, the division of labour, and the learning opportunities.

Responsive collaboration (Crowley et al., 2022) is a form of tightly coupled interaction where the actions of each agent are immediately sensed and used to trigger actions by the other. Even a small lag in responsiveness can have serious undesirable consequences (for instance, a delay in warning about an obstacle that must be avoided to prevent a collision when driving a car). Responsive collaboration suggests that each partner pursues individual goals and carries out activities within their scope of competence. The dependence of one partner's actions on the actions of another partner implies a reactive strategy of goal achievement. Learning in responsive collaboration consists in AI learning to convert perception signals directly into motor commands or actions in order to tune the AI to the sensorimotor reflexes of the human partner.


Table 1: Collaboration types.

| Collaboration type | Goal | Strategy of goal achievement | Division of activities | Learning |
|---|---|---|---|---|
| Responsive | Individual | Reactive | Fixed | Unidirectional: human trains AI |
| Situational | Individual | Reactive | Situational | Unidirectional: human trains AI |
| Deliberative | Individual | Proactive | Fixed | Not supposed |
| Praxical | Individual | Proactive | Fixed | Unidirectional: human trains AI, human teaches human |
| Delegation | General | Reactive | Situational | Unidirectional: human trains AI |
| Hybrid intelligence | General | Proactive | Fixed | Mutual: human-AI |
| Human-in-the-loop | General | Proactive | Fixed | Unidirectional: human trains AI |
| Operational | General | Proactive | Situational | Unidirectional: human trains AI, human teaches human |
| Creative | General | Proactive | Situational | Mutual: human-AI |
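Since every row of Table 1 combines values of the same four dimensions, the table can be read as a small lookup structure. The following Python sketch is only an illustration of that reading; the names `CollaborationType`, `TABLE_1` and `candidates` are hypothetical and are not part of the systems described in the paper. It returns the collaboration types compatible with a given set of constraints.

```python
from dataclasses import dataclass
from enum import Enum


class Goal(Enum):
    INDIVIDUAL = "individual"
    GENERAL = "general"


class Strategy(Enum):
    REACTIVE = "reactive"
    PROACTIVE = "proactive"


class Division(Enum):
    FIXED = "fixed"
    SITUATIONAL = "situational"


@dataclass(frozen=True)
class CollaborationType:
    name: str
    goal: Goal
    strategy: Strategy
    division: Division
    learning: str  # learning form, worded as in Table 1


# One entry per row of Table 1 (hypothetical encoding).
G, S, D = Goal, Strategy, Division
TABLE_1 = [
    CollaborationType("Responsive", G.INDIVIDUAL, S.REACTIVE, D.FIXED, "human trains AI"),
    CollaborationType("Situational", G.INDIVIDUAL, S.REACTIVE, D.SITUATIONAL, "human trains AI"),
    CollaborationType("Deliberative", G.INDIVIDUAL, S.PROACTIVE, D.FIXED, "not supposed"),
    CollaborationType("Praxical", G.INDIVIDUAL, S.PROACTIVE, D.FIXED,
                      "human trains AI; human teaches human"),
    CollaborationType("Delegation", G.GENERAL, S.REACTIVE, D.SITUATIONAL, "human trains AI"),
    CollaborationType("Hybrid intelligence", G.GENERAL, S.PROACTIVE, D.FIXED, "mutual human-AI"),
    CollaborationType("Human-in-the-loop", G.GENERAL, S.PROACTIVE, D.FIXED, "human trains AI"),
    CollaborationType("Operational", G.GENERAL, S.PROACTIVE, D.SITUATIONAL,
                      "human trains AI; human teaches human"),
    CollaborationType("Creative", G.GENERAL, S.PROACTIVE, D.SITUATIONAL, "mutual human-AI"),
]


def candidates(goal=None, strategy=None, division=None):
    """Return names of the types matching every criterion that is not None."""
    return [
        t.name
        for t in TABLE_1
        if (goal is None or t.goal == goal)
        and (strategy is None or t.strategy == strategy)
        and (division is None or t.division == division)
    ]
```

For example, a general (common) goal combined with a fixed division of activities leaves only hybrid intelligence and human-in-the-loop, which differ solely in the learning dimension; a general goal with a proactive strategy and a situational division leaves operational and creative collaboration.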

Situational collaboration (Crowley et al., 2022) refers to a model where perception and actions are mediated by shared awareness of the situation, and the partners' actions are their responses to the situation. As follows from the definition, situational collaboration presupposes a situational division of activities in which each partner pursues individual goals; it is supported by a reactive strategy because the partners' actions respond to the situation; and it requires the AI to learn to perceive the situation.

Deliberative collaboration (Nakahashi & Yamada, 2021; van den Bosch & Bronkhorst, 2018) is a model where the partners synthesize a collaborative plan that defines the activities to be performed by the human and the AI. The partners exchange information on their individual goals to build the plan and adhere to the proactive strategy of goal achievement. Since the plan is fixed, the division of activities is fixed as well. This collaboration type does not presuppose any learning activities.

Praxical collaboration (Crowley et al., 2022) involves the exchange of knowledge, acquired through experience or training, about how to act to achieve the goal. In this collaboration, each action depends on both the previous action and the result expected from the partner, which means that the partners follow the proactive strategy. The exchange of experience or training knowledge implies learning between the partners, where one partner fulfills the role of teacher and the other takes the role of trainee. Such a division of roles assumes a fixed division of activities. By exchanging knowledge, the partners pursue their individual goals.

Delegation (Candrian & Scherer, 2022; Fuegener et al., 2022; Lai et al., 2022) is a model in which the partners' activities are not planned in advance; instead, the partners delegate activities to each other in the process of goal achievement. Delegation presupposes that the partners share a common goal without a fixed division of activities. This type of collaboration supports the reactive strategy because the partners react to each other's commands in the delegation process. Delegation can involve AI learning from the human if one of the partners delegates such an activity to the human.

Hybrid intelligence (Demartini et al., 2020; Hemmer et al., 2022; Hooshangi & Sibdari, 2022) is a way for humans and AI to collaborate in which they extend and complement each other's strengths, and in which each partner contributes to the goal. With hybrid intelligence, the human learns from the AI and benefits by generating new knowledge and, in return, transfers implicit knowledge derived from human opinions to enrich the AI's performance. Here, the partners share the common goal and follow the proactive strategy to achieve it. This collaboration presupposes a fixed division of activities according to the partners' competencies. In the collaboration process, the AI agents inform the human about the results of their activities, and the human transfers implicit knowledge to them, which ensures mutual learning of the partners.

Human-in-the-loop (Demartini et al., 2020; Hooshangi & Sibdari, 2022) is a model that requires human involvement in the collaboration. This type of collaboration aims at leveraging the ability of AI to scale processing to very large amounts of data while relying on human intelligence to perform very complex tasks. Human-in-the-loop is similar to hybrid intelligence, except that the former presupposes a unidirectional learning model in which the human trains the AI and transfers implicit knowledge to it.

Operational collaboration (Crowley et al., 2022; van den Bosch & Bronkhorst, 2018) is a model in which the partners exchange information on the current and desired situations, expressed as intentions, goals and sub-goals, tasks and sub-tasks, and plans of actions that can be used to attain the desired situation. This type of collaboration can also concern actions that can be used to attain or maintain a stable situation, as well as the detection of threats and opportunities. Operational collaboration is used in complex systems where parallel processes (executive and interactive) take place, many collaborators are involved, and decision support concerns planning joint actions and putting them into operation. Such collaboration presupposes that the partners share the common goal and follow the proactive strategy. The division of activities is situational. Operational collaboration implies AI learning from humans and mutual learning of humans from each other as a parallel process during planning and acting.

Creative collaboration (Crowley et al., 2022) refers to a model where AI and humans work together to solve a problem or create an original artefact, and where each partner improves and builds on the ideas of the others. Here, the partners share a common goal and follow the proactive strategy of goal achievement. The division of activities is situational. While collaborating, the partners exchange ideas and hypotheses about how to achieve the goal, mutually enrich each other's ideas and, accordingly, learn from each other.

2.2 Interaction Types

To collaborate, partners need to interact. The types of interactions between AI and humans are adopted from the taxonomy of design knowledge for hybrid intelligence systems (Dellermann et al., 2019) and from research on the cooperation of humans and AIs in complex domains (van den Bosch & Bronkhorst, 2018). These works specify the interaction types explained below.

Interactions from AI to human:

• Resulting: for humans, AIs are black boxes that present the outcomes of their activities without any explanations;

• Explainable: AIs present outcomes and the process resulting in them (explanations), so that the human understands how the AI has produced an outcome;

• Informing: an AI initiates the interaction and can voluntarily (without human requests) provide information (e.g., on detected misunderstandings or possible errors of judgment).

Interactions from human to AI:

• Request: the human initiates the interaction and requests explanations from the AI on demand. The initiative for clarification lies with the human, and it requires the AI to be able to determine the purpose of the human's request and to select and generate explanations that fit the purpose (query-based explanations);

• Learning AI: the human trains the AI model.

In the paper, the interaction types above are classified as follows.

Interactions initiated by an AI:

• Informing: an AI presents information to the human, possibly accompanied by appropriate explanations, without any request from the human.

Interactions initiated by a human:

• From human to AI: request, learning AI;

• From AI to human: resulting and explainable, as responses to the human's request.

Several types of interactions can occur in the same collaboration scenario.
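The two classifications above (by direction and by initiator) can be captured together by recording, for each of the five interaction types, who initiates it and in which direction the information flows. The Python sketch below assumes exactly this encoding; `Party`, `INTERACTIONS`, `initiated_by` and `responses_to_human` are hypothetical names introduced for illustration only.

```python
from enum import Enum


class Party(Enum):
    HUMAN = "human"
    AI = "AI"


# interaction type -> (initiator, sender, receiver), following the
# classification by initiator given in the text (hypothetical encoding)
INTERACTIONS = {
    "informing": (Party.AI, Party.AI, Party.HUMAN),
    "request": (Party.HUMAN, Party.HUMAN, Party.AI),
    "learning AI": (Party.HUMAN, Party.HUMAN, Party.AI),
    "resulting": (Party.HUMAN, Party.AI, Party.HUMAN),
    "explainable": (Party.HUMAN, Party.AI, Party.HUMAN),
}


def initiated_by(party):
    """Names of the interaction types initiated by the given party."""
    return sorted(name for name, (init, _, _) in INTERACTIONS.items() if init == party)


def responses_to_human():
    """AI-to-human interactions that answer a human's initiative."""
    return sorted(
        name
        for name, (init, sender, _) in INTERACTIONS.items()
        if init == Party.HUMAN and sender == Party.AI
    )
```

With this encoding, `initiated_by(Party.AI)` yields only informing, while the resulting and explainable types appear as AI responses to a human initiative.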

3 HUMAN-AI COLLABORATION SCENARIOS AND STANDARD TASKS

Production system configuration is considered as a use case for human-AI collaboration and for deriving standard collaboration tasks.

Decision support in structural production system configuration is characterized by a common goal for the collaboration partners. It also assumes a hard division of activities in accordance with the competencies of the collaborating parties. As a result, hybrid intelligence is chosen as the most appropriate collaboration type for structural production system configuration scenarios.

Successful dynamic production system configuration heavily depends on the analysis of contextual information and assumes a situational division of activities. Operational collaboration most fully meets the requirements of the dynamic production system configuration scenario and is therefore chosen for this case.

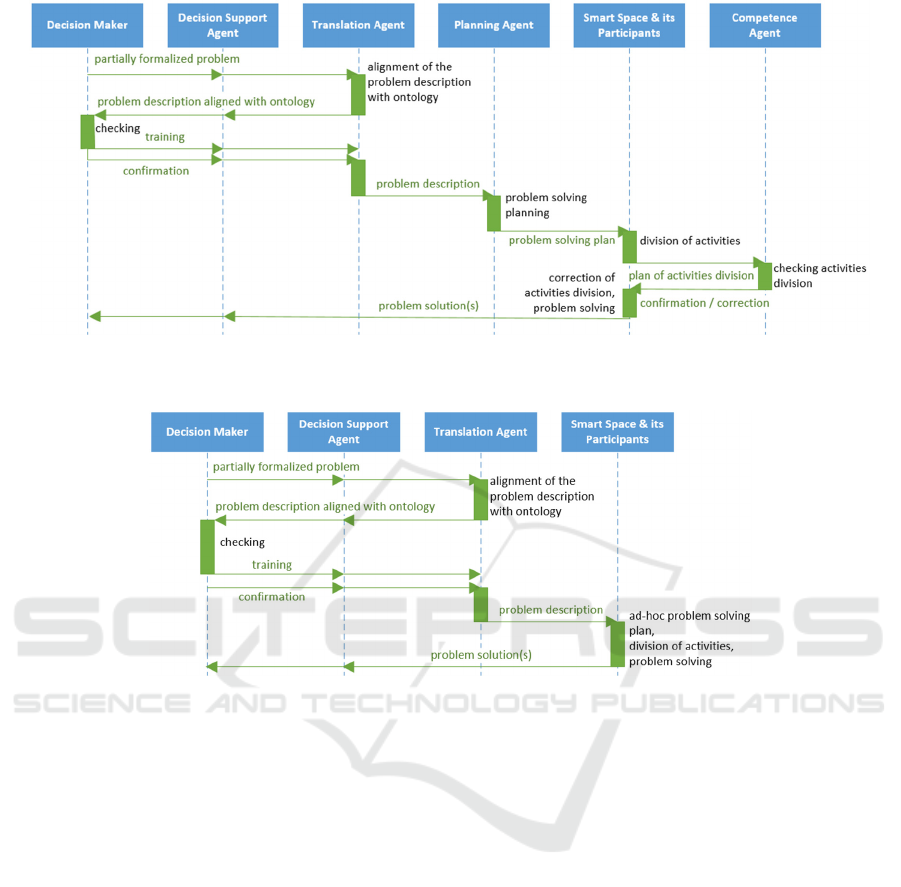

The developed scenarios are described in Tables 2 and 3 and illustrated in Figures 1 and 2 as sequences of stages, corresponding interaction types, and standard tasks to be solved. AI components are represented as agents with names reflecting their functionalities.

One can see that the main difference between the considered production system configuration scenarios is centralized (in the hybrid intelligence collaboration) versus decentralized (in the operational collaboration) planning of problem solving. This can be interpreted as follows: in structural production system configuration, problem solving is better planned but slower, whereas dynamic production system configuration is performed faster but assumes ad-hoc configuration and the use of contextual information.

The specified standard tasks are described below.

Organization of information exchange between the partners of the collaborative DSS. The task arises in situations where it is necessary to organize the exchange of information directly between collaborating partners or through publishing information in the smart space (a common information space providing infrastructure for efficient partner interactions). To solve this task, a form of information representation that is understandable to all the partners, as well as methods for information exchange and information access, are required.
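Publishing through a shared information space can be illustrated with a minimal in-memory publish/subscribe sketch in Python. It is not the infrastructure used by the authors; the class `SmartSpace`, its methods, and the topic names are hypothetical, and a real smart space would additionally need a representation understandable to all partners (e.g., an ontology-based description) and access control.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class SmartSpace:
    """Hypothetical in-memory stand-in for the shared smart space.

    Partners publish items under a topic; every other partner subscribed
    to that topic receives a copy in its inbox.
    """
    subscribers: dict = field(default_factory=lambda: defaultdict(set))
    inboxes: dict = field(default_factory=lambda: defaultdict(list))
    published: list = field(default_factory=list)

    def subscribe(self, partner, topic):
        self.subscribers[topic].add(partner)

    def publish(self, sender, topic, content):
        item = {"from": sender, "topic": topic, "content": content}
        self.published.append(item)  # shared record for partners joining later
        for partner in sorted(self.subscribers[topic]):
            if partner != sender:  # do not echo the item back to its author
                self.inboxes[partner].append(item)
        return item

    def read(self, partner):
        """Return and clear the partner's pending items."""
        items, self.inboxes[partner] = self.inboxes[partner], []
        return items
```

For example, after the decision maker publishes a problem description under the topic `problem`, each subscribed partner finds one item in its inbox, while `published` keeps the item accessible to partners that join later.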

Table 2: Structural production system configuration scenario based on the hybrid intelligence collaboration type.

| Stage no. | Stage description | Interaction type | Standard task |
|---|---|---|---|
| 1 | The decision maker describes the structural production system configuration problem in a partially formalized way. | Informing | Organization of information exchange between the partners of the collaborative DSS |
| 2 | The translation agent translates the structural production system configuration problem description using the ontology. | Request or informing | Translation of the request to the ontology-based description |
| 3 | The translation agent delivers the translated problem description to the decision maker with explanations of the translation. | Explainable | Ontology-based explanation of the AI agent actions |
| 4 | The decision maker checks whether the translated request is correct. If the decision maker is not satisfied with the translation result, the translation agent is to be trained. | Learning AI | Training of the AI agent based on human feedback |
| 5 | The planning agent identifies the activities that need to be accomplished to achieve the goal of the collaboration (the decision maker's problem solving) and creates a work plan that specifies a) which works the agents have to perform and which works are to be performed by humans, and b) for which activities the agents must provide explanations of how the results are achieved. | Request (or informing) and resulting | Planning the problem solving process (centralized) |
| 6 | Publishing the problem description in the smart space (a description in natural language for humans and a translated description for humans and agents) and the plan of problem solving. | Informing | Organization of information exchange between the partners of the collaborative DSS |
| 7 | Potential partners (agents and humans able to access the smart space) announce their willingness to participate in the problem solving. | Informing | Collaborative DSS team formation |
| 8 | The competence agent checks potential DSS team partners for the competencies required to perform their chosen activities, delivers the results of the check to the potential partners, and allows or forbids them to participate in the problem solving in accordance with the implemented mechanism for recruiting partners to the team. The invitation or rejection of a potential human partner is accompanied by an explanation. | Resulting (or explainable) and informing | Collaborative DSS team formation |
| 9 | Problem solving. Partners who have completed their activities inform all other team members (or the next team members according to the plan, depending on the implementation) and, when planned, provide an explanation. | Informing and explainable | a) Organization of information exchange between the collaborative DSS team partners; b) Ontology-based explanation of the AI agent actions |
| 10 | The DSS agent delivers the set of results to the decision maker. | Explainable | a) Ontology-based explanation of the AI agent actions; b) Human learning based on the explanations of the AI agent |


Figure 1: Sequence diagram of the structural production system configuration scenario based on the hybrid intelligence collaboration type.

Figure 2: Sequence diagram of the dynamic production system configuration scenario based on the operational collaboration type.

Translation of the request to the ontology-based description. The task concerns representing the problem whose solution is the collaboration goal in a form that is understandable to both humans and AI agents and that ensures their interoperability. Ontologies are a generally accepted means of organizing such a representation. To solve the task, it is proposed to represent the problem formulated in the request by means of an ontology.
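The paper does not prescribe how the translation is performed. As a deliberately simple stand-in, the Python sketch below maps the terms of a partially formalized request onto concepts of a toy ontology fragment by dictionary lookup; the lexicon, concept and class names are hypothetical. Words that match no concept are returned separately, since these are what the decision maker would need to check when validating the translation (stage 4 in Tables 2 and 3).

```python
import re

# Hypothetical fragment of a production-system ontology:
# surface term -> (concept, ontology class)
LEXICON = {
    "milling machine": ("MillingMachine", "Resource"),
    "robot": ("IndustrialRobot", "Resource"),
    "assembly": ("Assembly", "Operation"),
    "throughput": ("Throughput", "KPI"),
    "maximize": ("Maximize", "ObjectiveDirection"),
}


def translate(request):
    """Map a partially formalized request onto ontology concepts.

    Longer terms are matched first so that multi-word terms win over
    their parts; words left unmatched are returned for human review.
    """
    text = request.lower()
    concepts = []
    for term in sorted(LEXICON, key=len, reverse=True):
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, text):
            concepts.append(LEXICON[term])
            text = re.sub(pattern, " ", text)  # consume the matched term
    unmatched = re.findall(r"[a-z]+", text)
    return concepts, unmatched
```

A realistic translation agent would rely on a full ontology and on linguistic processing; the lookup only shows the shape of the input and output.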

Ontology-based explanation of the AI agent actions. This task aims at developing a way for the AI agent to present its results in a human-understandable form. Since the partners' interoperability (their ability to interact functionally) is supported by an ontology, it is natural to use the same form for presenting explanations of the functions (works, results) performed by the AI.
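One simple way to reuse the ontology for explanations is to record an agent's result and its justification as (subject, predicate, object) triples over ontology identifiers and to verbalize them with the labels the ontology provides. The Python sketch below assumes exactly that; the identifiers, labels and the template-based verbalization are hypothetical simplifications rather than the authors' method.

```python
# Hypothetical human-readable labels attached to ontology identifiers.
LABELS = {
    "Assembly": "assembly",
    "Cell3": "production cell 3",
    "assignedTo": "is assigned to",
    "provides": "provides",
    "hasWorkload": "currently has",
    "LowWorkload": "a low workload",
}


def verbalize(triple):
    """Render a (subject, predicate, object) triple as a sentence.

    Identifiers without a label are kept verbatim so that gaps in the
    ontology remain visible to the reader.
    """
    subject, predicate, obj = (LABELS.get(term, term) for term in triple)
    sentence = f"{subject} {predicate} {obj}."
    return sentence[0].upper() + sentence[1:]


def explain(action, evidence):
    """Explain an agent's result by verbalizing it and its supporting facts."""
    lines = ["Result: " + verbalize(action)]
    lines += ["Because: " + verbalize(fact) for fact in evidence]
    return "\n".join(lines)
```

For instance, the action `("Assembly", "assignedTo", "Cell3")` with the supporting fact `("Cell3", "provides", "Assembly")` is explained as "Result: Assembly is assigned to production cell 3." followed by "Because: Production cell 3 provides assembly."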

Planning the problem solving process. The task aims at creating a plan for solving the problem by the collaboration partners. Depending on the nature of the division of activities between the partners, this task can be solved in a centralized or decentralized way. The centralized method implies that the DSS contains a partner responsible for planning. In the scenarios under consideration, this role is assigned to the planning agent in the hybrid intelligence scenario (Table 2, stage 5). The decentralized method implies solving the planning problem through negotiations between the partners, which corresponds to stage 6 of the operational collaboration scenario. Such a method has been presented in (Smirnov & Ponomarev, 2021).
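The contrast between the two planning modes can be sketched in Python. In the centralized variant, a single planner uses its own view of the partners' competencies; in the decentralized variant, each activity is announced and partners bid based on their private state, in the style of the well-known contract-net protocol. This bidding rule is a swapped-in illustration, not the negotiation method of Smirnov & Ponomarev (2021); the competence scores, the load-discount rule and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Partner:
    name: str
    skills: dict   # activity -> assumed competence score in [0, 1]
    load: int = 0  # activities already won in the decentralized round

    def bid(self, activity):
        """Private evaluation: competence discounted by current load (assumed rule)."""
        if activity not in self.skills:
            return None
        return self.skills[activity] / (1 + self.load)


def plan_centralized(activities, partners):
    """A single planning agent assigns each activity to the most competent partner."""
    plan = {}
    for activity in activities:
        able = [p for p in partners if activity in p.skills]
        if able:
            plan[activity] = max(able, key=lambda p: p.skills[activity]).name
    return plan


def plan_decentralized(activities, partners):
    """Announce each activity, collect bids, award it to the highest bidder."""
    plan = {}
    for activity in activities:
        bids = [(p.bid(activity), p) for p in partners if activity in p.skills]
        if bids:
            _, winner = max(bids, key=lambda b: b[0])
            winner.load += 1  # winning lowers the winner's future bids
            plan[activity] = winner.name
    return plan


def make_partners():
    """Hypothetical partners used in the example below."""
    return [
        Partner("robot", {"welding": 0.9, "assembly": 0.8}),
        Partner("operator", {"assembly": 0.7, "inspection": 0.9}),
    ]
```

With these partners, the centralized planner gives both welding and assembly to the robot, whereas in the decentralized round the robot's bid for assembly drops after it wins welding, so assembly goes to the operator. This mirrors, in a very reduced form, the trade-off between better-planned and faster, context-driven problem solving discussed for the two scenarios.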

Collaborative DSS team formation. To solve the task, it is required to choose or develop a mechanism that enables the formation of an efficient team. The effectiveness of a team may be affected by the number of its partners, the division of activities between them, their workload, qualifications, and other indicators. The proposed set of indicators should form the basis of the selected or developed mechanism for forming the human-AI team.
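The text leaves the recruiting mechanism open. As one possible illustration, the Python sketch below mimics the competence check of stage 8 in Table 2: volunteers are processed first come, first served, each activity goes to at most one partner, and each partner may take at most `max_load` activities. These rules, the function `form_team`, and the explanation strings are assumptions chosen to show how indicators such as qualification and workload could enter the mechanism.

```python
def form_team(volunteers, required, max_load=2):
    """Admit or reject volunteers for the activities they chose.

    volunteers: list of (name, chosen_activity, competencies), where
        competencies is the set of activities the partner is qualified for.
    required: set of activities in the plan.
    max_load: assumed cap on activities per partner (workload indicator).
    Returns (assignments, decisions); each decision carries an explanation.
    """
    assignments = {}  # activity -> partner
    load = {}         # partner -> number of admitted activities
    decisions = []
    for name, activity, competencies in volunteers:
        if activity not in required:
            reason = f"{activity} is not part of the plan"
        elif activity not in competencies:
            reason = f"no competence for {activity}"
        elif activity in assignments:
            reason = f"{activity} already taken by {assignments[activity]}"
        elif load.get(name, 0) >= max_load:
            reason = f"workload limit of {max_load} reached"
        else:
            assignments[activity] = name
            load[name] = load.get(name, 0) + 1
            decisions.append((name, activity, True, "competence confirmed"))
            continue
        decisions.append((name, activity, False, reason))
    return assignments, decisions
```

The explanation attached to each decision corresponds to the requirement that an invitation or rejection of a human partner be accompanied by an explanation; other indicators (e.g., team size) could be added as further checks.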

Table 3: Dynamic production system configuration scenario based on the operational collaboration type.

| Stage no. | Stage description | Interaction type | Standard task |
|---|---|---|---|
| 1 | The decision maker describes the dynamic production system configuration problem in a partially formalized way. | Informing | Organization of information exchange between the partners of the collaborative DSS |
| 2 | The translation agent translates the dynamic production system configuration problem description using the ontology. | Request or informing | Translation of the request to the ontology-based description |
| 3 | The translation agent delivers the translated problem description to the decision maker with explanations of the translation. | Explainable | Ontology-based explanation of the AI agent actions |
| 4 | The decision maker checks whether the translated request is correct. If the decision maker is not satisfied with the translation result, the translation agent is to be trained. | Learning AI | Training of the AI agent based on human feedback |
| 5 | Publishing the problem description in the smart space (a description in natural language for humans and a translated description for humans and agents) and the plan of problem solving. | Informing | Organization of information exchange between the partners of the collaborative DSS |
| 6 | Potential DSS team partners (agents and humans) develop a common plan of work based on an exchange of information concerning the collaboration goal, intentions, tasks, context, and work plans for problem solving. | Informing and explainable | a) Collaborative DSS team formation; b) Planning the problem solving process (decentralized) |
| 7 | Problem solving. Partners exchange their statuses and inform the other partners upon completion of their tasks. | Informing and resulting | Organization of information exchange between the collaborative DSS team partners |
| 8 | The DSS agent delivers the set of results to the decision maker. | Explainable | a) Ontology-based explanation of the AI agent actions; b) Human learning based on the explanations of the AI agent |

Training of the AI agent based on human feedback. The task arises from a human response to the actions of the AI agent, which the human learns about during informing, resulting, or explainable interactions. As a rule, this task arises when it is necessary to refine the AI model (for example, if the existing model gives wrong results). The task requires the selection or development of a learning model, as well as the development of interfaces for organizing the feedback and the learning process.
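The learning model is left open in the text. The Python sketch below illustrates one simple possibility for the translation agent of stage 4 in Tables 2 and 3: a term's ontology mapping is replaced only after the decision maker has proposed the same correction a given number of times, so that a single slip does not overwrite the model. The class name, the threshold rule and the concept names are hypothetical.

```python
from collections import Counter, defaultdict


class FeedbackTrainedTranslator:
    """Hypothetical translation agent refined by decision-maker feedback.

    The learning rule is deliberately simple: a term's mapping is replaced
    once the same correction has been received `threshold` times.
    """

    def __init__(self, lexicon, threshold=2):
        self.lexicon = dict(lexicon)         # term -> ontology concept
        self.threshold = threshold
        self.pending = defaultdict(Counter)  # term -> proposed corrections

    def translate(self, term):
        return self.lexicon.get(term)

    def feedback(self, term, accepted, correct_concept=None):
        """Record the human verdict; return True if the model was updated."""
        if accepted:
            self.pending.pop(term, None)     # a confirmation clears doubts
            return False
        if correct_concept is None:
            return False                     # rejection without a fix: nothing to learn yet
        self.pending[term][correct_concept] += 1
        concept, votes = self.pending[term].most_common(1)[0]
        if votes >= self.threshold:
            self.lexicon[term] = concept
            del self.pending[term]
            return True
        return False
```

Requiring repeated, consistent corrections is only one design choice; a confidence-weighted or model-retraining approach would fit the same feedback interface.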

Human learning based on the explanations of the AI agent. This task is a consequence of solving the task of ontology-based explanation of the AI agent actions. If a human learns something new from the explanations received, s/he is considered to have gained new knowledge or experience; that is, the AI agent has taught them something. Note that another consequence of ontology-based explanation of the AI agent actions is that the person understands whether the agent works correctly or incorrectly; this consequence does not lead to the acquisition of new knowledge by the human.

4 CONCLUSIONS

The paper considers collaboration in human-AI collaborative decision support systems via a use case of production system configuration. Nine collaboration types are defined from the perspectives of the partners' relation to the goal, the manner of the partners' actions, the division of labour, and the learning opportunities. Based on the direction of interactions between human and AI and the nature of the exchanged information and knowledge, five interaction types are defined.

The problems of structural and dynamic production system configuration are considered as case studies. It is shown that hybrid intelligence is the most suitable collaboration type for structural production system configuration (since it is characterized by a common goal for the collaboration partners and assumes a hard division of activities in accordance with the competencies of the collaborating parties), and operational collaboration is the most suitable for dynamic production system configuration (since the latter heavily depends on the analysis of contextual information).

Based on the case study analysis, seven standard tasks that have to be solved in human-AI collaboration processes are defined together with their specifics and ways of solving them.

ACKNOWLEDGEMENTS

The research is funded by the Russian Science Foundation (project 22-11-00214).

REFERENCES

Bragilevsky, L., & Bajic, I. V. (2020). Tensor Completion Methods for Collaborative Intelligence. IEEE Access, 8, 41162–41174. https://doi.org/10.1109/ACCESS.2020.2977050

Candrian, C., & Scherer, A. (2022). Rise of the machines: Delegating decisions to autonomous AI. Computers in Human Behavior, 134, 107308. https://doi.org/10.1016/j.chb.2022.107308

Crowley, J. L., Coutaz, J., Grosinger, J., Vazquez-Salceda, J., Angulo, C., Sanfeliu, A., Iocchi, L., & Cohn, A. G. (2022). A Hierarchical Framework for Collaborative Artificial Intelligence. IEEE Pervasive Computing, 1–10. https://doi.org/10.1109/MPRV.2022.3208321

Dellermann, D., Calma, A., Lipusch, N., Weber, T., Weigel, S., & Ebel, P. (2019). The Future of Human-AI Collaboration: A Taxonomy of Design Knowledge for Hybrid Intelligence Systems. In T. X. Bui (Ed.), Proceedings of the 52nd Annual Hawaii International Conference on System Sciences (pp. 274–283). https://doi.org/10.24251/HICSS.2019.034

Demartini, G., Mizzaro, S., & Spina, D. (2020). Human-in-the-loop Artificial Intelligence for Fighting Online Misinformation: Challenges and Opportunities. The Bulletin of the Technical Committee on Data Engineering, 43(3), 65–74.

Fuegener, A., Grahl, J., Gupta, A., & Ketter, W. (2022). Cognitive challenges in human-AI collaboration: Investigating the path towards productive delegation. Information Systems Research, 33(2), 678–696.

Gavriushenko, M., Kaikova, O., & Terziyan, V. (2020). Bridging human and machine learning for the needs of collective intelligence development. Procedia Manufacturing, 42, 302–306. https://doi.org/10.1016/j.promfg.2020.02.092

Hemmer, P., Schemmer, M., Riefle, L., Rosellen, N., Vössing, M., & Kühl, N. (2022). Factors that influence the adoption of human-AI collaboration in clinical decision-making. [ArXiv].

Hooshangi, S., & Sibdari, S. (2022). Human-Machine Hybrid Decision Making with Applications in Auditing. Hawaii International Conference on System Sciences 2020, 216–225. https://doi.org/10.24251/HICSS.2022.026

Järvenpää, E., Siltala, N., Hylli, O., & Lanz, M. (2019). Implementation of capability matchmaking software facilitating faster production system design and reconfiguration planning. Journal of Manufacturing Systems, 53, 261–270. https://doi.org/10.1016/j.jmsy.2019.10.003

Lai, V., Carton, S., Bhatnagar, R., Liao, Q. V., Zhang, Y., & Tan, C. (2022). Human-AI Collaboration via Conditional Delegation: A Case Study of Content Moderation. CHI Conference on Human Factors in Computing Systems, 1–18. https://doi.org/10.1145/3491102.3501999

Lee, M. H., Siewiorek, D. P. P., Smailagic, A., Bernardino, A., & Bermúdez i Badia, S. B. (2021). A Human-AI Collaborative Approach for Clinical Decision Making on Rehabilitation Assessment. Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, 1–14. https://doi.org/10.1145/3411764.3445472

Malone, T. W., & Bernstein, M. S. (2022). Handbook of Collective Intelligence. MIT Press.

Mykoniatis, K., & Harris, G. A. (2021). A digital twin emulator of a modular production system using a data-driven hybrid modeling and simulation approach. Journal of Intelligent Manufacturing, 32(7), 1899–1911. https://doi.org/10.1007/s10845-020-01724-5

Nakahashi, R., & Yamada, S. (2021). Balancing Performance and Human Autonomy With Implicit Guidance Agent. Frontiers in Artificial Intelligence, 4. https://doi.org/10.3389/frai.2021.736321

Paschen, J., Wilson, M., & Ferreira, J. J. (2020). Collaborative intelligence: How human and artificial intelligence create value along the B2B sales funnel. Business Horizons, 63(3), 403–414. https://doi.org/10.1016/j.bushor.2020.01.003

Peeters, M. M. M., van Diggelen, J., van den Bosch, K., Bronkhorst, A., Neerincx, M. A., Schraagen, J. M., & Raaijmakers, S. (2021). Hybrid collective intelligence in a human–AI society. AI & SOCIETY, 36(1), 217–238. https://doi.org/10.1007/s00146-020-01005-y

Smirnov, A., & Ponomarev, A. (2021). Stimulating Self-Organization in Human-Machine Collective Intelligence Environment. 2021 IEEE Conference on Cognitive and Computational Aspects of Situation Management (CogSIMA), 94–102. https://doi.org/10.1109/CogSIMA51574.2021.9475937

Suran, S., Pattanaik, V., & Draheim, D. (2021). Frameworks for Collective Intelligence. ACM Computing Surveys, 53(1), 1–36. https://doi.org/10.1145/3368986

van den Bosch, K., & Bronkhorst, A. (2018). Human-AI cooperation to benefit military decision making. Proceedings of Specialist Meeting Big Data & Artificial Intelligence for Military Decision Making. https://doi.org/10.14339/STO-MP-IST-160
