Visually-Structured Written Notation Based on Sign Language for the Deaf and Hard-of-Hearing

Nobuko Kato 1,a, Yuhki Hotta 1, Akihisa Shitara 2 and Yuhki Shiraishi 1

1 Tsukuba University of Technology, Faculty of Industrial Technology, Japan

2 University of Tsukuba, Graduate School of Library, Information and Media Studies, Japan

Keywords: Sign Language, Spatial Display of Information, Visual Language, Sign Writing, Communication Support.

Abstract: Deaf and hard-of-hearing (DHH) students often face challenges in comprehending highly specialized texts owing to the long time needed to understand their content. This may be due to factors such as the complexity of Japanese syntax, which differs from that of Japanese Sign Language. This study describes the results of a questionnaire on a notation method that we proposed for DHH individuals based on sign language. The results revealed that DHH individuals who use sign language answered more questions on sentence structure correctly when using the proposed notation method than when reading ordinary Japanese sentences.

1 INTRODUCTION

In a study in which subjects were tested on their

reading comprehension of texts, the results

demonstrated that there were issues in

comprehending both the structures and meanings of

sentences (Arai et al., 2017). Furthermore, it has been

highlighted that deaf and hard-of-hearing (DHH)

individuals face challenges in acquiring spoken

language. One study reported that variance in

vocabulary and delays in grammar were observed

when comparing hearing children and DHH

children (Takahashi et al., 2017). One of the

characteristics of language is linearity, and speech

sounds can be represented by one-dimensional time-

series data. For DHH children, difficulty with the

reception and expression of speech is due to the

challenges in processing such linear data.

Meanwhile, the sign language used by DHH

individuals uses a three-dimensional space. For

example, it can use directions and locations in space

to represent objects of action, and "timelines" in space

to indicate the present, past, future, or a specific time

(Engberg-Pedersen, 1995). Using space to represent

abstract linguistic concepts (subject and object), and

using nonverbal expressions such as pointing and facial

expressions to perform grammatical functions, can

clarify the structure of sentences (Valli et al., 2011).

It is important to consider that, for some DHH individuals, the spoken language is their first language owing to the use of hearing, whereas others require visual linguistic input (Marschark and Knoors, 2012). Thus, by employing spatial cues akin to sign language, complex sentences may be better comprehended by DHH individuals who use visual language.

a https://orcid.org/0000-0003-0657-6740

The number of DHH individuals who attend

higher education institutions, such as universities, and

work in more specialized fields, has been increasing.

Support methods are needed so that DHH individuals can continue learning throughout their lives, including reskilling for work. For example, DHH computing professionals in the United States are highly interested in reading assistance tools (Alonzo et al., 2022).

If automatic translation from written Japanese to a sign-language-based notation becomes possible, it will be easier for DHH individuals to read specialized texts. Therefore, this study examines a notation method structured using the characteristics of sign language and describes the results of a questionnaire on which type of notation makes text easy for DHH individuals to comprehend.

Kato, N., Hotta, Y., Shitara, A. and Shiraishi, Y.
Visually-Structured Written Notation Based on Sign Language for the Deaf and Hard-of-Hearing.
DOI: 10.5220/0011988700003470
In Proceedings of the 15th International Conference on Computer Supported Education (CSEDU 2023) - Volume 2, pages 543-549
ISBN: 978-989-758-641-5; ISSN: 2184-5026
Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

2 PRIOR RESEARCH

2.1 Studies on Notating Sign Language

Sign language lacks a notation method, and several

such methods have been proposed to address this.

One method uses symbols to represent the details of the finger and hand movements that express words and sentences. Its advantage is that users can reproduce a sign just by looking at the notation, even if they have never seen the sign before; however, the description of each word is lengthy, and the grammar is complicated (Hanke, 2004). A

method of using English words to textualize words

that appear in signed sentences has also been

proposed. The written notation for Japanese Sign Language, sIGNDEX, uses romanized Japanese (rōmaji) to represent words and its own (textual) symbols to notate facial expressions and other non-manual movements (Hara et al., 2007). Both of these methods are used to analyze sign language and are not used in everyday settings, as they require memorizing numerous symbols unique to each method.

2.2 Studies on the Visual Representation of Sentence Structure

Visualizing the sentence structure of Japanese

requires considering which unit of text to base the

visualization on; that is, the entire text, paragraphs,

chunks, or words. Graphic organizers can be used to

visualize entire texts (Minaabad, 2017), and the

dependency analyzer CaboCha can be used to

segment texts into chunks, making it possible to

visualize the dependency structure (Kudo and

Matsumoto, 2002). However, as these tools cannot

grasp the semantic structure of a sentence, for

example, which part is the subject and which is the

object, they are not easily adaptable to sign language.

Other examples of diagramming entire texts that

have been proposed include methods of illustrating a

text’s logical structure using a graph representation

(Hasida, 2017).

A graph document is a noncontinuous type of text that represents the relationships between pieces of textual information as explicit spatial attributes. Unlike continuous text, such as ordinary written text, this type of noncontinuous text, in which textual information is presented simultaneously in a graph, has been found to facilitate the comprehension of content (Larkin and Simon, 1987).

A study of college students found that presenting information simultaneously by arranging it in space, as in illustrations, promotes the comprehension of entire texts. Additionally, an important factor in promoting comprehension is relating the textual information to graphic elements, such as boxes drawn around it or arrows pointing to it (Suzuki and Awazu, 2010).

For DHH individuals, it is appropriate to present

information in a form similar to the spatial

arrangement used in sign languages. Thus far, there

have been notations for sign language research and

translation, but no system has been proposed for DHH

individuals to read. Therefore, in this study, we examine a novel

notation method for DHH individuals.

3 SENTENCE STRUCTURE AND PROPOSED NOTATION METHOD

3.1 Comprehending Sentence Structure

In English, word order provides clues for

understanding the structure of a sentence, such as

identifying the subject and object. However, in Japanese, particles mark grammatical roles, so word order can be changed freely except for the predicate at the end of the sentence. Consequently, subjects and objects cannot be identified from word order, and because DHH individuals face challenges in acquiring spoken Japanese, it is difficult for them to understand Japanese sentence structure.
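The point about particles and word order can be illustrated with a minimal sketch. It is a toy example only: the particle-to-role table, the function name `roles_by_particle`, and the sample sentence are assumptions introduced here, real Japanese requires morphological analysis, and, as Section 3.2 notes, particles alone do not always reveal the subject and object.

```python
# Toy illustration (not part of the study): roles are read from particles,
# so reordering the arguments does not change who does what to whom.
# The table below is a deliberate simplification and is an assumption.
PARTICLE_ROLES = {"が": "subject", "に": "indirect object", "を": "direct object"}


def roles_by_particle(chunks):
    """Map each (word, particle) chunk to a role using the particle, not the position."""
    return {PARTICLE_ROLES[particle]: word for word, particle in chunks}


# "Taro handed Hanako a book", with the arguments in two different orders
# (the predicate, which stays at the end of the sentence, is left out here).
order_a = [("太郎", "が"), ("花子", "に"), ("本", "を")]
order_b = [("本", "を"), ("花子", "に"), ("太郎", "が")]

print(roles_by_particle(order_a))
print(roles_by_particle(order_a) == roles_by_particle(order_b))
```

A reader who relies on position alone would assign different roles to the two orderings, which is the difficulty described above.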

When hearing individuals communicate with DHH individuals, they often use signed or manually coded Japanese. When sign words are expressed in Japanese word order and particles are omitted, it is difficult for DHH individuals to understand the meaning and structure of the sentence (Chonan, 2001).

In contrast, unique languages developed by deaf people, such as American Sign Language (ASL) and Japanese Sign Language (JSL), make it possible to visually describe sentence structure using space, pointing, and facial and other non-manual expressions (Valli et al., 2011).

With short texts, DHH individuals can occasionally comprehend the meanings of sentences by extrapolating from experience, regardless of whether Japanese, manually coded Japanese, or JSL is used. However, this is often more difficult for specialized texts, which makes a correct understanding of the sentence structure necessary.

3.2 Characteristics of Sentences in Specialized Texts

When DHH students were tested on their understanding of sentences containing a variety of technical terms, it was found that certain features of the language made it difficult for them to understand Japanese sentences correctly; that is, we observed that:

• The subject and object could not be inferred from word order.

• The subject and object could not be inferred from the particle alone.

• Grammatical roles did not necessarily coincide with semantic roles.

The following points were considered to be reasons for the difficulty in understanding the structure of sentences in specialized texts:

• The subject is omitted in numerous sentences.

• There are many long phrases and clauses.

• There are long or many clauses, or clauses modifying the subject.

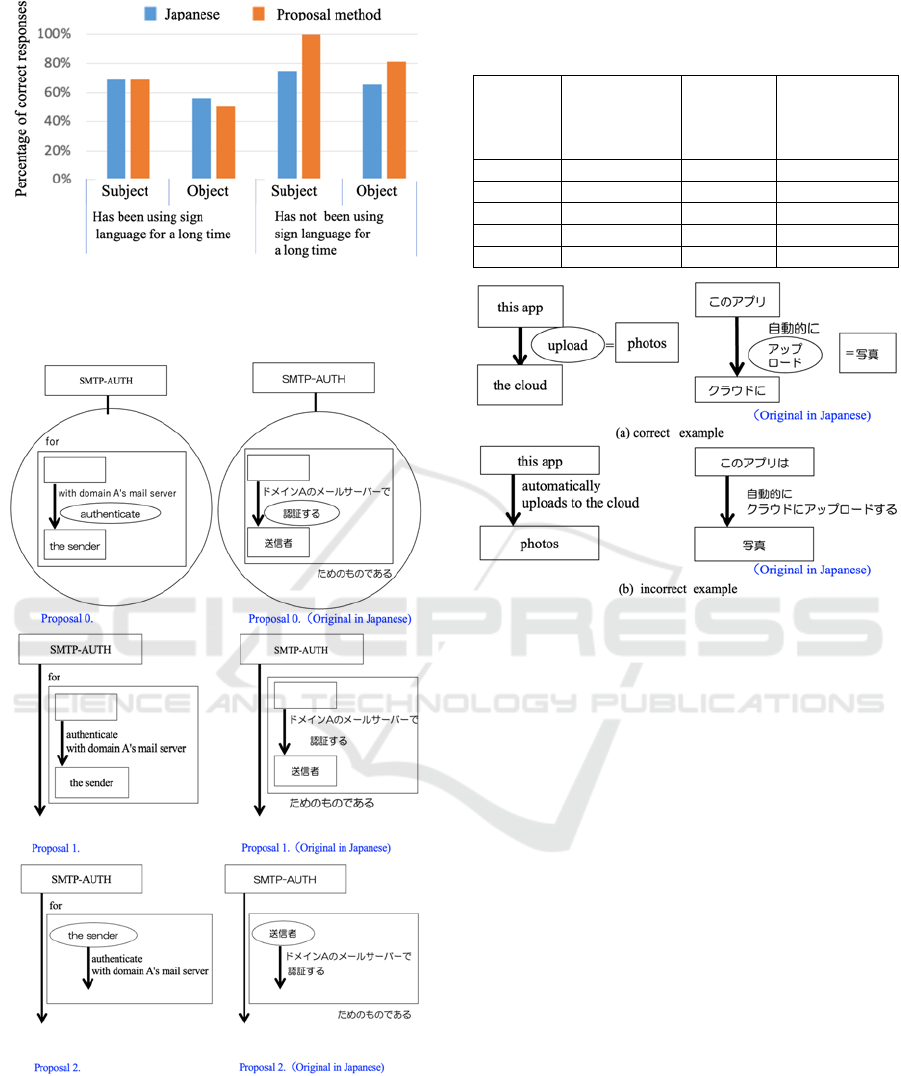

Figure 1: Example of notated rule with our proposed method (S: subject, V: verb, O1: indirect object, O2: direct object).

In this study, we attempt to notate technical

sentences with these characteristics using the

proposed notation method.

3.3 Proposed Notation Method

In this study, we propose a notation method that

focuses on the relationship between words and

phrases to make it easy for DHH individuals to

understand the structure of sentences. Therefore, we

used Japanese labels for the individual signed words.

One of the features of sign language is the use of space, which makes it possible to clarify subjects and objects or to express two things simultaneously, which is difficult to do in spoken language.

We intended our notation method to recreate this

feature on a flat surface and to represent the

relationship between words diagrammatically.

In the experiment, we tested three proposals (Figure 1) based on a notation method that considers the spatial characteristics of sign languages (Tamura and Shiraishi, 2015). The proposals differed in the symbols surrounding the predicate and object and in the notation used when the subject was omitted.
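As a rough sketch of how a sentence annotated with the roles in Figure 1 (S, V, O1, O2) might be held as data, the following uses a small Python dataclass. The class name `NotatedSentence`, the bracketed text layout, and the English example words are assumptions made for illustration; the actual symbols of Proposals 0, 1, and 2 are defined only in the figures and are not reproduced here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NotatedSentence:
    """Hypothetical container for the roles labeled in Figure 1."""
    verb: str                               # V
    subject: Optional[str] = None           # S (None when the subject is omitted)
    indirect_object: Optional[str] = None   # O1
    direct_object: Optional[str] = None     # O2

    def render(self, show_blank_subject: bool = True) -> str:
        """Return one labeled line per role; the layout is illustrative only."""
        rows = [("S", self.subject), ("V", self.verb),
                ("O1", self.indirect_object), ("O2", self.direct_object)]
        lines = []
        for label, text in rows:
            if text is not None:
                lines.append(f"{label:<2}: [{text}]")
            elif label == "S" and show_blank_subject:
                # Keep an empty box where the omitted subject would be.
                lines.append(f"{label:<2}: [ ]")
        return "\n".join(lines)


# A sentence with an omitted subject, shown with and without the blank box.
example = NotatedSentence(verb="sends", indirect_object="server", direct_object="request")
print(example.render())
print(example.render(show_blank_subject=False))
```

The `show_blank_subject` switch mirrors the one design difference that the text states explicitly, namely that the proposals treat an omitted subject differently.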

Figure 2: Sentences to notate in Question 3.

4 EXPERIMENTAL METHOD

A total of 10 DHH students participated in the

experiment. Students were presented with Japanese

sentences written normally and/or diagrammed using

different notation methods. The participants were

then asked to respond to the questionnaire.

The sentences we used and diagrammed were

taken verbatim, or partially revised, from past

problems on exams, such as the

Fundamental Information Technology Engineer

Examination (Information-Technology Promotion

Agency).

In Questionnaire 1, nine sentences were presented, each written using Proposals 0, 1, and 2. Participants ranked the three proposals based on the following points.

• Ease of understanding the meanings of sentences.

• Ease of grasping the subject and object of a sentence.

From the results of Questionnaire 1, for each participant, the proposal with the lowest rank sum was selected as that participant's preferred proposal.
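The rank-sum selection can be sketched as follows. This is a minimal illustration under stated assumptions: the function name `select_proposal`, the input layout (one dictionary of ranks per ranking criterion), and the sample ranks are hypothetical, and the paper does not state how ties were handled, so the sketch simply keeps the first proposal that reaches the minimum.

```python
def select_proposal(rankings):
    """Return (best_proposal, rank_sums) for one participant.

    rankings: list of dicts mapping proposal id -> rank (1 = best),
    one dict per ranking criterion. This layout is an assumption.
    """
    totals = {}
    for ranking in rankings:
        for proposal, rank in ranking.items():
            totals[proposal] = totals.get(proposal, 0) + rank
    # Lowest rank sum wins; ties fall to the first proposal encountered.
    best = min(totals, key=totals.get)
    return best, totals


# Hypothetical ranks from one participant on the two criteria
# (ease of understanding meaning, ease of grasping subject and object).
sample = [
    {0: 2, 1: 3, 2: 1},
    {0: 3, 1: 2, 2: 1},
]
best, totals = select_proposal(sample)
print(best, totals)
```

With these made-up ranks, Proposal 2 has the lowest sum and would be the notation used for that participant in the later questionnaires.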

In Questionnaire 2, we presented sentences

notated with their selected proposal and asked

participants to identify the subjects and objects of all

sentences and provide a subjective rating (on a 6-

point scale from 1: ‘strongly disagree’ to 6: ‘strongly

agree’).

In Questionnaire 3, we instructed participants to

use their preferred notation method to notate the

specialized sentences. In total, participants were

presented with two sentences (Figure 2).

5 RESULTS AND DISCUSSION

In Questionnaire 1, two participants chose Proposal

0, one chose Proposal 1, and seven chose Proposal 2.

The following free responses suggested that, in general, writing sentences using our notation method was effective to a certain extent:

• The words in the sentences were boxed in order, which made it easy to understand the meanings.

• At a glance, it was easy to understand what I could not understand simply by reading the text.

• It was visually easy to understand when the text was boxed or circled.

However, participants highlighted issues with the rules of the notation method. These included:

• I do not like writing predicates next to arrows (Figure 3, Proposal 1).

• The fewer boxes there are, the easier it is to read, as opposed to a large number of boxes and circles (Figure 4).

• Since I read these from top to bottom, it feels slightly off to have the first box blank (Figure 5, Proposals 0 and 1).

• I mainly chose the ones that are circled or squared, as it is easy to understand those that emphasize the object.

Figure 3: Example where the predicate could be written

next to an arrow (Proposal 1).

Figure 4: Examples with many enclosing boxes and

circles.

Figure 5: Sentence where the first box is blank because the

subject is omitted (Proposal 0, Proposal 1).

In Questionnaire 2, we asked eight respondents to

provide subjective ratings (on a scale from 1–6)

regarding the ease of knowing what the subject and

object were and understanding the meanings of the

sentences. We also instructed them to identify the

subjects and objects of the sentences. Figure 6 shows

the percentage of correct answers for the subjects and

objects. An example of a sentence where many

respondents wrongly identified the grammatical

object is shown in Figure 7.

Figure 6: Percentage of correct responses for the subject

and object when the sentences were written normally and

diagrammed with our notation methods.

Figure 7: Sentence where most of the respondents wrongly

identified the grammatical object.

Table 1: Percentage of correct responses for each respondent when diagramming the sentences using our notation method.

| ID | Has not been using sign language for a long time | ID | Has been using sign language for a long time |
|---|---|---|---|
| ID_S1 | 79% | ID_L1 | 100% |
| ID_S2 | 71% | ID_L2 | 86% |
| ID_S3 | 71% | ID_L3 | 100% |
| ID_S4 | 100% | ID_L4 | 57% |
| Mean | 80% | Mean | 85% |

Figure 8: Example responses in Questionnaire 3.

The respondents were classified as having a short or long sign language history based on whether they started using sign language after entering university or had used it before. The results demonstrate that, for respondents with a short sign language history, there was no difference in the percentage of correct responses between sentences written in Japanese and sentences written using our notation method. However, for respondents with a long sign language history, the percentage of correct responses tended to be higher when our notation method was used.

In Questionnaire 3, we instructed the participants to notate the sentences using our notation method. The percentage of correct responses for the eight respondents in Questionnaire 3 is listed in Table 1. On average, respondents with a long sign language history had a higher correct response rate than those with a short history.
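The group comparison can be rechecked from the per-respondent values in Table 1 with a short sketch. The variable names are assumptions, and because the inputs are the rounded percentages printed in the table rather than the raw scores, the recomputed means may differ by about a point from the reported means (80% and 85%).

```python
from statistics import mean

# Per-respondent percentages of correct responses, copied from Table 1.
short_history = {"ID_S1": 79, "ID_S2": 71, "ID_S3": 71, "ID_S4": 100}
long_history = {"ID_L1": 100, "ID_L2": 86, "ID_L3": 100, "ID_L4": 57}

mean_short = mean(short_history.values())
mean_long = mean(long_history.values())

print(f"short history: {mean_short:.2f}%")
print(f"long history:  {mean_long:.2f}%")
print("long > short:", mean_long > mean_short)
```

With four respondents per group and one low score in each direction (ID_S4 and ID_L4), the gap between the group means is small, which is consistent with the caution the authors express about the limited number of respondents.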

Figure 8 shows example responses in

Questionnaire 3. In the correct and incorrect response

examples, the direct and indirect objects were placed

in opposite directions.

In sign languages, there are verbs called agreement

verbs. The orientation or location of some verbs

includes information about the subject and object of

the verb (Valli et al., 2011). Our notation method

recreates these spatial sign language expressions. The

limited number of respondents notwithstanding, the

high accuracy rate among those with a long sign

language history may indicate the proposed notation

method's effectiveness for DHH individuals.

In addition, we found that it was difficult to evaluate the different notation method proposals solely based on subjective ratings because the results of the subjective evaluation did not coincide with the percentage of correct answers.

6 CONCLUSIONS

A questionnaire was conducted on the notation

method for visually structuring sentences for DHH

individuals based on examples of writing sentences

with specialized content. The results indicate the

following:

• DHH individuals who are long-term users of

sign language can correctly identify subjects

and objects at a higher rate in sentences using

our notation method than in sentences written

normally in Japanese.

• The percentage of correct responses was also high when participants themselves notated sentences using the proposed notation method.

Thus, it is expected that participants who are accustomed to using spatial expressions in sign language can easily use the proposed notation method to understand sentence structure, even when using it for the first time.

While the free responses to the questionnaire

indicated that diagramming sentences with boxes and

circles around the words was effective to a certain

extent, they also pointed out the following issues:

• Excessive boxes and circles may cause low

subjective evaluation.

• Writing the predicate section next to the

arrow was rated poorly.

• The method of indicating the absence of a

subject using a blank square tended to have a

low subjective evaluation.

This study has two limitations. The first is the small number of participants; verification with a larger number of DHH individuals is required.

The second limitation concerns the scope of applicable text. Ambiguity in written expressions, not only in Japanese, arises from the structure of the language. In a notation method such as the proposed one, which attempts to describe "structure" visually, there are sentences that are difficult to express visually. We found examples both of cases where the cause is the sentence itself (e.g., the sentence can be interpreted in several ways) and of cases where the cause is the notation. Our notation method offers adjustable granularity of text chunks.

In the future, we will continue to examine notation

methods that make sentence structures easy for DHH

individuals to understand by conducting surveys with

numerous sentences.

ACKNOWLEDGEMENTS

This study was supported by JSPS KAKENHI (Grant numbers 22K02999 and 19K11411).

REFERENCES

Alonzo, O., Elliot, L., Dingman, B., Lee, L., Amin, A.,

Huenerfauth, M. (2022). Reading-Assistance Tools

Among Deaf and Hard-of-Hearing Computing

Professionals in the U.S.: Their Reading Experiences,

Interests and Perceptions of Social Accessibility. ACM

Transactions on Accessible Computing, 15(2), Article

16, pp.1–31.

Arai, N. H., Todo, N., Arai, T., Bunji, K., Sugawara, S.,

Inuzuka, M., Matsuzaki, T., Ozaki, K. (2017). Reading

skill test to diagnose basic language skills in

comparison to machines. Proceedings of the 39th

annual cognitive science society meeting (CogSci),

pp.1556–1561.

Chonan, H. (2001). Grammatical Differences Between Japanese Sign Language, Pidgin Sign Japanese, and Manually Coded Japanese: Effects on Comprehension. The Japanese Journal of Educational Psychology, 49(4), pp.417–426.

Engberg-Pedersen, E. (1995). Space in Danish Sign

Language: The semantics and morphosyntax of the use

of space in a visual language. Gallaudet University

Press.

Hanke, T. (2004). HamNoSys – Representing sign language

data in language resources and language processing

contexts. Workshop on the Representation and

processing of Sign Languages. 4th International

Conference on Language Resources and Evaluation,

LREC 2004, pp.1–6.

Hara, D., Kanda, K., Nagashima, Y., Ichikawa, A.,

Terauchi, M., Morimoto, K., and Nakazono, K. (2007).

Collaboration between linguistics and engineering in

generating animation of Japanese Sign Language: the

development of sIGNDEX Vol.3. Challenges for

Assistive Technology, pp.261–265.

Hasida, K. (2017). Decentralized, Collaborative, and Diagrammatic Authoring. The 3rd International Workshop on Argument for Agreement and Assurance (AAA2017).

Information-Technology Promotion Agency. Past problems

on the fundamental information technology engineer

examination. https://www.jitec.ipa.go.jp/index-e.html

(Accessed 2023-03-05).

Kudo, T., Matsumoto, Y. (2002). Japanese dependency

analysis using cascaded chunking. Proceedings of the

6th Conference on Natural Language Learning 2002

(CoNLL-2002), pp.63–69.

Larkin, J. H., Simon, H. A. (1987). Why a diagram is

(sometimes) worth ten thousand words. Cognitive

Science, 11(1), pp.65–100.

Marschark, M., Knoors, H. (2012). Educating deaf

children: Language, cognition, and learning. Deafness

and Education International, 14(3), pp.136–160.

Minaabad, M. S. (2017). Study of the effect of dynamic

assessment and graphic organizers on EFL learners’

reading comprehension. Journal of Language Teaching

and Research, 8(3), pp.548–555.

Suzuki, A., Awazu, S. (2010). The importance of full

graphic display in a graphic organizer to facilitate

discourse comprehension. Japanese Journal of

Psychology, 81(1), pp.1–8.

Takahashi, N., Isaka, Y., Yamamoto, T., Nakamura, T. (2017). Vocabulary and grammar differences between deaf and hearing students. Journal of Deaf Studies and Deaf Education, 22(1), pp.88–104.

Tamura, M., Shiraishi, Y. (2015). Proposal of Information Support by Writing System Considering Spatial Characteristic of Sign Languages. IEICE Technical Report WIT, 114(512), pp.29–32.

Valli, C., Lucas, C., Mulrooney, K. J., Villanueva, M.

(2011). Linguistics of American Sign Language: An

Introduction. Gallaudet University Press.
