Communicating Emotions During Lectures

Arsalan Ali Sadiq and Georgios Marentakis

a

Department of Computer Science and Communication, Østfold University College, Halden, Norway

Keywords:

Emotions, Communication System, Emotional Communication, Lectures, Students, Teachers.

Abstract:

Teaching and learning are processes that generate a wide range of emotions in both students and lecturers,

which are often kept private and not expressed in the classroom. Emotions may arise in the classroom or

auditorium because of the material being taught, the way it is taught, the interaction with fellow students or the

lecturer, as well as other factors such as the physical conditions of the lecture room. In the classroom, emotion

is primarily communicated in a covert way, as in the gestures or the speech of teachers and students, which

may not be sufficient for good communication, for example in large auditoria or during online teaching.

In other cases, the emotional load itself may hinder the expression of students and teachers. We report here

on the results of applying a user-centered design approach to the design and development of a system that

allows students to communicate emotion during the lecture in an efficient way, while the lecturer monitors and

responds to them in real-time. Our findings suggest that students are interested in a cross-platform application

that can be run on both their laptop and mobile devices. Furthermore, they wanted a solution that would not

distract them from the lecture and that they could use effortlessly. Based on the evaluation of a prototype,

the overall feedback shows that the system we developed appears to be promising and the system’s operation

causes no disruption or concern while listening to or delivering the lecture.

1 INTRODUCTION

Emotion is an ever-present part of our lives, influ-

encing almost every aspect of our actions. Emo-

tion is critical in education and learning-related re-

search, where it has become clear that various links

exist between emotions and learning (Pekrun, 2014;

Sagayadevan and Jeyaraj, 2012; Tyng et al., 2017).

In this sense, classrooms are emotionally and psy-

chologically charged environments. Emotions in the

classroom can be triggered by the content being

taught, how it is delivered, fellow students or the

instructor’s responses, and other factors such as the

classroom environment. Emotions are essential from

an academic standpoint due to their impact on learn-

ing and progress, but learners’ emotional health can

also be viewed as an educational goal. When students

have a better learning environment, appreciative emo-

tional experiences and academic achievement may be

increased (Munoz and Tucker, 2014).

Communication of emotion in the classroom happens, however, mostly in covert ways, and emotion is mostly encoded in the speech or gestures of students and teachers. In some cases, as in large auditoria, the physical setup hinders the communication of emotion due to low visibility or audibility. The same is the case in smart tutoring systems or online classes, which are becoming increasingly popular (Tyng et al., 2017). Even though exposing students to real-time e-learning aims to increase student engagement, communication, in particular that of emotional cues, is arguably worsened in such settings. In other cases, the teaching style of a lecturer may leave emotion out of focus. Importantly, emotional load itself may make it difficult for students or teachers to express themselves.

a https://orcid.org/0000-0002-6563-9601

Systems that support the communication of emotion may thus prove helpful for both physical and online teaching. Most systems targeting emotions in the classroom use machine learning techniques to automatically recognize students’ emotions. Even if effective, these often recognize a limited range of emotions and require physiological or optical signals from the users, which may be cumbersome and can also raise privacy issues. In this paper, we contribute by presenting the results of applying a user-centered design approach (Preece et al., 2015) to understand users and design a system that gives students the ability to communicate their emotions in the classroom and teachers the ability to monitor these emotions and react accordingly.

Sadiq, A. and Marentakis, G. Communicating Emotions During Lectures. DOI: 10.5220/0011989000003470. In Proceedings of the 15th International Conference on Computer Supported Education (CSEDU 2023) - Volume 2, pages 558-565. ISBN: 978-989-758-641-5; ISSN: 2184-5026. Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

2 LITERATURE REVIEW

Emotion emerges as the coordination of multiple sub-

systems or elements, each of which relates to one or

more of the typical expressions of emotional expe-

riences, such as feelings, physical changes, or facial

expressions. It is possible to differentiate between

moods and emotions. Moods typically endure for

longer periods, but they are frequently weaker and

their origin is uncertain. On the other hand, emotions are frequently more intense, last for shorter periods, and have a specific object or cause (Frijda,

1993). Emotions can be measured using convergent

measurement, which involves assessing all compo-

nent changes: ongoing adjustments to evaluation pro-

cedures at all levels of central nervous system pro-

cessing, the neuroendocrine, autonomic, and somatic

nervous systems’ reaction patterns, and shifts in motivation caused by evaluation results, particularly in terms

of action propensities, body motions and patterns of

facial and vocal expressiveness, and the character of

the subjectively experienced emotional state (Scherer,

2005).

Emotions are commonly described either by us-

ing categories or by using dimensions. With cat-

egories, emotions can be specified using emotion-

specific terms or class labels such as: rage, contempt,

terror, pleasure, sorrow, and surprise or domain-

specific expressiveness categories such as tedium and

bewilderment. Each emotion has its own collection

of characteristics that indicate inciting situations or

behaviours. Emotions can also be described using di-

mensions, typically two (valence and arousal) or three

(valence, arousal, and power). The valence compo-

nent of emotion determines whether it is positive or

negative and extends from painful sensations to pleas-

ant feelings. The arousal dimension describes the

amount of excitement depicted by the feeling, which

might vary from lethargy or tedium to intense exhila-

ration. The power dimension represents the degree of

power, such as emotional control (Mauss and Robin-

son, 2009).

A variety of tools for measuring, reporting, and recognizing emotions exist, ranging from surveys and various self-reporting schemes to automatic recognition based on facial movements or physiological data (Mauss and Robinson, 2009). In human-computer interaction, common procedures involve the self-assessment manikin (SAM) (Bradley and Lang, 1994), the Geneva Emotion Wheel (Scherer, 2005), the Circumplex Model of Affect (Russell, 1980), but also sliders for valence or arousal (Laurans et al., 2009; Betella and Verschure, 2016), or referring to photographs (Pollak et al., 2011). Recently, the use of emotional categories or underlying dimensions to measure emotion has been criticized, as these approaches do not take into account the embodied, dynamic, and social nature of the emotional experience (Boehner et al., 2007; Sengers et al., 2008). Tools were proposed that allow users to express their emotion in an interactive way by drawing, pressing, touching, or using other modalities (Höök, 2008; Isbister et al., 2006; Ståhl et al., 2009; Chang et al., 2001). Automatic recognition of emotion is also quite common in affective computing (Kort et al., 2001; Picard, 2000) and has been applied in several settings involving physiological models, facial expression, movement, voice cues, and other modalities.

Emotions in the classroom are quite common as a result of learning and interacting. Various positive and negative academic emotion categories have been identified, such as pride, optimism, pleasure, relaxation, thankfulness, and appreciation, as well as weariness, embarrassment, anxiousness, despair, sorrow, and disdain (Subramainan and Mahmoud, 2020). Understanding students’ emotional and behavioural issues in classrooms requires the consideration of teacher-student encounters, social competence, and learning environment setting (Poulou, 2014). Sagayadevan and Jeyaraj (2012) explore the connection between instructor-student communication, emotional commitment, particularly effective emotions displayed during the lesson, and academic results such as student performance and accomplishment. Brooks and Young (2015) focused on the instructor’s style of communication as a determinant of students’ academic experience. Mazer et al. (2014) found links between instructors’ communication patterns and students’ emotional responses.

Smart classrooms often use tools to enhance the communication between students and teachers. One variety is clickers, which are essentially classroom response systems that may employ simple button interfaces or advanced wireless handheld transmitters to collect student votes and transmit data via infrared signals (Siau et al., 2006; Lantz, 2010). Clickers instantly gather and compile student responses, and then display the aggregated results in the classroom. Graphical but also tangible systems have been proposed, such as the ClassBeacons system, which uses scattered lighting to indicate how instructors divide their attention among students in the classroom (An et al., 2019). Some systems target communication of emotion in some capacity. These can be classified as those using automatic or direct communication of emotion. In automatic communication of emotion, data from digital backchannel systems (Jiranantanagorn et al., 2015), wireless sensors (Di Lascio et al., 2018), agent-based systems (Ahmed et al., 2013), or cameras (Sharmila et al., 2018) are used for emotion recognition and automatic emotional communication. The Subtle Stone (Alsmeyer et al., 2008) is a portable gadget that allows students to express their emotions to their teachers by utilizing seven different colors that stand for seven different emotions. The instructor’s interface employs a Subtle Stone to portray all learners as separate person-shaped entities. A messaging system that displays the person’s emotional condition via animated vibrant text was demonstrated in (Wang et al., 2004). It utilizes a two-dimensional visual display to show conversational animations and data, and displays graphics for certain words or phrases. Findings from an experiment performed in an online educational context indicate that a UI that conveys feelings and emotions allows online users to engage with one another more effectively.

Summary. Emotion is an intensively studied phenomenon which is known to have a large impact on students’ learning and success. Arguably, providing ways to enhance the communication of emotion in the classroom would improve the quality of students’ learning experience. Conversely, bi-directional communication could also help teachers communicate emotions in the classroom in more flexible ways. The review above indicates that mostly automatic methods for recognizing emotion have been used in the classroom. Even though these are important, they often face significant problems, as they cover a restricted number of emotional states and use sensors or cameras, which may prove cumbersome or pose important privacy concerns. Direct reporting of emotions, on the other hand, is more promising in this respect; however, it has not been studied extensively. In particular, the wealth of methods for direct communication of emotion in the literature has not taken into account the potential of different interaction techniques. Emotion can be expressed through categorical, dimensional, or interactional input interfaces. In a categorical interface, emotions are selected using categories; in a dimensional interface, emotions are reported using dimensional spaces; and in an interactional interface, emotions are expressed by drawing or interacting with a representation on a screen or an object. Furthermore, systems for the direct communication of emotions may be graphical or tangible. The potential of these emotion input spaces and interaction techniques has not been investigated in the context of communicating emotion in the classroom.

This is what drives this work. By applying a user-centered design approach (Preece et al., 2015), feedback is obtained about different methods for communicating emotion and different approaches to system design. In particular, we look into how different interaction and emotion input methods in the literature are perceived by users for the purpose of communicating emotion in the classroom. Subsequently, we integrate the feedback we received and design a prototype, which we evaluate. The evaluation aims to help us understand whether the selected interaction method and interaction type can be successfully used to communicate emotions during the lecture and whether they allow lecturers to monitor student emotions during the lecture. The design process involved: 1) gathering requirements, 2) designing alternatives and prototyping, and 3) evaluation. All participants provided informed consent in accordance with the regulations of the Norwegian Centre for Research Data (NSD).

3 INFORMING

During this phase, we obtained early feedback on

appropriate interaction methods (graphical or tangi-

ble) and emotion input techniques (categorical, di-

mensional, or interactional) for designing systems for

communicating emotion in the classroom. The process consisted of showing participants a presentation and relevant paper prototypes, followed by an

interview with two phases and a focus group. The first

phase of the interview was about getting general feed-

back and focusing on the interaction method and the

second about getting feedback on the emotion input

technique. A total of five participants (all university

students, 3 male, 2 female) participated. The focus

group was run in order to contrast participant opinions

but also in order to understand better the implications

of the social aspect of communicating emotions in the

classroom.

The presentation introduced the concept of emotion as well as the possibilities offered by systems

communicating emotion in the classroom. Further-

more, several examples of how different graphical and

tangible interaction methods can be used to commu-

nicate emotion were provided drawing on the existing

literature. These were also illustrated by paper pro-

totypes which demonstrated how different interaction

methods could be used to communicate emotion (see

also Figure 1).

The first phase of the interview was more general, and questions investigated the source of participants’ emotions in the classroom and whether participants wanted to express these. Participants were also asked to describe relevant situations and whether they believed a system for communicating emotions would be helpful. Subsequently, the focus shifted to obtaining feedback on the appropriateness of graphical or tangible user interfaces for communicating emotions.

Figure 1: Paper prototypes used in the study. a. ambient light to display emotional response, b. camera prototype to detect emotion through facial expression, c. smart band and a scale to send emotion, and d. participants of the study examining different paper prototypes.

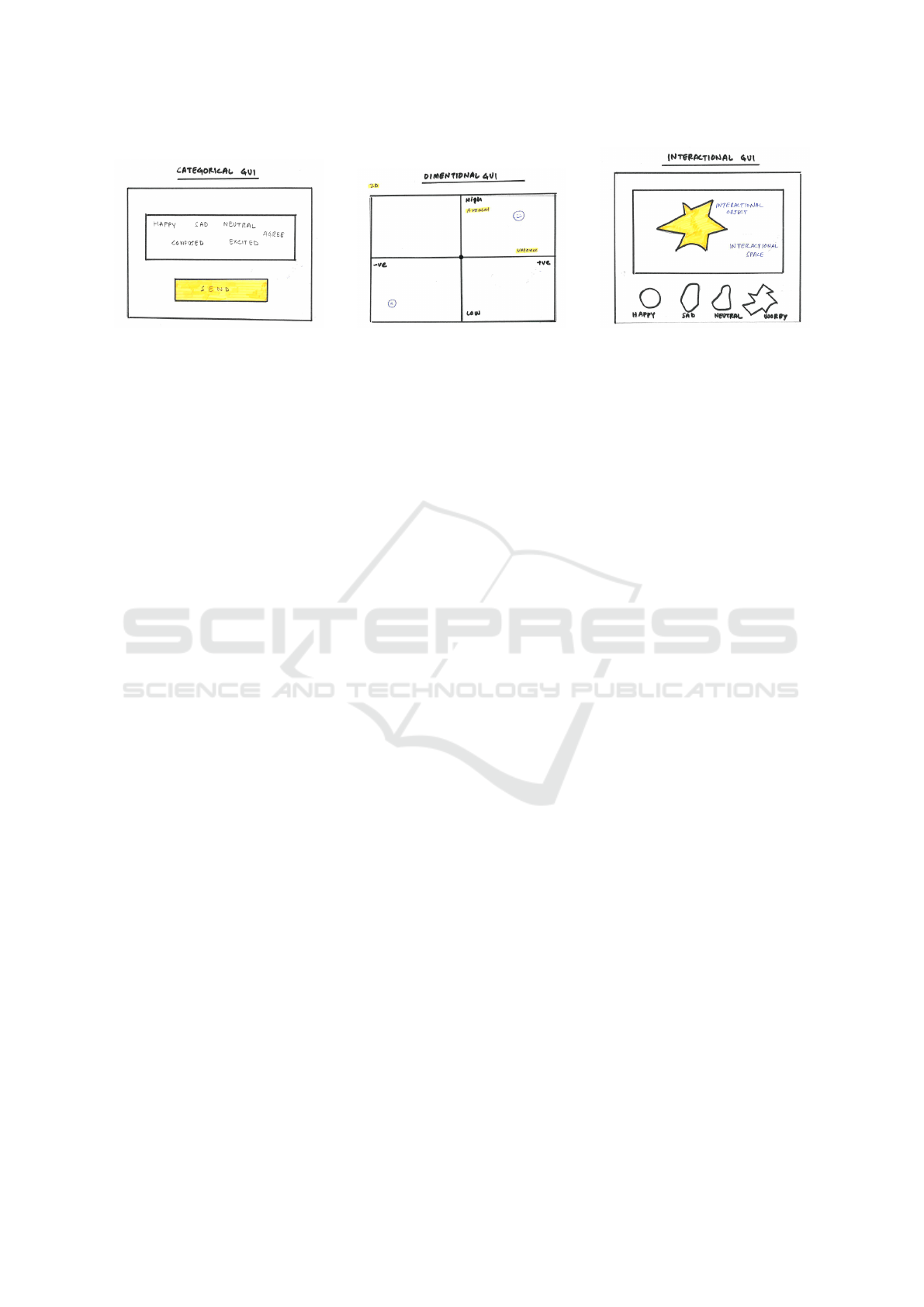

The second phase focused on emotion input tech-

niques. To this end, participants were presented with

paper prototypes of categorical, dimensional, and in-

teractional interactions for graphical or tangible inter-

faces focusing on their interaction method preference

(see Figure 2). In the focus group, we asked partici-

pants to discuss and argue about their chosen method

but also reflect on how well it can adapt to the social

aspects of teaching and learning.

Results. All participants reported aspects of the

learning material, process, and environment as the

main source of their emotions (i.e. state rather than

trait emotions). All participants agreed on the im-

portance of being able to express their feelings dur-

ing lectures. They reported on numerous occasions

in which they wished to communicate their emotions

in some way but were unable to do so due to feeling

shy, embarrassed, or discouraged. All agreed that a

system for communicating emotion would contribute

much to solving this problem.

Most participants preferred a GUI-based solution because they found it simple and quick to interact with, not distracting, less noticeable, and providing good privacy and possibilities for concealed communication. They felt that interacting with a tangible system can be distracting, perhaps also noisy, and likely easily noticeable by others. It seems that speed, simplicity, and privacy are favored by participants. The GUI-based approach was also favored because it could be used both when taking notes on a laptop and when interacting with a mobile phone. Concerning interaction type, it appears that participants preferred categorical and dimensional interfaces. They found selecting appropriate arousal and valence to be an easy task. Participants favoured multiple emojis to choose from, personalized profiles and emotional feedback history, and comment boxes with templates, as well as the possibility to provide input anonymously. The results seem reasonable considering that the primary goal of students in a classroom is to listen to the lecture and not get involved in lengthy interactions. The often private nature of emotions also seems to influence participants’ responses.

4 DESIGNING AND PROTOTYPING

Based on user feedback, a number of user scenarios and stories were developed from the requirements to help us better understand the feedback and the possibilities for design. Different design sketches were also made to elaborate on the requirements. Subsequently, we decided to concentrate on prototyping a graphical cross-platform prototype including a student and a teacher view.

The student view (Figure 3 (a)) supported anonymous or named input and provided an emotion input space containing two dimensions with relevant emotions placed on its circumference. The interface resembled that of the Geneva Emotion Wheel (Scherer et al., 2013) and contained a 12-scale emotional wheel with 4 intensity values, ranging from 1 to 4, for each emotion. The student can select an emotion from the wheel as well as its intensity, 1 being the lowest and 4 being the highest. We adopted the emotions used in (Kort et al., 2001).
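The wheel-based selection described above can be illustrated with a minimal sketch. It is not the prototype's code (the prototype was built with React); it only shows one plausible way to turn a click position into an emotion and an intensity. The equal 30-degree sectors, the equal-width rings, the choice that intensity grows outwards, the function name `wheel_selection`, and the placeholder emotion labels are all assumptions made for illustration.

```python
import math

# Hypothetical labels: the paper adopts the emotions of Kort et al. (2001)
# but does not list them, so neutral placeholders are used here.
EMOTIONS = [f"emotion_{i}" for i in range(12)]
INTENSITY_LEVELS = 4  # intensity 1 (lowest) to 4 (highest), as in the text


def wheel_selection(x, y, radius):
    """Map a click at (x, y), relative to the wheel centre, to a selection.

    Returns (emotion_label, intensity), or None if the click is at the exact
    centre or outside the wheel. Assumes equal sectors starting at the
    positive x-axis and equal-width rings with intensity growing outwards.
    """
    distance = math.hypot(x, y)
    if distance == 0 or distance > radius:
        return None
    # Angle in [0, 360), measured counter-clockwise from the positive x-axis.
    angle = math.degrees(math.atan2(y, x)) % 360.0
    sector_width = 360.0 / len(EMOTIONS)
    # The extra modulo guards against floating-point results equal to 360.0.
    sector = int(angle // sector_width) % len(EMOTIONS)
    ring_width = radius / INTENSITY_LEVELS
    # Clamp so that a click exactly on the rim still maps to the top level.
    ring = min(int(distance / ring_width), INTENSITY_LEVELS - 1)
    return EMOTIONS[sector], ring + 1
```

With a wheel of radius 100, a click at (50, 0) falls in the first sector and the third ring, so the sketch would report the first emotion at intensity 3. A single click therefore carries both pieces of information, which fits the participants' wish for quick input.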

Figure 2: Design sketches based on different interface types: (a) Categorical, (b) Dimensional, (c) Interactional.

The teacher view had a real-time screen and a summary screen. The real-time screen showed arriving emotions on an ambient color display background whose color changed based on the average emotional response (using a green to red colour gradient). When a student sent an emotion, the emotion appeared on the teacher’s screen for a few seconds before disappearing. A detailed view was also available for the lecturer, supporting analysis and reflection on student input. This view displayed all the emotions received, user names, etc. in tabular form in the order they were received, and a graph showing emotions based on their time of arrival during the lecture (see Figure 3).
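The paper does not specify how the average emotional response is computed or mapped to the background colour of the real-time screen. The following sketch shows one possible scheme under stated assumptions: each response is treated as positive or negative, its intensity (1 to 4) is scaled to a score in [-1, 1], scores are averaged, and the average is linearly interpolated between red and green. The function names and the scoring rule are hypothetical.

```python
def response_score(is_positive, intensity):
    """Score one response in [-1, 1]; assumed rule: sign times intensity / 4."""
    if not 1 <= intensity <= 4:
        raise ValueError("intensity must be between 1 and 4")
    sign = 1 if is_positive else -1
    return sign * intensity / 4


def average_score(responses):
    """Average the scores of (is_positive, intensity) pairs; 0.0 if empty."""
    if not responses:
        return 0.0
    return sum(response_score(p, i) for p, i in responses) / len(responses)


def score_to_rgb(score):
    """Interpolate linearly from red (score -1) to green (score +1)."""
    score = max(-1.0, min(1.0, score))
    t = (score + 1) / 2  # 0 = fully negative, 1 = fully positive
    return (round(255 * (1 - t)), round(255 * t), 0)
```

For example, `score_to_rgb(average_score([(True, 4), (True, 4)]))` yields pure green, while a class sending only strongly negative emotions would turn the background pure red. A real implementation might average over a sliding time window so that the colour reflects the current mood rather than the whole lecture; that choice is left open here.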

React (JavaScript) was used to develop a cross-platform application that could run on both PC and mobile devices. React was chosen due to its modularity and ease of use. A Firebase database was used on the back-end to store emotional responses and other related data used in the application.
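As an illustration of how stored responses could feed the time-based graph of the detailed view, the sketch below groups responses into fixed time intervals. The record fields, the five-minute interval, the example emotion labels, and the names `EmotionResponse` and `responses_per_interval` are assumptions; the actual database schema of the prototype is not described in the paper.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EmotionResponse:
    """Hypothetical stored record for one response sent by a student."""
    seconds_into_lecture: float
    emotion: str
    intensity: int  # 1 (lowest) to 4 (highest)
    student_name: Optional[str] = None  # None models anonymous input


def responses_per_interval(responses, interval_seconds=300):
    """Count responses per (interval start in seconds, emotion) pair."""
    counts = Counter()
    for response in responses:
        start = int(response.seconds_into_lecture // interval_seconds) * interval_seconds
        counts[(start, response.emotion)] += 1
    return dict(counts)


# Example usage with made-up data and placeholder emotion labels.
log = [
    EmotionResponse(30, "confusion", 2),
    EmotionResponse(200, "confusion", 3, "Student A"),
    EmotionResponse(400, "interest", 4),
]
summary = responses_per_interval(log)
```

Here `summary` maps `(0, "confusion")` to 2 and `(300, "interest")` to 1, which is the kind of aggregate a lecturer could browse after the lecture to see when particular emotions clustered.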

5 EVALUATION

A realistic situation was set up in order to evaluate the system and investigate the effectiveness of the design choices for students to transmit and for teachers to observe students’ emotions in real-time (see Figure 3). In particular, we wanted to observe whether the selected emotion input technique and interaction method were usable for students, but also for monitoring and reviewing student emotions by the lecturer. The intention is not to provide or analyze data about student emotions during lectures.

Five students and a professor from the school’s

IT department participated in the evaluation. Evalu-

ation was done in a lecture room in which the pro-

fessor lectured on a topic related to machine learn-

ing while the students followed. Before the lecture

began, the prototype was installed on the computers

and smartphones of the participants. During the lec-

ture, users used the prototype on their smartphone or

their PC. Some communicated emotions using their

laptop computers while taking notes and some using

their mobile phones. The first author sat in the room

and observed the users. The students were completely

focused on the lecture most of the time, but occasion-

ally sent emotional responses through the application

when some terminology in that topic was unclear or

when something appeared very interesting. When the teacher went into greater depth about the topic and discussed the more technical aspects of Long Short-Term Memory networks, for example, students’ facial expressions changed: they appeared to be concerned and focused on their laptops. The emotional response log showed this change, with negative emotions communicated during this time. Most of the students used their laptops to take notes and send emotional responses, and one student occasionally used his mobile phone during the lecture to send emotional responses. The teacher was mostly delivering the lecture, but he would occasionally glance at the real-time screen to see the average emotion of his students. The teacher also went to the detailed version of the screen after the lecture to reflect on the total number of emotional responses and to analyse the overall class emotion during the lecture.

Both students and the lecturer provided feedback

after using the system in the classroom using a ques-

tionnaire. In the questionnaire, they rated how easy it

was to use the system during the lecture and whether

they had any difficulties interacting with the system,

whether the system fulfilled their need of sending

emotion, whether it was easy to send appropriate

emotional responses, whether it was comfortable to

use the system in front of other students, and whether

there was any additional functionality they would like

to add to the user interface.

All participants found the system to be easy to use and effortless and had no particular difficulties. They also mentioned that the system fulfilled their emotional communication needs to a great extent. Furthermore, they did not feel the system invited unwanted attention or disturbed them in any way. The selection of emotions to choose from was adequate for most participants; however, some mentioned needing more time than expected to find emotions in some cases. Users mentioned they were comfortable with using the system in front of others. As additional functionality they recommended: a larger text box to send messages to teachers to explain the emotion better, inclusion of more options for emotions for more in-depth precision of emotional communication, a one-click option for sending emotion, and an additional button for adding missing emotions.

Figure 3: Prototyping and evaluation: a. student using his mobile device to evaluate the system, b. teacher screens both real-time and detailed version, c. lecturer during evaluation.

The lecturer also responded to a number of ques-

tions related to how easy it was to monitor the real-

time screen, the extent to which the graphs helped

understand and analyze student emotions, how often

they looked at the screen, and their impression about

the emotional state of students during the lecture. The

lecturer monitored the students’ emotions every 3-5 minutes and found that the real-time view supported easy and quick monitoring of the students’ emotions and also gave a feeling for the overall atmosphere of the classroom. The lecturer responded that the detailed version of the screen helped a lot to analyse the students’ emotions in more detail and to understand how teaching could be adapted. However, the teacher mentioned that they would like to participate in a larger-scale evaluation to be able to comment more specifically on how such a system may affect teaching and learning. They also suggested providing support for thematically labelling lecture moments and better possibilities for browsing emotions over time.

6 DISCUSSION

Motivated by a desire to improve the communication

of emotion during lectures, we investigated the lit-

erature in order to understand how emotion may be

communicated in the lecture hall. Our investigation

showed that categorical, dimensional, or interactional

input methods have been suggested for communicat-

ing emotion. Furthermore, it also showed that mostly

graphical and tangible interaction techniques are being used in smart classrooms. To better understand how users react to different combinations of the aforementioned interaction types and methods, we created paper prototypes and performed interviews and a focus group with students.

The results showed that users tend to prefer a graphical system, as it is fast to use and does not expose their reactions to fellow classmates. Furthermore, users seem to like categorical or dimensional interfaces for communicating emotion, as they are less willing to engage in lengthy interactions or contemplate their emotional state during lectures. Based on this feedback, we created a graphical system with which students can communicate their emotions using an emotion-wheel-type interface. We also provided a cross-platform graphical interface so that a lecturer can monitor each arriving emotion, the tendency that emerges by averaging received emotions, and a detailed view of the emotions received. The evaluation showed that this combination of interaction method and emotion input technique provides an easy way to communicate emotions in the classroom. The lecturer also found the received information relevant and likely useful in planning future lectures. We also received several suggestions for additional features, which we plan to integrate in an extended version of the system that will be evaluated on a larger scale.

Despite the small scale, this study is encouraging

with respect to the potential of systems for directly

communicating emotions in the classroom. Such sys-

tems seem to be favoured by students and teachers

and to be able to enhance communication during lec-

tures. We are motivated to expand on this research,

and design a larger scale study by involving more

students and lecturers in order to understand better

the potential of this system in affecting teaching and

learning. Furthermore, we are interested in investi-

gating other interaction techniques, such as ambient

displays, which were not in the focus of this study.

The resulting system can also be used to help un-

derstand better the development of emotions in the

classroom or the lecture hall and the interaction be-

tween teacher behaviour and student emotion (Zem-

bylas, 2007; Titsworth et al., 2013). In addition, it

can help study the cultural aspects of emotion devel-

opment across the world.

7 CONCLUSION

In this article, a user-centered design approach was

applied to design and develop a system with which

students can communicate their emotional states to

lecturers during lectures. The feedback we received

showed that students are looking for something quick

and easy which does not interfere much with attending to the lecture or make their emotions visible to

unwanted receivers. A GUI providing the ability to

select emotions arranged in a two-dimensional space

seemed to be quite appropriate for this application, as

was also confirmed by the evaluation we performed.

The overall feedback shows that the design we pro-

pose is promising and can potentially help deliver a

better teaching and learning experience.

REFERENCES

Ahmed, F. D., Tang, A. Y., Ahmad, A., and Ahmad, M. S.

(2013). Recognizing student emotions using an agent-

based emotion engine. International Journal of Asian

Social Science, 3(9):1897–1905.

Alsmeyer, M., Luckin, R., and Good, J. (2008). Developing

a novel interface for capturing self reports of affect.

In CHI’08 Extended Abstracts on Human Factors in

Computing Systems, pages 2883–2888.

An, P., Bakker, S., Ordanovski, S., Taconis, R., Paffen,

C. L., and Eggen, B. (2019). Unobtrusively enhancing

reflection-in-action of teachers through spatially dis-

tributed ambient information. In Proceedings of the

2019 CHI Conference on Human Factors in Comput-

ing Systems, pages 1–14.

Betella, A. and Verschure, P. F. (2016). The affective slider:

A digital self-assessment scale for the measurement of

human emotions. PloS one, 11(2):e0148037.

Boehner, K., DePaula, R., Dourish, P., and Sengers,

P. (2007). How emotion is made and measured.

International Journal of Human-Computer Studies,

65(4):275–291.

Bradley, M. M. and Lang, P. J. (1994). Measuring emotion:

the self-assessment manikin and the semantic differ-

ential. Journal of behavior therapy and experimental

psychiatry, 25(1):49–59.

Brooks, C. F. and Young, S. L. (2015). Emotion in on-

line college classrooms: Examining the influence of

perceived teacher communication behaviour on stu-

dents’ emotional experiences. Technology, Pedagogy

and Education, 24(4):515–527.

Chang, A., Resner, B., Koerner, B., Wang, X., and Ishii, H.

(2001). Lumitouch: an emotional communication de-

vice. In CHI’01 extended abstracts on Human factors

in computing systems, pages 313–314.

Di Lascio, E., Gashi, S., and Santini, S. (2018). Unobtrusive

assessment of students’ emotional engagement dur-

ing lectures using electrodermal activity sensors. Pro-

ceedings of the ACM on Interactive, Mobile, Wearable

and Ubiquitous Technologies, 2(3):1–21.

Frijda, N. H. (1993). Moods, emotion episodes, and emo-

tions.

Höök, K. (2008). Affective loop experiences–what are they? In International Conference on Persuasive Technology, pages 1–12. Springer.

Isbister, K., Höök, K., Sharp, M., and Laaksolahti, J. (2006). The sensual evaluation instrument: developing an affective evaluation tool. In Proceedings of the SIGCHI conference on Human Factors in computing systems, pages 1163–1172.

Jiranantanagorn, P., Bhardwaj, P., Li, R., Shen, H., Good-

win, R., and Teoh, K.-K. (2015). Designing a mobile

digital backchannel system for monitoring sentiments

and emotions in large lectures. In Proceedings of the

ASWEC 2015 24th Australasian Software Engineering

Conference, pages 141–144.

Kort, B., Reilly, R., and Picard, R. W. (2001). An affec-

tive model of interplay between emotions and learn-

ing: Reengineering educational pedagogy-building a

learning companion. In Proceedings IEEE interna-

tional conference on advanced learning technologies,

pages 43–46. IEEE.

Lantz, M. E. (2010). The use of ‘clickers’ in the classroom:

Teaching innovation or merely an amusing novelty?

Computers in Human Behavior, 26(4):556–561.

Laurans, G., Desmet, P. M., and Hekkert, P. (2009). The

emotion slider: A self-report device for the continu-

ous measurement of emotion. In 2009 3rd Interna-

tional Conference on Affective Computing and Intelli-

gent Interaction and Workshops, pages 1–6. IEEE.

Mauss, I. B. and Robinson, M. D. (2009). Measures of emo-

tion: A review. Cognition and emotion, 23(2):209–

237.

CSEDU 2023 - 15th International Conference on Computer Supported Education


Mazer, J. P., McKenna-Buchanan, T. P., Quinlan, M. M.,

and Titsworth, S. (2014). The dark side of emotion in

the classroom: Emotional processes as mediators of

teacher communication behaviors and student nega-

tive emotions. Communication Education, 63(3):149–

168.

Munoz, D. A. and Tucker, C. S. (2014). Assessing students’

emotional states: An approach to identify lectures that

provide an enhanced learning experience. In Interna-

tional Design Engineering Technical Conferences and

Computers and Information in Engineering Confer-

ence, volume 46346, page V003T04A006. American

Society of Mechanical Engineers.

Pekrun, R. (2014). Emotions and learning. Educational

Practices Series-24. UNESCO International Bureau

of Education.

Picard, R. W. (2000). Affective computing. MIT press.

Pollak, J. P., Adams, P., and Gay, G. (2011). PAM: a

photographic affect meter for frequent, in situ measurement

of affect. In Proceedings of the SIGCHI conference on

Human factors in computing systems, pages 725–734.

Poulou, M. (2014). The effects on students’ emotional

and behavioural difficulties of teacher–student inter-

actions, students’ social skills and classroom context.

British Educational Research Journal, 40(6):986–

1004.

Preece, J., Sharp, H., and Rogers, Y. (2015). Interaction de-

sign: beyond human-computer interaction. John Wi-

ley & Sons.

Russell, J. A. (1980). A circumplex model of affect. Journal

of personality and social psychology, 39(6):1161.

Sagayadevan, V. and Jeyaraj, S. (2012). The role of emo-

tional engagement in lecturer-student interaction and

the impact on academic outcomes of student achieve-

ment and learning. Journal of the Scholarship of

Teaching and Learning, 12(3):1–30.

Scherer, K. R. (2005). What are emotions? And how

can they be measured? Social science information,

44(4):695–729.

Scherer, K. R., Shuman, V., Fontaine, J., and Soriano, C.

(2013). The grid meets the wheel: Assessing emo-

tional feeling via self-report. Components of emo-

tional meaning: A sourcebook.

Sengers, P., Boehner, K., Mateas, M., and Gay, G. (2008).

The disenchantment of affect. Personal and Ubiqui-

tous Computing, 12(5):347–358.

Sharmila, S., Kalaivani, A., and Gr, S. (2018). Automatic

facial emotion analysis system for students in class-

room environment. International Journal of Pure and

Applied Mathematics, 119(16):2887–2894.

Siau, K., Sheng, H., and Nah, F.-H. (2006). Use of a class-

room response system to enhance classroom interac-

tivity. IEEE Transactions on Education, 49(3):398–

403.

Ståhl, A., Höök, K., Svensson, M., Taylor, A. S., and Combetto,

M. (2009). Experiencing the affective diary.

Personal and Ubiquitous Computing, 13(5):365–378.

Subramainan, L. and Mahmoud, M. A. (2020). Academic

emotions review: Types, triggers, reactions, and com-

putational models. In 2020 8th International Con-

ference on Information Technology and Multimedia

(ICIMU), pages 223–230. IEEE.

Titsworth, S., McKenna, T. P., Mazer, J. P., and Quinlan,

M. M. (2013). The bright side of emotion in the class-

room: Do teachers’ behaviors predict students’ enjoy-

ment, hope, and pride? Communication Education,

62(2):191–209.

Tyng, C. M., Amin, H. U., Saad, M. N., and Malik, A. S.

(2017). The influences of emotion on learning and

memory. Frontiers in psychology, 8:1454.

Wang, H., Prendinger, H., and Igarashi, T. (2004). Commu-

nicating emotions in online chat using physiological

sensors and animated text. In CHI’04 extended ab-

stracts on Human factors in computing systems, pages

1171–1174.

Zembylas, M. (2007). Theory and methodology in research-

ing emotions in education. International Journal of

Research & Method in Education, 30(1):57–72.
