UXNator: A Tool for Recommending UX Evaluation Methods

Solano Oliveira (1,2,∗), Alexandre Cristo (1,2,∗), Miguel Geovane (1,2), André Xavier (1,2), Roseno Silva (1,2), Sabrina Rocha (1,2), Leonardo Marques (1,2), Genildo Gomes (1,2), Bruno Gadelha (1,2) and Tayana Conte (1,2)

1 Federal University of Amazonas (UFAM), Manaus, Amazonas, 69067-005, Brazil

2 USES Research Group - Institute of Computing (IComp), Manaus, Amazonas, Brazil

solano.oliveira, alexandre.cristo, miguel.geovane, andre.xavier, roseno.silva, sabrina.rocha, lcm,

Keywords:

User Experience, UX, UX Methods, Design Sprint.

Abstract:

UX assessment plays a key role in the development of software systems that are of high quality from the user's perspective. Due to growing interest in the topic, much research has been carried out to propose different UX evaluation methods. However, the sheer number of available methods makes it challenging to choose which one to use in a UX evaluation. In this paper, we present UXNator, a tool that recommends UX methods based on filters answered by the stakeholders interested in the evaluation. We conducted a feasibility study to evaluate UXNator’s initial proposal, collecting the participants’ perceptions of use. We qualitatively analyzed their reports and identified categories of improvement for UXNator. The results show that participants perceived UXNator’s goal of recommending UX methods positively. We intend to improve UXNator based on participant feedback, aiming for it to become a standard option for querying and recommending UX methods.

1 INTRODUCTION

User Experience (UX) is defined as “the user’s perceptions and responses concerning a product or system” (Bevan et al., 2015). UX emerged due to the need to cover aspects related to the user’s feelings (Law et al., 2009) when interacting with a software system. According to Russo et al. (2015), current software no longer seeks only to provide useful and usable functionalities but also to provide engaging experiences for the user, that is, good UX.

We can apply UX evaluation methods to meet the need for products with good UX. According to Saad et al. (2021), UX methods play an important role in ensuring that the development of a system is heading in the right direction. Such methods are diverse and serve to evaluate the UX of products, prototypes, conceptual ideas, or design details (Vermeeren et al., 2010).

There is not yet a widely used repository recognized by the UX community for selecting UX evaluation methods. One of the existing alternatives is AllAboutUX¹, a repository that lists and briefly describes UX assessment methods. It was created in 2010 and provided 88 UX assessment methods (Vermeeren et al., 2010). Although AllAboutUX maintains a repository of methods, it is still up to the evaluator to find which methods are most appropriate and meet the assessment requirements of the stakeholders interested in the UX assessment; the repository does not recommend them. Additionally, AllAboutUX offers an extensive range of methods, and such a large number of options can itself make it harder for the evaluator to choose one (Chernev et al., 2015).

∗ The first two authors contributed equally to this work.

¹ https://www.allaboutux.org

Our research aims to support evaluators in choosing a UX evaluation method. To do so, we searched the literature for related work. Following a methodology based on the Design Sprint, we developed a recommendation tool called UXNator. UXNator’s goal is to recommend methods based on filters answered by stakeholders. We conducted a feasibility study in which participants used UXNator as the standard tool for choosing UX evaluation methods. Our results show that UXNator was able to recommend evaluation methods and could be helpful to UX stakeholders. In Section 2, we present the background and related work. Then, in Section 3, we present our methodology and feasibility study. In Section 4, we present the results, and in Section 5, we discuss our findings. Finally, in Section 6, we present our conclusions.


Oliveira, S., Cristo, A., Geovane, M., Xavier, A., Silva, R., Rocha, S., Marques, L., Gomes, G., Gadelha, B. and Conte, T.

UXNator: A Tool for Recommending UX Evaluation Methods.

DOI: 10.5220/0011994200003467

In Proceedings of the 25th International Conference on Enterprise Information Systems (ICEIS 2023) - Volume 2, pages 336-343

ISBN: 978-989-758-648-4; ISSN: 2184-4992

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

2 BACKGROUND AND RELATED WORK

In this section, we discuss concepts pertinent to UX

and related work.

2.1 UX Evaluation

According to ISO 9241-210:2010, UX is defined as “a person’s perceptions and responses that result from the use and/or anticipated use of a product, system or service”. UX considers pragmatic aspects, such as traditional usability features focusing on task completion, and hedonic aspects, such as emotional responses to using a product (Hassenzahl, 2018).

UX evaluation methods are similar to usability evaluation methods. However, instead of measuring factors such as the number of errors or clicks, UX evaluation methods focus on subjective factors such as satisfaction and motivation (Vermeeren et al., 2010).

UX evaluation methods are important in ensuring that the development of a system is progressing in the right direction (Saad et al., 2021). They are intended to help design a system, to ensure that development is progressing correctly, or to assess whether the system provides good UX (Vermeeren et al., 2010).

UX gained more importance as software systems evolved. As a result, several methods for evaluating the UX of interactive applications have been proposed (Nakamura et al., 2017). Given the abundance of existing methods, one problem is the lack of support for choosing a specific UX evaluation method.

Our work aims to fill this gap by providing a Web tool to support the selection of UX evaluation methods based on the needs of the evaluator. In the following subsection, we present related work on the organization and categorization of UX methods and research that recommends methods in different contexts.

2.2 Related Work

Previous works such as Vermeeren et al. (2010) and Rivero and Conte (2017) provide an overview of how to organize UX evaluation methods. Vermeeren et al. (2010) give an initial overview of the topic using categories that capture essential features of the methods. Following a similar approach, Rivero and Conte (2017) use questions to filter methods, with the answers generating categories for the methods. We analyzed the categories produced by both works to determine whether they would be helpful for recommendation.

To support the selection of proper UX methods, Darin et al. (2019) use the following categories: scale/questionnaire, psycho-physiological, software/equipment, two-dimensional diagrams/area graphs, post-test photos/objects, and others. For each method, their catalog shows the name, main idea, general procedure, instrument type, approach, target users, applications, references, and year of publication. This is an interesting approach, but it does not narrow down the user’s choices based on filters.

Kieffer et al. (2019) classified the methods into knowledge elicitation methods, which describe or document knowledge, and observed-mediated communication methods, which aim to facilitate communication and collaboration between stakeholders. Knowledge elicitation methods are divided into methods with and without user participation. Methods without user participation aim to predict the use of a system and rely on expert opinions to collect data, whereas methods with user participation gather data on users’ opinions, feedback, and behavior.

A platform developed by Liu et al. (2019) suggests methods using artificial intelligence (AI). Its chatbot asks users to answer three questions: (i) the project phase; (ii) how much time is available for the evaluation; and (iii) whether real users participate in the process. Based on the users’ answers, the platform returns a list of techniques for different purposes, such as prototyping, information organization, feedback collection, and UX evaluation.

Our work differs from previous research in that we clearly define our scope as the UX evaluation of software. Defining a scope allows us to provide a curated collection of methods. The focus on UX facilitates the filtering and selection process, making the experience easier for the user overall. Besides, we added to UXNator only methods with source material and instructions on how to use them.

3 METHODOLOGY

In this section, we explain the methodological procedures for designing and developing UXNator. The following subsections present: (i) the Design Sprint, used as the base procedure for the initial prototype design of the tool; (ii) UXNator, the tool that supports the choice of UX methods based on questions; and (iii) the study carried out to verify the feasibility of the tool and obtain evidence for its improvement.

3.1 Design Sprint

The Design Sprint is a compendium of tools used in Design Thinking. It stands out as a process that yields results with the same characteristics as Design Thinking but in roughly one week (Ferreira and Canedo, 2020). A team conducts the sprint with the help of stakeholders to arrive at good-quality products. Sprints can be repeated if the goal is not achieved; however, we ideated UXNator in a single sprint.

We conducted the sprint as follows. On the first day, we ideated how the method recommendation would work. First, we set targets for our tool, such as “becoming the standard tool for recommending UX evaluation methods”. After setting our targets, we made a map of our tool’s usage, depicting how our tool would be used and what features it would have. Finally, our team and some stakeholders voted on the essential features and on how they would fit into the map we made.

On the second day, we analyzed competing or similar tools, such as AllAboutUX (Vermeeren et al., 2010), DTA4RE (Souza et al., 2020), Design Kit (Kit, 2019), and Selection Universe (Meireles et al., 2021). Based on our observations, we performed a Crazy 8’s, a method recommended for design sprints that generates one idea per minute for eight minutes (Ferreira and Canedo, 2020). Each team member then combined the ideas to produce a storyboard of the tool.

On the third day, we reconvened to decide which ideas from the storyboards produced on the preceding day would be included in our prototype. We then combined the ideas to create a storyboard to guide the prototyping of the tool. We also decided to recommend methods using a questionnaire whose questions would filter the available methods. Finally, we decided to present the results as cards with information on the methods.

On the fourth day, we created the initial prototype (Figure 1). We developed the prototype with the features chosen on the third day, so we could build it quickly for the next day’s test. We designed the prototype to look like a real product so that the test would be realistic.

On the fifth and final day, the team conducted a usability test with seven participants, one participant at a time. The participants observed and navigated the prototype with the help of the researchers. After the test, we interviewed the participants, who gave their opinions about the tool. In this phase, the main feedback from participants concerned the high number of questions in the filtering questionnaire. The results obtained in this phase served as the basis for the development of UXNator.

Figure 1: Initial prototype.

3.2 UXNator

The design sprint process culminated in the development of a tool whose objective is to recommend UX evaluation methods in the context of software evaluation. We called this tool UXNator², in reference to the pop culture application Akinator³, because the questions that game asks resemble the UXNator questionnaire.

During the design sprint, we used a questionnaire to help filter methods that fit the users’ needs. We based the questionnaire on an analysis of the categories proposed in previous systematic mapping work that categorized UX methods (Vermeeren et al., 2010; Rivero and Conte, 2017). We used these categories to generate filters.

The tests we carried out during the design sprint revealed problems related to the high number of questions in the questionnaire (five questions). For instance, one of the questions filtered techniques by whether they provided quantitative or qualitative results. In a meeting to address the filtering issues, we identified that two factors are primarily essential when evaluating UX: (i) knowing the stage of the evaluated system and (ii) knowing who the participants of the evaluation are. Therefore, we decided to keep only two questions in the questionnaire of the first version of UXNator: “In what phase is your project?” and “Who is the evaluator of your project?”. These questions seek, respectively, to understand what stage the project is at and the profile of the evaluators who will use the recommended methods. Figure 2 shows the UXNator interface during the filtering process.

² https://uxnator.vercel.app

³ https://en.akinator.com


Figure 2: UXNator filtering process. Left: the first question; right: the second question.

Once the questions are answered, the tool returns a list of UX assessment methods in the form of small cards, each with a description and a link to the method’s page on the AllAboutUX website. As the tool is still at an early stage, we decided to use the AllAboutUX website as the tool’s database.
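To make the shape of this output concrete, the following is a minimal Python sketch of a result card that holds a method name, a description, and a link, rendered as a plain-text box. The class `MethodCard`, the function `render_card`, and the placeholder description are hypothetical; the paper does not describe UXNator’s implementation, and since the per-method AllAboutUX page paths are not given, the example only uses the site root.

```python
import textwrap
from dataclasses import dataclass


@dataclass(frozen=True)
class MethodCard:
    """Hypothetical card model: the fields mirror what the text says a card shows."""
    name: str
    description: str
    link: str  # link to the method's page on the AllAboutUX website


def render_card(card: MethodCard, wrap_at: int = 40) -> str:
    """Render a card as a plain-text box, wrapping the description."""
    lines = [card.name, *textwrap.wrap(card.description, wrap_at), f"More: {card.link}"]
    inner = max(len(line) for line in lines)  # box width fits the longest line
    border = "+" + "-" * (inner + 2) + "+"
    body = [f"| {line.ljust(inner)} |" for line in lines]
    return "\n".join([border, *body, border])


if __name__ == "__main__":
    card = MethodCard(
        name="Co-Discovery",
        description="<short description taken from the AllAboutUX entry>",
        link="https://www.allaboutux.org",  # site root; the real per-method path is not given
    )
    print(render_card(card))
```

Keeping the card as plain data, separate from its rendering, would let the same filtered list feed either a vertical list or a side-by-side layout.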

In a preliminary analysis of the methods available on AllAboutUX, we observed that not all methods cataloged on the website had sufficient documentation to guide their use or were related to the software evaluation context. Therefore, we analyzed the available methods to select those that UXNator would recommend. Methods were selected for the UXNator recommendation based on three criteria:

1. The method is related to software evaluation;
2. The description of the method on the website allows its use;
3. The site’s references describe how to use the method.

We used the first criterion to remove methods unrelated to the scope of the recommendation tool. Then, we used criteria 2 and 3 to identify whether the methods have documentation on how to use them, verifying whether it is possible to use each method with the description available on the website or with the reference paper that the website cites. With these criteria in mind, we listed the methods cataloged on the site in a spreadsheet and analyzed which criteria each method met. Finally, we eliminated two methods for not meeting the first criterion and 35 for not meeting criteria 2 or 3, resulting in 44 methods.
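The selection step can be sketched in Python as a single pass over the spreadsheet rows. This is a minimal sketch under two assumptions: the field names in `CatalogEntry` are hypothetical stand-ins for the spreadsheet columns, and a method is kept when it meets criterion 1 and at least one of criteria 2 and 3 (our reading of “with the description available on the website or with the reference paper”). The catalog rows in the usage are placeholders, not real AllAboutUX data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """One spreadsheet row; field names are hypothetical."""
    name: str
    software_related: bool         # criterion 1: related to software evaluation
    usable_from_description: bool  # criterion 2: the website description allows its use
    usable_from_references: bool   # criterion 3: the cited references describe its use


def curate(entries: list[CatalogEntry]) -> tuple[list[CatalogEntry], dict[str, int]]:
    """Return the kept entries and how many were removed for each reason."""
    kept: list[CatalogEntry] = []
    removed = {"criterion 1": 0, "criteria 2 and 3": 0}
    for entry in entries:
        if not entry.software_related:
            # Out of scope: checked first, so these never reach the documentation check.
            removed["criterion 1"] += 1
        elif not (entry.usable_from_description or entry.usable_from_references):
            # Assumed rule: either documentation source is enough to keep a method.
            removed["criteria 2 and 3"] += 1
        else:
            kept.append(entry)
    return kept, removed


if __name__ == "__main__":
    catalog = [  # placeholder rows, not real AllAboutUX data
        CatalogEntry("Method A", True, True, False),
        CatalogEntry("Method B", True, False, True),
        CatalogEntry("Method C", False, True, True),
        CatalogEntry("Method D", True, False, False),
    ]
    kept, removed = curate(catalog)
    print([e.name for e in kept])  # ['Method A', 'Method B']
    print(removed)                 # {'criterion 1': 1, 'criteria 2 and 3': 1}
```

Checking criterion 1 first follows the order described in the text, so each removed method is counted under exactly one reason, as in the reported tally of 2 and 35.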

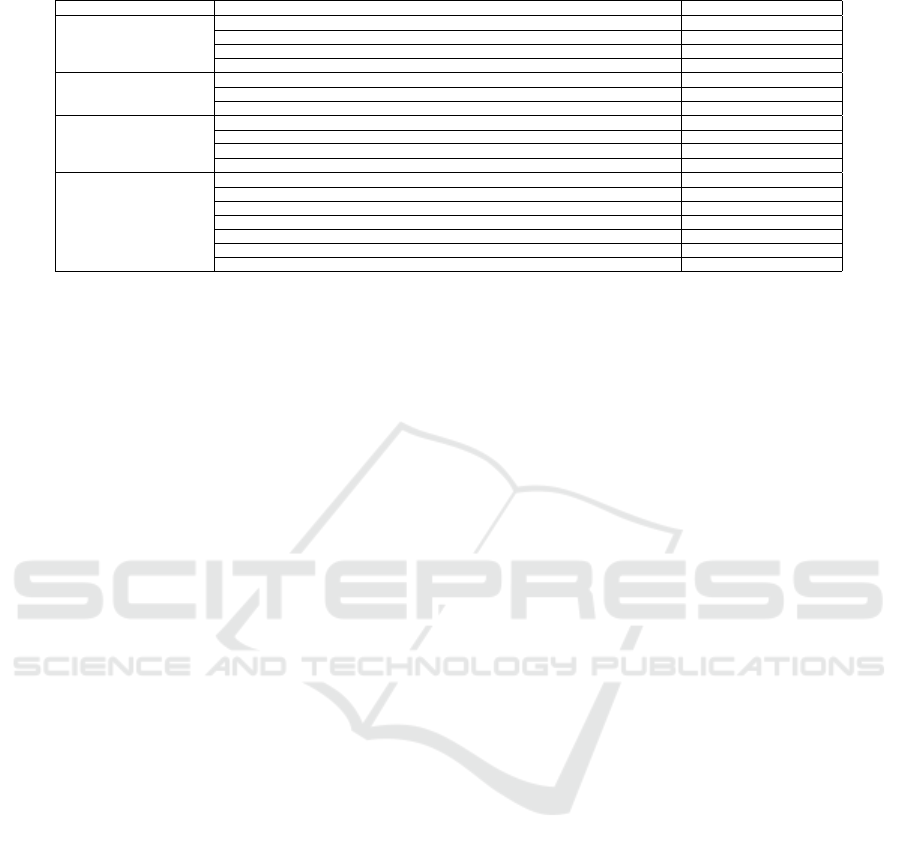

Table 1 illustrates how method filtering works, using a sample of ten methods. The second column shows the first filter, given by the question “In what phase is your project?”. To illustrate the filtering process, we consider “Product on the market” as the answer. This first filter removed three methods, as they did not apply to evaluating products available to the end user. Then, the second filter asks “Who is the evaluator of your project?”. Considering the answer “Pair of Users”, five more methods are removed from the recommendation, leaving two methods to be recommended for UX evaluation based on the information provided by the stakeholder. Once the methods are filtered, UXNator returns the list of recommended methods.
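The two-stage filter can be sketched in Python as successive narrowing of the candidate list, one question at a time. This is a minimal sketch, not UXNator’s actual code: the `Method` record, its `applies_to` tags, and the helper names are hypothetical. Because the paper only reports how the ten sample methods respond to the two answers in Table 1, each method below is tagged only with those two answer options; methods removed by the phase filter are also left untagged for the evaluator question, which Table 1 does not cover for them.

```python
from dataclasses import dataclass

PHASE = "In what phase is your project?"
EVALUATOR = "Who is the evaluator of your project?"
ON_MARKET = "Product on the market"
PAIR_OF_USERS = "Pair of Users"


@dataclass
class Method:
    name: str
    # For each question, the answer options this method is tagged with (hypothetical).
    applies_to: dict[str, frozenset[str]]


def tagged(name: str, on_market: bool, pair_of_users: bool) -> Method:
    """Build a method tagged only with the two answers that Table 1 exercises."""
    return Method(name, {
        PHASE: frozenset({ON_MARKET}) if on_market else frozenset(),
        EVALUATOR: frozenset({PAIR_OF_USERS}) if pair_of_users else frozenset(),
    })


def filter_stages(methods: list[Method], answers: dict[str, str]) -> list[list[str]]:
    """Apply one filter per answered question, in order.

    Returns the method names remaining before any filter and after each one.
    """
    remaining = list(methods)
    stages = [[m.name for m in remaining]]
    for question, answer in answers.items():
        remaining = [m for m in remaining if answer in m.applies_to.get(question, frozenset())]
        stages.append([m.name for m in remaining])
    return stages


# The ten sample methods of Table 1.
SAMPLE = [
    tagged("2DES", False, False),
    tagged("Affect Grid", True, False),
    tagged("Co-Discovery", True, True),
    tagged("Emocards", True, False),
    tagged("Experience Clip", True, True),
    tagged("Group-based Walkthrough", False, False),
    tagged("Property Checklists", False, False),
    tagged("This-or-that", True, False),
    tagged("UX Curve", True, False),
    tagged("UX Expert Evaluation", True, False),
]

if __name__ == "__main__":
    stages = filter_stages(SAMPLE, {PHASE: ON_MARKET, EVALUATOR: PAIR_OF_USERS})
    print([len(s) for s in stages])  # [10, 7, 2]
    print(stages[-1])                # ['Co-Discovery', 'Experience Clip']
```

Since each stage only removes methods, answering the questions in a different order would yield the same final recommendation; only the intermediate counts (here 10, 7, 2) would change.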

3.3 Study

We carried out a study with a Human-Computer Interaction (HCI) class of the Computer Science program at the Federal University of Amazonas.

The class consisted of 28 students divided into teams of 3 to 6 members to carry out an evaluation activity. The activity aimed to reinforce the topics of usability and UX assessment. To that end, we instructed the teams to conduct usability and UX assessments of two online teaching tools and then document their results and experiences in a report. Since the students had to conduct UX assessments as part of the activity, they used UXNator to support the choice of which UX evaluation methods to use.

Before making the tool available, we conducted a pilot test to ensure that UXNator returned the methods properly. Afterward, we presented the tool to the participants and explained how to use it.

In total, six teams completed the activity. After the participants agreed to the Consent Form, we collected the reports to perform a qualitative analysis of the data. We present the results of our analysis in Section 4.

4 RESULTS

In this section, we present the results concerning the use of UXNator. We performed a qualitative analysis of the data collected from the participants’ reports. Through this analysis, we identified suggestions for improving UXNator and positive aspects regarding its usefulness. Table 2 summarizes the categorization of the reports. In the following subsections, we describe the main results.

Table 1: The method filtering process.

Methods (sample of ten): 2DES, Affect Grid, Co-Discovery, Emocards, Experience Clip, Group-based Walkthrough, Property Checklists, This-or-that, UX Curve, UX Expert Evaluation

After “In what phase is your project?” (Answer: Product on the market): Affect Grid, Co-Discovery, Emocards, Experience Clip, This-or-that, UX Curve, UX Expert Evaluation

After “Who is the evaluator of your project?” (Answer: Pair of Users): Co-Discovery, Experience Clip

Recommended Methods: Co-Discovery, Experience Clip

4.1 Improvement Suggestions

4.1.1 Suggested Improvements for the Tool’s Filter

In our study, a few participants reported difficulties understanding the questions in the questionnaire and suggested changing them or including hints about the options. These difficulties suggest that the questions do not cater to people who lack in-depth knowledge of UX evaluation but may still want to use the tool.

Participants P4, P12, P18, and P20 had doubts about how to classify the phase of the project they were evaluating. Since the filter is a fundamental part of the tool, it must be adequate for users’ needs. We highlight quotes from P18 and P20.

“It is unclear what each stage of the project means (as conceptual and different from initial, for example).” - P18

“The lack of information about the project characterization phase leaves the user confused about the classification of his project.” - P20

Another important suggestion, pointed out by P18 and P20, is to rank the methods returned by the tool’s filter. Even though filtering reduces the number of methods, refining the results further would make it easier for the user to choose.

“How the methods are presented does not indicate which I should look at first or why I should choose one over another.” - P18

“There is no information on which method would be best for my project, and there does not seem to be any preference on which method is shown with priority.” - P20

4.1.2 Suggestions for Improving the Efficiency of Using the Tool

Regarding the efficiency of using the tool, participant P20 pointed out that the methods took time to load and that the lack of information about how the questionnaire is used caused confusion when navigating the platform. P18 noted that some effects and animations used to show the questionnaire options reduce the efficiency of use by introducing delays.

“The questionnaire tab does not have much information about its functionality and leaves the user in doubt whether there is the actual use of the tool or just another feedback questionnaire.” - P20

“A minor issue is that the animation to show the options takes time.” - P18

Although the above reports indicate that loading the methods was not a significant issue, it is important to ensure that users do not feel they are wasting their time during use. Moreover, improving efficiency improves the user’s perception of the tool.

4.1.3 Suggestions for Including Features

Although we filtered the methods available in UXNator to keep only those with minimal documentation on their use, some participants still suggested improvements in this regard. For example, participant P22 reported that UXNator helped select methods with details about their use. However, participant P23 indicated that, even with the documentation, more information still needs to be presented.

“Regarding the choices of UX methods in UXNator, it helped by showing the methods in general, but the choice was made mainly after checking the papers that reference the technique, as they show in more detail how it is applied.” - P22

“It would be nice if UXNator had more information about the technique.” - P23

Therefore, we intend to create our own repository of methods for UXNator. The repository will include more practical information on how to use each method, as well as examples of use.


Table 2: Main suggestions for improvement and Positive Aspects.

Category: Suggested improvements for the tool’s filter
F1. Filtering could be better. Participants: P2, P14, P23
F2. Quiz choices are confusing. Participants: P4, P12, P16, P17, P18, P20
F3. It would be nice if the techniques filtered by the tool were ranked to help with the choice. Participants: P18, P20, P26
F4. The platform should include more important questions. Participants: P2

Category: Suggestions for improving the efficiency of using the tool
U1. Slow loading techniques. Participants: P20
U2. Effects and animations that hinder usability. Participants: P18
U3. Tool page navigation is confusing. Participants: P20

Category: Suggestions for including features
E1. The platform could have a search bar. Participants: P5
E2. The level of difficulty of the technique could be shown. Participants: P11
E3. The platform does not show the number of techniques in each category. Participants: P3
E4. There is no documentation of the techniques available on the platform. Participants: P18, P20, P22, P23, P24

Category: Suggestions for improving the interface
I1. The interface could be more presentable or user-friendly. Participants: P3, P5, P16, P18, P20
I2. Difficulty reading text on the platform due to the color palette. Participants: P3, P5, P18
I3. The use of platform fonts is unstandardized. Participants: P3, P16
I4. Platform pages do not follow a pattern. Participants: P5
I5. The interface should bring more seriousness, be more formal. Participants: P11, P13, P15, P16
I6. The uncomfortable layout of illustrations. Participants: P16
I7. The display of filtered techniques cards should be more presentable. Participants: P16, P17, P18

4.1.4 Suggestions for Improving the Interface

Five participants suggested that the system’s screens could be more user-friendly and presentable (element I1 of Table 2). However, for participants P3 and P5, who suggested interface improvements, the aesthetics of the interface did not affect their perception of the tool’s usefulness.

“We use the UXNator system to filter the UX methods, thus facilitating our choices. I particularly liked this tool a lot.” - P3

“UXNator was extremely useful, as the system saves time spent looking for methods that do not have documentation. In addition, it is very advantageous for searching methods and filtering methods that do not fit the activity.” - P5

One aspect of the interface that could be improved is its aesthetic appearance. Participants indicated that giving the tool a more standard-looking interface could improve the perception of UXNator.

“UXNator needs to look more serious.” - P13

“I found the interface aesthetics very childish, considering the system’s target audience.” - P15

Finally, three participants commented on how the result of filtering the methods is presented (element I7 of Table 2). Currently, UXNator stacks the cards for the methods resulting from the filter vertically, one card per row. Participant P16 reported that, with this arrangement, navigability is compromised when many methods remain after applying the filters, as the user may have to scroll the screen several times to view all the recommended method cards. P16 indicated that a better arrangement would be to place the cards side by side so that the user can view more cards simultaneously without having to scroll much.

“Regarding the results returned, the data was well presented, but the choice of a single sample compromised the navigability. Perhaps the presentation would be better if the cards were placed side by side.” - P16

Additionally, participant P18 indicated that only two or three methods are visible in the interface at a time, so the methods that require scrolling to be viewed can be forgotten and not considered.

“Considering that only 2 or 3 methods are visible at any given time, the methods below are forgotten. I would not have looked at the rest if it were not for the activity.” - P18

Participant P17 indicated another improvement concerning the font size on the cards. According to P17, the small font size makes the presentation of the methods a little tiring, and this could be improved.

“I also think the presentation of the methods could be improved. I found it a little tiring because the letters are so small and close together.” - P17

4.2 Positive Aspects of UXNator

Our study aimed to evaluate UXNator as a tool to support the selection of UX evaluation methods. The improvement suggestions collected and described above are therefore essential for developing the next version. However, one of the main aspects we sought to verify with our study was whether this initial version already fulfilled the tool’s main objective.

Among the various benefits mentioned, we highlight the reports related to the tool’s objective of supporting the process of choosing UX methods for evaluation. We noticed that participants understood the purpose and usefulness of UXNator.

“UXNator is a very helpful tool for searching methods.” - P1

“We use the UXNator system to filter the UX methods, thus facilitating our choices.” - P3


Regarding the tool’s purpose, participants P5 and P7 also reported another benefit of filtering methods. They pointed out that the time spent choosing a technique is reduced, especially since UXNator only recommends methods with some documentation on how to use them.

“UXNator was extremely useful, as the system saves time spent looking for methods that do not have documentation.” - P5

“UXNator was excellent, both because it halved our possible choices and, after the choice, we could see how to apply the methods.” - P7

We can relate the improvement suggestions collected to the reports about the usefulness of UXNator. Some participants, despite the difficulties they encountered with the interface, still described UXNator as a tool with a very relevant purpose.

“UXNator does a lot of what it promises. It greatly facilitates the search for the ideal UX method to use...” - P11

“UXNator helped in filtering/selecting methods because it is a simple and intuitive tool.” - P14

“UXNator has a great concept and is well-implemented. Maybe it could improve the design for something more formal.” - P15

Participant P16’s report suggests that UXNator already achieves its main objective, as the tool guided this participant in the decision-making process of choosing UX evaluation methods.

“The information returned by the page was very useful in guiding me in choosing the three methods.” - P16

5 DISCUSSION

UX assessment plays an essential role in the quality of today’s software systems. Accordingly, much research in recent years has focused on proposing methods that support UX evaluation in different contexts (Pettersson et al., 2018).

Although the large number of UX evaluation methods in the literature can be considered positive, access to them needs to be made easier. There is a large gap between the methods available in the literature and the audience that performs UX evaluations.

Among the available repositories, the main one is AllAboutUX, which attempts to organize and classify the UX evaluation methods available in the literature. However, people with little experience or with specific evaluation needs may have difficulty finding a suitable method. The repository provides few details on how to use the methods, and it does not support the evaluator in selecting a technique through personalized recommendations based on the evaluator’s needs.

In this paper, we introduce UXNator, a web platform whose main goal is to recommend UX evaluation methods that meet the stakeholder’s evaluation interests. Although this is the first version, the study we conducted to evaluate its feasibility showed positive results concerning the tool’s objective. Even though we only recommended methods with some usage documentation, our study revealed that providing complete suggestions requires more practical details about the methods. The participants’ reports called for more detailed recommendations and revealed a research opportunity: creating a repository with standardized information. Indeed, such a repository would greatly benefit the UX community and, especially, future research.

Pettersson et al. (2018) recommend that, for more robust results in UX studies, data should be better integrated and structured; for example, improved cross-analysis of qualitative and quantitative data would allow for better results. In this sense, a tool that supports selecting different UX methods is important. UXNator can support selecting methods for different needs and development stages.

According to Saad et al. (2021), the main roadblocks that prevent startups from adopting UX evaluation methods during development are difficulties in understanding the meaning of UX and in finding practices and methods that can bring them valuable information. UXNator can be an alternative to help startups adopt UX evaluations, since it can reduce the time needed to research and select a technique.

6 CONCLUSION AND FUTURE WORK

In this paper, we present UXNator to support the selection of UX methods. Given the vast number of UX assessment methods available, a tool that helps narrow down the options based on the needs of each assessment is necessary.

To verify the feasibility of using UXNator, we conducted a study in the context of an HCI course. Twenty-eight students, who used UXNator as the standard tool for the course’s UX evaluation activity, participated in the study. We collected the participants’ perceptions of use and performed a qualitative analysis of the data obtained.

ICEIS 2023 - 25th International Conference on Enterprise Information Systems


Our main goal with this study was to investigate whether UXNator fulfilled its primary objective of recommending UX evaluation methods. In addition, we obtained valuable feedback on improvements that can be implemented in the next version of UXNator. As a main result, we noticed that most participants had a positive perception of UXNator's usefulness, especially when selecting which UX method to use in a specific evaluation.

In future work, we intend to improve UXNator and create our own repository of UX evaluation methods. We also intend to carry out a study with participants of different profiles.

ACKNOWLEDGEMENTS

We thank all the participants in the empirical study. The present work is the result of the Research and Development (R&D) project 001/2020, signed with the Federal University of Amazonas and FAEPI, Brazil, which has funding from Samsung, using resources from the Informatics Law for the Western Amazon (Federal Law No. 8.387/1991), and its disclosure is in accordance with article 39 of Decree No. 10.521/2020. This work was also supported by CAPES - Financing Code 001, CNPq process 314174/2020-6, FAPEAM process 062.00150/2020, and grant #2020/05191-2 from the São Paulo Research Foundation (FAPESP).

REFERENCES

Bevan, N., Carter, J., and Harker, S. (2015). ISO 9241-11 revised: What have we learnt about usability since 1998? In International Conference on Human-Computer Interaction, pages 143–151. Springer.

Chernev, A., Böckenholt, U., and Goodman, J. (2015). Choice overload: A conceptual review and meta-analysis. Journal of Consumer Psychology, 25(2):333–358.

Darin, T., Coelho, B., and Borges, B. (2019). Which instrument should I use? Supporting decision-making about the evaluation of user experience. In International Conference on Human-Computer Interaction, pages 49–67. Springer.

Ferreira, V. G. and Canedo, E. D. (2020). Design sprint in classroom: exploring new active learning tools for project-based learning approach. Journal of Ambient Intelligence and Humanized Computing, 11(3):1191–1212.

Hassenzahl, M. (2018). The thing and I: understanding the relationship between user and product. In Funology 2, pages 301–313. Springer.

Kieffer, S., Rukonic, L., de Meerendré, V. K., and Vanderdonckt, J. (2019). Specification of a UX process reference model towards the strategic planning of UX activities. In VISIGRAPP (2: HUCAPP), pages 74–85.

Kit, I. D. (2019). The human-centered design toolkit. IDEO. URL: https://www.ideo.com/work/human-centered-design-toolkit/ [accessed 2020-06-20].

Law, E. L.-C., Roto, V., Hassenzahl, M., Vermeeren, A. P., and Kort, J. (2009). Understanding, scoping and defining user experience: a survey approach. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 719–728.

Liu, X., He, S., and Maedche, A. (2019). Designing an AI-based advisory platform for design techniques. In Proceedings of the 27th European Conference on Information Systems (ECIS).

Meireles, M., Souza, A., Conte, T., and Maldonado, J. (2021). Organizing the design thinking toolbox: Supporting the requirements elicitation decision making. In Brazilian Symposium on Software Engineering, pages 285–290.

Nakamura, W., Marques, L., Rivero, L., Oliveira, E., and Conte, T. (2017). Are generic UX evaluation techniques enough? A study on the UX evaluation of the Edmodo learning management system. In Brazilian Symposium on Computers in Education (Simpósio Brasileiro de Informática na Educação - SBIE), volume 28, page 1007.

Pettersson, I., Lachner, F., Frison, A.-K., Riener, A., and Butz, A. (2018). A Bermuda triangle? A review of method application and triangulation in user experience evaluation. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, pages 1–16.

Rivero, L. and Conte, T. (2017). A systematic mapping study on research contributions on UX evaluation technologies. In Proceedings of the XVI Brazilian Symposium on Human Factors in Computing Systems, pages 1–10.

Russo, P., Costabile, M. F., Lanzilotti, R., and Pettit, C. J. (2015). Usability of planning support systems: An evaluation framework. In Planning Support Systems and Smart Cities, pages 337–353. Springer.

Saad, J., Martinelli, S., Machado, L. S., de Souza, C. R., Alvaro, A., and Zaina, L. (2021). UX work in software startups: a thematic analysis of the literature. Information and Software Technology, 140:106688.

Souza, A., Ferreira, B., Valentim, N., Correa, L., Marczak, S., and Conte, T. (2020). Supporting the teaching of design thinking techniques for requirements elicitation through a recommendation tool. IET Software, 14(6):693–701.

Vermeeren, A. P., Law, E. L.-C., Roto, V., Obrist, M., Hoonhout, J., and Väänänen-Vainio-Mattila, K. (2010). User experience evaluation methods: current state and development needs. In Proceedings of the 6th Nordic Conference on Human-Computer Interaction: Extending Boundaries, pages 521–530.
