Conversational Agents for Simulation Applications and Video Games

Ciprian Paduraru, Marina Cernat and Alin Stefanescu

Department of Computer Science, University of Bucharest, Romania

Keywords:

Natural Language Processing, Video Games, Active Assistance, Simulation Applications.

Abstract:

Natural language processing (NLP) applications are becoming increasingly popular today, largely due to recent

advances in theory (machine learning and knowledge representation) and the computational power required

to train and store large language models and data. Since NLP applications such as Alexa, Google Assistant,

Cortana, Siri, and ChatGPT are widely used today, we assume that video games and simulation applications

can successfully integrate NLP components into various use cases. The main goal of this paper is to show

that natural language processing solutions can be used to improve user experience and make simulation more

enjoyable. In this paper, we propose a set of methods along with a proven implemented framework that uses

a hierarchical NLP model to create virtual characters (visible or invisible) in the environment that respond to

and collaborate with the user to improve their experience. Our motivation stems from the observation that in

many situations, feedback from a human user during the simulation can be used efficiently to help the user

solve puzzles in real time, make suggestions, and adjust things like difficulty or even performance-related

settings. Our implementation is open source, reusable, and built as a plugin in a publicly available game

engine, the Unreal Engine. Our evaluation and demos, as well as feedback from industry partners, suggest that

the proposed methods could be useful to the game development industry.

1 INTRODUCTION

NLP is one of the most important components in

the development of human-machine interaction. It

studies how computers can be programmed to ana-

lyze and process a large amount of natural language

data. Some of the most challenging topics in natu-

ral language processing are speech recognition, natu-

ral language generation, and natural language under-

standing. According to literature and surveys, sim-

ulation applications and video games are one of the

most valuable sectors in the entertainment industry

(Baltezarevic et al., 2018). Our main contribution

to this area is to work with our industry partners to

identify and develop methods based on modern NLP

techniques that could potentially help the simulation

software and video games industry. The main prob-

lem we are addressing is how to help the player and

respond live to their feedback. The person or entity

assisting the user can be rendered virtually in the

simulation environment, i.e., as a non-playable char-

acter (NPC) or as a narrator/environmental listener.

Together with industry partners, several specific use

cases of NLP in the simulation application and game

development industries were investigated. The first category

investigated relates to the classical sentiment analysis

problem (Wankhade et al., 2022). An example of

this is when a user is playing with an NPC and indi-

cates, either through speech or text, that the difficulty

level of the simulation has some problems (e.g., too

difficult or not challenging enough). In this case, one

solution would be to dynamically adjust the difficulty

level to provide an engaging experience for the user.

Another concrete case: imagine the user is playing a

soccer game such as FIFA (footnote 1) and is disappointed by

a referee’s decision. The user might then react with

angry or unusual exclamations. The in-game referee could

detect this, react accordingly, and make

the feedback more severe or even penalize the user’s

behavior.

Moreover, we found that NLP techniques can

be used to create NPC companions that physically

appear in the simulated environment and can be

prompted by a voice or text command to help the user.

Concrete situations could be that the user is blocked

at an objective or cannot find the way to a needed lo-

cation. In this case, the NPC companion could under-

stand the user’s request and guide them to a desired

location or provide hints to solve various puzzles.

1 https://www.ea.com/en-gb/games/fifa

Paduraru, C., Cernat, M. and Stefanescu, A.

Conversational Agents for Simulation Applications and Video Games.

DOI: 10.5220/0012060500003538

In Proceedings of the 18th International Conference on Software Technologies (ICSOFT 2023), pages 27-36

ISBN: 978-989-758-665-1; ISSN: 2184-2833

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)


Another source of frustration for users when in-

teracting with simulated environments is that they

cannot understand various designed mechanics. This

problem is common in the industry and causes users

to abandon the applications before developers can

generate any revenue or enough game satisfaction. In

this case, we see NLP as a potential solution where the

user can ask questions that a chatbot can answer live

to help them. Concrete examples could be questions

about healing mechanisms, finding different items in

a given area, things needed or missing to achieve certain

goals, etc.

The novelty of our work is that it uses modern

NLP techniques to address the above mentioned prob-

lems. We summarize our contributions below.

• Identify concrete problems in simulation applica-

tions and video games that can be solved with

NLP techniques, together with industry partners.

• A reusable and extensible open source frame-

work to help simulation and game developers

add NLP support to their products. The solution

we provide at https://github.com/AGAPIA/NLPForVideoGames

is designed as a plugin for

a game engine commonly used in both industry

and academia, the Unreal Engine (footnote 2). For the core

components, we use recent deep learning methods

from the NLP literature, which are suitable

for the real-time inference that today’s applications

require. User input can be either in the form of

voice or text messages. For evaluation purposes, a

demo is built on top of the framework. Alongside the

repository, demo videos are available

for voice commands at https://youtu.be/Z0JqyTO724M

and for text commands at

https://youtu.be/v2Ls9pboXxc.

• Identify some best practices and specifics for ap-

plying NLP to simulation software and video

games.

The rest of the paper is organized as follows. Sec-

tion 2 presents use cases of NLP application in vari-

ous other industries that provide similar solutions in

a context different from ours. Section 3 presents the

theoretical and technical methods we propose within

our framework for using NLP in video games and

simulation applications. The evaluation from a quan-

titative and qualitative perspective, along with details

about our setup, observations, and datasets are pre-

sented in Section 4. The final section presents our

conclusions and ideas for future work.

2 https://www.unrealengine.com

2 RELATED WORK

As described in the review paper (Allouch et al.,

2021), Conversational Agents (CAs) are used in many

fields such as medicine, military, online shopping, etc.

These agents are usually virtual agents that attempt

to engage in conversation with interested humans and

answer their questions, at least until they receive in-

formation from them, which is then passed on to real

human agents. To the authors’ knowledge, however,

there is no previous work that uses modern NLP tech-

niques to achieve the goals we seek in the area of sim-

ulation applications and/or game development. While

the literature for the use of NLP for the defined pur-

pose of this project is new, we establish our founda-

tions by following or referencing existing work in the

literature and transferring, as much as possible, the

methods used by CAs from other domains.

An overview of the use of CAs in healthcare is

presented in (Laranjo et al., 2018). The work in

(Dingler et al., 2021) explores CAs for digital health

that are capable of delivering health care at home.

Voice assistants are built with context-aware capabili-

ties to provide health-specific services. In (Sezgin and

D’Arcy, 2022), the authors explore how CAs can help

collect data and improve individual well-being and

healthy lifestyles. The use of CAs has also been ap-

plied to specific topics such as assessing and improv-

ing individuals’ mental health (Sedlakova and Trach-

sel, 2022) and substance use disorders (Ogilvie et al.,

2022).

Another interesting use case of CAs is in emer-

gency situations, as explored in (Stefan et al., 2022),

which describes the requirements and design of ap-

propriate agents in different contexts. An adjacent

remark is made by (Schuetzler et al., 2018), which

shows that people are more willing to discuss with vir-

tual CAs, especially sensitive information, than with

real human personnel.

CAs have also been used for gamification pur-

poses. In (Yunanto et al., 2019), the authors propose

an educational game called Turtle trainer that uses an

NLP approach for its non-playable characters (NPCs).

In the game, NPCs can automatically answer ques-

tions posed by other users in English. Human users

can compete against NPCs, and the winner of a round

is the one who answered the most questions correctly.

While their methods for understanding and answer-

ing questions are based on classical NLP methods,

we follow their strategy of evaluation based on qual-

itative feedback from two perspectives: (a) How do

human users feel about how well their competitors,

i.e., the NPCs, understand and answer the questions,

(b) Does the presence of NPCs in this form repre-


sent a greater interest for the learning game itself? A

common platform for teaching different languages is

Duolingo. With its support, various learning games,

e.g., language learning through gamification (Mun-

day, 2017), and machine learning-based methods for

performance testing are developed. Using the plat-

form itself and NLP methods, the authors proposed

the use of automatically generated language tests that

can be graded and psychometrically analyzed without

human effort or supervision.

3 METHODS

3.1 Overview

The inference process starts with a user message and

ends with an application-designated component that

resolves the query made at game runtime, as shown

in Figure 1. The first part is to detect the type of mes-

sage and translate it into a text format. Input from the

user side can be both voice and entered text messages.

For speech recognition and synthesis, a locally instan-

tiated model based on the DeepPavlov suite (Burtsev

et al., 2018) is used. The output is then sent to a pre-

processing component that uses classic NLP opera-

tions required for other modules, such as removing

punctuation, making the text lowercase, adding beginning

and ending markers for sentences, etc., as detailed

for the BERT model (Devlin et al., 2018) and explained

below in this section. Spelling mistakes and

abbreviations for the text are also taken into account

for the BERT input (Hu et al., 2020).
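
The preprocessing operations listed above can be illustrated with a minimal sketch. It is not the framework's actual code: the function name `preprocess`, the sentence-splitting rule, and the whitespace tokenization are assumptions made for illustration, and sub-word tokenization is left out.

```python
import re

CLS, SEP = "[CLS]", "[SEP]"  # BERT-style start and sentence-end markers


def preprocess(message: str) -> list[str]:
    """Lowercase, strip punctuation, and add sentence markers (illustrative only)."""
    # Split into sentences on terminal punctuation before removing it.
    sentences = [s for s in re.split(r"[.!?]+", message.lower()) if s.strip()]
    tokens = [CLS]
    for sentence in sentences:
        # Keep only letters, digits, apostrophes, and whitespace.
        cleaned = re.sub(r"[^a-z0-9'\s]", " ", sentence)
        tokens.extend(cleaned.split())
        tokens.append(SEP)
    return tokens


tokens = preprocess("Show me where the potion shop is!")
```

The resulting token list starts with the `[CLS]` marker and closes every sentence with `[SEP]`, which is the input layout the intent and slot model described later expects.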

3.2 Foundational NLP Models

We first motivate the choice of BERT (Devlin et al., 2018)

over GPT (Brown et al., 2020) as the core of our

methods. In short, both models are based on the Transformer

architecture (Vaswani et al., 2017) and are trained

on large plaintext corpora with different pre-training

strategies that the reader can explore in the recommended

literature. Probably the fundamental architectural

difference between the two is that, in Transformer

terms, BERT corresponds to the encoder part, while

GPT-3 corresponds to the decoder. Therefore, BERT can be more

easily used to refine its encoding and further learn

by appending output layers to produce desired cus-

tomized functions and categories. This architectural

implication of BERT makes it suitable for domain

specific problems, which is also our target case. Each

application has its own data, text, locations, charac-

ters, etc., and the underlying NLP model has to adapt

to these individual use cases. This point of view is

also supported by the work in RoBERTa (Liu et al.,

2019), which fine-tuned BERT to learn a new lan-

guage data set, confirming that the model is better

suited for downstream tasks. Since BERT is pre-trained

on large-scale text using two strategies, i.e.,

masked language modeling and next sentence prediction, it can

provide contextual features at sentence level. With this

benefit and the fact that it can be easily customized for

custom datasets, the base model chosen can be used

for tasks like intent classification and slot filling, two

of the main tasks we need for our goal.

3.3 Intent Classification and Slot Filling

Intent classification is the assignment of a text to a

particular purpose. A classifier analyzes the given

text and categorizes it into intentions of the user. Fill-

ing slots is a common design pattern in language de-

sign, usually used to avoid asking too many or de-

tailed questions to the user. These slots represent a

goal, starting point, or other piece of information that

needs to be extracted from a text. A shared model

performs multiple tasks simultaneously. Because of

its structure, the intent classification model can easily

be extended to a shared model for classifying inten-

tions and filling slots (Chen et al., 2019b).

To understand a user’s message, a model is used

that processes the data and provides two outputs: (a)

the intent of the message, (b) the slots that represent

parts of the text that can be semantically categorized

and make connections with the application’s knowl-

edge base. To this end, the framework relies primar-

ily on the methods described by (Chen et al., 2019b),

which provide a model for computing the two re-

quired outcomes simultaneously. One feature we have

identified as useful, at least from a real-time simula-

tion perspective, is replacing the WordPiece tokenizer

from the original model with a faster version with to-

kenization complexity O(n) (Song et al., 2021).
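
For readers unfamiliar with WordPiece, the sketch below shows the standard greedy longest-match-first splitting of a single word. It is only a baseline illustration: its worst case is quadratic in the word length, and the faster linear-time variant cited above is not reproduced here. The toy vocabulary in the usage line is hypothetical.

```python
def wordpiece_tokenize(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedy longest-match-first split of one word into vocabulary pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry a ## prefix
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces


toy_vocab = {"heal", "##ing", "##th", "potion"}  # hypothetical vocabulary
pieces = wordpiece_tokenize("healing", toy_vocab)
```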

This task corresponds to the SemanticExtraction

block from Figure 1 and is described in the following

text with examples and the connection to our current

implementation.

The Intent. Describes the category of the message.

This is formalized as a set, $I = \{I_1, I_2, \ldots, I_{n_i}\}$. This

can be customized depending on the needs of the de-

veloper. For illustration, three types of concrete in-

tentions are used in our current demo attached to the

repository.

• SentimentAnalysis

The message sent by the user is considered as gen-

eral live feedback to the application, e.g. too dif-

ficult or not challenging enough, graph too low.

In return, the application should take appropriate


[Figure 1 diagram; node and edge labels: User, Message, Is using voice? (Yes/No), Speech Recognition, Recognize message, Message as text, NLP Preprocessing, Semantic Extraction, Solve Message]

Figure 1: Overview of data flow from user message to execution of in-application requests. In the first part, the message is

always converted into a textual representation. Then a series of preprocessing operations are performed so that the output text

is compatible with the needs of the next component in the flow. The SemanticExtraction component extracts the intent of the

message and makes the semantic connections with the application’s custom database to understand what actions are required

to provide feedback on the user message. Details on each individual component are presented throughout Section 3.

action.

• AnswerQuestion

This category includes user questions related to

the application. Some common examples are

questions about mechanisms that are not clear to

the user. This is a way for the user to request help

with common things, which can increase their en-

gagement with the application.

• DoActionRequest

In general, this category is reserved for messages

addressed to companion NPCs, in which the user asks

for help in solving various tasks. Typical examples

in simulation applications and games include

asking for help in solving a puzzle or challeng-

ing a character, pointing the way to a certain lo-

cation, or assisting the user with various actions.

This kind of category is usually divided into dif-

ferent sub-actions, e.g. in our demo into three

sub-actions: FollowAction - help in finding a re-

gion or place, i.e. the companion NPC shows the

way (see our supplementary video examples in the

suggested links in Section 1), EliminateEnemy -

ask the NPC to eliminate a particular enemy NPC

or user, and HealSupport - ask the NPC to do

whatever it takes to improve the user’s health in

the virtual simulated environment.

The Slots. Describes the slots in the message that

are important for understanding their connections to

the simulation environment. For each identified slot,

its category type and corresponding values are output

from the message. The slot types form a set derived

from the Inside-Outside-Beginning (IOB or BIO in

the literature) methodology (Zhang et al., 2019). In

this method, B marks the beginning of a sequence of

words belonging to a particular slot category, I marks

the continuation of words in the same slot, and O

marks a word that is outside an identified slot cate-

gory. Each of the words in the message is assigned

one of these three markers. Examples can be found

in Listing 1. The set of slots is further formalized

as $S = \{S_1, S_2, \ldots, S_{n_s}\}$. Note that different intentions

and multiple semantic parts of different B-types may

occur in the same sentence (especially in longer sen-

tences). In this case, the sentence is split into simpler

sentences as much as possible, so that each sentence

has a different intention. Each of the propositions/in-

tentions and their associated slots are passed along as

different messages.

Example 1:
  - Player input text: "Show me where the potion shop is"
  - Intent: FollowAction
  - Slots: [O O O O B-location I-location O]

Example 2:
  - Player input text: "Find me anything to get my health fixed cause I will be over soon".
  - Intent: HealSupport
  - Slots: [O O O O B-heal I-heal I-heal I-heal O O O O O O]

Listing 1: Examples of two user inputs and their classified intents and slots.
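
To make the tagging scheme concrete, the following sketch groups per-word IOB tags into slot values, using the first example of Listing 1 as input. The function name `decode_slots` and the output format are illustrative assumptions, not part of the framework's API.

```python
def decode_slots(words: list[str], tags: list[str]) -> list[tuple[str, str]]:
    """Group IOB-tagged words into (slot_type, text) pairs."""
    if len(words) != len(tags):
        raise ValueError("each word needs exactly one tag")
    slots, current_type, current_words = [], None, []
    for word, tag in zip(words, tags):
        if tag.startswith("B-"):
            # A new slot begins; close the previous one if it is still open.
            if current_type is not None:
                slots.append((current_type, " ".join(current_words)))
            current_type, current_words = tag[2:], [word]
        elif tag.startswith("I-") and current_type == tag[2:]:
            current_words.append(word)
        else:
            # "O" or an I- tag without a matching open slot closes the current slot.
            if current_type is not None:
                slots.append((current_type, " ".join(current_words)))
            current_type, current_words = None, []
    if current_type is not None:
        slots.append((current_type, " ".join(current_words)))
    return slots


words = "Show me where the potion shop is".split()
tags = ["O", "O", "O", "O", "B-location", "I-location", "O"]
slots = decode_slots(words, tags)
```

The extracted slot text ("potion shop" with type `location`) is what would then be matched against the application's knowledge base.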

The classification of intentions and slots follows

the work in (Song et al., 2021). We briefly describe

the classification methods and mechanisms behind

it. First, as required for the BERT (Devlin et al.,

2018) model, the user-input text message is tagged

with markers indicating the beginning of a question

([CLS]) and the end of each sentence ([SEP]). We denote

the input sequence as $x = (x_1, \ldots, x_T)$, where $T$ is

the sequence length. This is then processed by BERT

(Figure 2), which outputs a hidden state representation

for each input token, i.e., $H = (h_1, \ldots, h_T)$. Given that

this is a bidirectional model and the fact that the first

token is always the injected [CLS] marker, the intent

of the question can be determined by calculating the

probability of each intent category $i \in I$ according to

Eq. 1. This categorical

distribution can then be sampled to determine the

intent of each question at runtime.

$$y^i = \mathrm{softmax}(W^i h_1 + b^i) \qquad (1)$$

For slot prediction, all hidden states must be con-

sidered when they are fed into the softmax layer so


[Figure 2 diagram; node and edge labels: Message processed for BERT (x), BERT encoder, x and H, Joint intent and slots model, Intent, Slots, AppActionTranslator (based on a behavior tree), AppKnowledgeDB (use), Semantic extraction]

Figure 2: The SemanticExtraction component from Figure 1 in detail. The input is a preprocessed sequence x of tokens,

which is encoded into a sequence of hidden states by the model BERT (Devlin et al., 2018). Both inputs are passed to the joint

classification model for intention and slots (Chen et al., 2019b). A semantic correlation is then made between the database

knowledge provided by the developer, specific to each application, and the identified slots. The result of this correlation is a

tree of tasks that must be solved by the application in order to provide appropriate feedback. The subcomponents are explained

in more detail in Section 3.3.

that the distribution can be calculated as shown in Eq.

2.

$$y^s_n = \mathrm{softmax}(W^s h_n + b^s), \quad n \in 1 \ldots n_s \qquad (2)$$

The goal of the training phase is to maximize

the conditional probability of correct classification of

joint slots and intent (Eq. 3), in a supervised manner

using the database of application knowledge created

as mentioned in Section 4. The BERT model is

also fine-tuned on this objective by minimizing

the cross-entropy loss.

$$p(y^i, y^s \mid x) = p(y^i \mid x) \prod_{n=1}^{n_s} p(y^s_n \mid x) \qquad (3)$$
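
The three equations can be read together as follows: a softmax over the [CLS] hidden state gives the intent distribution, a softmax over each token hidden state gives a slot distribution, and the training objective is the product of the corresponding probabilities. The sketch below computes the logarithm of that product in plain Python. All names, shapes, and the split into `cls_state` and `token_states` are assumptions for illustration; in practice the hidden states come from the BERT encoder and the weights are learned.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def affine(weights: list[list[float]], bias: list[float], h: list[float]) -> list[float]:
    """Compute W h + b for one hidden state h."""
    return [sum(w * x for w, x in zip(row, h)) + b for row, b in zip(weights, bias)]


def joint_log_prob(cls_state, token_states, w_i, b_i, w_s, b_s, intent, slots):
    """log p(y^i, y^s | x) = log p(y^i | x) + sum_n log p(y^s_n | x)."""
    # Eq. 1: the intent distribution uses the hidden state of the [CLS] token.
    intent_dist = softmax(affine(w_i, b_i, cls_state))
    log_p = math.log(intent_dist[intent])
    # Eq. 2: one slot distribution per token hidden state.
    for h_n, slot in zip(token_states, slots):
        slot_dist = softmax(affine(w_s, b_s, h_n))
        log_p += math.log(slot_dist[slot])
    return log_p  # Eq. 3 in log space; its negation is the cross-entropy loss


# Toy example: 2-dimensional hidden states, 3 intents, 2 slot labels, zero weights.
log_p = joint_log_prob(
    cls_state=[1.0, 2.0],
    token_states=[[0.5, 0.5], [1.0, -1.0]],
    w_i=[[0.0, 0.0]] * 3, b_i=[0.0] * 3,
    w_s=[[0.0, 0.0]] * 2, b_s=[0.0] * 2,
    intent=1, slots=[0, 1],
)
```

Maximizing this quantity over the training set is equivalent to minimizing the summed cross-entropy of the intent and slot predictions.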

The proposed framework architecture provides a

way to bring in custom knowledge bases for applications,

which generally differ between developers

and titles. This is also shown in Figure 2, where

the AppKnowledgeDB component is used for this pur-

pose by matching identified slot categories, texts, and

actions that need to be performed for the application.

The component that semantically transitions from slot

information to actions to be performed in the applica-

tion is called AppActionTranslator. Its implementa-

tion in our current framework is based on a behav-

ior tree (Paduraru and Paduraru, 2019), which can be

adapted or extended with various methods by any ap-

plication. The method used on the game side can be

as simple as an expert system judging by the intents,

slots and the strings attached to them that relate to the

game units and actions, but behavior trees were pre-

ferred by default to have a better semantic description.

At the lowest level, a Levenshtein distance (Miller

et al., 2009) is used for string comparisons to identify

the application’s slot data and knowledge base. When

processing the inputs, the model assigns a probabil-

ity of match between the application knowledge and

the identified slots. If this probability does not exceed a

threshold set by the developer, the framework provides

feedback that the message was not understood. In the

current implementation, a threshold of 0.5 was used,

and the identification score was based on Levenshtein

distance and averaged across slots.
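
A minimal sketch of the string-matching step described above follows. The paper states only that the score is based on Levenshtein distance, averaged across slots, and compared with a threshold of 0.5; the normalization in `similarity` and the names `match_slots` and `knowledge` are assumptions made for this illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance via the classic two-row dynamic program."""
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def similarity(a: str, b: str) -> float:
    """Assumed normalization: 1.0 for identical strings, 0.0 when every character differs."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def match_slots(slot_texts: list[str], knowledge: list[str], threshold: float = 0.5):
    """Return the best knowledge-base entry per slot, or None if not understood."""
    if not slot_texts or not knowledge:
        return None
    best = [max(knowledge, key=lambda entry: similarity(text, entry)) for text in slot_texts]
    # Average the per-slot scores and compare with the developer-set threshold.
    score = sum(similarity(t, e) for t, e in zip(slot_texts, best)) / len(best)
    return best if score > threshold else None


matched = match_slots(["potion shp"], ["potion shop", "armory"])
```

Here a typo in the slot text still resolves to the "potion shop" entry, while an unrelated string falls below the threshold and yields `None`, which corresponds to the "message not understood" feedback.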

3.4 Question-Response Model

To answer user questions, the models and tools available

in the DeepPavlov library (footnote 3), called Knowledge

Base Question Answering, are adapted to our requirements

and reused. In short, the strategy used by the

tools is to provide a ready-to-use general question answering

model based on the large Wikidata corpus

(Vrandečić and Krötzsch, 2014). Then, the library

allows the insertion of custom knowledge represen-

tations to enable custom retraining/fine-tuning of the

existing models. At the core of the methods, the same

BERT model is reused to perform the following oper-

ations:

• Recognize a query template from the user mes-

sage. Currently there are 8 categories in our

framework. This is also extensible on the devel-

oper side. The model behind it is based on BERT.

• Detect entities in the message and their associated

strings. Recognized entities are linked to

the entities contained in the knowledge base. Top-

k matches are evaluated based on Levenshtein dis-

tance and stored. The same BERT model is be-

hind the operation.

• Rank the possible relationships and paths in the

set of recognized and linked entities. Thus, this

step provides an evaluation of the valid combi-

nations of entities and semantic relations. For

this step, modified from the original version for

our needs of better linking of entities and a user-

defined knowledge base, we use the methods of

BERT-ER (Chatterjee and Dietz, 2022).

3 https://docs.deeppavlov.ai/en/master/features/models/kbqa.html


• A generator model, based at its core on BERT, is

used to populate the answer query templates. The

slots are filled with the candidate entities and re-

lations found in the previous steps.

4 EVALUATION

This section presents the design used for the evalu-

ation, the creation of the dataset used for the train-

ing, the results of the quantitative and qualitative eval-

uation using automatic and human perception tests,

and finally performance-related aspects to demon-

strate the usefulness of the proposed methods.

4.1 Experimental Design and Discussion

The framework implementation and the demo based

on it work as a plugin in both Unreal Engine ver-

sions 4 and 5 and are available at https://github.com/

AGAPIA/NLPForVideoGames. Examples from the

demo can be viewed in our repository and Figures 3,

4, and 5. The architectural decision to use a plugin

was made to achieve separation of concerns and mini-

mal dependencies between the application implemen-

tation and the proposed framework. During develop-

ment and experimentation, Flask (footnote 4) was used to quickly

switch between different models and parts, parame-

ters used for training or inference, etc. In the produc-

tion phase and in our evaluation phase, the models

were deployed locally and accessed through Python

bindings to C++ code, since the engine source code

specific to the application implementation was writ-

ten in C++.

Figure 3: Demo capture of the user requesting details of

self-healing either by speech or text, and then asking the

NPC companion to help them find the healing location.

Note that for speech input for debugging purposes, the un-

derstood message is also displayed as text.

In our evaluation, we used the BERT-Base English

4 https://flask.palletsprojects.com/en/2.2.x

Figure 4: A similar message exchange as in Figure 3, this

time with the request for the teleport action.

Figure 5: The user asks for details about some quests he

can play with the current level, and then asks the NPC to

accompany them there.

uncased model (footnote 5), which has 12 layers, a hidden

size of 768, and 12 self-attention heads. To fine-tune the

model for the common goal of classification and slot

filling, the maximum sequence length was set to 30

and the batch size to 128. Adam (Kingma and Ba,

2014) with an initial learning rate of 5e-4 is preferred

as the optimizer. The dropout probability was set to

0.1. On our custom dataset provided in the demo,

the model was then fine-tuned for approximately 1000

epochs. The threshold for confidence is set to 0.5 in

our evaluation, meaning that the output of the behav-

ior tree must be deemed correct with a probability of

at least 0.5, otherwise the feedback from the applica-

tion side is that the user’s message is not understand-

able.

Fine-Tuning and Transfer Learning. As described

in Sections 3.3 and 3.4, the methods proposed in

our work are divided into several small modules.

Fine-tuning by reusing parts of the existing state-of-

the-art model architecture and pre-trained parameters

on publicly available datasets helps considerably in

answering questions. Without most of the knowledge

coming from large-scale corpora, the text answers

generated by the application looked unnatural.

Therefore, little fine-tuning is required for question-

answering models or entity linking models to perform

5 https://github.com/google-research/bert


well. On the other hand, more fine-tuning is required

for the models used to classify messages in query

masks or to identify the entities in the messages. This

is necessary because the semantic information for un-

derstanding user messages must come primarily from

the application knowledge bases, rather than from

Wikidata or other public datasets that were used to

pretrain the models.

Hierarchical Contexts. We decided to add another

layer for both identifying intentions/slots and for the

models to answer questions. This came out of our

evaluation process, where we found that there were

a few different contexts in the application that could

improve feedback results. A specific example from

our demo: when the user is in the shopping area in

the simulated environment, a context variable is set

to shopping. This information was provided in the

training dataset and considered as input to each of the

models during training or fine-tuning. The additional

information is helpful in interpreting simple questions

whose meaning depends on where the user is,

e.g., they might ask "What would you recommend

I buy from here?" The answer to this question could

vary depending on the context, e.g. shopping oppor-

tunities, locations of craft items, a simple map section

with items that can be collected, etc.
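
One simple way to feed such a context variable to a model is to prepend a dedicated marker token to the input sequence. The paper does not specify how the context is encoded, so the sketch below is only one plausible option; apart from `shopping`, the context names and the `[CTX_...]` token format are hypothetical.

```python
CONTEXTS = {"shopping", "combat", "exploration"}  # only "shopping" appears in the demo description


def with_context(message_tokens: list[str], context: str) -> list[str]:
    """Prefix the token sequence with a context marker such as [CTX_SHOPPING]."""
    if context not in CONTEXTS:
        raise ValueError(f"unknown context: {context}")
    return [f"[CTX_{context.upper()}]"] + message_tokens


model_input = with_context(["what", "would", "you", "recommend", "i", "buy"], "shopping")
```

With this encoding, the same question produces different model inputs in different areas of the simulated environment, which lets the fine-tuned model learn context-dependent answers.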

4.2 Datasets Used

The dataset was created by 15 volunteer students

from the University of Bucharest during their undergraduate

studies. We use an 80/20 split

between training and evaluation datasets. To train the

models containing slots and intentions on our demo,

the dataset of examples looks like the examples in

Listing 1. In total, there were 1500 pair examples,

split between 8 contexts, and almost the same num-

ber for each of the three classes (SentimentAnalysis,

AnswerQuestion, and DoActionRequest).
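
As a worked example of the 80/20 split, 1500 examples yield 1200 training and 300 evaluation examples. The sketch below shows one way to produce such a split; the shuffling, the fixed seed, and the function name are assumptions, since the paper does not describe how examples were assigned to each part.

```python
import random


def split_dataset(examples: list, train_fraction: float = 0.8, seed: int = 0):
    """Shuffle a copy of the examples and split it into train and evaluation parts."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


train_set, eval_set = split_dataset(list(range(1500)))
```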

For the AnswerQuestion category, each example

also contained a sequence of words that described the

desired answer as text from the annotator’s perspec-

tive. For the other two categories, the example con-

tained the specific application action to be performed

in the demo application with numeric IDs from 1

to 5. Specifically, we had two actions in our demo

for the SentimentAnalysis case: change the difficulty

level and show instructions for performing various

contextual operations. For the DoActionRequest, we

use the three subcategories mentioned in Section 3.3:

FollowAction, EliminateEnemy, HealSupport.

As an aside, participants were asked to include ty-

pos and abbreviations in their texts so that the models

could adapt to them as well.

4.3 Quantitative Evaluation

The quantitative assessment of the joint model is

based on the two outputs of the model, i.e., intentions

and slot identification. For intentions, common met-

rics such as precision, recall, and F1 score are used,

while for slots, only the F1 score is useful due to the

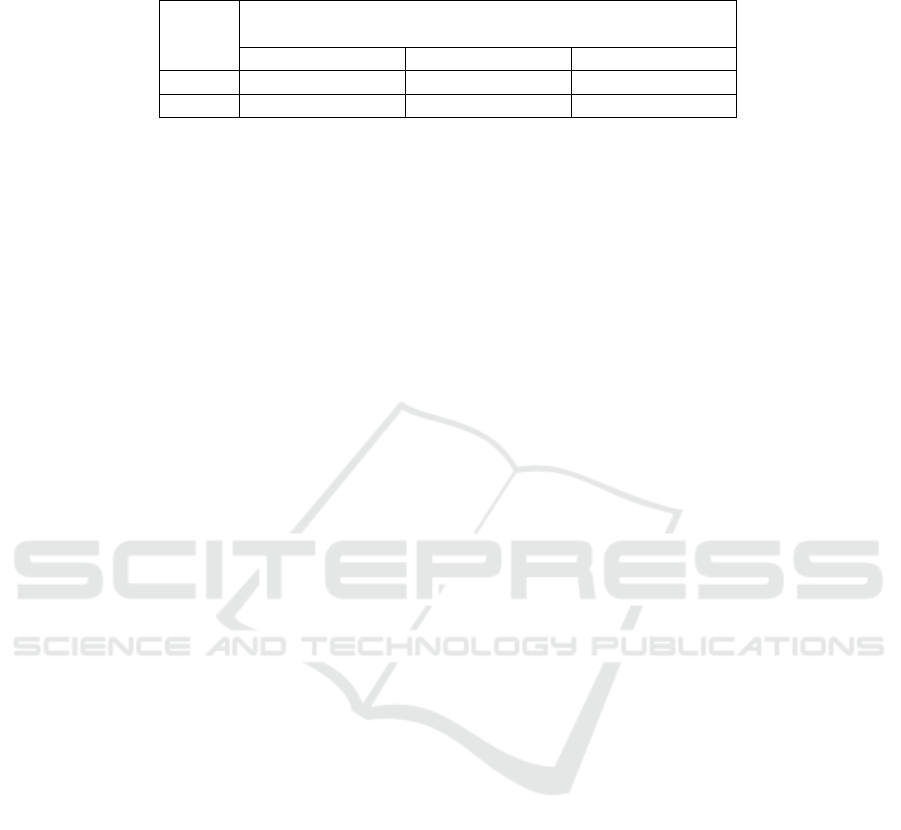

highly imbalanced data. As Table 1 shows, the in-

tent classification model manages to give the correct

answers in most cases, with an overall lower scoring

value for the DoActionRequest, since there is a more

diverse set of actions in the training set.

For the slot classification, where the models were

re-trained to better fit the application knowledge

database, as explained above, an F1 score of 89.621%

is obtained.
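
The F1 values reported in Table 1 follow directly from the precision and recall values, since F1 is their harmonic mean. The short check below recomputes them; the dictionary layout and variable names are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) per intent category, as reported in Table 1.
table_1 = {
    "AnswerQuestion": (0.91744841, 0.978),
    "SentimentAnalysis": (0.89811321, 0.952),
    "DoActionRequest": (0.95881007, 0.838),
}
f1_by_intent = {name: f1_score(p, r) for name, (p, r) in table_1.items()}
```

The lower F1 for DoActionRequest comes from its lower recall: its precision is the highest of the three categories.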

The metric METEOR (Banerjee and Lavie, 2005)

is used to score the question response. Since this met-

ric comes from the field of machine translation, it can

also be easily adapted for question answer scoring.

Briefly, the idea behind it is to match the sequence

of words in the (correct) target answer with the pre-

dicted answer, taking into account stemming and syn-

onymy matching between words. The score is formed

from a harmonic mean of precision and recall, with

more weight given to recall. In addition, the method

penalises answers whose matched words do not form

contiguous chunks of the target text, as contiguity is

natural to the human comprehension process. On our

dataset for the AnswerQuestion category, the METEOR

score was 0.7095, a value which, according to the

literature (Chen et al., 2019a), indicates that the

answers look almost natural.
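The scoring rule described above can be sketched as follows, assuming the original formulation of Banerjee and Lavie (2005) with its default constants. The paper does not state which METEOR variant or parameters were used, so this is illustrative only. Word matching (including stemming and synonymy) is assumed to have been done beforehand, so the hypothetical function below takes the resulting counts as input.

```python
def meteor_score(matches: int, hyp_len: int, ref_len: int, chunks: int) -> float:
    """METEOR score from unigram-match counts (original 2005 formulation).

    matches: number of matched unigrams between prediction and target
    hyp_len: number of unigrams in the predicted answer
    ref_len: number of unigrams in the target answer
    chunks:  number of contiguous matched chunks in the alignment
    """
    if matches == 0:
        return 0.0
    precision = matches / hyp_len
    recall = matches / ref_len
    # Harmonic mean that weights recall nine times more than precision.
    f_mean = 10 * precision * recall / (recall + 9 * precision)
    # Fragmentation penalty: more chunks for the same matches means a lower score.
    penalty = 0.5 * (chunks / matches) ** 3
    return f_mean * (1 - penalty)


# Same six matched words, aligned as one contiguous chunk versus three chunks.
print(meteor_score(matches=6, hyp_len=6, ref_len=6, chunks=1))
print(meteor_score(matches=6, hyp_len=6, ref_len=6, chunks=3))
```

With identical match counts, the answer whose matches are split into three chunks scores lower than the one matched as a single chunk, which is the fragmentation effect described in the text.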

4.4 Qualitative Evaluation

The experiments and statistical results evaluating our

framework and demo from a qualitative point of view

come from a group of 12 people (volunteers from the

quality assurance departments of our industry part-

ners and students from the University of Bucharest)

who played the demo for two hours and tried to

move through the application asking questions to the

NPCs about different topics. There are three research

questions that we explored during our qualitative

evaluation.

RQ1. Do the application or NPCs provide correct

feedback, i.e., do they seem to correctly understand

the questions or requests asked and respond correctly

in the context?

To assess this, each of the 12 participants played

the demo for 2 hours and was asked to send 100

messages to the application/NPCs, with equal amounts of

Table 1: Quantitative evaluation of the classification model for predicting intentions.

| Metric | AnswerQuestion | SentimentAnalysis | DoActionRequest |
|---|---|---|---|
| Precision | 0.91744841 | 0.89811321 | 0.95881007 |
| Recall | 0.978 | 0.952 | 0.838 |
| F1-score | 0.94675702 | 0.92427184 | 0.89434365 |

input via voice and text. After each response from

the application (including a 1-minute delay for action

types such as path tracking or other user support op-

erations that cannot be evaluated immediately), they

were asked whether the NPCs or the application re-

sponded correctly.

Table 2 shows the averaged feedback from

participants for both types of input, broken down

by category of message. The results show that

users are generally satisfied with the feedback, with

lower ratings for speech input, as expected, due to

the need for another layer to convert speech to text

and, of course, performance degradation along the

way. Scores are also lower in the DoActionRequest

category, as it is more difficult in two ways: (a)

to correctly understand the requested action, (b) to

execute it through application mechanisms, which

also implies using the behaviour trees in the Ap-

pActionTranslator component, as shown in Figure 2.

This can be further improved by providing a larger

dataset of such action requests and dividing them into

a larger set of subcomponents and/or categories.
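The per-cell percentages of a table such as Table 2 can be obtained by pooling the yes/no judgements per input type and category. The sketch below is one plausible way to do this and is not taken from the authors' tooling; the record layout and the toy records are hypothetical and do not reproduce the study data.

```python
from collections import defaultdict


def correctness_by_group(records):
    """Percentage of responses judged correct per (input_type, category).

    records: iterable of (input_type, category, judged_correct) tuples.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for input_type, category, judged_correct in records:
        key = (input_type, category)
        totals[key] += 1
        correct[key] += int(judged_correct)
    return {key: 100.0 * correct[key] / totals[key] for key in totals}


# Hypothetical toy judgements, for illustration only.
toy_records = [
    ("text", "AnswerQuestion", True),
    ("text", "AnswerQuestion", True),
    ("text", "AnswerQuestion", False),
    ("text", "AnswerQuestion", True),
    ("voice", "DoActionRequest", True),
    ("voice", "DoActionRequest", False),
]

for key, pct in sorted(correctness_by_group(toy_records).items()):
    print(key, f"{pct:.0f}%")
```

Because every participant sent the same number of messages, pooling all judgements and averaging per-participant rates would give the same figures, provided each participant also sent the same number per cell.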

RQ2. Is the method suitable for real-time use? When

run on a separate thread on a CPU-only machine with an

11th-generation Core i7 processor, the average time for a full pipeline

inference was 13.24 milliseconds for user input

through a text message. For a voice input, the time

to process and convert the voice to text (Figure 1)

averaged 1.96 seconds for sentences of about 15

words. This indicates that the method can

be used in real-time and without bottlenecks on the

simulation side when running on a separate thread.

The time needed to understand the question can be

disguised as a natural process anyway, since humans

also need to understand, process, and formulate an

answer, rather than expecting it in the same frame

when it is asked.
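The idea of keeping inference off the simulation thread can be sketched with a worker thread and two queues. This is a minimal Python illustration of the pattern, not the Unreal Engine plugin code: `fake_nlp_pipeline`, the frame time, and all other names are hypothetical stand-ins.

```python
import queue
import threading
import time


def fake_nlp_pipeline(message: str) -> str:
    """Hypothetical stand-in for the full NLP pipeline."""
    time.sleep(0.013)  # roughly the text-input latency reported above
    return f"reply to: {message}"


def worker(requests: queue.Queue, responses: queue.Queue) -> None:
    """Process messages one by one until a None sentinel arrives."""
    while True:
        message = requests.get()
        if message is None:
            break
        responses.put(fake_nlp_pipeline(message))


def run_simulation(messages, frame_time=0.005, max_frames=1000):
    """Run a toy frame loop that never blocks on inference."""
    requests, responses = queue.Queue(), queue.Queue()
    thread = threading.Thread(target=worker, args=(requests, responses), daemon=True)
    thread.start()
    for message in messages:
        requests.put(message)

    replies, frames = [], 0
    while len(replies) < len(messages) and frames < max_frames:
        frames += 1
        time.sleep(frame_time)  # stand-in for simulating and rendering one frame
        try:
            while True:  # collect any replies that are ready, without waiting
                replies.append(responses.get_nowait())
        except queue.Empty:
            pass

    requests.put(None)  # ask the worker to stop
    thread.join()
    return replies, frames


replies, frames = run_simulation(["help me", "follow me"])
print(replies, frames)
```

The frame loop only polls for finished replies, so a slow inference (for example, the voice path) delays the answer but not the frames themselves.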

RQ3. Do users feel that conversing with the applica-

tion or NPCs helps them in general (e.g., better under-

standing of the mechanics of the simulation environ-

ment, removal of obstacles, assistance with difficult

puzzles or actions, etc.)?

To assess this question, a response template was

given to each participant at the end of the test. Over-

all, 10 out of 12 participants felt that the discussions

helped them during the demo, while 2 of them wished

they could discover the application and mechanisms

themselves. In contrast to the correctness results

shown in Table 2, users felt that the DoActionRequest

category was the most useful, as it really helped them

get through difficult parts of the demo.

5 CONCLUSION AND FUTURE WORK

In this paper, we propose a set of methods and tools

for applying NLP techniques to simulation

applications and video games. We provide open-source

software that can be used for both academic and

industrial evaluation purposes. The requirements,

dataset creation, and evaluation of the results were

developed in collaboration with industry partners

that publish software in the relevant field. From our

point of view, this contributes significantly to

understanding and addressing the right problems and then

evaluating how well the proposed methods meet

the requirements and solve existing problems. Both the

quantitative and qualitative evaluations suggest that the

use of NLP as an active assistant for the user within

the simulation software can increase user

satisfaction and improve user engagement. Our ideas for

future work include first introducing an active learn-

ing methodology where users can provide live feed-

back and improve the models online, rather than just

training them on collected datasets in a supervised

manner. This could greatly improve the models

because, once deployed, a large number of users

could interact with the models and indicate

where they went wrong in providing feedback or

understanding intent. Another planned research topic

is to create an ontology for simulation software and

games in general, similar to the ontology used in web

development, so that common terms and notations can

be used. This would facilitate the adoption and reuse

of the tools in multiple projects.

ICSOFT 2023 - 18th International Conference on Software Technologies

Table 2: Qualitative evaluation of the correctness of the feedback depending on the type of input and the respective category of the message.

| Input type | AnswerQuestion | SentimentAnalysis | DoActionRequest |
|---|---|---|---|
| Text | 91% | 87% | 79% |
| Voice | 88% | 85% | 71% |

ACKNOWLEDGMENTS

This research was supported by the European Union’s

Horizon Europe research and innovation programme

under grant agreement no. 101070455, project DYN-

ABIC. We also thank our game development industry

partners from Amber, Ubisoft, and Electronic Arts for

their feedback.

REFERENCES

Allouch, M., Azaria, A., and Azoulay, R. (2021). Conver-

sational agents: Goals, technologies, vision and chal-

lenges. Sensors, 21(24).

Baltezarevic, R., Baltezarevic, B., and Baltezarevic, V.

(2018). The video gaming industry (from play to rev-

enue). International Review, pages 71–76.

Banerjee, S. and Lavie, A. (2005). METEOR: An automatic

metric for MT evaluation with improved correlation

with human judgments. In Proceedings of the ACL

Workshop on Intrinsic and Extrinsic Evaluation Mea-

sures for Machine Translation and/or Summarization,

pages 65–72, Ann Arbor, Michigan. Association for

Computational Linguistics.

Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D.,

Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G.,

Askell, A., Agarwal, S., Herbert-Voss, A., Krueger,

G., Henighan, T., Child, R., Ramesh, A., Ziegler, D.,

Wu, J., Winter, C., Hesse, C., Chen, M., Sigler, E.,

Litwin, M., Gray, S., Chess, B., Clark, J., Berner,

C., McCandlish, S., Radford, A., Sutskever, I., and

Amodei, D. (2020). Language models are few-shot

learners. In Larochelle, H., Ranzato, M., Hadsell, R.,

Balcan, M., and Lin, H., editors, Advances in Neu-

ral Information Processing Systems, volume 33, pages

1877–1901. Curran Associates, Inc.

Burtsev, M., Seliverstov, A., Airapetyan, R., Arkhipov,

M., Baymurzina, D., Bushkov, N., Gureenkova, O.,

Khakhulin, T., Kuratov, Y., Kuznetsov, D., Litinsky,

A., Logacheva, V., Lymar, A., Malykh, V., Petrov, M.,

Polulyakh, V., Pugachev, L., Sorokin, A., Vikhreva,

M., and Zaynutdinov, M. (2018). DeepPavlov: Open-

source library for dialogue systems. In Proceedings of

ACL 2018, System Demonstrations, pages 122–127,

Melbourne, Australia. Association for Computational

Linguistics.

Chatterjee, S. and Dietz, L. (2022). Bert-er: Query-specific

bert entity representations for entity ranking. In Pro-

ceedings of the 45th International ACM SIGIR Con-

ference on Research and Development in Information

Retrieval, SIGIR ’22, pages 1466–1477.

Chen, A., Stanovsky, G., Singh, S., and Gardner, M.

(2019a). Evaluating question answering evaluation. In

Proceedings of the 2nd Workshop on Machine Read-

ing for Question Answering, pages 119–124, Hong

Kong, China. Association for Computational Linguis-

tics.

Chen, Q., Zhuo, Z., and Wang, W. (2019b). Bert for

joint intent classification and slot filling. ArXiv,

abs/1902.10909.

Devlin, J., Chang, M.-W., Lee, K., and Toutanova, K.

(2018). Bert: Pre-training of deep bidirectional trans-

formers for language understanding. arXiv preprint

arXiv:1810.04805.

Dingler, T., Kwasnicka, D., Wei, J., Gong, E., and Olden-

burg, B. (2021). The use and promise of conversa-

tional agents in digital health. Yearbook of Medical

Informatics, 30:191–199.

Hu, Y., Jing, X., Ko, Y., and Rayz, J. T. (2020). Mis-

spelling correction with pre-trained contextual lan-

guage model. 2020 IEEE 19th International Confer-

ence on Cognitive Informatics & Cognitive Comput-

ing (ICCI*CC), pages 144–149.

Kingma, D. P. and Ba, J. (2014). Adam: A method for

stochastic optimization.

Laranjo, L., Dunn, A. G., Tong, H. L., Kocaballi, A. B.,

Chen, J., Bashir, R., Surian, D., Gallego, B., Magrabi,

F., Lau, A. Y. S., and Coiera, E. (2018). Conversa-

tional agents in healthcare: a systematic review. Jour-

nal of the American Medical Informatics Association,

25(9):1248–1258.

Liu, Y., Ott, M., Goyal, N., Du, J., Joshi, M., Chen, D.,

Levy, O., Lewis, M., Zettlemoyer, L., and Stoyanov,

V. (2019). Roberta: A robustly optimized bert pre-

training approach. ArXiv, abs/1907.11692.

Miller, F. P., Vandome, A. F., and McBrewster, J. (2009).

Levenshtein Distance: Information Theory, Computer

Science, String (Computer Science), String Metric,

Damerau Levenshtein Distance, Spell Checker, Ham-

ming Distance. Alpha Press.

Munday, P. (2017). Duolingo. gamified learning through

translation. Journal of Spanish Language Teaching,

4(2):194–198.

Ogilvie, L., Prescott, J., and Carson, J. (2022). The use of

chatbots as supportive agents for people seeking help

with substance use disorder: A systematic review. Eu-

ropean Addiction Research, 28(6):405–418.

Paduraru, C. and Paduraru, M. (2019). Automatic difficulty

management and testing in games using a framework

based on behavior trees and genetic algorithms.

Schuetzler, R. M., Giboney, J. S., Grimes, G. M., and Nuna-

maker, J. F. (2018). The influence of conversational

agent embodiment and conversational relevance on

socially desirable responding. Decision Support Sys-

tems, 114:94–102.

Sedlakova, J. and Trachsel, M. (2022). Conversational arti-

ficial intelligence in psychotherapy: A new therapeu-

tic tool or agent? The American Journal of Bioethics,

0(0):1–10. PMID: 35362368.

Sezgin, E. and D’Arcy, S. (2022). Editorial: Voice technol-

ogy and conversational agents in health care delivery.

Frontiers in Public Health, 10.

Song, X., Salcianu, A., Song, Y., Dopson, D., and Zhou,

D. (2021). Fast WordPiece tokenization. In Proceed-

ings of the 2021 Conference on Empirical Methods in

Natural Language Processing, pages 2089–2103. As-

sociation for Computational Linguistics.

Stefan, S., Lennart, H., Felix, B., Christian, E., Milad, M.,

and Björn, R. (2022). Design principles for conversational

agents to support emergency management agencies.

International Journal of Information Management,

63:102469.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones,

L., Gomez, A. N., Kaiser, L. u., and Polosukhin,

I. (2017). Attention is all you need. In Guyon,

I., Luxburg, U. V., Bengio, S., Wallach, H., Fer-

gus, R., Vishwanathan, S., and Garnett, R., editors,

Advances in Neural Information Processing Systems,

volume 30. Curran Associates, Inc.

Vrandečić, D. and Krötzsch, M. (2014). Wikidata: A free

collaborative knowledge base. Communications of the

ACM, 57:78–85.

Wankhade, M., Rao, A., and Kulkarni, C. (2022). A sur-

vey on sentiment analysis methods, applications, and

challenges. Artificial Intelligence Review, 55:1–50.

Yunanto, A. A., Herumurti, D., Rochimah, S., and Kuswar-

dayan, I. (2019). English education game using non-

player character based on natural language process-

ing. Procedia Computer Science, 161:502–508. The

Fifth Information Systems International Conference,

23-24 July 2019, Surabaya, Indonesia.

Zhang, F., Fleyeh, H., Wang, X., and Lu, M. (2019). Con-

struction site accident analysis using text mining and

natural language processing techniques. Automation

in Construction, 99:238–248.
