Towards Usable Scoring of Common Weaknesses

Olutola Adebiyi

a

and Massimiliano Albanese

b

Center for Secure Information Systems, George Mason University, Fairfax, U.S.A.

Keywords:

Vulnerability Scanning, Security Metrics, Software Weaknesses.

Abstract:

As the number and severity of security incidents continue to increase, remediating vulnerabilities and weak-

nesses has become a daunting task due to the sheer number of known vulnerabilities. Different scoring systems

have been developed to provide qualitative and quantitative assessments of the severity of common vulnerabili-

ties and weaknesses, and guide the prioritization of vulnerability remediation. However, these scoring systems

provide only generic rankings of common weaknesses, which do not consider the specific vulnerabilities that

exist in each system. To address this limitation, and building on recent principled approaches to vulnerabil-

ity scoring, we propose new common weakness scoring metrics that consider the findings of vulnerability

scanners, including the number of instances of each vulnerability across a system, and enable system-specific

rankings that can provide actionable intelligence to security administrators. We built a small testbed to evalu-

ate the proposed metrics against an existing metric, and show that the results are consistent with our intuition.

1 INTRODUCTION

The increasing volume of common vulnerabilities and

exposures entries published yearly can hinder secu-

rity administrators from quickly prioritizing those that

pose the highest risk to their organization. Sev-

eral efforts have been made by different organi-

zations, including NIST (Mell et al., 2006) and

MITRE (Christey, 2008), to define metrics for scor-

ing vulnerabilities and helping administrators make

informed decisions about vulnerability prioritization,

remediation, and mitigation. However, organizations

still struggle to properly quantify and prioritize their

vulnerabilities because of the many factors they need

to consider. Additionally, available tools and scoring

systems rely on predefined notions of risk and impact

and use predefined equations to capture the primary

characteristics of a vulnerability, giving administra-

tors very little flexibility.

The first step in vulnerability management is to

understand which types of vulnerabilities are the most

critical for the security of an organization. MITRE’s

Common Weakness and Enumeration (CWE) pro-

vides a way to abstract software-specific flaws into

broader classes of vulnerabilities, referred to as weak-

nesses, and rank these classes of vulnerabilities by

their aggregate severity. Rankings of software weak-

a

https://orcid.org/0000-0002-3207-5096

b

https://orcid.org/0000-0002-2675-5810

nesses are published yearly by MITRE and OWASP,

but these rankings do not effectively assist administra-

tors in prioritizing remediation efforts, as many of the

vulnerabilities mapped to the top-ranking weaknesses

may not exist in their own system.

Many organizations also run periodic vulnerabil-

ity scans to discover unpatched vulnerabilities, but

without proper prioritization, the results of vulner-

ability scanning may not help protect critical assets

from cyber attacks. According to a recent study, only

about 1.4% of published vulnerabilities are known to

have been exploited (Sabottke et al., 2015).

To effectively prioritize vulnerabilities and sup-

port security-related decisions that are specific to tar-

get system, it is important to identify and score the

vulnerabilities that exist on that system rather than

using generic rankings. Information about new vul-

nerabilities is constantly updated in NVD, but scoring

of new vulnerabilities does not keep the pace with the

rate at which new vulnerabilities are discovered. It is

possible to have vulnerabilities on the system which

are yet to be assigned a CVSS score. Figure 1 shows

that over 800 vulnerabilities are yet to be scored as of

March 8, 2023.

1

The Mason Vulnerability Scoring Framework

(MVSF) attempted to addresses some of these con-

cerns by establishing a framework that allows users

1

The live NVD Dashboard can be accessed at

https://nvd.nist.gov/general/nvd-dashboard

Adebiyi, O. and Albanese, M.

Towards Usable Scoring of Common Weaknesses.

DOI: 10.5220/0012090900003555

In Proceedings of the 20th International Conference on Security and Cryptography (SECRYPT 2023), pages 183-191

ISBN: 978-989-758-666-8; ISSN: 2184-7711

Copyright

c

2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

183

Figure 1: Screenshot of the NVD Dashboard as of 6:00pm EDT on March 08, 2023.

to generate custom ranking by tuning several param-

eters used to calculate vulnerability and weakness

scores (Iganibo et al., 2022). MVSF builds on previ-

ous work aimed at identifying variables that influence

an attacker’s decision to exploit a given vulnerabil-

ity (Iganibo et al., 2021) but it is limited by the lack of

integration with vulnerability scanning tools, which

would enable customization of the rankings based on

the specific vulnerabilities that exist in a given sys-

tem. We address this limitation by designing a simple

yet elegant and effective solution to integrate infor-

mation from vulnerability scanning into the weakness

scoring process. We propose two new scoring met-

rics that consider vulnerability scanning information

at two different levels of granularity. The first metric

considers average exploitation likelihoods and expo-

sure factors across detected vulnerabilities, whereas

the second metric computes weighted averages us-

ing the numbers of instances of each vulnerability as

weights. The results on a small testbed indicate that

these metrics can provide rankings consistent with in-

tuition, and thus can help identify the most critical

weaknesses for an organization.

The remainder of this paper is organized as fol-

lows. Section 2 discusses related work. Section 3 pro-

vides preliminary definitions and describes the base

metrics which this work builds upon. Then, Sec-

tion 4 describes the new metrics for scoring weak-

nesses and the rationale behind them. Finally, Sec-

tion 5 describes the experimental setup and results,

and Section 6 provides some concluding remarks and

a roadmap for future work.

2 RELATED WORK

Vulnerability management aims at effectively and in-

telligently prioritizing remediation efforts based on

actionable recommendations that consider both exter-

nal variables and intrinsic features of existing vulner-

abilities. Organizations have made attempts to score

and rank software vulnerabilities, and find ways to as-

sess and quantify their impact.

The U.S. government, through the National In-

stitute of Standards and Technology maintains the

National Vulnerability Database (NVD) which is a

repository of vulnerability information. NVD is fully

synchronized with MITRE Common Vulnerabilities

and Exposures (CVE) list, and augments it with sever-

ity scores, impact ratings based on the Common Vul-

nerability Scoring System (CVSS). Recent work has

shown the limitations of CVSS. (Ruohonen, 2019)

highlighted the time delays that affect CVSS scoring

work in the context of NVD. (Spring et al., 2021) in-

dicated that the CVSS formula lacks empirical justifi-

cation and does not address some risk elements.

Common Weakness Enumeration (CWE) pro-

vides a community-developed list of software and

hardware weaknesses

2

. MITRE’s Common Weak-

ness Scoring System (CWSS) provides a mecha-

nism for prioritizing software weaknesses in a con-

sistent, flexible, open manner through a collaborative

community-based effort

3

.

The CWE team leverages the Common Vulnera-

bilities and Exposures data and the CVSS scores as-

2

https://cwe.mitre.org/

3

https://cwe.mitre.org/cwss/

SECRYPT 2023 - 20th International Conference on Security and Cryptography

184

sociated with each CVE record to publish a list of Top

25 Most Dangerous Software Weaknesses

4

. The aim

is to provide security professionals with resources to

help mitigate risk in their organizations. Similarly, the

Open Web Application Security Project (OWASP) re-

leases its Top 10 Web Application Security Risks list

yearly

5

. The OWASP Top 10 does not not consider

the number of instances of a CWE in calculation for

the top 10. The ranking is subjective and difficult to

replicate by users as there is no published quantitative

approach to back it up.

Several metrics (Wang et al., 2009; Mukherjee and

Mazumdar, 2018; Wang et al., 2019) use scores from

the Common Vulnerability Scoring Systems (CVSS)

or the Common Weakness Scoring Systems (CWSS)

in isolation or as the dominant factor in determining

the severity of a vulnerability. (Jacobs et al., 2021)

developed a data-driven threat scoring system for pre-

dicting the probability that a vulnerability will be ex-

ploited within the 12 months following public disclo-

sure. Most of these metrics cannot be easily extended

to consider the effect of additional variables, and do

not specifically focus on system-centric evaluations.

Our metrics are designed to provide system-specific

rankings of common weaknesses, building upon ex-

tensible vulnerability-level metrics.

3 PRELIMINARY DEFINITIONS

In this section, we provide an overview of generalized

versions of the base vulnerability metrics that were

originally introduced in (Iganibo et al., 2021), namely

the exploitation likelihood and the exposure factor of

a vulnerability. We also provide an overview of the

metrics used by MITRE to score and rank CWEs and

a generalized version of a similar CWE scoring met-

ric introduced in (Iganibo et al., 2022). The work pre-

sented in this paper builds upon these metrics, but of-

fers a simple yet elegant solution to make vulnerabil-

ity and weakness scoring more useful in practice.

3.1 Exploitation Likelihood

The exploitation likelihood (or simply likelihood)

ρ(v) of a vulnerability v is defined as the probabil-

ity that an attacker will attempt to exploit that vul-

nerability, if given the opportunity. An attacker has

the opportunity to exploit a vulnerability if certain

preconditions are met, most notably if they have ac-

cess to the vulnerable host. Specific preconditions

4

https://cwe.mitre.org/top25/

5

https://owasp.org/Top10/

may vary depending on the characteristics of each

vulnerability, as certain configuration settings may

prevent access to vulnerable portions of the target

software. Several variables may influence the like-

lihood that an attacker will exploit a given vulnera-

bility v, including but not limited to: (i) the vulner-

ability’s exploitability score as determined by CVSS,

Exploitability(v); (ii) the amount of time elapsed since

the vulnerability was made public, Age(v); and (iii) the

set of known IDS rules associated with the vulnerabil-

ity, Known IDS Rules(v). Formally, the exploitation

likelihood is modeled as a function ρ : V → [0, 1] de-

fined by Equation 1.

ρ(v) =

∏

X∈X

↑

l

1 −e

−α

X

·f

X

(X(v))

∏

X∈X

↓

l

e

α

X

·f

X

(X(v))

(1)

where X

↑

l

and X

↓

l

denote the sets of variables that

respectively contribute to increasing and decreasing

the likelihood as their values increase. Each variable

contributes to the overall likelihood as a multiplica-

tive factor between 0 and 1 that is formulated to ac-

count for diminishing returns. Factors corresponding

to variables in X

↑

l

are of the form 1 −e

−α

X

·f

X

(X(v))

,

where X is the variable, α

X

is a tunable parameter,

X(v) is the value of X for v, and f

X

is a monotonically

increasing function used to convert values of X to

scalar values, i.e., x

1

< x

2

=⇒ f

X

(x

1

) ≤ f

X

(x

2

). Sim-

ilarly, factors corresponding to variables in X

↓

l

are of

the form

1

e

α

X

·f

X

(X(v))

= e

−α

X

·f

X

(X(v))

. It is assumed that

each product evaluates to 1 when the corresponding

set of variables is empty, i.e.,

∏

X∈X

(. ..) = 1 when

X =

/

0.

For the analysis presented in this paper, we

selected the three variables mentioned earlier,

that is X

↑

l

= {Exploitability, Age} and X

↓

l

=

{Known IDS Rules}, thus Equation 1 can be instan-

tiated as follows. However, we remind the reader that

the proposed approach for ranking CWEs is indepen-

dent of the specific variables chosen for X

↑

l

and X

↓

l

.

ρ(v) =

∏

X∈{Exploitability,Age}

1 −e

−α

X

·f

X

(X(v))

∏

X∈{Known IDS Rules}

e

α

X

·f

X

(X(v))

(2)

We then define f

Exploitability

(v) = Exploitability(v),

f

Age

(v) =

p

Age(v), and f

Known IDS Rules

(v) =

|Known IDS Rules(v)|, but discussing how these

functions are defined for each variable is beyond the

scope of this work. To simplify the notation, we use

α

E

, α

A

, and α

K

to refer to α

Exploitability

, α

Age

, and

α

Known IDS Rules

respectively. Thus, Equation 2 can be

rewritten as follows.

Towards Usable Scoring of Common Weaknesses

185

ρ(v) =

1 −e

−α

E

·Exploitability(v)

·

1 −e

−α

A

·

√

Age(v)

e

α

K

·|Known IDS Rules}(v)|

(3)

3.2 Exposure Factor

The exposure factor e f (v) of a vulnerability v is de-

fined as the relative loss of utility of an asset due to

a vulnerability exploit. The term is borrowed from

classic risk analysis terminology, where the expo-

sure factor (EF) represents the relative damage that

an undesirable event – a cyber attack in our case –

would cause to the affected asset. The single loss ex-

pectancy (SLE) of such an incident is then computed

as the product between its exposure factor and the as-

set value (AV), that is S LE = EF ×AV . Several vari-

ables may increase or decrease the exposure factor of

a vulnerability v, including but not limited to: (i) the

vulnerability’s impact score as captured by CVSS,

Impact(v); and the set of deployed IDS rules asso-

ciated with the vulnerability, Deployed IDS Rules(v).

Formally, the exposure factor is defined as a function

e f : V → [0, 1] defined by Equation 4.

e f (v) =

∏

X∈X

↑

e

1 −e

−α

X

·f

X

(X(v))

∏

X∈X

↓

e

e

α

X

·f

X

(X(v))

(4)

where X

↑

e

and X

↓

e

denote the sets of variables that

respectively contribute to increasing and decreasing

the exposure as their values increase. Similar to the

likelihood, each variable contributes to the exposure

factor as a multiplicative factor between 0 and 1 that

accounts for diminishing returns. Factors correspond-

ing to variables in X

↑

e

are of the form 1−e

−α

X

·f

X

(X(v))

,

and factors corresponding to variables in X

↓

e

are of the

form

1

e

α

X

·f

X

(X(v))

= e

−α

X

·f

X

(X(v))

.

For our analysis, we considered the CVSS impact

score as the only variable affecting the exposure fac-

tor, that is X

↑

e

= {Impact} and X

↓

e

=

/

0, thus Equa-

tion 4 can be instantiated as follows. However, as

mentioned earlier, the proposed approach for ranking

CWEs is independent of the specific variables chosen

for X

↑

e

and X

↓

e

.

e f (v) =

∏

X∈{Impact}

1 −e

−α

X

·f

X

(X(v))

(5)

We then define f

Impact

(v) = Impact(v) and, to

simplify the notation, we use α

I

to refer to α

Impact

.

Thus, Equation 5 can be rewritten as follows.

e f (v) = 1 −e

−α

I

·Impact(v)

(6)

3.3 Common Weakness Score

MITRE publishes a yearly ranking of the top 25

most dangerous software weaknesses. Each weak-

ness is scored based on the number of vulnerabilities

mapped to that weakness and the average severity of

such vulnerabilities. The score proposed in (Iganibo

et al., 2022) is semantically equivalent to MITRE’s

score, but relies on the more general vulnerability

metrics introduced in (Iganibo et al., 2021), which

allow administrators to control the ranking by fine-

tuning several parameters used in the computation of

vulnerability-level metrics, whereas MITRE’s score

relies on fixed severity scores from CVSS. In the fol-

lowing, we provide the background on CWE ranking

and an overview of the state of the art.

Equation 9 defines the set of CVEs mapped to

each CWE W

i

, and Equation 10 defines the frequen-

cies of all CWEs, that is the set of cardinalities of

the sets of vulnerabilities associated with each CWE.

This set of frequencies is used in Equation 11 to de-

rive a normalization factor max(Freqs)−min(Freqs).

C(W

i

) =

CV E

j

∈ NV D,CV E

j

→W

i

(9)

Freqs =

{

|C(W

i

)|,W

i

∈ NV D

}

(10)

Equations 11 and 12 respectively compute a fre-

quency and a severity score for each CWE, where the

severity is based on the average CVSS score of all

CVEs in that CWE category. Frequency and severity

scores are both normalized between 0 and 1.

Fr(W

i

) =

|C(W

i

)|−min(Freqs)

max(Freqs)−min(Freqs)

(11)

Sv

MIT RE

(W

i

) =

avg

W

i

(CV SS) −min(CV SS)

max(CV SS) −min(CVSS)

(12)

Finally, Equation 13 defines the overall score that

MITRE assigns to each CWE as the product of its fre-

quency and severity scores, normalized between 0 and

100.

S

MIT RE

(W

i

) = Fr(W

i

) ·Sv

MIT RE

(W

i

) ·100 (13)

In (Iganibo et al., 2022), the severity of a weak-

ness W

i

is defined as the product of the average like-

lihood ρ(W

i

) of vulnerabilities mapped to W

i

and the

average exposure factor e f (W

i

) of such vulnerabili-

ties, as shown in Equation 14 below.

Sv

MV SF

(W

i

) = ρ(W

i

) ·e f (W

i

) (14)

SECRYPT 2023 - 20th International Conference on Security and Cryptography

186

S

MV SF

(W

i

) = |C(W

i

)|·

avg

v∈C(W

i

)

∏

X∈X

↑

l

1 −e

−α

X

·f

X

(X(v))

∏

X∈X

↓

l

e

α

X

·f

X

(X(v))

·

avg

v∈C(W

i

)

∏

X∈X

↑

e

1 −e

−α

X

·f

X

(X(v))

∏

X∈X

↓

e

e

α

X

·f

X

(X(v))

(7)

S

MV SF

(W

i

) = |C(W

i

)|·

avg

v∈C(W

i

)

1 −e

−α

E

·Exploitability(v)

·

1 −e

−α

A

·

√

Age(v)

e

α

K

·|Known IDS Rules}(v)|

·

avg

v∈C(W

i

)

1 −e

−α

I

·Impact(v)

(8)

where ρ(W

i

) and e f (W

i

) are defined by Equations 15

and 16 respectively.

ρ(W

i

) =

avg

v∈C(W

i

)

ρ(v) (15)

e f (W

i

) =

avg

v∈C(W

i

)

e f (v) (16)

Thus, an alternative common weakness score is

given by Equation 17, where the frequency Fr(W

i

) in

Equation 13 is replaced by |C(W

i

)| and the average

severity Sv

MIT RE

(W

i

) is replaced by Sv

MV SF

(W

i

).

S

MV SF

(W

i

) = |C(W

i

)|·Sv

MV SF

(W

i

) (17)

Combining Equations 1, 4, 15, 16, and 17, we can

rewrite Equation 17 as Equation 7, which provides

a generalization of the the expression for S

MV SF

(W

i

)

that was introduced in (Iganibo et al., 2022). We will

use this score as the baseline for our analysis, but be-

fore introducing the proposed approach to CWE rank-

ing and the new metrics, we can instantiate Equation 7

based on the set of variables chosen for our analysis.

Thus, considering Equations 3 and 6, we can rewrite

the expression for S

MV SF

(CW E

i

) as Equation 8.

4 NEW METRICS

In this section, we propose two new metrics, referred

to as the system weakness score and the weighted

weakness score, that can turn CWE rankings into

actionable intelligence for system administrators by

integrating information gained through vulnerability

scanning into the ranking process. The proposed ap-

proach refines the CWE scoring metric defined in

Section 3.3 by only considering the vulnerabilities

that were identified in the target system. To further

refine the analysis, the second metric also considers

the number of instances of each discovered vulner-

ability. In the following, we use C

s

to denote the

set of distinct CVEs identified during vulnerability

scanning. We then use C

s

(W

i

) to denote the set of

CVEs in W

i

that were identified by the scanner, that is

C

s

(W

i

) = C

S

∩C(W

i

).

4.1 System Weakness Score

The score of a CWE category, as defined by Equa-

tion 7 or its instance Equation 8, is computed by con-

sidering all CVEs in that CWE category. However, as

discussed earlier, any real-world system may only ex-

pose a limited number of such vulnerabilities, making

the resulting score of limited utility for system admin-

istrators. Thus, we redefine the weakness-level like-

lihood and exposure factor used in Equation 14 using

Equations 18 and 19 below, which compute averages

only over the vulnerabilities identified by the scanner.

ρ(W

i

) =

avg

v∈C

s

(W

i

)

ρ(v) (18)

e f (W

i

) =

avg

v∈C

s

(W

i

)

e f (v) (19)

Accordingly, we defined a new CWE scoring met-

rics as follow.

S

†

(W

i

) = |C

s

(W

i

)|·

avg

v∈C

s

(W

i

)

ρ(v) ·

avg

v∈C

s

(W

i

)

e f (v) (20)

The specific sets of variables used in the computa-

tion of the vulnerability-level likelihood and exposure

factor are independent of the CWE scoring metric.

For the purpose of our analysis, we will continue to

use the variables identified in Section 3. Thus, com-

bining Equations 3, 6, and 20, we obtain Equation 21.

4.2 Weighted Weakness Score

The metric introduced in the previous section and de-

fined by Equation 20 provides more actionable in-

telligence to system administrators than the original

metric, as it only considers vulnerabilities that were

found in the system. However, in large systems, the

same vulnerabilities may exist on different hosts, and

some vulnerabilities may be more common than oth-

ers. Therefore a seemingly less severe vulnerability

may require attention if it is present on a large num-

ber of machines across the network, offering attack-

ers multiple potential entry points. To address this

concern, we further refine our metric to consider the

number of instances of each vulnerability identified

by the scanner.

Towards Usable Scoring of Common Weaknesses

187

S

†

(W

i

) = |C

s

(W

i

)|·

avg

v∈C

s

(W

i

)

1 −e

−α

E

·Exploitability(v)

·

1 −e

−α

A

·

√

Age(v)

e

α

K

·|Known IDS Rules}(v)|

·

avg

v∈C

s

(W

i

)

1 −e

−α

I

·Impact(v)

(21)

Let I(v) denote the number of instances of vulner-

ability v identified by the scanner across all hosts. We

can then redefine the weakness-level likelihood and

exposure factor used in Equation 14 through Equa-

tions 22 and 23 below, which compute weighted aver-

ages over the vulnerabilities identified by the scanner,

using the numbers of instances as weights.

ρ(W

i

) =

∑

v∈C

s

(W

i

)

I(v) ·ρ(v)

∑

v∈C

s

(W

i

)

I(v)

(22)

e f (W

i

) =

∑

v∈C

s

(W

i

)

I(v) ·e f (v)

∑

v∈C

s

(W

i

)

I(v)

(23)

Accordingly, Equation 24 defines a new CWE

scoring metric that uses the weakness-level likelihood

and exposure factor defined by Equations 22 and 23.

As mentioned for the previous metric, the spe-

cific sets of variables used in the computation of the

vulnerability-level likelihood and exposure factor are

independent of the CWE scoring metric. For the pur-

pose of our analysis and for consistency with the S

†

metric, we will continue to use the variables identi-

fied in Section 3. Thus, combining Equations 3, 6,

and 24, we can obtain an equation similar to Equa-

tions 8 and 21, but we omit it due to the complexity

of the expression.

5 EVALUATION

This section describes the experiments we conducted

to evaluate the proposed metrics. First, we briefly de-

scribe the experimental setup, and then present the re-

sults in detail.

5.1 Experimental Setup

The test environment consisted of of a set of virtual

machines with different operating systems, including

Windows and Linux, and different sets of exposed

vulnerabilities. Table 1 provides a summary of the

machines used, including information about operating

system and exposed vulnerabilities. These machines

were scanned using Nessus Vulnerability Scanner to

obtain an inventory of existing system vulnerabilities.

Nessus uses scripts written in Nessus Attack Script-

ing Language (NASL) to detect vulnerabilities as they

are discovered and released into the public domain.

These scripts, referred to as plugins

6

, include infor-

mation about the vulnerability, set of remediation ac-

tions, and the algorithm to test for the presence of the

security issue.

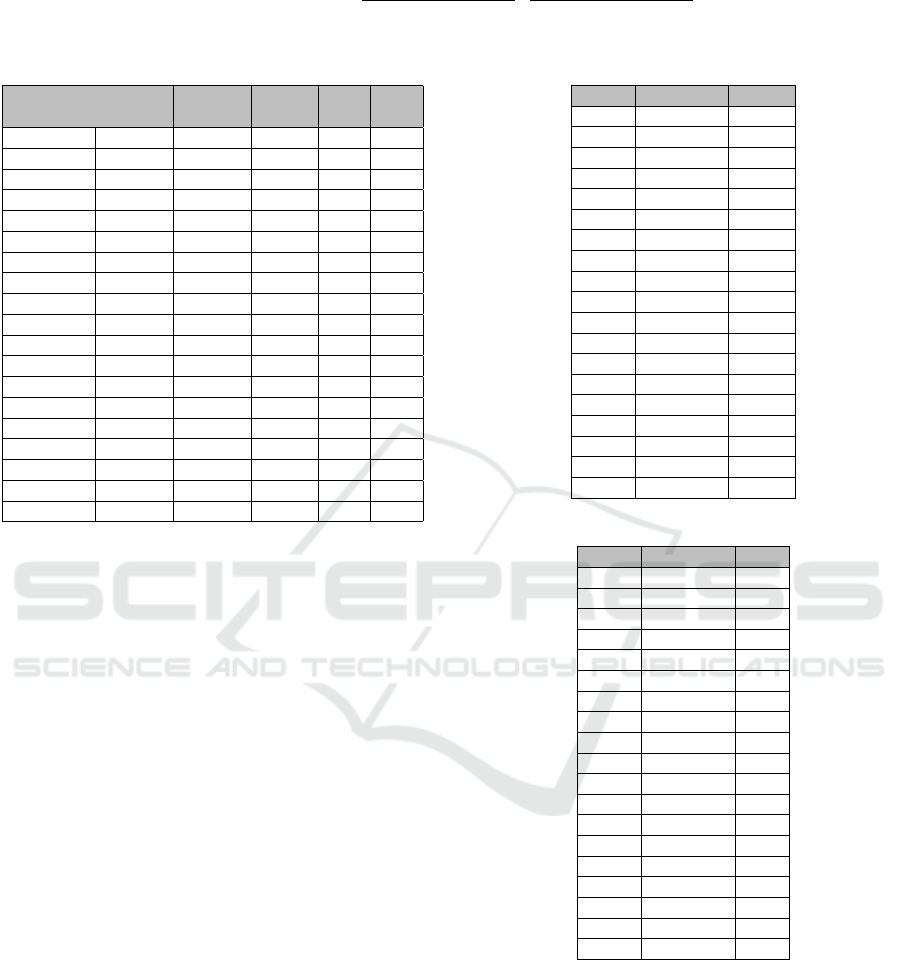

Table 1: Test machines using in the evaluation.

ID Operating System # CVEs

1 Windows 11 1

2 Windows 10 0

3 Windows Server 2008 R2 6

4 Windows Server 2008 R2 18

5 Linux Kernel 2.6 on Ubuntu 14.04 1

6 Linux Kernel 3.13 on Ubuntu 14.04 7

7 Linux Kernel 2.6 on Ubuntu 8.04 39

5.2 Results

A total of 72 vulnerability instances were identified

on the 7 machines in our test environment, including

52 distinct CVEs. These CVEs fall under different

CWE categories, as shown in Table 2. Note that NVD

is only using a subset of CWE for mapping instead

of the entire CWE list. Vulnerabilities that are not

mapped to any CWE in this subset are included in a

special category, NVD-CWE-Other. For each CWE,

Table 2 shows the total number of CVEs mapped to

that CWE, that is |C(W

i

)| and the number of CVEs

found by the scanner, that is |C

s

(W

i

)|. The first is used

as a basis for computing S

MV SF

whereas the second is

used in the computation of our new metrics.

For each identified CWE category, we computed

the baseline score S

MV SF

and the two new scores S

†

and S

∗

. Table 2 provides a summary of the results,

which reveal that different scoring metrics may lead

to significantly different outcomes in terms of rank-

ing. We can also notice that scores computed using

the baseline metric may be orders of magnitude larger

than our new scores. This is expected as S

MV SF

(W

i

)

is heavily dominated by the total number of CVEs in

W

i

, which is usually large.

Common weakness scoring based on the vulnera-

bilities that exist on a system can provide useful in-

sights for the organization. When researchers, ven-

dors or users report identified vulnerabilities, their

scores are calculated based on metrics that approx-

imate ease and impact of an exploit. The scoring

does not consider if the vulnerability exists on a spe-

cific system or a safeguard has been implemented to

protect the system. When vulnerability-level scores

6

https://www.tenable.com/plugins/families/about

SECRYPT 2023 - 20th International Conference on Security and Cryptography

188

S

∗

(W

i

) = |C

s

(W

i

)|·

∑

v∈C

s

(W

i

)

I(v) ·ρ(v)

∑

v∈C

s

(W

i

)

I(v)

·

∑

v∈C

s

(W

i

)

I(v) ·e f (v)

∑

v∈C

s

(W

i

)

I(v)

(24)

Table 2: Common Weakness Scores Summary.

CWE ID # CVEs # CVEs S

MV SF

S

†

S

∗

(total) (scan)

CWE-16 74 1 54 0.68 0.68

CWE-20 4,306 14 3,145 7.64 7.73

CWE-74 568 2 421 1.76 0.88

CWE-79 12,633 1 8,341 0.68 0.68

CWE-89 4,982 1 4,093 0.88 0.88

CWE-94 1,252 1 1,037 0.88 0.88

CWE-200 2,613 14 1,709 3.99 3.65

CWE-254 9 2 6 1.65 1.65

CWE-264 703 2 501 1.70 1.13

CWE-284 187 2 137 1.76 1.76

CWE-310 196 8 143 3.59 3.77

CWE-326 223 2 155 0.68 0.68

CWE-327 262 2 180 0.70 0.70

CWE-331 27 1 19 0.70 0.70

CWE-400 1,021 1 725 0.70 0.70

CWE-617 290 2 200 1.34 1.34

CWE-732 818 2 559 1.48 1.48

CWE-787 6,478 1 5,029 0.88 0.88

Others 1,839 13 1,307 8.91 9.03

are aggregated to compute weakness level-scores, one

may risk to lose sight of the problem at hand. In fact,

weakness ranking approaches like MITRE’s CWE

Top 25 effort, while providing useful information

about general trends in the security landscape, cannot

provide system-specific actionable insights to admin-

istrators.

In order to help identify the most severe weak-

nesses that threaten a specific system and guide the

prioritization of vulnerability remediation, we must

only consider vulnerabilities that actually exist in the

system when abstracting vulnerability-level metrics

into weakness level metrics. The proposed metrics

address this need, with the second metric going a step

further and giving more weight to vulnerabilities that

exists on multiple machines across the network.

In the following, we discuss how the different

scoring metrics impact the ranking of CWEs. Ta-

bles 3, 4, and 5 show how the CWEs identified in

our test environment rank based on S

MV SF

, S

†

, and

S

∗

respectively. The ranking based on S

MV SF

is simi-

lar to the most recent version of MITRE’s CWE Top

25. This result is expected as the authors of (Iganibo

et al., 2022) claim that the two rankings are seman-

tically equivalent and are highly correlated. In par-

ticular, the top 4 CWEs are the same, with only two

position switched between the two rankings.

The ranking shown in Table 4 offers some insights

about the effectiveness of our approach. For instance,

CWE-79 slipped from the first position to position 17

Table 3: Ranking based on S

MV SF

.

Rank CWE ID S

MV SF

1 CWE-79 8,391

2 CWE-787 5,029

3 CWE-89 4,093

4 CWE-20 3,145

5 CWE-200 1,709

6 Others 1,307

7 CWE-94 1,037

8 CWE-400 725

9 CWE-732 559

10 CWE-264 501

11 CWE-74 421

12 CWE-617 200

13 CWE-327 180

14 CWE-326 155

15 CWE-310 143

16 CWE-284 137

17 CWE-16 54

18 CWE-331 19

19 CWE-254 6

Table 4: Ranking based on S

†

.

Rank CWE ID S

†

1 Others 8.91

2 CWE-20 7.64

3 CWE-200 3.99

4 CWE-310 3.59

5 CWE-284 1.76

6 CWE-264 1.70

7 CWE-254 1.65

8 CWE-732 1.48

9 CWE-617 1.34

10 CWE-74 1.76

11 CWE-787 0.88

11 CWE-89 0.88

11 CWE-94 0.88

14 CWE-327 0.70

14 CWE-331 0.70

14 CWE-400 0.70

17 CWE-16 0.68

17 CWE-326 0.68

17 CWE-79 0.68

based on S

†

. In fact, while over 12,000 vulnerabil-

ities are mapped to CWE-79, only a single instance

of one such CVEs was found across our test environ-

ment, thus making CWE-79 less dangerous than other

CWEs. On the other side, CWE-310 jumped from po-

sition 15 to the fourth position. In fact, while CWE-

310 includes only less than 200 CVEs, 8 instances of

such vulnerabilities were found across our test envi-

ronment.

From the ranking shown in Table 5, we can ob-

serve that CWE-310 went up one more position in

Towards Usable Scoring of Common Weaknesses

189

the ranking, surpassing CWE-200. This can be ex-

plained by considering that, although there are more

vulnerabilities mapped to CWE-200 than to CWE-

310 and the average severity is comparable across the

two CWEs, the vulnerability in CWE-200 with the

highest number of instances has lower-than-average

severity and the vulnerability in CWE-310 with the

highest number of instances has higher-than-average

severity, thus shifting the weighted average in favor of

CWE-310.

Finally, we can observe that CWE-20 and CWE-

200 rank high in all three rankings. These can be ex-

plained by considering that these two CWEs have a

significant number of mapped CVEs (4.3k and 2.6k

respectively) – which increases their S

MV SF

score –

and have the largest number of vulnerability instances

discovered by the scanner – which increases their S

†

and S

∗

scores.

Table 5: Ranking based on S

∗

.

Rank CWE ID S

∗

1 Others 9.03

2 CWE-20 7.73

3 CWE-310 3.77

4 CWE-200 3.65

5 CWE-284 1.76

6 CWE-254 1.65

7 CWE-732 1.48

8 CWE-617 1.34

9 CWE-264 1.13

10 CWE-74 0.88

10 CWE-787 0.88

10 CWE-89 0.88

10 CWE-94 0.88

14 CWE-327 0.70

14 CWE-331 0.70

14 CWE-400 0.70

17 CWE-16 0.68

17 CWE-326 0.68

17 CWE-79 0.68

In summary, the analysis of these results confirms

that the proposed metrics work as expected and can

effectively identify the most severe weaknesses for a

given system.

6 CONCLUSIONS

Building upon the existing body of work on vulner-

ability metrics and ranking of common weaknesses,

we have proposed a simple yet elegant approach for

ranking weaknesses that integrates the results of vul-

nerability scanning. Accordingly, we have defined

two new scoring metrics to enable the generation of

system-specific rankings that can provide administra-

tors with actionable intelligence to guide vulnerabil-

ity remediation. Future work may involve establish-

ing a collaboration with MITRE to further evaluate

and possibly standardize the proposed metrics within

the context of the CWE framework, and working with

vendors of scanning software to explore the integra-

tion of our solution into their products.

ACKNOWLEDGEMENTS

This work was funded in part by the National Science

Foundation under award CNS-1822094.

REFERENCES

Christey, S. (2008). The evolution of the CWE development

and research views. Technical report, The MITRE

Corporation.

Iganibo, I., Albanese, M., Mosko, M., Bier, E., and Brito,

A. E. (2021). Vulnerability metrics for graph-based

configuration security. In Proceedings of the 18th In-

ternational Conference on Security and Cryptography

(SECRYPT 2021), pages 259–270. SciTePress.

Iganibo, I., Albanese, M., Turkmen, K., Campbell, T.,

and Mosko, M. (2022). Mason vulnerability scoring

framework: A customizable framework for scoring

common vulnerabilities and weaknesses. In Proceed-

ings of the 19th International Conference on Security

and Cryptography (SECRYPT 2022), pages 215–225,

Lisbon, Portugal. SciTePress.

Jacobs, J., Romanosky, S., Edwards, B., Adjerid, I., and

Roytman, M. (2021). Exploit prediction scoring sys-

tem (EPSS). Digital Threats: Research and Practice,

2(3).

Mell, P., Scarfone, K., and Romanosky, S. (2006). Com-

mon Vulnerability Scoring System. IEEE Security &

Privacy, 4(6):85–89.

Mukherjee, P. and Mazumdar, C. (2018). Attack difficulty

metric for assessment of network security. In Proceed-

ings of 13th International Conference on Availability,

Reliability and Security (ARES 2018), Hamburg, Ger-

many. ACM.

Ruohonen, J. (2019). A look at the time delays in CVSS

vulnerability scoring. Applied Computing and Infor-

matics, 15(2):129–135.

Sabottke, C., Suciu, O., and Dumitras

,

, T. (2015). Vulner-

ability disclosure in the age of social media: Exploit-

ing twitter for predicting real-world exploits. In 24th

USENIX Security Symposium (USENIX Security 15),

pages 1041–1056.

Spring, J., Hatleback, E., Householder, A., Manion, A., and

Shick, D. (2021). Time to change the CVSS? IEEE

Security & Privacy, 19(2):74–78.

Wang, J. A., Wang, H., Guo, M., and Xia, M. (2009). Se-

curity metrics for software systems. In Proceedings of

SECRYPT 2023 - 20th International Conference on Security and Cryptography

190

the 47th Annual Southeast Regional Conference (ACM

SE 2009), Clemson, SC, USA. ACM.

Wang, L., Zhang, Z., Li, W., Liu, Z., and Liu, H.

(2019). An attack surface metric suitable for het-

erogeneous redundant system with the voting mech-

anism. In Proceedings of the International Confer-

ence on Computer Information Science and Applica-

tion Technology (CISAT 2018), volume 1168 of Jour-

nal of Physics: Conference Series, Daqing, China.

IOP Publishing.

Towards Usable Scoring of Common Weaknesses

191