Uncovering Flaws in Anti-Phishing Blacklists for Phishing Websites Using Novel Cloaking Techniques

Wenhao Li [1,2,a], Yongqing He [2], Zhimin Wang [2], Saleh Mansor Alqahtani [1] and Priyadarsi Nanda [1,b]

[1] Faculty of Engineering and IT, University of Technology Sydney, NSW 2007, Australia
[2] Chengdu MeetSec Technology Co., Ltd., Chengdu, China

Keywords: Cloaking Techniques, Evasion Techniques, Phishing Blacklists, Anti-Phishing Entities, Phishing Websites.

Abstract: The proliferation of phishing attacks poses substantial threats to global prosperity amidst the Fourth Industrial Revolution. Given the burgeoning number of Internet users and devices, cyber criminals are harnessing phishing toolkits and Phishing-as-a-Service (PhaaS) platforms to spawn numerous fraudulent websites. In response, assorted detection mechanisms have been proposed, with anti-phishing blacklists acting as a primary line of defense against phishing sites. Yet adversaries have contrived cloaking techniques to dodge this detection method. This study endeavors to unearth the shortcomings of prevailing blacklists and thereby bolster the efficacy of detection strategies for Anti-Phishing Entities (APEs). This paper presents an exhaustive analysis of innovative and practicable attacks on current anti-phishing blacklists, unmasking potential weaknesses in these protection mechanisms hitherto unexplored in prior research. Additionally, we divulge potential loopholes exploitable by attackers and appraise their effectiveness against popular browser blacklists.

1 INTRODUCTION

Phishing, a prevalent form of cybercrime leveraging social engineering, manipulates victims’ trust to purloin funds or sensitive data (Pujara and Chaudhari, 2018). Recent years have witnessed a threefold surge in the incidence of these attacks (APWG, 2022). As projected by Cybercrime Magazine, the global cost of cybercrime is set to reach $10.5 trillion by 2025 (Freeze, 2018), making cybercrime a significant threat to prosperity in the Fourth Industrial Revolution (World Economic Forum, 2020). Perpetrators execute phishing attacks via diverse channels such as social media, email, and text messaging, often deploying fraudulent websites mimicking legitimate ones (Gupta et al., 2016). A striking escalation is evident in the quantity of distinct phishing websites identified: 316,747 in December 2021, up from a mere 60,926 in December 2017 (APWG, 2017; APWG, 2022). This trend owes to the expanding Internet user base and the proliferation of connected devices, offering an appealing landscape for criminal activity. Additionally, the advent of phishing toolkits and PhaaS platforms enables the creation of numerous phishing websites at a reduced cost, requiring minimal coding expertise (Han et al., 2016; Alabdan, 2020). Numerous approaches have been developed to detect phishing websites, including blacklist/whitelist-based, visual similarity-based, heuristic-based, machine learning-based, and deep learning-based detection (Al-Ahmadi et al., 2022). These methods function independently or form part of the mechanisms integrated into the anti-phishing ecosystem, where browsers wield significant influence via blacklists, which are access control mechanisms that issue warnings for identified phishing websites (Bell and Komisarczuk, 2020). Various browsers rely on distinct feed sources for their blacklists. For instance, Safari, Google Chrome, and Firefox utilize the Google Safe Browsing (GSB) blacklist, Edge deploys Microsoft SmartScreen’s (MS SmartScreen) blacklist, while other browsers may source blacklists from a combination of APEs. Despite serving as critical first-line defenses against phishing websites (Oest et al., 2019), these blacklists operate as black boxes, employing security crawlers to automate detection. However, the intrinsic divergence of this detection method from normal user requests has spurred the development of evasion techniques, allowing phishing websites to evade detection and continue luring unsuspecting users. Consequently, an analysis of the potential shortcomings in current blacklists can enhance the effectiveness of APEs’ detection strategies and better shield users from phishing attacks.

[a] https://orcid.org/0009-0007-4342-6676
[b] https://orcid.org/0000-0002-5748-155X

Li, W., He, Y., Wang, Z., Alqahtani, S. and Nanda, P.
Uncovering Flaws in Anti-Phishing Blacklists for Phishing Websites Using Novel Cloaking Techniques.
DOI: 10.5220/0012135600003555
In Proceedings of the 20th International Conference on Security and Cryptography (SECRYPT 2023), pages 813-821
ISBN: 978-989-758-666-8; ISSN: 2184-7711
Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

In this paper, we explore innovative and practical attacks against current anti-phishing blacklists to shed light on and rectify potential weaknesses in existing protective mechanisms. We propose and scrutinize advanced cloaking techniques hitherto overlooked, uncovering significant potential deficiencies in APEs that may be exploited by attackers. The main contributions of this paper include:

• Comprehensive investigation of current cloaking techniques used by phishing websites, suggesting potential improvements for APEs from an attacker’s perspective.

• Proposition and exploration of novel cloaking techniques aimed at detecting APEs. We conduct preliminary experiments to understand APE responses to our techniques, thereby revealing several flaws in popular APEs such as GSB, MS SmartScreen, APWG, and ESET.

• Presentation of a scheme and design of a framework, supplemented by an experiment evaluating our cloaking techniques against current anti-phishing blacklists, highlighting potential threats to Internet users.

2 RELATED WORKS

Understanding the prevalent cloaking techniques against anti-phishing blacklists, and the strategies attackers might exploit to avoid APE detection, is crucial. Earlier studies have categorized the cloaking techniques employed by phishing sites as server-side cloaking, client-side cloaking, and fingerprinting. These schemes are implementation specific and subdivide these categories further into User Interaction, Fingerprinting, and Bot behavior (Zhang et al., 2021). In this paper, we focus on server-side and client-side cloaking techniques, bot behavior, and fingerprinting, as client-side techniques encompass user interaction techniques.

Server-side cloaking leverages attributes of HTTP requests such as Hostname, Referrer, User Agent, Cookie, and client IP address. These attributes allow phishers to distinguish between requests from known anti-phishing entities or security crawlers and genuine user requests. Phishers utilize these attributes to gain additional information about users, enabling them to filter or redirect requests server-side to evade detection (Oest et al., 2018; Oest et al., 2019; Oest et al., 2020a).

Client-side cloaking entails JavaScript code that verifies user interaction. Verification methods include sending pop-up alerts, tracking mouse behavior, employing reCAPTCHA, or requiring session initiation. Phishers may also apply obfuscation methods, thereby thwarting static code analysis by APEs. Such methods aid in filtering out APE detections and luring genuine users (Maroofi et al., 2020; Oest et al., 2020b; Zhang et al., 2021).

Bot behavior identification metrics encompass timing and randomization (Zhang et al., 2021). Phishers may delay the display of malicious content, leveraging the fact that bots or human inspectors may not stay on the page long enough for the content to load. Similarly, discrepancies in JavaScript execution times can indicate bot activity (Acharya and Vadrevu, 2021). Randomization techniques, like employing a random number generator to determine content display, can further evade detection by APEs (Zhang et al., 2021).

Basic fingerprinting techniques utilize HTTP requests and JavaScript to extract parameters like User Agent, Referrer, and Cookie, enabling phishers to identify APEs. Advanced fingerprinting techniques deploy web APIs such as Canvas and WebGL to collect unique client fingerprints. These sophisticated methods facilitate more precise identification, enhancing phishers’ evasion capabilities (Acharya and Vadrevu, 2021).

Our research identified two principal strategies employed to elude detection by APEs: identification of authentic human users and discernment of APEs. To comprehend the state-of-the-art cloaking methodologies employed by contemporary phishing sites, we examined multiple PhaaS providers’ sites to investigate their functionalities and potential evasion capabilities. Our observations revealed the application of a new function, Virtual Machine Detection, which potentially aids in evasion. Phishing sites use numerous features to detect virtual machines, including CPU core count, memory size, WebGL renderer and User Agent information, Webdriver, the quantity of installed browser plugins, and language information sourced from WebGL or JavaScript. We questioned whether this information could effectively identify APEs. Moreover, we delved into potential browser and protocol level features that could enable precise fingerprinting or assist in APE identification. Recent privacy research highlighted two significant tracking techniques: cache-based tracking and the use of Accept-CH headers in HTTP requests (Ali et al., 2023). We questioned whether these tracking techniques could offer additional avenues for phishing sites to identify APEs. Subsequently, we conducted experiments to assess their potential in evading anti-phishing detection and evaluated the efficacy of these innovative techniques against anti-phishing blacklists.

SECRYPT 2023 - 20th International Conference on Security and Cryptography

3 PRELIMINARIES

In our investigation, we introduce three novel techniques and execute three sets of preliminary experiments to comprehend the responses of APEs to our methods. To evaluate this, we select the security crawlers of GSB and MS SmartScreen, which supply blacklist feeds to prevalent browsers including Chrome, Safari, Firefox, and Edge, owing to their extensive user base. We also incorporate two critical security crawlers, APWG and ESET, given their importance in the anti-phishing ecosystem. PhishTank is excluded as it is no longer accepting registrations. The aim of these preliminary experiments is to evaluate the feasibility of using the proposed techniques to elude APE detection, and to expose the potential threats these techniques pose to the APEs’ security crawlers.

3.1 Virtual Machine Detection

3.1.1 Background

Virtual machine detection, frequently utilized by ransomware, trojans, and other malicious software to differentiate user environments, is a growing concern in the cybersecurity domain. Such software discerns whether it is operating within a virtual environment, allowing it to modify its behavior, evade detection, and target real users. Phishing websites now exploit this strategy, gathering environmental fingerprints to identify virtual machines. They leverage Web APIs like WebGL and Canvas, as well as JavaScript objects, to collect visitor-specific data such as screen rendering details and color depth. Dark web PhaaS providers have reportedly integrated virtual machine detection tools into their services, enabling them to filter genuine users. As indicated by Lin et al. (2022), numerous active phishing websites are employing these techniques to harvest browser fingerprint data, an increasingly prevalent practice. This harvested data enables attackers to circumvent Two-Factor Authentication (2FA) and misuse stolen credentials. Our initial experiment aimed to investigate whether techniques related to virtual machine detection could assist phishers in distinguishing between normal user requests and security crawlers, thereby potentially evading the detection strategies of APEs.

3.1.2 Experimental Set Up

Based on our survey of existing browser-side virtual machine detection techniques and prevalent user device information, we selected features such as memory size, CPU core count, screen color depth/width/height, User-Agent headers, and WebGL-extracted data for virtual machine detection and subsequent feature dataset creation. Figure 1 presents an overview of the design aspects of our experiment. Initially, we integrated JavaScript code that gathered feature information into our experimental website pages. These sites were deployed on cloud servers and classified into four groups. The URLs of each group were then individually submitted to GSB, MS SmartScreen, APWG, and ESET, four prominent APEs, to prompt anti-phishing crawler requests. The JavaScript code executed in the APEs’ security crawler browsers or during manual inspection, amassing pertinent data and returning it to the experimental website servers to log features matching our dataset. The backend of these sites processed the data for storage in a database. Upon completion of data collection, we analyzed these records and the additional database-stored data to evaluate the feasibility of using browser-side virtual machine detection techniques to evade detection by APEs.

[Figure 1: The Experiment Design of Virtual Machine Detection. Diagram labels: Deploy Websites; Report URLs; Security Crawlers/Bots visit the Webpage; Return selected Features (Screen Width, Screen Height, Memory Size, WebGL Info, ...); Save experimental Data; Data Analysis.]

3.1.3 Observed Results

GSB. Our experiment reveals distinct differences between the characteristics of GSB’s crawler and those of typical users, particularly in memory size, number of browser plugins installed, and screen dimensions. These findings suggest that virtual machine detection techniques could potentially bypass GSB’s existing phishing detection mechanism. Analysis of the crawler’s feature data sent to the server, after removing duplicates, showed few unique values in the Renderer feature acquired through WebGL. Notably, we observed consistent Renderer information from crawlers across geographically dispersed phishing website servers. This concurs with previous findings on utilizing WebGL to generate hidden images and compute image hashes (Acharya and Vadrevu, 2021), although our direct method of acquiring renderer information via WebGL differs from their schemes.

MS SmartScreen. MS SmartScreen exhibited similar traits to GSB, with clear differences in memory size, screen dimensions, and WebGL-derived Renderer features compared to typical user profiles.

APWG. Crawlers from APWG displayed significant variations in screen dimensions, number of browser plugins, and WebGL-obtained Renderer features compared to an ordinary user. After removing duplicates, we noted a paucity of unique renderer information from APWG as well.

ESET. We observed substantial disparities in the number of CPU cores, memory size, User-Agent headers, number of browser plugins, and Renderer features obtained via WebGL between ESET and ordinary users. ESET also exhibited fewer unique renderer values.
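The de-duplication step behind these observations can be illustrated with a minimal Python sketch. It is not the authors' analysis code: the record layout, the field names (`source`, `webgl_renderer`) and the sample values are hypothetical placeholders, chosen only to show how logged feature records might be reduced to a count of distinct values per visitor group.

```python
from collections import defaultdict


def unique_values_per_source(records, feature):
    """Map each source to the set of distinct values it reported for `feature`."""
    seen = defaultdict(set)
    for record in records:
        value = record.get(feature)
        if value is not None:  # skip records that did not report this feature
            seen[record["source"]].add(value)
    return dict(seen)


# Hypothetical logged records; real entries would come from the experiment database.
SAMPLE_RECORDS = [
    {"source": "crawler-group", "webgl_renderer": "renderer-x"},
    {"source": "crawler-group", "webgl_renderer": "renderer-x"},
    {"source": "crawler-group", "webgl_renderer": "renderer-y"},
    {"source": "ordinary-users", "webgl_renderer": "renderer-p"},
    {"source": "ordinary-users", "webgl_renderer": "renderer-q"},
    {"source": "ordinary-users", "webgl_renderer": "renderer-r"},
]

if __name__ == "__main__":
    summary = unique_values_per_source(SAMPLE_RECORDS, "webgl_renderer")
    for source, values in summary.items():
        print(source, len(values))
```

A small number of distinct renderer strings for a crawler group, set against a larger number for ordinary visitors, is the kind of contrast the results above describe.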

3.2 Cache-based Mechanism

3.2.1 Background

Web cache mechanisms aim to alleviate server request loads, diminish bandwidth utilization, and enhance system performance. Prominent web caching technologies encompass database cache, CDN cache, DNS cache, proxy server cache, and browser cache. The latter involves a browser storing resources obtained from HTTP requests locally, enabling direct access to webpages via the cache during subsequent visits and precluding repeated server requests. Typically, browser caching strategies, implemented via HTTP header settings, consist of local cache and negotiated cache. The former relies on resource expiration time parameters (e.g., "Expires" and "Cache-Control"), while the latter hinges on resource modification status (e.g., "Last-Modified", "If-Modified-Since", "ETag", and "If-None-Match"). Both strategies result in client-side, rather than server-side, resource loading. Essentially, when a browser accesses a resource, cached content is used if the local cache matches; otherwise, a server request verifies whether the negotiated cache matches. Consequently, we propose a technique to determine identity based on security crawler access behavior, and conducted preliminary experiments to assess whether APEs demonstrate cache behavior consistent with regular browser users when visiting phishing websites.
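The two-stage lookup described above (local cache first, negotiated cache second) can be summarized in a simplified Python model. This is an illustrative sketch only: the `CachedEntry` type and the `cache_decision` function are hypothetical names, and real browsers apply considerably more detailed rules than this model covers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CachedEntry:
    stored_at: float        # time (in seconds) at which the response was stored
    max_age: Optional[int]  # value of Cache-Control: max-age, if the response had one
    etag: Optional[str]     # validator from the ETag header, if the response had one


def cache_decision(entry: Optional[CachedEntry], now: float) -> str:
    """Return what a cache-respecting client would do for a resource."""
    if entry is None:
        return "fetch"  # nothing stored: a full request is needed
    if entry.max_age is not None and now - entry.stored_at < entry.max_age:
        return "use-local-cache"  # still fresh: no request reaches the server
    if entry.etag is not None:
        return "revalidate"  # stale: send If-None-Match and reuse the body on 304
    return "fetch"
```

For instance, an image stored with `max_age=86400` and requested again one hour later yields `"use-local-cache"`, so a server would not expect to see a second request for it from an ordinary browser.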

3.2.2 Experimental Set Up

For this experiment, we constructed a webpage (route: /index) that incorporated an image (route: /img.jpg), with the image’s HTTP response featuring a Cache-Control header (max-age=86400) that instructs browsers to cache the image for 24 hours. To exercise this caching behavior, we designed a redirection that compelled multiple visits to our webpage. Clients were redirected to the index page via the HTML meta tag, with varying parameters conveyed in the URL, and redirection was capped at three instances. Consequently, under the default cache strategies employed by Chrome, Firefox, Edge, and Safari, clients would initiate the following request sequence: /index -> /img.jpg (cached) -> /index?A -> /index?B -> /index?C, where A, B, and C represent query strings in the URL.

Our experimental websites were deployed on a cloud server and the URLs were categorized into four groups, each submitted separately to the four major APEs: GSB, MS SmartScreen, APWG, and ESET. The backend of these websites recorded each request for /index and /img.jpg. After data collection, we analyzed the requests with URL query strings NULL (no query string), A, B, and C, along with the /img.jpg requests, treating them as one group. By assessing the number of /img.jpg requests within this group, we could gauge the likelihood of using a browser caching mechanism to evade detection by APEs.

Importantly, we observed during our experiment that some APEs tended to shift IPs upon redirection, hindering our ability to trace redirections and tally accesses initiated by a single security crawler. We thus updated our method with a unique design (Figure 2) to track specific security crawler requests using a sequence RequestGroupID: [RandomID-1, RandomID-2, ..., RandomID-N]. Initially, the first RequestGroupID and its associated RandomID are generated, and the server responds with the RandomID, which is subsequently used as the URL’s query string for the next redirection. Each client visit to the index page generates a new RandomID related to the RequestGroupID until the preset redirection limit is reached. The initial RandomID is used as the URL parameter for all subsequent image requests, enabling us to count the number of image file requests containing the same RandomID under the same RequestGroupID in the URL.
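The bookkeeping behind this tracking scheme can be sketched in Python as follows. This is a hedged illustration rather than the authors' implementation: the class name `RequestGroupTracker`, its methods, and the use of random hex tokens as IDs are all assumptions made for the example.

```python
import secrets
from collections import Counter


class RequestGroupTracker:
    """Groups requests from one visitor by chained random IDs rather than by IP."""

    def __init__(self, max_redirects=3):
        if max_redirects < 1:
            raise ValueError("max_redirects must be at least 1")
        self.max_redirects = max_redirects
        self.groups = {}             # RequestGroupID -> [RandomID-1, ..., RandomID-N]
        self.id_to_group = {}        # RandomID -> RequestGroupID
        self.image_hits = Counter()  # RequestGroupID -> number of image requests

    def on_index(self, random_id=None):
        """Record a visit to /index; return (group_id, image_id, next_id)."""
        group_id = self.id_to_group.get(random_id)
        if group_id is None:  # no (or unknown) query string: start a new group
            group_id = secrets.token_hex(8)
            self.groups[group_id] = []
        ids = self.groups[group_id]
        next_id = None
        if len(ids) < self.max_redirects:  # redirect limit not yet reached
            next_id = secrets.token_hex(8)
            ids.append(next_id)
            self.id_to_group[next_id] = group_id
        # The image URL always carries the first RandomID of the group.
        return group_id, ids[0], next_id

    def on_image(self, image_id):
        """Record a request for /img.jpg?<image_id>."""
        group_id = self.id_to_group.get(image_id)
        if group_id is not None:
            self.image_hits[group_id] += 1

    def image_requests(self, group_id):
        """Number of image requests seen for this group."""
        return self.image_hits[group_id]
```

In this sketch, a client that honors the cache policy produces one image request per group across all redirected visits, while a client that re-fetches the image on each visit produces several.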

3.2.3 Observed Results

GSB. Our experiment revealed that GSB’s anti-phishing crawlers exhibit distinct behaviours when accessing static website resources compared to regular user browsers, initiating new requests for resources that should have been cached. Hence, cache mechanisms might effectively identify GSB crawlers and could potentially bypass the current GSB phishing detection process.

[Figure 2: The Experiment Design of Cache-based Mechanism. Diagram labels: Deploy Websites; Report URLs; APEs; Experimental Website (the /index page loads an image with a cache policy); Initial Request: /index embeds img.jpg?RandomID-1, and /img.jpg?RandomID-1 is served with Cache-Control: max-age=86400; Redirect to /index?RandomID-1, 2, 3, 4...; N-th Request: /index?RandomID-N embeds img.jpg?RandomID-1, served with Cache-Control: max-age=86400; Save experimental Data; Count the Number of Requests to /img.jpg?RandomID; Data Analysis.]

MS SmartScreen. The experiment data suggest that MS SmartScreen crawlers’ behaviour towards cached resources is identical to that of regular users, implying cache mechanisms cannot identify these crawlers.

APWG. In this experiment, APWG demonstrated adherence to cache policies on some occasions, requesting /img.jpg only once upon the initial visit and not on subsequent redirected requests. However, instances of non-adherence, in which /img.jpg was requested multiple times within the same request set, were also observed. APWG’s anti-phishing crawlers exhibited varied behaviours, leading to the discovery of some issues:

• In some of APWG’s request data, we found that APWG’s anti-phishing crawlers could not effectively execute all redirect requests, sometimes failing to execute the third redirect. They either requested only the /index page without parameters, or only reached the /index?A page after the first redirect, or at most requested the /index?B page after the second redirect, which also differs significantly from the behavior of normal users.

• APWG’s crawlers invariably disregarded page refresh delay times set in HTML, executing all redirects to the /index page and all /img.jpg requests far quicker than the specified refresh time.

ESET. Similar to APWG, ESET showed partial compliance with browser cache policies during the experiment. Notably, the behaviours of its anti-phishing crawlers also exposed the same issues as APWG:

• In all of ESET’s request data, we found that ESET’s anti-phishing crawlers could not effectively execute all redirect requests. These crawlers always failed to execute the third redirect: they either requested only the /index page without parameters, or only reached the /index?A page after the first redirect, or at most requested the /index?B page after the second redirect, which also differs significantly from the behavior of normal users.

• Like APWG’s crawlers, ESET’s ignored HTML-set page refresh delays, executing all possible redirects to the /index page and all /img.jpg requests much faster than the allotted refresh time.

Therefore, we postulate that cache mechanisms can partially identify anti-phishing crawlers from APWG and ESET. This experiment also unveiled additional abnormal behaviours by APWG and ESET when visiting phishing websites, potentially exploitable alongside cache mechanisms.

3.3 Client Hints in HTTP Header

3.3.1 Background

The use of Client Hints in the HTTP header, akin to browser cache, is a technique employed to optimize performance and enhance user experience. This method allows clients to actively convey certain characteristics to the server, such as device type, operating system, and network information. Consequently, the server can deliver personalized content based on these characteristics, fostering improved browsing experiences. Upon a user’s webpage visit, the server can send an Accept-CH header in its response to the browser, soliciting Client Hints. The client reciprocates by returning the requested data in the HTTP headers of its subsequent requests. Although Accept-CH lacks universal browser compatibility, it is supported by major desktop browsers, including Chrome, Edge, and Opera. Interestingly, recent cybersecurity and privacy research suggests potential user tracking via this vector (Ali et al., 2023). Thus, we conducted a preliminary experiment to ascertain whether the Client Hints returned by security crawlers differ from those of regular users, thereby exploring the feasibility of identifying APEs using Client Hints.

3.3.2 Experimental Set Up

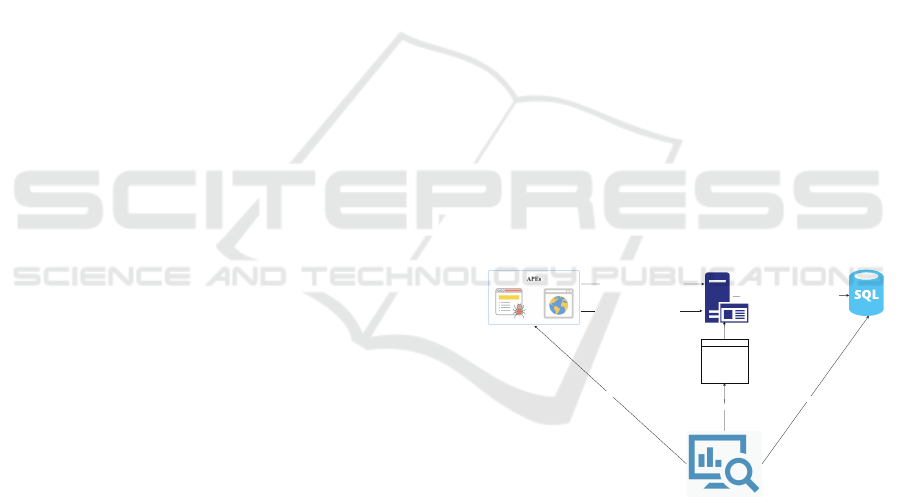

Figure 3 depicts the design of this experiment, wherein we crafted a single-page website featuring an image. When /index was accessed from a regular user’s browser, the browser would automatically request /img.jpg. The response to /index carried the Accept-CH header, listing all hints that the server sought. Desktop browsers compatible with Accept-CH appended some or all of the listed client hint headers in subsequent requests. We launched these websites on a cloud server, categorizing the URLs into four groups for submission to the four key APEs: GSB, MS SmartScreen, APWG, and ESET. The backend of these websites logged all acquired data, including HTTP headers. Following data collection, we scrutinized the HTTP headers in requests related to the Client Hints we listed in Accept-CH and contrasted these with data from real user browsers supporting Client Hints. This facilitated an assessment of the potential for utilizing Client Hints in request headers to evade detection by security crawlers.

[Figure 3: The Experiment Design of Client Hints. Diagram labels: Deploy Websites; Report URLs; APEs; Experimental Website (/index responds with Accept-CH and loads img.jpg); response header Accept-CH: sec-ch-ua-platform, downlink, ...; example follow-up request GET /img.jpg HTTP/1.1 carrying Sec-Ch-Ua-Platform: "Windows" and Downlink: 9.8; Save experimental Data; Analyze HTTP Headers; Data Analysis.]
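The header comparison in this experiment can be sketched in Python as a coverage check: given the Accept-CH value the server sent and the headers a client sent back, compute which requested hints are missing. The function names below are hypothetical, and treating empty header values as absent is an assumption of this sketch, not a detail taken from the experiment.

```python
def parse_accept_ch(value):
    """Split an Accept-CH header value into a set of lower-cased hint names."""
    return {token.strip().lower() for token in value.split(",") if token.strip()}


def hint_coverage(accept_ch, request_headers):
    """Return (fraction of requested hints present, set of missing hint names)."""
    requested = parse_accept_ch(accept_ch)
    if not requested:
        return 1.0, set()  # nothing was requested, so nothing can be missing
    # HTTP header names are case-insensitive, so compare in lower case.
    present = {
        name.lower()
        for name, value in request_headers.items()
        if value is not None and value.strip() != ""  # assumption: empty counts as absent
    }
    missing = requested - present
    return 1 - len(missing) / len(requested), missing


if __name__ == "__main__":
    coverage, missing = hint_coverage(
        "Sec-CH-UA-Platform, Downlink",
        {"Host": "example.com", "Downlink": "9.8"},
    )
    print(coverage, sorted(missing))
```

A client that returns every requested hint has a coverage of 1.0, while a client that drops the headers beginning with Sec-CH-UA scores lower and lists those headers as missing.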

3.3.3 Observed Results

GSB. Our experiment reveals that GSB’s anti-phishing crawlers use browser types and versions that fully support Accept-CH. However, these crawlers inconsistently adhere to the Accept-CH directive, often neglecting to incorporate all specified Client Hints in subsequent requests. Notably, they omit all User Agent Client Hints (UA-CH), that is, the headers beginning with Sec-CH-UA. This discrepancy results in a near 50% reduction in Client Hints in the HTTP request headers compared to normal users under identical Accept-CH specifications. This significant difference suggests that Client Hints could serve as an effective identifier for GSB, potentially offering a method to bypass current phishing detection mechanisms.

MS SmartScreen. The performance of MS SmartScreen’s anti-phishing crawlers closely mirrors that of normal users in terms of Client Hints’ content and items in HTTP request headers. Consequently, it is not possible to distinguish MS SmartScreen based solely on Client Hints.

APWG. A majority of APWG’s anti-phishing crawlers support Client Hints, with a mere 2% of requests originating from unsupported browsers. The compliance of these crawlers with Accept-CH closely aligns with that of GSB, primarily ignoring UA-CH headers beginning with Sec-CH-UA. Interestingly, a subset of APWG’s crawlers return NULL values for these headers, differing from typical user behavior. This information suggests that Client Hints can serve as an effective identifier for APWG, potentially offering a method to bypass its current phishing detection mechanisms.

ESET. Our study of ESET reveals a diverse array of clients with varying degrees of Client Hints support. Approximately 10% of these clients exhibit behavior consistent with typical users in their adherence to Accept-CH. The remaining majority display behavior similar to GSB or APWG, either ignoring UA-CH headers beginning with Sec-CH-UA or returning NULL values. This suggests that Client Hints can be effectively used to identify ESET crawlers, providing a potential method to bypass their existing phishing detection mechanisms.

3.4 Summary

Upon conducting preliminary investigations into Virtual Machine Detection, Cache-based Mechanisms, and the use of Client Hints from HTTP requests, we posit that these methods offer innovative avenues for APE identification and evasion, to varying degrees. This analysis also uncovers the potential vulnerabilities these four APEs might encounter. Here, we enumerate the primary risks:

• GSB crawlers clearly indicate virtualization technology usage. Through the use of Web APIs and JavaScript code, potential attackers may identify specific browser and device features, thereby distinguishing GSB crawlers and evading GSB detection. The range of Renderers obtained via WebGL for GSB crawlers is significantly limited, permitting attackers to acquire this information, construct a feature dataset, and subsequently identify GSB crawlers. Lastly, GSB crawlers do not adhere to browser caching policies and do not fully adhere to the Accept-CH directive of Client Hints when visiting websites. These distinctive behaviors could be exploited by attackers to filter GSB requests and dodge detection.

• MS SmartScreen crawlers display user-like behavior when visiting phishing websites, posing challenges for identification and evasion through the cache-based mechanism or Client Hints. Despite this, MS SmartScreen does reveal limitations in handling virtualization feature detection and Renderer diversity. These shortcomings provide potential avenues for detection evasion.

• APWG crawlers emulate real-user behaviors when accessing phishing websites, including partial adherence to browser cache behaviors. This similarity confers a degree of resistance to cache-based mechanisms. However, APWG’s defense against virtual machine detection and the use of Client Hints is inadequate. Additionally, its handling of web page redirection and delayed page refresh diverges from typical user behavior. These disparities allow attackers to identify APWG crawlers and evade detection by employing multiple redirects or delayed webpage loading.

• ESET shows a more obvious use of virtualization technology, with resilience similar to APWG’s against virtual machine detection techniques and the use of Client Hints in HTTP requests. It also behaves inconsistently with real users in resource consumption activities (redirection and delayed page refresh). Attackers can exploit these flaws to evade detection by ESET.

Table 1 presents a comparative analysis of the effectiveness of three potential cloaking techniques against APEs. To further evaluate these methods against anti-phishing blacklists and to assess their potential risks to Internet users, we have designed an additional experiment to observe the real blacklisting behavior of mainstream browsers against these techniques.

Table 1: The comparison of effectiveness of potential cloaks against APEs.

| Potential Cloaks | GSB | MS SmartScreen | APWG | ESET |
|---|---|---|---|---|
| Virtual Machine Detection | √ | √ | √ | √ |
| Cache-based Mechanism | √ | × | √ | √ |
| Client Hints | √ | × | √ | √ |

Legend: √ = effective (the source marks each such entry as more effective or less effective); × = not effective.

[Figure 4: The overview process of the experiment. Steps: 1. Configure the Phishing Website Per Experiment; 2. Deploy the Phishing Websites on the Cloud; 3. Configure Domains for Phishing Websites; 4. Report the URLs of Phishing Websites to APEs; 5. Monitor Blacklisting on our designed Platform; 6. Disable the Websites & Analyze the Data.]

4 PROPOSED SCHEME

Figure 4 offers an overview of our experimental procedure. Initially, we developed simulated phishing websites incorporating the aforementioned techniques. Twenty such websites, comprising three experimental groups and one control group, were deployed on the cloud, each with an independent domain name and a unique random path to rule out effects from unrelated internet scanning or accidental crawling. Given that no major browser publicly acknowledges using APWG or ESET for its blacklist data, we focused on GSB and MS SmartScreen, whose blacklists extend to dominant browsers such as Safari, Chrome, Firefox, and Edge. This allowed our results to reflect the broad impact of cloaking techniques on internet users.
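The layout of the 20 test sites can be sketched as a small deployment plan: four groups of five sites, each with its own domain and an unguessable random path. This is an illustrative Python sketch, not the authors' deployment code. The `Site` class, the `build_plan` function, and the `example.test` domains are hypothetical placeholders.

```python
import secrets
from dataclasses import dataclass

# Group labels follow the experiments described in this paper.
GROUPS = {
    "A": "baseline (no cloaking)",
    "B": "virtual machine detection",
    "C": "cache-based mechanism",
    "D": "Client Hints",
}
SITES_PER_GROUP = 5


@dataclass(frozen=True)
class Site:
    group: str
    domain: str
    path: str

    @property
    def url(self) -> str:
        return f"https://{self.domain}/{self.path}"


def build_plan() -> list[Site]:
    """Create one Site per (group, index) with a unique domain and random path."""
    plan = []
    for group in GROUPS:
        for index in range(1, SITES_PER_GROUP + 1):
            domain = f"site-{group.lower()}{index}.example.test"  # placeholder domain
            path = secrets.token_urlsafe(16)  # random path that scanners cannot guess
            plan.append(Site(group, domain, path))
    return plan


plan = build_plan()
print(len(plan), "sites planned")
```

Keeping the path random means that any request reaching a site during the experiment can reasonably be attributed to the report submitted to the APEs rather than to background scanning.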

Subsequently, we monitored the blacklisting behavior of desktop browsers on Windows and macOS over a 72-hour period. Because past research reports an average phishing website lifespan of around 21 hours (Oest et al., 2020a), we deemed this timeframe sufficient. After monitoring, we took the simulated websites offline and analysed the collected data.

To automate our process, we engineered a system built chiefly on a hybrid BS/CS (browser/server and client/server) architecture, featuring five modules: Control Node, Monitoring Node, Task Manager, Cloud Server Manager, and Visualized Management Platform (illustrated in Figure 5). This system enables automated deployment of simulated phishing websites, scheduled monitoring of blacklisting behavior, and data visualization. Operating in a distributed fashion, it offers scalability, flexibility, and automated data and resource adjustment.
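The scheduled monitoring performed by such a system can be sketched as a generator of check tasks: for every check time in the 72-hour window, each monitored browser visits each URL. This is a minimal Python sketch under stated assumptions, not the authors' Task Manager. The 30-minute interval is an assumption (the paper does not state the polling interval), and the `schedule` function and sample URLs are hypothetical.

```python
from datetime import datetime, timedelta
from itertools import product

# The six desktop browsers reported in the results.
BROWSERS = [
    ("Windows", "Chrome"), ("Windows", "Firefox"), ("Windows", "Edge"),
    ("macOS", "Chrome"), ("macOS", "Firefox"), ("macOS", "Safari"),
]
WINDOW = timedelta(hours=72)      # monitoring period stated in the paper
INTERVAL = timedelta(minutes=30)  # assumed polling interval


def schedule(urls, start, window=WINDOW, interval=INTERVAL):
    """Yield (check_time, os_name, browser, url) for every planned check."""
    steps = int(window / interval)
    for step in range(steps + 1):  # include both the start and the end of the window
        when = start + step * interval
        for (os_name, browser), url in product(BROWSERS, urls):
            yield when, os_name, browser, url


# Hypothetical URLs for one experiment group of five sites.
urls = [f"https://site-a{i}.example.test/path{i}" for i in range(1, 6)]
tasks = list(schedule(urls, datetime(2023, 1, 1)))
print(len(tasks), "checks planned")
```

Each task would then be handed to a monitoring node, which opens the URL in the named browser and records whether a blacklist warning is shown.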

Figure 5: The modules and workflow of the proposed system. Components labeled in the diagram: Visualized Management Platform (Task Management, Cloud Server Management, Data Visualization); Task Manager; Control Node (node control); Monitoring Node (Windows/macOS; Chrome/Safari/Firefox/Edge; open Firefox, screenshot, close Firefox); OCR Module; Cloud Server Manager; Cloud Server; Simulated Phishing Websites; APEs; Database; Log; Data Analysis.

5 EXPERIMENT SETUP

We conducted an experiment comprising three experimental groups and one control group to evaluate the efficacy of three potential cloaking techniques against anti-phishing blacklists: virtual machine detection, the cache-based mechanism, and the application of Client Hints in HTTP headers. Each experimental group utilized one technique to simulate five identical phishing websites.

Experiment A – Baseline: The websites in Experiment A imitated Facebook’s login page without any cloaking techniques. This group served as the blacklisting effectiveness baseline against which the groups that utilized cloaking techniques were compared.

Experiment B – Virtual Machine Detection: This experiment’s websites used virtual machine detection techniques. Leveraging the limited diversity of the WebGL Renderer noted in the preliminary experiment, we processed previously collected WebGL Renderer data to compile a feature dataset. These features, along with other detection features from the dataset, were integrated into the phishing website using the GoFrame framework. The backend received various data from crawlers through frontend JavaScript code and compared these features with the dataset. Requests initially landed on a benign page, were classified according to whether they matched the dataset, and were accordingly directed to either a benign or a phishing webpage.

Experiment C – Cache-based Mechanism: This group’s websites employed a cache-based mechanism. Based on crawler behavior observed in the preliminary experiment, we implemented two redirects on the websites, with an image on the page. As in the preliminary experiment, we identified requests initiated by the same crawler and logged the image request frequency on the backend. After an initial landing on a benign webpage, the image request count determined the subsequent redirection, either to a benign or to a phishing webpage.

Experiment D – Utilization of Client Hints: Based on preliminary experiment data, we developed a set of rules for Client Hints in HTTP headers and implemented them in the backend of all Experiment D websites. Clients initially accessed a benign landing page and received a server response with an Accept-CH header specifying the client hints required in the HTTP headers. If no match was found with our rule set, the client was redirected to a phishing webpage. Conversely, a match identified the client as a security crawler, which was redirected to a benign webpage to avoid detection.

6 RESULT AND ANALYSIS

For 72 hours, our monitoring platform observed six desktop browsers, each accessing five URLs per experiment, for a total of 30 monitored browser-URL pairs per experiment. Figure 6 reveals that all phishing websites from Experiment A were blacklisted within 12 hours by Chrome and Firefox on Windows, and by Chrome, Firefox, and Safari on macOS. In contrast, three websites from Experiment A remained unblacklisted by Edge on Windows at the end of our observation period. Remarkably, the simulated phishing websites in Experiments B and D, employing virtual machine detection techniques and HTTP Client Hints headers respectively, evaded blacklisting by all browsers throughout the experiment. As depicted in Figure 7, only three websites from Experiment C, utilizing a cache-based mechanism, were blacklisted by Edge on Windows after 7 to 7.5 hours; all others remained unblacklisted.
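The counts reported above can be tallied into a per-experiment blacklisting rate. The sketch below is an illustrative Python calculation that transcribes only the figures stated in this section. The data structure and the `summarize` function are hypothetical, and browsers not mentioned for an experiment are taken to have blacklisted no URLs, as the text states.

```python
URLS_PER_EXPERIMENT = 5
BROWSERS = [
    ("Windows", "Chrome"), ("Windows", "Firefox"), ("Windows", "Edge"),
    ("macOS", "Chrome"), ("macOS", "Firefox"), ("macOS", "Safari"),
]

# Number of URLs blacklisted per browser, as reported in the text.
REPORTED = {
    # All five URLs blacklisted everywhere except Edge, where three of five remained unblacklisted.
    "A": {**{browser: 5 for browser in BROWSERS}, ("Windows", "Edge"): 2},
    "B": {},                          # evaded all browsers
    "C": {("Windows", "Edge"): 3},    # three URLs blacklisted by Edge only
    "D": {},                          # evaded all browsers
}


def summarize(counts):
    """Return (blacklisted, total) browser-URL pairs for one experiment."""
    total = len(BROWSERS) * URLS_PER_EXPERIMENT
    blacklisted = sum(counts.get(browser, 0) for browser in BROWSERS)
    return blacklisted, total


for name, counts in REPORTED.items():
    blacklisted, total = summarize(counts)
    print(f"Experiment {name}: {blacklisted}/{total} blacklisted ({blacklisted / total:.0%})")
```

Under this tally, the baseline reaches 27 of 30 browser-URL pairs, the cache-based group 3 of 30, and the virtual machine detection and Client Hints groups 0 of 30.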

Figure 6: Number of blacklisted websites in all tested browsers over the submission time.

Figure 7: Number of blacklisted websites in all browsers for the Cache-based Mechanism over the submission time.

Our findings suggest that both virtual machine detection techniques and the use of Client Hints in HTTP headers can effectively evade detection from GSB and MS SmartScreen crawlers, thereby avoiding blacklisting and potentially exposing Internet users to risk. Similarly, the cache-based mechanism demonstrated substantial evasion capabilities against GSB crawlers and, to a lesser extent, against MS SmartScreen, posing potential risks to users.

7 CONCLUSION

Anti-phishing blacklists constitute a critical mechanism for safeguarding Internet users from phishing websites and are extensively integrated into a multitude of browsers. This paper explores contemporary cloaking techniques deployed by phishing websites, shedding light on vulnerabilities and potential hazards inherent in current anti-phishing blacklists. We propose three novel cloaking strategies that circumvented detection by mainstream APEs and assess their efficacy using a bespoke framework. This paper also underlines potential strategies for bypassing APE detection. Future studies could delve further into these avenues, investigating real-world application of these cloaking techniques or conducting large-scale evaluations of these methodologies.

REFERENCES

Acharya, B. and Vadrevu, P. (2021). Phishprint: Evading phishing detection crawlers by prior profiling. In USENIX Security Symposium, pages 3775–3792.

Al-Ahmadi, S., Alotaibi, A., and Alsaleh, O. (2022). Pdgan: Phishing detection with generative adversarial networks. IEEE Access, 10:42459–42468. https://doi.org/10.1109/ACCESS.2022.3168235.

Alabdan, R. (2020). Phishing attacks survey: Types, vectors, and technical approaches. Future Internet, 12(10). https://doi.org/10.3390/fi12100168.

Ali, M. M., Chitale, B., Ghasemisharif, M., Kanich, C., Nikiforakis, N., and Polakis, J. (2023). Navigating murky waters: Automated browser feature testing for uncovering tracking vectors. In Proceedings 2023 Network and Distributed System Security Symposium, San Diego, CA, USA. https://doi.org/10.14722/ndss.2023.24072.

APWG (2017). Phishing activity trends report, 4th quarter 2017. https://docs.apwg.org//reports/apwg_trends_report_q4_2017.pdf.

APWG (2022). Phishing activity trends report, 4th quarter 2021. https://docs.apwg.org/reports/apwg_trends_report_q4_2021.pdf.

Bell, S. and Komisarczuk, P. (2020). An analysis of phishing blacklists: Google safe browsing, openphish, and phishtank. In Proceedings of the Australasian Computer Science Week Multiconference, ACSW ’20, New York, NY, USA. Association for Computing Machinery. https://doi.org/10.1145/3373017.3373020.

Freeze, D. (2018). Cybercrime To Cost The World $10.5 Trillion Annually By 2025. https://cybersecurityventures.com/cybercrime-damages-6-trillion-by-2021/.

Gupta, S., Singhal, A., and Kapoor, A. (2016). A literature survey on social engineering attacks: Phishing attack. In 2016 International Conference on Computing, Communication and Automation (ICCCA), pages 537–540. https://doi.org/10.1109/CCAA.2016.7813778.

Han, X., Kheir, N., and Balzarotti, D. (2016). PhishEye: Live Monitoring of Sandboxed Phishing Kits. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, CCS ’16, pages 1402–1413, New York, NY, USA. Association for Computing Machinery. https://dl.acm.org/doi/10.1145/2976749.2978330.

Lin, X., Ilia, P., Solanki, S., and Polakis, J. (2022). Phish in sheep’s clothing: Exploring the authentication pitfalls of browser fingerprinting. In 31st USENIX Security Symposium (USENIX Security 22), pages 1651–1668.

Maroofi, S., Korczyński, M., and Duda, A. (2020). Are you human? resilience of phishing detection to evasion techniques based on human verification. In Proceedings of the ACM Internet Measurement Conference, IMC ’20, pages 78–86, New York, NY, USA. Association for Computing Machinery. https://doi.org/10.1145/3419394.3423632.

Oest, A., Safaei, Y., Doupé, A., Ahn, G.-J., Wardman, B., and Tyers, K. (2019). Phishfarm: A scalable framework for measuring the effectiveness of evasion techniques against browser phishing blacklists. In 2019 IEEE Symposium on Security and Privacy (SP), pages 1344–1361. https://doi.org/10.1109/SP.2019.00049.

Oest, A., Safaei, Y., Zhang, P., Wardman, B., Tyers, K., Shoshitaishvili, Y., Doupé, A., and Ahn, G. (2020a). Phishtime: Continuous longitudinal measurement of the effectiveness of anti-phishing blacklists. In Proceedings of the 29th USENIX Security Symposium, pages 379–396. USENIX Association.

Oest, A., Safaei, Y., Doupé, A., Ahn, G.-J., Wardman, B., and Warner, G. (2018). Inside a phisher’s mind: Understanding the anti-phishing ecosystem through phishing kit analysis. In 2018 APWG Symposium on Electronic Crime Research (eCrime), pages 1–12. https://doi.org/10.1109/ECRIME.2018.8376206.

Oest, A., Zhang, P., Wardman, B., Nunes, E., Burgis, J., Zand, A., Thomas, K., Doupé, A., and Ahn, G. (2020b). Sunrise to sunset: Analyzing the end-to-end life cycle and effectiveness of phishing attacks at scale. In Proceedings of the 29th USENIX Security Symposium, pages 361–377. USENIX Association.

Pujara, P. and Chaudhari, M. (2018). Phishing website detection using machine learning: A review. International Journal of Scientific Research in Computer Science, Engineering and Information Technology, 3(7):395–399.

World Economic Forum (2020). Partnership against Cybercrime. https://www3.weforum.org/docs/WEF_Partnership_against_Cybercrime_report_2020.pdf.

Zhang, P., Oest, A., Cho, H., Sun, Z., Johnson, R., Wardman, B., Sarker, S., Kapravelos, A., Bao, T., Wang, R., Shoshitaishvili, Y., Doupé, A., and Ahn, G.-J. (2021). Crawlphish: Large-scale analysis of client-side cloaking techniques in phishing. In 2021 IEEE Symposium on Security and Privacy (SP), pages 1109–1124. https://doi.org/10.1109/SP40001.2021.00021.
