Evaluating Deep Learning Assisted Automated Aquaculture Net Pens Inspection Using ROV

Waseem Akram, Muhayyuddin Ahmed, Lakmal Seneviratne and Irfan Hussain

Khalifa University Center for Autonomous Robotic Systems (KUCARS), Khalifa University, U.A.E.

Keywords:

Aquaculture, Net Defect Detection, Deep Learning, Marine Vehicle.

Abstract:

In marine aquaculture, inspecting sea cages is an essential activity for managing both the facilities’ environmental impact and the quality of the fish development process. Net damage allows fish to escape from farms into the open sea, which can cause significant financial losses and compromise the surrounding marine ecosystem. Current inspection practice relies on visual inspection by expert divers or Remotely Operated Vehicles (ROVs), which is laborious, time-consuming, and inaccurate, depends heavily on the operator's expertise, and offers poor verifiability. This article presents a robot-based automatic net defect detection system for aquaculture net pens, designed for on-ROV processing and real-time detection. The proposed system takes the video stream from the ROV's onboard camera, applies a deep learning detector, and segments the defective parts of the image from the background under different underwater conditions. The system was first tested on a set of collected images for comparison with state-of-the-art approaches and then on ROV inspection sequences to evaluate its effectiveness in real-world scenarios. Results show that our approach achieves high accuracy even in adverse scenarios and is suitable for real-time processing on embedded platforms.

1 INTRODUCTION

Marine aquaculture has become an essential part of meeting the growing demand for high-quality protein while also preserving the ocean environment (Gui et al., 2019). There are various types of marine aquaculture facilities, such as deep-sea cage farming, raft farming, deep-sea platform farming, and net enclosure farming (Yan et al., 2018). Advances in engineering and construction have significantly improved the wave resistance of aquaculture facilities such as cages and net enclosures (Zhou et al., 2018). However, the netting used in these facilities is a crucial component that is easily damaged, and the damage is difficult to detect; it leads to the escape of cultured fish and causes substantial economic losses every year. The difficulty of detecting net damage is currently impeding the development of marine aquaculture facilities (Wei et al., 2020).

At present, the standard practice for identifying damage in sea cages is human visual inspection, carried out either directly by professional divers or remotely by observing video feeds captured with Remotely Operated Vehicles (ROVs) (Wei et al., 2020), as shown in Figure 1. However, relying on professional divers for inspections can be expensive and time-consuming and poses safety risks. Furthermore, it provides low coverage, verifiability, and repeatability (Akram et al., 2022). In contrast, analyzing images captured by ROVs equipped with different types of cameras eliminates these safety risks and makes it possible to implement computer-assisted damage detection procedures through computer vision techniques. Recent work has shown the use of deep learning approaches (Sun et al., 2020; Yang et al., 2021) such as Mask R-CNN (He et al., 2017), Fast R-CNN (Girshick, 2015), YOLO (Jocher et al., 2022), and SSD (Liu et al., 2016) for automatic aquaculture net damage detection.

Figure 1: Automatic aquaculture inspection using ROV: (a) an ROV during aquaculture net pen inspection, (b) an example of a hole in the net, (c) an example of plastic entangled on the net, and (d) an example of vegetation attached to the net. Courtesy of (Roboflow, 2022).

Akram, W., Ahmed, M., Seneviratne, L. and Hussain, I.

Evaluating Deep Learning Assisted Automated Aquaculture Net Pens Inspection Using ROV.

DOI: 10.5220/0012160900003543

In Proceedings of the 20th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2023) - Volume 1, pages 586-591

ISBN: 978-989-758-670-5; ISSN: 2184-2809

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

Various sensor modalities, such as cameras or acoustic sensors, are used for automatic inspection of net cage structural integrity. Among computer vision-based approaches, Zhang et al. (Zhang et al., 2022) used Mask R-CNN (He et al., 2017) for net hole detection in a laboratory setup, and their experimental results showed an Average Precision of 94.48%. Similarly, Liao et al. (Liao et al., 2022) proposed a MobileNet-SSD network model for hole detection in open-sea fish cages, in which net hole detection is performed by fusing MobileNet with the SSD network model; the results showed an average precision of 88.5%. Tao et al. (Tao et al., 2018) applied deep learning to detect net holes using the YOLOv1 (You Only Look Once) algorithm on images captured under controlled lab conditions. In contrast, Madshaven et al. (Madshaven et al., 2022) employed a combination of classical computer vision and image processing techniques for tracking, alongside neural networks for segmenting the net structure and classifying scene content, including the detection of irregularities caused by fish or seaweed. Qiu et al. (Qiu et al., 2020) used image enhancement methods for net structure analysis and marine growth segmentation.

Previous work on aquaculture inspection and monitoring has mostly focused on the hole detection problem. However, apart from net holes, other serious problems can damage the net structure. For example, vegetation can grow on and attach to the net pens and cause fouling, which reduces water flow and oxygenation; fouling can also damage the net, which increases the risk of fish escaping. In addition, plastic waste can entangle and harm fish, reduce water flow and oxygenation, and leach harmful chemicals into the water. To mitigate the negative effects of net holes, vegetation, and plastic waste on aquaculture net pens, it is important to implement proper net defect detection methods for regular inspection and monitoring, so that these net abnormalities are found before they accumulate and cause damage.

1.1 Contributions

This paper introduces a deep learning method coupled with an ROV that focuses on detecting irregularities in aquaculture net pens, which is a critical task within the full inspection process. The proposed method employs a deep learning-based detector to identify areas of the net structure where potential net defects such as plants, holes, and plastic exist. We have evaluated different variants of the YOLO deep learning model for identifying net defects in real time using the ROV. The proposed approach leverages traditional computer vision and image processing methods and works under realistic lighting conditions. The method has been tested in a laboratory setup using a 10 m × 10 m fishing net placed in a pool.

2 PROPOSED METHOD

Our proposed method for identifying net damage combines image and video processing techniques in a single workflow. The approach aims to identify and track net defects such as vegetation, holes, and plastic on the aqua-net. To achieve this, a Blueye Pro ROV X equipped with an HD camera is used to conduct a controlled inspection campaign in the Marine Pool at Khalifa University, UAE. The camera is kept at a fixed distance from the area of interest throughout the inspection, although this distance can be adjusted as needed. The 10 m × 10 m net is placed in the pool with vegetation and plastic attached to it, and there are net holes at different locations on the net surface. The net is spread along the side of the pool, and the ROV inspects the net at a constant speed to record the experimental data.

The ROV, shown in Figure 2, has six thrusters and is equipped with a Doppler Velocity Log (DVL), an Inertial Measurement Unit (IMU), and a camera. It can operate in saltwater, brackish water, or freshwater for up to 2 hours on a single charge and can descend to a depth of 300 meters when tethered by a 400-meter cable. Its forward-facing camera records full-HD video at 25-30 frames per second (fps), providing high-quality video streams of the surrounding area. The captured video feed is then used as input for the processing pipeline.

Figure 2: The Blueye Pro ROV X deployed for aqua-net inspection.

The captured video has a resolution of 1920 x 1080 pixels and a frame rate of 30 frames per second. We extracted a total of 510 images by capturing one frame every five seconds from the video. For training, we used 90% of the images in the dataset, while the remainder was allocated for testing.
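The frame sampling and 90/10 split described above can be sketched in plain Python. This is a minimal illustration under stated assumptions: the helper names `sample_frame_indices` and `split_dataset` are hypothetical, the split is assumed to be a random shuffle with a fixed seed (the paper does not say how images were assigned to each set), and the frames themselves would in practice be read with a video library such as OpenCV's `cv2.VideoCapture`.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def sample_frame_indices(total_frames: int, fps: float = 30.0,
                         interval_s: float = 5.0) -> List[int]:
    """Indices of the frames kept when sampling one frame every `interval_s` seconds."""
    step = max(1, round(fps * interval_s))  # 30 fps * 5 s -> every 150th frame
    return list(range(0, total_frames, step))


def split_dataset(items: Sequence[T], train_percent: int = 90,
                  seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle reproducibly and split into (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # assumption: random split with a fixed seed
    n_train = len(shuffled) * train_percent // 100  # integer maths avoids float rounding
    return shuffled[:n_train], shuffled[n_train:]


if __name__ == "__main__":
    # 76,500 frames at 30 fps is 42.5 minutes of video, which yields 510 samples.
    frames = sample_frame_indices(76_500)
    train, test = split_dataset(frames)
    print(len(frames), len(train), len(test))  # 510 459 51
```

At 30 fps, one frame every 5 s means keeping every 150th frame, so 510 samples correspond to roughly 42.5 minutes (510 × 5 s) of footage, and a 90/10 split gives 459 training and 51 test images.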

To validate the method and demonstrate its effectiveness, the ROV is used to perform real-time detection of aqua-net defects. To this end, we deploy the ROV in the pool, with its initial position parallel to the net at a constant distance. The ROV communicates with the surface computer via a tether that carries the video transmission, and its motion is controlled manually through a remote controller. The live camera stream is received on the surface computer and passed to the detector module, which displays each detection together with its bounding box.
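A minimal sketch of the surface-side loop described above, assuming a hypothetical `detect` callable that wraps the trained detector and returns (class name, confidence, box) tuples for each frame. The sketch also counts frames whose detection time exceeds the per-frame budget of a 30 fps stream (about 33 ms), which is one simple way to check whether processing keeps up in real time; drawing the boxes is left out because it depends on the imaging library used.

```python
import time
from typing import Callable, Iterable, List, Tuple

Box = Tuple[float, float, float, float]   # (x1, y1, x2, y2) in pixels
Detection = Tuple[str, float, Box]        # (class name, confidence, box)


def run_detection_loop(frames: Iterable[object],
                       detect: Callable[[object], List[Detection]],
                       fps: float = 30.0) -> Tuple[List[List[Detection]], int]:
    """Run `detect` on each incoming frame and count frames that miss the real-time budget."""
    budget_s = 1.0 / fps                  # ~0.033 s per frame at 30 fps
    all_detections: List[List[Detection]] = []
    late_frames = 0
    for frame in frames:
        start = time.perf_counter()
        detections = detect(frame)        # hypothetical wrapper around the trained model
        elapsed = time.perf_counter() - start
        if elapsed > budget_s:
            late_frames += 1              # detector was too slow for this frame
        all_detections.append(detections)
    return all_detections, late_frames


if __name__ == "__main__":
    # Stand-in detector that reports one "hole" box on every frame.
    fake_detect = lambda frame: [("hole", 0.9, (10.0, 10.0, 50.0, 50.0))]
    dets, late = run_detection_loop(range(5), fake_detect)
    print(len(dets), late)
```

Keeping the timing inside the loop makes it easy to see whether a given YOLO variant and GPU can sustain the camera's frame rate before moving to tests with the ROV.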

2.1 Detection Approaches

Deep learning models have proven to be highly effective at detecting and precisely localizing regions of interest within an input image, and numerous state-of-the-art models for this task have been introduced in the research literature. In our study, we tested and evaluated several widely used detection models, namely YOLOv4 (Bochkovskiy et al., 2020), YOLOv5 (Jocher et al., 2022), YOLOv7 (Wang et al., 2023), and YOLOv8 (Jocher et al., 2023), to identify and localize vegetation, plastic, and holes as part of the Aqua-Net detection method.

YOLOv4 is an enhanced iteration of YOLOv3 that surpasses it in terms of mean average precision (mAP) and frames per second (FPS). It is a one-stage object detector comprising three key components: backbone, neck, and head. The backbone, CSPDarknet53, is a pre-trained Convolutional Neural Network (CNN) with 53 convolutional layers whose principal role is to extract features and generate feature maps from the input images. The neck, which links the backbone to the head, incorporates spatial pyramid pooling (SPP) and a path aggregation network (PAN). Lastly, the head processes the aggregated features and predicts bounding boxes and class labels.
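The spatial pyramid pooling (SPP) step in the YOLOv4 neck can be illustrated on a single-channel feature map in plain Python. This is a simplified sketch, not the Darknet implementation: it assumes stride-1 max pooling with "same" padding and kernel sizes 5, 9, and 13 (values commonly used in YOLOv4-style SPP blocks, treated here as an assumption), and it returns the input map together with the pooled maps, which a real network would concatenate along the channel axis.

```python
from typing import List, Sequence

FeatureMap = List[List[float]]


def max_pool_same(fmap: Sequence[Sequence[float]], k: int) -> FeatureMap:
    """Stride-1 k x k max pooling; windows are clipped at the border, so the output keeps the input size."""
    r = k // 2
    h, w = len(fmap), len(fmap[0])
    return [
        [
            max(
                fmap[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def spp(fmap: Sequence[Sequence[float]],
        kernels: Sequence[int] = (5, 9, 13)) -> List[FeatureMap]:
    """Return [input, pool_5, pool_9, pool_13]: same-size maps with growing receptive fields."""
    return [[list(row) for row in fmap]] + [max_pool_same(fmap, k) for k in kernels]


if __name__ == "__main__":
    fmap = [[0.0] * 5 for _ in range(5)]
    fmap[2][2] = 1.0                      # a single strong activation in the centre
    pooled = spp(fmap)
    print(len(pooled), pooled[1][0][0])   # 4 1.0
```

Because every pooled map keeps the input's spatial size, the outputs can be stacked as extra channels; larger kernels summarize wider context around each cell, which is what lets the neck combine local and near-global information.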

The architecture of YOLOv5 is based on a backbone network, typically a CNN, which extracts features from the input image. These features are then passed through several additional layers, including convolutional, upsampling, and fusion layers, to generate high-resolution feature maps. The model further uses anchor boxes, which are predefined boxes of various sizes and aspect ratios, to predict bounding boxes for objects: YOLOv5 predicts the coordinates of bounding boxes relative to the grid cells and refines them with respect to the anchor boxes. Training YOLOv5 requires a large labeled dataset with bounding box annotations for the objects of interest, and the model is trained using backpropagation and gradient descent to optimize the network parameters and improve detection performance. YOLOv5 offers different model sizes (e.g., YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x) that vary in depth and computational complexity. These variants provide a trade-off between speed and accuracy, allowing users to choose the model that suits their specific requirements.
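The grid-cell and anchor-box decoding mentioned above can be written out for a single prediction. The sketch follows the parameterization used in the Ultralytics YOLOv5 detection head as far as we know it (center offset 2σ(t) − 0.5 relative to the cell, size (2σ(t))² times the anchor); treat the exact formula, the helper name `decode_yolov5_box`, and the example anchor size and stride as assumptions rather than a reference implementation.

```python
import math
from typing import Tuple


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def decode_yolov5_box(tx: float, ty: float, tw: float, th: float,
                      cx: int, cy: int, stride: int,
                      anchor_w: float, anchor_h: float) -> Tuple[float, float, float, float]:
    """Convert raw outputs (tx, ty, tw, th) of grid cell (cx, cy) into (x_center, y_center, w, h) in pixels.

    anchor_w and anchor_h are the anchor size in pixels for this detection layer.
    """
    x_c = (2.0 * sigmoid(tx) - 0.5 + cx) * stride   # center can move -0.5..1.5 cells
    y_c = (2.0 * sigmoid(ty) - 0.5 + cy) * stride
    w = anchor_w * (2.0 * sigmoid(tw)) ** 2         # width is 0..4x the anchor width
    h = anchor_h * (2.0 * sigmoid(th)) ** 2
    return x_c, y_c, w, h


if __name__ == "__main__":
    # Zero logits: box centered in cell (10, 5) of a stride-16 layer, same size as the anchor.
    print(decode_yolov5_box(0, 0, 0, 0, cx=10, cy=5, stride=16, anchor_w=30, anchor_h=61))
    # (168.0, 88.0, 30.0, 61.0)
```

Bounding both the center offset and the size multiplier keeps each prediction close to its grid cell and anchor, which is the sense in which the anchors "refine" the predicted boxes.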

YOLOv7s is a compact variant of YOLOv7, which improves on YOLOv6 in terms of mean average precision (mAP), detection speed, and inference performance. YOLOv7 introduces an extension of the Efficient Layer Aggregation Network (ELAN) called Extended ELAN (E-ELAN). E-ELAN uses expand, shuffle, and merge-cardinality operations to enhance the network's learning capability without disrupting the original gradient path, improving performance while preserving efficiency. In addition, YOLOv7 emphasizes trainable "bag-of-freebies" methods and optimization modules. In E-ELAN, group convolutions expand the channels and cardinality of the computational blocks so that they extract more diverse features. The feature maps computed by each block are shuffled into g groups according to a group parameter g and concatenated; finally, the g groups of feature maps are added together to merge the cardinality.
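The shuffle-and-merge idea can be illustrated on channel lists alone. The sketch below is a simplified, assumed reading of the E-ELAN description: each computational block produces an expanded output of g × C channels (the grouping parameter is written `g` here), which is split into g groups of C channels; group j collects the j-th chunk of every block and concatenates them, and the g groups are then added element-wise to merge cardinality. Function names are hypothetical, group convolutions are omitted, and each channel is reduced to a single number instead of an H × W map.

```python
from typing import List, Sequence

Channels = List[float]  # one scalar per channel stands in for a full H x W map


def split_into_groups(block_out: Sequence[float], g: int) -> List[Channels]:
    """Split one block's expanded output (g * C channels) into g groups of C channels."""
    if len(block_out) % g:
        raise ValueError("channel count must be divisible by g")
    c = len(block_out) // g
    return [list(block_out[i * c:(i + 1) * c]) for i in range(g)]


def shuffle_and_concat(block_outputs: Sequence[Sequence[float]], g: int) -> List[Channels]:
    """Group j concatenates the j-th chunk of every block's output."""
    per_block = [split_into_groups(out, g) for out in block_outputs]
    return [sum((groups[j] for groups in per_block), []) for j in range(g)]


def merge_cardinality(groups: Sequence[Sequence[float]]) -> Channels:
    """Add the g groups element-wise; the result has the channel count of the unexpanded design."""
    return [sum(vals) for vals in zip(*groups)]


if __name__ == "__main__":
    blocks = [[1, 2, 3, 4], [5, 6, 7, 8]]   # two blocks, g = 2, C = 2
    groups = shuffle_and_concat(blocks, g=2)
    print(groups)                           # [[1, 2, 5, 6], [3, 4, 7, 8]]
    print(merge_cardinality(groups))        # [4, 6, 12, 14]
```

Each shuffled group has as many channels as the concatenation in a plain ELAN block, so the element-wise sum returns to that width; this is how E-ELAN can widen the computational blocks without changing the transition layers.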

ICINCO 2023 - 20th International Conference on Informatics in Control, Automation and Robotics

The YOLOv8n model, the nano edition of the YOLOv8 family, stands out for its compact size, high speed, and improved detection capabilities. It is particularly well suited to object detection and classification tasks and, combined with instance segmentation and object tracking, represents the state of the art (SOTA) in a variety of computer vision applications. The model is anchor-free: instead of estimating the offset from a predefined anchor box, it directly predicts the center point of an object in an image. During YOLOv8 training, image augmentation is applied at each epoch using mosaic augmentation, in which four images are stitched together into a mosaic so that the model learns from new spatial arrangements. One notable change in the YOLOv8 architecture is the replacement of the C3 module with the C2f module: in C2f, the outputs of all Bottleneck layers are concatenated, whereas the previous C3 module used only the output of the last Bottleneck layer. Additionally, the initial 6x6 convolutional layer in the backbone is replaced with a 3x3 convolutional block.
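The mosaic step can be sketched as pure bounding-box arithmetic. This simplified version assumes four images already resized to the same size `s` × `s`, a 2`s` × 2`s` canvas, and a mosaic center (`xc`, `yc`) chosen by the caller (a real pipeline would typically draw it at random). Each image is placed so that one of its corners touches the center, its boxes are shifted and clipped to the canvas, and boxes left with zero area are dropped. Pixel copying and the final crop or resize of an actual training pipeline are omitted, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def mosaic_offsets(s: int, xc: int, yc: int) -> List[Tuple[int, int]]:
    """Top-left corner of each s x s tile: top-left, top-right, bottom-left, bottom-right."""
    return [(xc - s, yc - s), (xc, yc - s), (xc - s, yc), (xc, yc)]


def mosaic_boxes(boxes_per_image: Sequence[Sequence[Box]], s: int,
                 xc: int, yc: int) -> List[Box]:
    """Shift each image's boxes onto the 2s x 2s canvas, clip them, and drop empty ones."""
    size = 2 * s
    out: List[Box] = []
    for (dx, dy), boxes in zip(mosaic_offsets(s, xc, yc), boxes_per_image):
        for x1, y1, x2, y2 in boxes:
            nx1 = min(max(x1 + dx, 0), size)
            ny1 = min(max(y1 + dy, 0), size)
            nx2 = min(max(x2 + dx, 0), size)
            ny2 = min(max(y2 + dy, 0), size)
            if nx2 > nx1 and ny2 > ny1:   # keep only boxes still visible on the canvas
                out.append((nx1, ny1, nx2, ny2))
    return out


if __name__ == "__main__":
    boxes = [[(0, 0, 10, 10)], [(10, 10, 20, 20)], [], [(60, 60, 90, 90)]]
    # The last box falls entirely outside the 200 x 200 canvas and is dropped.
    print(mosaic_boxes(boxes, s=100, xc=150, yc=150))  # [(50, 50, 60, 60), (160, 60, 170, 70)]
```

Because the center moves from sample to sample, the same defect appears at different positions and partially cropped, which is the "new spatial arrangements" effect described above.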

3 IMPLEMENTATION AND EXPERIMENTAL SETUP

To perform aquaculture net defect detection, including vegetation, plastic, and net holes, the system is tested in real time using the ROV. The experimental environment runs on a Core i9-10940 processor at 3.30 GHz with 128 GB of RAM and a single NVIDIA Quadro RTX 6000 GPU with the CUDA toolkit. We used Python 3.8.10 with PyTorch 1.13.1 (CUDA 11.7). The model was trained for 300 epochs, with each epoch consisting of 512 iterations. The collected images, with a resolution of 1920 x 1080 pixels, were annotated with the LabelImg annotation tool to create TXT annotation files in YOLO format, and the ratio of the training set to the testing set was set to 9:1.
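A YOLO-format TXT annotation stores one line per object: a class index followed by the box center, width, and height, each normalized by the image size. A minimal conversion sketch from a pixel-space box (as drawn in an annotation tool) for the 1920 x 1080 frames, assuming a hypothetical class order of 0 = plant, 1 = hole, 2 = plastic:

```python
from typing import Tuple

CLASS_IDS = {"plant": 0, "hole": 1, "plastic": 2}  # hypothetical class order


def to_yolo_line(label: str, box: Tuple[float, float, float, float],
                 img_w: int = 1920, img_h: int = 1080) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) into a YOLO TXT annotation line."""
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2.0 / img_w   # normalized box center
    y_c = (y_min + y_max) / 2.0 / img_h
    w = (x_max - x_min) / img_w           # normalized box size
    h = (y_max - y_min) / img_h
    return f"{CLASS_IDS[label]} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # A 192 x 108 px hole centered in the frame.
    print(to_yolo_line("hole", (864, 486, 1056, 594)))  # 1 0.500000 0.500000 0.100000 0.100000
```

Normalized coordinates make the labels independent of the input resolution, so the same annotation files remain valid when the images are resized for training.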

4 RESULTS AND DISCUSSIONS

The best-trained model was analyzed and deployed with the ROV for real-time detection, which helps the user detect aqua-net defects within aquaculture in a timely manner. The experimental results of YOLOv4, YOLOv5, YOLOv7, and YOLOv8 are shown in Table 1. YOLOv5 achieves the highest mAP (0.9950), with YOLOv8 close behind (0.9946). YOLOv4 achieves the highest precision (0.9998, i.e., nearly 100%) and the highest F1 score (0.9990), while YOLOv8 achieves the highest recall (0.9998). All models achieve F1 scores above 0.92, although YOLOv7 shows lower detection performance than the other YOLO variants on every metric.

Table 1: Performance of YOLO detectors.

| Detector | mAP | Precision | Recall | F1 score |
|---|---|---|---|---|
| YOLOv4 | 0.9901 | 0.9998 | 0.9980 | 0.9990 |
| YOLOv5 | 0.9950 | 0.9895 | 0.9901 | 0.9922 |
| YOLOv7 | 0.9656 | 0.9698 | 0.9006 | 0.9290 |
| YOLOv8 | 0.9946 | 0.9766 | 0.9998 | 0.9881 |
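The F1 score reported in Table 1 is the harmonic mean of precision P and recall R, F1 = 2PR / (P + R). A short check in Python (the function name is illustrative) recovers the YOLOv8 entry from its precision and recall:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # YOLOv8 row of Table 1: P = 0.9766, R = 0.9998
    print(round(f1_score(0.9766, 0.9998), 4))  # 0.9881
```

Applied to the other rows, the same formula gives about 0.9989 for YOLOv4, 0.9898 for YOLOv5, and 0.9339 for YOLOv7, so the tabulated F1 values for YOLOv5 (0.9922) and YOLOv7 (0.9290) do not follow directly from the listed precision and recall.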

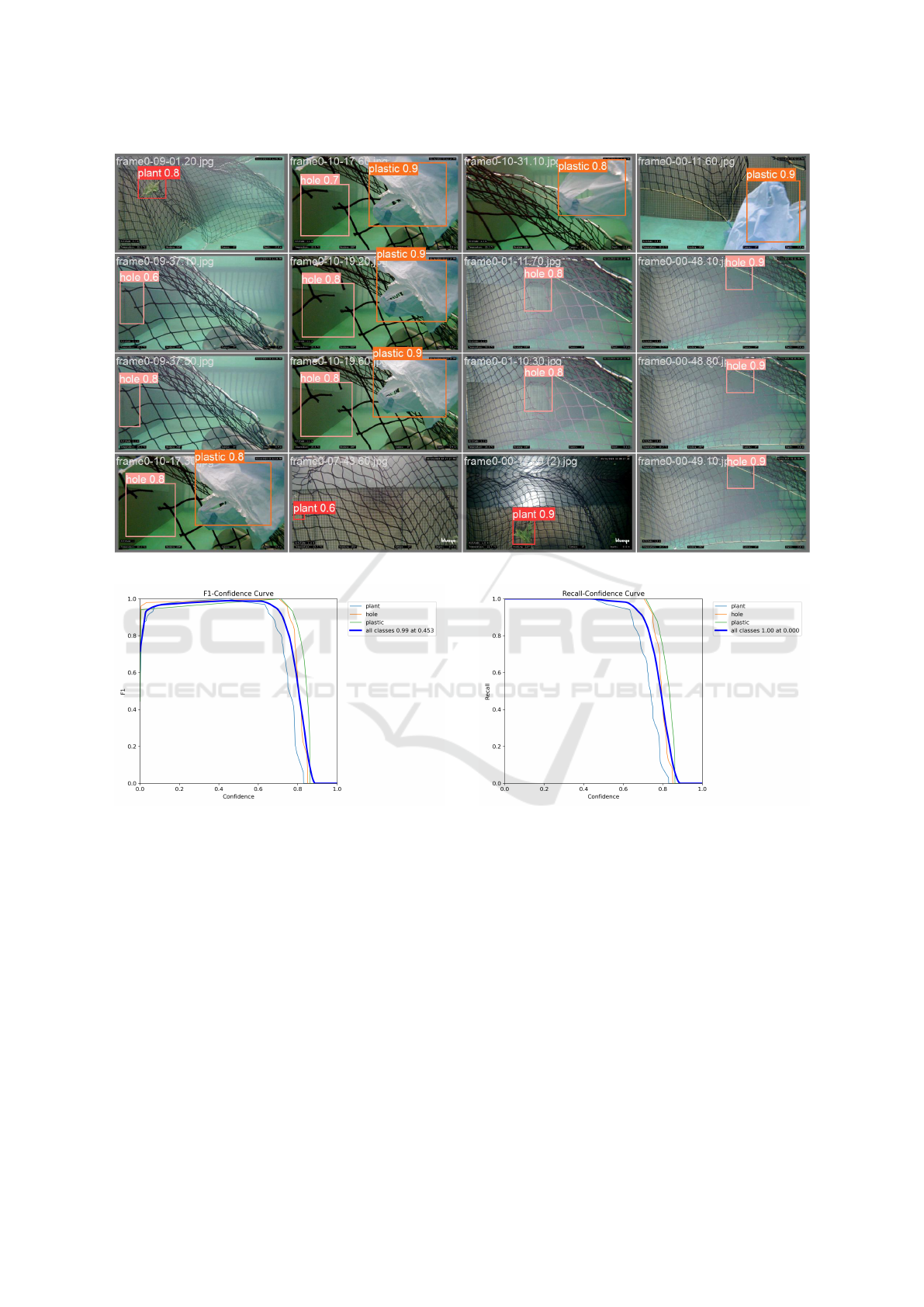

Figure 3 shows visualizations of the aqua-net detection results, i.e., the detected plant, hole, and plastic defects after training the YOLOv5 model. The model was applied to unseen real-time images in the pool setup to check its feasibility after training on the collected custom dataset. The confidence threshold was set to 0.3, which means that a detection is assigned to the relevant class if its confidence score is greater than or equal to 30%. In some cases, the confidence scores of the detections are somewhat lower; this variation is due to the differing inputs encountered in real time. Moreover, the model is able to detect net defects of different sizes, as can be seen in Figure 3.
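The confidence threshold amounts to a simple filter on the detector output. A minimal sketch, assuming detections are (class name, confidence, box) tuples; the function name is hypothetical, and the comparison is inclusive to match the "greater than or equal to 0.3" rule:

```python
from typing import List, Sequence, Tuple

Detection = Tuple[str, float, Tuple[float, float, float, float]]


def filter_by_confidence(detections: Sequence[Detection],
                         threshold: float = 0.3) -> List[Detection]:
    """Keep detections whose confidence is at least `threshold`."""
    return [d for d in detections if d[1] >= threshold]


if __name__ == "__main__":
    raw = [
        ("hole", 0.82, (100, 120, 180, 200)),
        ("plastic", 0.30, (400, 50, 520, 160)),   # exactly at the threshold: kept
        ("plant", 0.12, (700, 300, 760, 380)),    # below the threshold: discarded
    ]
    print([name for name, _, _ in filter_by_confidence(raw)])  # ['hole', 'plastic']
```

A low threshold such as 0.3 keeps more uncertain detections, trading some false alarms for fewer missed defects; raising it has the opposite effect.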

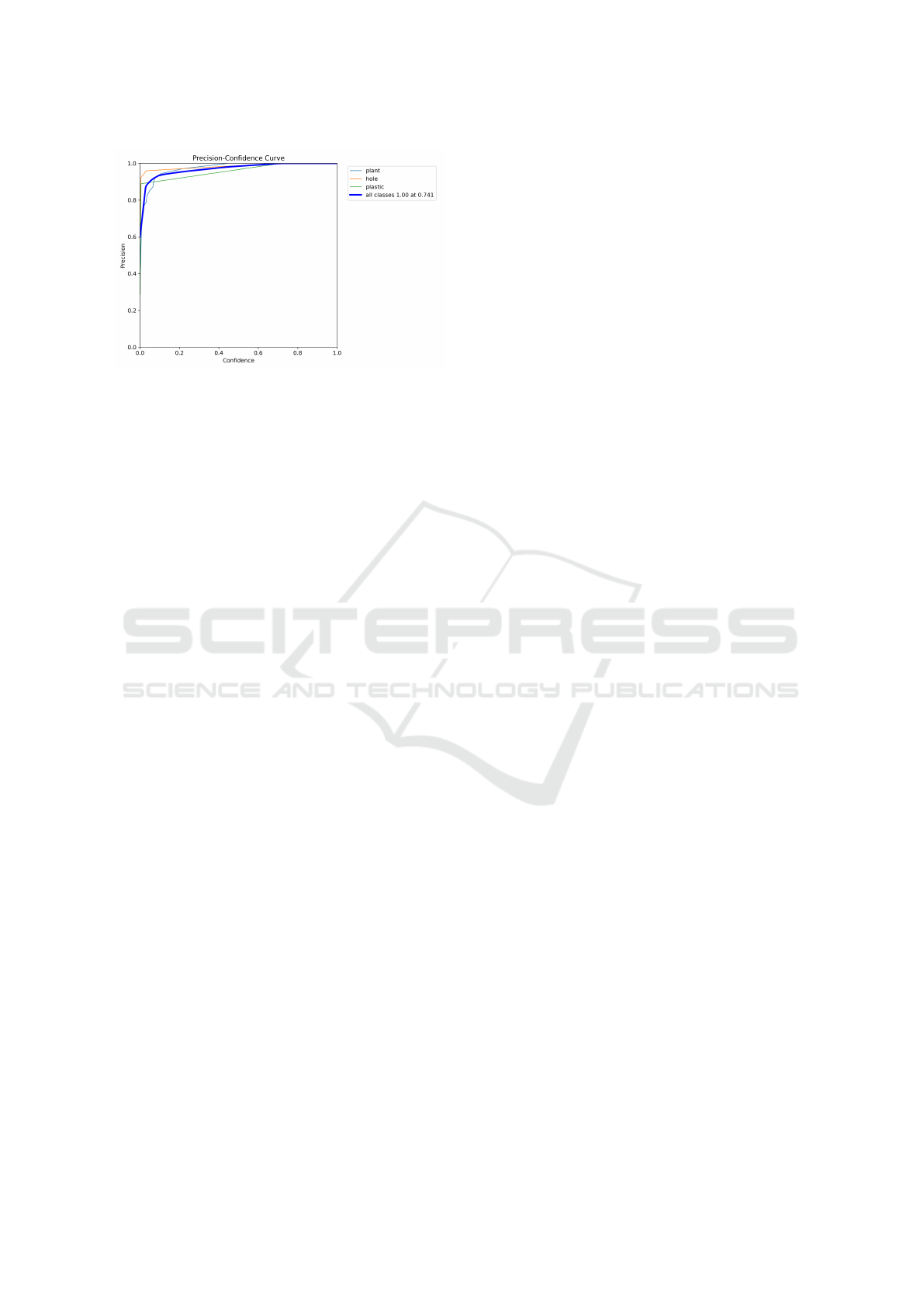

The performance of the YOLOv5 detection model is further shown in Figures 4, 5, and 6 in terms of the F1, recall, and precision curves. In Figure 4, among the three classes (plant, hole, and plastic), the hole class scores highest at the start of the curve, while the plastic class scores highest toward its end; the plant class achieves lower scores than the others. Nevertheless, the F1 scores of the three classes do not differ significantly from each other. The recall curve in Figure 5 shows better detection performance for the plastic and hole classes. The precision curve in Figure 6 likewise shows good detection performance for all three defect classes, and precision reaches 100% once the confidence threshold reaches 0.741. These results indicate the effectiveness of the YOLOv5 detector for aqua-net defect detection.
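Precision and recall curves of this kind are obtained by sweeping the confidence threshold and recomputing both metrics at each value. A minimal sketch for one class, assuming each detection has already been matched against the ground truth (for example with an IoU test) and labeled as a true or false positive; the function name, the data, and the convention that precision is 1 when no detection survives are illustrative assumptions:

```python
from typing import List, Sequence, Tuple


def pr_at_thresholds(scored: Sequence[Tuple[float, bool]], n_ground_truth: int,
                     thresholds: Sequence[float]) -> List[Tuple[float, float, float]]:
    """Return (threshold, precision, recall) for each threshold.

    scored: (confidence, is_true_positive) for every detection of one class.
    n_ground_truth: number of annotated objects of that class.
    """
    curve = []
    for t in thresholds:
        kept = [tp for conf, tp in scored if conf >= t]
        tp_count = sum(kept)
        precision = tp_count / len(kept) if kept else 1.0  # convention: nothing kept -> 1.0
        recall = tp_count / n_ground_truth if n_ground_truth else 0.0
        curve.append((t, precision, recall))
    return curve


if __name__ == "__main__":
    detections = [(0.95, True), (0.80, True), (0.60, False), (0.40, True), (0.20, False)]
    for point in pr_at_thresholds(detections, n_ground_truth=4, thresholds=[0.1, 0.5, 0.9]):
        print(point)
```

Raising the threshold discards low-confidence false positives first, so precision rises toward 1 while recall falls; this is why precision reaches 100% only above a certain confidence value.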

Figure 3: Example of successful detection of aqua-net defects using YOLOv5.

Figure 4: Performance evaluation of the model through the F1 curve.

To further evaluate the detection performance of our approach, we considered the studies by Zhang et al. (Zhang et al., 2022) and Liao et al. (Liao et al., 2022). Zhang et al. introduced a computer vision method that uses Mask R-CNN (He et al., 2017) to address the net hole detection problem in a controlled laboratory environment, and their experiments yielded an Average Precision of 94.48%. Liao et al. proposed a MobileNet-SSD network model for detecting holes in fish cages in open-sea environments, combining MobileNet with the SSD network model, and reported an average precision of 88.5%. Our study employed YOLOv4, YOLOv5, YOLOv7, and YOLOv8 to detect aqua-net defects; as shown in Table 1, YOLOv4, YOLOv5, and YOLOv8 scored above 0.97 on every metric (mAP, precision, recall, and F1 score), and YOLOv7 scored above 0.90.

5 CONCLUSIONS

In this paper, we applied YOLO-based aqua-net defect detection models to detect plants, holes, and plastic in an aquaculture environment. The study used datasets acquired from a laboratory setup in which an ROV was employed to gather data and to test and validate the proposed approach. Deep learning models based on the YOLO architecture were trained and evaluated to detect various defects in aqua-nets. The results show that all evaluated YOLO variants detect aqua-net defects with high accuracy in real time, making them suitable for real-time inspection tasks in aquaculture net pens.

Figure 5: Performance evaluation of the model through the recall curve.


Figure 6: Performance evaluation of the model through the precision curve.

ACKNOWLEDGEMENTS

This publication is based upon work supported by the Khalifa University of Science and Technology under Award No. CIRA-2021-085, FSU-2021-019, RC1-2018-KUCARS.

REFERENCES

Akram, W., Casavola, A., Kapetanović, N., and Mišković, N. (2022). A visual servoing scheme for autonomous aquaculture net pens inspection using ROV. Sensors, 22(9):3525.

Bochkovskiy, A., Wang, C.-Y., and Liao, H.-Y. M. (2020). YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv preprint arXiv:2004.10934.

Girshick, R. (2015). Fast R-CNN. In IEEE International Conference on Computer Vision (ICCV).

Gui, F., Zhu, H., and Feng, D. (2019). Research progress on hydrodynamic characteristics of marine aquaculture netting. Fish. Mod., 46:9–14.

He, K., Gkioxari, G., Dollár, P., and Girshick, R. (2017). Mask R-CNN. In IEEE International Conference on Computer Vision (ICCV).

Jocher, G., Chaurasia, A., and Qiu, J. (2023). YOLO by Ultralytics.

Jocher, G., Chaurasia, A., Stoken, A., Borovec, J., NanoCode012, Kwon, Y., Michael, K., TaoXie, Fang, J., imyhxy, Lorna, Yifu, Z., Wong, C., V, A., Montes, D., Wang, Z., Fati, C., Nadar, J., Laughing, UnglvKitDe, Sonck, V., tkianai, yxNONG, Skalski, P., Hogan, A., Nair, D., Strobel, M., and Jain, M. (2022). ultralytics/yolov5: v7.0 - YOLOv5 SOTA Realtime Instance Segmentation.

Liao, W., Zhang, S., Wu, Y., An, D., and Wei, Y. (2022). Research on intelligent damage detection of far-sea cage based on machine vision and deep learning. Aquacultural Engineering, 96:102219.

Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C.-Y., and Berg, A. C. (2016). SSD: Single Shot MultiBox Detector. In European Conference on Computer Vision (ECCV).

Madshaven, A., Schellewald, C., and Stahl, A. (2022). Hole detection in aquaculture net cages from video footage. In Fourteenth International Conference on Machine Vision (ICMV 2021), volume 12084, pages 258–267. SPIE.

Qiu, W., Pakrashi, V., and Ghosh, B. (2020). Fishing net health state estimation using underwater imaging. Journal of Marine Science and Engineering, 8(9):707.

Roboflow (2022). Net Defect Detection Dataset-v1. Retrieved: December 14th, 2022.

Sun, M., Yang, X., and Xie, Y. (2020). Deep learning in aquaculture: A review. J. Comput., 31(1):294–319.

Tao, Q., Huang, K., Qin, C., Guo, B., Lam, R., and Zhang, F. (2018). Omnidirectional surface vehicle for fish cage inspection. In OCEANS 2018 MTS/IEEE Charleston, pages 1–6. IEEE.

Wang, C.-Y., Bochkovskiy, A., and Liao, H.-Y. M. (2023). YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7464–7475.

Wei, Y., Wei, Q., and An, D. (2020). Intelligent monitoring and control technologies of open sea cage culture: A review. Computers and Electronics in Agriculture, 169:105119.

Yan, G., Ni, X., and Mo, J. (2018). Research status and development tendency of deep sea aquaculture equipments: a review. Journal of Dalian Ocean University, 33(1):123–129.

Yang, X., Zhang, S., Liu, J., Gao, Q., Dong, S., and Zhou, C. (2021). Deep learning for smart fish farming: applications, opportunities and challenges. Reviews in Aquaculture, 13(1):66–90.

Zhang, Z., Gui, F., Qu, X., and Feng, D. (2022). Netting damage detection for marine aquaculture facilities based on improved Mask R-CNN. Journal of Marine Science and Engineering, 10(7):996.

Zhou, W., Shi, J., Yu, W., Liu, F., Song, W., Gui, F., and Yang, F. (2018). Current situation and development trend of marine seine culture in China. Fish. Inf. Strategy, 33:259–266.
