Locally Convex Neural Lyapunov Functions and Region of Attraction

Maximization for Stability of Nonlinear Systems

Lucas Hugo

1

, Philippe Feyel

2

and David Saussi´e

1

a

1

Robotics and Autonomous Systems Laboratory, Polytechnique Montr´eal, Montr´eal, Qu´ebec H3T1J4, Canada

2

Safran Electronics & Defense Canada, Montr´eal, Canada

Keywords:

Lyapunov Functions, Stability, Neural Network Optimization, Convexity, Region of Attraction.

Abstract:

The Lyapunov principle involves to find a positive Lyapunov function wi th a local minimum at the equilibrium

point, whose time derivative is negative with a local maximum at that point. As a validation, it is usual to

check the sign of the Hessian eigenvalues which can be complex: it requires to know a formal expression of

the system dynamics, and especially a differentiable one. In order to circumvent this, we propose in this paper

a scheme allowing to validate these functions without computing the Hessian. Two methods are proposed

to force the convexity of the function near the equilibrium; one uses a neural single network to model the

Lyapunov function, the other uses an additional one to approximate its time derivative. The training process

is designed to maximize the region of attraction of the locally convex neural Lyapunov function trained. The

use of examples allows us to validate t he efficiency of this approach, by comparing it with the Hessian-based

approach.

1 INTRODUCTION

Lyapunov theory is a commonly used tool in control

theory to analyse stability of dynamical systems. A

Lyapunov function can be used to de te rmine the equi-

librium stability of nonlinear systems, as well as to

estimate the Region of Attraction (RoA) or establish

a stabilizing c ontrol (Mawhin, 2005; Khalil, 2001).

This type of potential function keeps track of the en-

ergy dissipated by a system. In addition to modelling

physical en ergy, a Lyapunov function can also rep-

resent abstract quantities provided it fulfills the fol-

lowing p roperties: (1) it must be a positive definite

function locally, (2) it must have continuous partial

derivatives, and (3) its time derivative a long any state

trajectory must be negative semi- definite.

There are many ways of finding a Lyapunov func-

tion in the literature, each one of th em with its draw-

backs and a dvantages (Giesl and Hafstein, 2015), but

recently optimization methods based on neural net-

works training have increased significantly. Indeed,

the ability of neural networks to represent a func-

tion has been well established since the ea rly 90s

(Cybenko, 1989; Hornik et al., 1989; Hornik , 1991;

Liang and Srikant, 2017). Neural approximations

a

https://orcid.org/0000-0002-4228-1109

were first used to determine Lyapunov candidates for

nonlinear autonomous systems in (Prokhorov, 1994)

and various metho ds have since been proposed to

guide network training.

(Petridis and Petridis, 2006) develop an Hessian-

based approa c h to ensure a strict validation of

Lyapunov properties near an equilibrium and

(Bocquillon et al., 2022) extend it to prove asymp-

totic, exponential or ISS stability of continuou s or

discrete time systems usin g a genetic algorithm. The

network structure in these works is restricted to a

single hidden layer network, and they require to know

a differentiable expression of the system d ynamics.

(Richards et al., 2018) propose a training framework

based on an iterative gradient-d escent to estimate the

largest possible RoA fo r general nonlinear dynamica l

systems with structural properties on activation

functions and weighting matr ic e s. To completely

avoid structural limitations o n the trained network,

(Chang et al., 2020) repea ts multiple gradient-based

optimization pro blems to identify states that vio-

late Lya punov conditions and (Ga by et al., 2022)

propose a versatile neural ne twork architecture

called Lyapunov-Net that ensure positive defin iteness

of the Lyapunov candida te while simplifying the

optimization problem formulation. Nevertheless a

concession is made in terms of training efficiency

Hugo, L., Feyel, P. and Saussié, D.

Locally Convex Neural Lyapunov Functions and Region of Attraction Maximization for Stability of Nonlinear Systems.

DOI: 10.5220/0012180300003543

In Proceedings of the 20th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2023) - Volume 1, pages 29-36

ISBN: 978-989-758-670-5; ISSN: 2184-2809

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

29

as the c ost functions used needs to converge to 0 so

that the Lyapunov candidate obtained strictly validate

Lyapunov properties, especially near the equilibrium.

To circumvent this issue (Chang and Gao, 2021)

introdu ce the c oncept of Almost Lyapunov functions,

theoretically relevant but difficult to validate in

practice.

To provide a tool with the sam e validity guarantee

as the Hessian-b ased approach, but app lica ble to gen-

eral non-linear systems that are not necessarily dif-

ferentiable, we propose two methods to train locally

convex neural Lyapunov functions with the flexibil-

ity of an optimization-based approach. The fra me-

work is designed so tha t time der ivative function local

concavity is guided by the cost function while loca l

convexity of the Lyapunov function is stru cturally en-

forced, allowing a great liberty of network structure,

while maximizing the RoA.

This pa per is structured as follows. Preliminaries

related to Lyapunov theory are introduc e d in section

2. In section 3, we present the two methods to train

locally convex neural Lyapunov function, one uses a

single network to model the Lyapunov function, the

other uses an additional network to approx imate its

time derivative. Finally, we illustrate in section 4 the

efficiency of our approaches with examples, by com-

paring it with the Hessian-based approach.

2 PRELIMINARIES

Notations and definitions used in this paper are intro-

duced in this section. Let R denote the set of real

numbers, ||.|| denote the eu clidean norm on R

n

,

F

the

disjoint union between sets, and X ⊂ R

n

, a set c on-

taining x = 0.

This pa per deals with following autonom ous sys-

tems:

˙

x = f(x) (1)

where f : X −→ R

n

is a locally Lipschitz map with

at least one equilibrium point x

e

, that is f(x

e

) = 0.

Throu ghout this pap er, the equilibrium point is com-

monly set at the origin, x

e

= 0 without loss of gener-

ality.

Theorem 2.1 (Lyapunov Theory). (Khalil, 2001)

Let V : X −→R be a continuously differentiable func-

tion,

V (x

e

) = 0 and V (x) > 0 in X −{x

e

} (2)

˙

V (x) ≤ 0 in X (3)

then, x

e

= 0 is stable. Moreover, if

˙

V (x) < 0 in X −{x

e

} (4)

then, x

e

= 0 is asymptotically stable.

For some c > 0, the surface V (x) = c is called a

level set of V, and the (possibly conservative) sub-

set Ω

c

= {x ∈ X|V (x) ≤ c} ⊂ X ⊂ R

n

is an estimate

of the Region of Attraction (RoA). The system will

converge to 0 from every initial point x

0

belonging to

Ω

c

.

A neural network Lyapunov func tion takes any

state vector o f the system as an input, an d gives a

scalar value at output. In this paper, th e parameter

vector for a Lyapunov function candidate is noted as

θ

θ

θ, the candida te itself is noted as V

θ

θ

θ

, and its time

derivative function is noted as

˙

V

θ

θ

θ

.

3 TRAINING PROCESS

We describe how to train a locally convex neura l Lya-

punov function, so that the Lyapunov conditions can

be verified to ensure that the equilibrium point of the

system (1) is lo c ally asymptotically stable.

3.1 Single Network Approach

To define the structure of th e function to be trained,

we use the Lyap unov-Net formulation prop osed in

(Gaby et al., 2 022). The neural network used is noted

φ

φ

φ

θ

θ

θ

, its output size is m ∈ N

∗

, and we fix:

V

θ

θ

θ

(x) = ||φ

φ

φ

θ

θ

θ

(x) −φ

φ

φ

θ

θ

θ

(0)||

2

+ α log(1 + ||x||

2

) (5)

where the para meter α > 0 can be fixed by the

user. The neural network φ

φ

φ

θ

θ

θ

can be arbitrarily con-

structed as discussed in (Gaby et al., 2022). Any

other function γ : R

m

−→ R

+

can be used to replace

the term ||φ

φ

φ

θ

θ

θ

(x) −φ

φ

φ

θ

θ

θ

(0)||

2

by γ(φ

φ

φ

θ

θ

θ

(x) −φ

φ

φ

θ

θ

θ

(0)), as

long as γ(0) = 0 and γ is locally convex twice differ-

entiable at 0.

Proposition. The function defined in (5) is locally

convex in 0 if network activation functions are twice

differentiable in 0.

Proof. It is sufficient to prove the positivity of the

Hessian at 0. We have:

∇V

θ

θ

θ

(x) = 2 (φ

φ

φ

θ

θ

θ

(x) −φ

φ

φ

θ

θ

θ

(0))

⊤

∇φ

φ

φ

θ

θ

θ

(x) +

2αx

⊤

1 + ||x||

2

∇

2

V

θ

θ

θ

(x) = 2

(∇φ

φ

φ

θ

θ

θ

(x))

⊤

∇φ

φ

φ

θ

θ

θ

(x)

+ (φ

φ

φ

θ

θ

θ

(x) −φ

φ

φ

θ

θ

θ

(0))

⊤

⊗∇

2

φ

φ

φ

θ

θ

θ

(x)

+

2αI

n

1 + ||x||

2

−

4αxx

⊤

(1 + ||x||

2

)

2

ICINCO 2023 - 20th International Conference on Informatics in Control, Automation and Robotics

30

with ⊗ a tensor contraction. Assuming x = 0, we

have:

∇

2

V

θ

θ

θ

(0) = 2 (∇φ

φ

φ

θ

θ

θ

(0))

⊤

∇φ

φ

φ

θ

θ

θ

(0) + 2αI

n

≻ 0

Regardless of the activation functions used and the

network arch itecture in φ

φ

φ

θ

θ

θ

, the Hessian of V

θ

θ

θ

is pos-

itive definite for any α > 0. In other words, V

θ

θ

θ

is

convex near equilibrium when differentiable activa-

tion func tions are used.

This proof can be extended to any γ function in-

troduced previously.

The cost function used for the training contains th ree

different terms:

L

θ

θ

θ

= c

1

L

1

+ c

2

L

2

+ c

3

L

3

(6)

with c

1

, c

2

, c

3

> 0 user-fixed hyperparame te rs once

and for all.

The first term L

1

is used to force

˙

V

θ

θ

θ

to be strictly

negative in the largest possible set within the domain

X. It is similar to the formulation c ommonly p roposed

in literature about neural Lyapunov functions:

L

1

=

1

N

1

∑

i∈X

max(0,

˙

V

θ

θ

θ

(x

i

)) (7)

where X = {1 ≤ i ≤ N

1

, x

i

∈ X} is a subset of state

vectors picked in X and N

1

= |X |.

This formulation benefits samples with large am-

plitudes rathe r than tho se close to the equilibrium.

Since we may n ot know the true RoA of the system

before training, some of the term

˙

V

θ

θ

θ

(x

i

) may prevent

convergence to 0, so the term L

1

is not necessarily

sufficient to have a good accuracy of the result near

the equilibr ium.

To ensure

˙

V

θ

θ

θ

to be strictly negative near 0, the term

L

2

is in troduced. It consists of a Manhattan distance

between

˙

V

θ

θ

θ

and a negative definite locally concave

function h : R

n

−→ R

−

:

L

2

=

1

N

1

∑

i∈X

˙

V

θ

θ

θ

(x

i

) −h(x

i

)

(8)

In this paper, the function h chosen is:

h(x) = −β log(1 + ||x||

2

) (9)

where the parameter β > 0 can be fixed by the user.

Any other function can be used, as long as it is null at

0, negative definite near 0 and loca lly concave. How-

ever log(1 + ||x||

2

) ≈ ||x||

2

as x −→0 and g rows very

slowly as ||x|| increases, w hich makes it really suit-

able for the task.

For a candidate V

θ

θ

θ

estimated for all x

i

∈ X , one

can comp ute an approximate level set of V

θ

θ

θ

:

˜c = min

i∈X

V

θ

θ

θ

(x

i

) s.t.

˙

V

θ

θ

θ

(x

i

) > 0 (10)

If any of the x

i

verify the c ondition on the time deriva-

tive, we take ˜c = ∞.

Finally, in order to find the largest RoA estimate

at the end of the training, we add the term L

3

defined

as follow:

L

3

= 1 −

N

2

N

1

(11)

where N

2

= |A| and A = {x

i

∈ X , V (x

i

) < ˜c}. (11)

tends towards 0 the more points are included in the

RoA estimate.

The best case is obtained when the system con-

sidered is stable for all x ∈ X, i.e. the cost function

L

θ

θ

θ

(6) can converge to 0. Generally speaking, we ar-

range the terms of the cost functions using constant

terms c

1

, c

2

, c

3

. As L

1

(7) is the on ly term that de-

pends on the system dynamics through

˙

V

θ

θ

θ

, while L

3

(11) is of interest only if th e other two ter ms impact

the training enoug h to exploit an estimate of the RoA ,

we usually set c

1

> c

2

> c

3

during training. We can

then get rid of c

1

as an hyperparameter by fixing it to

1 and then fix c

2

, c

3

such that c

3

< c

2

< 1.

Any optimization process ca n be used for training

as lo ng as it can minimize L

θ

θ

θ

. The pseudocode of an

algorithm iteration is provided in Algorithm 1.

Algorithm 1: Single Network Approach Iteration.

1: Input: network φ

φ

φ

θ

θ

θ

, dynamical system f , param-

eters α, β, h yperparameters c

1

, c

2

, c

3

, set X ⊂ X

2: Co mpute candidate s V

θ

θ

θ

(X ) and

˙

V

θ

θ

θ

(X )

3: Co mpute approximate level set ˜c (10)

4: Co mpute cost fu nction L

θ

θ

θ

(6)

5: Update weigh ts of φ

φ

φ

θ

θ

θ

to minimize L

θ

θ

θ

6: Return: Updated ne twork φ

φ

φ

θ

θ

θ

In section 4, we describe a gradien t descent train-

ing algorithm 3 imple mented in Matlab to train the

network, however, other algorithms can be picked to

find the optimal vector of par ameters θ

θ

θ.

3.2 Two-Network Approach

In order to make the method indepen dent of the

choice of h (9) and thus give ourselves every chance

of maximizing the RoA, we propose to use a sec-

ondary neural network as an optimal function h to ap-

proxim ate the Lyapunov candidate time d erivative.

We write θ

θ

θ

1

the parame te r vector and φ

φ

φ

θ

θ

θ

1

the neu-

ral network of the Lyapun ov candidate V

θ

θ

θ

1

. The pa-

rameter vector of the second network is noted θ

θ

θ

2

, this

network is then no te d φ

φ

φ

θ

θ

θ

2

, and the time derivative ap-

proxim ator is noted H

θ

θ

θ

2

. Th e structure of this approx-

imator is negative d e finite by design:

H

θ

θ

θ

2

(x) = −||φ

φ

φ

θ

θ

θ

2

(x) −φ

φ

φ

θ

θ

θ

2

(0)||

2

−β log(1 + ||x||

2

)

(12)

Locally Convex Neural Lyapunov Functions and Region of Attraction Maximization for Stability of Nonlinear Systems

31

where the parameter β > 0 is a once and for all user-

fixed parameter. H

θ

θ

θ

2

is locally concave near the e qui-

librium if the activation functions used for the net-

work φ

φ

φ

θ

θ

θ

2

are differentiable, as demonstrated in sub-

section 3.1. β is deliberately noted in the same way

as in (9), as we fix a unique value for it in order to

consider H

θ

θ

θ

2

as an op timal estimator of h.

The cost term L

2

(8) is then replaced by L

2

′

:

L

2

′

=

1

N

2

∑

i∈X

˙

V

θ

θ

θ

1

(x

i

) −H

θ

θ

θ

2

(x

i

)

(13)

The cost func tion used for the training is noted L

Θ

Θ

Θ

with Θ

Θ

Θ = [θ

θ

θ

1

;θ

θ

θ

2

]. It is equal to:

L

Θ

Θ

Θ

= c

1

L

1

+ c

2

L

2

′

+ c

3

L

3

(14)

The pseudocode of an algorithm iteration is pro-

vided in Algorithm 2.

Algorithm 2: Two-Network Approach I teration.

1: Input: networks φ

φ

φ

θ

θ

θ

1

,φ

φ

φ

θ

θ

θ

2

, dynamical system f ,

parameters α, β, hyperparame te rs c

1

, c

2

, c

3

, set

X ⊂ X

2: Compute candidates V

θ

θ

θ

1

(X ),

˙

V

θ

θ

θ

1

(X ) and H

θ

θ

θ

2

(X )

3: Compute approximate level set ˜c (10)

4: Compute cost function L

Θ

Θ

Θ

(14)

5: Update w eights of φ

φ

φ

θ

θ

θ

1

and φ

φ

φ

θ

θ

θ

2

to minimize L

Θ

Θ

Θ

6: Return: Updated networks φ

φ

φ

θ

θ

θ

1

,φ

φ

φ

θ

θ

θ

2

Although this formulation has some obvious

shortcomings, inc luding the increase in parameters

involved, and thus a longer computation time, these

parameters are not necessarily prohibitive if a suitable

Lyapunov function is to be found for the system under

consideration.

4 EXAMPLES

In the following section, we demon stra te that the pro-

posed meth ods are efficient through n umerical exper-

iments.

4.1 Training Method

The neural network function is trained using Matlab

Automatic Differentiation and the standard ADAM

network training algorithm (Kingma and Ba, 2017),

from the R2 023A Matlab Deep Lear ning Toolbox.

The documentation for the

adamupdate

Matlab func-

tion is available at (Matlab, 2019).

In order to compute a grad ie nt that can effec-

tively influe nce the training d irection, an equiva lence

of N

2

= |A| directly related to θ

θ

θ (resp. θ

θ

θ

1

) is used in

(11). It is noted

˜

N

2

and is equal to:

˜

N

2

=

∑

i∈X

max(0, σ( ˜c −V

θ

θ

θ

(x

i

))) (15)

with σ the sigmoid function.

In g eneral, the number of neurons per layer is de-

termined by the dimension of the system, the num-

ber of hidden layers is first set at 1, th e n increased if

necessary, and the networks parameters are bounde d

between −1 and 1. Lyapunov candidates are judged

accordin g to th e size of their largest RoA estimate Ω

c

as defined in section 2.

The pseudoco de of the algorithm proposed is pro-

vided in Algorithm 3. In ord er to have sufficient tr ain-

ing data, we use a grid of initial con ditions X

0

⊂ X.

For each x

0

in X

0

, the system studie d is simulated

with Simu link, and the trajectory is collected into X.

We define n

E

as the number of epochs and n

I

as the

number of iterations per epoc h. Since there is no

guaran tee that the cost function will converge, we fix

the total numbe r of iterations N

I

= n

E

×n

I

. For each

epoch the table X is shuffled and n

I

is fixed so that:

X :=

n

I

G

j=1

X

b, j

(16)

where X

b, j

are training data ba tc hes of size N

1

.

At ea ch iteration, update is driven by the cost

function gradien t:

• for the single network approach we compute

∇

θ

θ

θ

L

θ

θ

θ

to update φ

φ

φ

θ

θ

θ

;

• for the two-network approach we compute ∇

θ

θ

θ

1

L

Θ

Θ

Θ

and ∇

θ

θ

θ

2

L

Θ

Θ

Θ

to update φ

φ

φ

θ

θ

θ

1

and φ

φ

φ

θ

θ

θ

2

respectively.

4.2 Parameter Setting

In all the exam ples, a learning rate o f 0.01 is cho-

sen for the training of the network modeling V

θ

θ

θ

. The

learning rate of the second neural network is fixed to

0.02 when used to match

˙

V

θ

θ

θ

quickly, especially dur-

ing the first iterations, as empirically ob served during

the algorith m imple mentation.

Each network has the same structure with tanh ac-

tivation func tions and a single hid den layer. The hid-

den layer neurons number is fix to 4n and the out-

put dimension is fix to 2n as this allows a suitable

optimization time and sufficiently significant results

for the study of the examp le s considered. For a two-

dimensional system, i.e. n = 2, we then have a total

of 60 pa rameters to train by network.

As c

1

is commonly fixed to 1, hy perparameters

c

2

, c

3

can be fixed by trial and error and their common

values are c

2

= 0.1 and c

3

= 0.01. Settings α and β

ICINCO 2023 - 20th International Conference on Informatics in Control, Automation and Robotics

32

Algorithm 3: Network Training Implementation.

1: Input: dynamical system f , parameters α, β, hy-

perpara meters c

1

, c

2

, c

3

, initial set X

0

of sampled

states in X, number of epochs n

E

, numbe r of iter-

ations per epoch n

I

, batch size N

1

2: Initialize φ

φ

φ

θ

θ

θ

(resp. φ

φ

φ

θ

θ

θ

1

,φ

φ

φ

θ

θ

θ

2

)

3: for all x

0

∈ X

0

do

4: Simulate the trajectory in itialized at x

0

5: Update data table X with measures x(t)

6: end for

7: for i = 0, 1, ..., n

E

do

8: Shuffle X

9: for j = 0, 1, ..., n

I

do

10: Get a batch X

b, j

from X of size N

1

11: Compute algorithm 1 o r 2 with X = X

b, j

12: end for

13: end for

14: Return: φ

φ

φ

θ

θ

θ

(resp. φ

φ

φ

θ

θ

θ

1

,φ

φ

φ

θ

θ

θ

2

)

are also fixed by trial and error and then kept constant

for each of the systems consid e red. Table 1 lists the

selected values.

Table 1: (α, β) setting.

Benchmarks Example 1 Example 2

α 0.1 0.1

β 0.1 1

Table 2 includes inf ormation about the data set

used for tra ining and the fixed number of iteration.

Table 2: |X

0

|—|X

0

|—|X

0

| is the number of initial condi-

tions considered, |X

0

|—X—|X

0

| is the number of training

data obtained with Simulink. |X

0

|N

I

—X

0

| is the number of

training iterations, and |X

0

|N

1

—X

0

| is the batch si ze fixed

for each of the examples considered.

Benchmarks Example 1 Example 2

|X

0

| 1681 961

|X| 9.9 ×10

5

4.7 ×10

5

N

I

270 270

N

1

1 ×10

5

5 ×10

4

4.3 Experiments

The two systems considered aims to illustrate the abil-

ity of our methods to treat general nonlin ear systems.

The first example is a differentiable two-

dimensional nonlinear sy stem. We com pare

our results with anoth e r training method based

on the Hessian-based approach described in

(Bocquillon et al., 2022) and, to properly com-

pare methods, the cost term L

3

(11) is adde d to the

training framework suggested:

Evolution of the Training Process. Let Q and

λ

be as defined in (Bocquillon et al., 2 022). The cost

function Q is rewritten as follow:

Q = λ + L

3

(17)

The best c ase is obtained when the system considered

is stable for all x ∈ X, i.e. Q < 0 after trainin g. Gen-

erally speakin g, the Lyapunov candidate obtained is

valid if

λ < 0 at the end of the training.

The second example is a 2d -system defined to demon-

strate briefly the ability of our methods to find a suit-

able Lyapunov function even if the system is not dif-

ferentiable.

Both examples are defined so that a large part of

their domain of d efinition is unstable. Ou r goal is to

test the ability of our methods to find a suitable Lya-

punov function even if part of the data c onsidered dur-

ing training are part of unstable trajectories.

Example 1.

(

˙x

1

= −x

1

+ x

2

+

1

2

(e

x

1

−1)

˙x

2

= −x

1

−x

2

+ x

1

x

2

+ x

1

cosx

1

(18)

The domain considered is X = [−6;6]

2

and 0 is a sta-

ble equilibr ium.

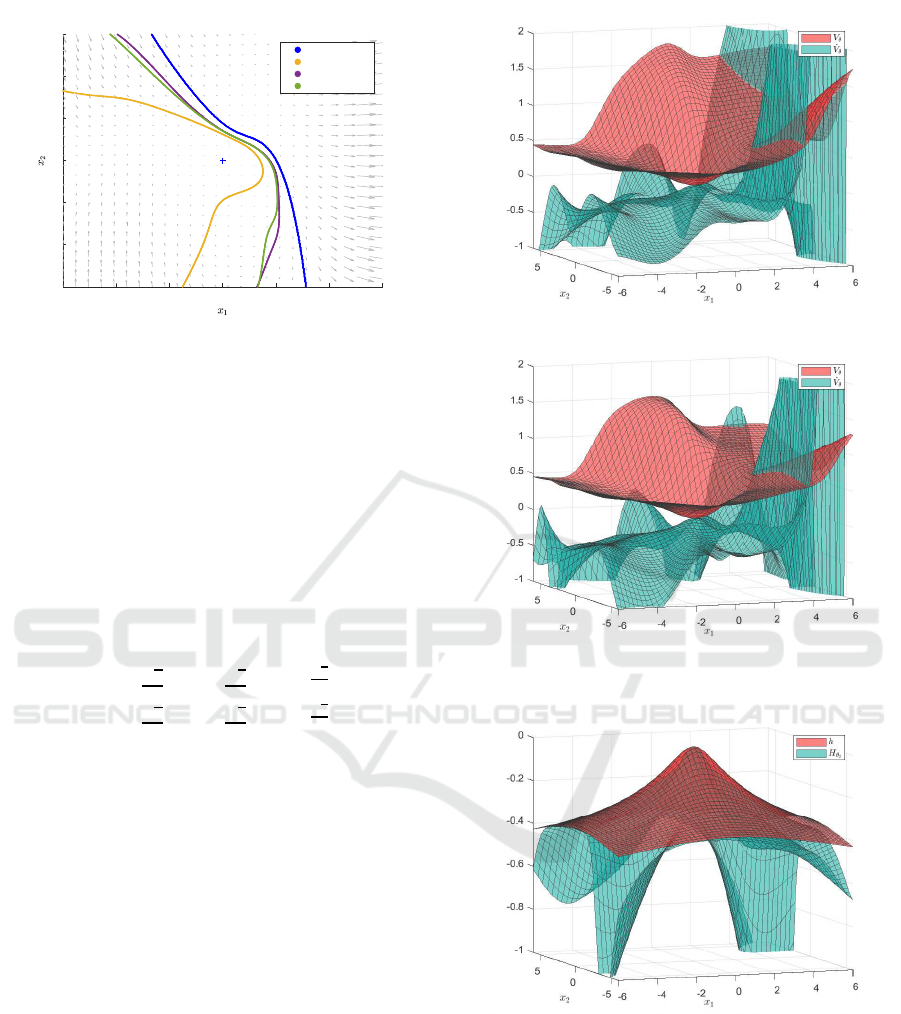

In Figure 1, Regions of Attraction (RoA) of

Lyapunov functions obtained by the single network

method a nd the two-network method, as long as

Hessian-based approach, are compared. The regions

obtained are not included in the display window,

which is limited to the size of X

0

, as tra je ctories con-

sidered during training may leave the window before

converging to 0. In view of this result, our training

methods can be considered efficient, since they al-

low us to find Lyapunov functions with regions of at-

traction close to the true region of attraction. As we

have not precisely optimized the parameter settings,

we can hope that with an hyperparameter optimiza-

tion method we can obtain an even more accura te es-

timate of the tru e Ro A.

Lyapunov functions obtained for th e single-

network method and the two-network meth od, and

their time derivative functions, are respectively d is-

played in Figures 2a and 2b. As can be seen, 0 is a

local minimum for V

θ

θ

θ

, and a local maximum for

˙

V

θ

θ

θ

,

on the points grid considered for display.

In order to compare single network and two-

network approachs, Figure 3 shows functions h (10)

and H

θ

θ

θ

2

(12). As described in section 3, the second

is defined by training, whereas the fir st is not. As we

obtained a similar RoA for the two methods, it is dif-

ficult to conclude he re abou t a possible advantage of

Locally Convex Neural Lyapunov Functions and Region of Attraction Maximization for Stability of Nonlinear Systems

33

-6 -4 -2 0 2 4 6

-6

-4

-2

0

2

4

6

Phase plan

True RoA

Hessian

One Network

Two Networks

Figure 1: RoA comparison - Example 1.

using the two-network approach instead of the single

one. However, we can emphasize the supposed ad-

vantage of using it, since H

θ

θ

θ

2

is visually closer to

˙

V

θ

θ

θ

1

in Figure 2b than h to

˙

V

θ

θ

θ

in Figure 2a. Final values of

cost terms L

2

(8) and L

′

2

(13), estimate d for all x ∈ X,

provide a num e rical overview of the difference be -

tween the two approaches. We have L

2

= 7.7 ×10

−4

and L

′

2

= 4.4 ×10

−3

, which confirm the visual o bser-

vation. The performance measurement study detailed

in section 4.4 would allow us to conclude.

Example 2.

˙x

1

= −

x

1

−

√

2

2

x

1

−

√

2

2

+ e

x

2

−

√

2

2

−1

˙x

2

= −

x

2

−

√

2

2

x

2

−

√

2

2

+ e

x

1

−

√

2

2

−1

(19)

The domain con sidered is X = [−4 ; 4]

2

and x

0

=

[−7.4.10

−3

;−7.4.10

−3

] is a stable equilibrium.

In Figure 4, Regions of Attraction (RoA) of

Lyapunov functions obtained by the single network

method and the two-network method are compared.

The second method seems to be more efficient but we

will see in section 4.4 if it comes from a better random

seed at initialization or a more efficient approach.

Lyapunov functions obtained for th e single-

network method and the two-network method, and

their time derivative functions, are respectively dis-

played in Figures 5a and 5b, and the same co nclusion

as before can be made about their values at the equi-

librium.

Figure 6 shows functions h (10) and H

θ

θ

θ

2

(12), and

we apply the same argumentation as for example 1.

H

θ

θ

θ

2

is visually closer to

˙

V

θ

θ

θ

1

in Figure 5b than h to

˙

V

θ

θ

θ

in Figure 5a. For all x ∈X, we have L

2

= 0.1531 and

L

′

2

= 0.0478, which confirm the visual observation.

We rely on section 4.4 to compare the effectiveness

of the two approache s.

(a) Single Network Approach.

(b) Double Network Approach.

Figure 2: Lyapunov functions comparison - Example 1.

Figure 3: Estimator comparison - Example 1.

4.4 Performance Measurement

Due to the stochastic na ture of our methods, we sub-

ject each system to a series of 50 succ e ssive runs

using the same training data set and different seeds

in o rder to test wheth er their r e sults are parameter-

dependent.

We list the minimum value t

min

, the average value

t

mean

and the standard d eviation t

std

of the tra ining

ICINCO 2023 - 20th International Conference on Informatics in Control, Automation and Robotics

34

-4 -3 -2 -1 0 1 2 3 4

-4

-3

-2

-1

0

1

2

3

4

Phase plan

True RoA

One Network

Two Networks

Figure 4: RoA comparison - Example 2.

(a) Single Network Approach.

(b) Double Network Approach.

Figure 5: Lyapunov functions comparison - Example 2.

time obtained, the minimum va lue L

min

, the average

value L

mean

and the standard d eviation L

std

of the fi-

nal cost fu nction obtained, as long as the maximum

value D

max

, the average value D

mean

and the standa rd

deviation D

std

of the size of the RoA obtained as a

percentage compared to the size of the initial set X

0

in Table 3 for the single network method. The same

results are presented in Table 4 for the two-network

Figure 6: Estimator comparison - Example 2.

Table 3: Single Network Approach Performance Measure-

ment.

Benchmarks Example 1 Example 2

t

min

(s) 21.59 9.49

t

mean

(s) 22.35 10.77

t

std

(s) 1.34 2.24

L

min

6.1 ×10

−3

2.64 ×10

−2

L

mean

6.6 ×10

−3

3.05 ×10

−2

L

std

6.8 ×10

−4

1.9 ×10

−3

D

max

(%) 82.7 71.6

D

mean

(%) 19.2 32.4

D

std

(%) 13.35 21.9

method. The results presented in section 4.3 corre-

spond to the D

max

values.

The difference in execution times between the two

examples can be linked to the number of training data

items considered, as shown in Table 2. As the nu mber

of iterations is equally fixed for each run, it is consis-

tent to obtain low standart deviations for L and t.

The evolution of D

mean

between the two ap-

proach e s is significant, with an incr ease of 5.5% for

example 1 and 11.9% for example 2, which tends to

validate the advantages of the two-network ap proach.

If the differentiability of the system considered is un-

known or uncertain, the second method is therefore

recommended. Since this approach requires twice the

parameters to be train ed, the average calculation time

is, as expected, the shortcoming.

5 CONCLUSIONS

In conclu sio n, this paper addresses th e challenge

of valid ating Lyapunov proper ties w ithout explicitly

computing the H e ssian eigenvalues and proposes two

approa c hes to so. By app lying them to examples,

the paper demonstrates the effectiveness of the pro-

cess in validating the Lyapunov principle. A c ompar-

Locally Convex Neural Lyapunov Functions and Region of Attraction Maximization for Stability of Nonlinear Systems

35

Table 4: Two-Network Approach Perf ormance Measure-

ment.

Benchmarks Example 1 Example 2

t

min

(s) 32.43 16.77

t

mean

(s) 33.90 18.33

t

std

(s) 2.33 1.74

L

min

5.4 ×10

−3

2.53 ×10

−2

L

mean

5.8 ×10

−3

2.91 ×10

−2

L

std

3.6 ×10

−4

2.1 ×10

−3

D

max

(%) 77.1 86.2

D

mean

(%) 24.7 44.3

D

std

(%) 15.8 24.5

ison with a Hessian-based approa c h co nfirms the effi-

ciency of the proposed training scheme. The ability to

validate Lyapunov functions with out explicitly com-

puting the Hessian pr ovides a valuable alter native, es-

pecially in cases where a differentiable expression of

the system dynamics is not readily available.

This research opens up possibilities for broade r

application of the Lyapun ov princip le , making it more

accessible and applicable in various domains. Further

investigations can explore the extension of those ap-

proach e s to more complex systems and practical sce-

narios, ensuring their robustness and reliability, or can

focus on hyperparameters optimization to increase the

probability to find the largest Region of Attra ction for

the system considered.

REFERENCES

Bocquillon, B., Feyel, P., Sandou, G., and Rodriguez-

Ayerbe, P. (2022). A comprehensive framework to

determine lyapunov functions for a set of continuous

time stability problems. In IECON 2022 – 48th An-

nual Conference of the IEEE Industrial Electronics

Society, pages 1–6.

Chang, Y.-C. and Gao, S. (2021). Stabilizing Neural Con-

trol Using Self- Learned Almost Lyapunov Critics. I n

2021 IEEE International Conference on Robotics and

Automation (ICRA), pages 1803–1809.

Chang, Y.-C., Roohi, N. , and Gao, S. (2020). Neural Lya-

punov Control. arXiv:2005.00611 [cs, eess, stat].

Cybenko, G. (1989). Approximation by superpositions of a

sigmoidal function. Mathematics of Control, Signals

and Systems, 2(4):303–314.

Gaby, N., Zhang, F., and Ye, X. (2022). Lyapunov-net: A

deep neural network architecture for lyapunov func-

tion approximation. In 2022 IEEE 61st Conference

on Decision and Control (CDC), pages 2091–2096.

Giesl, P. and Hafstein, S. (2015). R eview on computational

methods for Lyapunov functions. Discrete and Con-

tinuous Dynamical Systems - B, 20(8):2291.

Hornik, K. ( 1991). Approximation capabilities of mul-

tilayer feedforward networks. Neural Networks,

4(2):251 – 257.

Hornik, K., Stinchcombe, M., and White, H. (1989). Multi-

layer feedforward networks are universal approxima-

tors. Neural Networks, 2(5):359 – 366.

Khalil, H. K . (2001). Nonlinear Systems. Pearson, Upper

Saddle River, NJ, 3 edition.

Kingma, D. P. and Ba, J. (2017). Adam: A Method for

Stochastic Optimization. arXiv:1412.6980 [ cs].

Liang, S. and Srikant, R. (2017). Why deep neural net-

works for function approximation? 5th International

Conference on Learning Representations, ICLR 2017

- Conference Track Proceedings.

Matlab (2019). Update parameters using adaptive moment

estimation (Adam) - MATLAB adamupdate.

Mawhin, J. (2005). Alexandr Mikhailovich Liapunov, The

general problem of the stability of motion (1892),

pages 664–676. Elsevier.

Petridis, V. and Petridis, S. (2006). Construction of neu-

ral network based lyapunov functions. IEEE Interna-

tional Conference on Neural Networks - Conference

Proceedings, pages 5059 – 5065.

Prokhorov, D. (1994). A Lyapunov machine for stabilit y

analysis of nonlinear systems. In Proceedings of 1994

IEEE International Conference on Neural Networks

(ICNN’94), volume 2, pages 1028–1031 vol.2.

Richards, S. M., Berkenkamp, F., and Krause, A. (2018).

The Lyapunov Neural Network: Adaptive Stability

Certification for Safe Learning of Dynamical Sys-

tems. arXiv:1808.00924 [cs].

ICINCO 2023 - 20th International Conference on Informatics in Control, Automation and Robotics

36