User-Centered Design and Iterative Refinement: Promoting Student Learning with an Interactive Dashboard

Gilbert Drzyzga^a and Thorleif Harder^b

Institute for Interactive Systems, Technische Hochschule Lübeck, Germany

Keywords: Usability, User Experience, User-Centered Design, Interaction Principles, Design Study, Learner Dashboards.

Abstract: The study uses a user-centered design methodology to develop a prototype for an interactive student dashboard that focuses on user needs. This includes iterative testing and integration of user feedback to develop a usable interface that presents academic data in a more understandable and intuitive manner. Key features of the dashboard include academic progress tracking and personalized recommendations based on machine learning. The primary target audience is online students who may study in isolation and have less physical contact with their peers. The learner dashboard (LD) will be developed as a plug-in to the university's learning management system. The study presents the results of a workshop with students experienced in human-computer interaction. They evaluated a prototype of the LD using established interaction principles. The research provides critical insights for future advancements in educational technology and drives the creation of more interactive, personalized, and easy-to-use tools in the academic landscape.

1 INTRODUCTION

In recent years, digital study offerings have become increasingly important, partly because students can organize their learning process individually (Getto et al., 2018). This gives students the opportunity to learn largely independently of time and place, thus offering them more flexibility (Wannemacher et al., 2016). The digital nature of online learning and the delivery of learning content via the Internet create a physical distance. As a result, students often do not have the opportunity to learn with peers or, for example, to contact instructors in person to discuss learning outcomes (Koi-Akrofi et al., 2020; Kaufmann and Vallade, 2021). This paper presents a user-centered design (UCD) approach to a learner dashboard (LD) that is being developed as a plug-in to a learning management system (LMS) of a higher education network to support online students in their learning process (Janneck et al., 2021). It highlights the benefits of research that involves students in identifying and solving problems related to the LD's functionality and interaction. In the field of educational technology, the terms "learner dashboard" and "learning dashboard" are often used synonymously. However, there are some subtle differences in terminology. The term "learner dashboard" focuses more on the perspective of the individual learner. It is an individualized tool that allows students to monitor their own progress, set goals, and adjust their learning strategy. This supports, for example, self-regulated learning (Matcha et al., 2019; Viberg et al., 2020). In contrast, the term "learning dashboard" is commonly used to describe digital tools for visualizing data about learning. It is used by educators to track, understand, and improve student learning. It can display data about student performance, engagement, and learning progress (Siemens and Baker, 2012; Verbert et al., 2014). We will use the first definition in this paper, as the dashboard we are developing takes more of a learner's (in our case, a student's) perspective by, among other things, providing opportunities for self-regulation (Lehmann et al., 2014; Barnard-Brak et al., 2010).

^a https://orcid.org/0000-0003-4983-9862

^b https://orcid.org/0000-0002-9099-2351

^1 ISO 9241-110:2020 (en) Ergonomics of human-system interaction - Part 110: Interaction principles, https://www.iso.org/standard/75258.html, accessed June 20, 2023

In the study, students were asked to evaluate an interactive prototype of the LD based on wireframes according to the design and interaction principles outlined in EN ISO 9241-110:2020^1. The principles are established heuristics and are described in "Ergonomics of human-system interaction - Part 110: Interaction principles", a standard that describes principles of interaction between a user and a system that are formulated in general terms, independent of situations of use, application, environment or technology. The seven interaction principles are: Suitability for the user's tasks, Self-descriptiveness, Conformity with user expectations, Learnability, Controllability, Use error robustness and User engagement. The study is part of a multi-stage UCD approach with evaluations ranging from traditional design principles to eye-tracking studies of cognitive requirements in the area of usability and user experience (UX) testing and analysis (Drzyzga & Harder, 2022). The aim of the study was to encourage students to participate in the development of the LD and to improve its accessibility and intuitiveness by taking into account their expertise. By involving students early on, interaction issues can be identified and discussed, so that the usability of the LD can be optimized at an early stage. As argued in the workshop by Verbert et al. (2020), it is important to use an iterative, user-centered approach, focusing first on UX and then on impact evaluation, to avoid biasing the results. In this phase of our design study, we focused on an interactive UX exploration. We wanted to identify potential problem areas and points of failure that could prevent a smooth interaction between the user and the LD. These could range from functionality issues to interface design challenges, and from cognitive or information overload (Chen et al., 2011; Shrivastav and Hiltz, 2013) to a lack of intuitive navigation. We wanted to find ways to make the LD more intuitive and more accessible and to enhance its usability. This analysis was a thorough and iterative process that involved active student participation and continuous feedback. The data collected was then processed and turned into deliverables. These insights formed the basis of our recommendations for the development and improvement of the LD, ensuring that it is aligned with user needs and preferences.

Drzyzga, G. and Harder, T.

User-Centered Design and Iterative Refinement: Promoting Student Learning with an Interactive Dashboard.

DOI: 10.5220/0012191300003584

In Proceedings of the 19th International Conference on Web Information Systems and Technologies (WEBIST 2023), pages 340-346

ISBN: 978-989-758-672-9; ISSN: 2184-3252

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

2 METHOD

The methodological approach comprised a four-step process: defining the scope of the prototype evaluation, pre-evaluation preparation sessions, the evaluation itself, and a careful analysis of the feedback to assess the interaction with the prototype against the seven interaction principles. The research in this study focused on the interactions with the prototype. The prototype itself was developed as a clickable prototype based on literature research, expert interviews and subsequent evaluation of wireframes.

2.1 Definition of the Scope of the Evaluation of the Prototype

To evaluate the validity and effectiveness of our interactive design, we made the prototype (Figure 1) available to 24 students enrolled in a human-computer interaction (HCI) module. We chose this course for our study because the target group (online students in mixed age and gender groups) had enrolled in it. The students who participated in the workshop were undergraduate students in the university's online Media Informatics program. They were asked to solve the following problem:

"Evaluate the prototype on the basis of the interaction principles (dialog principles) according to DIN EN ISO 9241-110. Using concrete examples, explain to what extent a principle has been implemented positively or negatively. In case of negative aspects, give suggestions for improvement."

Figure 1: LD's wireframe used in the workshop with three cards (calendar, learning activity analysis, study progress).


The students copied the results of their evaluation as text into a submission field in the university's LMS. This was followed by a group discussion. Under the guidance of a university instructor, they were prepared for the workshop topics by reviewing the course script and participating in weekly one-hour web conferences to discuss related topics (e.g., usability, HCI). Successful completion of the half-day workshop was required to register for the final exam of the course. The assignment was specially designed and delivered as a four-hour online workshop using an Internet browser. To ensure academic rigor and maintain the integrity of the evaluation process, the entire process was supervised and monitored by three qualified faculty members.

2.2 Pre-Evaluation Preparation

The pre-evaluation phase was designed to prepare

students for the upcoming evaluation process. The

workshop was scheduled so that the students would

have acquired the necessary expertise beforehand. The faculty members made sure that the students understood the seven interaction principles well and knew how to apply them during the evaluation process.

2.3 Evaluation Process

The evaluation process itself consisted of two parts.

The first part was the students' interaction with the

prototype and exploration of its various features. The

second part was their evaluation of the prototype on

the basis of the seven interaction principles.

2.4 Post-Evaluation Analysis

In the post-evaluation analysis, two of the three qualified faculty members in Media Informatics, experienced in usability and UX, analyzed the students' feedback. They looked for patterns and recurring themes in what the students found during their interaction with the prototype. On completion of the study, the necessary changes were made to the prototype based on the results of the feedback. The analysis also went further, aiming to uncover the underlying factors that influence user interaction, engagement, and overall satisfaction. To this end, we carefully examined each aspect of the LD's functionality.

^2 https://www.figma.com/, accessed June 20, 2023

3 DEVELOPING THE LD IN A COLLABORATIVE DESIGN PROCESS

The LD has been carefully designed with a focus on key interaction elements. We will describe the key interaction elements, the collaborative design tool used, and the positive impact of iterative testing on the overall design process.

3.1 Interaction Elements of the LD

The LD interface, and in particular the cards offered, are driven by interaction elements such as the question mark icon, the zoom-in/zoom-out icons, the pencil icon, and the menu. In order to provide an engaging and interactive UX, these elements have been carefully selected and designed with clickable functionality. The question mark icon was added to provide immediate help and guidance when needed, while the zoom-in/zoom-out icons allow the user to examine data more closely. The pencil icon at the top of the LD was added to allow users to add or delete cards, and the menu was designed to ensure easy navigation through the LD.

3.2 The Collaborative Design Tool

In order to create these interactive elements, we used a tool for the collaborative design of interfaces^2. This tool, with its ability to generate interactive prototypes, is well known in the digital design community. We used this tool because it allowed us to implement the ideas and developments so far in a collaborative way, both synchronously and asynchronously.

3.3 Iterative Testing and Its Impact

The iterative testing feature of the design tool was important for our design process. It allowed us to test prototypes in real time, gather feedback, make necessary adjustments, and retest, thus promoting an environment of continuous improvement.

4 DEVELOPING WITH USER FEEDBACK IN MIND

UX analysis was an important part of our study. It provided critical insights for the redesign process. Our study thus reinforces that UX analysis is a necessary part of design (Vlasenko et al., 2022; Luther et al., 2020).

4.1 The Role of Wireframing in User Interface Development

Conceptualizing and refining a wireframe is an essential step in the development of an interactive user interface. According to Hampshire et al. (2022), wireframes provide a representation of the design, showing how the structure, layout, content and function of the product could look. The developed wireframe served as the basic structure for the subsequent development stages of the LD and as a visual guide to that structure. It allowed us to plan the upcoming layout and interaction patterns in detail.

4.2 Development of an Interactive Prototype

The creation of a low-fidelity, interactive prototype was the next step after the wireframe refinement. At this stage of our development process, the final prototype of the LD featured 14 different views, each of which was designed with a specific purpose in mind to enhance the user's learning process. These views were complemented by a series of modal dialog boxes, each of which prompted the user for specific input or provided critical information.

5 RESULTS

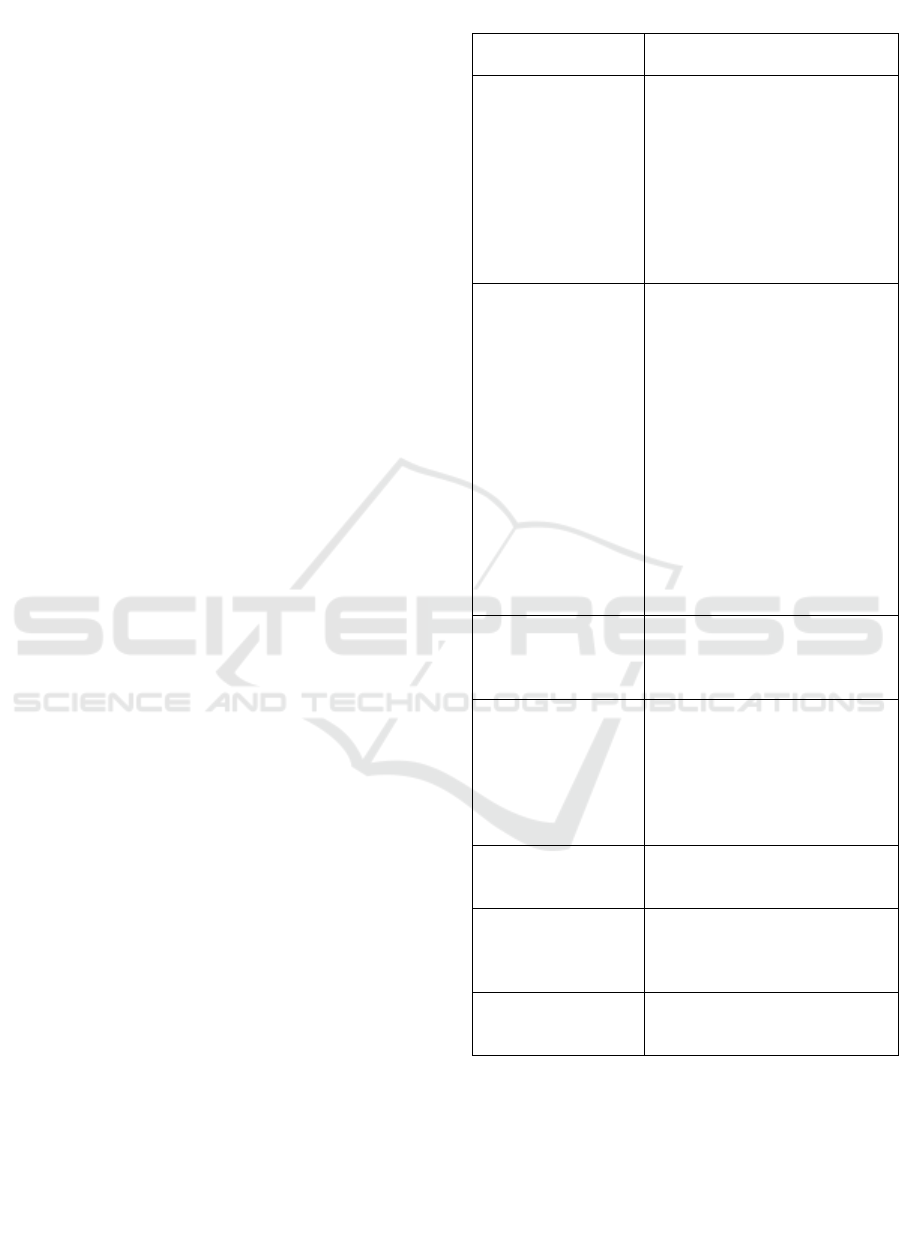

A summary of the results is given in Table 1 and is explained in detail in the following sections. In total, a 54-page report (ISO 216 A4) summarizes the anonymized results of the student evaluations. It presents what the students found and suggests possible further improvements. It should be noted that not all students wrote something on each of the seven interaction principles, which may be due to the limited time available.

5.1 Suitability for the User's Tasks

The interaction principle "suitability for the user's tasks" was evaluated differently by 5 of the students. Some rated the system positively, while others found it inappropriate. It seems that the system tries to collect user data mainly through information input. This can lead to a high workload for users who spend a lot of time with the material. Some students suggested improvements, such as automatically disconnecting from the system after a certain amount of time, or the ability to control the collection of relevant information when the material is used offline as a PDF. The calendar view was also misinterpreted: the bar was read as remaining time, which was not its intended meaning (Figure 2). Others commented positively on the calendar view of the dashboard, which provides relevant information, and on the analysis of learning activities, which can help improve learning progress. It seems that students value different aspects of the interaction principle, resulting in a mixed evaluation.

Figure 2: Calendar view (LD-Card).

Table 1: Summary of the results.

| Interaction principle | Keywords |
|---|---|
| Suitability for the user's tasks | reducing nested information, measuring workload (e.g., offline learning), user data/analysis of learning activities (e.g., optional data logging by own inputs), calendar view (see Fig. 2; not easy to understand: the bar was interpreted as remaining time, which was not intended) |
| Self-descriptiveness | clear understanding (e.g., of predictions), navigation line (e.g., breadcrumb), prominent recommendation, unique headings, confusion (e.g., position vs. functionality: close button and enlargement of the content, see Fig. 1, icon in top right corner of card), intuitive icon (e.g., zoom-in/zoom-out icon vs. maximizing), drop-down list (labeling), semester/module information, accurate predictions need refinement, improved clarity and usability, small bar at top of details pane |
| Conformity with user expectations | back button, consistent placement, layout confusion (e.g., close button vs. "enlarged" icon, see Fig. 1, top right corner) |
| Learnability | intuitive delete function (calendar view or edit card function; not clear where to add new card), inconsistent information (e.g., interaction elements vs. info elements), text too long (limits the overview, see Fig. 3) |
| Controllability | automatic logout, clear navigation, undo option, controllability of adding and deleting cards |
| Use error robustness | date format, spell checker, prompting whether an action is to be performed, option to reset to original state |
| User engagement | learning progress (e.g., information-based vs. diagrams), clarity of interface |
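The automatic disconnect suggested by some students in this section can be illustrated with a small idle-timeout check. This is a minimal sketch, not the LD's implementation: the class name `IdleSession`, its methods, and the 30-minute limit are assumptions made for illustration, since the study does not specify a timeout value or a mechanism.

```python
from dataclasses import dataclass


@dataclass
class IdleSession:
    """Tracks the last user activity and reports when the session has gone idle."""

    timeout_seconds: float      # hypothetical limit; the study does not state one
    last_activity: float = 0.0  # timestamp (in seconds) of the most recent interaction

    def touch(self, now: float) -> None:
        """Record a user interaction at time `now`."""
        self.last_activity = now

    def should_log_out(self, now: float) -> bool:
        """Return True once the idle period has reached the timeout."""
        return (now - self.last_activity) >= self.timeout_seconds


# Usage: a session with an assumed 30-minute limit.
session = IdleSession(timeout_seconds=1800)
session.touch(now=0)
print(session.should_log_out(now=600))   # 10 minutes idle: stay logged in
print(session.should_log_out(now=1800))  # 30 minutes idle: log out
```

Passing the current time in explicitly keeps the check easy to test; a real plug-in would presumably rely on the session handling of the LMS instead.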

5.2 Self-Descriptiveness

The interaction principle "self-descriptiveness" was evaluated differently by 17 of the students. It was stated that the icons needed more textual labels for a clearer understanding of the functionalities. A "return to start" button and a navigation line indicating the user's current location would improve orientation in the system. The recommendation section should be more prominent to improve UX. Distinguishing different views with unique headings would eliminate confusion. The maximize arrows should be replaced with a more intuitive icon. Semester/module selection should be highlighted more clearly. The learning activity analysis graph needs refinement for accurate predictions. More information on individual semester/module tiles and an improved calendar feature to detect completed dates would improve clarity and usability. Finally, a small bar at the top of the details pane would improve functionality by allowing view selection when details take up the entire screen.

5.3 Conformity with User Expectations

The interaction principle "conformity with user expectations" was evaluated differently by 16 of the students. The student feedback identified several areas for improvement in the usability of the prototype. One issue that the students found inconsistent and confusing was the positioning of the back button. They suggested that consistent placement of the back button could significantly improve the UX. The visibility of fields was also a concern. The students felt that their interaction with the prototype was hindered by the fact that important fields were not easily accessible. They recommended a design change to make these fields more accessible. The icon selection and calendar view were also criticized by the students. They found the icons unclear, which made it difficult to understand their functions. Meanwhile, the calendar view was considered inadequate and the students suggested improvements to make it more user-friendly. Students also noted problems with navigation and closing. Navigation was found to be misleading and closing was found to be less intuitive. Students felt that the usability of the prototype could be greatly improved with better navigation design and a more straightforward close function. Problems were also found with the detail view and certain non-functional features. The students felt that the detail view could be improved to provide more relevant information, and the non-functional features were seen as unnecessary and confusing.

5.4 Learnability

The interaction principle "learnability" was evaluated differently by 21 of the students. Their feedback on the learnability of the prototype was insightful and highlighted several areas for improvement. One notable concern was the lack of an intuitive delete function, which caused some confusion. The interface layout was also found to be confusing, and inconsistencies in module information added to the confusion. To address these issues, the students suggested a number of improvements. They suggested introducing short help texts to guide users through the interface and better illustrate the features. Some graphics are explained in full-screen mode, and long texts open up again, making it difficult to keep track. They also recommended moving the detailed explanations of the graphs to a more logical and user-friendly layout. Another popular suggestion was to provide more prominent access to progress recommendations. This would improve the transparency of the tool. In addition, students suggested adding a semester or module selection feature, which could greatly improve the tool's usability. The ability to manipulate tiles was also mentioned, as was the need for clear instructions on how to use the system. Students felt that these changes, along with the addition of visual cues, could help users navigate the tool more easily. The idea of an introductory tutorial was also raised. This could guide new users through the tool, explaining its features and how to use them. Mouse hover assistance was also suggested as a way to improve learnability.


5.5 Controllability

The interaction principle "controllability" was evaluated differently by 7 of the students. In terms of controllability, students noted that an automatic logout feature should be implemented to ensure that users do not accidentally leave the application open and waste resources. They suggested that there should be a clear way for users to return to the default setting, such as allowing them to move tiles back to their original position. All buttons should be usable for control purposes, including an "escape" button that allows users to navigate back to the previous page. They also suggested adding a "back" button or arrow on the page, especially for use on a smartphone, as it provides a clear way for users to navigate back in their browsing history. A direct undo option to restore removed tiles was proposed, rather than requiring users to go through an editing and dialog process. Feedback indicates that students would like to remove individual influencing variables from the grading or change the percentage grading, as this may be useful for certain modules where users are not using the learning material. However, this would not be indicative of the student's actual learning progress if the student were to make extensive use of the instructor's tutorials and weekly web conferences. It was noted that the controllability of adding and deleting cards in the LD could be increased by allowing users to navigate out of edit mode without having to precisely click the pencil icon in the top right corner again, as this can be frustrating for users who may accidentally exit edit mode.
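The direct undo option and the return to the default layout requested above can be sketched with a simple history of removed cards. This is an illustrative sketch only: the `Dashboard` class and its method names are hypothetical and not taken from the prototype, and the three card names are borrowed from the caption of Figure 1.

```python
from dataclasses import dataclass, field

# Card names as listed in the caption of Figure 1.
DEFAULT_CARDS = ["calendar", "learning activity analysis", "study progress"]


@dataclass
class Dashboard:
    """Hypothetical card layout with one-step undo and reset to default."""

    cards: list[str] = field(default_factory=lambda: list(DEFAULT_CARDS))
    # History of (position, name) pairs for removed cards, newest last.
    _removed: list[tuple[int, str]] = field(default_factory=list)

    def remove_card(self, name: str) -> None:
        """Remove a card and remember where it was (ValueError if not shown)."""
        index = self.cards.index(name)
        self._removed.append((index, self.cards.pop(index)))

    def undo(self) -> bool:
        """Restore the most recently removed card; return False if nothing to undo."""
        if not self._removed:
            return False
        index, name = self._removed.pop()
        self.cards.insert(index, name)
        return True

    def reset(self) -> None:
        """Return to the default layout and clear the undo history."""
        self.cards = list(DEFAULT_CARDS)
        self._removed.clear()


# Usage: remove a card by mistake, then restore it without an edit dialog.
dashboard = Dashboard()
dashboard.remove_card("calendar")
dashboard.undo()
print(dashboard.cards)
```

Storing the original position together with the card name lets an undo put the card back where it was, which matches the students' request to move tiles back to their original position.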

5.6 Use Error Robustness

The interaction principle "use error robustness" was evaluated differently by 4 of the students. The students noted that the prototype has room for improvement in terms of error robustness, since the user can make different entries in the calendar and there is a possibility of errors in the date format. A logical date format should be used and a spell checker should be implemented to avoid errors. Regarding the editing of the LD's cards, deleting a card was judged to be easy, but an additional prompt such as "Are you sure you want to remove the calendar card?" could be added to ensure that the user's intent is clear. The prototype has no way to enter custom tasks, which limits the robustness of the input masks. A feature like "Reset to original state" could improve this significantly by allowing the user to reset all items individually if they are accidentally deleted.
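Two of the suggestions above, a single accepted date format and a confirmation prompt before a card is removed, can be sketched as follows. The function names and the `DD.MM.YYYY` format are assumptions for illustration; the students only asked for "a logical date format", and the prompt wording follows the example quoted in the text.

```python
from datetime import date, datetime
from typing import Optional

# Assumed input format (DD.MM.YYYY); the study does not prescribe one.
DATE_FORMAT = "%d.%m.%Y"


def parse_calendar_date(text: str) -> Optional[date]:
    """Return a date if `text` matches DATE_FORMAT and is a real calendar day, else None."""
    try:
        return datetime.strptime(text.strip(), DATE_FORMAT).date()
    except ValueError:
        return None


def removal_prompt(card_name: str) -> str:
    """Build the confirmation question shown before a card is removed."""
    return f"Are you sure you want to remove the {card_name} card?"


def is_confirmed(answer: str) -> bool:
    """Treat only an explicit yes as consent; anything else keeps the card."""
    return answer.strip().lower() in {"y", "yes"}


# Usage: reject malformed or impossible dates instead of storing them.
for entry in ["20.06.2023", "2023-06-20", "31.02.2023"]:
    print(entry, "->", parse_calendar_date(entry))

print(removal_prompt("calendar"))
```

Returning `None` for invalid input leaves it to the interface to decide how to report the error, for example with an inline hint next to the date field.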

5.7 User Engagement

The interaction principle "user engagement" was evaluated differently by 13 of the students. Based on the individual statements, it can be concluded that the interaction principle of "user engagement" has been implemented to varying degrees, both positively and negatively. Some students appreciate the potential of the prototype to increase motivation and engagement through concrete examples such as the display of learning progress and recommendations for improvement. Others express concerns about the clarity of the interface, the need for further explanation of how certain information is calculated, and the potential for distraction from the learning content. Overall, there seems to be a mixed response to the implementation of this interaction principle.
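The student counts reported in Sections 5.1 to 5.7 can be put side by side as shares of the 24 participants. The short calculation below is a sketch that assumes each reported count is the number of students who commented on that principle; the variable and function names are illustrative.

```python
PARTICIPANTS = 24  # students in the workshop (Section 2.1)

# Counts as reported in Sections 5.1 to 5.7; interpreted here as the
# number of students who commented on each principle (an assumption).
comments_per_principle = {
    "Suitability for the user's tasks": 5,
    "Self-descriptiveness": 17,
    "Conformity with user expectations": 16,
    "Learnability": 21,
    "Controllability": 7,
    "Use error robustness": 4,
    "User engagement": 13,
}


def share(count: int, total: int = PARTICIPANTS) -> float:
    """Percentage of participants, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Print the principles from most to least commented on.
ranked = sorted(comments_per_principle.items(), key=lambda item: item[1], reverse=True)
for principle, count in ranked:
    print(f"{principle:<36}{count:>3} of {PARTICIPANTS} ({share(count):.1f} %)")
```

Under this reading, learnability drew comments from 21 of 24 students (87.5 %), while use error robustness drew comments from only 4 (16.7 %), which fits the observation that the available time did not allow every student to address every principle.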

6 DISCUSSIONS

The aim of the study was to examine an LD at an early stage of development for possible interaction inconsistencies. To do this, we gave the developed LD to 24 online students, who could also be its later users once the LD is integrated into one or more of their courses. They had to evaluate the LD in a half-day workshop based on the seven interaction principles of EN ISO 9241-110:2020. The students' feedback highlights the importance of applying a UCD approach to the development of an LD. This is consistent with the findings of Vesin et al. (2018), who emphasized the importance of UCD in creating adaptive learning systems. These findings helped us to conduct a UX analysis and provided us with valuable insights, which we were able to use to refine the prototype. The issues identified suggest that the prototype can benefit significantly from refinement of its user interface and functionality. In summary, it is clear from this evaluation that there is great value in having a tool for students evaluated by students in a user-centered way, as only they know the challenges of student life. In addition, due to the course's focus on fundamental HCI topics, participants had background knowledge that, combined with their student-centered perspective, enabled them to evaluate the UX of the LD and provide informed feedback. The results show that the research could be repeated in the same way as part of the design study, but it has to be taken into account that the time available was not sufficient for all students, as shown by the differing number and partly reduced length of the feedback on the individual interaction principles. Also, the sample may not be representative of all online students.


7 OUTLOOK

Through the feedback received, we are able to implement optimizations that could significantly improve the user interface and help features and result in an overall improved UX that better meets user expectations. Taken together, these improvements could significantly increase usability, intuitiveness, and intelligibility to provide a tool that does not overwhelm the user. Furthermore, the tool would help students to reflect on and gain insight into their own learning process in the isolated environment in which online students often find themselves. As we move forward, it is important to continue to engage with students and iteratively refine the tool based on their feedback. After the optimization and subsequent further development of the LD, we will examine the cognitive demands of using the LD in order to also address the psychological aspects.

ACKNOWLEDGEMENTS

This work was funded by the German Federal Ministry of Education, grant No. 01PX21001B.

REFERENCES

Barnard-Brak, L., Paton, V. O., & Lan, W. Y. (2010). Profiles in self-regulated learning in the online learning environment. International Review of Research in Open and Distributed Learning, 11(1), 61-80.

Caddick, R., & Cable, S. (2011). Communicating the user experience: A practical guide for creating useful UX documentation. John Wiley & Sons.

Chen, C. Y., Pedersen, S., & Murphy, K. L. (2011). Learners' perceived information overload in online learning via computer-mediated communication. Research in Learning Technology, 19(2).

Courage, C., & Baxter, K. (2005). Understanding your users: A practical guide to user requirements methods, tools, and techniques. Gulf Professional Publishing.

Drzyzga, G., & Harder, T. (2022). Student-centered Development of an Online Software Tool to Provide Learning Support Feedback: A Design-study Approach.

Getto, B., Hintze, P., & Kerres, M. (2018). (Wie) Kann Digitalisierung zur Hochschulentwicklung beitragen?

Hampshire, N., Califano, G., & Spinks, D. (2022). Wireframes. In Mastering Collaboration in a Product Team. Apress, Berkeley, CA. https://doi.org/10.1007/978-1-4842-8254-0_45

Janneck, M., Merceron, A., & Sauer, P. (2021). Workshop on addressing dropout rates in higher education, online – everywhere. In Companion Proceedings of the 11th Learning Analytics and Knowledge Conference (LAK 2021) (pp. 261-269).

Kaufmann, R., & Vallade, J. I. (2021). Online student perceptions of their communication preparedness. E-learning and Digital Media, 18(1), 86-104.

Koi-Akrofi, G. Y., Owusu-Oware, E., & Tanye, H. (2020). Challenges of distance, blended, and online learning: A literature-based approach. International Journal on Integrating Technology in Education, 9(4), 17-39.

Lehmann, T., Hähnlein, I., & Ifenthaler, D. (2014). Cognitive, metacognitive and motivational perspectives on preflection in self-regulated online learning. Computers in Human Behavior, 32, 313-323.

Luther, L., Tiberius, V., & Brem, A. (2020). User Experience (UX) in business, management, and psychology: A bibliometric mapping of the current state of research. Multimodal Technologies and Interaction, 4(2), 18.

Matcha, W., Gašević, D., & Pardo, A. (2019). A systematic review of empirical studies on learning analytics dashboards: A self-regulated learning perspective. IEEE Transactions on Learning Technologies, 13(2), 226-245.

Shrivastav, H., & Hiltz, S. R. (2013). Information overload in technology-based education: A meta-analysis.

Siemens, G., & Baker, R. S. D. (2012, April). Learning analytics and educational data mining: towards communication and collaboration. In Proceedings of the 2nd international conference on learning analytics and knowledge (pp. 252-254).

Verbert, K., Govaerts, S., Duval, E., Santos, J. L., Van Assche, F., Parra, G., & Klerkx, J. (2014). Learning dashboards: an overview and future research opportunities. Personal and Ubiquitous Computing, 18, 1499-1514.

Verbert, K., Ochoa, X., De Croon, R., Dourado, R. A., & De Laet, T. (2020, March). Learning analytics dashboards: the past, the present and the future. In Proceedings of the tenth international conference on learning analytics & knowledge (pp. 35-40).

Vesin, B., Mangaroska, K., & Giannakos, M. (2018). Learning in smart environments: user-centered design and analytics of an adaptive learning system. Smart Learning Environments, 5(1), 25. https://doi.org/10.1186/s40561-018-0071-0

Viberg, O., Khalil, M., & Baars, M. (2020, March). Self-regulated learning and learning analytics in online learning environments: A review of empirical research. In Proceedings of the tenth international conference on learning analytics & knowledge (pp. 524-533).

Vlasenko, K. V., Lovianova, I. V., Volkov, S. V., Sitak, I. V., Chumak, O. O., Krasnoshchok, A. V., ... & Semerikov, S. O. (2022, March). UI/UX design of educational on-line courses. In CTE Workshop Proceedings (Vol. 9, pp. 184-199).

Wannemacher, K., Jungermann, I., Scholz, J., Tercanli, H., & von Villiez, A. (2016). Digitale Lernszenarien im Hochschulbereich.
