Towards Developing an Ontology for Safety of Navigation Sensors in Autonomous Vehicles

Mohammed Alharbi 1,2,a and Hassan A. Karimi 1

1 Geoinformatics Laboratory, School of Computing and Information, University of Pittsburgh, Pittsburgh, PA 15260, U.S.A.

2 College of Computer Science and Engineering, Taibah University, Medina 42353, Saudi Arabia

Keywords: Autonomous Vehicles, Ontologies, Sensor Uncertainty, Uncertainty Assessment, Autonomous Navigation.

Abstract:

Understanding and handling the uncertainties associated with navigation sensors in autonomous vehicles (AVs) is vital to enhancing their safety and reliability. Given the unpredictable nature of real-world driving environments, accurately interpreting and managing these uncertainties can significantly improve navigation decision-making in AVs. This paper proposes a novel semantic model (ontology) for navigation sensors and their interactions in AVs, focusing specifically on sensor uncertainties. At the heart of this ontology is an understanding of the sources of sensor uncertainty within specific environments. The ultimate goal of the proposed ontology is to standardize knowledge of AV navigation systems in order to alleviate the safety concerns that stand in the way of widespread AV adoption. The proposed ontology was evaluated with scenarios to demonstrate its functionality.

1 INTRODUCTION

Autonomous vehicles (AVs), equipped with advanced navigation sensors, are a pivotal development towards mitigating the widespread casualties and injuries resulting from human driving errors. AVs’ perception sensors, algorithms, and electronics constantly monitor the driving space and execute navigation tasks such as planning paths, maneuvering, and detecting objects. Human behaviors contribute to around 90% of fatal car accidents, positioning automation as a revolutionary safety solution (National Highway Traffic Safety Administration, 2022). However, to achieve a high level of safety, AVs should be deliberately engineered to favor safety over convenience and speed. Otherwise, their superior perception would prevent only about a third of these accidents (Mueller et al., 2020).

AV navigation systems face vulnerabilities stemming from the inherent limitations of their sensors and algorithms. For instance, camera-based object detection techniques are compromised in rainy conditions, while the accuracy of global navigation satellite systems (GNSSs) degrades due to physical obstructions like buildings and tunnels. These limitations result in uncertainties and failures in navigation tasks, raising legitimate concerns about the safety of passengers, property, and other road users. Consequently, developing AV navigation that is reliable and trustworthy remains a challenge, particularly for intricate navigation tasks.

a https://orcid.org/0000-0002-9293-4905

Addressing this challenge has prompted intensified efforts in researching, developing, and validating automated driving systems. A typical reliability metric for AVs is the distance traveled without accidents or failures. Demonstrating acceptable failure and accident rates requires AVs to drive hundreds of millions, and sometimes hundreds of billions, of miles successfully, which could take tens or even hundreds of years for a fleet of 100 vehicles driving at a speed of 25 miles per hour (Kalra and Paddock, 2016). Moreover, such tests are unlikely to expose AVs to the full spectrum of driving conditions.
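The scale of this requirement follows from simple arithmetic. The sketch below assumes, as a simplification, that the fleet drives around the clock every day of the year; the mileage targets passed to the function are illustrative inputs, not figures reported in this paper.

```python
# Assumption: the fleet drives around the clock, every day of the year.
HOURS_PER_YEAR = 24 * 365


def years_to_drive(target_miles: float, fleet_size: int = 100, speed_mph: float = 25.0) -> float:
    """Years a fleet needs to accumulate `target_miles` of test driving."""
    fleet_miles_per_year = fleet_size * speed_mph * HOURS_PER_YEAR
    return target_miles / fleet_miles_per_year


print(f"{100 * 25.0 * HOURS_PER_YEAR:,.0f} miles per year")  # 21,900,000 miles per year
for target in (275e6, 5e9):  # illustrative mileage targets
    print(f"{target:,.0f} miles -> {years_to_drive(target):.1f} years")
```

Under these assumptions, 275 million miles take about 12.6 years and 5 billion miles take about 228 years, which is why mileage-based testing alone scales poorly.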

To overcome the problem of high-mileage testing, advanced or complementary assessment techniques are required. One example is scenario-based testing, which prioritizes safety (Riedmaier et al., 2020). Such scenarios can be generated using knowledge-based (e.g., Chen and Kloul, 2018) or data-driven (e.g., Krajewski et al., 2018; Zhou and del Re, 2017) approaches. In the former, ontologies are widely used for organizing and preserving expert knowledge, while the latter leverages machine-learning and data fusion methods for pattern recognition and scenario classification. However, the current literature on scenario-based techniques centers on safety aspects related to driving maneuvers (e.g., Zhou and del Re, 2017), road conditions (e.g., Chen and Kloul, 2018), intersections (e.g., Jesenski et al., 2019), and difficult weather conditions (e.g., Gyllenhammar et al., 2020), with little or no attention paid to the safety aspects of navigation sensors.

Alharbi, M. and Karimi, H.

Towards Developing an Ontology for Safety of Navigation Sensors in Autonomous Vehicles.

DOI: 10.5220/0012207300003598

In Proceedings of the 15th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2023) - Volume 2: KEOD, pages 231-239

ISBN: 978-989-758-671-2; ISSN: 2184-3228

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

To fill this research gap, we propose a semantic model (ontology) for AVs to understand and handle uncertainties associated with navigation sensors. The fundamental idea underlying our ontology is to identify and recognize sources of sensor limitation within a specific space.

The paper is structured as follows. Section 2 summarizes relevant AV ontologies. Section 3 introduces the proposed ontology. Section 4 examines three scenarios to demonstrate the efficacy of the proposed ontology in enhancing the safety and reliability of AVs. Finally, Section 5 concludes the paper with the key findings and potential future research directions.

2 RELATED WORKS

To date, several ontologies related to AVs have been proposed. Schlenoff et al. (2003) developed an ontology to represent objects in the AV’s surroundings for enhanced path planning. The ontology integrated rules for estimating collision damage with respect to each object, allowing the path planner to evaluate the rules and decide whether a specific object should be avoided. Regele (2008) introduced an ontology for common traffic features like intersections, multi-lane roads, opposing traffic lanes, and bi-directional lanes to support AV decision-making processes. Hülsen et al. (2011) proposed an extended traffic-oriented ontology that covers complex traffic situations involving roads with multiple lanes, vehicles, traffic lights, and signs. Zhao et al. (2015a, 2015b, 2016) developed an ontology centered on safety improvement, targeting intersections without traffic lights and narrow roads in urban areas. In collaborative navigation, an ontology was created to regulate communications between vehicles, pedestrians, and infrastructure in order to avoid collisions (Syzdykbayev et al., 2019). A situational-awareness ontology was introduced to guide the behaviors of AVs inside manufacturing plants (El Asmar et al., 2021). This ontology can analyze current and predicted situations in a smart facility where interactions between agents are critical.

The proposed ontology differs from these ontologies, which are intended to enhance path planning, traffic modeling, and situational awareness in AVs, in that its emphasis is on sensor uncertainty. The objectives of the proposed ontology are to provide a comprehensive model of AV navigation in order to assess the impact of varying environments on the performance of navigation sensors, identify the limitations of navigation sensors in specific environments, and provide standardized knowledge for safe AV navigation.

3 AV NAVIGATION ONTOLOGY

3.1 Vision

An ontology that prioritizes the safety of AV navigation sensors can be articulated through an analogy with how humans interact with the environment to navigate. In humans, five sensors (nose, tongue, eyes, ears, and hands) collect data and convey it to the brain via neurons. The brain processes and encodes this information in perception areas, enabling the extraction of relevant features and aiding in performing various tasks such as object detection, identification, and proximity estimation. The outcomes of certain tasks can trigger actions directed towards actuators, which control the movements of the hands and feet.

These outcomes may be susceptible to uncertainties that arise from either an internal data-processing issue, such as cognitive impairment, or an external factor, such as a severe weather condition. Given its complexity, the human brain can account for these sources of uncertainty by evaluating the quality of the collected data and perceptions. It can analyze data to detect patterns, anomalies, faults, and events, and to determine appropriate responses. However, uncertainties can also originate from sensor limitations, as the quality of sensors varies among people. Thus, people have to be aware of their own limitations and plan their environments accordingly. For instance, people who have visual impairments may opt for accessible environments featuring easily detectable and safe walking paths.

3.2 Design

To ensure the applicability and effectiveness of an ontology, its creation should adhere to well-established guidelines, such as the process proposed by Noy and McGuinness (2001), whose first six steps are followed here. The first step identifies the scope and domain of the ontology, which, in this case, is sensor uncertainty related to navigation in AVs. The second step augments the domain knowledge by leveraging concepts from existing ontologies. The proposed ontology incorporates several concepts pertaining to road, time, and sensor that are widely accepted in the AI community (El Asmar et al., 2021; Paull et al., 2012; Syzdykbayev et al., 2019; Zhao et al., 2015b). Section 3.3 provides further details about these concepts. The third step defines the important concepts and terms to be incorporated into the ontology and establishes the relationships among them. The fourth step organizes these concepts hierarchically based on shared properties. The fifth step details concept properties, including descriptive attributes and properties that relate concepts to each other. Lastly, the sixth step sets restrictions on the ontology’s properties.

KEOD 2023 - 15th International Conference on Knowledge Engineering and Ontology Development

The proposed ontology considers the common navigation sensors in AVs: GNSSs, inertial measurement units (IMUs), cameras, light detection and ranging (lidar), and radio detection and ranging (radar). GNSSs utilize a constellation of navigation satellites orbiting the Earth and are used for AV positioning. IMUs provide AVs with 6-DoF motion or state measurements (linear acceleration, including gravity, and angular rate), from which orientation and velocity are estimated. Cameras capture visual images that convey color and texture information. Lidar and radar, as range sensors, measure distances to objects in a space using laser beams and radio waves, respectively.

Uncertainty sources in AV navigation can be categorized into sensor, algorithm, and environment. Uncertainty in sensors is inherent to their characteristics. GNSS performance is considerably impacted by the surrounding conditions, such as obstacles that block line-of-sight or cause multipath problems, satellite availability and geometry, and the ionospheric scintillation phenomenon (Yu and Liu, 2021).

IMUs, comprising accelerometers, gyroscopes, and magnetometers, are susceptible to accumulated error: because motion is obtained by integrating measurements over time, small errors such as sensor bias, noise, and the rounding of fractions during calculations add up and cause drift. While drift can be reduced by augmenting IMUs with other sensors like GNSSs, in this work each sensor is conceptualized and treated independently, because each sensor has its own limitations and data fusion is typically handled by navigation algorithms.
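Why such errors turn into drift can be shown with a minimal worked example, assuming a single accelerometer axis with a constant, uncorrected bias b (the value of b below is hypothetical, not a specification of any particular IMU). Integrating the measurement twice, as dead reckoning does, turns the bias into a position error of about 0.5·b·t², so the error grows quadratically with time.

```python
def dead_reckoning_error(bias: float, duration_s: float, dt: float = 0.01) -> float:
    """Position error (m) after double-integrating a constant accelerometer bias (m/s^2)."""
    velocity_error = 0.0
    position_error = 0.0
    for _ in range(round(duration_s / dt)):
        velocity_error += bias * dt            # acceleration error -> velocity error
        position_error += velocity_error * dt  # velocity error -> position error
    return position_error


bias = 0.01  # m/s^2, hypothetical uncorrected bias
for t in (10, 60, 300):
    print(f"t = {t:>3} s: simulated {dead_reckoning_error(bias, t):7.2f} m, "
          f"closed form 0.5*b*t^2 = {0.5 * bias * t**2:7.2f} m")
```

With this bias, the error is about 0.5 m after 10 s, 18 m after one minute, and 450 m after five minutes, which illustrates why augmenting IMUs with other sensors matters.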

Camera challenges in AV navigation include lens distortion, calibration, and environmental conditions. Lens distortion, caused by curved lenses, makes straight lines appear curvilinear in images. Calibration is sensitive, and its accuracy is limited, even under controlled conditions (O’Mahony et al., 2018). Environmental conditions like poor illumination, fog, rain, snow, and sun glare can easily obscure important features in the environment. Furthermore, image quality is determined by several technical specifications, including lens focal length, aperture, and resolution.
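Lens distortion is commonly modeled with a radial polynomial, similar to the radial part of the distortion model used by OpenCV's camera calibration functions. The sketch applies only two radial coefficients, k1 and k2, to normalized image coordinates; the coefficient values are hypothetical and tangential terms are omitted. Three points that lie on a straight line stop being collinear once distorted, which is the curvilinear effect described above.

```python
from __future__ import annotations


def distort(x: float, y: float, k1: float, k2: float) -> tuple[float, float]:
    """Apply radial distortion x_d = x * (1 + k1*r^2 + k2*r^4) to normalized coordinates."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def collinearity_error(p, q, s) -> float:
    """Twice the area of triangle pqs; zero means the three points lie on one line."""
    return abs((q[0] - p[0]) * (s[1] - p[1]) - (q[1] - p[1]) * (s[0] - p[0]))


# Three points on the straight line y = 0.5; k1 < 0 gives barrel distortion (hypothetical values).
line = [(-0.6, 0.5), (0.0, 0.5), (0.6, 0.5)]
k1, k2 = -0.25, 0.05
curved = [distort(x, y, k1, k2) for x, y in line]
print("before:", collinearity_error(*line))              # before: 0.0
print("after: ", round(collinearity_error(*curved), 4))  # non-zero: the line now bends
```

Because points farther from the image center are scaled differently from those near it, the middle point of the line ends up off the chord joining the outer points.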

In radar, signal frequency and wavelength are inversely related: the frequency increases as the wavelength decreases. Signal resolution and angle could also result in scattered and incomplete representations of objects (Bilik et al., 2019). Lidar’s fundamental properties are field of view (FoV), range, and resolution. The FoV is determined by the vertical and horizontal angles over which light is transmitted. The term “Range” refers to the maximum detectable distance, while resolution denotes the point cloud density.
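Both relationships reduce to short formulas. Wavelength follows λ = c / f, so higher radar frequencies mean shorter wavelengths; for lidar, the gap between neighboring returns grows roughly as r·Δθ with range r and angular resolution Δθ, which is why point clouds thin out with distance. The carrier frequencies and the 0.2° resolution below are illustrative values, not parameters taken from this paper.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength from frequency: lambda = c / f."""
    return C / frequency_hz


def point_spacing_m(range_m: float, angular_resolution_deg: float) -> float:
    """Approximate gap between adjacent lidar returns at a given range (arc length r * dtheta)."""
    return range_m * math.radians(angular_resolution_deg)


for f_ghz in (24, 77):  # illustrative radar carrier frequencies
    print(f"{f_ghz} GHz -> {wavelength_m(f_ghz * 1e9) * 1000:.2f} mm")

for r in (10, 50, 100):  # metres; 0.2 deg horizontal resolution is a hypothetical value
    print(f"range {r:>3} m -> point spacing {point_spacing_m(r, 0.2):.3f} m")
```

At 77 GHz the wavelength is about 3.9 mm, and at 100 m a lidar with 0.2° resolution leaves gaps of roughly 35 cm between neighboring points, enough for small objects to fall between returns.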

Navigation sensors often underperform in harsh conditions, leading to navigation-related uncertainty. Difficulties arise in extreme weather conditions (e.g., haze, rain, and snow), in the presence of obstacles, and on complex roads, where sensors may malfunction (e.g., radar interference from conductive materials and poor visibility for cameras). Therefore, understanding the tolerance to, and impact of, such environments on AV navigation is critical for robust performance.

Algorithm performance also contributes to AV navigation uncertainties. Although accuracy is the primary performance metric for algorithms, even highly accurate algorithms, which are typically based on machine learning models, might not ensure optimal AV navigation in some real-world scenarios.

For a comprehensive understanding of sensor uncertainty in AV navigation, various competency questions can be used to evaluate the proposed ontology. Examples of such questions are as follows. What are common AV navigation characteristics? What are common components of AV navigation? What are typical tasks of AV navigation? Which properties of a sensor directly affect its performance? Which weather conditions and road features have an adverse effect on sensor performance? Which road features cause sensor uncertainty? What degree of uncertainty is associated with a navigation task under specific environmental conditions and sensor configurations? Which sensor is susceptible to a particular source of uncertainty?
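Competency questions become concrete once the ontology is populated and queried. The sketch below is a deliberately simplified, pure-Python stand-in for the OWL model: a hypothetical handful of (subject, relation, object) triples using relation names that appear in Figure 1 (impairs, malfunctions), queried for the last question above. The triples, including the individual name ConductiveObject, are illustrative assumptions rather than the ontology's actual assertions; in Protégé the same question would be posed as a DL query.

```python
from __future__ import annotations

# Hypothetical, illustrative assertions: (source of uncertainty, relation, sensor).
TRIPLES = [
    ("Rainy", "impairs", "Camera"),
    ("Foggy", "impairs", "Camera"),
    ("Foggy", "impairs", "Lidar"),
    ("Tunnel", "impairs", "GNSS"),
    ("Building", "impairs", "GNSS"),
    ("ConductiveObject", "malfunctions", "Radar"),
]


def sensors_susceptible_to(source: str) -> set[str]:
    """Which sensor is susceptible to a particular source of uncertainty?"""
    return {sensor for subject, _, sensor in TRIPLES if subject == source}


def sources_affecting(sensor: str) -> set[str]:
    """Inverse question: which sources of uncertainty affect a given sensor?"""
    return {subject for subject, _, obj in TRIPLES if obj == sensor}


print(sorted(sensors_susceptible_to("Foggy")))  # ['Camera', 'Lidar']
print(sorted(sources_affecting("GNSS")))        # ['Building', 'Tunnel']
```

Keeping the relation name in each triple leaves room to filter by relation type later, for example to separate sources that impair a sensor from those that cause it to malfunction.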

3.3 Model

Drawing upon a thorough examination of existing

AV ontologies and literature addressing uncertainty in

AVs (e.g., Alharbi and Karimi, 2020, 2021; El As-

mar et al., 2021; Paull et al., 2012; Syzdykbayev

et al., 2019; Zhao et al., 2015a, 2016, 2015b) as

well as a deep understanding of the functional prin-

ciples of AVs, described by Pendleton et al. (2017),

as our foundation, we devised the metadata or at-

tribute structure and established the primary concepts

and their relationships. The result is the ontology de-

Towards Developing an Ontology for Safety of Navigation Sensors in Autonomous Vehicles

233

Environment

Snow

Weather

Condition

FoggyRainySunny

densi t ypr ec i pi t at i on

angl e

typeOf

typeOf

typeOf

typeOf

hasPart

Obstacle

Pedestrian

Object

existsIn

typeOf

typeOf

Road

Lane

Sensor

IMU

GNSS

Radar

Camera

Lidar

observes

Navigation

Task

Controller

utilizes

undertakes

Autonomous

Vehicle

State

belongsTo

updates

operatesOn

Slippery

Road

Driving

Condition

typeOf

affects

time:

Temporal

Entity

tagsAnEventBy

Observation

pullsDataFr om

Component

typeOf

includes

Module

Actuator

typeOf

typeOf

implements

typeOf

Acceleration

Steering

Braking

Driving

Action

typeOf

typeOf

typeOf

controls

hasPart

typeOf

typeOf

typeOf

typeOf

typeOf

wi dt h

mar ker

dr i f t

r esol ut i on

f r equency

aper t ur e

angl e

r esol ut i on

f ocal Lengt h

pul seRat e

accur acy

r ange

channel Count

H_FoV

V_FoV

hasPart

hasPart

dept h

x- l oc at i on

y- l oc at i on

z- l oc at i on

Road

Featur e

describes

x- l oc at i on

y- l oc at i on

Localization

and

Mapping

Perception

Planning

hasPart

Internal

Sensor

typeOf

External

Sensor

typeOf

Legend

Concept

float data type property

integer data type property

improves

impairs

impairs

impairs

al gor i t hmAccur acy

degradesPerformanceOf

obscur es

obscur es

Feild of

View

shrinks

malfunctions

utilizes

assesses

misleads

Nor mal

Road

typeOf

Sun

Light

Visibility

contributesTo

Road

Light

contributesTo

Air

Quality

contributesTo

Decision

Quality

Faulty

Uncertain

show s

typeOf

typeOf

allow sMonitoring

Inclination

Cur vature

Road

Type

is-a

is-a

is-a

Urban

Rural

typeOf

typeOf

typeOf

Highway

Building

Vehicle

Tree

typeOf

typeOf

typeOf

Other

typeOf

pr oduces

x- l oc at i on

y- l oc at i on

z- l oc at i on

yaw

r ol l

pi t c h

vel oci t y

Tunnel

is-a

definesAnAreaOf

ordinary r elation

safety relation

subsumption relation

is-a

pr ec i si on

accur acy

vi si bi l i t y

di r ect i on

string data type property

shrinks

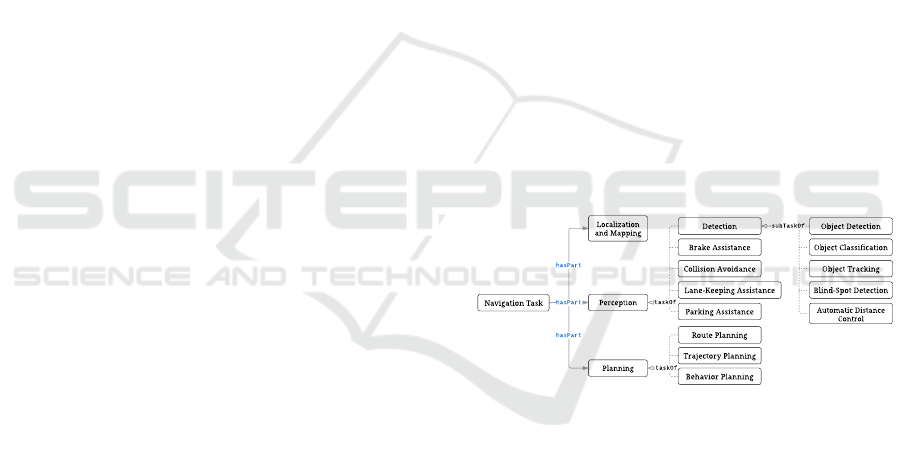

Figure 1: An ontology for safety of navigation sensors in autonomous vehicles.

picted in Figure 1. The core concept of this ontol-

ogy is Autonomous Vehicle, which holds relationships

with Environment and Component (i.e., Sensor and

Module). Although our ontology borrows some con-

cepts from existing ontologies, including Lane, Road

Type, Highway, Driving Condition, Sensor, Vehicle,

Temporal Entity, Observation, and Road, we employ

these concepts in the proposed ontology in different

ways to meet the goal of AV navigation safety.

3.3.1 Component

Component denotes the essential elements that make up a navigation system in AVs and can be classified into Module and Sensor. Module represents the AV components that process sensor data in order to compute and execute the vehicle’s State and Driving Action (i.e., Acceleration, Steering, or Braking). The typical structure of Module consists of two units: Controller and Actuator. The former controls driving actions and updates the vehicle’s State, while the latter implements those actions. State is captured through the data properties velocity, x-location, y-location, z-location, yaw, roll, pitch, and direction.

Sensor represents devices engineered to detect and measure physical phenomena during navigation. In our ontology, Sensor is grouped, based on the information each sensor provides and its mode of operation, into External Sensor (Lidar, Radar, and Camera) and Internal Sensor (GNSS and IMU). The former provides information about the shape, position, and movement of objects in the vehicle’s environment, and the latter monitors various internal parameters, such as velocity and location.

The quality of Sensor is captured in data properties: Resolution, Aperture, and FocalLength for Camera; ChannelCount, PulseRate, Accuracy, FoV, and Range for Lidar; Resolution, Frequency, and Angle for Radar; Accuracy and Precision for GNSS; and Drift for IMU. Another concept related to Sensor (via pullsDataFrom) is Observation, which represents the initial or intermediate measurements made by a sensor that are utilized by Navigation Task. Sensor can only observe a specific Field of View, which defines an area of Environment.
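The Component branch of the hierarchy can be mirrored as a simple parent map, which makes subsumption (typeOf) checks and the per-sensor quality properties explicit. This is a hypothetical pure-Python sketch, not the OWL encoding used in the ontology; the property names follow the list above.

```python
from __future__ import annotations

# Component branch of the hierarchy: child class -> parent class (typeOf links).
PARENT = {
    "Sensor": "Component", "Module": "Component",
    "ExternalSensor": "Sensor", "InternalSensor": "Sensor",
    "Lidar": "ExternalSensor", "Radar": "ExternalSensor", "Camera": "ExternalSensor",
    "GNSS": "InternalSensor", "IMU": "InternalSensor",
    "Controller": "Module", "Actuator": "Module",
}

# Quality data properties attached to each sensor class.
QUALITY_PROPERTIES = {
    "Camera": {"resolution", "aperture", "focalLength"},
    "Lidar": {"channelCount", "pulseRate", "accuracy", "FoV", "range"},
    "Radar": {"resolution", "frequency", "angle"},
    "GNSS": {"accuracy", "precision"},
    "IMU": {"drift"},
}


def ancestors(cls: str) -> list[str]:
    """Walk typeOf links upward, nearest superclass first."""
    chain = []
    while cls in PARENT:
        cls = PARENT[cls]
        chain.append(cls)
    return chain


def is_a(cls: str, superclass: str) -> bool:
    """True if `cls` equals `superclass` or is subsumed by it."""
    return cls == superclass or superclass in ancestors(cls)


print(ancestors("Lidar"))              # ['ExternalSensor', 'Sensor', 'Component']
print(is_a("GNSS", "ExternalSensor"))  # False
```

In OWL the same checks are performed by the reasoner over subclass axioms; the parent map only makes the intended hierarchy easy to inspect.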


3.3.2 Environment

Environment describes the surroundings in which an AV operates. This concept includes Road and Weather Condition, both of which could affect the vehicle’s ability to navigate and function safely. Road has Road Type, which specifies the design and function of a road, such as Highway, Rural, and Urban. Road can be described by Road Feature, which serves its purpose depending on the type and location of the road. For the purpose of our ontology, we consider only road features that may cause sensors to fail, such as Tunnel, Lane (i.e., marker and width), Inclination, and Curvature. Of these road features, Inclination and Curvature might shrink the Field of View of Sensor. Weather Condition, categorized into Sunny, Rainy, Foggy, and Snow, could adversely affect sensor performance. These conditions are quantified using angle, precipitation, density, and depth, respectively. Severe weather conditions could impair Environment and affect Driving Condition, resulting in Normal or Slippery roads, and may also obscure Obstacle. Two common types of Obstacle are Pedestrian and Object, such as Building, Vehicle, Tree, and Other objects. If obstacles, such as debris, interfere with an AV’s sensors, they could impact the vehicle’s ability to accurately perceive its environment and make safe driving decisions. Sensors also cannot reliably observe objects made of reflective or transparent materials. As a result, Obstacle can cause Sensor to malfunction.

Another environment-related concept is Visibility, which expresses how well an object can be seen in Environment, typically in terms of the distance at which, or the clarity with which, the object can be seen. Visibility can be affected by a variety of contributors, including Sun Light, Road Light, and Air Quality. Good visibility is important for safety and navigation because it allows for monitoring Environment. Given the complexity and dynamic nature of environmental factors, Environment is very likely to degrade the performance of AV Component.
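Each Weather Condition subclass is quantified by its own data property (angle, precipitation, density, and depth, respectively), and only Inclination and Curvature among the road features shrink a sensor's Field of View. The hypothetical Python sketch below encodes those two facts; the class and field names are illustrative, units are left unspecified because the text does not fix them, and holding a single Weather Condition per Environment is one reading of Constraint 7.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Data property that quantifies each Weather Condition subclass.
QUANTIFIED_BY = {"Sunny": "angle", "Rainy": "precipitation", "Foggy": "density", "Snow": "depth"}
FOV_SHRINKING_FEATURES = {"Inclination", "Curvature"}


@dataclass
class WeatherCondition:
    kind: str     # Sunny, Rainy, Foggy, or Snow
    prop: str     # name of the quantifying data property
    value: float  # units are not fixed by the text

    def __post_init__(self) -> None:
        expected = QUANTIFIED_BY.get(self.kind)
        if expected is None:
            raise ValueError(f"unknown Weather Condition: {self.kind}")
        if self.prop != expected:
            raise ValueError(f"{self.kind} is quantified by {expected!r}, not {self.prop!r}")


@dataclass
class Environment:
    weather: WeatherCondition  # a single Weather Condition (one reading of Constraint 7)
    road_features: list[str] = field(default_factory=list)

    def fov_shrinking_features(self) -> list[str]:
        """Road features that may shrink a sensor's Field of View."""
        return [f for f in self.road_features if f in FOV_SHRINKING_FEATURES]


env = Environment(WeatherCondition("Foggy", "density", 0.8), ["Tunnel", "Curvature"])
print(env.fov_shrinking_features())  # ['Curvature']
```

Validating the property name at construction time rejects mismatches such as a Rainy condition quantified by depth before any reasoning takes place.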

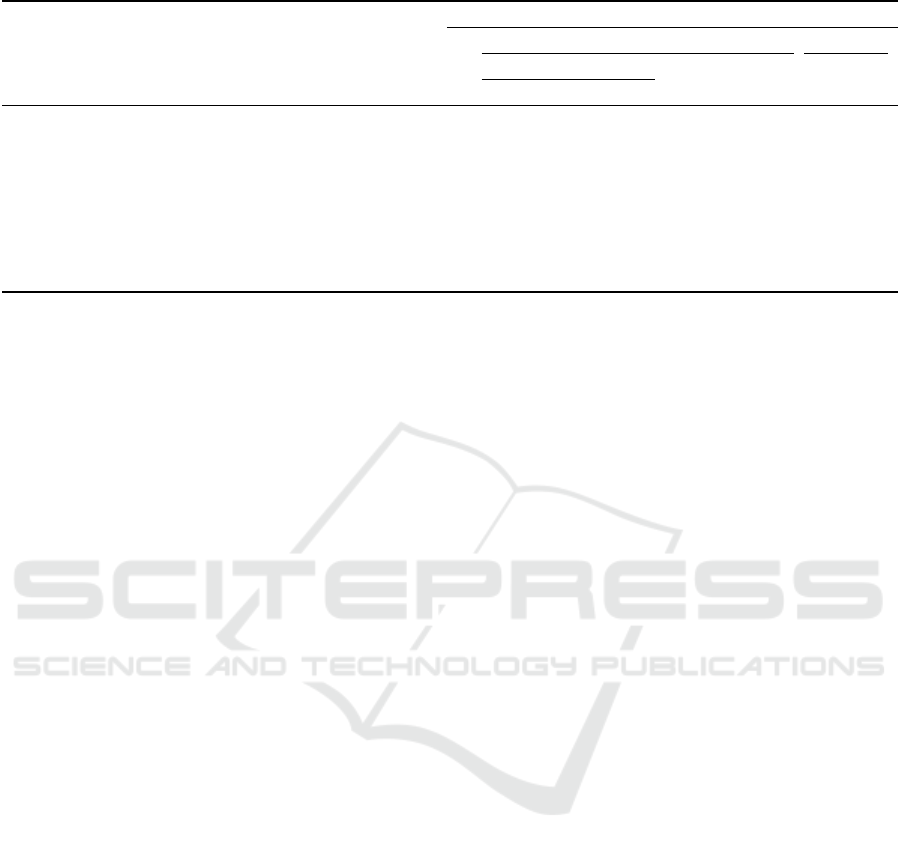

3.3.3 Navigation Tasks

The concept of Navigation Task consists of subclasses that include Localization and Mapping (LM), Perception, and Planning (linked to Navigation Task through hasPart). The taxonomy of Navigation Task is depicted in Figure 2. LM enables AVs to pinpoint their location within the space. Perception has several subtasks: Brake Assistance (BA) inspects the area around AVs and helps them stop before an impending crash with an obstacle; Collision Avoidance (CA) analyzes AV trajectories to maneuver around obstacles; Lane-Keeping Assistance (LKA) actively helps AVs stay in the center of the lane; Parking Assistance (PA) locates vacant parking spots and then parks the vehicle comfortably; and Detection includes tasks such as Object Detection (OD), Object Classification (OC), Object Tracking (OT), Blind-Spot Detection (BSD), and Automatic Distance Control (ADC), all connected using subTaskOf. BSD monitors zones at the sides of the vehicle that navigation sensors cannot sense and therefore requires dedicated sensors. ADC detects the vehicle ahead and ensures that a safe distance is maintained. Planning comprises Route Planning or RP (i.e., an itinerary between two places), Trajectory Planning or TP (i.e., a local path with respect to vehicle and space constraints), and Behavior Planning or BP (i.e., driving decisions such as lane changes). The taskOf relationship links the descendant concepts with Perception and Planning. Each navigation task is executed by a set of algorithms that are designed to be resilient to sensor failures or malfunctions so that certain risks can be mitigated. These algorithms can encounter unknown circumstances, including sensors with manufacturing defects, wear and tear, or software bugs, which can affect their performance. Thus, Sensor can mislead the algorithms, as sensors can be sources of error. The quality of a navigation task in the ontology is expressed through the algorithmAccuracy data property (typically as a percentage).
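The taxonomy in Figure 2 can be represented as a parent map keyed by task abbreviation, which makes it easy to expand a high-level task into the leaf tasks it covers. This hypothetical sketch collapses hasPart, taskOf, and subTaskOf into a single parent link, a simplification of the ontology's distinct relations.

```python
from __future__ import annotations

# child -> parent; hasPart, taskOf and subTaskOf are collapsed into one link here.
TASK_PARENT = {
    "LM": "NavigationTask", "Perception": "NavigationTask", "Planning": "NavigationTask",
    "BA": "Perception", "CA": "Perception", "LKA": "Perception", "PA": "Perception",
    "Detection": "Perception",
    "OD": "Detection", "OC": "Detection", "OT": "Detection", "BSD": "Detection", "ADC": "Detection",
    "RP": "Planning", "TP": "Planning", "BP": "Planning",
}


def leaves_under(task: str) -> list[str]:
    """Return the leaf tasks below `task`, in depth-first order."""
    children = [child for child, parent in TASK_PARENT.items() if parent == task]
    if not children:
        return [task]
    leaves = []
    for child in children:
        leaves.extend(leaves_under(child))
    return leaves


print(leaves_under("Perception"))
# ['BA', 'CA', 'LKA', 'PA', 'OD', 'OC', 'OT', 'BSD', 'ADC']
```

Expanding the root yields 13 leaf tasks, the same set used to index the impacted navigation tasks in the rules table.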

[Figure 2 diagram: Navigation Task hasPart Localization and Mapping, Perception, and Planning; Perception covers Brake Assistance, Collision Avoidance, Lane-Keeping Assistance, Parking Assistance, and Detection (Object Detection, Object Classification, Object Tracking, Blind-Spot Detection, and Automatic Distance Control via subTaskOf); Planning covers Route, Trajectory, and Behavior Planning via taskOf.]

Figure 2: Taxonomy of navigation tasks.

3.3.4 Decision Quality

Decision Quality provides knowledge about the extent to which a decision is likely to produce a desired Driving Action. It is a measure of the effectiveness and appropriateness of a decision, taking into account the available sensors, their constraints, and the objectives of the situation in which AVs operate. Sub-concepts such as Faulty and Uncertain further describe Decision Quality. Faulty quality indicates incorrect decisions due to issues with the sensors involved in processing that decision, whereas Uncertain quality implies noise-induced disruptions. Navigation decisions are typically made by Controller, which is related to Decision Quality via the shows relationship.


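Table 1: Ontology rules. Columns list the impacted navigation tasks (F: failure, U: uncertainty). OD, OC, OT, BSD, and ADC are Detection tasks; Detection, CA, LKA, BA, and PA belong to Perception; RP, TP, and BP belong to Planning.

| Rule # | Condition | LM | OD | OC | OT | BSD | ADC | CA | LKA | BA | PA | RP | TP | BP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | AV is deployed without GNSS. | F |  |  |  |  |  |  |  |  |  | F |  |  |
| 2 | AV drives in Tunnel. | U |  |  |  |  |  |  |  |  |  | U |  |  |
| 3 | AV is deployed without IMU. | U |  |  | U |  |  | U |  | U | U |  | U | U |
| 4 | AV is deployed without Radar. |  | U |  | U | U | U | U |  | U | U |  | U | U |
| 5 | AV is deployed without Lidar. |  | U | U | U | U | U | U |  | U | U |  | U | U |
| 6 | AV is deployed without Camera. | U | U | F | U | U |  | U | U | U | U |  | U | U |
| 7 | AV drives on Road without lane markers. | U |  |  |  |  |  |  | U |  |  |  | U | U |
| 8 | AV drives on a Slippery road or in extreme weather conditions such as Snow, Foggy, or Rainy. | U | U | U | U |  |  | U | U | U | U | U | U | U |
| 9 | AV drives in an environment with low Visibility. | U | U | U | U |  |  | U | U | U | U | U | U | U |
| 10 | AV navigates while facing sun glare. | U | U | U | U |  |  | U | U | U | U | U | U | U |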

3.4 Constraints

Constraints indicate the conditions under which a property (i.e., an object or data property) or a relation holds true for a given ontological element. These constraints include cardinality restrictions, which specify the number of times a property can be used, and value restrictions, which specify the types of values that a property can take, so that the relationships between entities in the ontology are consistent and logical. Beyond these domain-related constraints, we designed various property constraints in our ontology to ensure its integrity and accuracy by limiting the permissible ways in which the properties can be used. As can be seen in Figure 1, the ontology treats all data properties as integer, float, or string, all defined by XML Schema (Biron et al., 2004). Alongside data types, we define the following constraints.

Constraint 1 (Autonomous Vehicle). Each Autonomous Vehicle must include some navigation Component to ensure the presence of automated driving features.

Constraint 2 (Component). Each Component must be classified as either Sensor, for collecting data, or Module, for processing data and control.

Constraint 3 (Module). Each Module must have a designated role of either Actuator, for physically controlling AVs, or Controller, for data processing and decision-making.

Constraint 4 (Controller). Each Controller must be capable of handling one or more Navigation Tasks simultaneously.

Constraint 5 (Environment). Each Road must exhibit at least one descriptive Road Feature.

Constraint 6 (Sensor). Each Sensor must be evaluated separately, with no interactions with other sensors, since each sensor is of a different type with its own specifications.

Constraint 7 (Environment). Weather Condition must be uniquely associated with each Environment.

Constraint 8 (Algorithm). The accuracy of each algorithm must fall within an acceptable range, defined as 80 to 100 percent.
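Several of these constraints can be checked mechanically on an instance before reasoning. The sketch below is a hypothetical pure-Python validator for Constraints 1 to 4, 7, and 8 over a simple dictionary description of a vehicle; in the ontology itself the constraints are OWL class expressions checked by Pellet, and both the dictionary layout and the reading of Constraint 7 as "exactly one Weather Condition" are assumptions made for illustration.

```python
from __future__ import annotations


def check_constraints(av: dict) -> list[str]:
    """Return violations of Constraints 1-4, 7 and 8 (an empty list means none were found)."""
    violations = []
    components = av.get("components", [])
    if not components:  # Constraint 1: some navigation Component
        violations.append("AV has no navigation Component")
    for comp in components:
        name, kind, role = comp.get("name"), comp.get("kind"), comp.get("role")
        if kind not in ("Sensor", "Module"):  # Constraint 2
            violations.append(f"{name}: Component is neither Sensor nor Module")
        if kind == "Module" and role not in ("Actuator", "Controller"):  # Constraint 3
            violations.append(f"{name}: Module has no Actuator/Controller role")
        if role == "Controller" and not comp.get("tasks"):  # Constraint 4
            violations.append(f"{name}: Controller handles no Navigation Task")
    if len(av.get("weather", [])) != 1:  # Constraint 7, read as exactly one
        violations.append("Environment must have exactly one Weather Condition")
    for task, accuracy in av.get("algorithm_accuracy", {}).items():
        if not 80 <= accuracy <= 100:  # Constraint 8
            violations.append(f"{task}: algorithmAccuracy {accuracy}% is outside 80-100%")
    return violations


# Hypothetical instance: the OD algorithm falls below the acceptable accuracy range.
av = {
    "components": [
        {"name": "gnss0", "kind": "Sensor"},
        {"name": "ctrl0", "kind": "Module", "role": "Controller", "tasks": ["LM", "OD"]},
    ],
    "weather": ["Rainy"],
    "algorithm_accuracy": {"LM": 92.5, "OD": 74.0},
}
print(check_constraints(av))  # ['OD: algorithmAccuracy 74.0% is outside 80-100%']
```

Returning every violation, rather than stopping at the first one, mirrors how a reasoner reports all inconsistencies found in a model.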

3.5 Ontology Rules

Rules are statements that establish connections between concepts and properties and facilitate drawing inferences and imposing restrictions on the knowledge contained in the ontology. In particular, rules are designed to explicitly model situations where factors could impact the quality of decisions made by navigation algorithms in AVs. Appropriate rules keep the ontology consistent, coherent, and accurate, preventing errors or inconsistencies. A total of ten rules have been established in the proposed ontology. Table 1 summarizes these rules and the navigation tasks affected by each rule.

Rule 1. If GNSS malfunctions, all decisions made by Controller for LM or RP are considered Faulty.

Rule 2. Passing through a tunnel makes all decisions made by Controller for LM or RP Faulty due to GNSS signal blockage.

Rule 3. If IMU malfunctions, all decisions made by Controller for LM, OT, CA, BA, PA, TP, or BP are considered Uncertain.

Rule 4. If Radar malfunctions, all decisions made by Controller for OD, OT, BSD, ADC, CA, BA, PA, TP, or BP are considered Uncertain.

Rule 5. If Lidar malfunctions, all decisions made by Controller for OD, OC, OT, BSD, ADC, CA, BA, PA, TP, or BP are considered Uncertain.

Rule 6. If Camera malfunctions, all decisions made by Controller for LM, OD, OT, BSD, LKA, CA, BA, PA, TP, or BP are considered Uncertain.

Rule 7. When navigating on a road without lane markers, all decisions made by Controller for LM, LKA, TP, or BP are considered Uncertain.


Table 2: Evaluation scenarios descriptions and results.

Scenario # | Weather Condition | Visibility | Road | Sensor | Impacted Sensor | Navigation Task | Ontology Inference

1 Normal Clear Urban GNSS GNSS LM Uncertain

Heavy traffic Camera IMU, Lidar OD Uncertain

2 Heavy rain Poor Highway GNSS, IMU, Camera, Lidar, Radar Camera LM Uncertain

OD Uncertain

3 Not applicable Clear Tunnel GNSS LM Uncertain

OD Certain

Rule 8. When traveling on a slippery road or under adverse weather conditions, such as snow, fog, or rain, all decisions made by Controller for LM, OD, OC, OT, LKA, CA, BA, PA, RP, TP, or BP are considered Uncertain.

Rule 9. Under low-visibility conditions, all decisions made by Controller for LM, OD, OC, OT, LKA, CA, BA, PA, RP, TP, or BP are considered Uncertain.

Rule 10. During sunrise or sunset, an AV traveling towards the east or west and relying on visual sensors may face glare conditions that impair those sensors’ performance. Under such conditions, all decisions made by Controller for LM, OD, OC, OT, LKA, CA, BA, PA, TP, or BP are considered Uncertain.
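These rules share one shape: a condition maps to a set of navigation tasks whose Controller decisions receive a Decision Quality label. The hypothetical Python sketch below encodes a subset of them (Rules 1, 3 to 5, and 7 to 9, transcribed from the statements above) as a lookup table and applies every rule whose condition holds. The condition names are illustrative, and when several rules touch the same task the sketch keeps the more severe label (Faulty over Uncertain); that precedence is an assumption, since the rules do not state how overlaps are resolved. In the ontology itself, the rules are SWRL rules evaluated by Pellet.

```python
from __future__ import annotations

from enum import IntEnum


class Quality(IntEnum):
    """Decision Quality labels; ordering them by severity is an assumption of this sketch."""
    CERTAIN = 0
    UNCERTAIN = 1
    FAULTY = 2


WEATHER_OR_VISIBILITY_TASKS = {"LM", "OD", "OC", "OT", "LKA", "CA", "BA", "PA", "RP", "TP", "BP"}

# Hypothetical condition names -> (tasks whose decisions are affected, resulting label).
RULES = {
    "no_gnss": ({"LM", "RP"}, Quality.FAULTY),                                       # Rule 1
    "no_imu": ({"LM", "OT", "CA", "BA", "PA", "TP", "BP"}, Quality.UNCERTAIN),        # Rule 3
    "no_radar": ({"OD", "OT", "BSD", "ADC", "CA", "BA", "PA", "TP", "BP"},
                 Quality.UNCERTAIN),                                                   # Rule 4
    "no_lidar": ({"OD", "OC", "OT", "BSD", "ADC", "CA", "BA", "PA", "TP", "BP"},
                 Quality.UNCERTAIN),                                                   # Rule 5
    "no_lane_markers": ({"LM", "LKA", "TP", "BP"}, Quality.UNCERTAIN),               # Rule 7
    "adverse_weather": (WEATHER_OR_VISIBILITY_TASKS, Quality.UNCERTAIN),             # Rule 8
    "low_visibility": (WEATHER_OR_VISIBILITY_TASKS, Quality.UNCERTAIN),              # Rule 9
}


def infer(conditions: set[str], tasks: set[str]) -> dict[str, Quality]:
    """Label each task the Controller uses, keeping the most severe label that applies."""
    quality = {task: Quality.CERTAIN for task in tasks}
    for condition in conditions:
        affected, label = RULES.get(condition, (set(), Quality.CERTAIN))
        for task in affected & tasks:
            quality[task] = max(quality[task], label)
    return quality


# Hypothetical situation: an AV using only LM and OD, in rain, with GNSS unavailable.
result = infer({"adverse_weather", "no_gnss"}, {"LM", "OD"})
print({task: label.name for task, label in sorted(result.items())})
# {'LM': 'FAULTY', 'OD': 'UNCERTAIN'}
```

Because the labels are ordered integers, combining rules reduces to taking a maximum, so the result does not depend on the order in which conditions are evaluated.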

3.6 Ontology Development

The proposed ontology was implemented in Protégé, a well-established open-source platform for creating ontologies (Musen, 2015). To ensure flexibility and standardization, the ontology was encoded in the Web Ontology Language (OWL), a W3C recommendation that builds on the Resource Description Framework (RDF) and RDF Schema (RDFS), which facilitates its sharing and integration. Protégé, together with OWL, offers a comprehensive range of tools for defining classes, properties, and their relationships, and for articulating rules and constraints. To detect inconsistencies and enable inference over the ontology, we employed the Pellet reasoner supported by Protégé (Sirin et al., 2007).

Protégé also provides DL (description logic) queries for retrieving ontology information. The ontology constraints were encoded as class expressions. To specify complex logical expressions and inference rules for reasoning over the ontology, the Semantic Web Rule Language (SWRL) was adopted, which enabled Pellet to perform automated inference and complex reasoning tasks, enhancing the ontology’s effectiveness and usefulness.

The final version of the ontology consists of 71 classes, 140 object properties, and 39 data properties. The selected classes capture the essential features and relationships in the ontology domain. The properties define the relationships between the classes and articulate the constraints and rules that govern the ontology. In addition to the classes and properties, we included 635 axioms in the ontology to formally represent the relationships and constraints between the classes and properties. These axioms specify the logical rules that must be followed to maintain consistency and coherence within the ontology, thereby enabling a more complete and nuanced representation of the knowledge in the ontology domain. To further enhance the reasoning capabilities of the ontology, we also encoded 10 SWRL rules. The ontology is available at https://github.com/MHarbi/Safety-AV-Nav-Ontology.

4 EVALUATION

The ontology was evaluated to assess its effectiveness in addressing AV navigation safety. The evaluation involved executing ontology queries in Protégé 5.6.1 on a MacBook Pro running macOS 12.6.9, with model reasoning performed by Pellet 2.2.0. Following evaluation strategies described in the literature (Degbelo, 2017; Obrst et al., 2007), three scenarios were devised to investigate specific aspects of AV navigation safety. The competency questions guiding the evaluation were as follows. What navigation tasks are implemented? What is the level of uncertainty for each navigation task? What are the identifiable sources of uncertainty? Which sensors are impacted by these sources? Table 2 describes the scenarios and their results. In each scenario, the AV relied on only two navigation tasks, LM and OD, to make navigation-related decisions, and the ontology successfully identified both.

In the first scenario, an AV navigates through heavy urban traffic under normal weather conditions with clear visibility. In this context, the AV's cameras and GNSS operate optimally, but its IMU and lidar do not. Reasoning over the ontology model infers Uncertain decisions for both navigation tasks (LM and OD). These uncertainties are primarily attributed


to the unavailability of the IMU and lidar, which play an important role in enhancing the vehicle's perception, particularly in complex traffic scenarios.

The second scenario takes place on a highway in heavy rain. Although the AV has a full array of sensors, the ontology still marked both navigation task decisions as Uncertain. This is because the adverse weather severely impedes visibility and, consequently, camera performance. In particular, low visibility impairs the cameras' ability to identify nearby vehicles and obstacles and to localize the AV.

The third scenario is a tunnel environment, where camera visibility and weather conditions are not factors of concern. Here, the ontology rated OD as Certain but LM as Uncertain. Upon entering the tunnel, the AV's GNSS experiences signal degradation or total loss due to the surrounding infrastructure, which compromises GNSS as a sole sensor for position estimation. In contrast, the IMU consistently delivers precise information on the vehicle's state, irrespective of GNSS signal availability. In addition, cameras remain functional under adequate artificial illumination, allowing the detection of objects and environmental features, while the lidar can supply maps of the tunnel environment. Thus, despite the temporary loss of GNSS signals inside the tunnel, sensor fusion algorithms can overcome potential navigation task failures. Upon exiting, the AV reacquires the GNSS signal and adjusts its global path planning accordingly.
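The three scenarios can be replayed with a small Python sketch that answers the competency questions (uncertainty per task, sources of uncertainty, impacted sensors). The sensor dependencies in `REQUIRED_SENSORS` and the source-to-sensor mapping in `SOURCE_IMPACTS` are hypothetical simplifications chosen to agree with the scenario descriptions above; they are not extracted from the published ontology, which reaches its conclusions through OWL axioms and SWRL rules evaluated by Pellet.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical sensor dependencies for the two tasks used in the scenarios.
REQUIRED_SENSORS = {
    "LM": {"gnss", "imu", "camera"},
    "OD": {"camera", "lidar"},
}

# Hypothetical mapping from an environmental source of uncertainty to the sensors it degrades.
SOURCE_IMPACTS = {
    "heavy_rain": {"camera"},  # reduced visibility
    "tunnel": {"gnss"},        # signal degradation or loss
}


@dataclass
class Scenario:
    name: str
    unavailable: set[str] = field(default_factory=set)  # sensors that are not operating
    sources: set[str] = field(default_factory=set)      # environmental sources of uncertainty


def assess(scenario: Scenario) -> dict:
    """Answer the competency questions for one scenario."""
    impacted = {src: SOURCE_IMPACTS.get(src, set()) for src in scenario.sources}
    degraded = set(scenario.unavailable).union(*impacted.values())
    # A task is Uncertain if any sensor it depends on is unavailable or degraded.
    tasks = {
        task: "Uncertain" if needs & degraded else "Certain"
        for task, needs in REQUIRED_SENSORS.items()
    }
    return {"tasks": tasks, "impacted_by_source": impacted,
            "unavailable": set(scenario.unavailable)}


SCENARIOS = [
    Scenario("urban traffic", unavailable={"imu", "lidar"}),
    Scenario("highway in heavy rain", sources={"heavy_rain"}),
    Scenario("tunnel", sources={"tunnel"}),
]

for s in SCENARIOS:
    print(s.name, assess(s)["tasks"])
```

Under these assumptions, the sketch reproduces the outcomes described above: both tasks are Uncertain in the urban and rainy-highway scenarios, and only LM is Uncertain in the tunnel.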

The evaluation illustrates the ontology's capacity to assess the quality and reliability of navigation tasks under diverse and challenging circumstances. By understanding these circumstances, AVs can adopt appropriate strategies to avoid potential pitfalls and optimize sensor resources to navigate safely through diverse and dynamic environments.

5 CONCLUSIONS AND FUTURE WORK

In this paper, we proposed an ontology for AV navigation sensor uncertainty. Using the knowledge represented in this ontology, uncertainties from different sources that affect sensor performance can be assessed and handled for safe operation. We evaluated the proposed ontology with three AV driving scenarios in which navigation sensors are typically challenged and prone to uncertainty. The results indicate that the proposed ontology can identify the sources of uncertainty, the impacted sensors, and the affected navigation tasks, thereby supporting the safety of AV navigation.

While the results of these initial validations are promising, the proposed ontology, like any new ontology in any domain, needs to be tested with a wide variety of scenarios, examined by the AV community, and updated based on the feedback received.

REFERENCES

Alharbi, M. and Karimi, H. A. (2020). A global path planner for safe navigation of autonomous vehicles in uncertain environments. Sensors, 20(21):6103.

Alharbi, M. and Karimi, H. A. (2021). Context-aware sensor uncertainty estimation for autonomous vehicles. Vehicles, 3(4):721–735.

Bilik, I., Longman, O., Villeval, S., and Tabrikian, J. (2019). The rise of radar for autonomous vehicles: Signal processing solutions and future research directions. IEEE Signal Processing Magazine, 36(5):20–31.

Biron, P. V. and Malhotra, A. (2004). XML Schema Part 2: Datatypes. W3C recommendation, W3C. https://www.w3.org/TR/2004/REC-xmlschema-2-20041028.

Chen, W. and Kloul, L. (2018). An ontology-based approach to generate the advanced driver assistance use cases of highway traffic. In 10th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management - KEOD (IC3K 2018), pages 75–83. SciTePress.

Degbelo, A. (2017). A snapshot of ontology evaluation criteria and strategies. In 13th International Conference on Semantic Systems, pages 1–8.

El Asmar, B., Chelly, S., and Färber, M. (2021). Aware: An ontology for situational awareness of autonomous vehicles in manufacturing. In Proceedings of the AAAI Workshops on Commonsense Knowledge Graphs (CSKGs).

Gyllenhammar, M., Johansson, R., Warg, F., Chen, D., Heyn, H.-M., Sanfridson, M., Söderberg, J., Thorsén, A., and Ursing, S. (2020). Towards an operational design domain that supports the safety argumentation of an automated driving system. In 10th European Congress on Embedded Real Time Systems (ERTS 2020), Toulouse, France.

Hülsen, M., Zöllner, J. M., and Weiss, C. (2011). Traffic intersection situation description ontology for advanced driver assistance. In 2011 IEEE Intelligent Vehicles Symposium (IV), pages 993–999.

Jesenski, S., Stellet, J. E., Schiegg, F., and Zöllner, J. M. (2019). Generation of scenes in intersections for the validation of highly automated driving functions. In 2019 IEEE Intelligent Vehicles Symposium (IV), pages 502–509. IEEE.

Kalra, N. and Paddock, S. M. (2016). Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Transportation Research Part A: Policy and Practice, 94:182–193.

KEOD 2023 - 15th International Conference on Knowledge Engineering and Ontology Development


Krajewski, R., Moers, T., Nerger, D., and Eckstein, L. (2018). Data-driven maneuver modeling using generative adversarial networks and variational autoencoders for safety validation of highly automated vehicles. In 2018 21st International Conference on Intelligent Transportation Systems (ITSC), pages 2383–2390. IEEE.

Mueller, A. S., Cicchino, J. B., and Zuby, D. S. (2020). What humanlike errors do autonomous vehicles need to avoid to maximize safety? Journal of Safety Research, 75:310–318.

Musen, M. A. (2015). The Protégé project: A look back and a look forward. AI Matters, 1(4):4–12.

National Highway Traffic Safety Administration (2022). Traffic safety facts 2020 data: Summary of motor vehicle crashes. Report DOT HS 813 369, U.S. Department of Transportation.

Noy, N. F. and McGuinness, D. L. (2001). Ontology development 101: A guide to creating your first ontology. Report KSL-01-05 and SMI-2001-0880, Stanford Knowledge Systems Laboratory.

Obrst, L., Ceusters, W., Mani, I., Ray, S., and Smith, B. (2007). The evaluation of ontologies. In Baker, C. J. O. and Cheung, K.-H., editors, Semantic Web: Revolutionizing Knowledge Discovery in the Life Sciences, pages 139–158. Springer US, Boston, MA.

O'Mahony, N., Campbell, S., Krpalkova, L., Riordan, D., Walsh, J., Murphy, A., and Ryan, C. (2018). Computer vision for 3D perception. In Arai, K., Kapoor, S., and Bhatia, R., editors, Intelligent Systems and Applications, IntelliSys 2018, pages 788–804. Springer International Publishing.

Paull, L., Severac, G., Raffo, G. V., Angel, J. M., Boley, H., Durst, P. J., Gray, W., Habib, M., Nguyen, B., Ragavan, S. V., et al. (2012). Towards an ontology for autonomous robots. In 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 1359–1364.

Pendleton, S. D., Andersen, H., Du, X., Shen, X., Meghjani, M., Eng, Y. H., Rus, D., and Ang, M. H. (2017). Perception, planning, control, and coordination for autonomous vehicles. Machines, 5(1):6.

Regele, R. (2008). Using ontology-based traffic models for more efficient decision making of autonomous vehicles. In Fourth International Conference on Autonomic and Autonomous Systems (ICAS'08), pages 94–99.

Riedmaier, S., Ponn, T., Ludwig, D., Schick, B., and Diermeyer, F. (2020). Survey on scenario-based safety assessment of automated vehicles. IEEE Access, 8:87456–87477.

Schlenoff, C., Balakirsky, S., Uschold, M., Provine, R., and Smith, S. (2003). Using ontologies to aid navigation planning in autonomous vehicles. The Knowledge Engineering Review, 18(3):243–255.

Sirin, E., Parsia, B., Grau, B. C., Kalyanpur, A., and Katz, Y. (2007). Pellet: A practical OWL-DL reasoner. Journal of Web Semantics, 5(2):51–53. Elsevier - Software Engineering and the Semantic Web.

Syzdykbayev, M., Hajari, H., and Karimi, H. A. (2019). An ontology for collaborative navigation among autonomous cars, drivers, and pedestrians in smart cities. In 2019 4th International Conference on Smart and Sustainable Technologies (SpliTech), pages 1–6.

Yu, S. and Liu, Z. (2021). The ionospheric condition and GPS positioning performance during the 2013 tropical cyclone Usagi event in the Hong Kong region. Earth, Planets and Space, 73(1):66.

Zhao, L., Ichise, R., Mita, S., and Sasaki, Y. (2015a). Core ontologies for safe autonomous driving. In 14th International Semantic Web Conference (Posters & Demonstrations).

Zhao, L., Ichise, R., Sasaki, Y., Liu, Z., and Yoshikawa, T. (2016). Fast decision making using ontology-based knowledge base. In 2016 IEEE Intelligent Vehicles Symposium (IV), pages 173–178.

Zhao, L., Ichise, R., Yoshikawa, T., Naito, T., Kakinami, T., and Sasaki, Y. (2015b). Ontology-based decision making on uncontrolled intersections and narrow roads. In 2015 IEEE Intelligent Vehicles Symposium (IV), pages 83–88.

Zhou, J. and del Re, L. (2017). Identification of critical cases of ADAS safety by FOT based parameterization of a catalogue. In 2017 11th Asian Control Conference (ASCC), pages 453–458. IEEE.
