Quantifying Fairness Disparities in Graph-Based Neural Network

Recommender Systems for Protected Groups

Nikzad Chizari

1 a

, Keywan Tajfar

2 b

, Niloufar Shoeibi

1 c

and Mar

´

ıa N. Moreno-Garc

´

ıa

1 d

1

Department of Computer Science and Automation, Science Faculty, University of Salamanca,

Plaza de los Ca

´

ıdos sn, 37008 Salamanca, Spain

2

College of Science, School of Mathematics, Statistics, and Computer Science, Department of Statistics,

University of Tehran, Tehran, Iran

Keywords:

Recommender Systems, Bias, Fairness, Graph-Based Neural Networks.

Abstract:

The wide acceptance of Recommender Systems (RS) among users for product and service suggestions has led

to the proposal of multiple recommendation methods that have contributed to solving the problems presented

by these systems. However, the focus on bias problems is much more limited. Some of the most successful

and recent methods, such as Graph Neural Networks (GNNs), present problems of bias amplification and

unfairness that need to be detected, measured, and addressed. In this study, an analysis of RS fairness is

conducted, focusing on measuring unfairness toward protected groups, including gender and age. We quantify

fairness disparities within these groups and evaluate recommendation quality for item lists using a metric based

on Normalized Discounted Cumulative Gain (NDCG). Most bias assessment metrics in the literature are only

valid for the rating prediction approach, but RS usually provide recommendations in the form of item lists.

The metric for lists enhances the understanding of fairness dynamics in GNN-based RS, providing a more

comprehensive perspective on the quality and equity of recommendations among different user groups.

1 INTRODUCTION

The abundance of information poses a challenge for

users to find products that align with their prefer-

ences, and to address this, Recommender Systems

(RS) have proven to be essential tools. These systems

are now widely integrated into diverse applications

like E-commerce platforms, entertainment platforms,

social networks, and lifestyle apps (Ricci et al., 2022;

Zheng and Wang, 2022; P

´

erez-Marcos et al., 2020;

Lin et al., 2022; Chen et al., 2020). RS cannot only

help lessen the problem of information overload but

also lead to personalization based on users’ interests

(Rajeswari and Hariharan, 2016).

A great amount of research work in this area has

been dedicated to enhancing the performance of RS

and addressing their issues, among which bias mitiga-

tion is one of the most recent. Two of the most critical

issues for RS are bias and fairness, which can lead to

discrimination. A systematic and persistent departure

a

https://orcid.org/0000-0002-7300-6126

b

https://orcid.org/0000-0001-7624-5328

c

https://orcid.org/0000-0003-4171-1653

d

https://orcid.org/0000-0003-2809-3707

from a true value or an accurate portrayal of reality

is referred to as bias, which occurs when a variety

of elements that affect the decision-making or judg-

ment process are present. Biases often come from un-

derlying imbalances and inequalities in data, result-

ing in biased recommendations that can influence in

users’ choices of consumption (Boratto and Marras,

2021; Misztal-Radecka and Indurkhya, 2021). Also,

algorithm design can result in bias and discrimina-

tion in automated decisions (Misztal-Radecka and In-

durkhya, 2021; Gao et al., 2022b).

The widespread use of artificial intelligence and

machine learning techniques in society has resulted

in undesirable effects due to biased models, including

economic, legal, ethical, and security issues that can

harm companies (Di Noia et al., 2022; Fahse et al.,

2021; Kordzadeh and Ghasemaghaei, 2022; Boratto

et al., 2021; Boratto and Marras, 2021; Wang et al.,

2023). Moreover, users may be dissatisfied with bi-

ased recommendations, further exacerbating the prob-

lem (Gao et al., 2022a). In addition, mitigation of

bias is a concern of international organizations whose

regulations include obligations related to this issue,

especially in sensitive areas. (Di Noia et al., 2022).

176

Chizari, N., Tajfar, K., Shoeibi, N. and Moreno-García, M.

Quantifying Fairness Disparities in Graph-Based Neural Network Recommender Systems for Protected Groups.

DOI: 10.5220/0012258700003584

In Proceedings of the 19th International Conference on Web Information Systems and Technologies (WEBIST 2023), pages 176-187

ISBN: 978-989-758-672-9; ISSN: 2184-3252

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

The effects of decision making based on biased mod-

els can also be ethical and lead to decisions that dis-

criminate against minority or marginalized groups.

Recent advances in deep learning, including

Graph Neural Networks (GNNs), have improved per-

formance of RS and addressed challenges, even with

sparse data (Mu, 2018; Yu et al., 2023). GNNs excel

at capturing relationships in graph data through mes-

sage passing (Zhou et al., 2020) and have gained pop-

ularity for various graph-related tasks (Dong et al.,

2022b; Zhang et al., 2021; Wu et al., 2020b). How-

ever, they raise concerns about bias and fairness,

potentially discriminating against demographic sub-

groups defined by sensitive attributes like age, gen-

der, or race. Addressing biases in GNNs remains rela-

tively unexplored (Dong et al., 2022b; Dai and Wang,

2021; Dong et al., 2022a; Chen et al., 2022; Xu et al.,

2021; Zeng et al., 2021; Chizari et al., 2022).

In RS, user-item interactions can be viewed as

graphs, with the potential for improvement through

additional data like social dynamics or context. While

neural network-based methods, especially deep learn-

ing, have gained traction in RS, they excel at captur-

ing complex user-item relationships. However, they

are limited to Euclidean data, struggling with intricate

high-order structures (Zhou et al., 2020; Gao et al.,

2022b). Recent advancements in Graph Neural Net-

works (GNNs) have addressed these limitations by

extending deep learning’s capabilities to handle non-

Euclidean complexities (Bronstein et al., 2017; Li,

2023).

Several investigations have underscored the influ-

ence of graph structures and the underlying message-

passing mechanisms within GNNs, shedding light

on their propensity to accentuate both fairness con-

cerns and broader social biases (Chizari et al., 2022;

Dai and Wang, 2021; Chizari et al., 2023). No-

tably, within the landscape of social networks featur-

ing graph architectures, nodes characterized by anal-

ogous sensitive attributes often exhibit a predilection

for establishing connections with one another, distin-

guishing them from nodes marked by disparate at-

tributes. This observable phenomenon engenders an

environment wherein nodes sharing comparable sen-

sitive traits become recipients of akin representations

stemming from the amalgamation of neighboring fea-

tures within the GNN framework. Conversely, nodes

endowed with distinct sensitive attributes garner di-

vergent representations. The ramifications of this dy-

namic are palpable, as it introduces a discernible bias

into the decision-making trajectory (Dai and Wang,

2021).

Sensitive attributes in data, encompassing char-

acteristics like race, gender, sexual orientation, reli-

gion, age, and disability status, are considered pri-

vate and protected by privacy laws due to the po-

tential for discrimination and harm (Oneto and Chi-

appa, 2020). Discrimination concerns socially sig-

nificant categories associated with these attributes,

legally protected in the United States (Barocas et al.,

2017). Recognizing these sensitive attributes is essen-

tial in RS to ensure fairness and prevent biased rec-

ommendations that may be viewed as discriminatory

under European or US laws.

In this study, the aim is to measure group unfair-

ness and subgroup unfairness with sensitive attributes.

We focus on the evaluation of item recommendation

lists since there is hardly any work in the literature

aimed at this type of output of RS, but most of it is

focused on the rating prediction approach.

2 STATE OF THE ART

In this section, we present a comprehensive overview

of prior research endeavors. This segment delves into

the realm of bias and fairness challenges, exploring

various evaluation approaches. The survey encom-

passes multiple layers, ranging from machine learn-

ing (ML) to GNN-based RS. We direct particular at-

tention toward GNN-based RS models and the diverse

array of fairness evaluation metrics employed in this

context with respect to sensitive groups.

2.1 Bias and Fairness in Machine

Learning (ML)

Machine learning (ML) models, which are trained on

human-generated data, can inherit biases present in

the data (Alelyani, 2021; Zeng et al., 2021). These

biases can emerge due to various factors during data

collection and sampling (Bruce et al., 2020). Unfortu-

nately, such biases can persist in ML models, leading

to unfair decisions and suboptimal outcomes (Fahse

et al., 2021; Gao et al., 2022b; Mehrabi et al., 2021).

The ML models themselves can even exacerbate these

biases, impacting decision-making processes (Bern-

hardt et al., 2022). It’s evident that bias can manifest

throughout the ML lifecycle, spanning data collec-

tion, pre-processing, algorithm design, model train-

ing, and result interpretation (Alelyani, 2021; Zeng

et al., 2021). These biases can also originate ex-

ternally from societal inequalities and discrimination

(Bruce et al., 2020).

Numerous research endeavors focus on identify-

ing and assessing biases and unfairness, especially

concerning protected groups like gender, age, and

Quantifying Fairness Disparities in Graph-Based Neural Network Recommender Systems for Protected Groups

177

race. Various metrics rooted in statistical parame-

ters are employed in these studies. Recent exper-

iments emphasize the need to understand bias ori-

gins in specific contexts, pinpoint problems, and

conduct accurate evaluations, providing a founda-

tion for bias mitigation techniques (Caton and Haas,

2020; Verma and Rubin, 2018; Feldman et al.,

2015; Hardt et al., 2016; Alelyani, 2021). Metrics

can be categorized as individual-level or group-level

\cite{caton2020fairness}. Individual-level metrics

assess treatment equality for individuals with simi-

lar attributes, while group-level metrics evaluate dis-

parate treatment among various groups.

2.2 Bias and Fairness in GNN-Based

Models

Graph Neural Network (GNN)-based models have

recently garnered attention due to their strong per-

formance and applicability in various graph learning

tasks (Dong et al., 2022b; Zhang et al., 2021; Wu

et al., 2020b). However, despite their achievements,

these algorithms are not immune to bias and fair-

ness challenges. GNNs can inadvertently exhibit bias

towards specific demographic subgroups defined by

sensitive attributes such as age, gender, and race. Fur-

thermore, research efforts towards understanding and

measuring biases in GNNs have been relatively lim-

ited (Dong et al., 2022b; Dai and Wang, 2021; Dong

et al., 2022a; Chen et al., 2022; Xu et al., 2021; Zeng

et al., 2021).

Bias challenges within GNN algorithms stem

from various factors, including biases embedded in

the input network structure. While the message-

passing mechanism is commonly associated with ex-

acerbating bias, other aspects of the GNN’s net-

work structure are also influential. Understanding

how structural biases manifest as biased predictions

present challenges due to gaps in comprehension,

such as the Fairness Notion Gap, Usability Gap, and

Faithfulness Gap elucidated in (Dong et al., 2022b).

The Fairness Notion Gap concerns instance-level bias

evaluation, the Usability Gap pertains to fairness in-

fluenced by computational graph edges and their con-

tributions, and the Faithfulness Gap addresses ensur-

ing accurate bias explanations. The work in (Dong

et al., 2022b) addresses these gaps by introducing a

bias evaluation metric for node predictions and an

explanatory framework. This metric quantifies node

contributions to the divergence between output dis-

tributions of sensitive node subgroups based on at-

tributes. While the literature explores various strate-

gies to mitigate biases in GNN-based models, focused

research on this aspect remains relatively limited.

In this realm, some studies including (Dai and

Wang, 2021) and (Li et al., 2021) aim to combat dis-

crimination and enhance fairness in GNNs with con-

sideration to sensitive attribute information. In (Dai

and Wang, 2021), a method is introduced that reduces

bias while maintaining high accuracy in node classi-

fication. On the other hand, (Li et al., 2021) presents

an approach for learning a fair adjacency matrix with

strong graph structural constraints, aiming to achieve

fair link prediction while minimizing the impact on

accuracy. Additionally, (Loveland et al., 2022) pro-

poses two model-agnostic algorithms for edge edit-

ing, leveraging gradient information from a fairness

loss to identify edges that promote fairness enhance-

ments.

2.3 Bias and Fairness in RS

Bias and fairness challenges within the environment

of RS, encompass varied interpretations and can be

categorized into distinct groups. Viewing this from

a broader perspective, bias can be segmented into

three classes akin to the divisions outlined for Ma-

chine Learning (ML) in (Mehrabi et al., 2021). These

classes encompass bias in input data, signifying the

data collection phase involving users; algorithmic

bias in the model, manifesting during the learning

phase of recommendation models based on the col-

lected data; and bias in results, which impact subse-

quent user decisions and actions (Chen et al., 2020;

Baeza-Yates, 2016). Expanding upon the intricacies

of bias, these three classes can be further broken down

into sub-classes, creating an interconnected circular

framework.

Bias in data, stemming from disparities in test

and training data distribution, manifests in various

forms including selection bias, exposure bias, con-

formity bias, and position bias. Selection bias oc-

curs when skewed rating distributions inadequately

represent the entire rating spectrum. Exposure bias

arises from users predominantly encountering spe-

cific items, leading to unobserved interactions that

may not reflect their true preferences. Conformity

bias emerges when users mimic the behavior of oth-

ers due to skewed interaction labels. Position bias

is seen when users favor items in higher positions

over genuinely relevant ones (Chen et al., 2020; Sun

et al., 2019). Algorithmic bias can occur through-

out model creation, data pre-processing, training, and

evaluation stages. Inductive bias, a constructive ele-

ment, enhances model generalization by making as-

sumptions that improve learning from training data

and informed decision-making on unseen test data

(Chen et al., 2020). Outcomes of bias fall into two

WEBIST 2023 - 19th International Conference on Web Information Systems and Technologies

178

categories: popularity bias and unfairness. Popularity

bias results from the long-tail effect in ratings, where

a few popular items dominate user interactions, po-

tentially leading to elevated scores for them at the

expense of less popular items (Ahanger et al., 2022;

Chen et al., 2020). Unfairness arises from systematic

discrimination against specific groups (Chen et al.,

2020).

These various forms of biases collectively con-

tribute to a circular pattern, wherein biases in the

data are propagated to the models, subsequently in-

fluencing the outcomes. This cycle is completed as

biases from the outcomes find their way back to the

data. Throughout each of these stages, new biases

can be introduced, thus perpetuating the cycle (Fab-

bri et al., 2022; Chen et al., 2020). This cyclical be-

havior adds complexity to the task of identifying and

addressing biases, further emphasizing the challenge

of bias recognition and mitigation (Mansoury et al.,

2021; Chen et al., 2020).

Conversational Recommender Systems (CRS) are

investigated in (Lin et al., 2022) to explore popularity

bias systematically, introducing metrics from differ-

ent angles such as exposure, success, and conversa-

tional utility. Similarly, (Abdollahpouri et al., 2019)

addresses popularity bias and long-tail distribution in

RS, proposing metrics like Average Recommendation

Popularity (ARP), Average Percentage of Long Tail

Items (APLT), and Average Coverage of Long Tail

items (ACLT). However, the focus extends beyond

popularity bias to the concern of unfairness towards

protected groups due to biased recommendations. In

the RS field, fairness has gained significance, being

recognized as a resource allocation tool that shapes

information exposure for users (Wang et al., 2021).

This concept of fairness is categorized into process

fairness (relating to the recommendation model) and

output fairness (influencing users’ information expe-

riences).

• Process fairness pertains to equitable allocation

within the models, features (e.g., race, gender),

and learned representations.

• Outcome fairness, known as distributive justice,

ensures fairness in recommendation results (Wang

et al., 2021). Outcome fairness comprises two

sub-categories: Grouped by Target and Grouped

by Concept.

• Grouped by Target includes group-level and

individual-level fairness. Group-level fairness

involves fair outcomes across different groups,

while individual-level fairness ensures fairness at

the individual level (Wang et al., 2021).

• Grouped by Concept consists of multiple catego-

rizations:

– Consistent Fairness at the individual level em-

phasizes uniform treatment for similar individ-

uals.

– Consistent Fairness at the group level strives for

equitable treatment across different groups.

– Calibrated fairness, or merit-based fairness, re-

lates an individual’s merit to the outcome value.

– Counterfactual fairness mandates identical out-

comes in both real and counterfactual scenar-

ios.

– Envy-free fairness prevents individuals from

envying others’ outcomes.

– Rawlsian maximin fairness maximizes results

for the weakest individual or group.

– Maximin-shared Fairness ensures outcomes

surpass each individual’s (or group’s) maximin

share (Wang et al., 2021).

The correlation between bias and fairness is very im-

portant. An in-depth examination is carried out in

(Boratto et al., 2022) to address methods for alle-

viating consumer unfairness in the context of rat-

ing prediction using real-world datasets (LastFM and

Movielens). The study entails a three-fold analysis.

Firstly, the influence of bias mitigation on model ac-

curacy, measured through metrics like NDCG/RMSE,

is evaluated. Secondly, the impact of bias mitigation

on unfairness is assessed. Lastly, the study explores

whether disparate impact invariably harms minority

groups, as Demographic Parity (DP) indicates. This

investigation underscores the complexities involved

in this domain and proposes potential solutions and

optimization strategies. The selection of appropri-

ate metrics for conducting such evaluations is also

deemed crucial. This comprehensive study holds sub-

stantial relevance in the field.

2.4 Bias and Fairness in GNN-Based RS

The adoption of GNN-based RS has shown promise

in enhancing result accuracy, as noted in previous

studies (Steck et al., 2021; Khan et al., 2021; Mu,

2018). However, this improved performance often

comes at the cost of introducing bias and fairness

issues (Chizari et al., 2023; Dai and Wang, 2021).

The inherent graph structure and the message-passing

mechanism within GNNs can exacerbate bias prob-

lems, leading to inequitable outcomes. Furthermore,

many RS applications are situated within social net-

work contexts, where graph structures are prevalent.

In such systems, nodes sharing similar sensitive at-

tributes tend to establish connections with one an-

other, distinguishing them from nodes with differing

Quantifying Fairness Disparities in Graph-Based Neural Network Recommender Systems for Protected Groups

179

sensitive attributes (e.g., the formation of connections

among young individuals in social networks). This

phenomenon creates an environment where nodes of

comparable sensitive features receive akin represen-

tations through the aggregation of neighbor features

within GNNs, while nodes with distinct sensitive

features receive disparate representations. This dy-

namic results in a pronounced bias issue influencing

decision-making processes (Dai and Wang, 2021).

In GNN-based RS, specific sensitive attributes

can exacerbate existing biases within the network,

prompting the need to quantify fairness in these con-

texts. To tackle this issue, relevant metrics should

consider the distribution of positive classifications

across distinct groups defined by various values of

the sensitive attribute (Rahman et al., 2019; Wu et al.,

2020a).

Recent research in GNN-based RS has addressed

fairness issues and sensitive attributes. For example,

(Rahman et al., 2019) focuses on quantifying and rec-

tifying fairness problems, particularly group fairness

and disparate impact, in graph embeddings. It intro-

duces a concept called ”equality of representation” to

assess fairness in friendship-based RS. These meth-

ods are applied to real-world datasets, leading to the

development of a fairness-aware graph embedding al-

gorithm that effectively mitigates bias and improves

key metrics.

Study of (Wu et al., 2021), the aim is to make fair

recommendations by filtering sensitive information

from representation learning. They use user and item

embeddings, sensitive features, and a graph-based ad-

versarial training process. Fairness is assessed with

metrics like AUC for binary attributes and micro-

averaged F1 for multivalued attributes, considering

gender attribute imbalance. The model is tested on

Lastfm-360K and MovieLens datasets.

In summary, despite the absence of sensitive fea-

tures being a significant challenge in GNN-based RS,

most research in this domain has focused on tack-

ling discrimination against minorities or addressing

information leakage issues. These types of unfairness

and discrimination run contrary to existing regula-

tions and anti-discriminatory laws. Additionally, un-

derstating the behavior of certain algorithms against

bias and fairness is absolutely important. To do so, it

is essential to use appropriate metrics that fit the do-

main and models to have more reliable results.

3 METHODOLOGY

In the present section, the methodology used in this

research is explained along with information regard-

ing benchmark datasets and used metrics.

The primary objective of this research is to as-

sess and quantify the degree of unfairness experienced

by specific protected groups, namely gender and age,

with a high degree of accuracy. In order to achieve

this goal, the study focuses on the quantification of

fairness disparities. These disparities serve as metrics

to evaluate the quality and fairness of recommended

items for these particular groups. In essence, the re-

search aims to provide a robust and comprehensive

assessment of the biases and inequities present in RS

concerning gender and age attributes.

In addition, this study employs the NDCG (Nor-

malized Discounted Cumulative Gain) evaluation

metric as a specific measurement for assessing the

recommendation quality within each of the protected

groups. NDCG is a widely recognized metric used in

RS to evaluate lists of recommended items.

The NDCG metric offers a more in-depth evalu-

ation of recommendation quality by considering the

position and relevance of items within recommenda-

tion lists. It takes into account both the order and im-

portance of recommended items, making it particu-

larly suitable for measuring the quality of recommen-

dations in this context (Chia et al., 2022).

By utilizing NDCG as a specific evaluation metric

for protected groups, this research aims to provide a

comprehensive assessment of recommendation qual-

ity while ensuring fairness and equity for all users, re-

gardless of their gender or age (age was discretized

into two intervals, lower and higher 30 years old).

This approach allows for a more nuanced understand-

ing of the performance of recommender systems and

their impact on different demographic groups.

3.1 Benchmark Datasets

In this experiment, three real-world datasets are used

to reach more accurate generalization. These datasets

are well-known in the RS field and include certain

characteristics that match bias and fairness assess-

ment. Their selection was influenced by the inclusion

of the specific sensitive attributes and biases being

investigated. Therefore, the chosen datasets include

users’ gender and age as sensitive attributes, along

with an uneven distribution of instances across var-

ious attribute values. The three real-world datasets

used in this study are MovieLens 100K, LastFM

100K, and Book Recommendation. Detailed descrip-

tions and Exploratory Data Analysis (EDA) for them

are provided below.

• MovieLens 100K. MovieLens (gro, 2021) is a

well-established resource frequently used in re-

search within the field of RS. MovieLens is a non-

WEBIST 2023 - 19th International Conference on Web Information Systems and Technologies

180

commercial online movie recommendation plat-

form, and its dataset has been incrementally col-

lected through random sampling from the website.

This dataset comprises user ratings for movies,

quantified on a star scale within the range of 1

to 5. Additionally, this dataset encompasses user

information, including ”Gender” and ”Age” at-

tributes, which have been identified as sensitive

features according to capAI guidelines (Floridi

et al., 2022).

• LastFM 100K. The LastFM dataset (Celma,

2010) is a widely recognized resource in the field

of RS, particularly for music recommendations.

This dataset encompasses user and artist infor-

mation drawn from various regions around the

world. Rather than utilizing a conventional rat-

ing system, this dataset quantifies user interac-

tions based on the number of times each user has

listened to individual artists, denoted as ”weight.”

For the purposes of this research, we have uti-

lized a pre-processed subset of the LastFM 360K

dataset, which is well-suited for RS implemen-

tation. Within this subset, we have specifically

chosen 100,000 interactions to form the basis of

our study. In accordance with capAI guidelines

(Floridi et al., 2022), gender and age are identified

as sensitive attributes within this dataset. Notably,

the dataset represents the frequency with which

users have listened to specific music, which has

been normalized to a scale ranging from 1 to 5 to

enhance precision in the analysis.

• Book Recommendation 100K. The dataset used

in a study by (Mobius, 2020) encompasses user

ratings for a diverse array of books. For the pur-

pose of our experiment, we have selected a rep-

resentative 100,000-sample subset of this dataset.

It’s worth noting that this sample faithfully mir-

rors the distribution characteristics of the original

dataset.

3.2 Recommendation Approaches

In this experiment, various types of models are uti-

lized to achieve a better range of results, hence,

providing superior comparison. Three distinct rec-

ommendation approaches are used in this research

including Collaborative Filtering (CF), Matrix Fac-

torization (MF), and GNN-based approaches. The

goal was to choose the most representative algo-

rithms within each category for comprehensive anal-

ysis. This diverse selection of methods allows us to

expedite the evaluation of bias and fairness. In the

upcoming section, we will provide an overview of the

methodologies corresponding to each approach uti-

lized in this study.

3.3 Evaluation Metrics

In this section, the description and the categorization

of used metrics are shown. In order to have a com-

prehensive understanding of model performance and

bias and fairness aspects, two different types of met-

rics are used, for the assessment of reliability, as well

as for bias and unfairness. As mentioned above, we

have focused on the evaluation of item recommenda-

tion lists by means of rank metrics. In this context,

various values of K have been employed to determine

the top-K ranked items within the list, with K repre-

senting the list’s size.

3.3.1 Model Evaluation Metrics

The results presented complement the studies previ-

ously carried out(Chizari et al., 2023; Chizari et al.,

2022) where various types of well-known perfor-

mance metrics were used. These are Mean Recip-

rocal Rank (MRR), Normalized Discounted Cumula-

tive Gain (NDCG), Precision, Recall and item Hit Ra-

tio (HR).This work has focused on the evaluation of

NDCG for protected and unprotected groups for both

the age and gender attributes.

3.3.2 Bias and Fairness Metrics

In addition to the above assessment, we will delve into

several bias and fairness evaluation metrics, with a

particular emphasis on user-centric fairness measures.

We have previously studied and provided a detailed

exposition of the following bias and unfairness met-

rics (Chizari et al., 2023; Chizari et al., 2022):

• Average Popularity (Naghiaei et al., 2022)

• Gini Index(Sun et al., 2019; Lazovich et al., 2022)

• Item Coverage (Wang and Wang, 2022)

• Differential Fairness (DF) for sensitive attribute

gender (Islam et al., 2021; Foulds et al., 2019)

• Value Unfairness (Aalam et al., 2022; Yao and

Huang, 2017; Farnadi et al., 2018)

• Absolute Unfairness (Yao and Huang, 2017; Far-

nadi et al., 2018)

In this experimental analysis, with the aim of gaining

a thorough insight into how models behave in terms of

bias and fairness, a new metric of relative difference

between groups wasproposed. This metric is also im-

plemented on list of top-K ranked items.

Quantifying Fairness Disparities in Graph-Based Neural Network Recommender Systems for Protected Groups

181

3.3.3 Proposed Metric

This research includes another metric proposed in or-

der to measure the accuracy of recommendation for

each protected and unprotected group (gender and

age). To achieve this, initially, NDCG@k, which is

designed to measure the effectiveness of a recommen-

dation system by assessing the relevance and rank-

ing of recommended items, is computed separately

for mentioned groups. Subsequently, the relative dif-

ference between the NDCG@5k values for these two

groups is calculated to assess their proximity or dis-

parity. This is achieved by subtracting the NDCG@k

for group 2 (e.g., males) from that of group 1 (e.g.,

females), then dividing the result by the average of

the two values, and finally multiplying the outcome

by 100 as it can be seen in below:

|NDCG@k group1 − NDCG@k group2|

((NDCG@k group1 + NDCG@k group2)/2)

∗ 100

(1)

This particular metric serves as a dedicated and in-

sightful tool for evaluating fairness among protected

groups in the context of RS. It offers a unique perspec-

tive on fairness by focusing on how recommendations

perform within these specific groups. Furthermore, it

complements other fairness metrics used in the eval-

uation process, providing a more comprehensive and

robust understanding of fairness outcomes. By com-

paring the results obtained from this metric with those

derived from other fairness metrics, the research gains

additional validation and a deeper insight into the fair-

ness dynamics within the recommendation system.

This approach enhances the credibility and complete-

ness of the fairness assessment, ultimately contribut-

ing to a more thorough and meaningful analysis.

4 EXPERIMENTAL SETUP

4.1 Hardware Used

The research was carried out on a high-performance

system featuring a Ryzen 7 5800H CPU, which offers

8 cores and 16 threads, operating at base and turbo

frequencies of 3.2 GHz and 4.4 GHz, respectively.

This AMD processor, based on the Zen 3 architecture,

provided the computational power needed for our

tasks. Additionally, the system was equipped with an

RTX 3060 Mobile GPU, known for its 6GB VRAM,

3840 CUDA cores, and 120 Tensor Cores. This GPU,

part of NVIDIA’s Ampere architecture, proved essen-

tial for tasks such as machine learning model train-

ing. The system boasted a total of 16GB DDR4 RAM,

with approximately 15GB available for research pur-

poses, ensuring efficient execution of complex com-

putations and data handling.

4.2 Software and Libraries Used

Python, with CPython as the core interpreter, served

as the primary programming language. The research

was based on Recbole, an open-source library, and a

modified fork named Recbole-FairRec. Custom met-

rics and models were integrated into this library, re-

sulting in Recbole-Optimized. Key Python libraries

included TensorFlow and PyTorch for machine learn-

ing and deep learning, NumPy for numerical comput-

ing, Pandas for data manipulation, and Scikit-learn for

various machine learning tasks. Additional industry-

standard libraries were used as needed for specific re-

search requirements.

5 RESULTS

In the following sections, we delve into the results

of our investigation into recommendation system fair-

ness, with a specific focus on evaluating biases and

unfairness concerning protected groups, including

gender and age. Our study uses various evaluation

metrics, including model evaluation metrics, bias and

fairness metrics, and NDCG for quality of recom-

mendation. Through experimentation, the aim is to

shed light on the effectiveness of fairness-aware rec-

ommendation models and their impact on recommen-

dation quality for different demographic segments.

5.1 Model Evaluation Results

In this section, a comprehensive and insightful com-

parative analysis of our evaluation results.

First, the different groups of sensitive attributes

studied were evaluated separately using the NDCG

metric for recommendation lists. The following fig-

ures 1 and 2 show the results obtained for the three

datasets described in section 3.1 and for the eight rec-

ommendation methods tested.

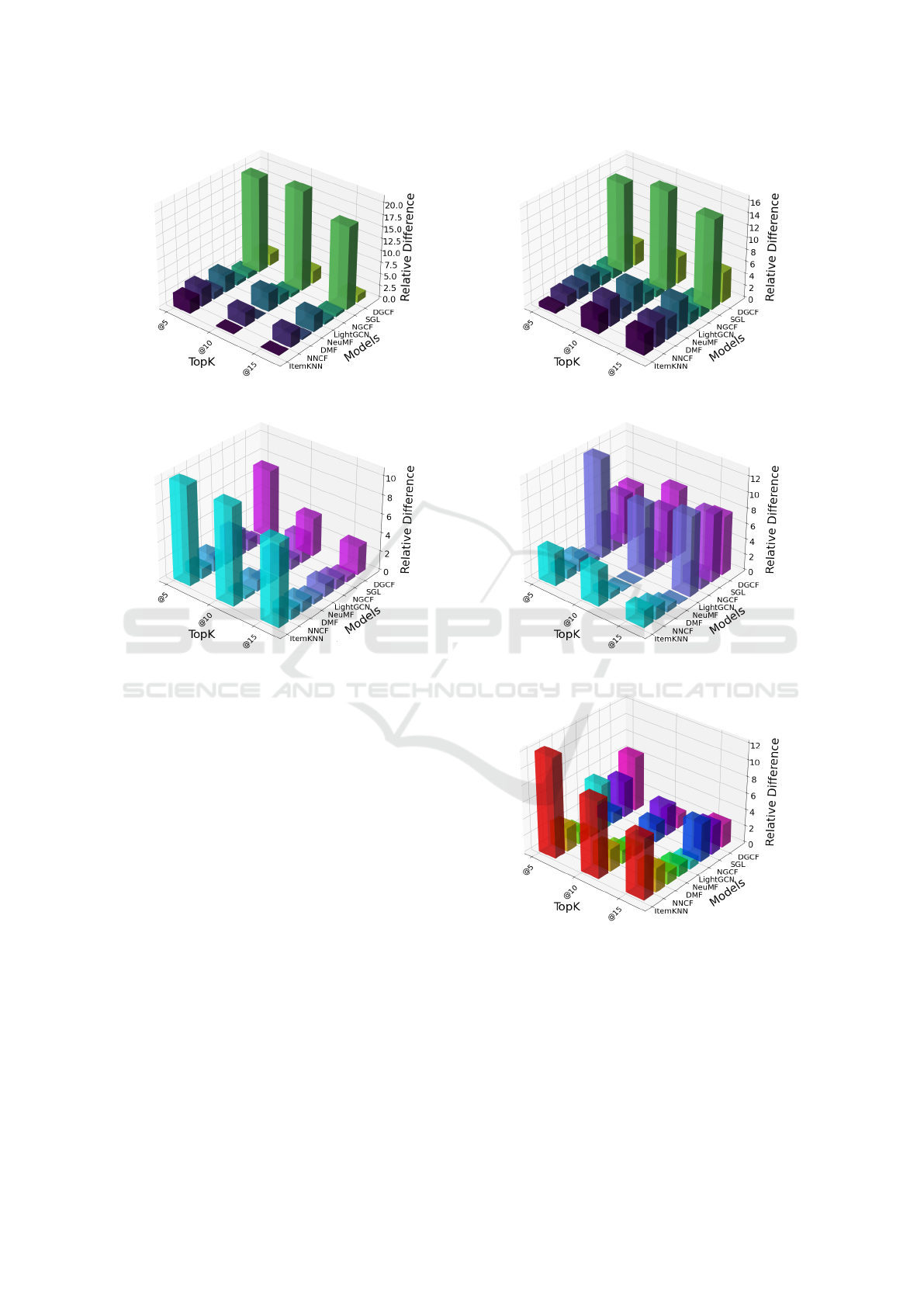

5.2 Bias and Fairness Results

The results of the proposed metric that evaluates

the relative difference on the performance between

groups is provided secondly. Figures 3 and 4 show

these results for three datasets on the previously men-

tioned models.

In Figure 1 NDCG performance for sensitive at-

tribute gender is provided on two datasets. Higher

WEBIST 2023 - 19th International Conference on Web Information Systems and Technologies

182

(a) MovieLens.

(b) LastFM.

Figure 1: Results of NDCG performance for sensitive at-

tribute gender on MovieLens and LastFM.

NDCG indicates better performance of the model. As

it can be seen on MovieLens SGL performed poorly

with respect to this metric. The difference between

the quality of predictions for each group is also very

clear. This can count as gender discrimination toward

a protected group. Among GNN models NGCF pro-

vides high accuracy with small differences between

groups. On the other hand, on the LastFM dataset,

all GNN models performed with lower performance

compared with traditional models. ItemKNN and

DGCG, moreover, show high differences among all

the used methods.

Figure 2 indicates the NDCG performance for

sensitive attribute age for all datasets. Again on

LastFM and Book Recommendation GNN models

provide lower accuracy in comparison to conventional

models. The quality differences between GNN mod-

els are higher in the LastFM dataset which indicates a

higher rate of unfairness based on this dataset. SGL,

also performs poorly on MovieLens.

The following figures are provided to show the rel-

(a) MovieLens.

(b) LastFM.

(c) Book Recommendation.

Figure 2: Results of NDCG performance for sensitive at-

tribute age on MovieLens, LastFM, and Book Recommen-

dation.

ative difference of the NDCG metric with respect to

sensitive attributes.

Figure 3 shows the unfairness relative differ-

ence of sensitive attribute gender for MovieLens and

LastFM. It can be seen that on the MovieLens dataset,

Quantifying Fairness Disparities in Graph-Based Neural Network Recommender Systems for Protected Groups

183

(a) MovieLens.

(b) LastFM.

Figure 3: Results of Unfairness Relative Difference of sen-

sitive attribute gender for MovieLens and LastFM.

SGL has a significant relative difference which in-

dicates high unfairness compared with other used

models. Other used models performed moderately

with respect to relative difference on MovieLens.

On the other hand, on the LastFM dataset, DGCF

shows higher unfairness among GNN methods and

ItemKNN takes the first place regarding relative dif-

ference within all methods.

Figure 4 shows the unfairness relative difference

of sensitive attribute gender for all datasets. SGL

again, provides significant unfairness on MovieLens,

although DGCF also shows a high unfairness com-

pared with the rest. On the LastFM dataset, almost

all GNN models show high unfairness except NGCF.

LightGCN can be chosen as the most unfair one. For

the Book Recommendation dataset, it can be wit-

nessed that GNN models performed moderately and

the most unfair method is ItemKNN.

(a) MovieLens.

(b) LastFM.

(c) Book Recommendation.

Figure 4: Results of Unfairness Relative Difference of sen-

sitive attribute age for MovieLens, LastFM, and Book Rec-

ommendation.

WEBIST 2023 - 19th International Conference on Web Information Systems and Technologies

184

6 CONCLUSIONS AND FUTURE

WORK

Fairness in RS holds great significance from both the

user and service provider perspectives. Users rely

on RS to receive personalized recommendations that

align with their preferences and interests, while ser-

vice providers aim to enhance user satisfaction and

engagement. To assess and evaluate fairness in RS,

a range of metrics have been developed in the state-

of-the-art research. These metrics encompass various

aspects, including individual and group fairness, pro-

viding valuable insights into recommendation quality

for different user segments.

In this study, we provide a metric specifically de-

signed to measure fairness disparities within RS rec-

ommendations, offering a fresh perspective on bias

analysis. Unlike existing metrics, our new approach

quantifies the differences in recommendation quality

for protected groups, including gender and age. This

metric allows us to evaluate how well the recommen-

dations cater to the unique preferences and needs of

these groups, shedding light on any potential biases

or disparities in the system.

The introduction of this metric provides several

benefits. Firstly, it enhances our understanding of

fairness in RS by focusing on the quality of recom-

mendations received by specific user groups, enabling

a more granular assessment of bias. Secondly, it em-

powers service providers to tailor their recommenda-

tion algorithms to ensure fairness and inclusivity for

all users. By having this information, RS platforms

can make data-driven decisions to improve recom-

mendation accuracy and user satisfaction, ultimately

leading to a more equitable and effective RS ecosys-

tem.

In our analysis of the three datasets (MovieLens,

LastFM, and BookRec), we observed varying degrees

of fairness and bias among different recommendation

models across sensitive attributes, such as gender and

age.

In the MovieLens dataset, models like DMF,

LightGCN, NGCF, and DGCF demonstrated rela-

tively fair recommendations for both male and fe-

male users, promoting fairness regardless of gender.

They continued to exhibit fairness when considering

the age-sensitive attribute, ensuring equitable recom-

mendations for users across different age groups. in

contrast, SGL did not provide fair recommendations

in this dataset

Turning our attention to the LastFM dataset,

NNCF, DMF, and NeuMF models displayed com-

mendable fairness across protected groups, regardless

of both sensitive attributes. These models maintained

minimal differences in NDCG accuracy between male

and female users, indicating fairness in recommenda-

tions for both groups. The LightGCN model exhibited

unique behavior, showing a higher relative NDCG

difference in the age-sensitive attribute but a lower

difference in the gender-sensitive attribute

In the BookRec dataset, the relative difference in

NDCG accuracy was generally low across various

models. However, models exhibited some inconsis-

tencies in their results, emphasizing the need for com-

prehensive fairness assessments.

For future work, the aim is to enhance the scal-

ability of the used metrics to be capable of working

on various features in different fields. These meth-

ods, moreover, can be applied to different sub-groups

which can provide us with more detailed informa-

tion regarding unfairness. Another type of accuracy

method can also be used in order to measure the ac-

curacy of recommended items in certain advantaged

or disadvantaged groups.

REFERENCES

(2021). Movielens.

Aalam, S. W., Ahanger, A. B., Bhat, M. R., and Assad,

A. (2022). Evaluation of fairness in recommender

systems: A review. In International Conference

on Emerging Technologies in Computer Engineering,

pages 456–465. Springer.

Abdollahpouri, H., Burke, R., and Mobasher, B. (2019).

Managing popularity bias in recommender systems

with personalized re-ranking. In The thirty-second in-

ternational flairs conference.

Ahanger, A. B., Aalam, S. W., Bhat, M. R., and Assad, A.

(2022). Popularity bias in recommender systems-a re-

view. In International Conference on Emerging Tech-

nologies in Computer Engineering, pages 431–444.

Springer.

Alelyani, S. (2021). Detection and evaluation of machine

learning bias. Applied Sciences, 11(14):6271.

Baeza-Yates, R. (2016). Data and algorithmic bias in the

web. In Proceedings of the 8th ACM Conference on

Web Science, pages 1–1.

Barocas, S., Hardt, M., and Narayanan, A. (2017). Fairness

in machine learning. Nips tutorial, 1:2017.

Bernhardt, M., Jones, C., and Glocker, B. (2022). Potential

sources of dataset bias complicate investigation of un-

derdiagnosis by machine learning algorithms. Nature

Medicine, 28(6):1157–1158.

Boratto, L., Fenu, G., and Marras, M. (2021). Connecting

user and item perspectives in popularity debiasing for

collaborative recommendation. Information Process-

ing & Management, 58(1):102387.

Boratto, L., Fenu, G., Marras, M., and Medda, G. (2022).

Consumer fairness in recommender systems: Contex-

tualizing definitions and mitigations. In European

Quantifying Fairness Disparities in Graph-Based Neural Network Recommender Systems for Protected Groups

185

Conference on Information Retrieval, pages 552–566.

Springer.

Boratto, L. and Marras, M. (2021). Advances in bias-aware

recommendation on the web. In Proceedings of the

14th ACM International Conference on Web Search

and Data Mining, pages 1147–1149.

Bronstein, M. M., Bruna, J., LeCun, Y., Szlam, A., and Van-

dergheynst, P. (2017). Geometric deep learning: going

beyond euclidean data. IEEE Signal Processing Mag-

azine, 34(4):18–42.

Bruce, P., Bruce, A., and Gedeck, P. (2020). Practical

statistics for Data Scientists, 2nd edition. O’Reilly

Media, Inc.

Caton, S. and Haas, C. (2020). Fairness in machine learn-

ing: A survey. arXiv preprint arXiv:2010.04053.

Celma, O. (2010). Music Recommendation and Discovery

in the Long Tail. Springer.

Chen, J., Dong, H., Wang, X., Feng, F., Wang, M., and

He, X. (2020). Bias and debias in recommender sys-

tem: A survey and future directions. arXiv preprint

arXiv:2010.03240.

Chen, Z., Xiao, T., and Kuang, K. (2022). Ba-gnn: On

learning bias-aware graph neural network. In 2022

IEEE 38th International Conference on Data Engi-

neering (ICDE), pages 3012–3024. IEEE.

Chia, P. J., Tagliabue, J., Bianchi, F., He, C., and Ko, B.

(2022). Beyond ndcg: behavioral testing of recom-

mender systems with reclist. In Companion Proceed-

ings of the Web Conference 2022, pages 99–104.

Chizari, N., Shoeibi, N., and Moreno-Garc

´

ıa, M. N. (2022).

A comparative analysis of bias amplification in graph

neural network approaches for recommender systems.

Electronics, 11(20):3301.

Chizari, N., Tajfar, K., and Moreno-Garc

´

ıa, M. N. (2023).

Bias assessment approaches for addressing user-

centered fairness in gnn-based recommender systems.

Information, 14(2):131.

Dai, E. and Wang, S. (2021). Say no to the discrimina-

tion: Learning fair graph neural networks with limited

sensitive attribute information. In Proceedings of the

14th ACM International Conference on Web Search

and Data Mining, pages 680–688.

Di Noia, T., Tintarev, N., Fatourou, P., and Schedl, M.

(2022). Recommender systems under european ai reg-

ulations. Communications of the ACM, 65(4):69–73.

Dong, Y., Liu, N., Jalaian, B., and Li, J. (2022a). Edits:

Modeling and mitigating data bias for graph neural

networks. In Proceedings of the ACM Web Confer-

ence 2022, pages 1259–1269.

Dong, Y., Wang, S., Wang, Y., Derr, T., and Li, J. (2022b).

On structural explanation of bias in graph neural net-

works. In Proceedings of the 28th ACM SIGKDD

Conference on Knowledge Discovery and Data Min-

ing, pages 316–326.

Fabbri, F., Croci, M. L., Bonchi, F., and Castillo, C. (2022).

Exposure inequality in people recommender systems:

The long-term effects. In Proceedings of the Inter-

national AAAI Conference on Web and Social Media,

volume 16, pages 194–204.

Fahse, T., Huber, V., and Giffen, B. v. (2021). Managing

bias in machine learning projects. In International

Conference on Wirtschaftsinformatik, pages 94–109.

Springer.

Farnadi, G., Kouki, P., Thompson, S. K., Srinivasan, S., and

Getoor, L. (2018). A fairness-aware hybrid recom-

mender system. arXiv preprint arXiv:1809.09030.

Feldman, M., Friedler, S. A., Moeller, J., Scheidegger, C.,

and Venkatasubramanian, S. (2015). Certifying and

removing disparate impact. In proceedings of the 21th

ACM SIGKDD international conference on knowl-

edge discovery and data mining, pages 259–268.

Floridi, L., Holweg, M., Taddeo, M., Amaya Silva, J.,

M

¨

okander, J., and Wen, Y. (2022). capai-a procedure

for conducting conformity assessment of ai systems in

line with the eu artificial intelligence act. Available at

SSRN 4064091.

Foulds, J. R., Islam, R., Keya, K. N., and Pan, S. (2019).

Differential fairness. UMBC Faculty Collection.

Gao, C., Lei, W., Chen, J., Wang, S., He, X., Li, S., Li, B.,

Zhang, Y., and Jiang, P. (2022a). Cirs: Bursting fil-

ter bubbles by counterfactual interactive recommender

system. arXiv preprint arXiv:2204.01266.

Gao, C., Wang, X., He, X., and Li, Y. (2022b). Graph neural

networks for recommender system. In Proceedings of

the Fifteenth ACM International Conference on Web

Search and Data Mining, pages 1623–1625.

Hardt, M., Price, E., and Srebro, N. (2016). Equality of op-

portunity in supervised learning. Advances in neural

information processing systems, 29.

Islam, R., Keya, K. N., Zeng, Z., Pan, S., and Foulds, J.

(2021). Debiasing career recommendations with neu-

ral fair collaborative filtering. In Proceedings of the

Web Conference 2021, pages 3779–3790.

Khan, Z. Y., Niu, Z., Sandiwarno, S., and Prince, R. (2021).

Deep learning techniques for rating prediction: a sur-

vey of the state-of-the-art. Artificial Intelligence Re-

view, 54(1):95–135.

Kordzadeh, N. and Ghasemaghaei, M. (2022). Algorithmic

bias: review, synthesis, and future research directions.

European Journal of Information Systems, 31(3):388–

409.

Lazovich, T., Belli, L., Gonzales, A., Bower, A., Tantipong-

pipat, U., Lum, K., Huszar, F., and Chowdhury, R.

(2022). Measuring disparate outcomes of content rec-

ommendation algorithms with distributional inequal-

ity metrics. arXiv preprint arXiv:2202.01615.

Li, P., Wang, Y., Zhao, H., Hong, P., and Liu, H. (2021).

On dyadic fairness: Exploring and mitigating bias in

graph connections. In International Conference on

Learning Representations.

Li, X. (2023). Graph Learning in Recommender Systems:

Toward Structures and Causality. PhD thesis, Univer-

sity of Illinois at Chicago.

Lin, S., Wang, J., Zhu, Z., and Caverlee, J. (2022).

Quantifying and mitigating popularity bias in con-

versational recommender systems. arXiv preprint

arXiv:2208.03298.

Loveland, D., Pan, J., Bhathena, A. F., and Lu, Y. (2022).

Fairedit: Preserving fairness in graph neural net-

WEBIST 2023 - 19th International Conference on Web Information Systems and Technologies

186

works through greedy graph editing. arXiv preprint

arXiv:2201.03681.

Mansoury, M., Abdollahpouri, H., Pechenizkiy, M.,

Mobasher, B., and Burke, R. (2021). A graph-based

approach for mitigating multi-sided exposure bias in

recommender systems. ACM Transactions on Infor-

mation Systems (TOIS), 40(2):1–31.

Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K., and

Galstyan, A. (2021). A survey on bias and fairness in

machine learning. ACM Computing Surveys (CSUR),

54(6):1–35.

Misztal-Radecka, J. and Indurkhya, B. (2021). Bias-aware

hierarchical clustering for detecting the discriminated

groups of users in recommendation systems. Informa-

tion Processing & Management, 58(3):102519.

Mobius, A. (2020). Book recommendation dataset.

Mu, R. (2018). A survey of recommender systems based on

deep learning. Ieee Access, 6:69009–69022.

Naghiaei, M., Rahmani, H. A., and Dehghan, M. (2022).

The unfairness of popularity bias in book recommen-

dation. arXiv preprint arXiv:2202.13446.

Oneto, L. and Chiappa, S. (2020). Fairness in machine

learning. In Recent trends in learning from data: Tu-

torials from the inns big data and deep learning con-

ference (innsbddl2019), pages 155–196. Springer.

P

´

erez-Marcos, J., Mart

´

ın-G

´

omez, L., Jim

´

enez-Bravo,

D. M., L

´

opez, V. F., and Moreno-Garc

´

ıa, M. N.

(2020). Hybrid system for video game recommen-

dation based on implicit ratings and social networks.

Journal of Ambient Intelligence and Humanized Com-

puting, 11(11):4525–4535.

Rahman, T., Surma, B., Backes, M., and Zhang, Y. (2019).

Fairwalk: Towards fair graph embedding.

Rajeswari, J. and Hariharan, S. (2016). Personalized search

recommender system: State of art, experimental re-

sults and investigations. International Journal of Ed-

ucation and Management Engineering, 6(3):1–8.

Ricci, F., Rokach, L., and Shapira, B. (2022). Recom-

mender systems: Techniques, applications, and chal-

lenges. Recommender Systems Handbook, pages 1–

35.

Steck, H., Baltrunas, L., Elahi, E., Liang, D., Raimond,

Y., and Basilico, J. (2021). Deep learning for recom-

mender systems: A netflix case study. AI Magazine,

42(3):7–18.

Sun, W., Khenissi, S., Nasraoui, O., and Shafto, P. (2019).

Debiasing the human-recommender system feedback

loop in collaborative filtering. In Companion Proceed-

ings of The 2019 World Wide Web Conference, pages

645–651.

Verma, S. and Rubin, J. (2018). Fairness definitions ex-

plained. In 2018 ieee/acm international workshop on

software fairness (fairware), pages 1–7. IEEE.

Wang, S., Hu, L., Wang, Y., He, X., Sheng, Q. Z., Orgun,

M. A., Cao, L., Ricci, F., and Yu, P. S. (2021).

Graph learning based recommender systems: A re-

view. arXiv preprint arXiv:2105.06339.

Wang, X. and Wang, W. H. (2022). Providing item-side in-

dividual fairness for deep recommender systems. In

2022 ACM Conference on Fairness, Accountability,

and Transparency, pages 117–127.

Wang, Y., Ma, W., Zhang, M., Liu, Y., and Ma, S. (2023). A

survey on the fairness of recommender systems. ACM

Transactions on Information Systems, 41(3):1–43.

Wu, L., Chen, L., Shao, P., Hong, R., Wang, X., and Wang,

M. (2021). Learning fair representations for recom-

mendation: A graph-based perspective. In Proceed-

ings of the Web Conference 2021, pages 2198–2208.

Wu, S., Sun, F., Zhang, W., Xie, X., and Cui, B. (2020a).

Graph neural networks in recommender systems: a

survey. ACM Computing Surveys (CSUR).

Wu, Z., Pan, S., Chen, F., Long, G., Zhang, C., and Philip,

S. Y. (2020b). A comprehensive survey on graph neu-

ral networks. IEEE transactions on neural networks

and learning systems, 32(1):4–24.

Xu, B., Shen, H., Sun, B., An, R., Cao, Q., and Cheng,

X. (2021). Towards consumer loan fraud detection:

Graph neural networks with role-constrained condi-

tional random field. In Proceedings of the AAAI Con-

ference on Artificial Intelligence, volume 35, pages

4537–4545.

Yao, S. and Huang, B. (2017). Beyond parity: Fairness ob-

jectives for collaborative filtering. Advances in neural

information processing systems, 30.

Yu, J., Yin, H., Xia, X., Chen, T., Li, J., and Huang, Z.

(2023). Self-supervised learning for recommender

systems: A survey. IEEE Transactions on Knowledge

and Data Engineering.

Zeng, Z., Islam, R., Keya, K. N., Foulds, J., Song, Y., and

Pan, S. (2021). Fair representation learning for hetero-

geneous information networks. In Proceedings of the

International AAAI Conference on Weblogs and So-

cial Media, volume 15.

Zhang, Q., Wipf, D., Gan, Q., and Song, L. (2021). A bi-

ased graph neural network sampler with near-optimal

regret. Advances in Neural Information Processing

Systems, 34:8833–8844.

Zheng, Y. and Wang, D. X. (2022). A survey of rec-

ommender systems with multi-objective optimization.

Neurocomputing, 474:141–153.

Zhou, J., Cui, G., Hu, S., Zhang, Z., Yang, C., Liu, Z.,

Wang, L., Li, C., and Sun, M. (2020). Graph neu-

ral networks: A review of methods and applications.

AI Open, 1:57–81.

Quantifying Fairness Disparities in Graph-Based Neural Network Recommender Systems for Protected Groups

187