From Point Cloud Perception Toward People Detection

Assia Belbachir (1, 2, a), Antonio M. Ortiz (1, b), Atle Aalerud (1, c) and Ahmed Nabil Belbachir (1, d)

1 NORCE Norwegian Research Centre, Norway

2 Sorbonne Université, LIP6-UMR 7606 CNRS, France

Keywords: Point Cloud, LiDAR, People Detection.

Abstract:

Point clouds have become important data inputs for 3D representation, enabling accurate analysis of 3D scenes and objects. People detection from point clouds is a challenging task because of data sparsity, irregularity, occlusion, and real-time detection constraints. Existing methods based on handcrafted features or deep learning have limitations in handling occlusions and pose variations, and in detecting people quickly. This paper introduces a Random Forest classifier for people detection in point clouds that aims to be both accurate and fast. The point cloud data are acquired with a multi-point LiDAR system. Initial experiments on our collected dataset demonstrate the effectiveness of the approach and its efficient detection compared with a Multi-Layer Perceptron (MLP).

1 INTRODUCTION

Point cloud perception has emerged as a crucial research area in computer vision, enabling accurate analysis and understanding of 3D scenes and objects. With the increasing availability of depth sensors such as LiDAR and RGB-D cameras, point clouds have become a popular representation for capturing the geometric structure of the environment. A key application of point cloud perception is people detection, which plays an important role in domains including autonomous driving, surveillance, and human-robot interaction, among others.

People detection from point clouds is a challenging task. The inherent sparsity and irregularity of point clouds, together with occlusions, make accurate detection of humans a complex problem. Existing approaches often rely on handcrafted features or on deep learning-based methods that focus primarily on local geometric patterns or on global contextual information. However, these approaches have limitations in dealing with occlusion, pose variations, and cluttered scenes, which leads to suboptimal performance.

a https://orcid.org/0000-0002-1294-8478

b https://orcid.org/0000-0002-7145-8241

c https://orcid.org/0000-0001-6462-235X

d https://orcid.org/0000-0001-9233-3723

Two main families of methods are used for people detection: (1) handcrafted feature-based methods and (2) deep learning-based methods. Handcrafted feature-based methods extract geometric features, such as local surface normals, curvatures, or multi-scale shape descriptors, to represent the local characteristics of point neighborhoods. These methods can then use clustering or classification algorithms to distinguish humans from other objects or from the background. While these approaches have shown promising results in some scenarios, they struggle with complex occlusion patterns, pose variations, and real-time constraints.
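As an illustration of the handcrafted features mentioned above, surface normals and a curvature measure are commonly estimated by principal component analysis (PCA) of each point's k nearest neighbours: the eigenvector of the smallest eigenvalue of the local covariance approximates the normal, and the ratio of that eigenvalue to the sum of all three (often called surface variation) serves as a curvature score. The following is a minimal sketch of that standard technique with NumPy and SciPy, not the feature set used in this paper; the function name and the default `k = 10` are assumptions.

```python
import numpy as np
from scipy.spatial import cKDTree


def normals_and_curvature(points: np.ndarray, k: int = 10):
    """Estimate a unit surface normal and a curvature score for every point.

    points: (N, 3) array of x, y, z coordinates.
    k: number of nearest neighbours used for the local PCA (hypothetical default).
    """
    tree = cKDTree(points)
    # Indices of the k nearest neighbours of each point (the point itself included).
    _, idx = tree.query(points, k=k)
    normals = np.empty_like(points, dtype=float)
    curvature = np.empty(len(points))
    for i, neigh in enumerate(idx):
        # 3 x 3 covariance of the neighbourhood (rows of nbrs.T are x, y, z).
        cov = np.cov(points[neigh].T)
        # eigh returns the eigenvalues of a symmetric matrix in ascending order.
        eigvals, eigvecs = np.linalg.eigh(cov)
        normals[i] = eigvecs[:, 0]  # direction of least variance
        curvature[i] = eigvals[0] / max(eigvals.sum(), 1e-12)  # surface variation
    return normals, curvature
```

On a planar patch the smallest eigenvalue is zero, so the curvature score is close to 0 and the estimated normal is perpendicular to the plane.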

Recently, deep learning-based methods have gained significant attention, leveraging the ability of deep neural networks to learn features directly from point clouds. These methods often employ architectures such as PointNet (Qi et al., 2017b), PointNet++ (Qi et al., 2017d), or PointCNN (Li et al., 2018a) to capture local and global contextual information. Deep learning-based approaches have shown superior performance in handling complex scenes, occlusion, and pose variations. However, they can incur high computational costs and require large amounts of labeled training data.

The Random Forest classifier (RFC) is a well-established machine learning algorithm with a strong track record of high performance across various domains. While the field of machine learning continually evolves, the Random Forest classifier remains a robust and reliable choice for classification tasks in terms of scalability and speed (Cutler et al., 2007).


Belbachir, A., Ortiz, A., Aalerud, A. and Belbachir, A.

From Point Cloud Perception Toward People Detection.

DOI: 10.5220/0012258800003543

In Proceedings of the 20th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2023) - Volume 1, pages 520-526

ISBN: 978-989-758-670-5; ISSN: 2184-2809

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

Before training the RFC, we remove the ground points from the point cloud. We then compare the Random Forest Classifier (RFC) with a Multi-Layer Perceptron (MLP) on our collected datasets using several evaluation metrics.

The objective of this paper is twofold: first, to apply Random Forest classification to people detection from point cloud perception; and second, going one step further, to show that the trained model enables real-time detection of people. The point clouds used as input for the Random Forest classification are acquired with a multi-point LiDAR (Aalerud et al., 2020) that uses multiple mirrors to increase the number of detected points, thus increasing the resolution and data quality.

The remainder of this paper is organised as follows: Section 2 reviews the related state of the art and presents the main drawbacks of previous work; Section 3 details the fundamentals of the proposed approach and the main novelties of this work; Section 4 presents the experiments and results, highlighting the obtained performance; and finally, Section 5 presents conclusions and future work, paving the way for the application of the proposed approach in diverse domains.

2 STATE OF THE ART

The literature presents multiple point cloud methods that can be classified into seven categories: (i) point cloud segmentation (such as PointNet (Qi et al., 2017c), PointNet++ (Qi et al., 2017e), and PointCNN (Li et al., 2018b)), (ii) point cloud registration (Huang et al., 2021), (iii) point cloud reconstruction (Lin et al., 2018), (iv) point cloud classification (Uy et al., 2019), (v) point cloud denoising (Javaheri et al., 2017), (vi) point cloud generation (Yang et al., 2019a), and (vii) point cloud compression (Cao et al., 2019).

This paper focuses on people detection, which involves point cloud segmentation followed by classification.
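The segmentation step is not detailed further in this paper. One common way to split non-ground points into candidate objects before classification is density-based clustering; the sketch below uses scikit-learn's `DBSCAN` for that purpose. The choice of DBSCAN, the function name, and the parameter values (`eps = 0.3` m, `min_samples = 10`) are assumptions for illustration only.

```python
import numpy as np
from sklearn.cluster import DBSCAN


def segment_objects(points: np.ndarray, eps: float = 0.3, min_samples: int = 10):
    """Group non-ground points into candidate objects with DBSCAN.

    Returns a list of (M_i, 3) arrays, one per cluster; points labelled as
    noise (label -1) are dropped. eps (metres) and min_samples are
    hypothetical values that would need tuning for a given sensor and scene.
    """
    labels = DBSCAN(eps=eps, min_samples=min_samples).fit_predict(points)
    return [points[labels == lab] for lab in sorted(set(labels)) if lab != -1]
```

Each returned cluster can then be described by features and passed to a classifier that decides whether it is a person.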

MV3D, as reviewed in (Dai et al., 2021), is a multi-view 3D object detection network that uses point cloud data for autonomous driving applications. It combines bird's-eye view and front view representations with 3D voxel-based feature learning. MV3D achieves state-of-the-art performance in object detection tasks, including people detection. However, MV3D's computational complexity and reliance on multi-view inputs may limit its real-time applicability in resource-constrained scenarios.

Frustum PointNets (Qi et al., 2017a) introduces a frustum-based approach for 3D object detection, including people detection, using RGB-D data. The method extracts point features within frustum regions and employs PointNet to classify objects and regress their properties. Frustum PointNets demonstrates superior performance on challenging datasets. While effective, the frustum-based approach may suffer from incomplete object detection when objects extend outside the frustum, limiting its performance in scenarios with large-scale occlusion.

The authors of PointRCNN (Shi et al., 2019) propose a two-stage framework for 3D object detection from point cloud data that first generates proposals and then refines them into accurate detections. The method leverages a region proposal network (RPN) and PointNet++ for feature extraction, achieving state-of-the-art results on various object detection benchmarks, including people detection. However, its reliance on region proposals may limit its efficiency in real-time applications. Additionally, it may struggle with densely packed scenes, where the proposals may not cover all objects.

STD (Yang et al., 2019b) is a sparse-to-dense 3D object detection framework that reconstructs dense and complete point clouds from sparse input. The method leverages depth completion and 3D feature learning to improve object detection accuracy. STD achieves competitive results in object detection, including human detection tasks. However, STD's reliance on depth completion may introduce errors in scenes with challenging depth estimation or limited sensor capabilities.

Complex-YOLO (Simon et al., 2018) proposes a real-time 3D object detection framework for point clouds. The method operates on a bird's-eye-view projection of the point cloud and uses anchor-based predictions, with the object orientation regressed as a complex angle, to achieve efficient and accurate object detection. Complex-YOLO demonstrates competitive performance on various datasets. However, it may struggle with highly occluded objects due to limitations of the anchor-based prediction scheme.

Another technique combines Histograms of Oriented Gradients (HOG) features with a linear Support Vector Machine (SVM) classifier for people detection (Dalal et al., 2006). This method captures shape and appearance characteristics of people, leading to accurate detection. However, the HOG-based approach may struggle with complex occlusion patterns and require careful parameter tuning for optimal performance. Additionally, HOG is not well suited to real-time detection, as it may lack the necessary speed despite efforts to reduce feature computation time (Pedersoli et al., 2008).

Other solutions are available such as the “Segment

From Point Cloud Perception Toward People Detection

521

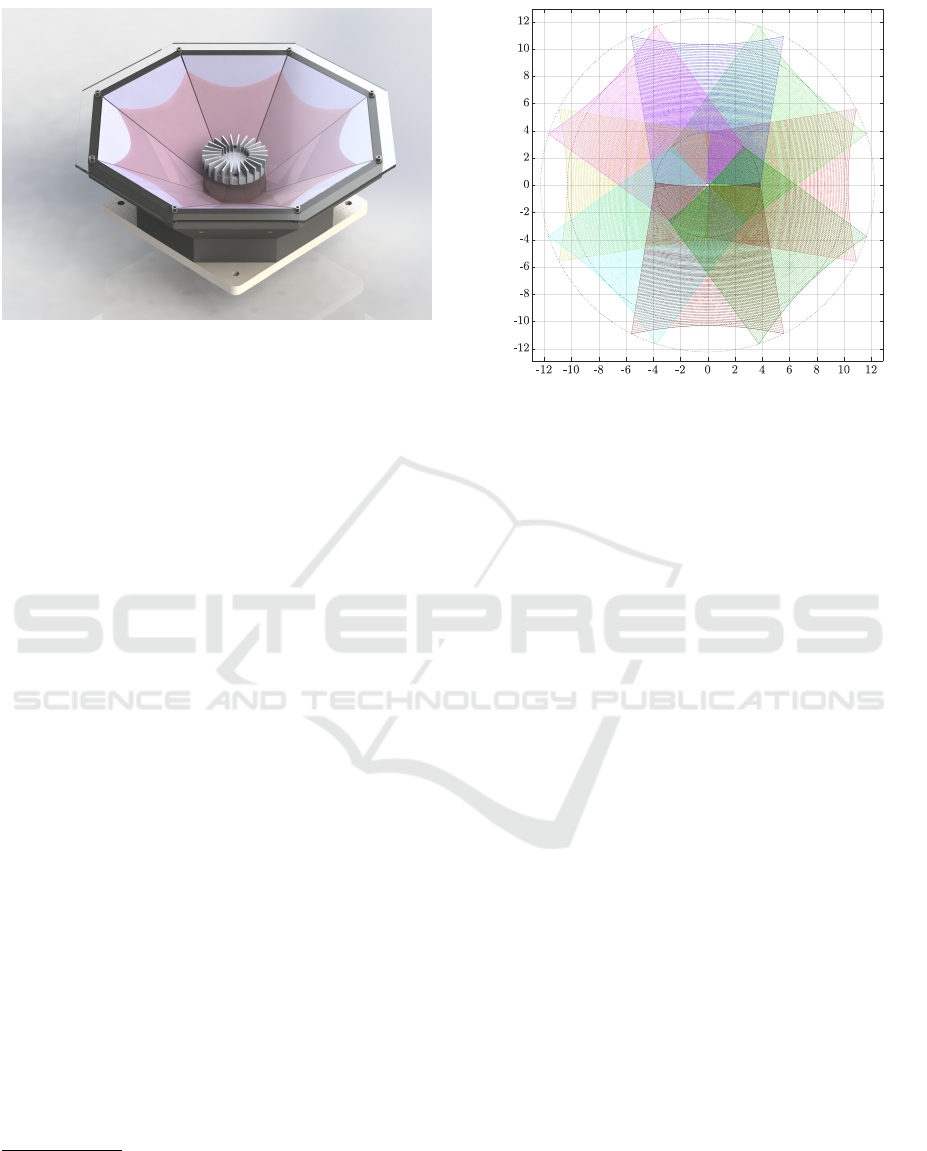

Figure 1: LiDAR used to capture the dataset input

data (Aalerud et al., 2020).

Anything” project by Meta

1

, which focuses on image

segmentation.

The work presented in this paper uses a newly developed LiDAR to accurately locate people with a Random Forest classifier, with the aim of reducing installation and data-processing costs compared with state-of-the-art solutions, and with potential applications in diverse fields such as industrial environments, autonomous driving, human-robot interaction, safety and security, and surveillance.

3 PROPOSED APPROACH

This section provides an overview of the sensor and the dataset used in the present study, including details on the collection process and the data characteristics. Then, the methodology used for people detection is explained, outlining the steps involved in recognising people within the dataset.

3.1 Sensor Description

The dataset relies on a LiDAR system that originates from the research described in (Aalerud et al., 2020). This LiDAR prototype, shown in Figure 1, stands out due to its innovative design, featuring eight individual mirror segments inclined at an angle of 34°. The LiDAR sensor used in this system is the OS1-128, produced by Ouster². The OS1-128 has 128 laser emitters evenly distributed over a vertical span of 45°, and each emitter can generate 4096 individual data points in a single frame.

Figure 2: Illustration of the LiDAR's coverage (in meters) at a distance of 10 meters.

¹ Segment Anything link available at https://segment-anything.com/, consulted in October 2023.

² Additional details can be found at https://ouster.com/blog/introducing-the-os-1-128-lidar-sensor/, accessed in October 2023.

For dataset generation, we chose a configuration of 2048 data points per emitter per frame at a constant frame rate of 10 frames per second (10 fps). This configuration captures detailed and dynamic environmental data, which suits applications where precision and real-time sensing are critical.
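The configuration above translates into the following back-of-the-envelope figures for the bare OS1-128. The sketch assumes that the 128 beams are evenly spaced over the 45° vertical span and that the 2048 points per emitter cover one full 360° rotation; it ignores the eight mirror segments, which reshape the field of view and resolution of the actual prototype (Aalerud et al., 2020).

```python
import math

CHANNELS = 128           # laser emitters of the OS1-128 (from the text)
COLUMNS = 2048           # points per emitter per frame in the chosen configuration
FPS = 10                 # frames per second
VERTICAL_FOV_DEG = 45.0  # vertical span of the emitters (from the text)

points_per_frame = CHANNELS * COLUMNS
points_per_second = points_per_frame * FPS
frame_period_s = 1.0 / FPS                     # time budget per frame
h_res_deg = 360.0 / COLUMNS                    # assumes one full rotation per frame
v_res_deg = VERTICAL_FOV_DEG / (CHANNELS - 1)  # assumes evenly spaced beams


def spacing_at(range_m: float, res_deg: float) -> float:
    """Approximate distance between neighbouring samples at a given range (arc length)."""
    return range_m * math.radians(res_deg)


print(points_per_frame)                        # 262144
print(points_per_second)                       # 2621440
print(round(h_res_deg, 3))                     # 0.176
print(round(v_res_deg, 3))                     # 0.354
print(round(spacing_at(10.0, h_res_deg), 3))   # 0.031 (metres)
print(round(spacing_at(10.0, v_res_deg), 3))   # 0.062 (metres)
```

Under these assumptions, neighbouring samples are a few centimetres apart at 10 m, and every frame must be processed within 100 ms to keep up with the sensor.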

Figure 2 shows the capabilities of the LiDAR system at a distance of 10 meters. It illustrates the system's field of view (FOV), that is, the area (in meters) within which objects and surfaces can be detected when the sensor is 10 meters away from the subject. This FOV visualization also highlights the system's capacity to capture densely packed spatial data for a specific area of interest.

3.2 Dataset

The dataset was collected in a crowded environment (a conference), where several people were walking, sitting, and standing among tables, stairs, walls, etc. An example of the collected point cloud is illustrated in Figure 3. The LiDAR provides positional information relative to the sensor, represented by the coordinates (x, y, z). In our case study, the LiDAR is mounted at a fixed height to collect this positional information.

ICINCO 2023 - 20th International Conference on Informatics in Control, Automation and Robotics


Figure 3: Visualisation of the obtained point cloud using the developed LiDAR.

3.3 Developed Architecture

Figure 4 represents the architecture developed to detect people from the perceived point cloud. First, raw data are collected from the LiDAR. Second, information related to the ground is removed. For ground removal in point cloud data, a region growing algorithm can be applied to identify the ground points and separate them from other objects. The algorithm typically starts from seed points known to be on the ground surface; these points can be identified approximately from the known sensor position. It then examines the neighboring points and checks whether they satisfy certain criteria, such as having similar elevation values within a predefined threshold. If a neighboring point meets the criteria, it is added to the region. This process continues iteratively until no more points can be added to the region. Using region growing, the ground points are segmented and then removed for the case study. Third, the main labeled dataset is used to train our Random Forest Classifier. The overall algorithm is shown in Algorithm 1.
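The region growing procedure described above can be sketched as a breadth-first search over a k-d tree of the points. This is a minimal sketch, not the authors' implementation: the neighbourhood radius (0.3 m), the elevation threshold (0.05 m), the z-up convention, and the way seed indices are supplied are all assumptions; in practice the seeds would be chosen from the approximately known sensor position.

```python
from collections import deque

import numpy as np
from scipy.spatial import cKDTree


def region_growing_ground(points, seed_idx, radius=0.3, max_dz=0.05):
    """Return a boolean mask of points labelled as ground by region growing.

    points:   (N, 3) array with z pointing up (assumed convention).
    seed_idx: indices of points assumed to lie on the ground (hypothetical input).
    radius:   neighbourhood radius in metres (hypothetical value).
    max_dz:   maximum elevation difference to an accepted neighbour (hypothetical).
    """
    tree = cKDTree(points)
    ground = np.zeros(len(points), dtype=bool)
    ground[list(seed_idx)] = True
    queue = deque(seed_idx)
    while queue:
        i = queue.popleft()
        for j in tree.query_ball_point(points[i], r=radius):
            # Grow the region to neighbours whose elevation is close to the current point.
            if not ground[j] and abs(points[j, 2] - points[i, 2]) <= max_dz:
                ground[j] = True
                queue.append(j)
    return ground


def remove_ground(points, seed_idx, **kwargs):
    """Keep only the points that were not reached by the ground region."""
    return points[~region_growing_ground(points, seed_idx, **kwargs)]
```

Comparing each neighbour with the point it was reached from, rather than with a single global height, lets the region follow gently sloping floors.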

Random Forest Classifier. The Random Forest classifier is an ensemble learning algorithm that combines the outputs of multiple decision tree classifiers to perform classification tasks (Belgiu and Drăguţ, 2016). It creates an ensemble, or collection, of decision trees, each trained independently on a randomly sampled subset of the training data; in addition, each split typically considers only a random subset of the features, which makes the trees less correlated. During training, each decision tree in the ensemble learns to classify the data based on different features and thresholds.
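To make the training procedure concrete, the sketch below builds a small forest by hand: each scikit-learn `DecisionTreeClassifier` is fitted on a bootstrap sample (rows drawn with replacement), and `max_features="sqrt"` makes every split consider only a random subset of the features. The function name and the number of trees are illustrative assumptions; scikit-learn's `RandomForestClassifier` performs these steps internally and would normally be used instead.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, n_trees=25, seed=0):
    """Train decision trees on bootstrap samples with random feature subsets."""
    rng = np.random.default_rng(seed)
    trees = []
    n = len(X)
    for _ in range(n_trees):
        boot = rng.integers(0, n, size=n)  # n row indices drawn with replacement
        tree = DecisionTreeClassifier(
            max_features="sqrt",  # random feature subset at each split
            random_state=int(rng.integers(1 << 31)),
        )
        tree.fit(X[boot], y[boot])
        trees.append(tree)
    return trees
```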

Data: Collected database
Result: People detected in the point cloud
Split the data into a training database (TrDB) and a testing database (TsDB);
foreach point cloud perception P ∈ TrDB do
    Read P;
    Remove ground points from P;
    Extract features from P and store them with their labels;
end
Train the Random Forest Classifier on the stored features;
foreach point cloud perception P ∈ TsDB do
    Read P;
    Remove ground points from P;
    Extract features from P;
    if the features correspond to people then
        Report a people detection;
    end
end

Algorithm 1: The developed algorithm to detect people.
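Algorithm 1 can be sketched in Python with scikit-learn. The paper does not list the extracted features, so `cluster_features` below uses hypothetical per-object features (bounding-box extents, point count, and highest point), and each input is assumed to be one ground-free candidate object with a person/non-person label. The 80%/20% split follows the experimental setup; `n_estimators=100` and the other parameters are assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def cluster_features(cluster: np.ndarray) -> np.ndarray:
    """Hypothetical per-object features: box extents, point count, top height."""
    extent = cluster.max(axis=0) - cluster.min(axis=0)  # size along x, y, z
    return np.array([extent[0], extent[1], extent[2], len(cluster), cluster[:, 2].max()])


def train_and_test(clusters, labels, seed=0):
    """Train on 80 % of the candidate objects and predict the remaining 20 %."""
    X = np.array([cluster_features(c) for c in clusters])
    y = np.asarray(labels)
    X_tr, X_ts, y_tr, y_ts = train_test_split(
        X, y, test_size=0.2, random_state=seed, stratify=y
    )
    clf = RandomForestClassifier(n_estimators=100, random_state=seed)
    clf.fit(X_tr, y_tr)
    return clf, clf.predict(X_ts), y_ts
```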

When making predictions with the Random Forest classifier, each decision tree in the ensemble independently assigns a class label to the input data. The final prediction is obtained by combining the predictions of all the decision trees, typically through majority voting: the class label that receives the most votes from the decision trees is selected as the final predicted class label.
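The majority vote itself is simple to express. The helper below (a hypothetical name) takes the labels predicted by the individual trees for one sample and returns the most frequent one. Note that scikit-learn's `RandomForestClassifier.predict` does not count hard votes; it averages the trees' predicted class probabilities and returns the class with the highest mean, which usually, but not always, gives the same result.

```python
from collections import Counter


def majority_vote(tree_predictions):
    """Combine the class labels predicted by the trees for one sample.

    Ties go to the label encountered first, because Counter.most_common
    orders elements with equal counts by first occurrence.
    """
    return Counter(tree_predictions).most_common(1)[0][0]
```

For example, `majority_vote(["person", "other", "person"])` returns `"person"`.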

Training and Testing. For training, the labeled dataset is used to train the Random Forest classifier, enabling it to learn the distinguishing features of people. The classifier leverages the collective knowledge of the decision trees to make accurate predictions. During the testing phase, the trained Random Forest classifier is applied to new, unseen point cloud data to detect the presence of people. The classifier assigns class labels to the input data based on the majority vote of the decision trees in the ensemble, which makes the people detection results more robust and reliable than those of a single tree.

By training and testing the Random Forest classifier on the labeled dataset, the proposed approach achieves fast people detection in point cloud data. In addition, removing the ground before training contributes to accurate and reliable detection of people in real-world scenarios.

4 EXPERIMENTAL RESULTS

To evaluate the performance of the proposed approach, a series of experiments was conducted on point cloud data captured at a conference, covering various real-world situations. We divided the data into two datasets, Dataset1 and Dataset2, each containing 19 people who are walking, standing, or sitting.

Figure 4: Illustration of the developed methods for human detection (blocks: Raw data from LiDAR; Ground Removal; Main labeled dataset; Training RFC for human detection; Testing for human detection).

Figure 5: Illustration of a human detection example. Blue represents the point cloud perceived by the LiDAR, while red represents the humans detected in the scene by the Random Forest classifier.

It is worth mentioning that all experiments presented herein were performed on a MacBook Pro with an Apple M1 Pro processor and 16 GB of RAM; no GPU, cluster, or other advanced equipment was used, which indicates that the proposed solution can be easily replicated and implemented in real-world scenarios.

The experiments use Dataset1 and Dataset2. These datasets capture people's activity and behavior during conference sessions and were collected with the LiDAR recording from a height of 10 meters.

Dataset1, despite being smaller, still encompasses a diverse range of people poses and activities observed during the recorded scene. It serves as a representative sample to evaluate the proposed approach and assess its performance on a relatively limited dataset from a conference. The smaller size of Dataset1 allows for a focused analysis of the approach's effectiveness in detecting people in different configurations within the conference environment.

Table 1: Example of annotated data.

"psr": {
    "position": {
        "x": 1.8860341036140744,
        "y": 2.0482052752197473,
        "z": -1.9140917764041696
    },
    "scale": {
        "x": 0.4826413579974756,
        "y": 0.8237351830145311,
        "z": 1.801308247033618
    },
    "rotation": {
        "x": 0.013942779107655656,
        "y": -0.007629356641117054,
        "z": 1.119411576214597
    }
}

On the other hand, Dataset2 is a larger and more comprehensive dataset that captures a broader range of people behaviors. With its larger size and greater variety of instances, Dataset2 provides a more extensive and robust evaluation of the proposed approach across the various scenarios and configurations encountered during the conference.

By using both Dataset1 and Dataset2, the experiments provide insights into the scalability of the proposed approach. The evaluation results obtained from these datasets contribute to understanding the approach's performance in both limited and extensive data settings derived from conference environments.

Table 2: Results of human detection using RFC (Random Forest Classifier) and MLP (Multi-Layer Perceptron).

| Method | Dataset | Precision | Computation time (s) | Learning time (s) |
|---|---|---|---|---|
| RFC | Dataset1 | 0.95 | 0.013 | 0.08 |
| MLP | Dataset1 | 0.95 | 0.013 | 0.079 |
| RFC | Dataset2 | 0.95 | 0.014 | 0.069 |
| MLP | Dataset2 | 0.74 | 0.016 | 0.079 |

Each dataset is divided into a training set (80%) and a testing set (20%). We annotated each person with three types of information: position relative to the LiDAR (x, y, and z), scale (x, y, and z), and rotation (x, y, and z) parameters, thus providing more information for feature selection and learning. An example of annotated data is shown in Table 1.
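Boxes annotated as in Table 1 can be turned into per-point labels by testing which points fall inside each box. The sketch below assumes, since the paper does not state it, that `position` is the box centre, `scale` is the full box size along the box axes in metres, and `rotation.z` is the yaw angle in radians; the small x and y rotations are ignored. The function name is hypothetical.

```python
import numpy as np


def points_in_box(points: np.ndarray, psr: dict) -> np.ndarray:
    """Boolean mask of the points lying inside one annotated box (yaw only)."""
    centre = np.array([psr["position"][k] for k in "xyz"])
    half = np.array([psr["scale"][k] for k in "xyz"]) / 2.0
    yaw = psr["rotation"]["z"]
    d = points - centre
    cos_y, sin_y = np.cos(yaw), np.sin(yaw)
    # Rotate the offsets by -yaw about z to express them in the box frame.
    local = np.column_stack([
        cos_y * d[:, 0] + sin_y * d[:, 1],
        -sin_y * d[:, 0] + cos_y * d[:, 1],
        d[:, 2],
    ])
    return np.all(np.abs(local) <= half, axis=1)
```

Points for which the mask is true can be labelled as "person" and all remaining non-ground points as background when building the training set.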

The annotated data serve as a solid foundation for training and evaluating the implemented approach. The evaluation is based on multiple metrics, including Precision, Recall, F1-score, Support, Computation time, and Learning time, which together assess the approach's performance across several dimensions.

Precision measures the accuracy of the positive predictions for a given class. It is calculated as the ratio of true positives to the sum of true positives and false positives, so a higher precision indicates fewer false positives. Computation time is the time spent detecting people in one point cloud perception. Learning time is the time the method takes to learn from the provided training data.
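Precision, together with the recall and F1-score mentioned above, can be computed from counts of true positives (TP), false positives (FP), and false negatives (FN): precision = TP/(TP+FP), recall = TP/(TP+FN), and F1 is their harmonic mean. The helper below is a minimal sketch with a hypothetical name; scikit-learn's `precision_recall_fscore_support` returns the same quantities together with the support (the number of true instances per class).

```python
def detection_scores(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall and F1-score from TP, FP and FN counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}
```

With made-up counts, `detection_scores(19, 1, 2)` gives a precision of 0.95, a recall of about 0.905, and an F1-score of about 0.927.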

Table 2 shows the results obtained with the Random Forest classifier (RFC) and the Multi-Layer Perceptron (MLP) (Goodfellow et al., 2016). On Dataset1, both approaches perform similarly on all criteria, including computation and learning time.

On Dataset2, however, the Random Forest classifier achieves a markedly higher precision than the MLP (0.95 vs. 0.74) and a shorter computation time (13.5% faster). In addition, the learning time of the RFC is 12.7% shorter than that of the MLP.

This result indicates that, since our LiDAR delivers 10 frames per second (one frame every 100 ms) and detection takes about 0.014 s per frame, the proposed approach can be considered a real-time solution. Given its short learning time, the approach could also be embedded as a continuous learning technique if new objects need to be detected. The RFC could also be extended to human tracking.
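The timing comparison and the real-time argument can be checked with a few lines of arithmetic on the Dataset2 values of Table 2. Because the table rounds times to three decimals, percentages recomputed from it can differ slightly from those obtained with unrounded measurements; the frame period assumes the 10 fps rate of the LiDAR.

```python
# Dataset2 values from Table 2, in seconds.
rfc_compute, mlp_compute = 0.014, 0.016
rfc_learn, mlp_learn = 0.069, 0.079
frame_period = 1 / 10  # the LiDAR delivers 10 frames per second


def reduction(new: float, old: float) -> float:
    """Relative time reduction of `new` with respect to `old`, in percent."""
    return 100.0 * (old - new) / old


print(f"{reduction(rfc_compute, mlp_compute):.1f}")  # 12.5
print(f"{reduction(rfc_learn, mlp_learn):.1f}")      # 12.7
print(f"{rfc_compute / frame_period:.0%}")           # 14%
```

Detection therefore uses only a small fraction of the 100 ms available per frame.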

5 CONCLUSIONS

This paper presented an approach for fast people detection in point cloud perception using a Random Forest classifier. The main challenge addressed is the fast detection of people in real collected data.

The use of a multi-point LiDAR system contributed to enhanced data quality and resolution, enabling robust and fast people detection. The experimental results, obtained on data collected at a conference, demonstrated the effectiveness of the approach and its potential for practical applications in various domains.

This paper contributes to precise and fast people detection in point cloud perception by providing a reliable and efficient solution based on a newly developed LiDAR. The approach was compared with a Multi-Layer Perceptron (MLP); on the larger dataset, the proposed approach obtained better results.

The scalability and versatility of the Random Forest classifier make it an appealing choice for extending the approach to other object recognition tasks in point cloud analysis. The proposed approach has the potential to significantly impact fields such as autonomous driving, surveillance, human-robot interaction, industrial environments, and safety and security, enabling safer and more efficient interactions between humans and intelligent systems, with high market potential.

Overall, the proposed approach opens new avenues for real-time human tracking in point cloud perception, contributing to the broader goal of improving situational awareness and enabling intelligent systems to effectively perceive and interact with their dynamic environments.

Future work includes larger-scale testing and further validation in different environments (e.g., industrial areas and roads), as well as comparison with other existing approaches for real-time people detection.


ACKNOWLEDGEMENTS

This work is co-funded by the Research Council of Norway under the project entitled “Next Generation 3D Machine Vision with Embedded Visual Computing” with grant number 325748.

REFERENCES

Aalerud, A., Dybedal, J., and Subedi, D. (2020). Reshaping field of view and resolution with segmented reflectors: Bridging the gap between rotating and solid-state LiDARs. Sensors, 20(12).

Belgiu, M. and Drăguţ, L. (2016). Random forest in remote sensing: A review of applications and future directions. ISPRS Journal of Photogrammetry and Remote Sensing, 114:24–31.

Cao, C., Preda, M., and Zaharia, T. (2019). 3D point cloud compression: A survey. In The 24th International Conference on 3D Web Technology, pages 1–9.

Cutler, D. R., Edwards Jr., T. C., Beard, K. H., Cutler, A., Hess, K. T., Gibson, J., and Lawler, J. J. (2007). Random forests for classification in ecology. Ecology, 88(11):2783–2792.

Dai, D., Chen, Z., Bao, P., and Wang, J. (2021). A review of 3D object detection for autonomous driving of electric vehicles. World Electric Vehicle Journal, 12(3).

Dalal, N., Triggs, B., and Schmid, C. (2006). Human detection using oriented histograms of flow and appearance. In Computer Vision – ECCV 2006, Lecture Notes in Computer Science, volume 3952, pages 428–441. Springer.

Goodfellow, I., Bengio, Y., and Courville, A. (2016). Deep Learning. MIT Press.

Huang, X., Mei, G., Zhang, J., and Abbas, R. (2021). A comprehensive survey on point cloud registration. arXiv preprint arXiv:2103.02690.

Javaheri, A., Brites, C., Pereira, F., and Ascenso, J. (2017). Subjective and objective quality evaluation of 3D point cloud denoising algorithms. In 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), pages 1–6. IEEE.

Li, Y., Bu, R., Sun, M., Wu, W., Di, X., and Chen, B. (2018a). PointCNN: Convolution on X-transformed points. In Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., and Garnett, R., editors, Advances in Neural Information Processing Systems, volume 31. Curran Associates, Inc.

Li, Y., Bu, R., Sun, M., Wu, W., Di, X., and Chen, B. (2018b). PointCNN: Convolution on X-transformed points. Advances in Neural Information Processing Systems, 31.

Lin, C.-H., Kong, C., and Lucey, S. (2018). Learning efficient point cloud generation for dense 3D object reconstruction. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 32.

Pedersoli, M., Gonzalez, J., Chakraborty, B., and Villanueva, J. J. (2008). Enhancing real-time human detection based on histograms of oriented gradients. In Computer Recognition Systems 2, pages 739–746. Springer.

Qi, C. R., Liu, W., Wu, C., Su, H., and Guibas, L. J. (2017a). Frustum PointNets for 3D object detection from RGB-D data. CoRR, abs/1711.08488.

Qi, C. R., Su, H., Mo, K., and Guibas, L. J. (2017b). PointNet: Deep learning on point sets for 3D classification and segmentation.

Qi, C. R., Su, H., Mo, K., and Guibas, L. J. (2017c). PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 652–660.

Qi, C. R., Yi, L., Su, H., and Guibas, L. J. (2017d). PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Guyon, I., Luxburg, U. V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., and Garnett, R., editors, Advances in Neural Information Processing Systems, volume 30. Curran Associates, Inc.

Qi, C. R., Yi, L., Su, H., and Guibas, L. J. (2017e). PointNet++: Deep hierarchical feature learning on point sets in a metric space. Advances in Neural Information Processing Systems, 30.

Shi, S., Wang, X., and Li, H. (2019). PointRCNN: 3D object proposal generation and detection from point cloud. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019, pages 770–779. Computer Vision Foundation / IEEE.

Simon, M., Milz, S., Amende, K., and Gross, H. (2018). Complex-YOLO: Real-time 3D object detection on point clouds. CoRR, abs/1803.06199.

Uy, M. A., Pham, Q.-H., Hua, B.-S., Nguyen, T., and Yeung, S.-K. (2019). Revisiting point cloud classification: A new benchmark dataset and classification model on real-world data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 1588–1597.

Yang, G., Huang, X., Hao, Z., Liu, M.-Y., Belongie, S., and Hariharan, B. (2019a). PointFlow: 3D point cloud generation with continuous normalizing flows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 4541–4550.

Yang, Z., Sun, Y., Liu, S., Shen, X., and Jia, J. (2019b). STD: Sparse-to-dense 3D object detector for point cloud. CoRR, abs/1907.10471.
