Encoding Techniques for Handling Categorical Data in Machine Learning-Based Software Development Effort Estimation

Mohamed Hosni (ORCID: https://orcid.org/0000-0001-7336-4276)

MOSI Research Team, ENSAM, University Moulay Ismail of Meknes, Meknes, Morocco

Keywords:

Categorical Data, Encoder, Software Effort Estimation, Ensemble Effort Estimation.

Abstract:

Planning, controlling, and monitoring a software project primarily rely on the estimates of the software de-

velopment effort. These estimates are usually conducted during the early stages of the software life cycle.

At this phase, the available information about the software product is categorical in nature, and only a few

numerical data points are available. Therefore, building an accurate effort estimator begins with determining

how to process the categorical data that characterizes the software project. This paper aims to shed light on

the ways in which categorical data can be treated in software development effort estimation (SDEE) datasets

through encoding techniques. Four encoders were used in this study: the one-hot encoder, label encoder,

count encoder, and target encoder. Four well-known machine learning (ML) estimators and a homogeneous

ensemble were utilized. The empirical analysis was conducted using four datasets. The datasets generated by

means of the one-hot encoder appeared to be suitable for the ML estimators, as they resulted in more accu-

rate estimation. The ensemble, which combined four variants of the same technique trained using different

datasets generated by means of encoder techniques, demonstrated an equal or better performance compared to

the single ML estimation technique. The overall results are promising and pave the way for a new approach to

handling categorical data in SDEE datasets.

1 INTRODUCTION

Software development effort estimation (SDEE) is de-

fined as the process of determining the amount of

effort required to develop a software system (Wen

et al., 2012). Delivering an accurate estimate is

crucial for the success of the software development

project, as an inaccurate estimate can lead to project

failure (Oliveira et al., 2010). To avoid such prob-

lems, over the past five decades, researchers have de-

veloped and evaluated several estimation techniques

aimed at providing an accurate estimate of the ef-

fort required to develop a software project (Ali and

Gravino, 2019). These estimation techniques can be

classified into three main categories (Jorgensen and

Shepperd, 2006): expert judgment, algorithmic tech-

niques, and machine learning (ML) techniques.

Wen et al. conducted a systematic literature re-

view (SLR) on the use of machine learning (ML)

techniques in SDEE studies conducted between 1991

and 2010 (Wen et al., 2012). They identified eight

different ML techniques that had been investigated

in the 84 selected studies. Among these techniques,

Analogy and Artificial Neural Networks (ANN) were

the most frequently adopted. The review highlighted

the increasing popularity of ML techniques in SDEE

over the past three decades and demonstrated that es-

timates produced using these techniques were more

accurate compared to other estimation methods. In

recent literature, a new approach called Ensemble Ef-

fort Estimation (EEE) has been proposed, which in-

volves combining multiple SDEE techniques under a

specific combination rule (Kocaguneli et al., 2011).

The overall conclusions drawn from the literature of

SDEE indicate that this new approach generates more

accurate estimates compared to using a single tech-

nique (Cabral et al., 2023).

Nevertheless, accurately estimating the effort re-

quired for software development is a complex under-

taking, especially in the early stages of the software

life cycle when the available information tends to be

more descriptive rather than quantifiable (Amazal and

Idri, 2019). Indeed, predictive techniques rely on con-

structing their models using a set of features (i.e., cost

drivers) that define software projects. Most SDEE

techniques generate predictions by using numerical

Hosni, M.

Encoding Techniques for Handling Categorical Data in Machine Learning-Based Software Development Effort Estimation.

DOI: 10.5220/0012259400003598

In Proceedings of the 15th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2023) - Volume 1: KDIR, pages 460-467

ISBN: 978-989-758-671-2; ISSN: 2184-3228

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

attributes. However, during the initial stages of the

software life cycle, the available information tends to

be more categorical than numerical. Furthermore, the

historical datasets contain a significant number of cat-

egorical features, such as COCOMO 81 and ISBSG

datasets, among others.

The categorical attributes within SDEE can be

measured either nominally or ordinally. To address

this limitation, various approaches have been ex-

plored in the existing literature (Angelis et al., 2001;

Li et al., 2007). These techniques include the use

of Euclidean and Manhattan distance, decision tree-

based classification, fuzzy logic, and the grey rela-

tional coefficient. Many of the proposed methods for

handling categorical attributes employ hybrid tech-

niques, combining a specific approach for categori-

cal attribute handling with the SDEE technique itself.

As a result, the process of constructing the estimation

technique and the preprocessing stage become inseparable. For instance, distance measures such as the Euclidean and Manhattan distances apply only to techniques that predict effort based on similarity; they are not applicable to other SDEE techniques such as Decision Trees (DT), Support Vector Regression (SVR), or ANN.

Indeed, handling categorical attributes is consid-

ered a part of the feature engineering process, which

is a key step in data preprocessing. It involves

converting categorical attributes into their numerical

counterparts. It is important to note that preparing the

data for a predictive model is a distinct task from the

modeling process. Once the original data has been

preprocessed and all the categorical attributes have

been transformed into numerical format, the model-

ing process (i.e., building the SDEE technique) can

begin independently from the preprocessing stage.

This paper aims to examine the utilization of cat-

egorical preprocessing techniques and evaluate their

impact on the predictive performance of SDEE tech-

niques, including both single and ensemble methods.

The original dataset, which includes categorical fea-

tures, is preprocessed using different encoder tech-

niques to convert them into numerical attributes. Four

encoder techniques, namely One-Hot encoding, Label encoding, Count encoding, and Target encoding, are used for that purpose. Next, several ML techniques are constructed, including K-nearest neighbor (KNN),

DT, SVR, and Multilayer Perceptron (MLP) neural

networks. These techniques are trained using datasets

generated from the original dataset, with each dataset

being processed using a different encoder technique.

Furthermore, an ensemble is created by combining

four variants of the same technique, each trained on a

dataset generated using a different encoder technique.

The final output of the ensemble is generated by the average rule. The predictive capabilities of these methods

are assessed using various unbiased metrics.

Toward these aims, two research questions (RQs)

have been examined:

• (RQ1): Among the four encoder techniques em-

ployed in this study, which one yields superior

performance when used with a single ML tech-

nique?

• (RQ2): Does the utilization of different encoder

techniques alongside the same SDEE technique

(ensemble technique) result in more accurate es-

timates compared to using a single SDEE tech-

nique?

The primary contributions of this empirical work are

as follows:

• The application of four encoder techniques to pro-

cess categorical attributes in SDEE datasets.

• Investigation of the impact of encoders on the ac-

curacy of SDEE techniques.

• Assessment of the effect of encoders on the per-

formance of the EEE approach.

To the best of our knowledge, no existing research has

explored the impact of encoder techniques on the pre-

dictive capabilities of SDEE-based ML techniques.

The remainder of this paper is structured as fol-

lows: Section 2 presents the main findings of related

work in literature. Section 3 defines the feature en-

coding techniques utilized in this study. Section 4

provides the empirical design employed in this re-

search. Section 5 discusses the empirical results ob-

tained from the experiments. Section 6 presents future

work and concludes the paper.

2 RELATED WORK

Wen et al. conducted an SLR to investigate the use of ML techniques for estimating software development effort (Wen et al., 2012).

The review examined 84 papers published between

1991 and 2010. Through this review, eight ML techniques were identified: case-based reasoning (CBR) or analogy, ANN, DTs, Bayesian networks, SVR, genetic algorithms, genetic programming, and association rules. Among them, CBR and ANN were found to be the most commonly used techniques, accounting for 37% and 26%, respectively. The review also

revealed that the estimation derived from ML tech-

niques, particularly CBR and ANN, exhibited higher

accuracy compared to non-ML techniques. Another

recent review conducted by Ali and Gravino focused on

the application of ML techniques in SDEE (Ali and

Gravino, 2019). This review highlighted that ANN

and SVR techniques were extensively investigated in

the literature. Furthermore, both techniques were

found to generate more accurate estimates compared

to other ML and non-ML techniques. In terms of

performance metrics, both reviews identified Mean

Magnitude of Relative Error (MMRE) and Prediction

within 25% of actual effort (Pred(25)) as commonly

used performance measures.

Recently, there has been growing interest in ex-

ploring ensemble methods in the context of SDEE

(Hosni et al., 2018a; Azhar et al., 2013). This ap-

proach involves predicting software development ef-

fort by combining multiple techniques using a specific

combination rule. Two types of ensembles are de-

fined: homogeneous ensembles, which combine dif-

ferent variants of the same estimation technique, and

heterogeneous ensembles, which incorporate multiple

different estimation techniques. Idri et al. conducted

an SLR to examine the use of EEE (Idri et al., 2016).

Their review analyzed 24 papers published between

2000 and 2016. The findings revealed that 16 SDEE

techniques were employed to construct EEE, with the

homogeneous ensemble being the most commonly

used type. Additionally, 20 combiners were utilized

to combine the output of single estimators, and linear

rules were the most frequently adopted. In terms of

performance accuracy, the review indicated that the

ensemble approach consistently generated more ac-

curate results than using a single estimator. A recent

update of this SLR was conducted by Cabral et al.

(Cabral et al., 2023), and their findings aligned with

those reported by Idri et al. They also observed an in-

crease in research work in the last five years, driven by

the promising results obtained through the ensemble

approach.

The treatment of categorical data in SDEE

datasets has been the focus of several papers in the

literature. Amazal et al. conducted a systematic map-

ping study to explore the handling of categorical data

in SDEE (Amazal and Idri, 2019). The review in-

cluded 27 papers that addressed this topic. The find-

ings revealed that Euclidean distance, fuzzy logic,

and fuzzy clustering techniques were commonly em-

ployed to handle categorical data, particularly when

utilizing the analogy technique. On the other hand,

when using regression techniques, most papers uti-

lized Analysis of Variance and combinations of cat-

egories. Furthermore, the review identified several

SDEE techniques that have been investigated in the

literature, namely analogy, regression, and Classification and Regression Trees (CART). Additionally,

various techniques were used to handle categorical

features, including Euclidean distance, classification

by DT, fuzzy clustering, grey relational coefficient,

and local similarity. These techniques were com-

bined with specific SDEE techniques, resulting in hy-

brid methods. The objective of these approaches,

as explored in the literature, is to enhance the accu-

racy of specific SDEE techniques when working with

datasets that contain categorical effort drivers.

However, handling categorical attributes is typi-

cally considered as part of the feature engineering

phase in the ML process. This phase is essential and

occurs before constructing an ML model. The focus

of this paper is to address categorical attributes inde-

pendently of the predictive model employed. In other

words, the aim is to generate a dataset comprising

just numerical attributes obtained through a specific

encoder technique applied to the original dataset that

contains categorical attributes (Breskuvienė and Dzemyda, 2023; De La Bourdonnaye and Daniel, 2021).

By doing so, the paper aims to preprocess the data

and transform the categorical attributes into numeri-

cal representations, which can then be further used as

input for different ML estimators.

3 FEATURE ENCODING TECHNIQUES

This section provides a brief description of the four feature encoding techniques used in this paper. These techniques differ in how they transform categorical attributes into numerical ones. This transformation is crucial for ML techniques to process the data effectively (Breskuvienė and Dzemyda, 2023; De La Bourdonnaye and Daniel, 2021).

One Hot Encoder: is a technique used to convert a

categorical attribute into a numerical representation.

The process begins by determining the number of dis-

tinct categories within the categorical attribute. Sub-

sequently, a set of columns is created, with each col-

umn representing one of the distinct categories. Bi-

nary values (0 or 1) are then assigned to these columns

based on whether a data point belongs to a particular

category or not. The number of columns generated is

equal to the number of distinct categories present in

the attribute.
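The one-hot procedure described above can be sketched in a few lines of plain Python. This is only an illustrative sketch: the function name `one_hot_encode`, the alphabetical column order, and the sample attribute values are assumptions, not part of the study.

```python
def one_hot_encode(values):
    """Return (categories, rows); each row holds one 0/1 flag per category."""
    # One column per distinct category (alphabetical order is an assumption).
    categories = sorted(set(values))
    column_of = {category: i for i, category in enumerate(categories)}
    rows = []
    for value in values:
        row = [0] * len(categories)
        row[column_of[value]] = 1  # flag the category this data point belongs to
        rows.append(row)
    return categories, rows


# Hypothetical categorical attribute of four projects.
dev_type = ["new", "enhancement", "new", "redevelopment"]
columns, encoded = one_hot_encode(dev_type)
```

Each encoded row contains exactly one 1, and the number of columns equals the number of distinct categories, which is why this encoder increases the feature count.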

Label Encoder: is a simple encoding technique. It consists of assigning a unique numerical value to each category present in the categorical attribute. The numerical value starts at 0 or 1 and increments

KDIR 2023 - 15th International Conference on Knowledge Discovery and Information Retrieval

for each category. This technique can be applied to both types of categorical attributes: ordinal and nominal.
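A minimal sketch of label encoding follows. The helper name `label_encode`, its `order` and `start` parameters, and the sample values are hypothetical; for ordinal attributes, an explicit category order would normally be supplied so that the integers respect the ranking.

```python
def label_encode(values, order=None, start=0):
    """Map each category to an integer code, counting up from `start`.

    If `order` is omitted, categories are numbered by first appearance
    (an assumption made for this sketch).
    """
    if order is None:
        order = list(dict.fromkeys(values))  # distinct values, first-seen order
    mapping = {category: start + i for i, category in enumerate(order)}
    return mapping, [mapping[value] for value in values]


# Hypothetical ordinal cost driver with an explicit ranking.
complexity = ["high", "low", "nominal", "low"]
mapping, codes = label_encode(complexity, order=["low", "nominal", "high"])
```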

Count Encoder: also known as counting encoder, is

a technique that replaces categorical values with nu-

merical values based on the number of occurrences

of each category in an attribute vector. This encoder

provides additional information to the dataset by con-

sidering the frequency of a specific category’s ap-

pearance, rather than solely relying on the categorical

value of the attribute.
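Count encoding can be illustrated with the standard library alone. The function name and the sample attribute are assumptions made for this sketch.

```python
from collections import Counter


def count_encode(values):
    """Replace each category with its number of occurrences in the attribute."""
    counts = Counter(values)
    return [counts[value] for value in values]


# Hypothetical programming-language attribute of five projects.
language = ["java", "c", "java", "cobol", "java"]
encoded_language = count_encode(language)
```

Note that two categories with the same frequency receive the same code, so this encoding does not necessarily keep categories distinguishable.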

Target Encoder: also known as target-based encoding, replaces the categorical values of a feature with numerical values that reflect the relationship between each category and the target variable. Instead of the original categorical value, a statistic derived from the target variable is used. This statistic can be the median, average, or mode of the target attribute over the instances belonging to that category. In this study, the average value of the target variable was adopted.
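The mean-based variant adopted in the study can be sketched as follows. The function name and sample data are hypothetical. The sketch computes category means over all rows it is given; restricting this to training rows in order to avoid leaking the target is a common precaution that is assumed here rather than taken from the text.

```python
from collections import defaultdict


def target_encode(categories, targets):
    """Replace each category with the mean target value of its instances."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for category, target in zip(categories, targets):
        sums[category] += target
        counts[category] += 1
    means = {category: sums[category] / counts[category] for category in sums}
    return means, [means[category] for category in categories]


# Hypothetical attribute and effort values (e.g. person-hours).
language = ["java", "c", "java"]
effort = [100.0, 50.0, 300.0]
means, encoded_language = target_encode(language, effort)
```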

4 EMPIRICAL DESIGN

4.1 Machine Learning Used

Four machine learning (ML) techniques, namely

KNN, SVR, MLP and DT, have been selected to build

the SDEE techniques. These techniques are com-

monly used in SDEE literature (Kocaguneli et al.,

2011; Kocaguneli et al., 2009; Hosni et al., 2018b).

Additionally, a homogeneous ensemble has been created using the average combiner. In this ensemble, four variants of a specific ML technique are trained independently, each on a dataset preprocessed with a different encoder technique, and their predictions are combined using the average combiner.
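The average combiner amounts to taking, for each project, the mean of the estimates produced by the four variants. The sketch below uses made-up predictions; only the naming pattern (technique plus encoder) follows the paper.

```python
def average_combiner(predictions_by_variant):
    """Average the per-project predictions of all ensemble members.

    predictions_by_variant maps a variant name to its list of predictions;
    all lists are assumed to cover the same projects in the same order.
    """
    members = list(predictions_by_variant.values())
    return [sum(estimates) / len(estimates) for estimates in zip(*members)]


# Hypothetical effort estimates of four KNN variants for two projects.
knn_predictions = {
    "KNNOH": [100.0, 200.0],
    "KNNLE": [120.0, 180.0],
    "KNNCE": [80.0, 220.0],
    "KNNTE": [100.0, 200.0],
}
ensemble_estimates = average_combiner(knn_predictions)
```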

4.2 Performance Metrics and Statistical Test

Most SLRs conducted in the SDEE literature report that MMRE and the prediction level (Pred) are the criteria most frequently used to assess the accuracy of SDEE techniques. These performance criteria are based on the magnitude of relative error (MRE). This criterion has been criticized by several researchers for being biased towards underestimation. To avoid this shortcoming, several alternative performance metrics have been proposed, such as Mean Absolute Error (MAE), Mean Balanced Relative Error (MBRE), and Mean Inverted Balanced Relative Error (MIBRE), which are considered less vulnerable to bias and asymmetry, and Logarithmic Standard Deviation (LSD) (Miyazaki et al., 1991; Foss et al., 2003). Therefore, in this paper we adopted these metrics along with their median values, except for LSD and Pred(25), for which no median is reported. Another criterion, called Standardized Accuracy (SA), was proposed by Shepperd and MacDonell (Kocaguneli and Menzies, 2013). This criterion compares a predictive model to a baseline estimator created by a random guessing approach.
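The text does not spell out the formulas, so the sketch below relies on the definitions commonly given in the SDEE literature (an assumption): the absolute error is |actual - predicted|, the balanced relative error divides it by the smaller of the two values, the inverted balanced relative error divides it by the larger, and Pred(25) is the percentage of projects whose MRE is at most 0.25. LSD is omitted. All efforts and estimates are assumed to be strictly positive, and the sample numbers are invented.

```python
from statistics import mean, median


def absolute_errors(actual, predicted):
    return [abs(a - p) for a, p in zip(actual, predicted)]


def balanced_relative_errors(actual, predicted):
    # Absolute error divided by the smaller of actual and predicted effort.
    return [abs(a - p) / min(a, p) for a, p in zip(actual, predicted)]


def inverted_balanced_relative_errors(actual, predicted):
    # Absolute error divided by the larger of actual and predicted effort.
    return [abs(a - p) / max(a, p) for a, p in zip(actual, predicted)]


def pred(actual, predicted, level=0.25):
    """Percentage of estimates whose MRE does not exceed `level`."""
    hits = sum(1 for a, p in zip(actual, predicted) if abs(a - p) / a <= level)
    return 100.0 * hits / len(actual)


# Hypothetical actual efforts and estimates.
actual = [100.0, 200.0, 400.0]
predicted = [110.0, 150.0, 400.0]

mae = mean(absolute_errors(actual, predicted))
mdae = median(absolute_errors(actual, predicted))
mbre = mean(balanced_relative_errors(actual, predicted))
mibre = mean(inverted_balanced_relative_errors(actual, predicted))
pred25 = pred(actual, predicted)
```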

To verify whether the predictions of a model are generated by chance and whether there is an improvement over random guessing, the effect size criterion was used.
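A possible implementation of the random guessing baseline, SA, and the effect size is sketched below. It assumes the usual formulation, which the text does not state explicitly: each project is "estimated" with the actual effort of another randomly chosen project, SA = 1 - MAE / mean(MAE of random guessing), and the effect size is (MAE - mean(MAE of random guessing)) / standard deviation of the random guessing MAEs. The number of runs, the seed, and the sample efforts are arbitrary choices for illustration.

```python
import random
from statistics import mean, stdev


def mae(actual, predicted):
    return mean(abs(a - p) for a, p in zip(actual, predicted))


def random_guessing_maes(actual, runs=1000, seed=0):
    """MAE of `runs` random-guessing rounds (needs at least two projects)."""
    rng = random.Random(seed)
    n = len(actual)
    maes = []
    for _ in range(runs):
        guesses = []
        for i in range(n):
            j = rng.randrange(n - 1)
            if j >= i:
                j += 1  # skip the project itself, pick any other one
            guesses.append(actual[j])
        maes.append(mae(actual, guesses))
    return maes


def standardized_accuracy(actual, predicted, baseline_maes):
    return 1 - mae(actual, predicted) / mean(baseline_maes)


def effect_size(actual, predicted, baseline_maes):
    return (mae(actual, predicted) - mean(baseline_maes)) / stdev(baseline_maes)


# Hypothetical efforts; a perfect predictor is used as the model here.
actual = [100.0, 200.0, 400.0, 800.0]
baseline = random_guessing_maes(actual)
sa_perfect = standardized_accuracy(actual, actual, baseline)
delta_perfect = effect_size(actual, actual, baseline)
# One simple way to take the 5% quantile of the baseline MAEs.
baseline_5pct = sorted(baseline)[int(0.05 * len(baseline))]
```

Under these definitions, a model is better than random guessing when its MAE lies below `baseline_5pct`, and a negative effect size indicates an MAE lower than the baseline average.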

The leave-one-out cross validation (LOOCV) technique was used to evaluate our proposed predictive techniques.
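LOOCV holds out one project at a time, fits the estimator on the remaining projects, and predicts the held-out one. The sketch below shows the loop; `fit_predict` is a hypothetical callback, and the mean predictor merely stands in for a real estimator.

```python
def loocv_predictions(X, y, fit_predict):
    """Return one out-of-sample prediction per project.

    fit_predict(X_train, y_train, x_test) is any callable that trains on
    the given rows and returns a prediction for x_test.
    """
    predictions = []
    for i in range(len(y)):
        X_train = X[:i] + X[i + 1:]  # every project except the i-th
        y_train = y[:i] + y[i + 1:]
        predictions.append(fit_predict(X_train, y_train, X[i]))
    return predictions


def mean_predictor(X_train, y_train, x_test):
    # Placeholder estimator: predicts the mean training effort.
    return sum(y_train) / len(y_train)


# Hypothetical features and efforts of three projects.
X = [[1.0], [2.0], [3.0]]
y = [10.0, 20.0, 30.0]
loocv_estimates = loocv_predictions(X, y, mean_predictor)
```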

The Scott-Knott (SK) statistical test based on AE

was performed to identify the techniques that share

similar predictive capabilities.

4.3 Datasets

Four datasets were chosen for the empirical analysis

conducted in this paper. Three of these datasets were

selected from the PROMISE repository, while one

dataset was obtained from the ISBSG. These datasets

were suitable for the experiments conducted in this paper since they contain a large number of categorical attributes. Table 1 provides an overview of the characteristics of the four selected datasets.

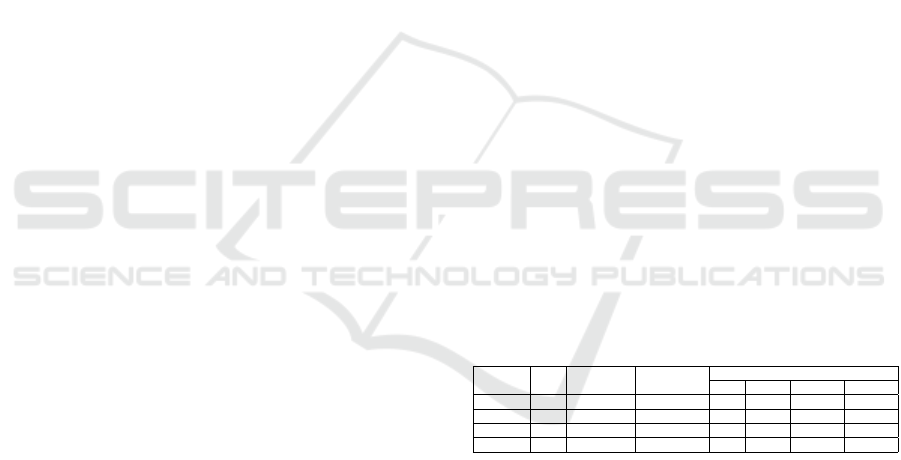

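Table 1: Characteristics of the employed datasets.

| Dataset | Size | Numerical | Categorical | Effort Min | Effort Max | Effort Mean | Effort Median |
|---|---|---|---|---|---|---|---|
| ISBSG | 266 | 6 | 5 | 47 | 54620 | 4790.10 | 2322.50 |
| Nasa93 | 93 | 2 | 20 | 8.4 | 8211 | 624.41 | 252 |
| Maxwell | 63 | 2 | 22 | 583 | 63694 | 8109.54 | 5100 |
| USP05 | 203 | 1 | 13 | 0.5 | 400 | 11.58 | 3 |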

4.4 Methodology Used

The following steps were followed for each dataset to

build the proposed SDEE techniques in this study:

For the Single SDEE Techniques:

Step 1: Categorical feature transformation: The se-

lected encoder techniques, namely One Hot Encod-

ing (OH), Label Encoding (LE), Counting Encod-

ing (CE), and Target Encoding (TE), were applied to

transform categorical features into numerical repre-

sentations. This resulted in four distinct datasets.

Step 2: ML technique construction: Each of the four

ML techniques was built using the grid search opti-

mization technique and 10-fold cross-validation.

Step 3: Parameter selection: The optimal parame-

ters for each ML technique were selected based on

the specific dataset.

Step 4: Reasonability assessment: The optimized ML

techniques were evaluated using performance met-

rics, such as SA and effect size, to determine their

effectiveness.

Step 5: Performance evaluation: The selected ML techniques were assessed in terms of eight performance metrics (MAE, MdAE, MIBRE, MdIBRE, MBRE, MdBRE, Pred(25), and LSD). The LOOCV technique was employed for this evaluation.

Step 6: Statistical analysis: Statistical analysis was performed based on the AE using the SK test.

For the Ensemble Methods:

Step 1: Homogeneous ensemble construction: For each ML technique, four variants were built, one on each of the four preprocessed datasets, and their estimates were combined using the average combiner.

Step 2: Ensemble performance evaluation: The per-

formance accuracy of the constructed ensembles was

measured using the performance metrics mentioned

earlier.

Step 3: Techniques ranking: The constructed tech-

niques, both single ML techniques and ensembles,

were ranked using the Borda count voting system

based on the performance metrics.

Step 4: Statistical analysis: Statistical analysis was

performed using the SK test based on AE.

To ensure clarity, we utilized the following abbrevi-

ations: SDEE technique + Encoder. For instance,

KNNOH represents the KNN technique trained on a

dataset where categorical features were transformed

into numerical ones using the One Hot (OH) encod-

ing technique.

5 EMPIRICAL ANALYSIS

5.1 Single SDEE Evaluation

We initiate our empirical analysis by preprocessing

the data to convert categorical attributes into numer-

ical values. This procedure was performed on each

dataset using the four encoders employed in this pa-

per. Table 2 presents the feature counts for each

dataset based on the encoder utilized. The results in-

dicate that the number of features increased across all

datasets when the one-hot encoder was applied. This

outcome is expected since the one-hot encoder cre-

ates a column for each category within a categorical

attribute, and some attributes contain more than eight

categories. For the remaining encoders (label encod-

ing, count encoder, and target encoder), the number

of features in the processed dataset remains the same

as the original dataset. This is because these encoders

replace categories with numerical values based on ei-

ther the category’s frequency or its relationship with

the target variable. A total of 16 datasets were utilized

in this study, as each dataset was processed using the

four encoders.

Table 2: Number of features after the encoding step.

| Encoder | Maxwell | ISBSG | Nasa93 | USP05 |
|---|---|---|---|---|
| OH | 93 | 58 | 85 | 302 |
| LE | 24 | 11 | 22 | 14 |
| TE | 24 | 11 | 22 | 14 |
| CE | 24 | 11 | 22 | 14 |

Next, we proceed to build our estimation tech-

niques using the grid search optimization technique

to determine the optimal parameter values that mini-

mize the MAE in each of the 16 datasets. This process

is carried out using the 10-fold cross-validation tech-

nique. To assess the performance of the constructed

techniques, we compare them to a baseline estima-

tor, specifically the random guessing estimator. The

performance of the four ML techniques is compared

against the 5% quantile of this baseline estimator. The

results obtained suggest that the developed techniques

consistently outperform the baseline method across

all datasets. Furthermore, all the techniques exhibit

significant improvements over the random guessing

estimator. Among the techniques, the KNN technique

achieves the highest level of accuracy in all datasets,

regardless of the encoder technique used for prepro-

cessing. On the other hand, the SVR technique falls

short of achieving 75% accuracy in terms of SA in

all datasets. The DT and MLP techniques generally

achieve higher levels of accuracy, with the exception

of the Maxwell dataset, where the MLPCE has the

lowest SA value.
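The tuning step, a grid search that minimizes MAE under k-fold cross-validation, can be sketched for a KNN estimator as follows. Everything specific here is an assumption made for illustration: the unweighted Euclidean KNN, the interleaved fold assignment, the candidate grid, and the synthetic data. The hyperparameter grids actually used in the study are not given in the text.

```python
def knn_predict(X_train, y_train, x, k):
    """Mean effort of the k training projects closest to x (Euclidean)."""
    distances = sorted(
        (sum((a - b) ** 2 for a, b in zip(row, x)), target)
        for row, target in zip(X_train, y_train)
    )
    nearest = distances[:k]
    return sum(target for _, target in nearest) / len(nearest)


def kfold_mae(X, y, k_neighbors, folds=10):
    """MAE of KNN under k-fold cross-validation with interleaved folds."""
    n = len(y)
    errors = []
    for fold in range(folds):
        test_idx = list(range(fold, n, folds))
        held_out = set(test_idx)
        X_train = [X[i] for i in range(n) if i not in held_out]
        y_train = [y[i] for i in range(n) if i not in held_out]
        for i in test_idx:
            estimate = knn_predict(X_train, y_train, X[i], k_neighbors)
            errors.append(abs(y[i] - estimate))
    return sum(errors) / len(errors)


def grid_search(X, y, grid, folds=10):
    """Return the candidate with the lowest cross-validated MAE."""
    scores = {k: kfold_mae(X, y, k, folds) for k in grid}
    best = min(scores, key=scores.get)
    return best, scores


# Synthetic data: 20 projects with a single numerical feature.
X = [[float(i)] for i in range(20)]
y = [2.0 * i for i in range(20)]
best_k, cv_scores = grid_search(X, y, grid=[1, 2, 3])
```

On this synthetic data the search selects two neighbours, because averaging the two surrounding projects reproduces the linear trend.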

Afterwards, the performance accuracy of the pro-

posed techniques is assessed using eight performance

criteria through the LOOCV technique. The final

ranking is determined using the Borda count voting

system based on the selected accuracy criteria. The

use of eight performance criteria is justified by the

fact that different criteria can result in different rank-

ings for a given estimator, as each criterion captures a

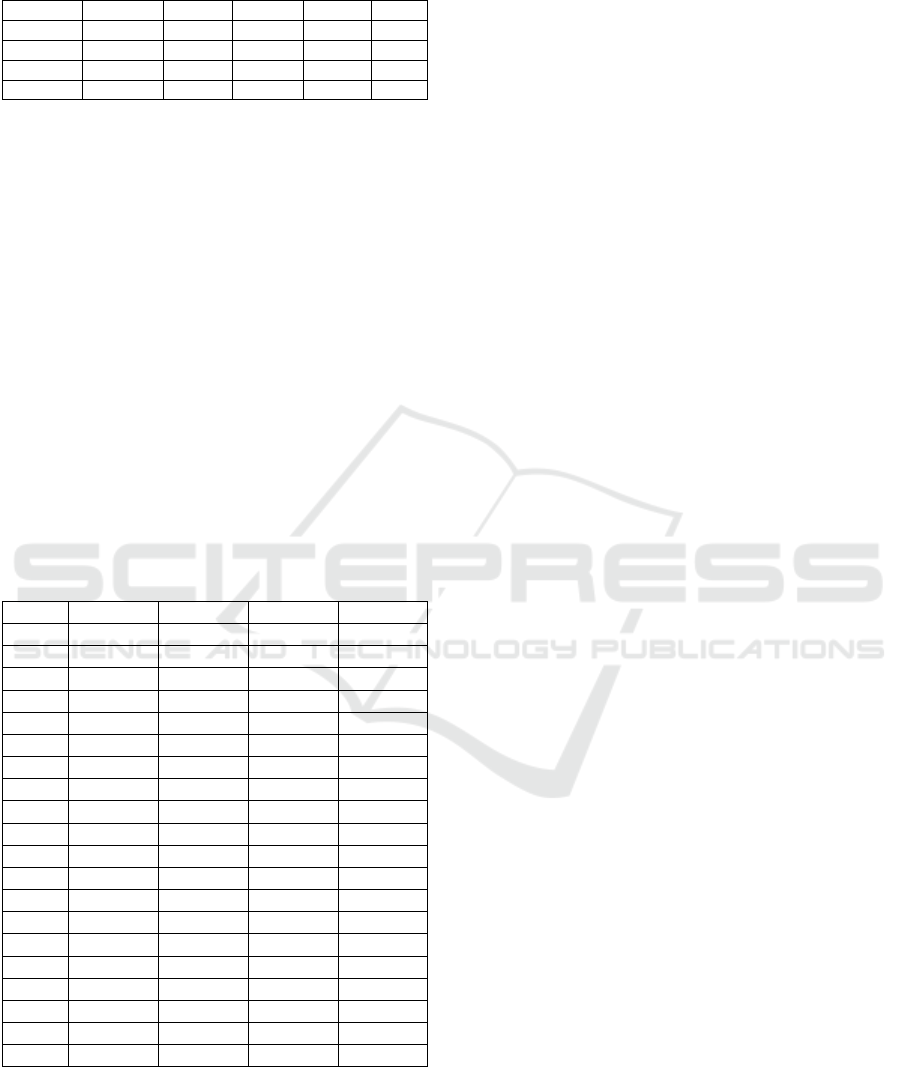

specific aspect of performance accuracy. Table 3 dis-

plays the rankings obtained from the voting system.

The rankings reveal that the DT and KNN techniques

consistently dominate the top six positions across all

datasets, regardless of the encoder used. The only ex-

ception is the Maxwell dataset, where the MLPOH

technique is ranked fourth.
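A Borda count over several accuracy criteria can be sketched as follows. The scoring scheme (n - 1 points for the best technique on a criterion, down to 0 for the worst, no special tie handling) is one common variant and is assumed here; the metric values are invented and are not results from the study.

```python
def borda_ranking(scores, higher_is_better=()):
    """Rank techniques by summed Borda points over all criteria.

    scores maps a criterion name to {technique: value}. Criteria listed
    in higher_is_better are maximized; all others are minimized.
    """
    techniques = sorted(next(iter(scores.values())))
    points = {technique: 0 for technique in techniques}
    for criterion, values in scores.items():
        ordered = sorted(
            techniques,
            key=lambda t: values[t],
            reverse=criterion in higher_is_better,
        )
        for position, technique in enumerate(ordered):
            points[technique] += len(techniques) - 1 - position
    ranking = sorted(techniques, key=lambda t: -points[t])
    return ranking, points


# Hypothetical metric values for three techniques.
scores = {
    "MAE": {"KNNOH": 10.0, "DTOH": 12.0, "SVROH": 30.0},
    "MBRE": {"KNNOH": 0.20, "DTOH": 0.15, "SVROH": 0.50},
    "Pred(25)": {"KNNOH": 60.0, "DTOH": 55.0, "SVROH": 20.0},
}
ranking, points = borda_ranking(scores, higher_is_better=("Pred(25)",))
```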

Table 3: Rank of the 16 SDEE techniques.

| Rank | Maxwell | ISBSG | Nasa93 | USP05 |
|---|---|---|---|---|
| 1 | DTOH | KNNLE | DTOH | KNNLE |
| 2 | KNNTE | KNNCE | DTLE | KNNOH |
| 3 | KNNOH | KNNOH | DTCE | KNNTE |
| 4 | MLPOH | KNNTE | KNNOH | DTOH |
| 5 | KNNCE | DTTE | DTTE | KNNCE |
| 6 | DTLE | DTCE | KNNCE | DTCE |
| 7 | MLPLE | MLPOH | MLPLE | DTTE |
| 8 | SVROH | DTOH | SVROH | SVROH |
| 9 | MLPTE | DTLE | KNNLE | MLPCE |
| 10 | DTCE | MLPTE | MLPCE | SVRTE |
| 11 | KNNLE | MLPLE | MLPTE | SVRCE |
| 12 | DTTE | MLPCE | KNNTE | SVRLE |
| 13 | SVRCE | SVROH | SVRTE | MLPOH |
| 14 | SVRTE | SVRTE | SVRLE | MLPTE |
| 15 | SVRLE | SVRLE | SVRCE | DTLE |
| 16 | MLPCE | SVRCE | MLPOH | MLPLE |

The key findings from the ranking are as follows:

• The KNN technique achieved the top rank in the ISBSG and USP05 datasets when the LE technique was used. In the remaining datasets, where the OH encoder was used, the DT technique obtained the highest rank.

• The SVR techniques consistently received lower

rankings, with the ISBSG dataset showing the

lowest positions.

• Three MLP techniques (trained on datasets preprocessed by the CE, OH, and LE techniques) were ranked last in three datasets.

• MLPOH achieved higher accuracy than the MLP techniques using the remaining encoders in the Maxwell and ISBSG datasets. In the Nasa93 dataset, MLPLE demonstrated greater accuracy than the other MLP techniques, while in the USP05 dataset, MLPCE outperformed the others.

• SVROH outperformed the remaining SVR tech-

niques in all datasets.

• DTOH achieved a higher rank than other DT

techniques in all datasets, except for the ISBSG

dataset where DTTE was ranked higher.

• KNNLE outperformed other KNN techniques

in two datasets, while KNNTE and KNNOH

achieved better positions than other KNN tech-

niques in one dataset each.

To further validate the obtained results, we performed

the Scott-Knott test to cluster the different techniques

with similar predictive properties based on their AE.

The SK test identified varying numbers of clus-

ters in each dataset with different techniques. Specif-

ically, it identified 11 clusters in the Maxwell dataset,

eight clusters in the USP05 dataset, six clusters in

the Nasa93 dataset, and four clusters in the ISBSG

dataset.

The main observations that can be made are:

• In the Maxwell dataset, the best cluster consists of

three different ML techniques: DTOH, KNNTE,

and MLP with OH and LE encoders. On the other

hand, the worst cluster contains the MLPCE tech-

nique.

• In the ISBSG dataset, the best cluster comprises

the four KNN techniques, while the worst cluster

contains three MLP techniques and the four SVR

techniques.

• In the Nasa93 dataset, the best cluster includes six

techniques: the four DT techniques and two KNN

techniques (with OH and CE encoders). The last

cluster contains the MLPOH and SVRCE tech-

niques.

• In the USP05 dataset, the best cluster consists of six techniques: the four KNN techniques and two DT techniques (DTCE and DTOH). The MLPE technique forms the worst cluster.

Based on these empirical findings, we can draw the following conclusions:

• The KNN technique emerges as the most accurate

among the techniques used in this study, as at least one of

its variants belongs to the best cluster identified

by the SK test in all datasets.

• The DT technique produces more accurate esti-

mates, as at least one of its variants belongs to the

best cluster in three datasets.

• The SVR techniques perform poorly in this study,

as none of their variants belong to the best cluster.

• The MLP technique appears to be a viable al-

ternative to the DT and KNN techniques in the

Maxwell dataset, as two of its variants (MLPOH

and MLPLE) are members of the best cluster in

this dataset.

Regarding the encoder techniques, Table 4 provides

the frequency of each encoder in the best cluster iden-

tified by the SK test in each dataset. It is observed

that the OH encoder appears seven times in the best

cluster across all datasets, followed by the CE tech-

nique, which appears five times. The remaining two

techniques appear four times. These results suggest a

slight preference for the OH encoding technique com-

pared to the other techniques.
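The encoder frequencies can be recomputed from the best-cluster memberships listed in the observations above. The sketch assumes that the last two letters of each technique name identify the encoder, as in the naming scheme of Section 4.4.

```python
from collections import Counter

# Best-cluster members per dataset, as reported for the SK test.
best_clusters = {
    "Maxwell": ["DTOH", "KNNTE", "MLPOH", "MLPLE"],
    "ISBSG": ["KNNOH", "KNNLE", "KNNCE", "KNNTE"],
    "Nasa93": ["DTOH", "DTLE", "DTCE", "DTTE", "KNNOH", "KNNCE"],
    "USP05": ["KNNOH", "KNNLE", "KNNCE", "KNNTE", "DTCE", "DTOH"],
}


def encoder_of(technique):
    # The encoder abbreviation is the two-letter suffix of the name.
    return technique[-2:]


per_dataset = {
    dataset: Counter(encoder_of(name) for name in members)
    for dataset, members in best_clusters.items()
}
totals = sum(per_dataset.values(), Counter())
```

The totals come out as OH 7, CE 5, LE 4, and TE 4, matching the counts discussed in the text.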

Table 4: Number of occurrences of each encoder in the best cluster.

| Encoder | Maxwell | ISBSG | Nasa93 | USP05 | Total |
|---|---|---|---|---|---|
| OH | 2 | 1 | 2 | 2 | 7 |
| CE | 0 | 1 | 2 | 2 | 5 |
| LE | 1 | 1 | 1 | 1 | 4 |
| TE | 1 | 1 | 1 | 1 | 4 |

5.2 Ensemble Methods Evaluation

The next step involves constructing a homogeneous ensemble that combines, using the average rule, four variants of the same ML technique, each trained on one of the four processed datasets. All the constructed ensembles outperform the 5% quantile of random guessing. This is expected, since each of their members does so and the combination rule used is linear. The EAKNN ensemble performs the best in the ISBSG and USP05 datasets, while the EADT ensemble is the top estimator in the remaining two datasets. The EASVR ensemble consistently ranks last in all datasets.

Table 5 displays the rankings obtained using the

Borda count method for twenty techniques based on

the performance indicators used. It is observed that none of the developed ensembles achieved a top-four ranking in any dataset, with the best position (fifth) achieved by the EAKNN ensemble in the ISBSG dataset. However, none of the ensembles obtained the last position in any dataset. To further validate these results, the SK test based on AE was performed.

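Table 5: Rank of the twenty SDEE techniques, ensembles are in bold.

| Rank | Maxwell | ISBSG | Nasa93 | USP05 |
|---|---|---|---|---|
| 1 | DTOH | KNNLE | DTOH | KNNLE |
| 2 | KNNTE | KNNCE | DTLE | KNNOH |
| 3 | KNNOH | KNNOH | DTCE | KNNTE |
| 4 | MLPOH | KNNTE | KNNOH | DTOH |
| 5 | KNNCE | **EAKNN** | DTTE | KNNCE |
| 6 | DTLE | DTTE | KNNCE | **EAKNN** |
| 7 | MLPLE | DTCE | **EADT** | DTCE |
| 8 | **EAKNN** | **EADT** | **EAKNN** | DTTE |
| 9 | SVROH | MLPOH | MLPLE | **EADT** |
| 10 | **EADT** | **EAMLP** | **EAMLP** | SVROH |
| 11 | MLPTE | DTOH | SVROH | MLPCE |
| 12 | DTCE | DTLE | KNNLE | **EASVR** |
| 13 | **EAMLP** | MLPTE | **EASVR** | SVRTE |
| 14 | KNNLE | MLPLE | MLPCE | SVRCE |
| 15 | DTTE | MLPCE | MLPTE | SVRLE |
| 16 | **EASVR** | SVROH | KNNTE | MLPOH |
| 17 | SVRCE | SVRTE | SVRTE | **EAMLP** |
| 18 | SVRTE | **EASVR** | SVRLE | MLPTE |
| 19 | SVRLE | SVRLE | SVRCE | DTLE |
| 20 | MLPCE | SVRCE | MLPOH | MLPLE |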

Different clusters have been identified in every

dataset. The Maxwell dataset had the largest num-

ber of clusters with 11, followed by USP05 with nine

clusters. Nasa93 had seven clusters, and ISBSG had

five clusters. From the SK test results, the following

observations can be made:

• In the Maxwell dataset, the best cluster does not

include any ensemble technique. However, none

of the ensembles belonged to the worst cluster.

• In the ISBSG dataset, the EAKNN ensemble is part

of the best cluster along with the four variants

of the KNN technique. The EASVR ensemble is

found in the worst cluster.

• In the Nasa93 dataset, the EADT ensemble is part

of the best cluster along with its four constituents

and two KNN variants. Additionally, the remain-

ing three ensembles were not grouped in the worst

cluster.

• In the USP05 dataset, the EAKNN ensemble is

found in the best cluster along with its members

and two DT variants.

In summary, none of the ensemble techniques ap-

pear to be consistently more accurate than all variants

of the four ML techniques constructed in this study.

However, the combination of multiple accurate tech-

niques, such as EADT and EAKNN, generates more

accurate predictions than other ML techniques. More-

over, except for the EASVR ensemble, none of the

ensemble methods perform worse than all the ML es-

timators constructed in this study. Based on these

results, we can conclude that the ensemble methods

achieve equal or better performance than the indi-

vidual techniques constructed, providing evidence for

their effectiveness.
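The homogeneous ensembles evaluated above combine one learner per encoded dataset and average the members' estimates. The sketch below illustrates that idea under leave-one-out cross-validation with a deliberately simple nearest-neighbour regressor. It is an assumption-laden toy, not the study's implementation: the helper names (knn_predict, loocv_ensemble), the two encoded views, and the effort values are all hypothetical, and no hyperparameter tuning is performed.

```python
from statistics import mean


def knn_predict(train_X, train_y, x, k=1):
    """Estimate effort for x as the mean effort of its k nearest training rows."""
    by_distance = sorted(
        range(len(train_X)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_X[i], x)),
    )
    return mean(train_y[i] for i in by_distance[:k])


def loocv_ensemble(encoded_views, y, k=1):
    """Leave-one-out predictions of an averaging ensemble.

    encoded_views: {encoder name: list of feature rows}, one view per encoder,
    all describing the same projects in the same order.
    """
    n = len(y)
    predictions = []
    for held_out in range(n):
        train_idx = [i for i in range(n) if i != held_out]
        train_y = [y[i] for i in train_idx]
        member_predictions = []
        for X in encoded_views.values():
            train_X = [X[i] for i in train_idx]
            member_predictions.append(knn_predict(train_X, train_y, X[held_out], k))
        # Average combiner: the ensemble estimate is the mean of its members.
        predictions.append(mean(member_predictions))
    return predictions


# Hypothetical toy data: one categorical driver encoded in two different ways.
toy_effort = [10.0, 12.0, 30.0, 34.0, 20.0]
toy_views = {
    "label": [[0], [0], [1], [1], [2]],
    "count": [[2], [2], [2], [2], [1]],
}
toy_predictions = loocv_ensemble(toy_views, toy_effort)
toy_mae = mean(abs(p - a) for p, a in zip(toy_predictions, toy_effort))
print(toy_predictions, toy_mae)
```

Because each member sees a different encoding of the same projects, the members can disagree on the nearest neighbour, which is the kind of diversity the averaging step is meant to exploit.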

6 CONCLUSION AND FURTHER WORK

This study investigated the impact of encoder tech-

niques on the prediction accuracy of SDEE tech-

niques by processing categorical cost drivers in SDEE

datasets. Four encoders were used to convert cat-

egorical attributes into numerical ones, resulting in

four datasets. ML techniques (KNN, SVR, MLP,

and DT) were optimized in each dataset using grid

search optimization. A homogeneous ensemble was

constructed by combining one estimation technique

trained on each dataset using the average as a com-

biner. The proposed techniques were evaluated on

the four datasets using various accuracy indicators

through LOOCV. In short, the main findings related

to the RQs discussed in this paper are as follows:

(RQ1): No conclusive evidence exists for the best encoder
technique to enhance effort estimation performance.
However, empirical results suggest that one-hot
encoding may be a favorable choice among the

KDIR 2023 - 15th International Conference on Knowledge Discovery and Information Retrieval


encoders used in this study. Further experiments are

required to reach a final conclusion on the best encod-

ing technique.

(RQ2): The results show that both single techniques

and the homogeneous ensemble developed in this

study demonstrate similar predictive accuracy levels.

In certain cases, the single KNN technique outper-

forms the ensemble technique, regardless of the en-

coder used for dataset processing.
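For readers unfamiliar with the four encoders compared in this study (one-hot, label, count, and target), the following minimal Python sketch shows one common definition of each, applied to a single categorical column. The function names, the example column, and the effort values are hypothetical. The variants used in the experiments may differ, for example in how categories are ordered or whether the target encoder applies smoothing.

```python
from collections import Counter, defaultdict


def one_hot_encode(values):
    """One binary column per category, with categories in sorted order."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


def label_encode(values):
    """Replace each category by an integer code (sorted order, an assumption)."""
    codes = {c: i for i, c in enumerate(sorted(set(values)))}
    return [codes[v] for v in values]


def count_encode(values):
    """Replace each category by the number of times it occurs."""
    counts = Counter(values)
    return [counts[v] for v in values]


def target_encode(values, targets):
    """Replace each category by the mean target value observed for it.

    No smoothing is applied. In practice the means should be computed on
    training data only, to avoid leaking the target into the features.
    """
    sums = defaultdict(float)
    counts = Counter(values)
    for v, t in zip(values, targets):
        sums[v] += t
    return [sums[v] / counts[v] for v in values]


# Hypothetical categorical cost driver and effort values.
language = ["java", "cobol", "java", "c"]
effort = [100.0, 300.0, 200.0, 50.0]

one_hot = one_hot_encode(language)
labels = label_encode(language)
counts = count_encode(language)
target_means = target_encode(language, effort)
print(one_hot, labels, counts, target_means)
```

One-hot encoding is the only one of the four that adds columns (one per category). The other three keep a single numeric column, which implicitly imposes an order or a magnitude on the categories.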

Exploring alternative encoding techniques for pro-

cessing categorical data in SDEE datasets is an im-

portant research direction. Additionally, investigating

heterogeneous ensembles that incorporate different

ML techniques trained on various processed datasets

is crucial to determine whether encoder techniques

can serve as a source of diversity in ensemble ap-

proaches.

REFERENCES

Ali, A. and Gravino, C. (2019). A systematic literature

review of software effort prediction using machine

learning methods. Journal of software: evolution and

process, 31(10):e2211.

Amazal, F. A. and Idri, A. (2019). Handling of categori-

cal data in software development effort estimation: a

systematic mapping study. In 2019 Federated Confer-

ence on Computer Science and Information Systems

(FedCSIS), pages 763–770. IEEE.

Angelis, L., Stamelos, I., and Morisio, M. (2001). Building

a software cost estimation model based on categorical

data. In Proceedings Seventh International Software

Metrics Symposium, pages 4–15. IEEE.

Azhar, D., Riddle, P., Mendes, E., Mittas, N., and Ange-

lis, L. (2013). Using ensembles for web effort es-

timation. In 2013 ACM/IEEE International Sympo-

sium on Empirical Software Engineering and Mea-

surement, pages 173–182. IEEE.

Breskuvienė, D. and Dzemyda, G. (2023). Categorical
feature encoding techniques for improved classifier
performance when dealing with imbalanced data of
fraudulent transactions. International Journal of
Computers Communications & Control, 18(3).

Cabral, J. T. H. d. A., Oliveira, A. L., and da Silva, F. Q.

(2023). Ensemble effort estimation: An updated and

extended systematic literature review. Journal of Sys-

tems and Software, 195:111542.

De La Bourdonnaye, F. and Daniel, F. (2021). Evalu-

ating categorical encoding methods on a real credit

card fraud detection database. arXiv preprint

arXiv:2112.12024.

Foss, T., Stensrud, E., Kitchenham, B., and Myrtveit, I.

(2003). A simulation study of the model evaluation

criterion mmre. IEEE Transactions on software engi-

neering, 29(11):985–995.

Hosni, M., Idri, A., and Abran, A. (2018a). Improved ef-

fort estimation of heterogeneous ensembles using fil-

ter feature selection. In ICSOFT, pages 439–446.

Hosni, M., Idri, A., Abran, A., and Nassif, A. B. (2018b).

On the value of parameter tuning in heterogeneous en-

sembles effort estimation. Soft Computing, 22:5977–

6010.

Idri, A., Hosni, M., and Abran, A. (2016). Systematic liter-

ature review of ensemble effort estimation. Journal of

Systems and Software, 118:151–175.

Jorgensen, M. and Shepperd, M. (2006). A systematic re-

view of software development cost estimation stud-

ies. IEEE Transactions on software engineering,

33(1):33–53.

Kocaguneli, E., Kultur, Y., and Bener, A. (2009). Com-

bining multiple learners induced on multiple datasets

for software effort prediction. In International Sym-

posium on Software Reliability Engineering (ISSRE).

Kocaguneli, E. and Menzies, T. (2013). Software effort

models should be assessed via leave-one-out valida-

tion. Journal of Systems and Software, 86(7):1879–

1890.

Kocaguneli, E., Menzies, T., and Keung, J. W. (2011). On

the value of ensemble effort estimation. IEEE Trans-

actions on Software Engineering, 38(6):1403–1416.

Li, J., Ruhe, G., Al-Emran, A., and Richter, M. M. (2007).

A flexible method for software effort estimation by

analogy. Empirical Software Engineering, 12:65–106.

Miyazaki, Y., Takanou, A., Nozaki, H., Nakagawa, N., and

Okada, K. (1991). Method to estimate parameter val-

ues in software prediction models. Information and

Software Technology, 33(3):239–243.

Oliveira, A. L., Braga, P. L., Lima, R. M., and Cornélio,
M. L. (2010). GA-based method for feature selection
and parameters optimization for machine learning
regression applied to software effort estimation.
Information and Software Technology, 52(11):1155–1166.

Wen, J., Li, S., Lin, Z., Hu, Y., and Huang, C. (2012). Sys-

tematic literature review of machine learning based

software development effort estimation models. In-

formation and Software Technology, 54(1):41–59.
