Deep Learning for Active Robotic Perception

Nikolaos Passalis^a, Pavlos Tosidis, Theodoros Manousis and Anastasios Tefas^b

Computational Intelligence and Deep Learning Group, AIIA Lab.,

Department of Informatics, Aristotle University of Thessaloniki, Greece

Keywords:

Active Perception, Deep Learning, Active Vision, Active Robotic Perception.

Abstract:

Deep Learning (DL) has brought significant advancements in recent years, greatly enhancing various challeng-

ing computer vision tasks. These tasks include but are not limited to object detection and recognition, scene

segmentation, and face recognition, among others. DL’s advanced perception capabilities have also paved

the way for powerful tools in the realm of robotics, resulting in remarkable applications such as autonomous

vehicles, drones, and robots capable of seamless interaction with humans, e.g., in collaborative manufacturing. However, despite these remarkable achievements of DL within these domains, a significant limitation

persists: most existing methods adhere to a static inference paradigm inherited from traditional computer vi-

sion pipelines. Indeed, DL models typically perform inference on a fixed and static input, ignoring the fact

that robots possess the capability to interact with their environment to gain a better understanding of their

surroundings. This process, known as “active perception”, closely mirrors how humans and various animals

interact with and comprehend their environment. For instance, humans tend to examine objects from different angles when uncertain, while some animals have specialized muscles that allow them to orient their ears

towards the source of an auditory signal. Active perception offers numerous advantages, enhancing both the

accuracy and efficiency of the perception process. However, incorporating deep learning and active perception in robotics also comes with several challenges: the training process often requires interactive simulation environments and more advanced approaches, such as deep reinforcement learning, and the deployment pipelines must be modified to enable control within the perception algorithms.

In this paper, we review recent advances in deep learning that facilitate active perception across

various robotics applications and provide key application examples. These applications range from face

recognition and pose estimation to object detection and real-time high-resolution analysis.

1 INTRODUCTION

In recent years, Deep Learning (DL) has led to signif-

icant advancements in a range of challenging com-

puter vision and robotic perception tasks (LeCun

et al., 2015). These tasks encompass but are not re-

stricted to object detection and recognition (Redmon

et al., 2016), scene segmentation (Badrinarayanan

et al., 2017), and face recognition (Wen et al., 2016),

among others. DL’s sophisticated perception capabil-

ities have also yielded potent tools for diverse robotics

applications, resulting in the emergence of impres-

sive use cases, such as self-driving vehicles (Bojarski

et al., 2016), unmanned aerial vehicles (drones) (Passalis et al., 2018), and robots capable of seamless interaction with humans, notably in collaborative manufacturing scenarios (Liu et al., 2019).

^a https://orcid.org/0000-0003-1177-9139

^b https://orcid.org/0000-0003-1288-3667

Despite the recent accomplishments of DL in

these domains, a notable limitation plagues most ex-

isting approaches since they adhere to a static infer-

ence paradigm, which follows the traditional com-

puter vision pipeline. Therefore, DL models perform

inference on a fixed and static input, ignoring the abil-

ity of robots, as well as cyber-physical systems (Li,

2018; Loukas et al., 2017), to interact with their en-

vironment in order to enhance their perception. For

example, we can consider the task of face recogni-

tion, where a robot captures a suboptimal profile view

of a subject to be recognized. A conventional static

perception-based DL model may struggle to identify

the subject from a specific angle, particularly if it

lacks training on profile face images for such angles.

However, it is often feasible for the robot to attain a

better and more distinguishing view by adjusting its

position relative to the human subject. Consequently, in such scenarios, the same DL model will likely succeed in recognizing the subject after the robot repositions itself for a more suitable angle. This methodology, known as active perception (Aloimonos, 2013; Bajcsy et al., 2018; Shen and How, 2019), enables the manipulation of the robot or sensor to obtain a clearer and more informative view or signal, ultimately enhancing the perception capabilities and situational awareness of robotic systems. Note that this process closely mirrors how humans and various animals engage with and perceive their surroundings. For instance, humans tend to explore different perspectives when processing complex visual stimuli, while many mammals possess specialized ear muscles that pivot their ears toward the source of an auditory signal in order to acquire a clearer version of the signal (Heffner and Heffner, 1992).

Passalis, N., Tosidis, P., Manousis, T. and Tefas, A.

Deep Learning for Active Robotic Perception.

DOI: 10.5220/0012295800003595

In Proceedings of the 15th International Joint Conference on Computational Intelligence (IJCCI 2023), pages 15-21

ISBN: 978-989-758-674-3; ISSN: 2184-3236

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

A number of recent, although relatively basic, ap-

proaches have illustrated that active perception can

indeed enhance the perceptual capabilities of various

models. For example, works such as (Ammirato

et al., 2017) and (Passalis and Tefas, 2020), demon-

strated that developing a deep learning system that

predicts the next best move for a robot can signif-

icantly improve the accuracy of various perception

tasks, such as object detection and face recognition,

where the viewing angle, occlusions and the scale of

each object can have a significant effect on the percep-

tion accuracy. Similar findings have also been doc-

umented in more recent research spanning a variety

of domains (Han et al., 2019; Tosidis et al., 2022;

Kakaletsis and Nikolaidis, 2023). It is also important to emphasize that active perception methodologies can enable the development of less computationally intensive deep learning models, because these models are trained to address a less complex problem. For example, in (Passalis and

Tefas, 2020), it is demonstrated that more lightweight

face recognition models can be used when DL mod-

els can actively interact with the environment in order

to acquire a more informative frontal view of the sub-

jects.

However, training active perception models differs significantly from traditional static perception approaches, since models must also learn the dynamics of the perception process in order to provide control feedback. For example, an active face

recognition model should also learn how perception

accuracy varies as the robot moves around a subject,

as well as the direction in which a robot should move

in order to improve the accuracy of face recognition.

Therefore, it becomes clear that training active per-

ception models introduces additional challenges, both

with respect to acquiring the necessary data for train-

ing, as well as for extending the traditional (usually

supervised) learning pipelines to support such setups.

The aim of this paper is to introduce the main approaches used for training DL-based active perception models for different applications. To this end, we will first present and discuss

the different options for acquiring the necessary data

used for training active perception models. Next, we

will present different training approaches that extend

traditional supervised learning methods for active per-

ception or employ reinforcement learning methods

to provide active perception feedback. Finally, we

will discuss applications in various fields related to

robotics, as well as discuss implications and practical

issues.

The rest of this paper is structured as follows.

First, in Section 2 we present the different method-

ologies for acquiring data for active perception, while

in Section 3 we present different learning approaches

that are used for training active perception DL models. Then, in Section 4, we provide an overview of active perception methods for different applications. Finally, Section 5 concludes this paper.

2 DATA FOR ACTIVE PERCEPTION

As discussed in Section 1, training active perception

models requires a shift from traditional static percep-

tion methods, presenting a distinctive challenge. This

distinction arises from the necessity for active percep-

tion models to not only grasp the static aspects of ob-

ject recognition but also to encompass the dynamics

inherent in the perception process, allowing them to

generate control feedback. For instance, when con-

sidering an active face recognition model, the model

should acquire knowledge concerning the optimal di-

rection in which the robot should navigate to enhance

the accuracy of face recognition. Unfortunately, a significant portion of the available datasets does not inherently support training models for active perception tasks. Current literature can be roughly categorized into three distinct methodologies for obtaining data

suitable for active perception: a) simulation-based

training, b) multi-view dataset-based training, and c)

on-demand (synthetic) data generation. An overview

of the different approaches, along with benefits and

drawbacks, is provided in Table 1.

Ideally, an active perception model would learn

as it interacts with its environment. However, get-

ting ground truth data in real-time is typically infea-

sible. Therefore, in most cases, active perception

IJCCI 2023 - 15th International Joint Conference on Computational Intelligence

16

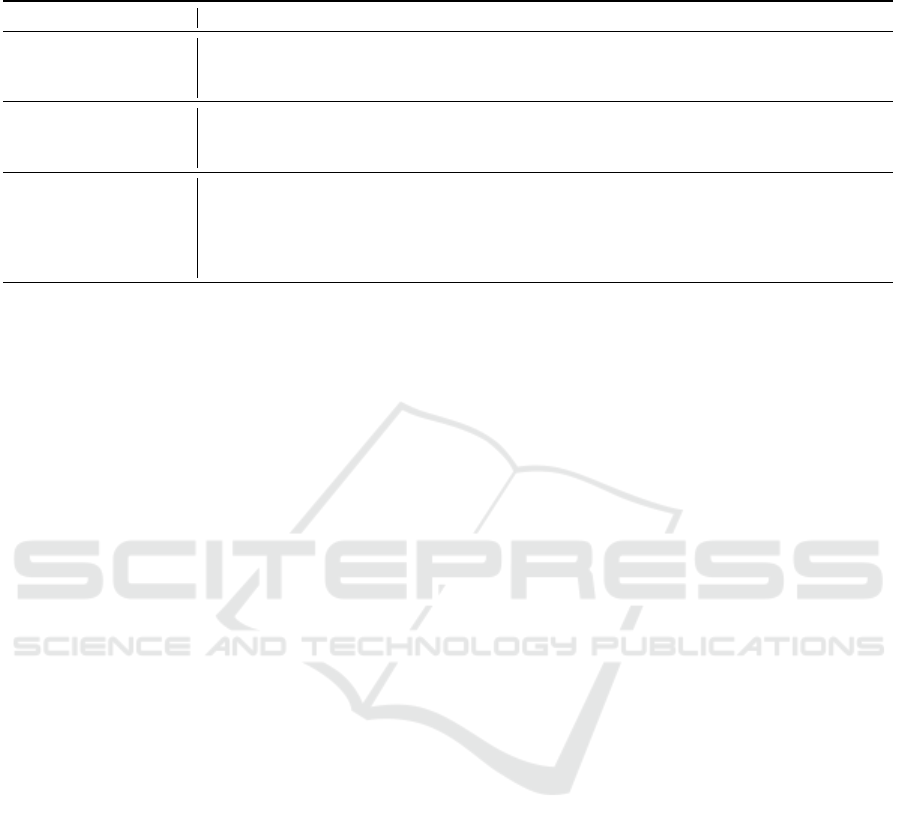

Table 1: Comparing different approaches that can be used for acquiring data that can be used for training active perception

models.

Approach Benefits Drawbacks Examples

Simulation-based train-

ing

flexible, any movement

can be simulated

computationally-demanding,

sim-to-real gap

(Ginargyros et al., 2023; Tzi-

mas et al., 2020; Tosidis et al.,

2022)

Multi-view dataset-

based training

real data used, no sim-

to-real gap

limited flexibility, limited num-

ber of control actions, missing

data

(Passalis and Tefas, 2020;

Georgiadis et al., 2023)

On-demand (synthetic)

data generation through

manipulation

less susceptible to sim-

to-real gap, faster than

simulation-based train-

ing

less accurate simulation of con-

trol actions, perception dynam-

ics might not be accurately

modeled

(Dimaridou et al., 2023;

Passalis and Tefas, 2021;

Kakaletsis and Nikolaidis,

2023; Manousis et al., 2023;

Bozinis et al., 2021)

models are trained in an offline fashion. The first

category of methods employs realistic simulation en-

vironments, e.g., as in (Tosidis et al., 2022; Ginar-

gyros et al., 2023), in order to simulate the effect

of various movements and allow the agent to learn

how perception accuracy varies when performing dif-

ferent actions. This approach provides great flexi-

bility since any action can be simulated and the ef-

fect of the movement of a robot can be easily ob-

tained. However, such approaches are computation-

ally demanding, since they rely on realistic simula-

tion environments and graphics engines, such as We-

bots (Michel, 2004) and Unity (Haas, 2014), slow-

ing down the training process. Furthermore, these

approaches are also hindered by the so-called “sim-

to-real” gap (Zhao et al., 2020), since the agents are

trained using data generated by a simulator.

The second category of approaches, called “multi-

view dataset-based training” in this paper, employs

datasets that contain multiple views of the same

scene. In this way, the effect of various movements

can be quantified by fetching the view that would

correspond to the result of the said movement. For

example, in (Passalis and Tefas, 2020), the multiple

views around a person are used to simulate the effect

of an agent moving around, enabling training active

perception models that learn how to maximize face

recognition accuracy. Such approaches can overcome

the issues of computational complexity and the “sim-

to-real” gap. However, they are often too restrictive,

since the datasets should already contain the images

that can be used for every possible action an agent

can perform. This often leads to huge datasets, as well

as to agents that can be trained for a limited number

of control actions. Furthermore, such approaches often have to handle missing data, since the corresponding multi-view datasets are, in many cases, incomplete.
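The idea of fetching the view that a movement would produce can be sketched in a few lines. This is only an illustration under simplifying assumptions, not the implementation of any cited work: the class name `MultiViewScene`, the layout that indexes views by pan angle, the 30-degree step, and the three discrete actions are all hypothetical.

```python
from typing import Dict, Optional


class MultiViewScene:
    """Hypothetical wrapper around one subject captured from several angles."""

    def __init__(self, views: Dict[int, str], step_deg: int = 30):
        # views maps a pan angle in degrees to an image identifier (e.g. a file path).
        self.views = views
        self.step_deg = step_deg

    def move(self, angle: int, action: int) -> Optional[int]:
        """Return the angle reached by an action (-1: left, 0: stay, +1: right).

        Returns None when the dataset has no image for the target angle,
        which corresponds to the missing-data case of multi-view datasets.
        """
        target = (angle + action * self.step_deg) % 360
        return target if target in self.views else None


# Toy dataset with three captured views (assumed file names).
scene = MultiViewScene({0: "front.png", 30: "right30.png", 330: "left30.png"})
reached = scene.move(0, +1)    # the view at 30 degrees exists in the dataset
missing = scene.move(30, +1)   # no image was captured at 60 degrees
```

In such a setup, the set of actions an agent can be trained on is bounded by the views that were actually captured, which is the restriction noted in the text.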

Finally, methods that generate “on-demand” data

have also been proposed. Such approaches can try

to simulate the effect of various movements start-

ing from real data and then appropriately manipulat-

ing the data, e.g., simulating occlusions (Dimaridou

et al., 2023). Another approach is to generate multi-

ple views that can then be used either for deciding the

best course of action or training the agent (Kakaletsis

and Nikolaidis, 2023). These approaches fall in be-

tween simulation-based and multi-view dataset-based

approaches since they employ real images that have

been appropriately manipulated to simulate the effect

of active perception. Therefore, even though they are

less susceptible to the sim-to-real gap and they are

typically faster, they often provide less accuracy in

simulating the effect of active perception feedback,

leading to models that might fail to capture all details

of the dynamics of the active perception process.
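As a rough illustration of on-demand data generation, the sketch below overwrites a randomly placed rectangle of a real image to mimic an occluder. It is a simplified stand-in rather than the procedure of (Dimaridou et al., 2023): the function names, the rectangular occluder, the constant fill value, and the list-of-lists image representation are assumptions made for this example.

```python
import random
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel intensities


def occlude(image: Image, top: int, left: int, height: int, width: int, fill: int = 0) -> Image:
    """Return a copy of the image with a rectangular patch overwritten by `fill`."""
    out = [row[:] for row in image]
    for r in range(max(top, 0), min(top + height, len(out))):
        for c in range(max(left, 0), min(left + width, len(out[r]))):
            out[r][c] = fill
    return out


def random_occlusion(image: Image, max_fraction: float, rng: random.Random) -> Image:
    """Occlude a randomly placed patch spanning at most `max_fraction` of each side."""
    rows, cols = len(image), len(image[0])
    height = rng.randint(1, max(1, int(rows * max_fraction)))
    width = rng.randint(1, max(1, int(cols * max_fraction)))
    top = rng.randint(0, rows - height)
    left = rng.randint(0, cols - width)
    return occlude(image, top, left, height, width)


face = [[255] * 8 for _ in range(8)]  # placeholder for a real face image
occluded = random_occlusion(face, max_fraction=0.5, rng=random.Random(0))
```

Because the occluder position is known, a training target (e.g., a movement that would uncover the face) could be derived from it, although how well such a manipulation reflects real perception dynamics is exactly the limitation discussed above.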

3 LEARNING METHODOLOGIES FOR ACTIVE PERCEPTION

Training active perception models also departs from

the typical supervised learning approach that is fol-

lowed in many perception applications, such as face

recognition (Wen et al., 2016), object detection (Red-

mon et al., 2016) and pose estimation (Zheng et al.,

2023). Active perception models should not only

analyze and understand their input but also provide

some kind of control feedback that can subsequently be used for improving perception accuracy.

Therefore, they tend to incorporate elements typi-

cally found in planning (Sun et al., 2021) and con-

trol (Tsounis et al., 2020) approaches used in robotics

applications. The degree to which such elements

are part of each model depends on the specific ap-

plication requirements. In recent literature, two ap-

Deep Learning for Active Robotic Perception

17

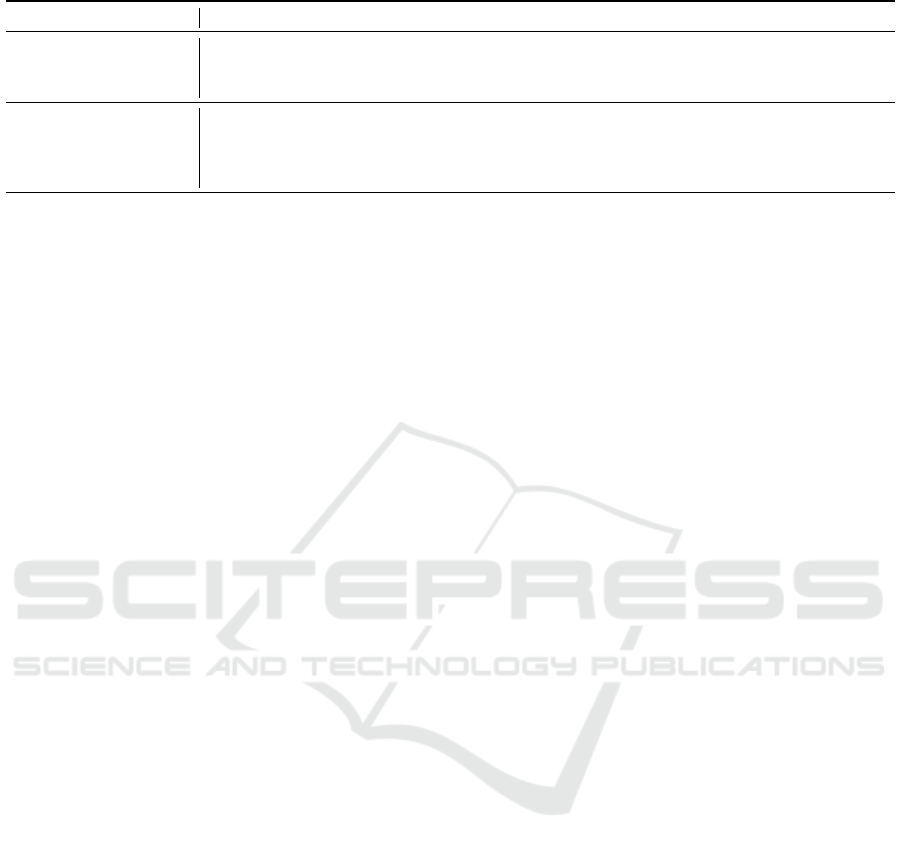

Table 2: Comparing different learning paradigms that can be used training active perception models.

Approach Benefits Drawbacks Examples

Deep Reinforcement

Learning

directly optimizes the

active perception model

slow convergence, low sample

efficiency, (usually) requires

simulation environments

(Bozinis et al., 2021; Tzimas

et al., 2020; Tosidis et al., 2022)

Supervised Learning can work with any

kind of data, easier and

faster to train

requires carefully design

heuristics to construct ground

truth data

(Passalis and Tefas, 2020; Gi-

nargyros et al., 2023; Dimari-

dou et al., 2023; Manousis

et al., 2023)

proaches are prevalent: a) deep reinforcement learn-

ing (DRL)-based training and b) supervised training

through carefully designed ground truth. An overview

of these two different approaches, along with benefits

and drawbacks, is provided in Table 2.

DRL has achieved remarkable progress in recent

years, surpassing human performance in many

cases (Mnih et al., 2013). Such approaches naturally

fit active perception, since they enable models to learn

how to provide control feedback to maximize percep-

tion accuracy through the interaction with an environ-

ment. Such approaches almost exclusively require

the use of a simulation environment to be trained.

Even though DRL methods enable discovering com-

plex policies that can directly optimize the objective

at hand, i.e., perception accuracy, they suffer from

low sample efficiency, long training times, and un-

stable convergence (Buckman et al., 2018). On the

other hand, the supervised method typically follows

an “imitation” learning training paradigm (Hua et al.,

2021), where the best actions to be performed are

found through an extensive search in the action space.

This is better understood with the following example.

A DRL agent trained to perform active face recognition, e.g., (Tosidis et al., 2022), would learn using the

reward signal from the environment, e.g., confidence

in correctly recognizing a person. On the other hand,

a supervised approach, such as (Passalis and Tefas,

2020), would first require simulating the effect of

various movements/actions and then provide ground

truth data on which action the agent should perform at

each step. This also enables supervised approaches to

work with any kind of data available, since the actions

to be evaluated can be dictated by the capacity of the

dataset to support the corresponding action. There-

fore, even though supervised approaches can provide

more stable and faster convergence and typically do

not require a complex simulation environment, they

rely on hand-crafted, heuristic-based approaches for

constructing the ground truth data.
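The contrast between the two paradigms can be made concrete with a toy example. The sketch below is an assumption-laden illustration, not the procedure of the cited works: `best_action` builds a supervised target by exhaustively searching the action space, while `reward` shows one possible DRL reward (the change in confidence). The confidence table, the angle-based states, and all function names are hypothetical.

```python
from typing import Callable, Dict, Sequence


def best_action(state: int,
                actions: Sequence[int],
                transition: Callable[[int, int], int],
                confidence: Callable[[int], float]) -> int:
    """Exhaustively evaluate every action and return the one whose resulting
    state yields the highest perception confidence (the supervised target)."""
    return max(actions, key=lambda a: confidence(transition(state, a)))


def reward(state: int, action: int,
           transition: Callable[[int, int], int],
           confidence: Callable[[int], float]) -> float:
    """One possible DRL reward: the change in confidence caused by the action."""
    return confidence(transition(state, action)) - confidence(state)


# Toy setup: states are view angles, confidence peaks at the frontal view (0 degrees).
toy_confidence: Dict[int, float] = {-60: 0.2, -30: 0.6, 0: 0.9, 30: 0.6, 60: 0.2}
actions = (-30, 0, 30)


def transition(angle: int, action: int) -> int:
    """Apply a rotation and clip it to the range covered by the toy data."""
    return max(-60, min(60, angle + action))


# Ground truth for supervised training: the best action for every starting view.
labels = {angle: best_action(angle, actions, transition, toy_confidence.__getitem__)
          for angle in toy_confidence}
```

Here the supervised labels point every view toward the frontal one, whereas a DRL agent would have to discover the same behaviour from the reward signal alone. The one-step search used for the labels is itself a heuristic, which reflects the drawback listed in Table 2.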

4 ACTIVE PERCEPTION FOR ROBOTIC APPLICATIONS

Several recent active perception approaches have

been proposed for a variety of different applications.

In the rest of this Section, we briefly overview meth-

ods proposed for different applications, as well as

discuss practical issues that often arise in robotics.

Among the most prominent applications of active per-

ception is face recognition. Indeed, early DL-based

approaches extended embedding-based face recognition methods into active ones by including an additional head that predicts the next best movement that

a robot should perform in order to increase face recog-

nition confidence (Passalis and Tefas, 2020). This ap-

proach assumes that the robot moves on a predefined

trajectory around the target in order to be compati-

ble with the multi-view dataset employed. Then, the

model is trained both to maximize face recognition confidence, following a contrastive learning objective, and to regress the direction of movement

leading to the best face recognition accuracy. Note

that this direction is calculated by leveraging the mul-

tiple views available in the dataset and then selecting

the one that maximizes the confidence for the next

active perception step. The experimental evaluation

demonstrated the effectiveness of this approach over

static perception for a variety of different active per-

ception steps. However, this approach used a dataset

with a small number of individuals and a relatively

small number of possible control movements. Later

methods, such as (Dimaridou et al., 2023), build upon

this approach by a) simulating the effect of various oc-

clusions on large-scale face recognition datasets, and

b) regressing both the direction and distance the robot

should move.

A simple approach for training a DRL agent

to perform drone control in order to acquire frontal

views that can be used for face recognition was ini-

tially proposed in (Tzimas et al., 2020), highlighting

the potential of DRL methods for active perception

tasks. A more sophisticated approach was also re-


cently proposed building upon DRL in (Tosidis et al.,

2022). This approach leverages a realistic simulation

environment, built using Webots (Michel, 2004), and

directly trains a DRL agent to perform control in a

drone that flies around humans in order to maximize

face recognition confidence. The experimental eval-

uation demonstrated that the trained agent was able

to perform control in a variety of different situations.

However, the sim-to-real gap remains with DRL ap-

proaches, which can be a limiting factor in directly

applying such approaches in real applications.

A supervised approach was also proposed for ob-

ject detection in (Ginargyros et al., 2023), where a

rich dataset for potential movements was built us-

ing a simulation environment. This approach enabled

the models to learn the object detection confidence

manifold for different types of objects, e.g., cars and

humans, while taking into account possible occlu-

sions, allowing them to perform control tailored to the

unique characteristics of different cases. To this end, a

separate navigation proposal network was trained ac-

cording to the confidence manifold of each object, en-

abling the model to learn to propose trajectories that

will maximize object detection confidence. At the

same time, this paper also revealed limitations that

are often intrinsic to the current state-of-the-art ob-

ject detection models, since it provided a structured

approach for revealing the confidence manifold of ob-

ject detectors. A dataset that can support active vision

for object detection was also proposed in (Ammirato

et al., 2017), and a DRL agent was also trained and

evaluated. This paper demonstrated that it is possible,

given the appropriate dataset and annotations, to di-

rectly train DRL agents to perform control for active

vision tasks.

Another line of research focuses on performing

virtual control, i.e., not physically altering the posi-

tion of a robot or the parameters of a physical sen-

sor, but rather selectively analyzing specific parts of

the input in order to improve perception accuracy,

while reducing the computational load. Such ap-

proaches can be especially useful in cases where high-

resolution input images must be analyzed, while the

object of interest lies only in a small area within the

input. An especially promising approach was pre-

sented in (Manousis et al., 2023), where the heat

map extracted from a low-resolution version of a

high-resolution image was used to drive the percep-

tion process. To this end, the proposed method first

identified a region of interest in the original image

by looking for potential activations (i.e., parts where

the DL model detects something, but not necessarily with high confidence), in a low-resolution version of the input, and then performed targeted cropping into the high-resolution image in order to select the area that needs to be analyzed. The experimental evaluation demonstrated that significant accuracy and speed improvements can be obtained using the proposed method. However, a limitation of such approaches is that as the size of the region of interest grows, the performance benefit obtained using active perception becomes smaller. It is worth noting that such approaches can also be easily adjusted to perform control of the parameters of a camera, e.g., physical zoom, in order to acquire signals that are easier to analyze.

Figure 1: Active perception outputs can be represented in a homogeneous way using an application agnostic control specification defined by OpenDR (Passalis et al., 2022).
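The virtual control idea (locate weak activations at low resolution, then crop the high-resolution input) can be sketched as follows. This is a minimal illustration and not the method of (Manousis et al., 2023): the threshold value, the integer scale factor, the single bounding box, and all function names are assumptions.

```python
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def region_of_interest(heatmap: List[List[float]], threshold: float) -> Optional[Box]:
    """Bounding box of all low-resolution cells whose activation exceeds the threshold."""
    hits = [(r, c) for r, row in enumerate(heatmap)
            for c, value in enumerate(row) if value > threshold]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows) + 1, max(cols) + 1


def scale_box(box: Box, scale: int) -> Box:
    """Map a box from heat-map coordinates to high-resolution pixel coordinates."""
    top, left, bottom, right = box
    return top * scale, left * scale, bottom * scale, right * scale


def crop(image: List[List[int]], box: Box) -> List[List[int]]:
    """Cut the boxed area out of the high-resolution image."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


# Toy heat map from a low-resolution pass; the threshold is deliberately low so that
# weak activations still count as potential detections.
heatmap = [[0.0, 0.1, 0.0, 0.0],
           [0.0, 0.4, 0.3, 0.0],
           [0.0, 0.0, 0.0, 0.0]]
high_res = [[r * 16 + c for c in range(16)] for r in range(12)]  # 4x the heat-map size

box = region_of_interest(heatmap, threshold=0.2)
patch = crop(high_res, scale_box(box, scale=4))
```

Only the cropped patch would then be passed to the full-resolution model. As the region of interest approaches the size of the whole image, the patch grows accordingly and the computational saving shrinks.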

Another significant issue when implementing active perception models is the need for a common

way of expressing the outcomes of active perception.

This is especially important, since in many robotics

systems, different models might be employed for dif-

ferent perception tasks. Having to handle a com-

pletely different form of output for different models

significantly complicates the development process.

The OpenDR toolkit (Passalis et al., 2022) provides

a common application agnostic control specification

for standardizing such active perception outputs. This

specification ensures that algorithms designed for ac-

tive perception can effectively process the result. To

this end, four control axes have been identified, as

shown in Fig. 1. For all axes, it is assumed that the

robot moves in a sphere and a real value from −1 to

1 is provided for the movement on each axis. Expressing the output of active perception approaches in this way can simplify the development of active perception-enabled robotics systems by enabling the efficient re-use of components related to handling and executing the feedback provided by active perception algorithms.
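A common output format of this kind could look like the sketch below. It is not the OpenDR API: the class `ControlFeedback`, the helper `to_feedback`, and the decision to clamp out-of-range values are hypothetical. The only facts taken from the text are that there are four control axes and that each carries a real value from −1 to 1. The axes are left unnamed because their meaning is defined in Fig. 1 rather than in the text.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


def _clamp(value: float) -> float:
    """Restrict a control value to the [-1, 1] range used by the specification."""
    return max(-1.0, min(1.0, float(value)))


@dataclass(frozen=True)
class ControlFeedback:
    """Hypothetical container holding one normalized value per control axis."""
    axes: Tuple[float, ...]

    def __post_init__(self):
        if len(self.axes) != 4:
            raise ValueError("expected exactly four control axes")
        # The dataclass is frozen, so the normalized tuple is stored via object.__setattr__.
        object.__setattr__(self, "axes", tuple(_clamp(v) for v in self.axes))


def to_feedback(raw_outputs: Iterable[float]) -> ControlFeedback:
    """Wrap the raw outputs of any active perception model in the common format."""
    return ControlFeedback(tuple(raw_outputs))


# Assumed raw model outputs; the second value is out of range and gets clamped.
feedback = to_feedback([0.25, -1.7, 0.0, 1.0])
```

With such a container, the component that executes movements only needs to understand one structure, regardless of which perception model produced the feedback.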


5 CONCLUSIONS

DL has revolutionized computer vision and robotics

by enabling remarkable advancements in perception

tasks. However, as discussed in this paper, a signif-

icant limitation persists in many existing DL-based

systems: the static inference paradigm. Most DL

models operate on fixed, static inputs, neglecting the

potential benefits of active perception – a process that

mimics how humans and certain animals interact with

their environment to better understand it. Active per-

ception offers advantages in terms of accuracy and ef-

ficiency, making it a crucial area of exploration for

enhancing robotic perception. While the incorpora-

tion of deep learning and active perception in robotics

presents numerous opportunities, it also poses sev-

eral challenges. Training often necessitates interac-

tive simulation environments and more advanced ap-

proaches like deep reinforcement learning. Moreover,

deployment pipelines need to be adapted to enable

control within perception algorithms. These chal-

lenges highlight the importance of ongoing research

and development in this field.

ACKNOWLEDGMENTS

This work was supported by the European Union’s

Horizon 2020 Research and Innovation Program

(OpenDR) under Grant 871449. This publication re-

flects the authors’ views only. The European Com-

mission is not responsible for any use that may be

made of the information it contains.

REFERENCES

Aloimonos, Y. (2013). Active perception. Psychology Press.

Ammirato, P., Poirson, P., Park, E., Košecká, J., and Berg,

A. C. (2017). A dataset for developing and bench-

marking active vision. In Proceedings of the IEEE In-

ternational Conference on Robotics and Automation,

pages 1378–1385.

Badrinarayanan, V., Kendall, A., and Cipolla, R. (2017).

Segnet: A deep convolutional encoder-decoder ar-

chitecture for image segmentation. IEEE Transac-

tions on Pattern Analysis and Machine Intelligence,

39(12):2481–2495.

Bajcsy, R., Aloimonos, Y., and Tsotsos, J. K. (2018).

Revisiting active perception. Autonomous Robots,

42(2):177–196.

Bojarski, M., Del Testa, D., Dworakowski, D., Firner,

B., Flepp, B., Goyal, P., Jackel, L. D., Monfort,

M., Muller, U., Zhang, J., et al. (2016). End to

end learning for self-driving cars. arXiv preprint

arXiv:1604.07316.

Bozinis, T., Passalis, N., and Tefas, A. (2021). Improv-

ing visual question answering using active perception

on static images. In Proceedings of the International

Conference on Pattern Recognition, pages 879–884.

Buckman, J., Hafner, D., Tucker, G., Brevdo, E., and Lee,

H. (2018). Sample-efficient reinforcement learning

with stochastic ensemble value expansion. Proceed-

ings of the Advances in Neural Information Process-

ing Systems, 31.

Dimaridou, V., Passalis, N., and Tefas, A. (2023). Deep ac-

tive robotic perception for improving face recognition

under occlusions. In Proceedings of the IEEE Sympo-

sium Series on Computational Intelligence (accepted),

page 1.

Georgiadis, C., Passalis, N., and Nikolaidis, N. (2023). Ac-

tiveface: A synthetic active perception dataset for face

recognition. In Proceedings of the International Work-

shop on Multimedia Signal Processing (accepted),

page 1.

Ginargyros, S., Passalis, N., and Tefas, A. (2023). Deep ac-

tive perception for object detection using navigation

proposals. In Proceedings of the IEEE Symposium Se-

ries on Computational Intelligence (accepted), page 1.

Haas, J. K. (2014). A history of the unity game engine.

Han, X., Liu, H., Sun, F., and Zhang, X. (2019). Active

object detection with multistep action prediction us-

ing deep q-network. IEEE Transactions on Industrial

Informatics, 15(6):3723–3731.

Heffner, R. S. and Heffner, H. E. (1992). Evolution of sound

localization in mammals. In The evolutionary biology

of hearing, pages 691–715.

Hua, J., Zeng, L., Li, G., and Ju, Z. (2021). Learning for

a robot: Deep reinforcement learning, imitation learn-

ing, transfer learning. Sensors, 21(4):1278.

Kakaletsis, E. and Nikolaidis, N. (2023). Using synthesized

facial views for active face recognition. Machine Vi-

sion and Applications, 34(4):62.

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learn-

ing. Nature, 521(7553):436–444.

Li, J.-h. (2018). Cyber security meets artificial intelligence:

a survey. Frontiers of Information Technology & Elec-

tronic Engineering, 19(12):1462–1474.

Liu, Q., Liu, Z., Xu, W., Tang, Q., Zhou, Z., and Pham, D. T.

(2019). Human-robot collaboration in disassembly for

sustainable manufacturing. International Journal of

Production Research, 57(12):4027–4044.

Loukas, G., Vuong, T., Heartfield, R., Sakellari, G., Yoon,

Y., and Gan, D. (2017). Cloud-based cyber-physical

intrusion detection for vehicles using deep learning.

IEEE Access, 6:3491–3508.

Manousis, T., Passalis, N., and Tefas, A. (2023). Enabling

high-resolution pose estimation in real time using ac-

tive perception. In Proceedings of the IEEE Interna-

tional Conference on Image Processing, pages 2425–

2429.

Michel, O. (2004). Cyberbotics ltd. webots™: professional

mobile robot simulation. International Journal of Ad-

vanced Robotic Systems, 1(1):5.

Mnih, V., Kavukcuoglu, K., Silver, D., Graves, A.,

Antonoglou, I., Wierstra, D., and Riedmiller, M.


(2013). Playing atari with deep reinforcement learn-

ing. arXiv preprint arXiv:1312.5602.

Passalis, N., Pedrazzi, S., Babuska, R., Burgard, W., Dias,

D., Ferro, F., Gabbouj, M., Green, O., Iosifidis, A.,

Kayacan, E., et al. (2022). OpenDR: An open toolkit

for enabling high performance, low footprint deep

learning for robotics. In Proceedings of the IEEE/RSJ

International Conference on Intelligent Robots and

Systems, pages 12479–12484.

Passalis, N. and Tefas, A. (2020). Leveraging active percep-

tion for improving embedding-based deep face recog-

nition. In Proceedings of the IEEE International

Workshop on Multimedia Signal Processing, pages 1–

6.

Passalis, N. and Tefas, A. (2021). Pseudo-active vision for

improving deep visual perception through neural sen-

sory refinement. In Proceedings of the IEEE Interna-

tional Conference on Image Processing, pages 2763–

2767.

Passalis, N., Tefas, A., and Pitas, I. (2018). Efficient cam-

era control using 2d visual information for unmanned

aerial vehicle-based cinematography. In Proceedings

of the IEEE International Symposium on Circuits and

Systems, pages 1–5.

Redmon, J., Divvala, S., Girshick, R., and Farhadi, A.

(2016). You only look once: Unified, real-time object

detection. In Proceedings of the IEEE Conference on

Computer Vision and Pattern Recognition, pages 779–

788.

Shen, M. and How, J. P. (2019). Active perception in ad-

versarial scenarios using maximum entropy deep re-

inforcement learning. In Proceedings of the Interna-

tional Conference on Robotics and Automation, pages

3384–3390. IEEE.

Sun, H., Zhang, W., Yu, R., and Zhang, Y. (2021). Motion

planning for mobile robots—focusing on deep rein-

forcement learning: A systematic review. IEEE Ac-

cess, 9:69061–69081.

Tosidis, P., Passalis, N., and Tefas, A. (2022). Active vi-

sion control policies for face recognition using deep

reinforcement learning. In Proceedings of the 30th

European Signal Processing Conference, pages 1087–

1091.

Tsounis, V., Alge, M., Lee, J., Farshidian, F., and Hut-

ter, M. (2020). Deepgait: Planning and control of

quadrupedal gaits using deep reinforcement learning.

IEEE Robotics and Automation Letters, 5(2):3699–

3706.

Tzimas, A., Passalis, N., and Tefas, A. (2020). Leveraging

deep reinforcement learning for active shooting under

open-world setting. In Proceedings of the IEEE Inter-

national Conference on Multimedia and Expo, pages

1–6.

Wen, Y., Zhang, K., Li, Z., and Qiao, Y. (2016). A discrim-

inative feature learning approach for deep face recog-

nition. In Proceedings of the European Conference on

Computer Vision, pages 499–515.

Zhao, W., Queralta, J. P., and Westerlund, T. (2020). Sim-

to-real transfer in deep reinforcement learning for

robotics: a survey. In Proceedings of the IEEE Sym-

posium Series on Computational Intelligence, pages

737–744.

Zheng, C., Wu, W., Chen, C., Yang, T., Zhu, S., Shen, J.,

Kehtarnavaz, N., and Shah, M. (2023). Deep learning-

based human pose estimation: A survey. ACM Com-

puting Surveys, 56(1):1–37.
