Gesture Controlled Device with Vocalizer for Physically Challenged People

Nithin M A¹ᵃ, Kartik Ghanekar¹ᵇ, Prajwal A M¹ᶜ, Prajwal B R¹ᵈ and Tanuja H¹ᵉ

¹ Department of Electrical and Electronics Engineering, Dayananda Sagar College of Engineering, Bengaluru, India

tanuja-eee@dayanandasagar.edu

Keywords: Hand Gesture Vocalizer, Sign Language, Communication of Speech Impaired, Accelerometer Sensor.

Abstract: Hand Gesture Vocalizer is a social project that aims to raise the morale of the speech and hearing impaired by enabling them to communicate better with society. The project addresses the need for an electronic device that can translate sign language to speech in order to remove the communication barrier between the speech impaired and the general public. Motion-controlled vehicles are robots that can be controlled by simple human movements. The robot and the motion device are connected by a wireless radio link. An accelerometer mounted on the glove is used to control the direction of the car's movement. These sensors are designed to replace the traditional remote control used to operate the vehicle. The system allows the user to control forward, reverse, left and right movement while using the same accelerometer sensor to control the vehicle's throttle. The main advantage of this mechanism is that the car can make sharp turns smoothly. The robotic arm is a glove that imitates the movements of the human hand.

1 INTRODUCTION

Humans have the ability to use spoken language to communicate and interact. Unfortunately, not everyone can speak and hear. The sign languages used by people who cannot speak or hear are not understood by the general public. A gesture vocalizer is a glove-based device for interpreting sign language, which combines hand shapes, hand gestures, and facial expressions to make clear what the signer is thinking. Hearing people generally do not use sign language to communicate with speech-impaired people because they do not know the meaning of the signs, whereas signers understand one another.

However, hearing people cannot interpret the sign language used by mute and deaf people, and not everyone can learn the language. Another option is to use a computer or a smartphone as an intermediary: it can take input from a mute person and convert it to text and audio. We use sensor-based technology and have created a module that converts finger movements into sound.

ᵃ https://orcid.org/0009-0005-2540-9798

ᵇ https://orcid.org/0009-0009-3351-129X

ᶜ https://orcid.org/0009-0008-7436-0507

ᵈ https://orcid.org/0009-0006-0900-1407

ᵉ https://orcid.org/0009-0008-9880-132X

A more humane, succinct, and natural approach to human-computer interaction is required for intelligent systems. With human-computer interaction, suitable equipment can perform remote operation duties on unmanned platforms in uncharted regions. Wearing appropriate gear, the driver can wirelessly and remotely control the smart car. Gesture recognition is a new entry point for the evolution of human-computer interaction. The physical device is controlled by converting gestures into signal changes, which assists users in finishing the designated tasks. The entertainment and gaming industries have already used somatosensory-based human-computer interface technologies.

Robots that can be controlled by hand gestures rather than traditional buttons are known as gesture-controlled robots. The user only has to wear a small transmitting device on the hand, such as a glove fitted with an accelerometer. This device sends the robot the proper command, enabling it to carry out the user's instructions.

M A, N., Ghanekar, K., A M, P., B R, P. and H, T.

Gesture Controlled Device with Vocalizer for Physically Challenged People.

DOI: 10.5220/0012504300003808

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Intelligent and Sustainable Power and Energy Systems (ISPES 2023), pages 9-14

ISBN: 978-989-758-689-7

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.


An ADC for analogue-to-digital conversion was integrated in the transmitting device. The encoded data is sent using an RF transmitter module, then received and decoded at the receiving end by an RF receiver module. A microcontroller processes this data next, and the motor driver uses the results to regulate the motors. To keep the task simple and straightforward, the system is divided into two separate modules: a receiver and a transmitter.

2 LITERATURE REVIEW

(Kadam K. et al., 2012), in “American Sign Language Interpreter”, discussed an effective use of a glove for implementing an interactive sign language teaching programme. They believe that, with a glove, signs can be made with greater accuracy and consistency. A glove would also let learners practise sign language without having to be next to a computer.

(M. S. Kasar et al., 2019) developed a smart talking glove for speech-impaired patients and people with disabilities. People with aphasia often communicate in sign language that others cannot understand. The authors created a system to solve this problem, allowing non-speaking patients to communicate with people. A flex sensor mounted on the glove detects finger movements. Depending on the finger movement, the ATmega328 microcontroller displays the corresponding information on the LCD.

(Prerana K. C., 2019) proposed a hand gesture recognition system that reads the sensor values for a particular gesture made by the user and predicts the output for that gesture. The system is not very reliable because a dataset covering all the letters of the alphabet and frequently used words was not created.

(Kunjumon, J., & Megalingam, R. K., 2019) note in their work that communication is a means of conveying information from one place, person or group to another. Voice communication is how most people interact with one another, but some people have a physical disability that prevents them from verbally sharing their thoughts. It is difficult for deaf people to communicate their thoughts and ideas to others. Most people do not know sign language, which makes it difficult for people who cannot speak to communicate with others. There are tools that can convert sign language to text, which can then be used in other languages.

3 METHODOLOGIES

The gesture vocalizer is an ordinary glove on which four fingers carry flex sensors, held in place by tying them to the fingers or with glue. Fixing the sensors in this way helps each one bend correctly and produce the correct voltage drop, which the controller can then sense. The glove used in this system has four flex sensors, one mounted on each of four fingers of the gesture control glove. The output of each flex sensor depends on how far it is bent and therefore on the sign being made.

The sensor output is then sent to the microcontroller, where it is converted to digital format. This value changes as the sensor is bent. The Arduino converts the digitized signal to text and sends it to the Bluetooth module, which is connected to a phone that converts the text to speech.

For the moving vehicle, there is serial communication between two Arduino Nano boards. One Arduino sends the signals, and the other receives them and moves the vehicle accordingly. An accelerometer sensor senses the gesture of the hand. The transmitter sends the signals through a Zigbee RF module, which provides the wireless communication.

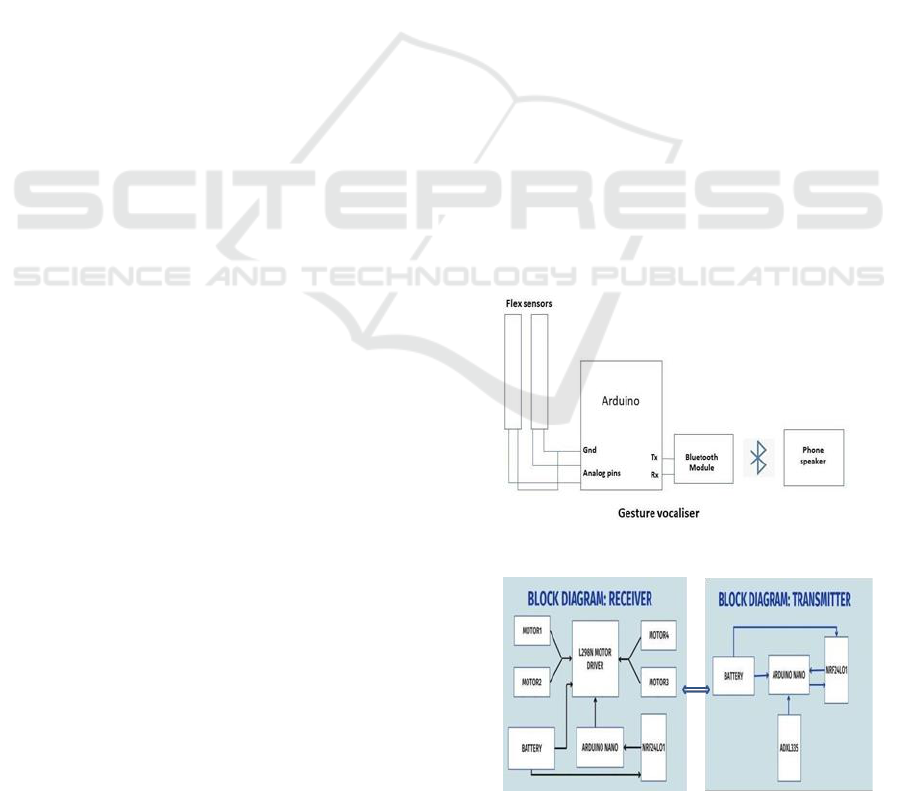

Fig 1. Block diagram for Gesture based vocaliser.

Fig 2. Block diagram for Gesture based moving vehicle.

ISPES 2023 - International Conference on Intelligent and Sustainable Power and Energy Systems


The transmitter circuit is tied to the hand, and the vehicle moves as the hand moves. An L298D motor driver module is used to control the DC motors.

4 TOOLS USED FOR THE PROJECT WORK

BLUETOOTH MODULE (HC-05)

HC-05 is a Bluetooth module designed for transparent wireless serial communication. It is preconfigured as a Bluetooth slave device. When paired with a Bluetooth host device such as a PC, smartphone or tablet, its operation becomes transparent to the user. Bluetooth-enabled devices automatically detect each other when they are close to each other. Bluetooth uses 79 different radio channels in the 2.4 GHz band. This frequency band is also used by Wi-Fi, but Bluetooth transmits at low power and so has little effect on Wi-Fi communication.

4.1 Arduino Uno Microcontroller Board

The hub of the Arduino ecosystem is the commonly

used microcontroller board known as the Arduino

UNO. It's intended to give both novice and seasoned

developers an approachable and user-friendly

platform to build interactive projects and prototypes.

The ATmega328P microcontroller, on which the

Arduino UNO board is based, has a variety of digital

and analogue input/output pins that are simple to

programme and control. It has a 16 MHz quartz

crystal, 6 analogue input pins, 14 digital input/output

pins (of which 6 can be used as PWM outputs), a USB

connection for programming and power, an ICSP

header, and a reset button.

The Arduino UNO allows users to quickly

prototype because of its simple programming

environment and a large library of pre-built

functionality.

4.2 Flex Sensor

A flex sensor, or bend sensor, is a sensor that measures the amount of deflection or bending. Usually, the sensor is attached to a surface, and the resistance of the sensor element changes as the surface bends. Since the resistance is directly proportional to the amount of bending, it is often called a flexible potentiometer. Flex sensors are widely used in research on computer interfaces, rehabilitation, security systems and even music interfaces.
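The link between bending, resistance and the voltage seen by the controller can be sketched as a voltage divider. The Python sketch below is illustrative only: the 5 V supply, the 10 kΩ fixed resistor, the wiring (sensor on the supply side, fixed resistor to ground) and the example resistances are assumptions and are not values reported in this paper. The 10-bit count range matches the ADC of the ATmega328P.

```python
# Illustrative voltage-divider model of one flex sensor.
# All component values and the wiring are assumptions, not measurements.
V_SUPPLY = 5.0        # volts; assumed supply rail
R_FIXED = 10_000.0    # ohms; hypothetical fixed resistor to ground
ADC_MAX = 1023        # full-scale count of a 10-bit ADC


def divider_voltage(r_flex: float) -> float:
    """Voltage across the fixed resistor with the flex sensor on the supply side."""
    return V_SUPPLY * R_FIXED / (R_FIXED + r_flex)


def adc_counts(voltage: float) -> int:
    """Count a 10-bit ADC would report for a voltage, clamped to the valid range."""
    counts = int(voltage / V_SUPPLY * ADC_MAX)
    return max(0, min(ADC_MAX, counts))


if __name__ == "__main__":
    # Hypothetical resistances for a straight and a bent finger.
    for label, r_flex in (("straight", 25_000.0), ("bent", 90_000.0)):
        v = divider_voltage(r_flex)
        print(f"{label}: {v:.2f} V, {adc_counts(v)} counts")
```

With this wiring, a larger bend raises the sensor resistance and lowers the measured voltage; swapping the two resistors would invert that relationship.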

Fig 3. Complete block diagram.

4.3 Zigbee

Zigbee is a communications technology for creating personal area networks with small, low-power radios, for uses such as home appliances, medical data collection, and other low-power, low-bandwidth applications on small devices that require wireless connectivity. The technology outlined in the Zigbee specification is designed to be simpler and cheaper than other wireless personal area networks (WPANs) such as Bluetooth, or more general wireless networks such as Wi-Fi. Zigbee is an international standard that targets short-range networking, long battery life, low cost and efficiency.

4.4 Arduino Nano

Arduino Nano is a small, complete development board based on the ATmega328. It has more or less the same functionality as the Arduino UNO, but in a different package. It lacks only a DC power jack and works with a Mini-B USB cable instead of a standard one. Arduino Nano is a microcontroller-based device with 14 digital input/output pins that can be used for many purposes. It can be used for almost any project, from small to large, and for prototyping new applications.

4.5 Accelerometer

An accelerometer is a device that detects various types of acceleration or vibration. Acceleration is the change in velocity caused by the motion of an object. The accelerometer senses the vibrations produced by a body and uses them to determine the orientation of the body. Accelerometers are just one of many sensors that video telematics systems use to capture important vehicle information. These systems may include multi-axis accelerometers for speed, acceleration and deceleration, gyroscopes to detect rotation and direction, and GPS to detect position. The basic working principle of an accelerometer is to convert mechanical energy into an electrical signal. When a mass attached to the sensing element is displaced, it begins to move, and as soon as it moves it experiences acceleration.

4.6 Motor Driver

A motor is an electrical device that converts electrical energy into mechanical energy. Thus, a motor allows electricity to be used for automatic operation. There are many types of electric motors, including DC motors, stepper motors and servo motors, and each has its own working principle and characteristics. The driver itself is the interface between the motor and the microcontroller. An interface is needed because the microcontroller and the motor operate at different voltage and current levels: the motor draws a higher current than the microcontroller can supply. First, the microcontroller sends the signal to the motor driver. The received signals are then interpreted and amplified in the driver to run the motor. The driver has two power input pins: pin 1 powers the driver itself and pin 2 powers the motor through the motor driver IC.
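The way a dual H-bridge driver turns a drive command into motor directions can be sketched as a lookup table. This is a minimal illustration, not the paper's firmware: the pin order (IN1, IN2 for the left motor, IN3, IN4 for the right motor) and the single-letter commands are assumptions made for the example.

```python
# Direction-pin patterns for a dual H-bridge driving a left and a right DC motor.
# Pin order (IN1, IN2, IN3, IN4) and the command letters are hypothetical.
from typing import Dict, Tuple

PinStates = Tuple[int, int, int, int]

DRIVE_TABLE: Dict[str, PinStates] = {
    "F": (1, 0, 1, 0),  # both motors forward
    "B": (0, 1, 0, 1),  # both motors reverse
    "L": (0, 1, 1, 0),  # left motor reverse, right motor forward: pivot left
    "R": (1, 0, 0, 1),  # left motor forward, right motor reverse: pivot right
    "S": (0, 0, 0, 0),  # both motors off
}


def pins_for_command(command: str) -> PinStates:
    """Return the input-pin states for a command; unknown commands stop the vehicle."""
    return DRIVE_TABLE.get(command, DRIVE_TABLE["S"])


if __name__ == "__main__":
    for cmd in "FBLRS":
        print(cmd, pins_for_command(cmd))
```

Driving the two motors in opposite directions for left and right is one way a two-motor vehicle can make sharp turns; falling back to stop on an unknown command is a defensive choice for a noisy wireless link.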

5 TESTING

For the gesture vocalizer, we tested many combinations of finger gestures. Each finger gesture bends the corresponding flex sensor, and bending changes the resistance of the sensor element. Since the resistance is directly proportional to the amount of bending, the sensor is often called a flexible potentiometer. We therefore tested different combinations and noted the voltage drop across the flex sensors. The glove is intended to be worn by a person who is unable to speak, as shown in the figure below. For each hand gesture we recorded the corresponding voltage drop.

6 SOFTWARE IMPLEMENTATION

We used the Arduino IDE for coding. The code for the gesture vocalizer was written first and uploaded to the microcontroller (Arduino UNO). It reads the output of each flex sensor and stores the value in a variable. If conditions then check whether the value of the variable is greater than the calibrated value; if so, the predefined word is sent to the Bluetooth module. Bluetooth communication was also declared in the code.
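The threshold logic described above can be sketched in a few lines. The sketch is written in Python for readability rather than as Arduino firmware, and it rests on assumptions: the calibrated values and the assignment of words to fingers are hypothetical, and only the words themselves are taken from the labels shown with the result figures.

```python
# Sketch of the threshold logic: one flex reading per finger, one predefined word per finger.
# Threshold values and the finger-to-word assignment are hypothetical.
from typing import Dict, List

CALIBRATED_VALUE: Dict[str, int] = {
    "index": 600,
    "middle": 600,
    "ring": 600,
    "little": 600,
}

WORD_FOR_FINGER: Dict[str, str] = {
    "index": "WATER",
    "middle": "TABLET",
    "ring": "HELP",
    "little": "EMERGENCY",
}


def words_for_reading(reading: Dict[str, int]) -> List[str]:
    """Return the word for every finger whose reading exceeds its calibrated value."""
    return [
        WORD_FOR_FINGER[finger]
        for finger in WORD_FOR_FINGER
        if reading.get(finger, 0) > CALIBRATED_VALUE[finger]
    ]


if __name__ == "__main__":
    sample = {"index": 700, "middle": 120, "ring": 650, "little": 90}
    for word in words_for_reading(sample):
        print(word)  # on the device, this text would go to the Bluetooth module
```

Keeping the calibrated values in a table rather than in separate if statements makes it easier to recalibrate per user after a test session.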

For the gesture-based moving vehicle, the code reads the x-axis and y-axis values produced by hand gestures. If conditions on these values determine the instruction that is sent to the receiver through the Zigbee module. This code was uploaded to an Arduino NANO (transmitter). A second program was uploaded to another Arduino NANO (receiver); it initializes and runs the motor driver based on the instructions received from the transmitter.
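The transmitter-side decision can be sketched as a small classifier over the two axis readings. This is a Python illustration under stated assumptions, not the uploaded code: the dead-zone value, the axis orientation (positive y for forward, positive x for right) and the single-letter commands are all hypothetical.

```python
# Sketch of the transmitter-side decision: classify x/y tilt readings into one command.
# DEAD_ZONE, the axis orientation and the command letters are assumptions.
DEAD_ZONE = 0.3  # in g; below this magnitude on both axes the hand counts as level


def command_from_tilt(x: float, y: float) -> str:
    """Map x/y axis readings to 'F', 'B', 'L', 'R' or 'S' (stop)."""
    if abs(x) < DEAD_ZONE and abs(y) < DEAD_ZONE:
        return "S"
    if abs(y) >= abs(x):
        return "F" if y > 0 else "B"
    return "R" if x > 0 else "L"


if __name__ == "__main__":
    for x, y in ((0.0, 0.0), (0.1, 0.8), (-0.7, 0.2)):
        print((x, y), command_from_tilt(x, y))
```

The dead zone keeps the vehicle stopped while the hand is roughly level, so small tremors do not produce movement commands.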

7 RESULTS AND ANALYSIS

The moving vehicle is paired with the smart glove, and its straight-line motion and rotation are controlled with hand gestures. After several adjustments, the operation of the car was stable. The three-axis acceleration data and three-axis gyroscope data are read from the MPU6050 in real time, and the roll angle and pitch angle are calculated through the built-in filter algorithm. The system is then put into operation and the 3D attitude of the MPU6050 is displayed in real time in the serial monitor. By monitoring these angles, the attitude of the MPU6050 can be tracked in real time and shown through an intuitive demo interface. We could observe the behaviour at a given moment as the MPU6050 rotates, together with the value of the corresponding angle. This shows that there is no problem with the MPU6050 module and that the attitude angle can be calculated normally. The system uses gesture recognition technology, software and programming to realize control of the moving vehicle.
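Roll and pitch can be estimated from the acceleration and gyroscope data in several ways. The paper does not specify its filter, so the Python sketch below substitutes a common complementary filter as a stand-in; the axis sign conventions, the blend factor `alpha` and the function names are assumptions made for illustration.

```python
# Roll/pitch from a 3-axis acceleration reading, plus a complementary filter.
# This is a stand-in technique; the filter used in the paper is not specified.
import math
from typing import Tuple


def roll_pitch_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Roll and pitch in degrees from a static acceleration reading (in g)."""
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    return roll, pitch


def complementary_filter(prev_angle: float, gyro_rate: float,
                         accel_angle: float, dt: float,
                         alpha: float = 0.98) -> float:
    """Blend the integrated gyro rate (deg/s) with the accelerometer angle (deg)."""
    return alpha * (prev_angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


if __name__ == "__main__":
    roll, pitch = roll_pitch_from_accel(0.0, 0.5, 0.866)
    print(f"roll={roll:.1f} deg, pitch={pitch:.1f} deg")
```

The gyroscope term tracks fast motion while the accelerometer term corrects slow drift; `alpha` close to 1 trusts the gyroscope more over short intervals.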

In this control system, the gesture-based moving vehicle shows good manoeuvrability and stability, and can move forward, move backward, turn left, turn right, and so on. The tests were conducted as described in the testing section, and the results were as expected: for each hand gesture we obtained the predefined word, the Bluetooth module sent the text to the Android mobile accurately, and the conversion of text to speech was accurate.


Fig 4.

[Figure panel labels: WATER, TABLET, HELP, EMERGENCY, FRONT, BACK]

Fig 5.

8 CONCLUSIONS

The inspiration behind this project is to help solve the language barrier between deaf and hearing communities. Translating finger gestures into words is only a small step towards this ultimate goal. The vocalizer we created executes the required steps. The project serves as a prototype to check the feasibility of the approach: the glove sensors recognize the gestures, the corresponding text is received through the Bluetooth module, and the predefined word is printed in the app and can be heard from the mobile speaker.

The gesture-controlled moving vehicle is designed to replace the traditional remote controls used to drive vehicles. It allows us to control forward and backward, left and right movement while using the same sensor to control the throttle of the vehicle through hand gestures. When the hand is angled to the right, the robot moves to the right. The vehicle stays in stop mode while the hand is held still. In testing, about 80% of cases worked as expected; the rest were less reliable, mainly because of background noise. A motion-controlled robot offers a better way to control the device. The commands for the robot to move in a certain direction in the environment are based on gestures from the user. Unlike existing systems, the user can control the robot with hand gestures without using external devices to support feedback.

REFERENCES

Kadam, K., Rucha Ganu, Ankita Bhosekar, & Santa Ram

Joshi. (2012). American Sign Language Interpreter.

https://doi.org/10.1109/t4e.2012.45

Manandhar, S., Bajracharya, S., Karki, S., & Jha, A. K.

(2019). Hand Gesture Vocalizer for Dumb and Deaf

People. SCITECH Nepal, 14(1), 22–29.

https://doi.org/10.3126/scitech.v14i1.25530.


Wu, X.-H., Su, M.-C., & Wang, P. (2010). A hand-gesture-

based control interface for a car-robot.

https://doi.org/10.1109/iros.2010.5650294

Alam, M., & Yousuf, M. A. (2019, February 1). Designing

and Implementation of a Wireless Gesture Controlled

Robot for Disabled and Elderly People. IEEE Xplore.

https://doi.org/10.1109/ECACE.2019.8679290

Kunjumon, J., & Megalingam, R. K. (2019). Hand Gesture

Recognition System For Translating Indian Sign

Language Into Text And Speech. 2019 International

Conference on Smart Systems and Inventive

Technology (ICSSIT).
