FACE RECOGNITION IN BIOMETRIC VENDING MACHINES

J.J. Astrain†, J. Villadangos‡, A. Córdoba†, M. Prieto‡

†Dpt. Matemática e Informática, ‡Dpt. Automática y Computación

Universidad Pública de Navarra

Campus de Arrosadía. 31006 Pamplona (Spain)

Keywords:

Biometric vending machine, face authentication, face detection, face recognition, face verification.

Abstract:

Many biometric security systems are used to grant restricted access to certain resources. This paper presents a biometric system for automatic vending machines. It ensures that products subject to legal restrictions are sold only to authorized purchasers. Using an identity card, the system checks whether the purchaser satisfies all the restrictions required to authorize the sale. No special cards or codes are needed, since the system only scans the user's ID card and, by taking a photograph, verifies that the purchaser is the owner of the card. The simplicity of the system and the high recognition rates obtained make it an attractive addition to automatic vending machines that sell restricted products in certain areas.

1 INTRODUCTION

One of the main problems of vending machines is guaranteeing that products whose sale is restricted to adults are sold only to authorized purchasers. Products such as tobacco or alcoholic drinks cannot be offered to all customers in certain places. If the vending machine is not placed in a restricted area that only adults can access, some security measures are required. To guarantee that only authorized purchasers can buy a given product (a beer, for example) from a vending machine placed in a public access area, this work proposes a biometric authentication system. According to Automatic Merchandiser magazine, the vending industry's revenues in 2001 were 24.34 billion, compared with 17.4 billion in 1992. This kind of sale is therefore economically significant and growing considerably.

Several kinds of products cannot be sold through automatic vending machines because of legal restrictions. Tobacco, alcoholic drinks and tickets to shows restricted to adults are some examples. Biometric measures include fingerprints, genetic fingerprints, and facial, iris and voice recognition, among many others. By considering the individual physiological characteristics of users together with identification cards, it is possible to ensure that only authorized users can access certain resources. In our case, we want to ensure that sales at vending machines are authorized.

Biometric techniques can be used to certify that only adults buy these products. We therefore propose the use of biometric procedures to determine whether a consumer is an adult and, if so, to authorize the sale. Specifically, we propose face recognition techniques (Yang et al., 2002) to ensure that the consumer presenting the ID card is the card owner, and that the consumer is an adult. Passwords, smart cards, codes or keys are not an alternative for this problem, because minors must not be able to buy restricted products even if the ID card is modified. Every citizen is required by the government to hold and carry an identity card. This document always carries a photograph of its owner, and the date of birth is also recorded. So, given an ID card, we can extract the birth date and calculate the age of the consumer. If this age is at least the minimum age established to authorize a sale, the machine must verify that the ID card corresponds to the user who wants to make the purchase. If it does, the sale is authorized and the user can insert the coins needed to buy the product. At this point, the machine sells the product and records the sale data.
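The age check described above can be sketched as follows, assuming the birth date has already been extracted from the card. The function names and the threshold of 18 years are illustrative assumptions; the paper does not fix a minimum age.

```python
from datetime import date

MINIMUM_AGE = 18  # assumed legal threshold, not specified in the paper


def age_on(birth_date: date, today: date) -> int:
    """Return the age in whole years on the given day."""
    # Subtract one year if this year's birthday has not happened yet.
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def old_enough(birth_date: date, today: date, minimum_age: int = MINIMUM_AGE) -> bool:
    """True when the card holder has reached the minimum age."""
    return age_on(birth_date, today) >= minimum_age
```

Passing the current day explicitly keeps the check deterministic and easy to test; a deployed machine would supply its own clock.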

The rest of the paper is organized as follows: section 2 presents the proposed system architecture; section 3 introduces the face components detection layer, and section 4 the facial authentication layer; experimental results are presented in section 5; and finally, conclusions and references end the paper.

Astrain J.J., Villadangos J., Córdoba A. and Prieto M. (2004). FACE RECOGNITION IN BIOMETRIC VENDING MACHINES. In Proceedings of the First International Conference on E-Business and Telecommunication Networks, pages 293-298. DOI: 10.5220/0001393202930298. Copyright © SciTePress

2 SYSTEM ARCHITECTURE

In order to authorize a sale, a recognition of the pur-

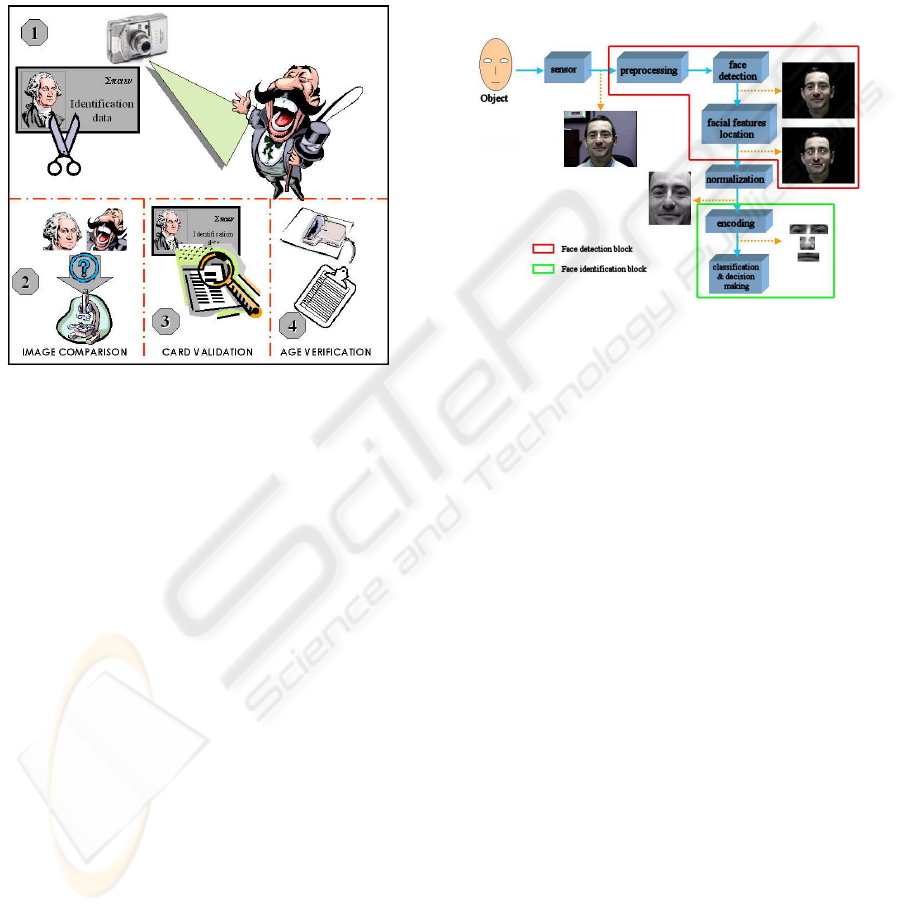

chaser is needed. Figure 1 illustrates the process fol-

lowed by the system before a sale.

Figure 1: Process followed by the system before a sale.

First of all, the vending machine takes a photo of the purchaser and scans his personal ID card (see figure 2 (1)). Then, the system locates the face in both the ID card and the photograph. After that, a preprocessing step aligns and scales the images, and the faces are normalized. Finally, the normalized images are encoded and compared (figure 2 (2)), yielding the final recognition.

If the recognition succeeds, the system can ensure that the consumer is the real owner of the ID card; otherwise, the sale is rejected. Once the ownership of the document has been verified, the system checks that the ID card has not been manipulated and is an authentic document, using the different security check-points that every ID card has. Then, the system locates the area containing the purchaser's birth date (figure 2 (3)). Using an OCR layer, the system extracts this information and verifies that the purchaser has at least the minimum age required to authorize the sale. If these three requirements (ID card ownership, ID card validation and purchaser's age) are met, the sale is authorized. Otherwise, the sale is rejected.
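The three-requirement decision described above can be sketched as a short function. The `Checks` structure, its field names and the rejection messages are hypothetical; they only stand for the outcomes of the face matching, card validation and OCR stages.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Checks:
    """Outcome of the three verification stages (names are illustrative)."""
    face_matches_card: bool   # ID card ownership
    card_is_authentic: bool   # ID card validation
    age_years: int            # age derived from the birth date read by OCR


def authorize_sale(checks: Checks, minimum_age: int) -> Tuple[bool, str]:
    """Return (authorized, reason); any failed requirement rejects the sale."""
    if not checks.face_matches_card:
        return False, "purchaser is not the card owner"
    if not checks.card_is_authentic:
        return False, "card failed the security check-points"
    if checks.age_years < minimum_age:
        return False, "purchaser is below the minimum age"
    return True, "sale authorized"
```

The checks are evaluated in the order given in the text, so the sale is rejected at the first requirement that fails.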

The application proposed here only requires potential purchasers to have reached a certain age, but other criteria could also be taken into account.

Every citizen has an ID card provided by the government, so no additional cards are needed. Sales are therefore not restricted to a limited number of clients holding special cards or codes.

The identity of a customer is verified using his physiological characteristics. However, biometric data is often noisy because of environmental noise or the variability of users over time. This problem can be mitigated by combining several biometric techniques, as introduced in section 4.

Figure 2 summarizes the system architecture.

Figure 2: System architecture.

3 FACE COMPONENTS DETECTION

In practice, customers may not stand in front of the camera properly. However, since the purchaser is interested in completing the purchase, we can assume that he will position himself at a good angle to allow the biometric recognition and, with it, the sale.

Face detection starts by locating the eyes in the picture, since they can be detected with high precision. Once their location is known, the image can be normalized in order to extract further biometric characteristics (Brunelli and Poggio, 1993) and to match them with the pattern obtained from the photograph on the ID card (Brunelli and Poggio, 1993; Lam and Yan, 1998).

As described in (Brunelli and Poggio, 1993), the eyes lie in the central zone of the image, between the upper limit of the face and the lower limit of the chin, and the separation between the eyes is approximately the width of one eye.

ICETE 2004 - SECURITY AND RELIABILITY IN INFORMATION SYSTEMS AND NETWORKS

Working on the grey-level image, we have implemented an algorithm that uses the border (edge) distribution of the image and detects all the zones where abrupt intensity changes occur because of the high density of borders. Projecting the borders makes it possible to detect the approximate zone where the eyes are located, since the eyes are usually the most structured part of the face.
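The border projection idea can be illustrated with a minimal sketch. The paper does not specify the edge detector, so simple differences between neighbouring pixels are assumed here, and the function names are illustrative.

```python
def horizontal_edge_profile(image):
    """Sum of absolute intensity changes between horizontal neighbours, per row.

    `image` is a list of rows of grey levels. Rows crossing highly
    structured regions (such as the eyes) accumulate large values.
    """
    return [
        sum(abs(row[x + 1] - row[x]) for x in range(len(row) - 1))
        for row in image
    ]


def most_structured_row(image):
    """Index of the row with the largest accumulated edge strength."""
    profile = horizontal_edge_profile(image)
    return max(range(len(profile)), key=profile.__getitem__)
```

On a real face image the profile would normally be smoothed before taking the maximum; that step is omitted to keep the sketch short.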

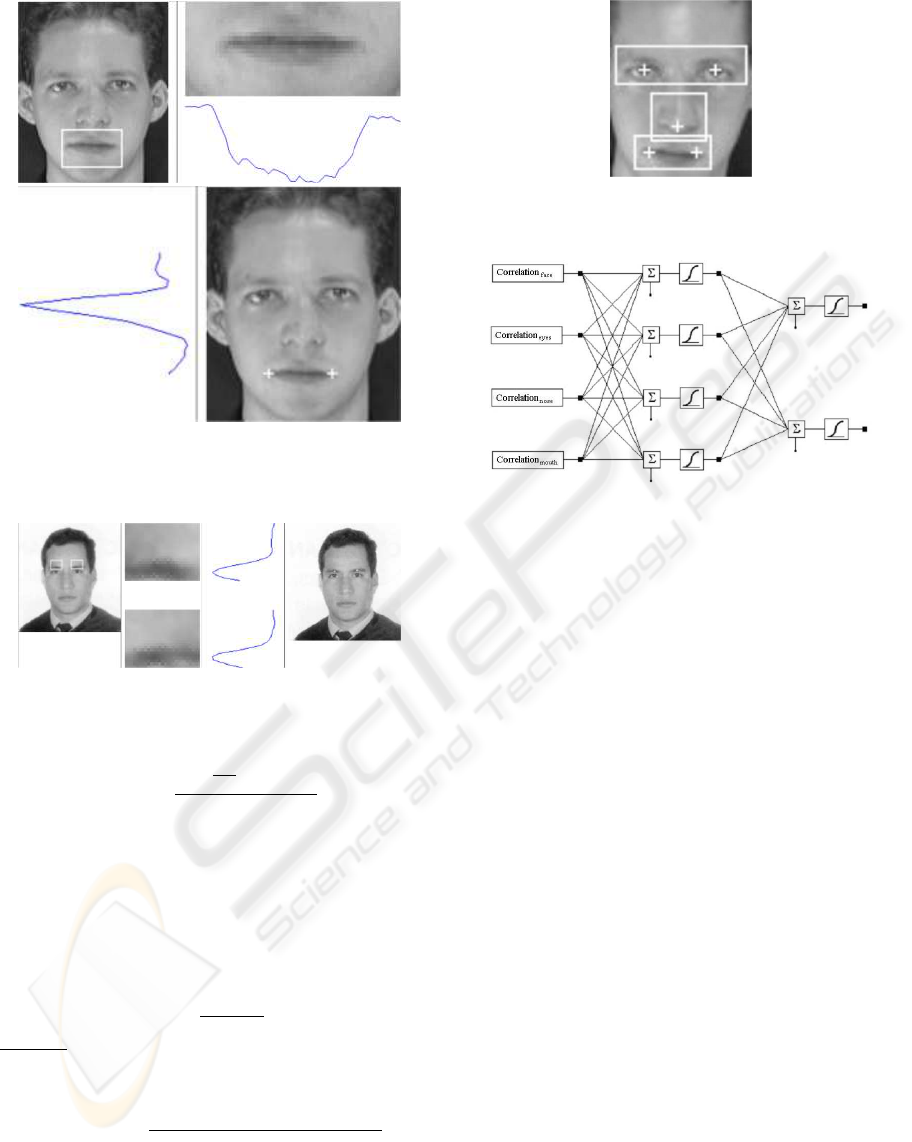

Figure 3: Vertical and horizontal border detection.

The eye detection algorithm follows a multilayer design. Figure 3 illustrates the border detection process. First of all, a horizontal line along the longitudinal edge of the eyes is obtained. Once this edge has been located, the face limits are detected (see figure 4) and the vertical edge of the nose is obtained (see figure 5). Then, two zones where the eyes could be located are defined. With the aid of two windows (see figure 6), the lowest-intensity pixels of these zones are located in order to obtain the pupils (see figure 7). At this point, the eye locations can be clearly detected (see figure 8). Once the eyes have been detected, the image is normalized so that both eyes are horizontally aligned. The other face components (nose, eyebrows and mouth) are then much easier to detect, since the search zone is considerably reduced.
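A minimal sketch of the last two steps, locating each pupil as the darkest pixel of its search window and measuring how far the eye line is from horizontal, is given below. The window coordinates and function names are assumptions for illustration; the rotation that aligns the eyes is not shown.

```python
import math


def darkest_pixel(image, top, left, height, width):
    """Return (row, col) of the lowest-intensity pixel inside a window."""
    best = None
    for r in range(top, top + height):
        for c in range(left, left + width):
            if best is None or image[r][c] < image[best[0]][best[1]]:
                best = (r, c)
    return best


def eye_line_angle(left_pupil, right_pupil):
    """Angle in degrees of the line joining the pupils; 0 means aligned."""
    d_row = right_pupil[0] - left_pupil[0]
    d_col = right_pupil[1] - left_pupil[1]
    return math.degrees(math.atan2(d_row, d_col))
```

Rotating the image by the opposite of this angle about the midpoint of the pupils would leave both eyes on the same row.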

Figure 4: Face limits detection.

The exact eye locations are obtained by extracting the corresponding eye windows, equalizing them, converting the image to black and white, filtering it, and applying the morphological operations of closing and dilation.

Then the nose, mouth and eyebrows are detected. Using the horizontal projection of the vertical gradient (Brunelli and Poggio, 1993), the vertical position of the nose base can be obtained. Its horizontal position is taken as the midpoint between the two reference points obtained in the eye detection layer. Figures 9, 10 and 11 illustrate the detection process.
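The nose base estimate can be sketched as follows, assuming the pupils are already known as (row, column) pairs. The search height below the eye line and the function names are illustrative assumptions.

```python
def vertical_gradient_profile(image, top, bottom):
    """Horizontal projection of the vertical gradient for rows top..bottom-1."""
    return [
        sum(abs(image[r + 1][c] - image[r][c]) for c in range(len(image[r])))
        for r in range(top, bottom)
    ]


def nose_base(image, left_eye, right_eye, search_height):
    """Estimate (row, col) of the nose base below the eye line."""
    eye_row = max(left_eye[0], right_eye[0])
    top = eye_row + 1
    # Stop one row early so that image[r + 1] always exists.
    bottom = min(top + search_height, len(image) - 1)
    profile = vertical_gradient_profile(image, top, bottom)
    row = top + max(range(len(profile)), key=profile.__getitem__)
    col = (left_eye[1] + right_eye[1]) // 2  # midpoint between the eyes
    return row, col
```

The row returned is the one with the strongest vertical intensity change in the search band, which is where the shadow under the nose is expected.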

Figure 5: Nose edge detection.

Figure 6: Windows in which the eyes are searched for.

4 FACIAL AUTHENTICATION

In order to certify that the user is the owner of the ID card introduced into the vending machine, both images (the one scanned from the ID card and the photograph) are matched.

Once the most relevant facial components (eyes, mouth, nose and eyebrows) have been detected in both images, it is time to compare them. Different techniques can be used. Correlation techniques (Brunelli and Poggio, 1993) perform well when images are correctly normalized, since they are sensitive to variations in size, rotation and position. A considerable effort is therefore devoted to normalizing the images (see figure 12). Four different normalizations are considered: the image without any preprocessing, $I(x, y)$, where $I(x, y)$ is the image matrix at coordinates $x$ and $y$; the image normalized using the average intensity of each pixel zone, $\langle I_D(x, y) \rangle$; the image gradient magnitude, $G(I(x, y))$; and the Laplacian normalization, $L(I(x, y))$.
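The three non-trivial normalizations can be sketched with plain finite differences. The exact operators used by the authors are not given, so the definitions below (division by a local mean, central-difference gradient, 4-neighbour Laplacian) are assumptions chosen for simplicity, and all function names are illustrative.

```python
import math


def local_mean(image, r, c, radius=1):
    """Average intensity around (r, c); the window is clipped at the borders."""
    rows = range(max(0, r - radius), min(len(image), r + radius + 1))
    cols = range(max(0, c - radius), min(len(image[0]), c + radius + 1))
    values = [image[i][j] for i in rows for j in cols]
    return sum(values) / len(values)


def normalize_by_local_mean(image, radius=1):
    """Divide each pixel by its local average (assumed reading of <I_D(x, y)>)."""
    return [[image[r][c] / max(local_mean(image, r, c, radius), 1e-9)
             for c in range(len(image[0]))]
            for r in range(len(image))]


def gradient_magnitude(image):
    """Central-difference gradient magnitude; border pixels are left at 0."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = (image[r][c + 1] - image[r][c - 1]) / 2
            gy = (image[r + 1][c] - image[r - 1][c]) / 2
            out[r][c] = math.hypot(gx, gy)
    return out


def laplacian(image):
    """4-neighbour discrete Laplacian; border pixels are left at 0."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = (image[r - 1][c] + image[r + 1][c] + image[r][c - 1]
                         + image[r][c + 1] - 4 * image[r][c])
    return out
```

A uniform brightness ramp shows the intent: its gradient magnitude is constant and its Laplacian is zero, so slow illumination changes contribute little to the later correlation.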

Figure 7: Horizontal line connecting the pupils (white) and upper face limit (black).

Figure 8: Eyes location.

The correlation between both images is computed by comparing each component window separately. The mouth window obtained by the camera is compared with the mouth window obtained by the scanner, and so on for the rest of the components. The correlation of each window is maximized separately, ensuring that each component window supplies the highest possible amount of information. Since not all windows supply the same amount of information, and this information can be erroneous in certain cases (glasses, mustache...), a multiple-classification subsystem is proposed. Each component has its own weight, provided by a neural network as illustrated in figure 13. We have randomly selected a similar number (100) of purchasers and impostors to obtain the samples used in the training process. For the validation process we have used 100 samples corresponding to purchasers and 400 samples corresponding to impostors, all different from the training samples. The inputs of the neural network are the correlation coefficients of the different windows (nose, eyes, mouth and face). The internal layer has four neurones and the output layer has two neurones. All the neurones have a logarithmic sigmoid activation function.

Figure 9: Nose detection: horizontal projection of the vertical gradient (up) and vertical projection of the horizontal gradient (down).

Table 1 summarizes the results obtained for different training experiments performed by the neural network over 6 different sample sets (each containing 2500 images).

Table 1: FAR and FRR obtained by the neural network (training set).

| Recognition rate (%) | FAR (%) | FRR (%) |
|---|---|---|
| 98.446 | 10.5614 | 4.1026 |
| 98.7013 | 8.5964 | 7.6923 |
| 96.6234 | 2.7595 | 14.359 |
| 99.2208 | 7.929 | 6.6667 |
| 99.2208 | 9.0731 | 3.5897 |
| 100 | 7.161 | 8.2051 |

The neural network has four neurones in the intermediate layer and two neurones in the output layer. Each of the four input neurones corresponds to one of the four face component windows (mouth, nose, eyes and eyebrows) previously obtained. One of the output neurones indicates the certainty degree ($\mu_c \in [0, 1]$) corresponding to an impostor (both images do not match), and the second neurone indicates the certainty degree ($\mu_u \in [0, 1]$) corresponding to a real purchaser (both images match). The system considers a user to be an authorized client whenever the difference between both degrees, $\mu_c$ and $\mu_u$, is greater than a certain threshold. By selecting this threshold we can obtain different values for the False Acceptance Rate (FAR) and for the False Rejection Rate (FRR).
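The forward pass of such a network and the threshold decision can be sketched as below. The weights are placeholders that would come from training, the function names are illustrative, and the sign of the difference (purchaser certainty minus impostor certainty) is an assumption, since the text only states that the difference must exceed a threshold.

```python
import math


def logsig(z):
    """Logarithmic sigmoid activation, 1 / (1 + e^-z)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One fully connected layer followed by the log-sigmoid."""
    return [logsig(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(correlations, hidden_w, hidden_b, output_w, output_b):
    """4 inputs -> 4 hidden neurones -> 2 outputs (mu_c, mu_u)."""
    hidden = layer(correlations, hidden_w, hidden_b)
    mu_c, mu_u = layer(hidden, output_w, output_b)
    return mu_c, mu_u


def is_authorized(mu_c, mu_u, threshold):
    """Accept when mu_u exceeds mu_c by more than the threshold (assumed sign)."""
    return (mu_u - mu_c) > threshold
```

Raising the threshold makes the system more conservative: fewer impostors are accepted at the cost of rejecting more genuine purchasers.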

The neural network minimizes the mean squared error of the outputs. So, when it is trained with a high number of impostor samples, the network minimizes the FAR while the FRR takes high values. It is therefore possible to select different parameters according to the rate to be minimized. In our case, it is better to lose a sale than to sell a product to an unauthorized purchaser.
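The trade-off between both rates can be made concrete with a small sketch that counts errors at a given threshold. The score lists are made-up illustrative values, not data from the experiments, and the decision rule `score > threshold` is an assumption.

```python
def far_frr(genuine_scores, impostor_scores, threshold):
    """Return (FAR, FRR) in percent for the decision rule `score > threshold`."""
    false_accepts = sum(1 for s in impostor_scores if s > threshold)
    false_rejects = sum(1 for s in genuine_scores if s <= threshold)
    far = 100.0 * false_accepts / len(impostor_scores)
    frr = 100.0 * false_rejects / len(genuine_scores)
    return far, frr


# Illustrative scores only (hypothetical values).
genuine = [0.9, 0.8, 0.6, 0.3]
impostor = [0.7, 0.2, 0.1, -0.4, -0.5]
print(far_frr(genuine, impostor, 0.5))   # (20.0, 25.0)
print(far_frr(genuine, impostor, 0.75))  # (0.0, 50.0)
```

Moving the threshold from 0.5 to 0.75 removes the false acceptance but doubles the false rejections, which is the behaviour preferred here when a lost sale is cheaper than an unauthorized one.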

The standard correlation index C(I, J) reduces the influence of the ambient illumination, and its value ranges from −1 to 1, where 1 implies that both images are identical (up to a linear change of brightness and contrast) and −1 implies that both images are completely different.

$$C(I, J) = \frac{\sum_{x=1}^{m}\sum_{y=1}^{n} [I(x, y) - \bar{I}] \cdot [J(x, y) - \bar{J}]}{\sqrt{\sum_{x=1}^{m}\sum_{y=1}^{n} [I(x, y) - \bar{I}]^2 \cdot \sum_{x=1}^{m}\sum_{y=1}^{n} [J(x, y) - \bar{J}]^2}}$$


Figure 10: Mouth detection: horizontal projection of the

vertical gradient (up) and vertical projection of the horizon-

tal gradient (down).

Figure 11: Eyebrows detection (left and right respectively).

$$C(I, J) = \frac{\overline{I \cdot J} - \bar{I} \cdot \bar{J}}{\sigma(I(x, y)) \cdot \sigma(J(x, y))}$$
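The standard correlation index can be computed directly from its definition. The sketch below treats each window as a list of rows; the function name is illustrative.

```python
import math


def correlation_index(image_i, image_j):
    """Standard correlation index C(I, J) between two equally sized windows."""
    a = [v for row in image_i for v in row]
    b = [v for row in image_j for v in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a)
                    * sum((y - mean_b) ** 2 for y in b))
    return num / den  # undefined (division by zero) for a constant window
```

Because the means are subtracted and the result is divided by both spreads, a window and a brighter, higher-contrast copy of it (for example every pixel mapped to 2·v + 5) still give an index of 1, which is how the index reduces the influence of ambient illumination.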

If the images are normalized to have zero mean and unit variance, there is a relationship between the Euclidean distance and the standard correlation index (Romano et al., 1996).

$$d_{\mathrm{euclidean}(L_2)}(I, J) = \sum_{x=1}^{m}\sum_{y=1}^{n} |I(x, y) - J(x, y)|$$

Letting $I'(x, y) = \frac{I(x, y) - \bar{I}}{\sigma_I}$ and $J'(x, y) = \frac{J(x, y) - \bar{J}}{\sigma_J}$, the correlation index is obtained (Brunelli and Messelodi, 1995):

$$C(I', J') = 1 - \frac{\sum_{x=1}^{m}\sum_{y=1}^{n} |I'(x, y) - J'(x, y)|}{\sum_{x=1}^{m}\sum_{y=1}^{n} \left( |I'(x, y)| + |J'(x, y)| \right)}$$
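A minimal sketch of this index on standardized images follows. It uses the population standard deviation for the normalization, which is an assumption, and the function names are illustrative.

```python
import math


def standardize(image):
    """Flatten an image and rescale it to zero mean and unit variance."""
    values = [v for row in image for v in row]
    mean = sum(values) / len(values)
    sigma = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return [(v - mean) / sigma for v in values]


def l1_correlation(image_i, image_j):
    """C(I', J') = 1 - sum|I' - J'| / sum(|I'| + |J'|) on standardized images."""
    a, b = standardize(image_i), standardize(image_j)
    num = sum(abs(x - y) for x, y in zip(a, b))
    den = sum(abs(x) + abs(y) for x, y in zip(a, b))
    return 1.0 - num / den
```

With this definition, identical windows give 1 and a contrast-reversed window gives 0, because in that case every term of the numerator equals the matching term of the denominator.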

In order to include all the information available, a new correlation index C is defined. This index is a weighted combination of the correlation indexes of all the windows defined for the face elements.

Figure 12: Normalized image taking into account facial

component windows.

Figure 13: Neural network (forward back propagation) to

obtain the correlation indexes.

$$C = k_{face} \cdot c_{face} + k_{eyes} \cdot c_{eyes} + k_{nose} \cdot c_{nose} + k_{mouth} \cdot c_{mouth}$$

Experimental results show that the best results are obtained for the following values: $k_{face} = 4$, $k_{eyes} = 4$, $k_{nose} = 2$, $k_{mouth} = 1$.
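The weighted combination is a plain weighted sum, sketched below with the weights reported in the text. The dictionary layout, the function name and the per-window correlation values in the example are illustrative assumptions.

```python
WEIGHTS = {"face": 4, "eyes": 4, "nose": 2, "mouth": 1}  # values reported in the text


def combined_index(window_correlations, weights=WEIGHTS):
    """Weighted sum of the per-window correlation indexes."""
    return sum(weights[name] * window_correlations[name] for name in weights)


# Hypothetical per-window correlations for one purchaser.
example = {"face": 0.9, "eyes": 0.8, "nose": 0.5, "mouth": 0.6}
score = combined_index(example)  # 4*0.9 + 4*0.8 + 2*0.5 + 1*0.6
```

Since the weights add up to 11, the combined index of a perfect match is 11 rather than 1; dividing by the sum of the weights would bring it back to the range of a single correlation index, if that is preferred.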

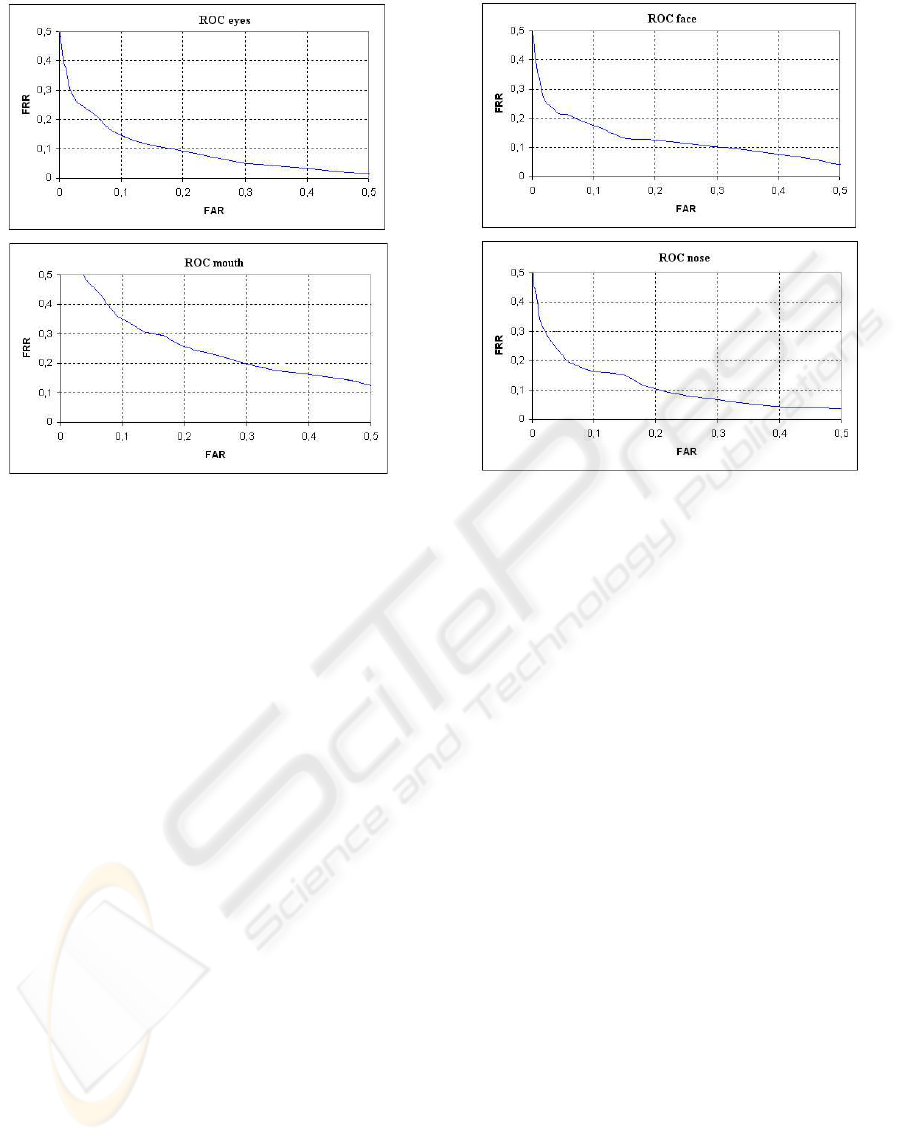

5 EXPERIMENTAL RESULTS

The experiments performed to validate the proposed system show that it achieves good performance in terms of computation time and recognition rate. To study recognition rates, some indexes must be defined: the False Rejection Rate (FRR), the percentage of genuine purchasers that are rejected; the False Acceptance Rate (FAR), the percentage of impostors that are accepted; and the Receiver Operating Characteristic (ROC). Figures 14 and 15 show the ROC curves obtained.

Experiments have been performed over 19,460 samples, 390 of them corresponding to authorized clients and 19,070 to impostors. The correct sales rate obtained is 96.9 %, and the time needed to process each sale is 6.2 seconds (0.4 seconds for size and position normalization, 3 for intensity normalization and window extraction, 0.7 for window correlation maximization, 0.1 for computing the correlation indexes, and 2 seconds for the rest of the operations) on a 450 MHz Intel Pentium III running MS Windows 98 and Matlab 5.2. The images used are 280x280 pixels.


Figure 14: ROC obtained for different facial component

windows.

Finally, an OCR layer is needed in order to determine whether the sale is authorized. If the vending machine sells alcoholic drinks, the system must verify that the purchaser is an adult, so it locates the client's birth date on his ID card. Once located, the image zone containing this date is processed by an OCR subsystem that outputs the date in a format that can be processed by the final decision module of the vending machine. This module allows or denies the sale according to the age of the purchaser.
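The hand-over from the OCR subsystem to the decision module can be sketched as a small parsing step. The day-month-year layout and the function name are assumptions; the actual format depends on the ID card being read.

```python
from datetime import date, datetime
from typing import Optional


def parse_birth_date(ocr_text: str, fmt: str = "%d-%m-%Y") -> Optional[date]:
    """Convert the OCR output into a date, or None if it cannot be read."""
    try:
        return datetime.strptime(ocr_text.strip(), fmt).date()
    except ValueError:
        # Misread characters or impossible dates end up here.
        return None
```

Returning `None` for unreadable text lets the decision module deny the sale, in line with the preference for losing a sale over selling to an unauthorized purchaser.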

6 CONCLUSIONS

Using biometric techniques, automatic vending machines can sell products that are currently restricted to adult zones or private places.

By adding a small digital camera and a scanner to current vending machines, it is possible to build the proposed biometric security system, which allows restricted products to be sold anywhere while ensuring that sales take place only if purchasers are authorized.

Its low computational and hardware costs make this system attractive to use.

Figure 15: ROC obtained for different facial component windows.

REFERENCES

Brunelli, R. and Messelodi, S. (1995). Robust estimation of correlation with applications to computer vision. In Pattern Recognition, Vol. 28, No. 6, pp. 833-841.

Brunelli, R. and Poggio, T. (1993). Face recognition: Features versus templates. In IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 15, No. 10, pp. 1042-105.

Lam, K. and Yan, H. (1998). An analytic-to-holistic approach for face recognition based on a single frontal view. In IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 20, No. 7, pp. 673-686.

Romano, R., Beymer, D., and Poggio, T. (1996). Face verification for real-time applications. In Proceedings of Image Understanding Workshop, Vol. 1, pp. 747-756.

Yang, M., Kriegman, D., and Ahuja, N. (2002). Detecting faces in images: A survey. In IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 24, No. 1, pp. 34-58.
