RESULT COMPARISON OF TWO ROUGH SET BASED

DISCRETIZATION ALGORITHMS

Shanchan Wu, Wenyuan Wang

Department of Automation, Tsinghua University,Beijing 100084, P.R.C.

K

eywords: Rought set, Cuts, Discretization, Data mining

Abstract: The area of knowledge discovery and data mining is growing rapidly. A large number of methods are

employed to mine knowledge. Many of the methods rely of discrete data. However, most of the datasets

used in real application have attributes with continuous values. To make the data mining techniques useful

for such datasets, discretization is performed as a preprocessing step of the data mining. In this paper, we

discuss rough set based discretization. We use UCI data sets to do experiments to compare the quality of

Local discretization and Global discretization based on rough set. Our experiments show that Global

discretization and Local discretization are dataset sensitive. Neither of them is always better than the other,

though in some cases Global discretization generates far better results than Local discretization.

1 INTRODUCTION

Rough Set theory is a tool to tackle fuzzy and

uncertainty knowledge. It was put forward firstly by

Z.Pawlak (Pawlak Z, 1982). In decades, rough set

theory has been successfully implemented in Data

mining, artificial intelligence and pattern

recognition.

But rough set and many other methods used in

data mining can't deal with continuous attributes and

a very large proportion of real data sets include

continuous variables. One solution to this problem is

to partition numeric variables into a number of

intervals and treat each interval as a category. This

process is usually termed dicretization.

Several methods have been proposed to

discretize data as a preprocessing step for the data

mining process. Nguyen S. H. proposed the named

discretization approach based on rough set methods

and boolean reasoning (

Nguyen, 1995, 1997). The

main idea is to seek possibly minimum number of

discrete intervals, and at the same time it should not

weaken the indiscernibility. It has been proven that

Optimal Discretization Problem is NP-complete

(

Nguyen, 1995). In this paper, we examine two

discretization algorithms based on rough set, Local

Discretization and Global Discretization (Hung Son

Nguyen, 1996). We do experiments to compare the

results of the two algorithms.

This paper is organized as follows. In Section 2,

we describe discretization based on rough set. Then

we explain determination of candidate cuts and

calculating of discernibility of cuts in Section 3. In

Section 4, we describe the local discretization

algorithm and global discretization algorithm and in

section 5 we show the experiment results. Finally

Section 6 concludes this paper.

2 DESCRIPTION OF ROUGH SET

BASED DISCRETIZATION

An information system is defined as follows:

AaFVAUS

aa

∈= ),,,,( (1)

where

{}

n

xxxU ,,,

21

L= is a finite set of

objects(n is the number of objects), A is a finite set

of attributes,

a

aA

V

V

∈

=

U

, and

a

V is a domain of

attribute a,

aa

VAUF →×: is a total function

such that

ai

Vaxf ∈),( for each Aa ∈ , Ux

i

∈ .

An information system S in definition (1) is

called a decision system or decision table when the

attributes in S can be divided into condition

attributes C and decision attributes D. i.e.

DCA U= , and

φ

=DC I .

In information systems, each subset of attributes

AI ⊆ determines a binary relation as follows:

{

}

() , ,() ()

I

ND I x y U U a I a x a y=< >∈ × ∀∈ =

511

Wu S. and Wang W. (2004).

RESULT COMPARISON OF TWO ROUGH SET BASED DISCRETIZATION ALGORITHMS.

In Proceedings of the Sixth International Conference on Enterprise Information Systems, pages 511-514

DOI: 10.5220/0002611505110514

Copyright

c

SciTePress

It is easily shown that

)(IIND is an

equivalence relation on the sets U and is called an

indiscernible relation. The partition of U as defined

by B will be denoted U/B and the equivalence

classes introduced by B will be denoted [u]

B.

In

particular, [u]

{d}

will be called the decision classes of

the decision system.

Let

S ),},{,( fVdAU ∪= be a decision table

where

}=

n

x,,x,x,{x U

321

L . Assuming that

RrI

aa

⊂= ),[V

a

for any Aa ∈ where R is the

set of real numbers.

Assume now that the

S is a consistent decision

table. Let

D

a

be a partition of V

a

(for Aa ∈ ) into

subintervals, i.e.

{

}

0112 1

a

D[ ,),[,),,[, ),

kk

aaa aa aa a

plppp pp r

+

== =L where

0112 1

a

V[ ,)[,) [, ),

kk

aaa aa aa a

p

lp pp pp r

+

== ∪ ∪∪ =L and

a

k

a

k

aaaaa

rpppprp =<<<<<=

+1210

L

Any D

a

is uniquely defined by the set of cuts on

},{:

21 k

aaaa

pppV L (empty if card(D

a

) = 1). The

set of cuts on V

a

defined by D

a

can be identified by

D

a

. A family }:{

aa

VaDD ∈= of partitions on S

can be represented in the form

a

Aa

Da ×

∈

U

}{

Any

a

Dv)(a, ∈ will be also called a cut on

a

V .

Then the family

}:{

aa

VaDD ∈= defines from

}){,( dAUS ∪= a new decision table

}){,( dAUS

pp

∪= , where }:{A

p

Aaa

p

∈=

and

),[)()(a

1p +

∈⇔=

i

a

i

a

ppxaix for any

Ux ∈ and },0{ ki L∈ .

After discretization, the original decision system

is replaced with the new one. And different sets of

cuts will construct different new decision systems. It

is obvious that discretization process is associated

with loss of information. Usually, the task of

discretization is to determine a minimal set of cuts

from a given decision system and keeping the

discernibility between objects and the rationality of

the selected cuts can be evaluated by the following

criteria(Nguyen H S, 1995, 1997): (1) Consistency

of P. For any objects

Uvu, ∈ , they are satisfying

if u, v are discerned by A, then u, v are discerned by

P;(2) Irreducibility. There is no

P

P

⊂

′

, satisfying

the consistency; (3) Optimality. For any

P

′

satisfying consistency, it follows

)P(card(P)

′

≤ card , then P is optimal cuts. It has

been proven that Optimal Discretization Problem is

NP-complete (Nguyen H S, 1995).

3 DETERMINATION OF

CANDIDATE CUTS AND

CALCULATION OF

DISCERNIBILITY OF CUTS

Let ),},{,(S fVdAU ∪= be a decision

system. An arbitrary condition attribute Aa ∈ ,

defines a sequence

a

n

a

2

a

1

a

vvv <<< L , where

{}{}

Uxxa ∈= :)(v,,v,v

a

n

a

2

a

1

a

L , Then the

set of all possible cuts on a is defined by:

⎪

⎭

⎪

⎬

⎫

⎪

⎩

⎪

⎨

⎧

+

++

=

−

)

2

vv

(a,,),

2

vv

(a,),

2

vv

(a,C

a

n

a

1n

a

3

a

2

a

2

a

1

a

aa

L .

The set of all possible cuts on all attributes is

denoted by:

U

Aa

a

C

∈

=

A

C .This method usually

generates a large set of candidate cuts. In order to

reduce the number of candidate cuts, we can use

bound cuts (Jian-Hua Dai, 2002).

Since we are only interested in separating objects

that have different decision values, each cut in our

representation is given information about how many

objects from each decision class are to the left and to

the right of the cut, i.e. how many pairs of objects

with different decision values that are discerned

from each other. The algorithm where this measure

is later used sequentially deals with each attribute

and the set of cuts that may be introduced on that

attribute. By assuming that we can totally order all

objects so that they primarily are sorted on the value

of the current attribute and secondly in some

arbitrary order, we use the algorithm 1 to calculate

Table 1: Discernibility Conventions the discernibility

value of a cut.

(a,c) A cut point c on an attribute a dividing all

objects in a decision system in two parts

})(:{ cuaUu <∈ and })(:{ cuaUu >∈

D A set of cuts (a,c)

AllCuts All possible cuts on the decision system

L

{}

DcaU ∈),( or ABBU ⊆,

),(),,( carcal

XX

number of elements that are to the left/right of

the cut (a, c) in the equivalence class X

),(),,( carcal

X

i

X

i

number of elements with

decision value i in equivalence class X that

are to the left/right of the cut (a, c)

j

c a value indicating where the cut is made

ICEIS 2004 - ARTIFICIAL INTELLIGENCE AND DECISION SUPPORT SYSTEMS

512

Before turning to the details of algorithm 1,

internal notations used in the algorithm are explained

in table 1. These notations are also used in the next

section.

Algorithm 1: Calculate the discernibility value of

a cut

Input: information system S }){,( dAU ∪= ,

candidate cut (a, c

j

) on condition attribute Aa ∈

Output: the discernibility value of the cut (a, c

j

)

Method:

0N ←

for each

L

X

∈ do

()

1

N N ( , ) ( , ) ( , ) ( , )

d

XX XX

ii

i

l

acrac lacra

c

=

←+ ⋅ − ⋅

∑

return N

The discernibility value returned by algorithm 1

is equal to the number of pairs of objects from S

discerned by cut (a,c

j

). The proof that this algorithm

is correct can be found in (Hung Son Nguyen, 1996).

4 LOCAL DISCRETIZATION AND

GLOBAL DISCRETIZATION

In this section, we describe Local Discretization and

Global Discretization algorithms (Hung Son Nguyen,

1996). Local Discretization algorithm works by

finding a maximally discerning cut (see algorithm 1)

from the set of all possible cuts (AllCuts) and then

dividing the dataset into two subsets as long as there

are objects with different decision values.

Algorithm 2. Local discretization

Input: information system

S }){,( dAU ∪= ,

all candidate cuts in

S

Output: new information system after being

discretized

Method:

N

UM

C

LASSES

(S)

Return number of decision classes in S

T

RAVERSE

(S)

If N

UM

C

LASSES

(S) > 1 Then

from AllCuts select cut point

),(

**

ca

which has maximal discernibility value

using algorithm 1

{}

),(

**

caDD ∪←

{}

),(\

**

caAllCutsAllCuts ←

{}

cxaUxU <∈← )(:

*

1

{}

cxaUxU ≥∈← )(:

*

2

T

RAVERSE

(U

1

)

T

RAVERSE

(U

2

)

L

OCAL

D

ISCRETIZATION

(S)

AllCuts

← the set of all possible cuts in S

φ

←D

T

RAVERSE

(U)

Discretize S using the cuts in D

In algorithm 3 (Hung Son Nguyen, 1996), it

works with decision classes and check each

consecutive cut that is added to the final set D

against all objects that are not completely separated

into equivalence classes uniform w.r.t. decision

value by the current set of cuts. It splits the decision

classes into smaller and smaller parts until they are

uniform with respect to the decision values of the

objects.

Algorithm 3. Global Discretization

Input: information system

S }){,( dAU ∪= ,

all candidate cuts in

S

Output: new information system after being

discretized

Method:

N

UM

C

LASSES

(S)

Return number of decision classes in S

G

LOBAL

D

ISCRETIZATION

(S)

AllCuts

← the set of all possible cuts in S

φ

←D

BUL /← , B is the set of attributes that will

not be discretized

repeat

from AllCuts select cut point ),(

**

ca

which has maximal discernibility value

using algorithm 1

{}

),(

**

caDD ∪←

{}

),(\

**

caAllCutsAllCuts ←

for each

L

X

∈ do

}{\ XLL ←

if N

UM

C

LASSES

(X) > 1 then

})(:{

**

1

cxaXxX ≤∈←

})(:{

**

2

cxaXxX >∈←

},{

21

XXLL ∪←

until

φ

=L

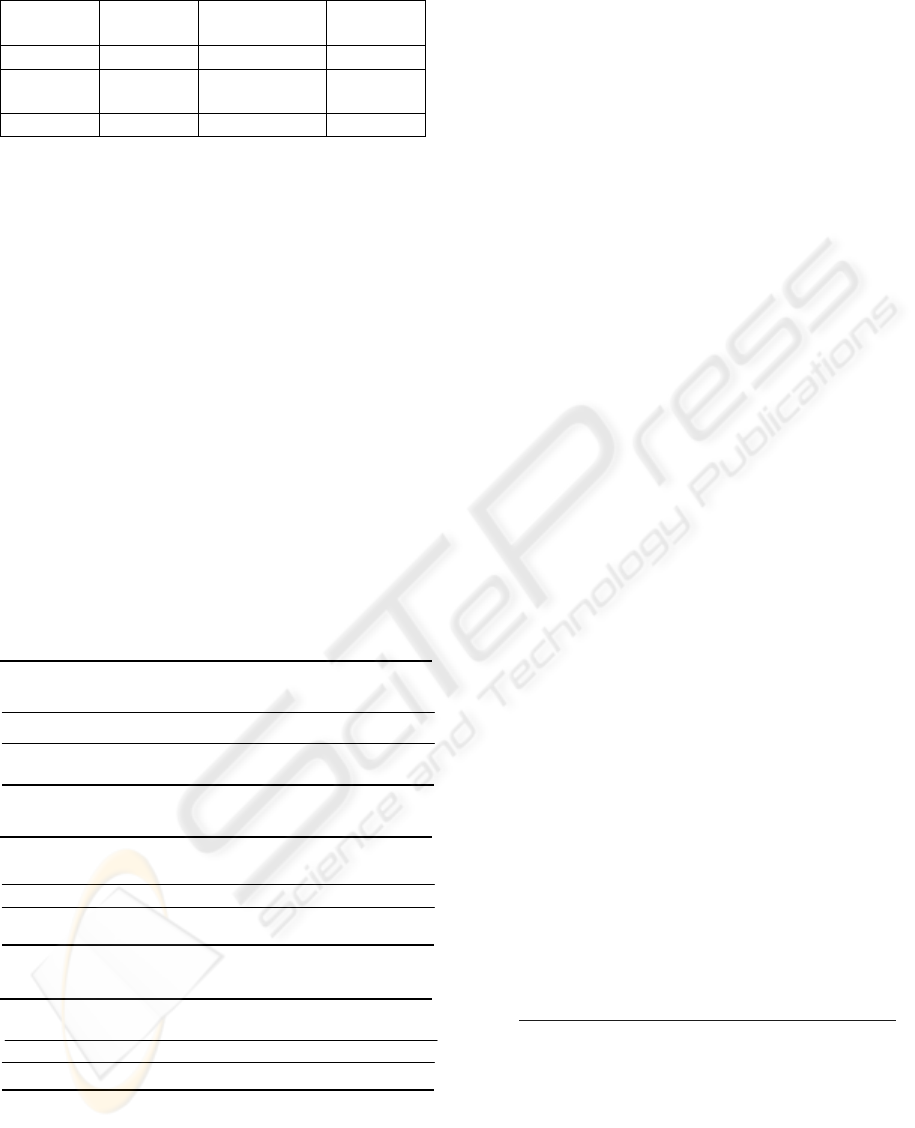

5 EXPERIMENTS AND ANALYSIS

We do our experiments on three data sets from UCI

named abalone and iris and liver disorders

respectively, which can be downloaded from the

website (MLR). Some information about the data

sets is shown in table 2.

RESULT COMPARISON OF TWO ROUGH SET BASED DISCRETIZATION ALGORITHMS

513

Table 2: The data sets for experiments

Name #objects #continuous

attributes

#decision

classes

Iris 150 4 3

liver-

disorders

345 6 2

Abalone 4177 7 29

We make comparative experiments between local

discretization algorithm and global algorithm,

comparing the number of result cuts discretizing

continuous attributes. The results are shown in table

3, table 4, and table 5 respectively. In the tables,

#cuts L denotes the number of result cuts generated

by local discretization algorithm and #cuts G by

global discretization algorithm.

As the two algorithms are both applied on

consistent information systems and maintain the

original indiscernibility, the smaller number of the

result cuts, the better the algorithm is. From the

comparisons we know that for liver disorders dataset

and abalone dataset, the number of result cuts

generated by global algorithm is far smaller than by

local algorithm. But it is larger for liver iris dataset.

So we can’t say that global algorithm is always

better than local algorithm.

For liver iris data set, the number of result cuts of

attribute sepal_length generated by global algorithm

is far larger than by local algorithm, and the number

Table 3: Comparison of the results on liver disorders.

Attribute Mcv alkphos sgpt Sgo Gam- drinks total

magt

#cuts L 20 22 20 25 30 23 140

#cuts G 3 4 3 2 5 3 20

Table 4: Comparison of the results on iris.

Attribute sepal_ sepal_ petal_ petal_ Total

Length width length width

#cuts L 3 3 6 1 13

#cuts G 34 2 4 2 42

Table 5: Comparison of the results on abalone

Attri- len- diam- hei- Whole shucked viscera shell total

bute gth eter ght weight weight weight weight

#cuts L 421 389 419 539 564 674 555 3561

#cuts G 20 21 30 7 32 32 30 172

of result cuts of other attributes is almost equal. But

for two other data sets, the number of result cuts for

all attributes generated by global algorithm is far

smaller than by local algorithm. Hence, we can say

that the two algorithms are data set sensitive, and we

can conjecture that their quality depends on the

distributions of the values of the attributes and their

decision classes.

6 CONCLUSIONS

For discretization based on rough set, we should

seek possible minimum number of discrete internals,

and at the same time it should not weaken the

indiscernibility ability. This paper examines two

algorithms (Hung Son Nguyen,1996), local

discretization and global discretization. Our

experiments show that the discretization algorithms

are dataset sensitive. Neither of them always

generates smaller number of result cuts. On some

datasets, one algorithm generates fewer result cuts,

but on other datasets it is contrary. We can

conjecture that the quality of the two algorithms

depends on the distributions of the values of the

continuous dataset attributes and their decision

classes. How the distributions affect the results is

what we will study further. With that, we can use

some methods to improve the algorithms.

REFERENCES

Pawlak Z (1982, November 5). Rough Sets. Int'l J.

Computer & Science [J], 11, 341-356.

Nguyen H S, Skowron A (1995). Quantization of real

value attributes. Proceedings of Second Joint Annual

Conf. on Information Science, Wrightsville Beach,

North Carolina, 34-37.

Nguyen H S (1997). Discretization of Real Value

Attributes: Boolean reasoning Approach [PhD

Dissertation]. Warsaw University Warsaw, Poland.

Hung Son Nguyen, Sinh Hoa Nguyen (1996). Some

efficient algorithms for rough set methods. In 6th

International conference on Information Processing

and Management of Uncertainty in Knowledge-Based

Systems, 1451-1456.

Jian-Hua Dai, Yuan-Xiang Li (2002, November 4-5).

Study on discretization based on rough set theory.

Proceedings of the First International Conference on

Machine Learning and Cybernetics, 3, 1371-1373.

MLR, http://www.ics.uci.edu/~mlearn/MLRepository.html

.

ICEIS 2004 - ARTIFICIAL INTELLIGENCE AND DECISION SUPPORT SYSTEMS

514