PREVISE

A Human-Scale Virtual Environment with Haptic Feedback

Françoix-Xavier Inglese¹, Philippe Lucidarme², Paul Richard¹, Jean-Louis Ferrier¹

¹ Laboratoire d’Ingénierie des Systèmes Automatisés, FRE 2656 CNRS, Angers University, 62 Avenue Notre-Dame du Lac, Angers, France

² IUT d’Angers, 4, Bd Lavoisier, BP 42018, 49016, Angers Cedex, France

Keywords: Virtual reality, virtual environment, human-scale, haptic, application.

Abstract: This paper presents a human-scale multi-modal virtual environment. The user interacts with virtual worlds using a large-scale bimanual haptic interface called the SPIDAR-H. This interface is used to track the user’s hand movements and to display various aspects of force feedback associated mainly with contact, weight, and inertia. In order to increase the accuracy of the system, a calibration method is proposed. A large-scale virtual reality application was developed to evaluate the effect of haptic sensation on human performance in tasks involving manual interaction with dynamic virtual objects: the user reaches for and grasps a flying ball. Stereoscopic viewing and auditory feedback are provided to improve the user’s immersion.

1 INTRODUCTION

Virtual Reality (VR) is a computer-generated

immersive environment with which users have real-

time interactions that may involve visual feedback,

3D sound, haptic feedback, and even smell and taste

(Burdea, 1996; Richard, 1999; Bohm, 1992;

Chapin, 1992; Burdea, 1993; Sundgren, 1992;

Papin, 2003). By providing both multi-modal

interaction techniques and multi-sensorial

immersion, VR presents an exciting medium for the

study of human behavior and performance.

However, few large-scale multi-modal virtual environments that include haptic feedback have been developed (Richard, 1996; Bouguila, 2000).

Among the many interaction techniques developed so far, the virtual hand metaphor is the best suited for interaction with moving virtual objects (Bowman, 2004). In this context, tactual feedback is an important source of information.

Therefore, we developed a human-scale Virtual

Environment (VE) based on the SPIDAR-H haptic

interface. In order to increase the accuracy of the

system, a calibration method is proposed. A large-

scale virtual reality application was developed to

evaluate the effect of haptic feedback on human

performance in tasks involving manual interaction

with dynamic virtual objects. In the next section, we

describe the multi-modal human-scale virtual

environment. Then we describe the proposed

calibration method. Finally, the virtual ball catching

application is described.

2 HUMAN-SCALE VE

2.1 Description

Our multi-modal VE is based on the SPIDAR interface (Figure 1). In this system, a total of eight motors (four per hand) are placed around the user (Sato, 2001). The motors, set up near the screen and behind the user, drive the string attachments that connect the user’s hands to the motors. One end of each string attachment is wrapped around a pulley driven by a DC motor, and the other end is connected to the user’s hand.

By controlling the tension and length of each of the four string attachments connected to a hand attachment, the SPIDAR-H generates an appropriate force on the user’s hand. Because it is a string-based system, it is visually transparent, so that the user can easily see the virtual world. It also provides a space in which the user can move around freely. The string attachments are soft, so there is no risk of the user getting hurt if he or she becomes entangled in the strings. This human-scale haptic device allows the user to manipulate virtual objects and naturally conveys the physical properties of objects to the user’s body. Stereoscopic images are displayed on a large rear-projection screen (2 m × 2.5 m) and viewed using polarized glasses. A 5.1 immersive sound system is used for simulation realism, auditory feedback and sensorial immersion. Olfactory information can be provided using a battery of olfactory displays.

Inglese F., Lucidarme P., Richard P. and Ferrier J. (2005). PREVISE - A Human-Scale Virtual Environment with Haptic Feedback. In Proceedings of the Second International Conference on Informatics in Control, Automation and Robotics - Robotics and Automation, pages 140-145. DOI: 10.5220/0001167401400145. Copyright © SciTePress.

2.2 VE Workspace

Workspace is a well-known concept in robotics. The workspace of a robot is the minimal space that contains all the reachable positions of the end-effector. In the case of the SPIDAR-H, the workspace is also an important concept. It is built from two spaces:

Reachable space: as defined by Tarrin et al. (Tarrin, 2003), “the reachable space gathers every point users can reach with hands”.

Haptic space: gathers every point where the system can produce a force in any direction.

The global workspace is defined by the intersection of these two spaces. Figures 2.a and 2.b show the reachable space; its volume coincides with the frame of the SPIDAR. Figures 2.c and 2.d show the haptic space. In (Tarrin, 2003), the authors consider that the manipulation space is the intersection of the right-hand and left-hand workspaces, i.e. the intersection of the four spaces shown in Figure 2. This hypothesis only holds when the two hand attachments are linked together (in position and orientation, for example, as in SPIDAR-G (Kim, 2002)). In the following, the hands are not linked, so we distinguish the spaces of the right and left hands.

Note that the haptic space of one hand is described by a tetrahedron whose vertices are the four motors of that hand. This shape is a theoretical workspace; in practice, the real workspace is smaller. Moreover, the faces of the tetrahedron are not included in the workspace: since a string can only pull, when the hand attachment lies on a face, no force can be produced in the directions pointing out of the tetrahedron through that face. More generally, the closer the hand attachment is to the centre of the tetrahedron, the more efficient the SPIDAR-H is (it can produce a large force in any direction).

In the following, we consider that the workspace is approximately the space shown in Figures 2.c and 2.d.
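As an illustration, the haptic-space test for one hand can be sketched in Python. This is a minimal sketch under our own assumptions: the haptic space is modelled as the open tetrahedron spanned by the four motors of that hand, and a point is accepted only if all four of its barycentric coordinates (ratios of signed sub-volumes) are strictly positive, which also rejects points lying on a face. The function names and the anchor coordinates (the cube layout of equations (1)-(4) with a hypothetical a = 1.25 m) are ours, not the paper’s.

```python
from typing import List, Tuple

Vec = Tuple[float, float, float]


def sub(p: Vec, q: Vec) -> Vec:
    """Component-wise difference p - q."""
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def signed_volume6(a: Vec, b: Vec, c: Vec, d: Vec) -> float:
    """Six times the signed volume of tetrahedron abcd (scalar triple product)."""
    u, v, w = sub(b, a), sub(c, a), sub(d, a)
    return (u[0] * (v[1] * w[2] - v[2] * w[1])
            - u[1] * (v[0] * w[2] - v[2] * w[0])
            + u[2] * (v[0] * w[1] - v[1] * w[0]))


def in_haptic_space(p: Vec, anchors: List[Vec], eps: float = 1e-12) -> bool:
    """True if p lies strictly inside the tetrahedron of the four motor anchors.

    Replacing anchor i by p and dividing by the full volume gives the i-th
    barycentric coordinate of p; p is inside iff all four are > 0. Points on
    a face (coordinate == 0) are rejected, since the faces are not part of
    the haptic space.
    """
    total = signed_volume6(*anchors)
    if abs(total) < eps:
        raise ValueError("the four anchors are coplanar")
    for i in range(4):
        replaced = list(anchors)
        replaced[i] = p
        if signed_volume6(*replaced) / total <= eps:
            return False
    return True


# Hypothetical layout: non-adjacent cube vertices, half edge a = 1.25 m (assumed).
a = 1.25
anchors_one_hand = [(-a, -a, -a), (-a, a, a), (a, -a, a), (a, a, -a)]
print(in_haptic_space((0.0, 0.0, 0.0), anchors_one_hand))  # True: centre of the frame
print(in_haptic_space((a, a, a), anchors_one_hand))        # False: corner without a motor
```

The barycentric form also quantifies the remark above: the smallest coordinate tells how close the hand attachment is to a face, i.e. to a direction in which force can no longer be produced.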

Figure 1: Framework of the SPIDAR-H (DC motor, pulley, encoder and strings)

Figure 2: Reachable and haptic spaces of the SPIDAR-H (a. right-hand reachable space, b. left-hand reachable space, c. right-hand haptic space, d. left-hand haptic space; the cube vertices are numbered 1 to 8)


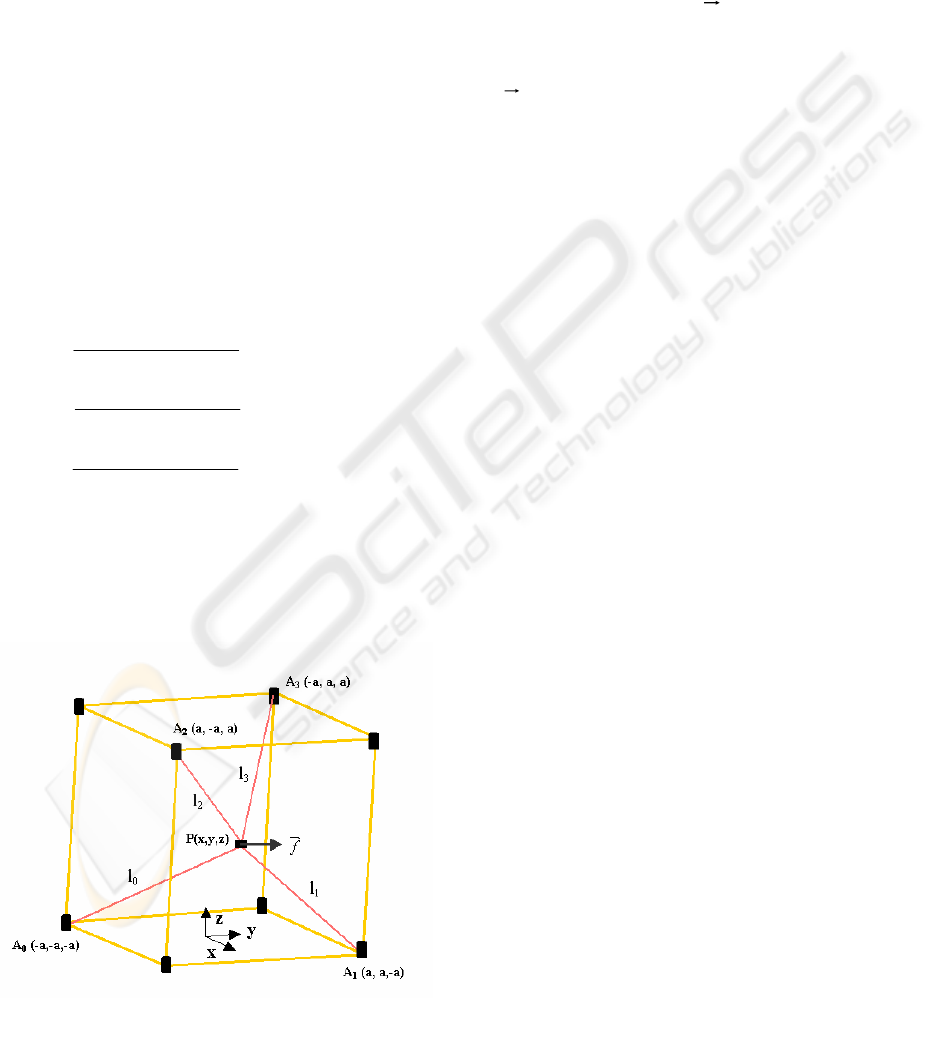

2.3 Position measurement

Let the coordinates of the hand attachment be P(x, y, z), which also represent the hand position, and let the length of the i-th string be $l_i$ (i = 0, ..., 3). To simplify the problem, let the four actuators $A_i$ (motor, pulley, encoder) be placed on four non-adjacent vertices of the cubic frame, as shown in Figure 3. Then P(x, y, z) must satisfy equations (1)-(4), where a is half the edge length of the cube.

After subtracting equations (1)-(4) pairwise and solving the resulting simultaneous linear equations, we obtain the position of the hand attachment (i.e. the hand) as equation (5):

The origin of the coordinate system is set at the centre of the frame. The position measurement range is [-1.25 m, +1.25 m] for each of x, y and z. The absolute static position measurement error is less than 1.5 cm within this range.
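Equation (5) is cheap to evaluate, which suits a real-time tracking loop. The subtraction step works because the squared coordinates cancel: for example, (1) minus (2) gives $l_0^2 - l_1^2 = 4a(y + z)$. The Python sketch below implements the forward model (1)-(4) and the inverse (5) and checks that they round-trip. The anchor layout follows equations (1)-(4); the value a = 1.25 m is our assumption (chosen to match the ±1.25 m measurement range), not a figure given in the paper, and the function names are hypothetical.

```python
import math

HALF_EDGE = 1.25  # a in metres: an assumed value, not taken from the paper

# Signs of the actuator coordinates implied by equations (1)-(4): A_i = a * sign_i.
ANCHOR_SIGNS = [(-1, -1, -1), (-1, 1, 1), (1, -1, 1), (1, 1, -1)]


def string_lengths(p, a=HALF_EDGE):
    """Forward model, equations (1)-(4): l_i = |P - A_i|."""
    return [math.dist(p, (sx * a, sy * a, sz * a)) for sx, sy, sz in ANCHOR_SIGNS]


def position_from_lengths(lengths, a=HALF_EDGE):
    """Inverse model, equation (5): hand position from the four string lengths."""
    q0, q1, q2, q3 = (length * length for length in lengths)
    x = (q0 + q1 - q2 - q3) / (8 * a)
    y = (q0 - q1 + q2 - q3) / (8 * a)
    z = (q0 - q1 - q2 + q3) / (8 * a)
    return (x, y, z)


p_true = (0.30, -0.50, 0.80)
p_est = position_from_lengths(string_lengths(p_true))
print([round(c, 6) for c in p_est])  # [0.3, -0.5, 0.8]
```

Differentiating (5) shows that an error $\delta l_i$ on one string moves each coordinate by about $l_i\,\delta l_i / (4a)$; at the centre of the frame ($l_i = a\sqrt{3}$), a 1 mm string error therefore gives roughly 0.43 mm per coordinate.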

2.4 Force control

SPIDAR-H uses the resultant of the string tensions to display force. Since the hand attachment is suspended by four strings, applying a given tension to each of them by means of the motors produces a resultant force at the position of the hand attachment, which is transmitted to and felt by the operator’s hand.

Let the resultant force be $\vec f$ and the unit vector of the tension of the i-th string be $\vec u_i$ (i = 0, 1, 2, 3). The resultant force is then $\vec f = \sum_{i=0}^{3} a_i \vec u_i$ ($a_i > 0$), where $a_i$ represents the tension value of each string attachment; the tensions must remain positive because a string can only pull. By controlling all of the $a_i$, a resultant force in any direction can be composed, as long as the hand attachment lies inside the haptic space.
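The relation $\vec f = \sum_{i=0}^{3} a_i \vec u_i$ does not determine the tensions uniquely: four strings act in three dimensions, so the valid $a_i$ form a one-parameter family, and the paper does not state how the SPIDAR-H controller picks one. As an illustration only, the Python sketch below uses a common minimum-tension scheme (our assumption, not necessarily the authors’ method): a particular solution is shifted along the null-space vector of the tension directions until the smallest tension equals a hypothetical pre-tension `A_MIN`. Inside the haptic space all components of that null-space vector share one sign, which is why any force direction can be composed there. The anchor layout and all names are hypothetical.

```python
import math

A_MIN = 0.5  # hypothetical pre-tension in newtons keeping every string taut


def unit(v):
    """Normalise a 3-vector."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def det3(c0, c1, c2):
    """Determinant of the 3x3 matrix with columns c0, c1, c2 (= c0 . (c1 x c2))."""
    cross = (c1[1] * c2[2] - c1[2] * c2[1],
             c1[2] * c2[0] - c1[0] * c2[2],
             c1[0] * c2[1] - c1[1] * c2[0])
    return sum(x * y for x, y in zip(c0, cross))


def tensions(p, anchors, f, a_min=A_MIN):
    """Tensions a_i >= a_min with sum(a_i * u_i) = f, u_i pointing from p to motor i."""
    u = [unit(tuple(m - q for m, q in zip(anchor, p))) for anchor in anchors]
    # Null-space vector of U = [u0 u1 u2 u3]: n_i = (-1)^i det(U without column i).
    n = [(-1) ** i * det3(*[u[j] for j in range(4) if j != i]) for i in range(4)]
    if all(ni < 0 for ni in n):
        n = [-ni for ni in n]
    if not all(ni > 0 for ni in n):
        raise ValueError("hand attachment outside the haptic space")
    # Particular solution with a_3 = 0, by Cramer's rule on columns u0, u1, u2.
    d = det3(u[0], u[1], u[2])
    a_p = [det3(f, u[1], u[2]) / d, det3(u[0], f, u[2]) / d,
           det3(u[0], u[1], f) / d, 0.0]
    # Smallest shift t along n that brings every tension up to at least a_min.
    t = max((a_min - ap) / ni for ap, ni in zip(a_p, n))
    return [ap + t * ni for ap, ni in zip(a_p, n)]


a = 1.25  # assumed half edge of the frame (hypothetical)
anchors = [(-a, -a, -a), (-a, a, a), (a, -a, a), (a, a, -a)]
print([round(t, 3) for t in tensions((0.0, 0.0, 0.0), anchors, (0.0, 0.0, 10.0))])
# [0.5, 9.16, 9.16, 0.5]
```

Keeping a small positive minimum tension, rather than allowing zero, prevents the strings from going slack, which would also corrupt the length-based position measurement.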

2.5 Calibration method

Like the workspace, calibration is a well-known concept in robotics. Calibration is a technique that identifies all the parameters of a robot, for example the length of the links between two axes. On real robots, this technique generally requires an external measurement device that provides the absolute position of the end-effector (coordinate measuring machines, theodolites). Unlike such robots, the SPIDAR-H can be calibrated without any external system, under the following hypotheses:

• The parameters to calibrate are the position

of each motor.

• The exact length of each string is known at

any time.

• The strings are long enough to reach any

motor.

• The junction of the strings is considered to be a point.

In order to complete the calibration, it is necessary to set the position and orientation of the original frame. We have no guarantee that the motors are placed exactly on the corners of the cube, so taking the cube as the original frame may be a wrong hypothesis. Let us define a new frame, independent from the cube. The origin of the frame is located on motor 4. Concerning the orientation of the frame, we arbitrarily fixed the following relationships:

• the v axis is given by the vector [motor 4 – motor 2], pointing from motor 4 towards motor 2,

• the v-w plane passes through motor 7.

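Figure 3: Position measurement and resultant force

$$
\begin{aligned}
l_0^2 &= (x+a)^2 + (y+a)^2 + (z+a)^2 \qquad (1)\\
l_1^2 &= (x+a)^2 + (y-a)^2 + (z-a)^2 \qquad (2)\\
l_2^2 &= (x-a)^2 + (y+a)^2 + (z-a)^2 \qquad (3)\\
l_3^2 &= (x-a)^2 + (y-a)^2 + (z+a)^2 \qquad (4)
\end{aligned}
$$

$$
x = \frac{l_0^2 + l_1^2 - l_2^2 - l_3^2}{8a}, \quad
y = \frac{l_0^2 - l_1^2 + l_2^2 - l_3^2}{8a}, \quad
z = \frac{l_0^2 - l_1^2 - l_2^2 + l_3^2}{8a} \qquad (5)
$$

$$
\vec f = \sum_{i=0}^{3} a_i \vec u_i \qquad (a_i > 0)
$$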

ICINCO 2005 - ROBOTICS AND AUTOMATION


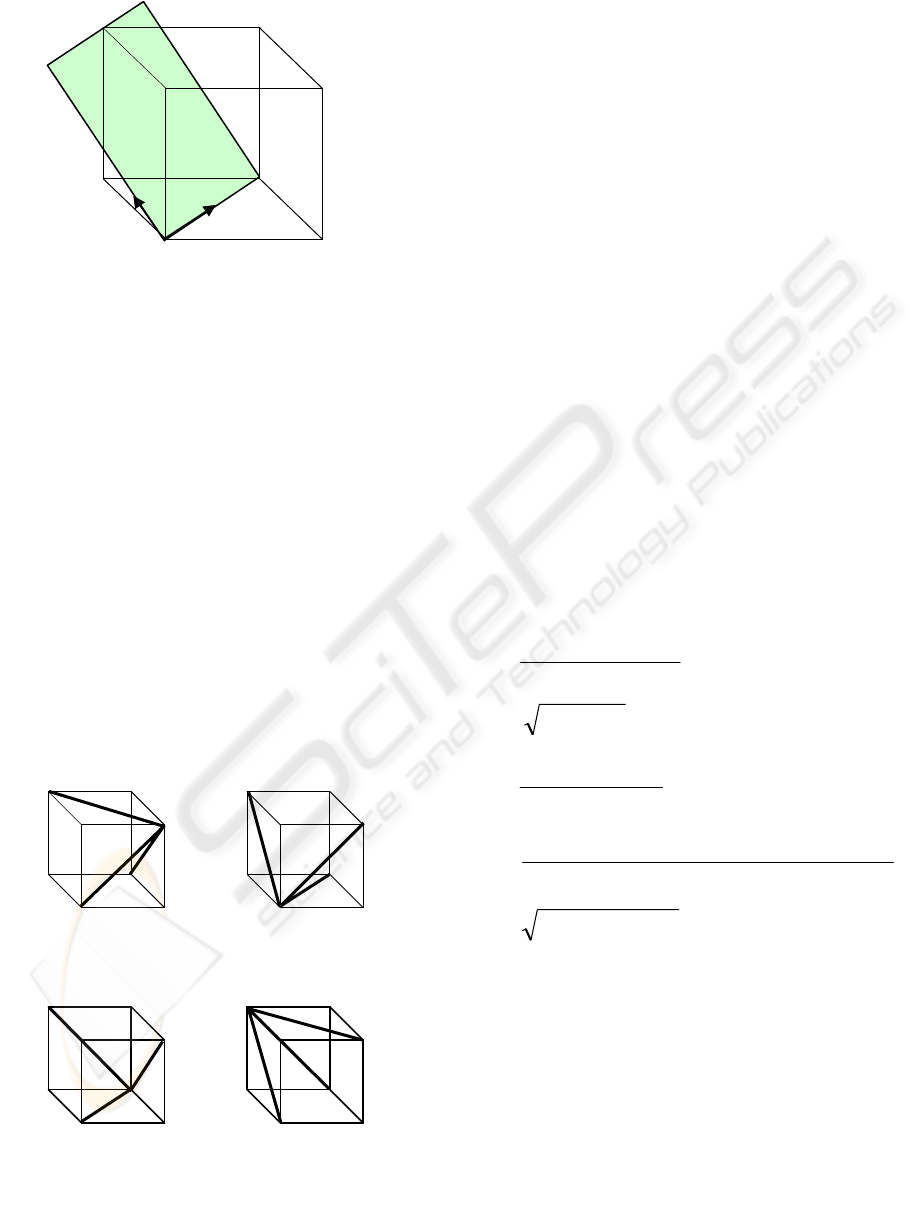

Figure 4 illustrates the position and orientation of the frame. According to the previous relationships, the position of each motor can be defined as follows:

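The first phase of calibration consists of placing the end-effector of the SPIDAR-H at particular positions called “exciting positions”: in each of them, the end-effector is brought onto one motor, so that the three other strings measure the distances between that motor and the others. Figure 5 shows the four exciting positions.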

Each position provides three data, the lengths of three strings:

• Position $A_2$ (a) gives $l_{45}$, $l_{25}$ and $l_{57}$,

• Position $A_0$ (b) gives $l_{42}$, $l_{47}$ and $l_{45}$,

• Position $A_1$ (c) gives $l_{42}$, $l_{25}$ and $l_{27}$,

• Position $A_3$ (d) gives $l_{47}$, $l_{27}$ and $l_{57}$.

where $l_{ij}$ is the distance between motor i and motor j. These lengths provide the six equations of the following system, where $v_2$, $v_7$, $w_7$, $u_5$, $v_5$ and $w_5$ are the six parameters to calibrate.

The system is solvable and provides the following

parameters:

These results show that the SPIDAR-H can be calibrated without an external system. The correctness of this demonstration was confirmed by simulations.

Note 1: if the position and orientation of any motor are known in an external frame, the position of each motor can easily be transferred into this external frame.

Note 2: if high accuracy is needed, least-squares methods can be used, since each length is measured at least twice (e.g. $l_{45}$ is given by positions a. and b.).

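Figure 4: Position and orientation of the original frame (motors 2, 4, 5 and 7; axes v and w)

$$
\text{Motor 4}: \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}, \quad
\text{Motor 2}: \begin{bmatrix} 0 \\ v_2 \\ 0 \end{bmatrix}, \quad
\text{Motor 7}: \begin{bmatrix} 0 \\ v_7 \\ w_7 \end{bmatrix}, \quad
\text{Motor 5}: \begin{bmatrix} u_5 \\ v_5 \\ w_5 \end{bmatrix}
$$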

Figure 5: Exciting positions (a), (b), (c) and (d), involving motors 2, 4, 5 and 7

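$$
\begin{cases}
l_{42}^2 = v_2^2\\
l_{47}^2 = v_7^2 + w_7^2\\
l_{45}^2 = u_5^2 + v_5^2 + w_5^2\\
l_{27}^2 = (v_7 - v_2)^2 + w_7^2\\
l_{25}^2 = u_5^2 + (v_5 - v_2)^2 + w_5^2\\
l_{57}^2 = u_5^2 + (v_5 - v_7)^2 + (w_5 - w_7)^2
\end{cases}
$$

$$
\begin{aligned}
v_2 &= l_{42}\\
v_7 &= \frac{l_{47}^2 - l_{27}^2 + v_2^2}{2 v_2}\\
w_7 &= \sqrt{l_{47}^2 - v_7^2}\\
v_5 &= \frac{l_{45}^2 - l_{25}^2 + v_2^2}{2 v_2}\\
w_5 &= \frac{l_{25}^2 - l_{57}^2 + (v_5 - v_7)^2 - (v_5 - v_2)^2 + w_7^2}{2 w_7}\\
u_5 &= \sqrt{l_{45}^2 - v_5^2 - w_5^2}
\end{aligned}
$$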
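The closed-form solution can be checked numerically. The Python sketch below evaluates the six parameters in the order given above (each formula only uses parameters computed before it) and verifies them against a hypothetical set of motor positions. Taking the positive square roots for $w_7$ and $u_5$ is our assumption; it amounts to choosing the direction of the w and u axes. The function name and the ground-truth values are illustrative only.

```python
import math


def calibrate(l42, l47, l45, l27, l25, l57):
    """Motor positions in the frame of Figure 4 from the six measured distances."""
    v2 = l42
    v7 = (l47**2 - l27**2 + v2**2) / (2 * v2)
    w7 = math.sqrt(l47**2 - v7**2)          # positive root: fixes the w direction
    v5 = (l45**2 - l25**2 + v2**2) / (2 * v2)
    w5 = (l25**2 - l57**2 + (v5 - v7)**2 - (v5 - v2)**2 + w7**2) / (2 * w7)
    u5 = math.sqrt(l45**2 - v5**2 - w5**2)  # positive root: fixes the u direction
    return {
        "motor4": (0.0, 0.0, 0.0),
        "motor2": (0.0, v2, 0.0),
        "motor7": (0.0, v7, w7),
        "motor5": (u5, v5, w5),
    }


# Hypothetical ground truth (metres), expressed in the frame of Figure 4.
truth = {
    "motor4": (0.0, 0.0, 0.0),
    "motor2": (0.0, 2.5, 0.0),
    "motor7": (0.0, 0.3, 2.4),
    "motor5": (2.5, 1.2, 1.1),
}


def dist(i, j):
    """Distance l_ij between motor i and motor j of the ground truth."""
    return math.dist(truth[f"motor{i}"], truth[f"motor{j}"])


# The six distances gathered at the four exciting positions of Figure 5.
est = calibrate(dist(4, 2), dist(4, 7), dist(4, 5), dist(2, 7), dist(2, 5), dist(5, 7))
for name, pos in est.items():
    print(name, [round(c, 6) for c in pos])
```

With noisy lengths the radicands of $w_7$ and $u_5$ may become slightly negative, which is one more reason to prefer the least-squares refinement of Note 2 when high accuracy is needed.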


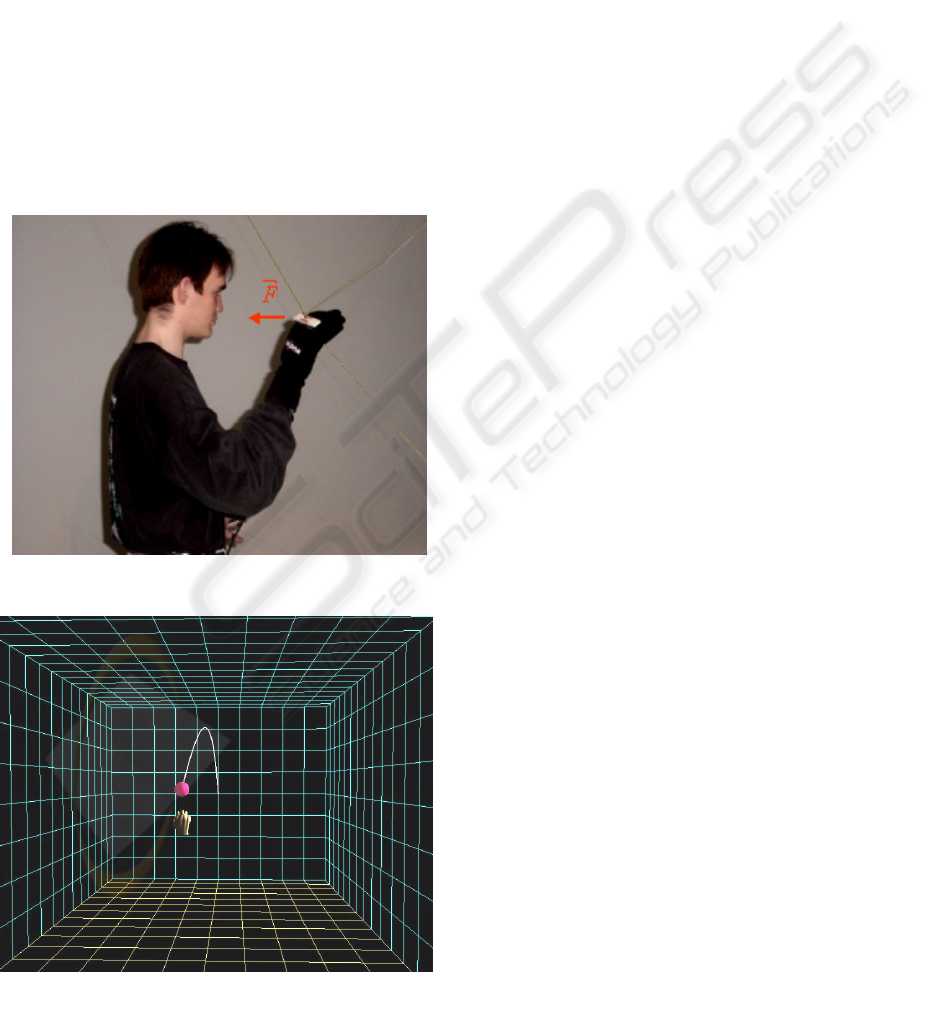

3 VIRTUAL CATCH BALL

The virtual catch ball application was developed to evaluate human performance in tasks involving reaching and grasping movements towards flying virtual objects. Such an experimental paradigm has been used in many studies (Bideau, 2003; Zaal, 2003; Richard, 1994). However, few of these involve haptic feedback (Richard, 1998; Jeong, 2004). In our application, the user catches a flying ball using a data glove (NoDNA) integrated in the SPIDAR interface, and can therefore feel the ball hitting his or her virtual hand. A user catching a virtual ball is shown in Figure 6, and a snapshot of the ball-catching environment is shown in Figure 7.

The catch ball simulation was developed in C/C++, using the OpenGL graphics library (Woo, 1999) as well as some video game tools and tutorials (Astle, 2001).

4 CONCLUSION AND FUTURE WORK

We have presented a human-scale multi-modal virtual environment with haptic feedback. The user interacts with virtual worlds using a large-scale bimanual haptic interface called the SPIDAR-H. This interface is used to track the user’s hand movements and to display various aspects of force feedback associated mainly with contact, weight, and inertia. In order to increase the accuracy of the system, a calibration method has been proposed. A virtual reality application was developed in which the user reaches for and grasps a flying ball. Stereoscopic viewing and auditory feedback are provided to improve the user’s immersion.

In the near future, human performance evaluation

involving ball catching tasks will be carried out.

Moreover, the developed VE will serve as an

experimental test-bed for the evaluation of different

interaction techniques.

Figure 6: A user catching a virtual flying ball

Figure 7: Snapshot of the ball-catching world

REFERENCES

G. Burdea, Ph. Coiffet, and P. Richard, "Integration of multi-modal I/Os for Virtual Environments", International Journal of Human-Computer Interaction (IJHCI), Special Issue on Human-Virtual Environment Interaction, March, (1), pp. 5-24, 1996.

P. Richard and Ph. Coiffet, "Dextrous haptic interaction in Virtual Environments: human performance evaluation", Proceedings of the 8th IEEE International Workshop on Robot and Human Interaction, October 27-29, Pisa, Italy, pp. 315-320, 1999.

K. Bohm, K. Hubner, and W. Vaanaen, "GIVEN: Gesture driven Interactions in Virtual Environments. A Toolkit Approach to 3D Interactions", Proceedings of Interfaces to Real and Virtual Worlds, Montpellier, France, March, pp. 243-254, 1992.

W. Chapin and S. Foster, "Virtual Environment Display for a 3D Audio Room Simulation", Proceedings of SPIE Stereoscopic Display and Applications, Vol. 12, 1992.

G. Burdea, D. Gomez, and N. Langrana, "Distributed Virtual Force Feedback", IEEE Workshop on Force Display in Virtual Environments and its Application to Robotic Teleoperation, Atlanta, May 2, 1993.

H. Sundgren, F. Winquist, and I. Lundstrom, "Artificial Olfactory System Based on Field Effect Devices", Proceedings of Interfaces to Real and Virtual Worlds, Montpellier, France, pp. 463-472, March 1992.

J-P. Papin, M. Bouallagui, A. Ouali, P. Richard, A. Tijou, P. Poisson, and W. Bartoli, "DIODE: Smell-diffusion in real and virtual environments", 5th International Conference on Virtual Reality (VRIC 03), Laval, France, pp. 113-117, May 14-17, 2003.

D. A. Bowman, E. Kruijff, J. J. LaViola, and I. Poupyrev, 3D User Interfaces: Theory and Practice, Addison Wesley / Pearson Education, Summer 2004.

P. Richard, G. Birebent, G. Burdea, D. Gomez, N. Langrana, and Ph. Coiffet, "Effect of frame rate and force feedback on virtual objects manipulation", Presence - Teleoperators and Virtual Environments, MIT Press, 15, pp. 95-108, 1996.

L. Bouguila, M. Ishii, and M. Sato, "A Large Workspace Haptic Device For Human-Scale Virtual Environments", Workshop on Haptic Human-Computer Interaction, Scotland, 2000.

M. Sato, "Evolution of SPIDAR", 3rd International Virtual Reality Conference (VRIC 2001), Laval, France, May 2001.

N. Tarrin, S. Coquillart, S. Hasegawa, L. Bouguila, and M. Sato, "The stringed haptic workbench: a new haptic workbench solution", Eurographics 2003, P. Brunet and D. Fellner, vol. 22, Number 3.

S. Kim, S. Hasegawa, Y. Koike, and M. Sato, "Tension based 7-DOF force feedback device: SPIDAR-G", Proc. of the IEEE Virtual Reality 2002.

B. Bideau, R. Kulpa, S. Ménardais, L. Fradet, F. Multon, P. Delamarche, and B. Arnaldi, "Real handball goalkeeper vs. virtual handball thrower", Presence: Teleoperators and Virtual Environments, 12:4 (August 2003), Fourth International Workshop on Presence, pp. 411-421.

F. T. J. M. Zaal and C. F. Michaels, "The Information for Catching Fly Balls: Judging and Intercepting Virtual Balls in a CAVE", Journal of Experimental Psychology: Human Perception and Performance, 29:3, pp. 537-555, 2003.

P. Richard, G. Burdea, and Ph. Coiffet, "Effect of graphics update rate on human performance in a dynamic Virtual World", Proceedings of the Third International Conference on Automation Technology (Automation'94), Taipei, Taiwan, July, Vol. 6, pp. 1-5, 1994.

P. Richard, Ph. Hareux, G. Burdea, and Ph. Coiffet, "Effect of stereoscopic viewing on human tracking performance in dynamic virtual environments", Proceedings of the First International Conference on Virtual Worlds (Virtual Worlds'98), International Institute of Multimedia, Paris, France, July 1-3, LNAI 1434, pp. 97-106, 1998.

S. Jeong, H. Naoki, and M. Sato, "A Novel Interaction System with Force Feedback between Real and Virtual Human", ACM SIGCHI International Conference on Advances in Computer Entertainment Technology (ACE 2004), Singapore, June 2004.

NoDNA, http://www.nodna.com

M. Woo, J. Neider, T. Davis, and D. Shreiner, "OpenGL(R) Programming Guide: The Official Guide to Learning OpenGL, Version 1.2" (3rd Edition), OpenGL Architecture Review Board, Addison-Wesley Pub Co, ISBN: 0201604582, 1999.

D. Astle, K. Hawkins, and A. LaMothe, "OpenGL Game Programming", Game Development Series, Premier Press, ISBN: 0761533303, 2001.
