A SYSTEM FOR AUTOMATIC EVALUATION OF PROGRAMS FOR CORRECTNESS AND PERFORMANCE

Amit Kumar Mandal, Chittaranjan Mandal

School of Information Technology

IIT Kharagpur, WB 721302, India

Chris Reade

Kingston Business School

Kingston University

Keywords:

Automatic Evaluation, XML Schema, Program Testing, Course Management System.

Abstract:

This paper describes a model and implementation of a system for automatically testing, evaluating, grading and providing critical feedback on submitted programming assignments. Complete automation of the evaluation process is addressed, with proper attention to monitoring students' progress and performing a structured level analysis. The tool provides on-line support to both evaluators and students, with a level of granularity, flexibility and consistency that is difficult or impossible to achieve manually.

1 INTRODUCTION

The paper presents both the internal working and the external interface of an automated tool that assists evaluators/tutors by automatically evaluating, marking and providing critical feedback on programming assignments submitted by students. The tool considerably reduces the evaluator's manual work. As a result, the evaluator saves time that can be applied to other productive work, such as setting up intelligent and useful assignments for the students and spending more time with them to clear up misunderstandings and other problems.

The problem of automatic and semi-automatic evaluation has been highlighted several times in the past, and a considerable amount of innovative work has been proposed to overcome it. In this paper we discuss the key approaches in the literature. Although our approach is currently limited to evaluating C programs, the system has been designed and implemented so that it takes almost all of the burden off the shoulders of students and tutors. The tool can be controlled and used through the Internet: the evaluator and the students can be in any part of the world and can communicate freely through the system. Hence, the system helps make distance learning a reality, which is a crucial improvement for any large educational institute or university, because such institutions may have extension centers anywhere in the world. The same tutors can provide services to all the students in every such extension center.

2 MOTIVATION

The scenario that motivated us to build such a system was the large student cohorts found in almost all big educational institutions and universities across the world. In almost all big engineering institutes or universities, the undergraduate intake is around 600-800 students. As part of their curriculum at the place where this tool was developed, students attend labs and courses, and in every lab each student has to submit about 9-12 assignments and take up to three lab tests. That amounts to nearly 10000 submissions per semester. Even if the load is distributed among 20 evaluators, each evaluator is required to test almost 500 assignments. Without automation, the evaluators would be busy most of the time with testing and grading, at the expense of time spent with the students and on setting up useful assignments for them.

3 RELATED WORK

A variety of noteworthy systems have been developed to address the problem of automatic and semi-automatic evaluation of programming assignments. Some of the early systems include TRY (Reek, 1989) and ASSYST (Jackson and M., 1997). Scheme-robo (Saikkonen et al., 2001) is an automatic assessment system for programming exercises written in the Scheme programming language; it takes as input a solution to an exercise and checks the correctness of functions by comparing their return values against the model solution. The automatic evaluation systems being developed nowadays have a web interface, so that they can be accessed universally from any platform. Such systems include GAME (Blumenstein et al., 2004), SUBMIT (Pisan et al., 2003) and (Juedes, 2003). Other systems such as (Baker et al., 1999), (Pisan et al., 2003) and (Juedes, 2003) provide mechanisms for giving detailed and rapid feedback to the student. Systems such as (Luck and Joy, 1999) and (Benford et al., 1993) are integrated with a course management system in order to manage files and records better.

Kumar Mandal A., Mandal C. and Reade C. (2006). A SYSTEM FOR AUTOMATIC EVALUATION OF PROGRAMS FOR CORRECTNESS AND PERFORMANCE. In Proceedings of WEBIST 2006 - Second International Conference on Web Information Systems and Technologies - Society, e-Business and e-Government / e-Learning, pages 196-203. DOI: 10.5220/0001251601960203. Copyright © SciTePress

Most of the early systems were dedicated to solving a particular problem. For example, some systems are concerned with grading and thus turn their attention away from the quality of testing. Others are concerned with providing formative feedback and lose sight of grading. We are concerned with addressing all aspects of evaluation, whether testing, grading or feedback. The whole design and implementation of our system was focused on making things easier for evaluators as well as students, without compromising the quality of the testing and grading being done. Our first aim was to test the programs along all possible dimensions, i.e. testing on random inputs, testing on user-defined inputs, testing execution time as well as space complexity, and performing a thorough style assessment of the programs. Secondly, much attention was given to security because, as the system was to be used by first-year undergraduate students with limited programming skills, they might unknowingly cause harm to the system. Our third focus was grading, which should not impose a crude classification such as zero or full marks; efforts have been made to make the grading process simulate the manual grading process as closely as possible. Fourthly, the comments should not be confined to a general list of errors that any student is likely to commit; all comments should be specific to a particular assignment. As already mentioned, the system currently supports only the C language, so during implementation the fifth, and foremost, focus was to make the model and design as general as possible, so that the system could easily be upgraded to support other popular languages such as C++ and Java.

4 SYSTEM OVERVIEW

4.1 Testing Approach

Black Box testing, Grey Box testing and White Box testing are the choices available in the literature for testing a program. In Black Box testing, a submission is treated as a single entity and only the overall output of the program is tested. In Grey Box testing, the final output of each component/function is tested. White Box testing allows the structure and programming logic, as well as the behavior, to be evaluated. In an environment where students are learning to program, testing only the conformance of the final output is not workable, because conformance to an overall input/output requirement is particularly hard for them to achieve; evaluations that relied on it would exclude constructive evaluation for the majority of students. Grey Box approaches involve exercising individual functions within the student's programs and are, unfortunately, language sensitive. Given the pitfalls of these two strategies, we chose the White Box approach, which is more general than the Black Box approach. White Box testing involves examining the intermediate results generated by the functions; therefore, whether the program has been written in a particular way, follows a particular algorithm and uses certain data structures can be ascertained with greater confidence.

4.2 High Level System Architecture

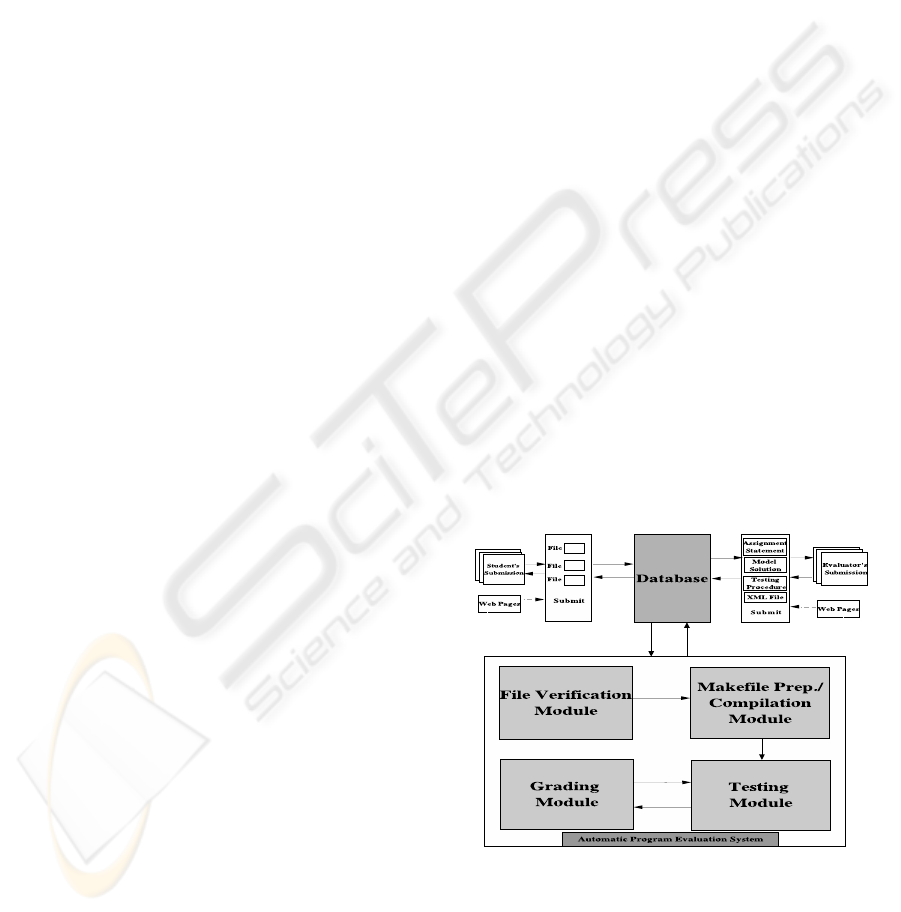

Figure 1: High Level System Architecture.

Fig.1 shows the high-level system architecture. The whole process of automatic evaluation begins with the evaluator's submission of the assignment descriptor, a model solution of the problem stated in the assignment descriptor, the testing procedure and an XML file. After the evaluator has finished the submission for a particular assignment, the system checks the validity of the XML file against an XML Schema. If the XML validation fails, the system halts any further processing of the file and the errors are returned to the evaluator, who has to correct them and resubmit the files. If the XML file validates against the XML Schema, the assignment description becomes available for students to download or view and subsequently submit their solutions.

These submissions are stored in a database. The system accepts submissions only if they are made within the valid dates specified by the evaluator. The next criterion a submission must pass to be complete is that the proper file names, as specified by the evaluator in the assignment description, are used. These checks are performed by the File Verification module. Once it has finished, the next module to come into the picture is the Makefile Prep/Compilation module, whose task is to prepare a makefile and then use it to compile the code. This module generates feedback based on the result of the compilation, i.e. if there are any compilation errors, the system rejects the submission and returns the compilation errors to the student. Thus a submission is complete only if it passes both the file verification module and the compilation module. After the submissions are complete, the next module executed is the testing module, which includes both dynamic and static testing. The dynamic testing module accepts students' submissions as input and generates random numbers using the random number generator module¹ (not shown in the figure). It then uses the testing procedures provided by the evaluator to test a program on the randomly generated inputs. Sometimes it is not enough to test the program on randomly generated inputs (e.g. when the test fails only for some boundary conditions): with randomly generated inputs we cannot be sure that the program undergoes the specific boundary tests on which it may fail.

To overcome this drawback, facilities have been provided by which the evaluator can specify his/her own test cases. Evaluators are free to specify these user-defined inputs either directly in the XML file or, if the number of inputs is significant, in a text file. This is an optional facility that the evaluator may or may not use, depending on the requirements of the assignment. If the program passes the tests on both random and user-defined inputs, the next phase of testing checks its execution time and space usage. During this phase, the execution time and space usage of the student's program are compared against those of the model solution submitted by the evaluator: both programs are run on a large number of inputs, and their execution times are compared and analyzed to determine whether the student's execution time is acceptable. If the test for space and time complexity goes well, the next phase is static analysis, or style analysis. Static testing involves measuring characteristics of the program such as the average number of characters per line, the percentage of blank lines, the percentage of comments, the total program length, the total number of conditional statements, the total number of goto, continue and break statements, the total number of looping statements, etc. All these characteristics are measured and compared with the model values specified by the evaluator in the XML file. The Testing and Grading modules work hand in hand, as testing and grading are done simultaneously. For example, once testing a program on random and user-defined inputs is over, the grading for that part is done at that moment, without waiting until all testing is complete. If at any point during testing the program is rejected or aborted, the grading done up to that point is discarded (but the feedback is not).

¹ This module generates the random numbers required for testing the programs.

Security is a non-trivial concern in our system because automatic evaluation almost invariably involves executing potentially unsafe programs. The system is designed under the assumption that programs may be unsafe, and it executes them within a 'sandbox' using regular system restrictions.

5 AN EXAMPLE

Let us start with an example that is very common in a data structures course: the Mergesort program. As a C program typically consists of a main function and a number of other functions performing different activities, we have decided to break the Mergesort program down into two functions: (1) Mergesort function - this function performs the sorting by recursively calling itself and the merge function; (2) Merge function - this function accepts two sorted arrays as input and merges them into a third array, which is also sorted. As a White Box testing strategy is followed, testing and awarding marks on the correct output generated by the program would be of no use, because this would not distinguish between different sorting methods (Quick sort, Selection sort, Heap sort, Insertion sort etc.).

5.1 Our Approach

Our approach is to test the intermediate results that the

function is generating, instead of a function final out-

put or the program final output. This section explains

one approach by which the mergesort program can be

tested. Our aim is to test that the student has writ-

ten the mergesort and the merge function correctly.

Our strategy is to check each step in the mergesort

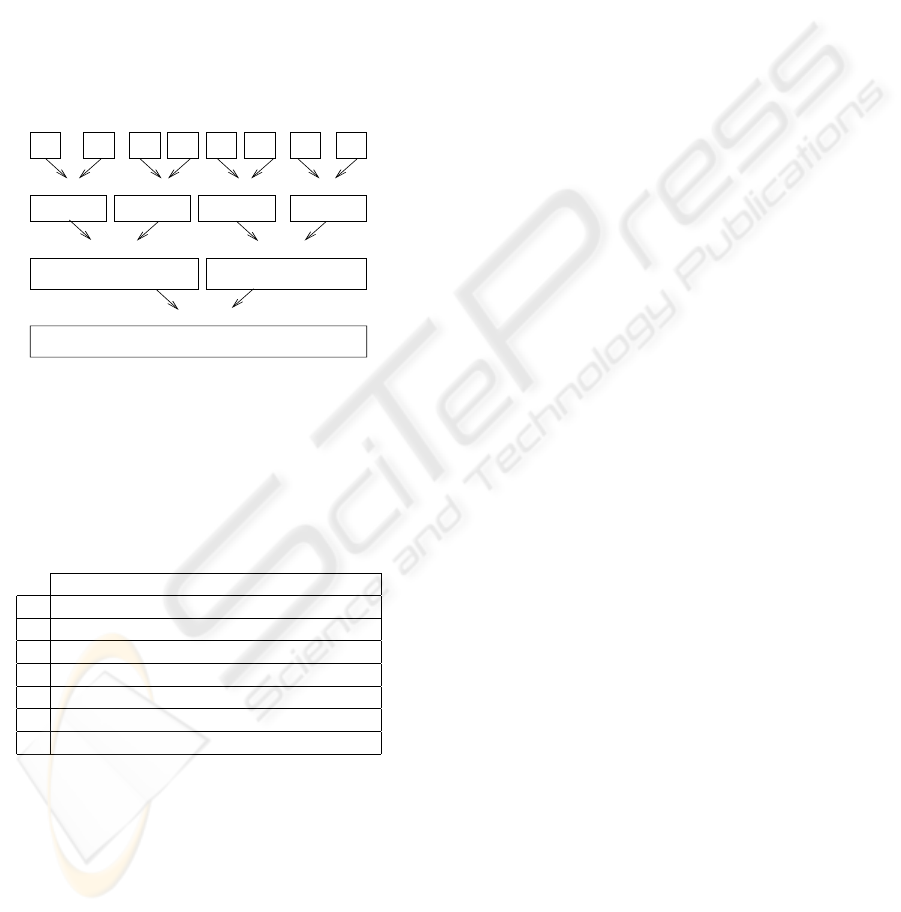

program. Fig.2 explains the general working of the

mergesort program. Our approach is to catch data at

each step

2 4 23 42 1 9 12 4

5

2 4 23 42 9 45 1 2

4 2 23 42 9 45 12 1

1 2 4 9 12 23 42 45

mergge #1 mergge #2

mergge #3

mergge #4 mergge #5

mergge #6

mergge #7

Figure 2: Working Principle of Mergesort program.

(i.e. merge#1 to merge#7 of the example). This

can be done by using an extra two dimensional array

of size (n) * (n-1). The idea is to transfer the array

contents to this two dimensional array at the end of

the merge function.

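| Row | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|-----|---|---|---|---|---|---|---|---|
| 0 | 2 | 4 | 23 | 42 | 9 | 45 | 12 | 1 |
| 1 | 2 | 4 | 23 | 42 | 9 | 45 | 12 | 1 |
| 2 | 2 | 4 | 23 | 42 | 9 | 45 | 12 | 1 |
| 3 | 2 | 4 | 23 | 42 | 9 | 45 | 12 | 1 |
| 4 | 2 | 4 | 23 | 42 | 9 | 45 | 1 | 12 |
| 5 | 2 | 4 | 23 | 42 | 1 | 9 | 12 | 45 |
| 6 | 1 | 2 | 4 | 9 | 12 | 23 | 42 | 45 |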

Figure 3: Two Dimensional Array.

After the student's program has executed, a two-dimensional array similar to Fig.3 will have been created; this array can be compared with the two-dimensional array formed by the model solution. If both arrays are exactly the same, the student is awarded marks; if they differ, the action taken depends on what the evaluator has specified in the XML file. As mentioned previously, the array contents need to be transferred to the two-dimensional array at the end of the merge function. This operation is performed by the function shown in Fig.4.

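    /* depth: global count of rows filled so far; assumed to be defined
       (and reset to 0) by the supplied main program */
    extern int depth;

    /* Copy the current contents of a[0..size) into row `depth` of the
       row-major two-dimensional array b, then move on to the next row. */
    void transfer(int *a, int size, int *b)
    {
        int i;
        for (i = 0; i < size; i++)
            *(b + depth * size + i) = *(a + i);
        depth = depth + 1;
    }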

Figure 4: Auxiliary Transfer Function.

5.2 Assignment Descriptor for the Mergesort Program

The assignment descriptor is one of the submissions crucial to the successful completion of the whole auto-evaluation process. If the assignment descriptor is not set up correctly, it will be difficult for students to understand the problem statement properly, and most of them will end up with a faulty submission. We have considered some general-purpose properties that an assignment descriptor should have: (1) simple, easy-to-understand language should be used, so that students can easily understand the problem statement; (2) the prototypes of the functions to be submitted by the student must be specified clearly in the descriptor; (3) the necessary makefile and auxiliary functions (for example, the transfer function shown in Fig.4) should be supplied as part of the assignment descriptor, to make it easier for the student to reach the submission stage.

5.3 Evaluator’s Interaction

The automatic evaluation process cannot be accomplished without the support of the evaluator, who needs to communicate a large number of inputs to the system. Since the number of inputs is large, a web interface is not a good way to accept the values, because it is cumbersome both for the evaluator to enter them and for the system to accept and manage the data properly. Our idea is that the evaluator provides the inputs in a single XML file, using predefined XML tags and attributes. The XML is used to specify the following information: (1) the names of the files to be submitted by the students; (2) how to generate the test cases; (3) how to generate the makefile; (4) the marks distribution for each function to be tested; (5) the inputs necessary to carry out static analysis of the program.


Along with the XML file, the evaluator is also required to submit the model solution for the problem, the testing procedure and the assignment descriptor. The XML file is crucial because it controls the working of the system: if the XML file is wrong, the whole automatic evaluation process becomes unstable. It is therefore necessary to ensure that the XML file is correct, which can be done, to some extent, by validating it against the XML Schema.² Fig.5 shows only a part of the main XML file that is submitted; this portion is used by the module that checks for proper file submissions. Fig.6 shows the part of the XML Schema that validates the XML shown in Fig.5.

    <source_files>
      <file name="main_mergesort.c">
        <text>File containing the main function</text>
      </file>
      <file name="merge.c">
        <text>File containing the merge function</text>
      </file>
      <file name="mergesort.c">
        <text>File containing the mergesort function</text>
      </file>
    </source_files>

Figure 5: XML Spec. for checking file submission.

    <xs:element name="source_files">
      <xs:complexType>
        <xs:sequence>
          <xs:element name="file" maxOccurs="unbounded">
            <xs:complexType>
              <xs:sequence>
                <xs:element name="text" minOccurs="0">
                  <xs:simpleType>
                    <xs:restriction base="xs:string">
                      <xs:minLength value="0"/>
                      <xs:maxLength value="75"/>
                    </xs:restriction>
                  </xs:simpleType>
                </xs:element>
              </xs:sequence>
              <xs:attribute name="name" type="xs:string">
              </xs:attribute>
            </xs:complexType>
          </xs:element>
        </xs:sequence>
      </xs:complexType>
    </xs:element>

Figure 6: XML Schema validating XML in Fig.5.

² The purpose of the XML Schema is to define the legal building blocks of an XML document. An XML Schema can be used to define the elements that can appear in a document, the attributes that can appear in a document, the data types of elements and attributes, etc.

5.4 Input Generation

The Automatic Program Evaluation System is a sophisticated tool which evaluates programs using the following criteria:

• Correctness on dynamically generated random numbers

• Correctness on user-defined inputs

• Correctness with respect to time and space complexity

• Style assessment of the programs

• Number of looping statements

• Number of conditional statements.

Initially the programs are tested on randomly generated inputs. For effective testing we cannot rely on fixed data, because fixed data is vulnerable to replay attacks. Evaluators have the option of writing their own routines in the testing procedures to generate inputs or, alternatively, of using the assistance the system provides for generating inputs. The options currently supported are as follows:

• random integers: (array / single), (unsorted / sorted ascending / sorted descending), (positive / negative / mixed)

• random floats: (array / single), (unsorted / sorted ascending / sorted descending), (positive / negative / mixed)

• strings: (array / single), (fixed length / variable length)

The evaluator expresses in the XML file his/her choice of the random values on which the programming assignments are to be tested. Fig.7 shows an example of the XML statements used to generate input for the mergesort program.

    <testing>
      <generation iterations="100">
        <input inputvar="a" vartype="array" type="float" range="positive"
               min="10" max="5000" sequence="ascend">
          <arraysize>50</arraysize>
        </input>
      </generation>
      <user_specified source="included">
        <input values="6,45,67,32,69,2,4"></input>
        <input values="5,89,39,95,79,7"></input>
      </user_specified>
    </testing>

Figure 7: XML Specification for Input Generation.


The evaluator/tutor specifies the inputs in the testing element. The generation element specifies the random inputs to be generated. It has a single attribute, iterations, which specifies the number of times the program is to be tested on random inputs, and any number of input elements can be placed between its opening and closing tags. The input element offers several attributes to specify the type of random value to be generated. The vartype attribute specifies whether the randomly generated value should be an array or a single value. The min and max attributes can be used to generate the inputs within a range; if they are not given explicitly in the XML, the system sets them to default values. The type attribute can be set to one of three values: integer, float or string. Another important attribute of the input element is range, which specifies whether positive, negative or mixed (a mixture of positive and negative) values are to be generated. The inputvar attribute names the variable to be generated; for example, in Fig.7 the randomly generated array is stored in a. Sometimes the evaluator may choose to generate the values in some order (ascending or descending); this is handled by the sequence attribute, which can be set to either of two values (ascend or descend). Every element specified between the user_specified tags is used to supply user-defined inputs to the program. The user_specified element has a single attribute, source, which can take one of two values (included or file). If it is set to included, the system looks for the user-defined inputs in the XML itself; if it is set to file, the system looks for them in a text file. Like the generation element, the user_specified element can contain any number of input elements, but here the input element has only one attribute, values. All user-defined inputs should be specified following the same sequence as that used for the generation of random inputs.

In Fig.7, an array (`vartype="array"`) of size 50 (`<arraysize>50</arraysize>`) containing ascending (`sequence="ascend"`), positive (`range="positive"`) float (`type="float"`) values in the range 10 (`min="10"`) to 5000 (`max="5000"`) is generated and supplied as input to the mergesort program. This procedure is iterated 100 (`iterations="100"`) times. Fig.7 also shows that two user-defined inputs have been provided by the evaluator; these are supplied as-is to the testing procedures.

5.5 Grading the Programs

The grading process is made as flexible and granular as possible. Suppose that, while being tested on user-defined inputs, a program fails to reproduce the output of the model solution. At this moment the XML is parsed to determine the choice made by the evaluator. The evaluator may have chosen to abort the test and award zero marks to the student or, alternatively, to move forward and test the program on the other criteria. If the evaluator has decided to test the program on the other criteria, the tutor has the flexibility to award full marks, zero marks or a fraction of the full marks allotted for testing on random and user-defined inputs. Fig.8 shows an example XML specification for grading the Mergesort program.

    <status marks="50" abort_on_fail="true">
      <item value="0" factor="1" fail="false"><text>Fine</text></item>
      <item value="1" factor="0.6" fail="false"><text>Improper</text></item>
      <item value="2" factor="0" fail="true"><text>Wrong</text></item>
    </status>

Figure 8: XML Specification for Grading.

Based on the result of the execution, a value is assigned to the program. For example, if the mergesort program produces correct results for both the user-defined and the random inputs, the value assigned is 0. If the result is incorrect for either the user-defined inputs or the random inputs, the value is 1. If the result is incorrect for both tests, the value is 2. Marking is then based on this value: if the value is 0, full marks are awarded for the test (`factor="1"`); if the value is 1, only 60% of the marks are awarded (`factor="0.6"`, i.e. 30 of the 50 marks in Fig.8); and if the value is 2, no marks are awarded, the program is given fail status (`fail="true"`) and the program execution is aborted (`abort_on_fail="true"`).

5.6 Commenting on Programs

The system generates feedback comments that are specific to a particular assignment. If, while the program is being tested on random and user-defined inputs, the test fails for a particular random input, the comment contains that specific random input, so the student can run his/her program on it and find out what is wrong. If the test fails for a user-defined input, the system never returns that input, because doing so may cause serious security flaws in the system. Instead, the system returns the error message specified by the tutor in the XML for that particular input.

5.7 Testing the Programs on Time and Space Complexity

Testing programs on their execution times and space

complexity is an important idea because different al-

gorithm often have different requirements of space

and time during execution. For example, bubble sort

is an in-place algorithm but inefficient, the mergesort

algorithm is efficient if extra space is used, the quick

sort algorithm is an in-place algorithm and usually

very efficient, the heap sort algorithm is an in-place

algorithm and efficient. This section shows the analy-

sis between two mergesort programs. One is submit-

ted by the student and the other is submitted by the

evaluator. These programs are different in the num-

ber of variables declared and the dynamic memory al-

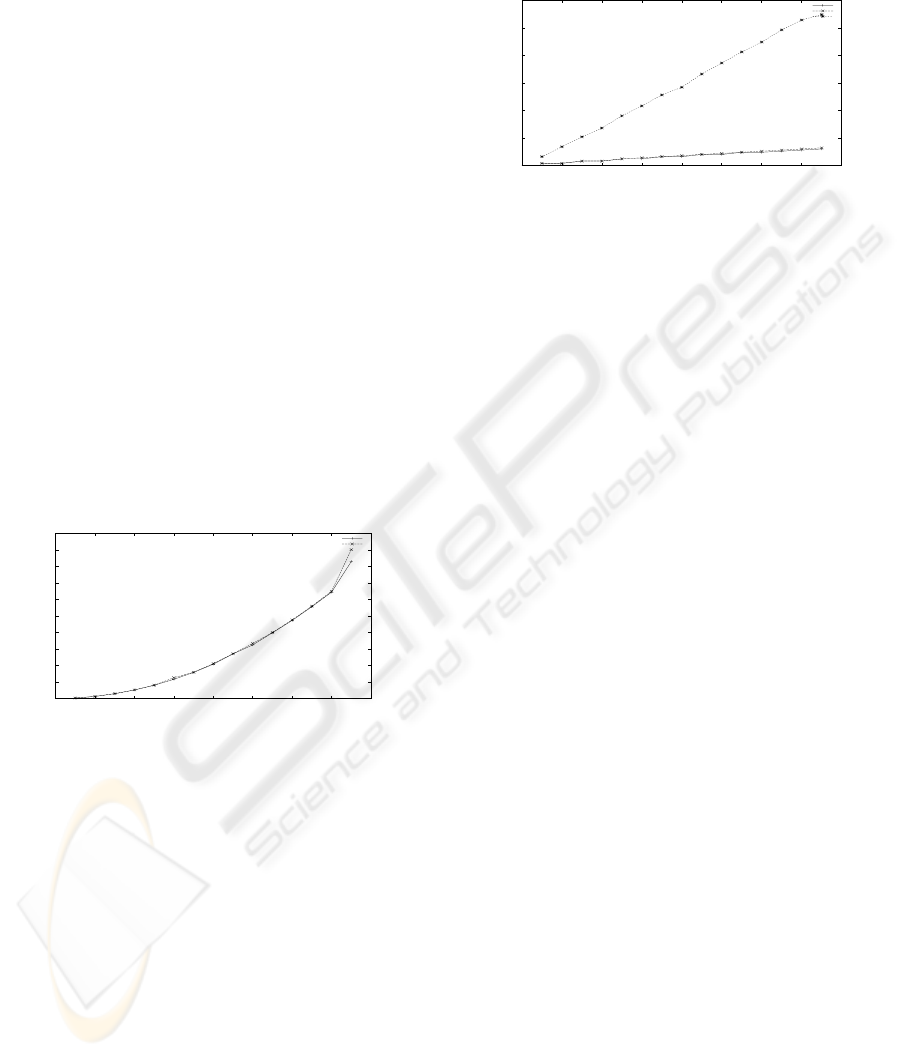

located during the execution of the programs. Fig.9

shows the execution time of the two mergesort pro-

grams.

[Plot: Execution Time Comparison. x-axis: Inputs (0 to 8000); y-axis: Time in milliseconds (0 to 2000); series: Model MergeSort Program, Student MergeSort Program.]

Figure 9: Execution times of Mergesort program.

As the execution times of the two programs are nearly the same, a plot such as Fig.9 cannot by itself decide whether the student's program is acceptable, so the programs need to be tested further. For a particular input, the system executes the model program N times and determines the acceptable range of execution time for the student's program. For the same input, the system then executes the student's program, measures its execution time and checks whether it falls within the range determined from the runs of the evaluator's mergesort program. This procedure is repeated for a large number of inputs, and after obtaining results for all of them the system decides whether the program is acceptable. The same procedure is followed to test the programs on space usage. Fig.10 shows the space usage of the two programs above, i.e. the model mergesort program and the student's mergesort program, together with the space requirement of another mergesort program in which the student has not used memory properly.

[Plot: Space Complexity Comparison. x-axis: Inputs (0 to 8000); y-axis: Space Complexity (KiloBytes, 300 to 900); series: Model MergeSort Program, Student MergeSort Program, Unhealthy Student MergeSort Program.]

Figure 10: Space Complexity of MergeSort programs.

Here the space required is the virtual memory resident set size, which is taken to be the stack size plus the data size plus the size of the binary file being executed. It is evident from Fig.10 that if the student uses memory properly, the memory required to execute the student's program does not differ drastically from that of the model solution. When the student does not use memory properly, for example by not freeing dynamically allocated memory, the program's memory requirement increases drastically compared with the model solution. Such problematic programs are easily caught by the same procedure as explained for testing execution times.

6 SECURITY

From the outset of the implementation we have been

concerned with making the system as secure as pos-

sible because we cannot rule out the possibility of a

malicious program, that may intentionally or uninten-

tionally cause damage to the system. Therefore, the

system has been implemented under the assumption

that the programs may be unsafe. The first aspect

that has been taken into consideration is that, pro-

grams may intentionally or unintentionally have calls

to delete arbitrary files. To override the above possi-

bility, a separate login named as ’test’ login has been

created. During testing, the system uses Secure Shell

(ssh) with RSA encryption-based authentication to

execute necessary commands in this login. Necessary

files are transferred to this login (’test’ login) securely

using the Secure Copy Protocol. As the test login do

not have access to other directories, the programs ex-

ecuting in this login will not be able to delete them.

WEBIST 2006 - E-LEARNING

To protect the files present in the test login, we have used the change file attributes command (chattr) on a Linux second extended (ext2) file system; ordinary users cannot modify these attributes. Once the immutable attribute has been set on a file, it cannot be deleted or renamed, no link can be created to it, and no data can be written to it. Only a superuser or a process possessing the CAP_LINUX_IMMUTABLE capability can set or clear this attribute.
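The chattr command manipulates these attributes through the `FS_IOC_GETFLAGS` and `FS_IOC_SETFLAGS` ioctls from `<linux/fs.h>`. The sketch below does the same from C; the function name `set_immutable()` is hypothetical, and the call succeeds only when run by the superuser or by a process holding CAP_LINUX_IMMUTABLE, on a file system that supports the flag, such as ext2.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/fs.h>   /* FS_IOC_GETFLAGS, FS_IOC_SETFLAGS, FS_IMMUTABLE_FL */

/* Set (on != 0) or clear (on == 0) the immutable attribute of path, as
   `chattr +i` and `chattr -i` do. Returns 0 on success or -1 on failure,
   with errno describing the failing call. */
int set_immutable(const char *path, int on)
{
    assert(path != NULL);
    int fd = open(path, O_RDONLY | O_NONBLOCK);
    if (fd < 0)
        return -1;
    int flags;
    int rc = ioctl(fd, FS_IOC_GETFLAGS, &flags);   /* read current flags */
    if (rc == 0) {
        if (on)
            flags |= FS_IMMUTABLE_FL;
        else
            flags &= ~FS_IMMUTABLE_FL;
        rc = ioctl(fd, FS_IOC_SETFLAGS, &flags);   /* needs CAP_LINUX_IMMUTABLE */
    }
    int saved_errno = errno;                       /* close() may overwrite errno */
    close(fd);
    errno = saved_errno;
    return rc == 0 ? 0 : -1;
}
```

An evaluator running with the required privilege would call `set_immutable(path, 1)` on each file that must survive in the test login before any student program is executed there.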

The next aspect under consideration is the presence of infinite loops in the student's program. To handle infinite loops, the system limits the resources available to the programs. For CPU time, both a soft limit and a hard limit are specified, in seconds. The soft limit is the value that the kernel enforces for the corresponding resource, and the hard limit acts as a ceiling for the soft limit. When the soft limit is reached, the kernel sends a SIGXCPU signal to the process; if the process continues to execute, SIGXCPU is sent again once per second of further CPU time until the hard limit is reached, at which point the kernel sends a SIGKILL signal. The setrlimit() system call has been used to limit both execution time and memory usage; the same call can also limit several other resources, such as the data and stack segment sizes and the number of processes per user ID. Care has been taken so that the student is not able to change the resources available to his/her program.
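The resource limits described above can be sketched as follows. The limits are set in the child after `fork()` and before `execvp()`, so they apply only to the program under test. The helper `run_limited()`, its outcome codes, and the use of `RLIMIT_AS` for the memory limit are assumptions; this section does not say which memory resource the system limits.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <signal.h>
#include <sys/resource.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Outcomes reported by the hypothetical run_limited() helper. */
enum run_outcome { RUN_OK, RUN_FAILED, RUN_CPU_LIMIT, RUN_OTHER_SIGNAL };

/* Run argv with a CPU-time limit (soft and hard, in seconds) and an
   address-space limit in bytes, and classify how the program ended. */
enum run_outcome run_limited(char *const argv[], rlim_t cpu_soft_s,
                             rlim_t cpu_hard_s, rlim_t mem_bytes)
{
    assert(argv != NULL && argv[0] != NULL && cpu_soft_s <= cpu_hard_s);
    pid_t pid = fork();
    if (pid < 0)
        return RUN_FAILED;
    if (pid == 0) {
        /* Set in the child only, just before exec, so the limits apply to
           the program under test and not to the evaluator. */
        struct rlimit cpu = { .rlim_cur = cpu_soft_s, .rlim_max = cpu_hard_s };
        struct rlimit mem = { .rlim_cur = mem_bytes, .rlim_max = mem_bytes };
        if (setrlimit(RLIMIT_CPU, &cpu) != 0 || setrlimit(RLIMIT_AS, &mem) != 0)
            _exit(126);
        execvp(argv[0], argv);
        _exit(127);                     /* reached only if exec failed */
    }
    int status;
    if (waitpid(pid, &status, 0) < 0)
        return RUN_FAILED;
    if (WIFSIGNALED(status)) {
        int sig = WTERMSIG(status);
        /* SIGXCPU (default action: terminate) arrives at the soft limit;
           SIGKILL at the hard limit if SIGXCPU was caught or ignored. */
        return (sig == SIGXCPU || sig == SIGKILL) ? RUN_CPU_LIMIT
                                                  : RUN_OTHER_SIGNAL;
    }
    return (WIFEXITED(status) && WEXITSTATUS(status) == 0) ? RUN_OK
                                                           : RUN_FAILED;
}
```

For example, `run_limited(argv, 1, 2, 1024UL * 1024 * 1024)` stops a looping program after about one second of CPU time. An unprivileged process may raise its soft limit only up to the hard limit and cannot raise the hard limit, so the hard limit set here remains a ceiling for the student's program.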

7 CONCLUSION

Our system has the potential to open up new horizons in the automatic evaluation of programming assignments by making the mechanism relatively simple to use. We have illustrated the flexibility of our system and explained the automatic evaluation process systematically with an example, ordering the phases as they occur in practice: deciding the testing approach, designing the assignment descriptor, the teacher's interaction with the system, and testing the programs. The tool covers each dimension of testing considered here: testing the programs on random inputs, on user-defined inputs, on time complexity and on space usage, as well as assessing the style of the student's program. Students benefit because they know the status of their program before making the final submission. Evaluators benefit because they do not have to spend hours grading programs. Achieving the full benefit of our approach, however, requires further research, more extensive use, and a study of the experience of the evaluators and students using the system. Currently the system works with a single programming language, C. This was done in the first instance to gain experience with the interactive nature of the system in a simple form. The implementation has been kept open so that, with small changes to the code, the system can be extended to test programming assignments in other popular languages such as C++ and Java.

REFERENCES

Baker, R. S., Boilen, M., Goodrich, M. T., Tamassia, R., and Stibel, B. A. (1999). Tester and visualizers for teaching data structures. In Proceedings of the ACM 30th SIGCSE Tech. Symposium on Computer Science Education, pages 261–265.

Benford, S. D., Burke, K. E., and Foxley, E. (1993). A system to teach programming in a quality controlled environment. The Software Quality Journal, pages 177–197.

Blumenstein, M., Green, S., Nguyen, A., and V., M. (2004). An experimental analysis of GAME: A generic automated marking environment. In Proceedings of the 9th annual SIGCSE conference on Innovation and technology in computer science education, pages 67–71.

Jackson, D. and M., U. (1997). Grading student programming using ASSYST. In Proceedings of 28th ACM SIGCSE Tech. Symposium on Computer Science Education, pages 335–339.

Juedes, D. W. (2003). Experiences in web based grading. In 33rd ASEE/IEEE Frontiers in Education Conference.

Luck, M. and Joy, M. (1999). A secure online submission system. Software-Practice and Experience, 29(8):721–740.

Pisan, Y., Richards, D., Sloane, A., Koncek, H., and Mitchell, S. (2003). Submit! A web-based system for automatic program critiquing. In Proceedings of the fifth Australasian Computing Education Conference (ACE 2003), pages 59–68.

Reek, K. A. (1989). The try system or how to avoid testing students programs. In Proceedings of SIGCSE, pages 112–116.

Saikkonen, R., Malmi, L., and Korhonen, A. (2001). Fully automatic assessment of programming exercises. In Proceedings of the 6th annual conference on Innovation and Technology in Computer Science Education (ITiCSE), pages 133–136.
