A SURVEY OF IMAGE-BASED RELIGHTING TECHNIQUES

Biswarup Choudhury

Indian Institute of Technology-Bombay

Mumbai

Sharat Chandran

Indian Institute of Technology-Bombay

Mumbai

Keywords:

Image based Relighting, Survey, Image based Techniques.

Abstract:

Image-based Relighting (IBRL) has recently attracted a lot of research interest for its ability to relight real

objects or scenes, from novel illuminations captured in natural/synthetic environments. Complex lighting ef-

fects such as subsurface scattering, interreflection, shadowing, mesostructural self-occlusion, refraction and

other relevant phenomena can be generated using IBRL. The main advantage of Image-based graphics is that

the rendering time is independent of scene complexity as the rendering is actually a process of manipulating

image pixels, instead of simulating light transport. The goal of this paper is to provide a complete and sys-

tematic overview of the research in Image-based Relighting. We observe that essentially all IBRL techniques

can be broadly classified into three categories, based on how the scene/illumination information is captured:

Reflectance function based, Basis function based, and Plenoptic function based. We discuss the characteristics

of each of these categories and their representative methods. We also discuss two relevant issues of IBRL: sampling density and the type of light source.

1 INTRODUCTION

Image-based Modeling and Rendering (IBMR) syn-

thesizes realistic images from pre-recorded images

without a complex and long rendering process as in

traditional geometry-based computer graphics. The

major drawback of IBMR is its inherent rigidity. Most IBMR techniques assume that the illumination condition is static. Obviously, this assumption cannot fully satisfy the needs of computer graphics, since illumination modification is a key operation in computer graphics.

The ability to control the illumination of the modeled scene enhances the three-dimensional illusion, which in turn improves viewers’ understanding of the environment (Fig. 1). If the illumination can be modified by relighting the images, instead of rendering the geometric models, the time for image synthesis will be independent of the scene complexity. This also saves the artist/designer an enormous amount of time in fine-tuning the illumination conditions to achieve realistic atmospheres. Applications range from global illumination and lighting design to augmented and mixed reality, where real and virtual objects are combined with consistent illumination. Two major motivations for IBRL are:

• Allows the user to vary illuminance of the whole

(or only interesting portions of the) scene improv-

ing recognition and satisfaction.

• Brings us a step closer to realizing the use of

image-based entities as the basic rendering primi-

tives/entities.

For didactic purposes, we classify image-based relighting techniques into three categories, namely: Reflectance Function-based, Basis Function-based and Plenoptic Function-based. These categories should actually be viewed as a continuum rather than as absolute discrete ones, since there are techniques that defy these strict categorizations.

Reflectance Function-based Relighting tech-

niques explicitly estimate the reflectance function at

each visible point of the object or scene. This is

also known as the Anisotropic Reflection Model (Ka-

jiya, 1985) or the Bidirectional Surface Scattering

Reflectance Distribution Function (BSSRDF) (Jensen

et al., 2001). It is defined as the ratio of the outgoing

to the incoming radiance. Reflectance estimation can be achieved with a calibrated light setup, which provides full control of the incident illumination. A reflectance function is modeled with the data of the scene, captured under varying illumination and view direction. Techniques then apply novel illumination and use the calculated reflectance function to generate novel illumination effects in the scene.

Choudhury B. and Chandran S. (2006).

A SURVEY OF IMAGE-BASED RELIGHTING TECHNIQUES.

In Proceedings of the First International Conference on Computer Graphics Theory and Applications, pages 176-183

DOI: 10.5220/0001359201760183

Copyright © SciTePress

Figure 1: IBRL: The skull is relit with the illumination of different environments (panels a to c). Notice that the lit and dark parts of the skull correctly correspond to the lighting in the scene.

Basis Function-based Relighting techniques take

advantage of the linearity of the rendering operator

with respect to illumination, for a fixed scene. Re-

rendering is accomplished via linear combination of a

set of pre-rendered “basis” images. These techniques,

for the purpose of computing a solution determine a

time-independent basis - a small number of “genera-

tive” global solutions - that suffice to simulate the set

of images under varying illumination and viewpoint.

Plenoptic Function-based Relighting techniques

are based on the computational model, the Plenoptic

Function (Adelson and Bergen, 1991). The original

plenoptic function aggregates all the illumination and

the scene changing factors in a single “time” parame-

ter. So most research concentrates on view interpo-

lation and leaves the time parameter untouched (illu-

mination and scene static). The plenoptic function-

based relighting techniques extract out the illumina-

tion component from the aggregate time parameter,

and facilitate relighting of scenes.

The remainder of the paper is organized as follows:

Section 2, Section 3 and Section 4 discuss each of

the three relighting categories, along with their repre-

sentative methods. In Section 5, we discuss some of

the other relevant issues of relighting. We then pro-

vide some directions of future research in Section 6.

Finally, we provide our concluding remarks in Sec-

tion 7.

2 REFLECTANCE FUNCTION

A reflectance function is the measurement of how materials reflect light, or more specifically, how they transform incident illumination into radiant illumination. The Bidirectional Reflectance Distribution Function (BRDF) (Nicodemus et al., 1977) is a general form of representing surface reflectivity. A better representation is the Bidirectional Surface Scattering Reflectance Distribution Function (BSSRDF) (Jensen et al., 2001), which models effects such as color bleeding, translucency and diffusion of light across shadow boundaries, otherwise impossible with a BRDF model. As introduced by (Debevec et al., 2000), the reflectance function $R$, an 8D function, determines the light transfer between light entering a bounding volume at a direction and position $\psi_{\text{incident}}$ and leaving at $\psi_{\text{exitant}}$:

$$R = R(\psi_{\text{incident}}, \psi_{\text{exitant}})$$

This calculated reflectance function can be used to

compute relit images of the objects, lit with novel il-

lumination. The computation for each relit pixel is

reduced to multiplying corresponding coefficients of

the reflectance function and the incident illumination.

$$L_{\text{exitant}}(\omega) = \int_{\Omega} R \, L_{\text{incident}}(\omega) \, d\omega$$

where, Ω is the space of all light directions over a

hemisphere centered around the object to be illumi-

nated (ω ∈ Ω). For every viewing direction, each

pixel in an image stores its appearance under all il-

lumination directions. Thus each pixel in an image is

a sample of the reflectance function.
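As a minimal sketch of the relighting integral above, the integral over $\Omega$ can be approximated by a finite sum over the sampled light directions. The function names, the list-based data layout and the optional per-direction solid-angle weights are assumptions made for illustration; they are not taken from any of the surveyed systems.

```python
def relight_pixel(reflectance, illumination, solid_angles=None):
    """Approximate L_exitant = integral over Omega of R * L_incident d(omega)
    by a finite sum over the sampled light directions.

    reflectance[i]  : reflectance function sample of this pixel for direction i
    illumination[i] : novel incident radiance arriving from direction i
    solid_angles[i] : quadrature weight of direction i (assumed 1.0 if omitted)
    """
    if len(reflectance) != len(illumination):
        raise ValueError("one reflectance sample is needed per light direction")
    if solid_angles is None:
        solid_angles = [1.0] * len(reflectance)
    return sum(r * l * w for r, l, w in zip(reflectance, illumination, solid_angles))


def relight_image(reflectance_field, illumination, solid_angles=None):
    """Relight every pixel; reflectance_field[y][x] is a list of samples."""
    return [[relight_pixel(pixel, illumination, solid_angles) for pixel in row]
            for row in reflectance_field]
```

In this layout each pixel carries one coefficient per light direction, so relighting a fixed view costs one multiply-add per pixel per direction, independent of scene geometry.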

We classify the estimation of reflectance functions

into three different categories: Forward, Inverse and

Pre-computed radiance transport.

2.1 Forward

The forward methods of estimating reflectance func-

tions sample these functions exhaustively and tabu-

late the results. For each incident illumination, they

store the reflectance function weights for a fixed ob-

served direction. The forward method of estimating

reflectance functions can further be divided into two

categories, on the basis of illumination information

provided, Known and Unknown.

The techniques with known illumination incorpo-

rate the information in their setup. The user is pro-

vided full control over the direction, position and


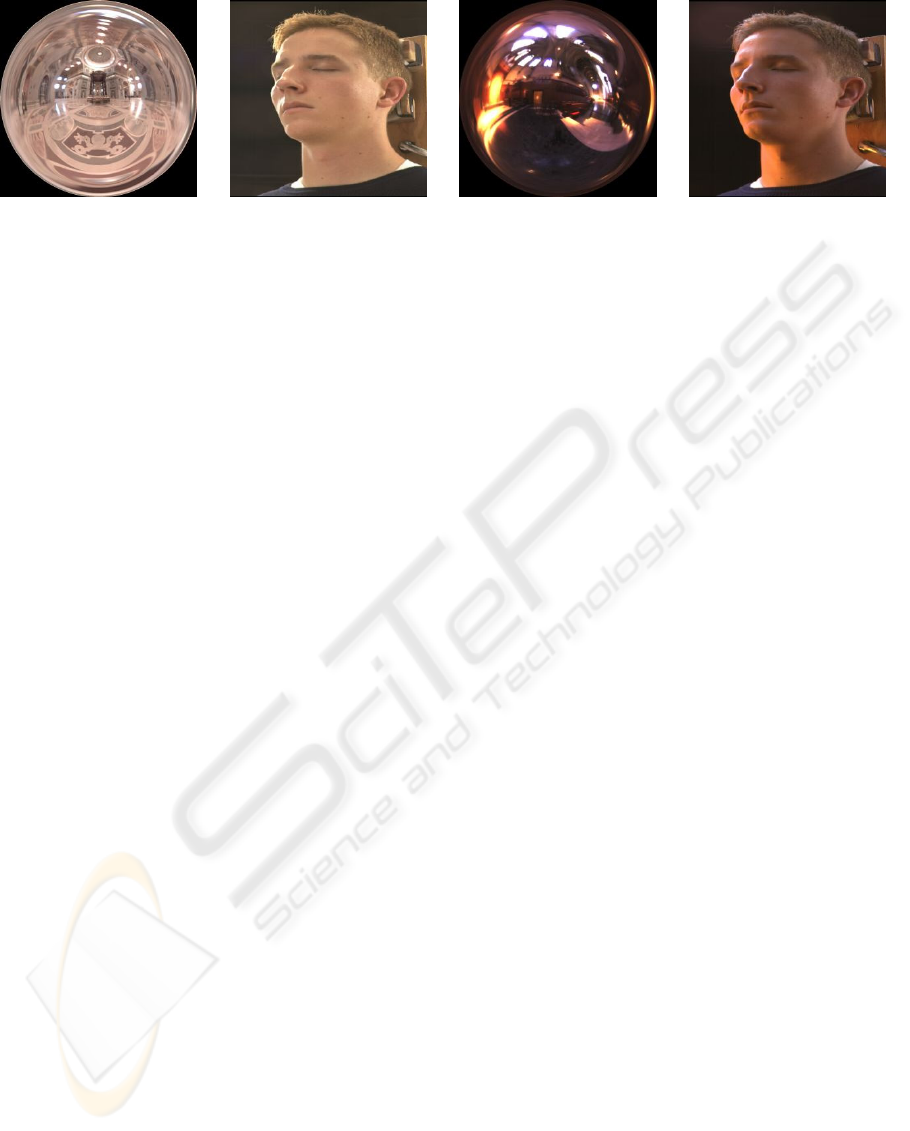

Figure 2: Mirrored ball, representing the illumination of an environment, used for relighting faces (Debevec et al., 2000). (a) Illumination 1. (b) Face relit using Illumination 1. (c) Illumination 2. (d) Face relit using Illumination 2.

type of incident illumination. This information is di-

rectly used for finding the reflectance properties of the

scene. (Debevec et al., 2000) use the highest reso-

lution incident illumination with roughly 2000 direc-

tions and construct a reflectance function for each ob-

served image pixel from its values over the space of il-

lumination directions (Fig. 2). (Masselus et al., 2004)

sample the reflectance functions from real objects

by illuminating the object from a set of directions

while recording the photographs. They reconstruct a

smooth and continuous reflectance function, from the

sampled reflectance functions, using the multilevel B-

spline technique. (Masselus et al., 2003) exploit the

richness in the angular and spatial variation of the

incident illumination, and measure six-dimensional

slices of the eight-dimensional reflectance field, for

a fixed viewpoint. On the other hand, (Malzbender et al., 2001) store the coefficients of a biquadratic polynomial for each texel, thereby improving the compactness of the representation, and use them to reconstruct the surface color under varied illumination conditions.
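The per-texel biquadratic polynomial can be sketched as follows. This is a minimal illustration that assumes six stored coefficients per texel and a light direction projected onto the local texture plane as `(lu, lv)`; the coefficient ordering and the function name are assumptions chosen for this example.

```python
def ptm_luminance(coeffs, lu, lv):
    """Evaluate a biquadratic polynomial in the projected light direction.

    coeffs : six per-texel coefficients (a0..a5), ordering assumed here
    lu, lv : projection of the unit light vector onto the texture plane
    """
    a0, a1, a2, a3, a4, a5 = coeffs
    return (a0 * lu * lu + a1 * lv * lv + a2 * lu * lv
            + a3 * lu + a4 * lv + a5)
```

Storing six numbers per texel replaces the full table of samples over light directions, which is where the compactness of the representation comes from.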

(Wong et al., 1997), (Wong et al., 2001) propose the concept of an apparent BRDF to represent the outgoing radiance distribution passing through the pixel window on the image plane. By treating each image pixel as an ordinary surface element, the radiance distribution of the pixel under various illumination conditions is recorded in a table. (Koudelka et al., 2001) sample the surface’s incident field to reconstruct a non-parametric apparent BRDF at each visible point on the surface. (Boivin and Gagalowicz, 2001) iteratively produce an approximation of the reflectance model of diffuse, specular, isotropic or anisotropic textured objects using a single image and the 3D geometric model of the scene.

Techniques with unknown incident illumination

information estimate it. (Nishino and Nayar, 2004)

use eyes of a human subject and compute a large field

of view of the illumination distribution of the envi-

ronment surrounding a person, using the characteris-

tics of the imaging system formed by the cornea of an

eye and a camera viewing it. Their assumption of a

human subject in the scene, at all times, may not be

practical though. (Lensch et al., 2003) used six steel

spheres to recover the light source positions. They fit

an average BRDF function to the different materials

of the objects in the scene. Some other techniques (Fuchs et al., 2005), (Tchou et al., 2004) indirectly compute the incident illumination information by using a black snooker ball or a non-metallic sphere. A very early work in IBRL, Inverse Rendering (Marschner, 1998), solves for the unknown lighting and reflectance properties of a scene for relighting purposes.

2.2 Inverse

The inverse problem of estimating reflectance functions can be stated as follows: Given an observation, what are the weights and parameters of the basis functions that best explain the observation?

Inverse methods observe an output and compute the

probability that it came from a particular region in the

incident illumination domain. The incident illumi-

nation is typically represented by a bounded region,

such as an environment map, which is modeled as a

sum of basis functions [rectangular (Zongker et al.,

1999) or Gaussian kernels (Chuang et al., 2000)].

They capture an environment matte, which in addition

to capturing the foreground object and its traditional

matte, also describes how the object refracts and re-

flects light. This can then be placed in a new environ-

ment, where it will refract and reflect light from that

scene. Techniques (Matusik et al., 2004), (Peers and

Dutre, 2003) have been proposed which progressively

refine the approximation of the reflectance function

with an increasing number of samples.
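The linear part of this inverse problem can be sketched as a least-squares fit. The sketch assumes the basis functions are fixed in advance, so only their weights are unknown; the cited methods additionally optimize kernel parameters, which is not shown here. The names `basis_responses` and `observations` are hypothetical: row `k` holds the response of each basis function to the `k`-th illumination pattern, and `observations[k]` is the pixel value recorded under that pattern.

```python
def fit_basis_weights(basis_responses, observations):
    """Return weights w minimising sum_k (sum_j w[j] * B[k][j] - y[k]) ** 2."""
    n = len(basis_responses[0])
    # Normal equations (B^T B) w = B^T y, stored as an augmented matrix.
    aug = []
    for i in range(n):
        row = [sum(b[i] * b[j] for b in basis_responses) for j in range(n)]
        row.append(sum(b[i] * y for b, y in zip(basis_responses, observations)))
        aug.append(row)
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("basis responses are linearly dependent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution.
    weights = [0.0] * n
    for i in range(n - 1, -1, -1):
        known = sum(aug[i][j] * weights[j] for j in range(i + 1, n))
        weights[i] = (aug[i][n] - known) / aug[i][i]
    return weights
```

Adding more illumination patterns adds rows to `basis_responses`, which is one simple way to picture how an estimate can be refined as the number of samples grows.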

For a more accurate reflectance estimation, (Matusik et al., 2002) combine a forward method (Debevec et al., 2000) for the low-frequency surface reflectance function and an inverse method, environment matting (Chuang et al., 2000), for the high-frequency surface reflectance function. This is used for capturing all the complex lighting effects, like high-frequency reflections and refractions.

GRAPP 2006 - COMPUTER GRAPHICS THEORY AND APPLICATIONS

Figure 3: Pre-computed radiance transport based IBRL, depicting all-frequency shadows, reflections and highlights along with view variation (Ng et al., 2004). (a) View 1: Relit Scene 1. (b) View 1: Relit Scene 2. (c) View 2: Relit Scene 1. (d) View 2: Relit Scene 2.

2.3 Pre-computed Radiance Transport

A global transport simulator creates functions over

the object’s surface, representing transfer of ar-

bitrary incident lighting, into transferred radiance

which includes global effects like shadows, self-

interreflections, occlusion and scattering effects.

When the actual lighting condition is substituted at

run-time, the resulting model provides global illumi-

nation effects.

The radiance transport is pre-computed using a de-

tailed model of the scene (Sloan et al., 2002). To im-

prove upon the rendering performance, the incident

illumination can be represented using spherical har-

monics (Kautz et al., 2002), (Ramamoorthi and Han-

rahan, 2001), (Sloan et al., 2002) or wavelets (Ng

et al., 2003). The reflectance field, stored per vertex

as a transfer matrix, can be compressed using PCA

(Sloan et al., 2003) or wavelets (Ng et al., 2003).

(Ng et al., 2004) focus on relighting for changing illumination and viewpoint, while including all-frequency shadows, reflections and lighting (Fig. 3). They propose a novel technique based on triple product integrals for factorizing the visibility and the material properties. Recently, (Wang et al., 2005) presented a method of relighting translucent objects under all-frequency lighting. They apply a two-pass hierarchical technique for computing non-linearly approximated transport vectors due to diffuse multiple scattering.
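The idea of representing lighting and transport in a wavelet basis and keeping only the most significant coefficients can be sketched as below. This is a simplified illustration under stated assumptions: a 1D signal stands in for the environment map, an orthonormal Haar transform is used, and all function names are hypothetical. Because the transform is orthonormal, the dot product of transport and lighting is the same in either domain, so truncating small lighting coefficients only perturbs the result.

```python
import math


def haar_transform(signal):
    """Orthonormal 1D Haar transform; the length must be a power of two."""
    coeffs = list(signal)
    n = len(coeffs)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    root2 = math.sqrt(2.0)
    while n > 1:
        half = n // 2
        averages = [(coeffs[2 * i] + coeffs[2 * i + 1]) / root2 for i in range(half)]
        details = [(coeffs[2 * i] - coeffs[2 * i + 1]) / root2 for i in range(half)]
        coeffs[:n] = averages + details
        n = half
    return coeffs


def keep_largest(coeffs, k):
    """Non-linear approximation: zero all but the k largest-magnitude terms."""
    order = sorted(range(len(coeffs)), key=lambda i: abs(coeffs[i]), reverse=True)
    keep = set(order[:k])
    return [c if i in keep else 0.0 for i, c in enumerate(coeffs)]


def relit_radiance(transfer_coeffs, lighting_coeffs):
    """Exit radiance at one surface point as a dot product of coefficients."""
    return sum(t * l for t, l in zip(transfer_coeffs, lighting_coeffs))
```

A typical use would transform the pre-computed transport vector once per surface point, transform and truncate the lighting once per frame with `keep_largest`, and then evaluate `relit_radiance` over the surviving coefficients only.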

3 BASIS FUNCTION

Basis Function-based techniques decompose the luminous intensity distributions into a series of basis functions, and illuminances are obtained by simply summing the luminance from each light source whose luminous intensity distribution corresponds to one of the basis functions.

Assuming multiple light sources, luminance at a cer-

tain point is obtained by calculating the luminance

from each light source and summing them. In gen-

eral, luminance calculation obeys the two following

rules of superposition:

1. The image resulting from an additive combination

of two illuminants is just the sum of the images

resulting from each of the illuminations indepen-

dently.

2. Multiplying the intensity of the illumination

sources by a factor of α results in a rendered im-

age that is multiplied by the same factor α.

These techniques calculate luminance in the case of alterations in the luminous intensity distributions and the direction of light sources. The luminous intensity distribution of a point light source is expressed as the sum of a series of basis distributions. The luminance due to a light source whose luminous intensity distribution corresponds to one of the basis distributions is calculated in advance and stored as a basis luminance. Using the aforementioned property 1, the luminance due to a light source whose luminous intensity distribution is a sum of basis distributions is calculated by summing the pre-calculated basis luminances corresponding to each individual basis distribution. Using property 2, the luminance due to a light source whose luminous intensity distribution can be expressed as a weighted sum of the basis distributions is obtained by multiplying each basis luminance by its corresponding weight and summing the results. Thus, once the basis luminances are calculated in the pre-process, the resulting luminance can be obtained quickly by calculating the weighted sum of the basis luminances. Some desirable properties of a basis set of illumination functions are:

1. The basis functions should be general enough to form any light source one desires.

2. The number of basis functions should be small,

since this corresponds to the number of basis im-

ages we must actually store and render.
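The two superposition rules stated earlier in this section amount to a weighted sum of pre-rendered basis images. A minimal sketch follows, assuming each basis image is a nested list of luminance values and that the weights of the target light source in the chosen basis are already known; the function name and data layout are illustrative only.

```python
def relight_from_basis(basis_images, weights):
    """Relit image = sum over j of weights[j] * basis_images[j], per pixel."""
    if len(basis_images) != len(weights):
        raise ValueError("one weight is needed per basis image")
    height = len(basis_images[0])
    width = len(basis_images[0][0])
    relit = [[0.0] * width for _ in range(height)]
    for image, weight in zip(basis_images, weights):
        for y in range(height):
            for x in range(width):
                relit[y][x] += weight * image[y][x]
    return relit
```

The cost is proportional to the number of basis images times the number of pixels, which is why a small basis set is listed as a desirable property.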


We classify the type of basis functions (used in Re-

lighting) into five categories and provide their corre-

sponding representative methods:

1. Steerable Functions (Nimeroff et al., 1994).

2. Spherical Harmonics Function (Dobashi et al.,

1995).

3. Singular Value Decomposition (Principal Compo-

nent Analysis) (Georghiades et al., 2001), (Osad-

chy and Keren, 2001), (Hawkins et al., 2004).

4. N-mode SVD: Multilinear Algebra of higher-order

Tensors (Vasilescu and Terzopoulos, 2004), (Fu-

rukawa et al., 2002), (Suykens et al., 2003), (Tong

et al., 2002).

5. Sampling Illumination Space (Masselus et al.,

2002), (Wenger et al., 2005), (Georghiades, 2003).

4 PLENOPTIC FUNCTION

The appearance of the world can be thought of

as the dense array of light rays filling the space,

which can be observed by posing eyes or cameras in

space. These light rays can be represented through the

plenoptic function (from plenus, complete or full; and

optics) (Adelson and Bergen, 1991). The plenoptic

function is a 7D function that models a 3D dynamic

environment by recording the light rays at every space location $(V_x, V_y, V_z)$, towards every possible direction $(\theta, \phi)$, over any range of wavelengths ($\lambda$) and at any time ($t$), i.e.,

$$P = P^{(7)}(V_x, V_y, V_z, \theta, \phi, \lambda, t)$$

An image of a scene with a pinhole camera records

the light rays passing through the camera’s center-of-

projection. They can also be considered as samples

of the plenoptic function. Basically, the function tells us how the environment looks when our eye is positioned at $V = (V_x, V_y, V_z)$. The time parameter $t$ actually models all the other unmentioned factors such as the change of illumination and the scene.

Plenoptic Function-based relighting techniques

propose new formulations of the plenoptic function,

which explicitly specify the illumination component.

Using these formulations, one can generate complex

lighting effects. One can simulate various lighting

configurations such as multiple light sources, light

sources with different colors and also arbitrary types

of light sources (Section 5.1).

4.1 Representative Techniques

(Wong and Heng, 2004) discuss a new formulation of

the plenoptic function, Plenoptic Illumination Func-

tion, which explicitly specifies the illumination com-

ponent. They propose a local illumination model,

which utilizes the rules of superposition for relight-

ing under various lighting configurations. (Lin et al.,

2002) on the other hand, propose a representation

of the plenoptic function, the reflected irradiance

field. The reflected irradiance field stores the reflec-

tion of surface irradiance as an illuminating point light

source moves on a plane. With the reflected irradiance

field, the relit object/scene can be synthesized simply

by interpolating and superimposing appropriate sam-

ple reflections.

5 DISCUSSION

In this section, we discuss some of the relevant issues

involving IBRL.

5.1 Light Source Type

Illumination is a complex and high-dimensional function in computer graphics. To reduce its dimensionality and to analyze the complexity and practicality of relighting, it is necessary to assume a specific type of light source. The two most commonly used types of light sources are:

1. Directional Light Source (DLS): A DLS emits

parallel rays which do not diverge or become dimmer with distance. It is parametrized using only two variables $(\theta, \phi)$, which denote the direction of the light vector. For planar surfaces lighted by a

DLS, the degree of shading will be the same right

across the surface. The computations required for

directional lights are therefore considerably less.

Using a DLS is also more meaningful, because the

captured pixel value in an image tells us what the

surface elements behind the pixel window actually

look like, when all surface elements are illuminated

by parallel rays in the direction of the viewing

point. A DLS serves well for synthetic objects/scenes, where it is used to approximate the light coming from an extremely distant light source. But it poses practical difficulties when capturing real and large objects/scenes. In practice, a DLS can be approximated with a strong spotlight at a distance that greatly exceeds the size of the object/scene.

2. Point Light Source (PLS): A PLS shines uni-

formly in all directions. Its intensity decreases with

the distance to the light source. A PLS is parametrized using three variables $(P_x, P_y, P_z)$, which

denote the 3D position of the PLS in space. As

a result, the angle between the light source and

the normals of the various affected surfaces can

change dramatically from one surface to the next.

In the presence of multiple light sources, this means

that for every vertex, one has to determine the


direction of the light vector corresponding to a

light source. This requires determination of the

depth map of the images using computer vision algorithms, which, though they provide good approximations, make the lighting calculations computationally intensive. Point light sources are usually close to the observer and so are more practical for real and large objects/scenes.
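The practical difference between the two light source types can be sketched as follows. The sketch makes several assumptions that are not stated in the text: Lambertian (cosine) shading, inverse-square falloff for the point light, and a spherical convention in which $\theta$ is the polar angle from the $+z$ axis and $\phi$ the azimuth. All function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def directional_light_vector(theta, phi):
    """Unit vector toward a DLS; it is the same for every surface point."""
    return (math.sin(theta) * math.cos(phi),
            math.sin(theta) * math.sin(phi),
            math.cos(theta))


def shade_directional(normal, theta, phi, intensity=1.0):
    """Assumed Lambertian shading; needs no surface position or depth."""
    light = directional_light_vector(theta, phi)
    return intensity * max(0.0, dot(normal, light))


def shade_point(normal, surface_pos, light_pos, intensity=1.0):
    """Assumed Lambertian shading with inverse-square falloff; the light
    vector must be recomputed from the 3D position of each surface point."""
    offset = [l - s for l, s in zip(light_pos, surface_pos)]
    dist = math.sqrt(dot(offset, offset))
    if dist == 0.0:
        raise ValueError("light source coincides with the surface point")
    light = [c / dist for c in offset]
    return intensity * max(0.0, dot(normal, light)) / (dist * dist)
```

Note that `shade_point` takes a surface position while `shade_directional` does not, which reflects why a point light source requires depth information for the scene.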

5.2 Sampling

Sampling is one of the key issues of image-based

graphics. It is a non-trivial problem because it in-

volves the complex relationship among three ele-

ments: the depth and texture of the scene, the number

of sample images, and the rendering solution. One

needs to determine the minimum sampling rate for

anti-aliased image-based rendering. Comparatively,

very little research (Chai et al., 2000), (Shum and

Kang, 2000), (Zhang and Chen, 2004), (Zhang and

Chen, 2001), (Zhang and Chen, 2003) has gone into

trying to tackle this problem.

In the context of IBRL, sampling deals with the il-

lumination component for efficient and realistic re-

lighting (Wong and Heng, 2004). (Lin et al., 2002)

prove that there exists a geometry-independent bound

of the sampling interval, which is analytically bound

to the BRDF of the scene. It ensures that the intensity

error in the relit image is smaller than a user-specified

tolerance, thus eliminating noticeable artifacts.

6 FUTURE DIRECTIONS

A lot of research remains to be done in IBRL. Some

ideas are:

1. Efficient Representation: BRDF-based IBRL techniques require a huge number of samples to accurately estimate a reflectance function. Most techniques, for practical purposes, consider only low-frequency components, which compromises the visual quality of the rendered image. Almost all Basis function-based techniques also require a number of basis images for relighting. Thus, a lot of research is required to find accurate and efficient representations of a scene, which capture all the complex phenomena of lighting and reflectance functions. A related area which deserves considerable investigation is IBRL for real and large environments.

2. Sampling: Most techniques do not deal with the minimum sampling density required for anti-aliased IBRL. (Lin et al., 2002) discuss a geometry-independent sampling density based on radiometric tolerance. Though this serves the purpose of efficient sampling of certain scenes, what we need is a photometric tolerance, which takes into account the response function of human vision. (Dumont et al., 2005) discuss the importance of a psychophysical quality scale for realistic IBRL of glossy surfaces.

3. Compression: No matter how much storage and memory increase in the future, compression is always useful to keep the IBRL data at a manageable size. A high compression ratio in IBRL relies heavily on how well the images can be predicted. The sampled images for IBRL usually have strong inter-pixel and intra-pixel correlation, which needs to be harnessed for efficient compression. Currently, techniques such as spherical harmonics, vector quantization, the discrete cosine transform and spherical wavelets are used for compressing the datasets of IBRL, but all of these have their own inherent disadvantages.

4. Dynamics: Most IBRL techniques deal with sta-

tic environments, in terms of change in geome-

try of the scene/object. With the development of

high-end graphics processors, it is conceivable that

IBRL can be applied to dynamic environments.

7 FINAL REMARKS

We have surveyed the field of Image-based Relight-

ing. In particular, we observe that IBRL techniques

can be classified into three categories based on how

they capture the scene/illumination information: Re-

flectance Function-based, Basis Function-based and

Plenoptic Function-based. We have presented each

of the categories along with their corresponding rep-

resentative methods. Relevant issues of IBRL like

type of light source and sampling have also been dis-

cussed.

It is interesting to note the trade-off between geom-

etry and images, needed for anti-aliased image-based

rendering. Efficient representation, realistic render-

ing, limitations of computer vision algorithms and

computational costs should motivate researchers to invent efficient Image-based Relighting techniques in the future.

REFERENCES

Adelson, E. H. and Bergen, J. R. (1991). The plenoptic

function and the elements of early vision. Computa-

tional Models of Visual Processing, pages 3–20.

Boivin, S. and Gagalowicz, A. (2001). Image-based ren-

dering of diffuse, specular and glossy surfaces from a

single image. In SIGGRAPH ’01: Proceedings of the

28th annual conference on Computer graphics and in-


teractive techniques, pages 107–116, New York, NY,

USA. ACM Press.

Chai, J.-X., Chan, S.-C., Shum, H.-Y., and Tong, X. (2000).

Plenoptic sampling. In SIGGRAPH ’00: Proceedings

of the 27th annual conference on Computer graph-

ics and interactive techniques, pages 307–318, New

York, NY, USA. ACM Press/Addison-Wesley Pub-

lishing Co.

Chuang, Y.-Y., Zongker, D. E., Hindorff, J., Curless, B.,

Salesin, D. H., and Szeliski, R. (2000). Environment

matting extensions: towards higher accuracy and real-

time capture. In SIGGRAPH ’00: Proceedings of the

27th annual conference on Computer graphics and in-

teractive techniques, pages 121–130, New York, NY,

USA. ACM Press/Addison-Wesley Publishing Co.

Debevec, P., Hawkins, T., Tchou, C., Duiker, H.-P., Sarokin,

W., and Sagar, M. (2000). Acquiring the reflectance

field of a human face. In SIGGRAPH ’00: Pro-

ceedings of the 27th annual conference on Computer

graphics and interactive techniques, pages 145–156,

New York, NY, USA. ACM Press/Addison-Wesley

Publishing Co.

Dobashi, Y., Kaneda, K., Nakatani, H., and Yamashita, H.

(1995). A quick rendering method using basis func-

tions for interactive lighting design. Computer Graph-

ics Forum, 14(3):229–240.

Dumont, O., Masselus, V., Zaenen, P., Wagemans, J., and

Dutre, P. (2005). A perceptual quality scale for image-

based relighting of glossy surfaces. In Tech. Rep.

CW417, Katholieke Universiteit Leuven, June 2005.

Fuchs, M., Blanz, V., and Seidel, H.-P. (2005). Bayesian

relighting. In Rendering Techniques, pages 157–164.

Furukawa, R., Kawasaki, H., Ikeuchi, K., and Sakauchi,

M. (2002). Appearance based object modeling using

texture database: acquisition, compression and ren-

dering. In EGRW ’02: Proceedings of the 13th Eu-

rographics workshop on Rendering, pages 257–266,

Aire-la-Ville, Switzerland, Switzerland. Eurographics

Association.

Georghiades, A. S. (2003). Recovering 3-d shape and re-

flectance from a small number of photographs. In

EGRW ’03: Proceedings of the 14th Eurograph-

ics workshop on Rendering, pages 230–240, Aire-la-

Ville, Switzerland, Switzerland. Eurographics Associ-

ation.

Georghiades, A. S., Belhumeur, P. N., and Kriegman, D. J.

(2001). From few to many: Illumination cone models

for face recognition under variable lighting and pose.

IEEE Trans. Pattern Anal. Mach. Intell., 23(6):643–

660.

Hawkins, T., Wenger, A., Tchou, C., Gardner, A.,

Göransson, F., and Debevec, P. E. (2004). Animatable

facial reflectance fields. In Rendering Techniques,

pages 309–321.

Jensen, H. W., Marschner, S. R., Levoy, M., and Hanra-

han, P. (2001). A practical model for subsurface light

transport. In SIGGRAPH ’01: Proceedings of the 28th

annual conference on Computer graphics and interac-

tive techniques, pages 511–518, New York, NY, USA.

ACM Press.

Kajiya, J. T. (1985). Anisotropic reflection models. In

SIGGRAPH ’85: Proceedings of the 12th annual con-

ference on Computer graphics and interactive tech-

niques, pages 15–21, New York, NY, USA. ACM

Press.

Kautz, J., Sloan, P.-P., and Snyder, J. (2002). Fast, arbi-

trary brdf shading for low-frequency lighting using

spherical harmonics. In EGRW ’02: Proceedings of

the 13th Eurographics workshop on Rendering, pages

291–296, Aire-la-Ville, Switzerland, Switzerland. Eu-

rographics Association.

Koudelka, M. L., Belhumeur, P. N., Magda, S., and Krieg-

man, D. J. (2001). Image-based modeling and ren-

dering of surfaces with arbitrary brdfs. In CVPR (1),

pages 568–575.

Lensch, H. P. A., Kautz, J., Goesele, M., Heidrich, W., and

Seidel, H.-P. (2003). Image-based reconstruction of

spatial appearance and geometric detail. ACM Trans.

Graph., 22(2):234–257.

Lin, Z., Wong, T.-T., and Shum, H.-Y. (2002). Relight-

ing with the reflected irradiance field: Representation,

sampling and reconstruction. Int. J. Comput. Vision,

49(2-3):229–246.

Malzbender, T., Gelb, D., and Wolters, H. (2001). Polyno-

mial texture maps. In SIGGRAPH ’01: Proceedings

of the 28th annual conference on Computer graph-

ics and interactive techniques, pages 519–528, New

York, NY, USA. ACM Press.

Marschner, S. R. (1998). Inverse rendering for computer

graphics. PhD thesis, Cornell University. Adviser-

Donald P. Greenberg.

Masselus, V., Dutre, P., and Anrys, F. (2002). The free-form light stage. In EGRW ’02: Proceedings of

the 13th Eurographics workshop on Rendering, pages

247–256, Aire-la-Ville, Switzerland, Switzerland. Eu-

rographics Association.

Masselus, V., Peers, P., Dutre, P., and Willems, Y. D.

(2003). Relighting with 4d incident light fields. ACM

Trans. Graph., 22(3):613–620.

Masselus, V., Peers, P., Dutre, P., and Willems, Y. D. (2004).

Smooth reconstruction and compact representation of

reflectance functions for image-based relighting. In

Rendering Techniques, pages 287–298.

Matusik, W., Loper, M., and Pfister, H. (2004).

Progressively-refined reflectance functions from nat-

ural illumination. In Rendering Techniques, pages

299–308.

Matusik, W., Pfister, H., Ziegler, R., Ngan, A., and McMil-

lan, L. (2002). Acquisition and rendering of transpar-

ent and refractive objects. In EGRW ’02: Proceedings

of the 13th Eurographics workshop on Rendering,

pages 267–278, Aire-la-Ville, Switzerland, Switzer-

land. Eurographics Association.


Ng, R., Ramamoorthi, R., and Hanrahan, P. (2003). All-

frequency shadows using non-linear wavelet lighting

approximation. ACM Trans. Graph., 22(3):376–381.

Ng, R., Ramamoorthi, R., and Hanrahan, P. (2004). Triple

product wavelet integrals for all-frequency relighting.

ACM Trans. Graph., 23(3):477–487.

Nicodemus, F., Richmond, J., Hsia, J. J., Ginsberg, I. W.,

and Limperis, T. (1977). Geometric considerations

and nomenclature for reflectance. In NBS Monograph

160, National Bureau of Standards (US).

Nimeroff, J. S., Simoncelli, E., and Dorsey, J. (1994). Effi-

cient Re-rendering of Naturally Illuminated Environ-

ments. In Fifth Eurographics Workshop on Render-

ing, pages 359–373, Darmstadt, Germany. Springer-

Verlag.

Nishino, K. and Nayar, S. K. (2004). Eyes for relighting.

ACM Trans. Graph., 23(3):704–711.

Osadchy, M. and Keren, D. (2001). Image detection under

varying illumination and pose. In ICCV, pages 668–

673.

Peers, P. and Dutré, P. (2003). Wavelet environment matting.

In EGRW ’03: Proceedings of the 14th

Eurographics workshop on Rendering, pages 157–166,

Aire-la-Ville, Switzerland. Eurographics

Association.

Ramamoorthi, R. and Hanrahan, P. (2001). An efficient

representation for irradiance environment maps. In

SIGGRAPH ’01: Proceedings of the 28th annual con-

ference on Computer graphics and interactive tech-

niques, pages 497–500, New York, NY, USA. ACM

Press.

Shum, H.-Y. and Kang, S. B. (2000). A review of

image-based rendering techniques. In IEEE/SPIE Vi-

sual Communications and Image Processing (VCIP),

pages 2–13.

Sloan, P.-P., Hall, J., Hart, J., and Snyder, J. (2003). Clus-

tered principal components for precomputed radiance

transfer. ACM Trans. Graph., 22(3):382–391.

Sloan, P.-P., Kautz, J., and Snyder, J. (2002). Precomputed

radiance transfer for real-time rendering in dynamic,

low-frequency lighting environments. In SIGGRAPH

’02: Proceedings of the 29th annual conference on

Computer graphics and interactive techniques, pages

527–536, New York, NY, USA. ACM Press.

Suykens, F., vom Berge, K., Lagae, A., and Dutré, P. (2003).

Interactive rendering with bidirectional texture functions.

Computer Graphics Forum, 22(3).

Tchou, C., Stumpfel, J., Einarsson, P., Fajardo, M., and

Debevec, P. (2004). Unlighting the Parthenon.

SIGGRAPH 2004 Sketch.

Tong, X., Zhang, J., Liu, L., Wang, X., Guo, B., and Shum,

H.-Y. (2002). Synthesis of bidirectional texture func-

tions on arbitrary surfaces. In SIGGRAPH ’02: Pro-

ceedings of the 29th annual conference on Computer

graphics and interactive techniques, pages 665–672,

New York, NY, USA. ACM Press.

Vasilescu, M. A. O. and Terzopoulos, D. (2004). Tensortex-

tures: multilinear image-based rendering. ACM Trans.

Graph., 23(3):336–342.

Wang, R., Tran, J., and Luebke, D. (2005). All-frequency

interactive relighting of translucent objects with sin-

gle and multiple scattering. ACM Trans. Graph.,

24(3):1202–1207.

Wenger, A., Gardner, A., Tchou, C., Unger, J., Hawkins,

T., and Debevec, P. (2005). Performance relighting

and reflectance transformation with time-multiplexed

illumination. ACM Trans. Graph., 24(3):756–764.

Wong, T.-T. and Heng, P.-A. (2004). Image-based relight-

ing: representation and compression. Integrated im-

age and graphics technologies, pages 161–180.

Wong, T.-T., Heng, P.-A., and Fu, C.-W. (2001). Interactive

relighting of panoramas. IEEE Comput. Graph. Appl.,

21(2):32–41.

Wong, T.-T., Heng, P.-A., Or, S.-H., and Ng, W.-Y. (1997).

Image-based rendering with controllable illumination.

In Proceedings of the Eurographics Workshop on Ren-

dering Techniques ’97, pages 13–22, London, UK.

Springer-Verlag.

Zhang, C. and Chen, T. (2001). Generalized plenoptic sampling.

Technical Report AMP01-06, Carnegie Mellon

University.

Zhang, C. and Chen, T. (2003). Spectral analysis for sampling

image-based rendering data. IEEE Transactions

on Circuits and Systems for Video Technology,

13(11):1038–1050.

Zhang, C. and Chen, T. (2004). A survey on image-based

rendering: Representation, sampling and compression.

Signal Processing: Image Communication, 19(1):1–28.

Zongker, D. E., Werner, D. M., Curless, B., and Salesin,

D. H. (1999). Environment matting and composit-

ing. In SIGGRAPH ’99: Proceedings of the 26th an-

nual conference on Computer graphics and interac-

tive techniques, pages 205–214, New York, NY, USA.

ACM Press/Addison-Wesley Publishing Co.
