VISIBILITY BASED DETECTION AND REMOVAL OF

SEMI-TRANSPARENT BLOTCHES ON ARCHIVED DOCUMENTS

Vittoria Bruni, Andrew Crawford, Domenico Vitulano

Istituto per le Applicazioni del Calcolo "M. Picone" - C.N.R.

Viale del Policlinico 137, 00161 Rome, Italy

Filippo Stanco

DEEI - Università di Trieste

Via A. Valerio 10, Trieste, Italy

Keywords:

Image restoration, ancient documents, visibility laws, semi-transparent blotches.

Abstract:

This paper focuses on a novel model for digital suppression of semi-transparent blotches caused by the contact between water and the paper of antique documents. The proposed model is based on laws regulating the human visual system and provides a fast and automatic algorithm for both detection and restoration. Experimental results show the great potential of the proposed model, also in critical situations.

1 INTRODUCTION

Digital restoration provides a new way of preserving

and recovering archived documents with non-invasive

techniques. In fact, it allows us to give the document

its original appearance and to make it more readable

and visually pleasing. Digitized documents can be

affected by different kinds of damage, which can be

classified in two main classes:

• physical and chemical degradation, such as cracks,

scratches, holes, added stamps, smeary spots (wa-

ter or grease), foxing, warping, bleed-through and

so on — see for instance (Tonazzini et al., 2004;

Stanco et al., 2003; Brown and Seales, 2004) and

references therein;

• damages which are caused during the conversion of

the document in its digital format, such as scanning

defects, show-through, uneven background and so

on — see for instance (Shi and Govindaraju, 2004;

Sharma, 2001).

A lot of research work has been done to solve one or more of the aforementioned problems. In fact, each defect can be processed using a specific and oriented strategy or employing integrated environments that are able to select the best restoration technique for it (Cannon et al., 2003). Moreover, a really useful approach needs a low computational effort and independence from the user.

In this paper we deal with semi-transparent

blotches. They are common defects on ancient doc-

uments and are caused by the contact between paper

and water. They usually appear as irregular regions that are darker than the original paper. The detection phase is very delicate: the geometric shape of the degradation is not a reliable cue, since it changes from one blotch to another even within the same image, and the same holds for both color and degree of transparency. On the other hand, their removal is critical whenever they involve characters. We assume

that the image we wish to restore is composed of char-

acters which are darker than their background. In this

case the darkness of the background can approach the

one of the text, making the discrimination between

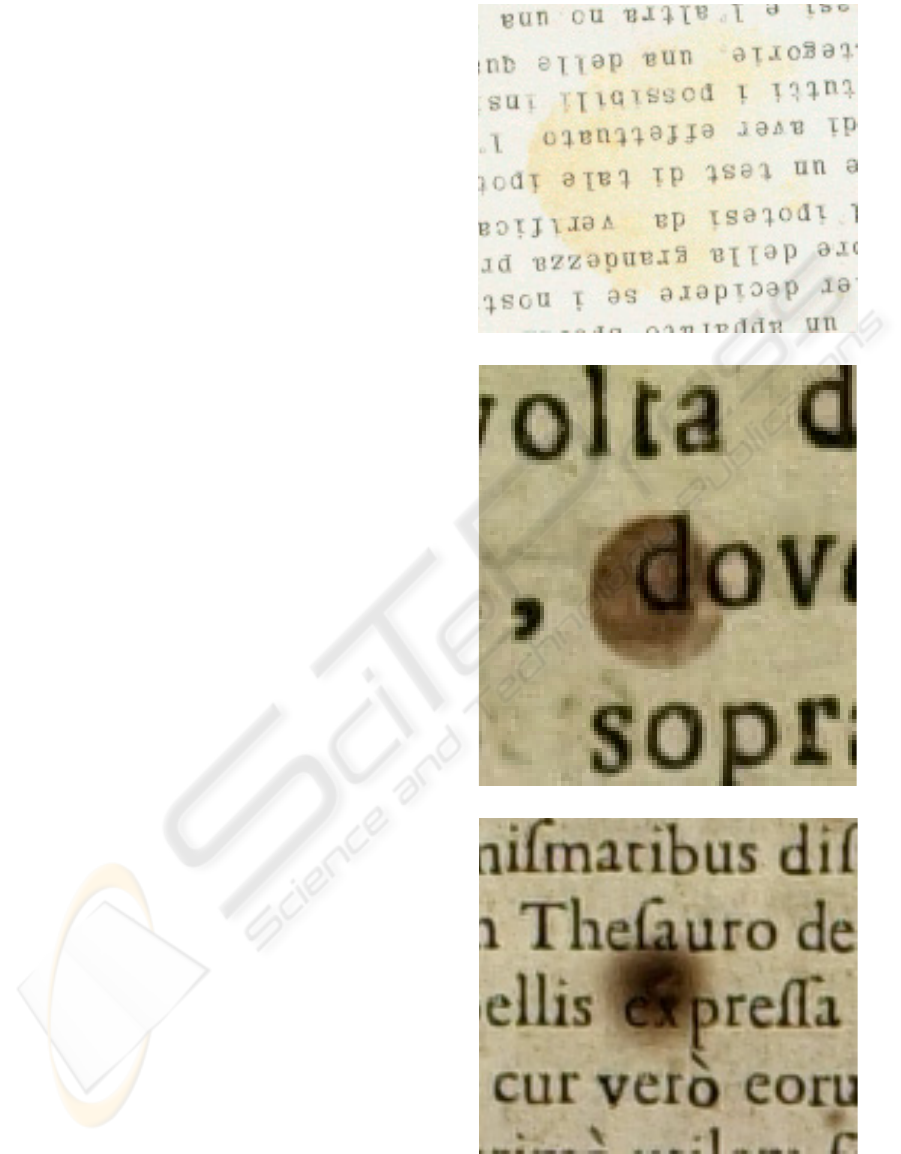

the two objects somewhat difficult. Fig. 1 shows three

successive steps in the evolution process of humidity

damage. In the first case, water has been in contact

with the paper for a limited time and the damage is

only slight. In the second case, the time of contact has

been longer and the resulting damage is more evident.

Finally, in the third case, after long term humidity, the effects are drastic, causing significant damage to the character information. Hence, the goal of a recovery algorithm is to enhance the text while, at the same time, restoring the background without altering its artistic features such as color, textures and any drawings and pictures.

With regard to text segmentation, a lot of work has

been done, since it corresponds to a sort of text bi-

narization (Valverde and Grigat, 2000; Oguro et al.,

1997) which is a common problem in faxed or table

form document — not necessarily ancient. It is often

solved by using global (Solihin and Leedham, 1999)

or adaptive thresholds (Hwang and Chang, 1998;


Bruni V., Crawford A., Vitulano D. and Stanco F. (2006).

VISIBILITY BASED DETECTION AND REMOVAL OF SEMI-TRANSPARENT BLOTCHES ON ARCHIVED DOCUMENTS.

In Proceedings of the First International Conference on Computer Vision Theory and Applications, pages 64-71

DOI: 10.5220/0001371700640071

Copyright © SciTePress

Yang and Yan, 2000). The threshold requires some

assumptions on the characters, the background or

the defect like width, frequency content and uniform

color — an interesting comparison between thresh-

olding based approaches is in (Leedham et al., 2002).

On the other hand, linear and non linear methods

are also proposed. They use some prior probability

models for establishing the nature of a pixel (fore-

ground or background) (Zheng and Kanungo, 2001;

Huggett and Fitzgerald, 1995) or multispectral acqui-

sitions for better distinguishing between background

and text, whenever their intensities assume very close

values (Tonazzini et al., 2004). Nonetheless, they

are constrained by the width of the characters, as

in (Huggett and Fitzgerald, 1995) or they assume

the mixture problem linear, see for instance (Tonazz-

ini et al., 2004). Mathematical morphology is also

widely used for a further step of text restoration.

Moreover, binarization and text enhancement techniques do not include the restoration of the background, which is assumed uniform. Some recent works try to locally model the background with planes (Shi and Govindaraju, 2004) or use multispectral acquisition (Easton et al., 2004), but they are computationally expensive. An attempt to automate the process using very simple operations is in (Ramponi et al., 2005). In this case, the text is distinguished from the rest by means of the Otsu threshold, while the degradation is detected by means of its color. A rational filter (RF) and a merging operation are then used in the restoration step. Nonetheless, this approach is almost insensitive to slight changes of the intensity values and is therefore not successful for highly degraded text.

In this paper, the proposed model simulates human perception for detecting blotches, while it uses visibility laws (Foley, 1994; Hontsch and Karam, 2000) for removing them. It represents a first attempt to introduce the laws regulating the human visual system (HVS) in the restoration of text documents and shows great potential also in critical cases.

In fact, visibility laws enable the enhancement of the text and, at the same time, allow us to recover the structure of the background, i.e. irregularities, textures and color, thanks to the simultaneous measure of local and global features of the image. These properties make the algorithm completely automatic, since visibility parameters are independently estimated on the image under study. In that way the model is completely independent of thresholds to be tuned, unlike binarization strategies, and it is able to automatically adapt to images with different size and resolution.

In the detection step, the same process employed by the human visual system at a low level of attention (Gonzalez and Woods, 2002; Hontsch and Karam, 2000) is performed. It consists of a low pass filtering of the image, oriented to extract the regions of the image containing semi-transparent blotches. The restoration, instead, exploits the transparency of the defect by reducing its intensity below the threshold of human visual perception. The attenuation is balanced by local and global measures of perception for each degraded pixel. The philosophy adopted in this paper is similar to that of the de-scratching of archived films in (Bruni and Vitulano, 2004; Bruni et al., 2004), since semi-transparent blotches also cover the original image information without completely removing it.

Figure 1: (a) Slight semi-transparent blotch; (b) dark semi-transparent blotch where the text is visible; (c) dark semi-transparent blotch where not all the text is visible.

The outline of the paper is the following. Sec-

tion 2 presents a novel model for both detection and

restoration of semi-transparent blotches and briefly

explains the human perception laws that guide both

phases. Some experimental results and discussions

are respectively contained in Sections 3 and 4.

2 THE PROPOSED MODEL

As mentioned in the Introduction, the detection of

semi-transparent blotches is not a trivial problem

since the degradation cannot be formally modelled. In

other words, we don’t know exactly what we are look-

ing for. In fact, the shape of the degradation changes

from one image to another and in the same paper we

can find completely different blotches. This fact de-

pends on the porosity of the paper, the degree of hu-

midity and also the time of the contact between the

water and the paper. Therefore, it is difficult to auto-

matically separate the blotch from the remaining part

of the document, even using multistage thresholding

strategy (Shi and Govindaraju, 2004). Nonetheless, the Human Visual System (HVS) is able to detect blotches at first glance and to distinguish them from the remaining components of the scene (background and text in this case). In the following subsection,

we will show how it is possible to select degraded

regions by modelling HVS using a guided low pass

filtering operation. A binary mask is the output of

the detection phase. The mask is a lookup table for

the regions of the paper which are affected by the

degradation. These regions do not contain only blotch

but also non uniform background and text. Moreover,

since the blotch is semitransparent, these components

are not completely damaged (see Fig. 1). Therefore,

the restoration consists of separating the blotch from

the text and attenuating blotch intensity, accounting

for some visibility constraints, i.e. contrast sensitivity

and contrast masking (Hontsch and Karam, 2000; Fo-

ley, 1994). Contrast sensitivity measures the visibility

of each component of the degraded area with respect

to a uniform background. Contrast masking is the vis-

ibility of an object (target) with respect to another one

(masker) (page 28 of (Winkler, 2005)). In our case

the target is the pixel to be restored while the masker

is composed of the neighbouring pixels. The aim of the restoration phase is to reduce the intensity of the blotch until it is no longer visible, without creating artifacts in the image, as detailed in Section 2.2. In fact, blotches hide the underlying original information without completely destroying it, as shown in Fig. 1.

The RGB components of the image to be restored are converted to the YCbCr space (Gonzalez and Woods, 2002). In fact, it is proven that the human eye is more sensitive to changes in the luminance component ($Y$) than in the chrominance ones ($C_b$, $C_r$) (Mojsilovic et al., 1999). Hence, the detection algorithm will act directly on the $Y$ (luminance) component, while restoration is performed on all three components. Both phases will be analysed in depth in the following subsections.
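The colour conversion can be sketched as follows. The paper does not say which YCbCr variant is used, so the full-range BT.601 (JFIF) weights below are an assumption, and the function names `rgb_to_ycbcr` and `luminance_plane` are purely illustrative.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to full-range YCbCr.

    Assumption: BT.601 / JFIF weights; the paper does not fix the variant.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def luminance_plane(rgb_image):
    """Return the Y plane of an image given as rows of (R, G, B) tuples."""
    return [[rgb_to_ycbcr(*pixel)[0] for pixel in row] for row in rgb_image]
```

Detection would then operate on the output of `luminance_plane` only, while restoration would need all three channels.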

2.1 Detection

HVS detects blotches at a low level of attention, i.e. during the coarsest step of the analysis of the scene under study. This situation can be simulated by performing a low pass filtering of the image. In fact, blurring gives an image with regions of homogeneous colour, where the blotch becomes darker with respect to the background while the text disappears, since it is mainly characterized by high frequencies (see Fig. 2a). More precisely, the luminance component $Y_0$ of the degraded image is embedded in a family of images $Y_r$, which are defined as follows:

$$Y_r = Y_0 * H_r,$$

where $r$ indicates the resolution and $H_r$ is a low pass

filter whose support depends on $r$. Then, the problem consists of finding the right level of resolution $r$, at which it is possible to automatically extract the blotch with a simple binarization (see Fig. 2b). The level of resolution is set to be the point which realizes a good separation between the main image components in the rate-distortion curve. The latter is used in signal compression and relates the number of bits used for coding a given signal to the error of the corresponding approximation (Gonzalez and Woods, 2002). Here, the rate is taken as the resolution of our image, since it indicates the quantity of information. The distortion is measured via the number of lost bins, i.e. zero bins of the image histogram.
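A minimal sketch of how the family $Y_r$ and the distortion measure could be computed is given below. It assumes a separable Gaussian low pass filter with $\sigma = r/2$ and edge replication at the borders; neither choice is specified in the paper, and all function names are hypothetical.

```python
import math


def gaussian_kernel(radius):
    """1-D Gaussian taps on [-radius, radius], normalised to sum to 1."""
    if radius == 0:
        return [1.0]
    sigma = radius / 2.0  # assumption: the paper does not fix sigma
    taps = [math.exp(-(i * i) / (2.0 * sigma * sigma))
            for i in range(-radius, radius + 1)]
    total = sum(taps)
    return [t / total for t in taps]


def blur_1d(signal, kernel):
    """Convolve with edge replication so the output keeps the input length."""
    radius = len(kernel) // 2
    last = len(signal) - 1
    return [sum(w * signal[min(max(i + j - radius, 0), last)]
                for j, w in enumerate(kernel))
            for i in range(len(signal))]


def blur_image(image, radius):
    """Separable blur: filter every row, then every column."""
    kernel = gaussian_kernel(radius)
    rows = [blur_1d(row, kernel) for row in image]
    cols = [blur_1d(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


def count_zero_bins(image, levels=256):
    """Number of grey levels in [0, levels) taken by no pixel after rounding."""
    used = {min(max(int(round(v)), 0), levels - 1)
            for row in image for v in row}
    return levels - len(used)


def rate_distortion_curve(image, max_radius):
    """g(r) for r = 0..max_radius: zero bins of the histogram of Y_r."""
    return [count_zero_bins(blur_image(image, r))
            for r in range(max_radius + 1)]
```

The list returned by `rate_distortion_curve` plays the role of the curve of zero bins against filter radius.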

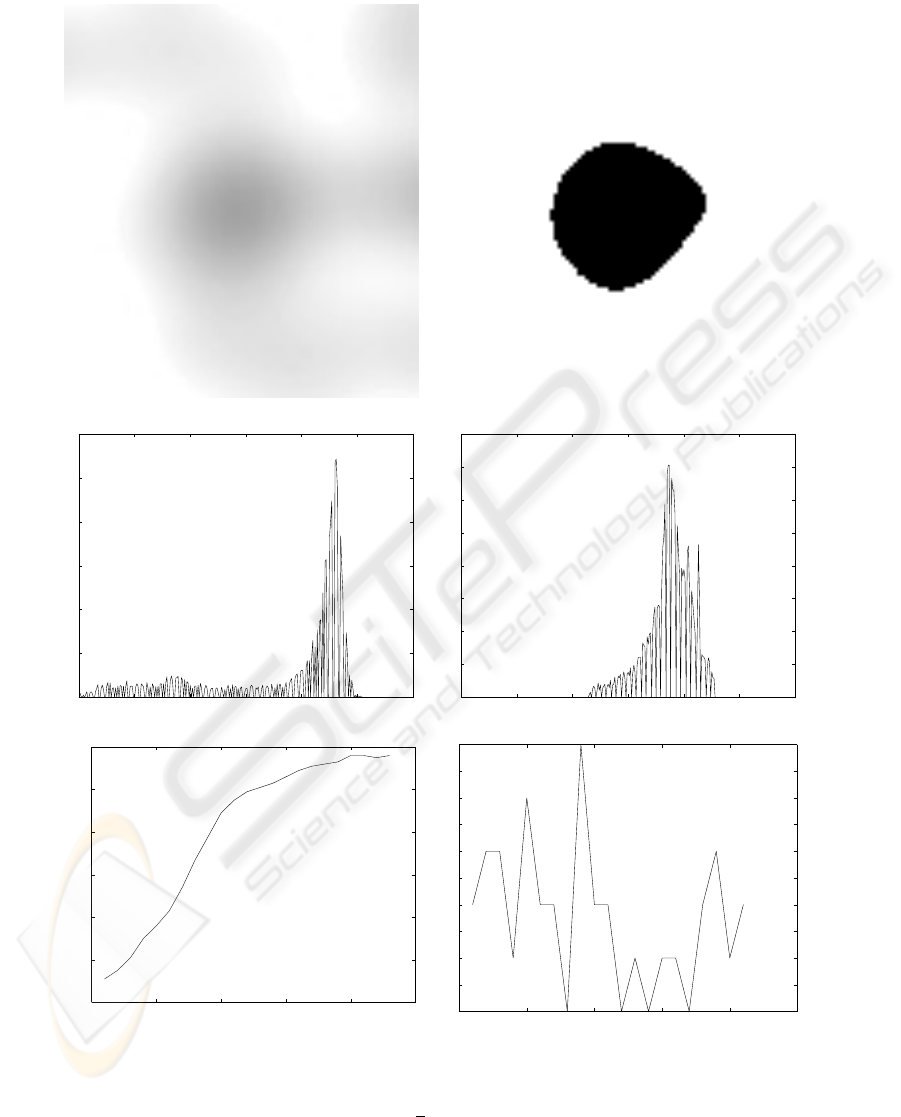

Figs. 2c and 2d show that the blurring effect cor-

responds to a regularization of the histogram of the

luminance component. It consists of a reduction of its

admissible values in a continuous manner. However,

this reduction is not proportional to the level of the

blurring but it is faster for smaller levels, slowing as

the blurring increases. The Occam filter strategy as in

(Natarajan, 1995) can be applied to achieve the best

VISAPP 2006 - IMAGE FORMATION AND PROCESSING

Figure 2: Top) Blurred paper with a semi-transparent blotch (a); its corresponding mask (b). Middle) Original image histogram (c); blurred image histogram (d). Bottom) Rate-distortion curve (e), plotting the number of zero bins against the filter radius, and its discrete second derivative (f). The level of the optimum blurring has been automatically selected using an Occam filter based strategy, i.e. selecting the maximum ($r = 10$) of the discrete second derivative (f) of the rate-distortion curve (e) (see text for details).

level of blurring, i.e. the trade-off between regularization and the number of significant components of the scene. More precisely, we construct the curve of the number of zero bins of the histogram versus the level of blurring, as depicted in Fig. 2e, and we take the modulus maximum of its second derivative, i.e. the point having the greatest change of curvature (see Fig. 2f). Hence, $\forall\, r \in \mathbb{R}^+$, let $\Gamma_r = g(r)$ be the number of zero bins of the histogram of $Y_r$; then the selected resolution $\bar{r}$ satisfies

$$\bar{r} : \max_{r \in \mathbb{R}^+} \left| \frac{\partial^2}{\partial r^2} g(r) \right| = \left| \frac{\partial^2}{\partial r^2} g(r) \right|_{r = \bar{r}}.$$

It is worth noticing that in the experiments r is a

discrete variable and then also the second derivative

must be discretized.

If $H_r$ is a Gaussian function, the resolution $r$ will correspond to the radius of the filter.
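Since $r$ is discrete in practice, the selection rule can be sketched with a central second difference. This is only an illustration under the assumption that $g$ is sampled at unit steps $r = 0, 1, 2, \dots$; the name `select_resolution` is hypothetical.

```python
def select_resolution(g):
    """Return the index r that maximises |g[r+1] - 2*g[r] + g[r-1]|.

    `g` holds the zero-bin counts for r = 0, 1, ..., len(g) - 1. The two
    end points are skipped because the central difference is undefined there.
    """
    if len(g) < 3:
        raise ValueError("need at least three samples of g(r)")
    best_r, best_value = 1, -1.0
    for r in range(1, len(g) - 1):
        second_diff = abs(g[r + 1] - 2 * g[r] + g[r - 1])
        if second_diff > best_value:
            best_r, best_value = r, second_diff
    return best_r
```

Ties are resolved in favour of the smallest radius, which is a design choice of this sketch rather than something stated in the paper.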

The final mask will then be the blurred image quantized to 2 levels, i.e. binarized, as shown in Fig. 2b. Hence, the output of the detection phase is

$$\bigcup_{i=1}^{K} B_i = \{(x, y) \in \mathbb{R}^2 : |Y_r(x, y)| > T\},$$

where $T$ is the mean value of $Y_r$, $B_i$ is the $i$-th blotch and $K$ is the number of non-intersecting detected blotches.
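The thresholding and the count of disjoint blotches can be sketched as below. By default the comparison follows the inequality exactly as printed above; because blotches are described as darker than the paper, an optional flag selects the pixels below the mean instead. The flag, the 4-connectivity and the function names are all assumptions of this sketch.

```python
def detect_blotches(blurred, select_darker=False):
    """Binary mask from the blurred luminance, thresholded at its mean."""
    pixels = [v for row in blurred for v in row]
    threshold = sum(pixels) / len(pixels)
    if select_darker:
        # Assumed alternative polarity: keep pixels darker than the mean.
        return [[v < threshold for v in row] for row in blurred]
    return [[abs(v) > threshold for v in row] for row in blurred]


def count_regions(mask):
    """Count 4-connected regions of True pixels (the K disjoint blotches)."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    regions = 0
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            regions += 1
            stack = [(y0, x0)]
            seen[y0][x0] = True
            while stack:  # iterative flood fill of one region
                y, x = stack.pop()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
    return regions
```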

2.2 Restoration

From the detection phase, we obtain the region of the blotch $B$. We assume that it could contain text that must be preserved and possibly recovered. We also assume that the text is darker than the background, but it is also possible to consider the symmetric case. According to the visibility laws, we perform our algorithm along image rows and then along columns. In fact, it is well known that the human visual system has a preferential sensitivity to these two orientations (Hontsch and Karam, 2000). The restored image will be the combination of the results achieved in both directions. From now on, we will consider a row of the luminance component of the image.

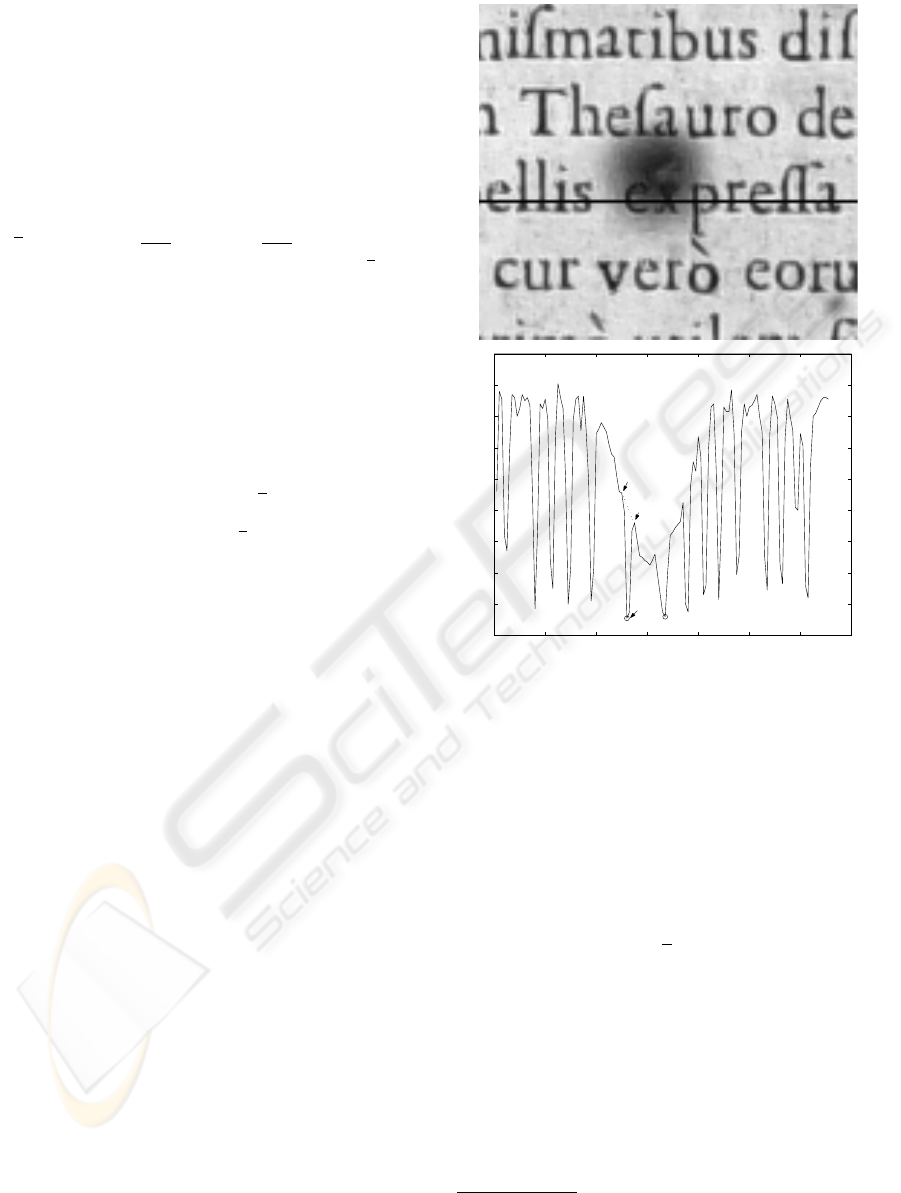

Three different kinds of information can be distinguished in a row of the image intersecting the region $B$: text, blotch and background (see Fig. 3). Text is the darkest component and it is represented by minima in the signal of Fig. 3. The first phase of the restoration process consists of separating text from the remaining components of $B$ with respect to a perception based threshold: characters will correspond to those minima in the analyzed signal whose energy exceeds the minimum energy value for a perceivable pixel. From now on we will indicate with $M$ the set of minima of the row signal, while $N$ will represent the set of maxima. Hence, the energy $E_k$ of each candidate minimum $M_k$ is defined as the area of the triangle whose vertices are $M_k$ and its adjacent maxima $N_k^l$ and $N_k^r$ (see Fig. 3 and footnote 1).

Figure 3: Row no. 69 of Fig. 1c intersecting the detected region, with a minimum $M_k$ and its adjacent maxima $N_k^l$ and $N_k^r$ marked. The lowest minima correspond to the text while the envelope of maxima outside the degraded region gives a good estimation of the background.

On the other hand, the energy for a just perceivable pixel is

$$E_m = \frac{1}{2}\,\Delta f\,\Delta x,$$

where ∆f is the minimum detection threshold

(Hontsch and Karam, 2000) and ∆x is the average of

the distance between two maxima points. The min-

imum detection threshold is computed with respect

to the background f. It represents the least perceiv-

able difference of luminance values between two sub-

sequent pixels. It can be computed from the Weber’s

law (Gonzalez and Woods, 2002), i.e.

Footnote 1: The subscript indicates the associated minimum while the superscript indicates the position (left or right) with respect to it.

Figure 4: Top) Cross section of the row in Fig. 3, with $M_k$, $N_k^l$ and $N_k^r$ marked. Bottom) Degraded (dotted) and restored row using the proposed method (solid). The restoration phase suitably increases the value of pixels between text components, leaving minima corresponding to the text unchanged.

$$\frac{\Delta f}{f} = c \qquad (1)$$

where $c = 0.02$. The latter gives the minimum perceivable difference of luminance values between uniform objects. In this work we compute the background value $f$ as the average of the maxima points that are not included in the blotch region, since the background is assumed to be the lightest component of the image.
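The energy test on the minima of a row can be sketched as follows. The sketch assumes that the adjacent maxima of a minimum are found by climbing the signal on each side, that $\Delta x$ is the mean distance between the two maxima enclosing each minimum, and that the background value $f$ is supplied by the caller; the function names are hypothetical.

```python
def triangle_area(p, q, s):
    """Area of the triangle with vertices p, q, s given as (x, value) pairs."""
    return 0.5 * abs((q[0] - p[0]) * (s[1] - p[1])
                     - (s[0] - p[0]) * (q[1] - p[1]))


def minima_with_peaks(row):
    """Yield (left_peak, minimum, right_peak) indices for each local minimum."""
    for k in range(1, len(row) - 1):
        if row[k] < row[k - 1] and row[k] <= row[k + 1]:
            left = k
            while left > 0 and row[left - 1] >= row[left]:
                left -= 1  # climb to the adjacent maximum on the left
            right = k
            while right < len(row) - 1 and row[right + 1] >= row[right]:
                right += 1  # climb to the adjacent maximum on the right
            yield left, k, right


def text_minima(row, background, c=0.02):
    """Indices of minima whose energy E_k exceeds E_m = 0.5 * (c * f) * dx."""
    triples = list(minima_with_peaks(row))
    if not triples:
        return []
    mean_dx = sum(r - l for l, _, r in triples) / len(triples)
    e_min = 0.5 * (c * background) * mean_dx
    return [k for l, k, r in triples
            if triangle_area((l, row[l]), (k, row[k]), (r, row[r])) > e_min]
```

For instance, in the row `[200, 198, 200, 60, 200, 199, 200]` with `background=200.0` only the deep minimum at index 3 would be kept as a text candidate, since the two shallow dips have triangle areas below $E_m$.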

In order to improve text discrimination, the same

energy based analysis is performed on the cross sec-

tion of the analysed signal. The cross section corre-

sponds to the correction of each pixel value with its

local mean. Hence, it can be considered a high pass

filter, which tries to enhance text high frequencies (see

Fig. 4). The number of taps of the filter is the peak

of the histogram of the distance between adjacent ex-

trema points in the original signal.
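A possible reading of the cross section is sketched below: each sample is corrected by the mean of a window centred on it, and the window length is taken as the most frequent distance between adjacent extrema. The handling of the row borders (shorter windows) and the function names are assumptions of this sketch.

```python
from collections import Counter


def extrema_indices(row):
    """Indices where the slope changes sign (strict local minima or maxima)."""
    return [i for i in range(1, len(row) - 1)
            if (row[i] - row[i - 1]) * (row[i + 1] - row[i]) < 0]


def dominant_spacing(row):
    """Most frequent distance between adjacent extrema (the histogram peak)."""
    idx = extrema_indices(row)
    gaps = Counter(b - a for a, b in zip(idx, idx[1:]))
    return gaps.most_common(1)[0][0] if gaps else 1


def cross_section(row, taps):
    """Subtract from each sample the mean of a `taps`-wide centred window."""
    half = taps // 2
    out = []
    for i in range(len(row)):
        window = row[max(0, i - half):min(len(row), i + half + 1)]
        out.append(row[i] - sum(window) / len(window))
    return out
```

A typical call would be `cross_section(row, dominant_spacing(row))`, after which the same energy analysis can be repeated on the result.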

Let $T = \{(x, y) \in B : Y(x, y) \text{ is text}\}$.

Once the text is extracted, the remaining pixels $\{Y(x, y)\}_{(x,y) \in B - T}$ of the blotch are attenuated according to their importance in the scene. In other words, each $Y(x, y)$ is attenuated by measuring its visibility with respect to the image background (contrast sensitivity) and its neighbouring pixels (contrast masking). The attenuation coefficient $\gamma_{cs}$ corresponding to the contrast sensitivity is computed from Weber's law (1) as follows

$$\gamma_{cs}(x, y) = \frac{f - Y(x, y)}{Y(x, y)}, \quad \forall\, (x, y) \in B - T.$$

The attenuation coefficient $\gamma_{cm}(x, y)$ measuring the contrast masking can be computed similarly. In this case, the background value $f$ in Weber's law is replaced by the intensity value of the pixel adjacent to $Y(x, y)$. The final restoration coefficient $\gamma(x, y)$ can then be written as

$$\gamma(x, y) = 1 + \gamma_{cm}(x, y) + \gamma_{cs}(x, y), \quad \forall\, (x, y) \in B - T.$$

As a matter of fact, it corresponds to an amplification coefficient of the luminance value, since the aim of restoration is the lightening of the blotch while the original degraded signal is not zero mean. Hence, the restored pixel $\tilde{Y}(x, y)$ is given by

$$\tilde{Y}(x, y) = \gamma\, Y(x, y), \quad \forall\, (x, y) \in B - T.$$
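The restoration of one row can be sketched as follows. The paper does not say which neighbour acts as the adjacent pixel in the contrast masking term, so the left neighbour in the degraded row is assumed here; the form $\gamma_{cm} = (Y_{adj} - Y)/Y$ is our reading of "computed similarly", and the name `restore_row` is hypothetical.

```python
def restore_row(row, blotch, text, background):
    """Apply Y~ = gamma * Y to the blotch pixels of a row that are not text.

    gamma = 1 + gamma_cm + gamma_cs, with
      gamma_cs = (f - Y) / Y        contrast sensitivity, f = background
      gamma_cm = (Y_adj - Y) / Y    contrast masking, Y_adj = adjacent pixel
    """
    restored = list(row)
    for i, y in enumerate(row):
        if not blotch[i] or text[i] or y == 0:
            continue  # outside B - T, or undefined ratio for a zero pixel
        # Assumption: the adjacent pixel is the left neighbour (right at i = 0).
        adjacent = row[i - 1] if i > 0 else row[min(1, len(row) - 1)]
        gamma_cs = (background - y) / y
        gamma_cm = (adjacent - y) / y
        restored[i] = (1.0 + gamma_cm + gamma_cs) * y
    return restored
```

Under these assumptions the product simplifies to $\gamma Y = f + Y_{adj} - Y$, so restored values can leave the valid luminance range; a final clipping step, which the paper does not describe, may be needed in practice.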

The restored row in Fig. 3 is depicted in Fig. 4. It is worth stressing that $\gamma_{cs}$ tries to bring the restored pixel value close to the background, while $\gamma_{cm}$ regulates the relationship between subsequent pixels and thus preserves the local image texture.

For the chrominance components $C_b$ and $C_r$, a mean value of the color associated to the background is substituted for the degraded one.

3 SOME EXPERIMENTAL RESULTS

The proposed model has been tested on scanned copies of real antique documents. Over 50 images of different sizes, scanned at 300 dpi, have been processed. They have been kindly supplied by Istituto per le Applicazioni del Calcolo and Biblion Centro Studi sul Libro Antico Onlus. Since their original clean version is unknown, there are no objective measures, such as MSE or similar, for evaluating the final result. This fact emphasizes the importance of the use of visibility laws in both detection and restoration phases. A successful restoration tool has to hide the degradation without introducing artifacts, i.e. without changing either the local or the global content of the scene.

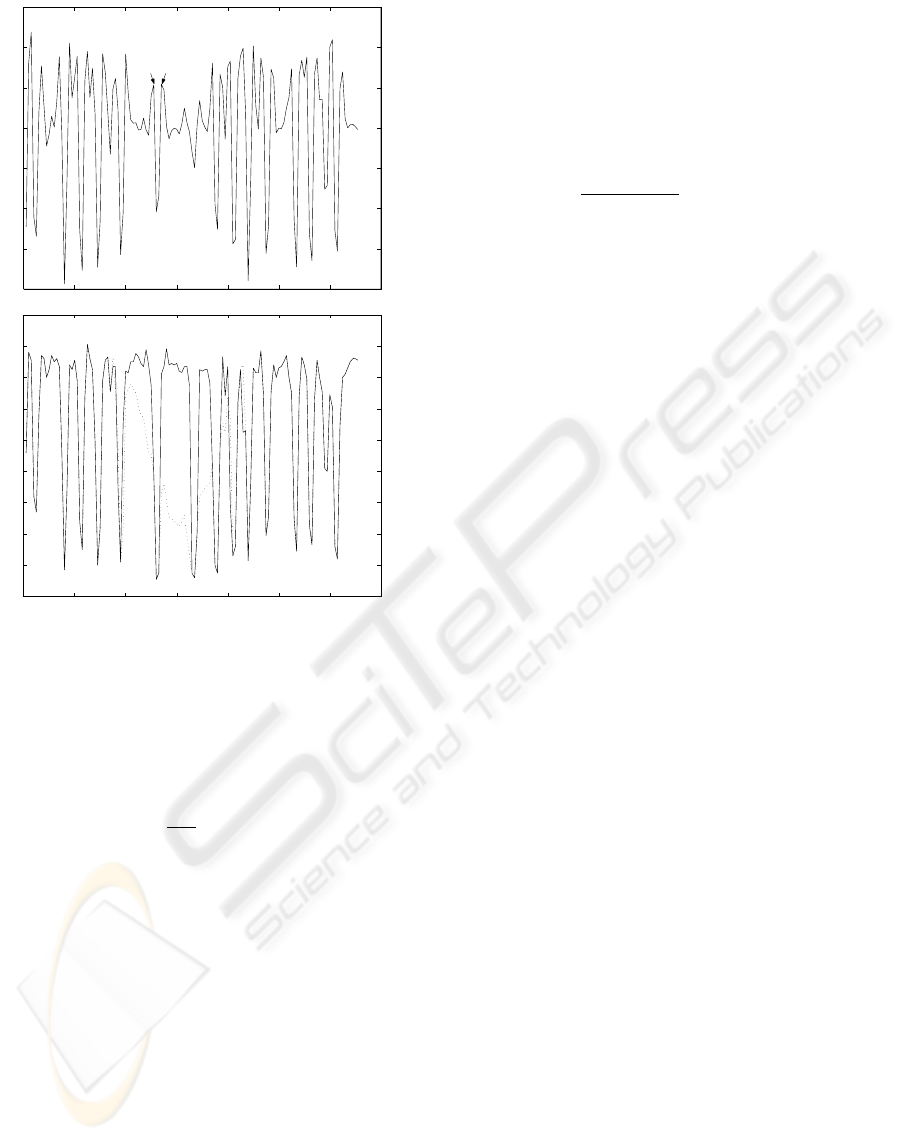

In Fig. 5 some results achieved on selected test images are shown. The original degraded images are depicted in Figs. 1b and 1c. They are representative


of two different stages of the degradation. In the first

case (topmost) the blotch has altered the image but the

text is quite distinguishable. The bottommost figure

represents a difficult case of study, since the colour

of the blotch approaches that of the text. Notice that

the letters e and x are almost completely covered by

humidity in their topmost part, as shown in Fig. 1c.

Nonetheless, the proposed algorithm is able to recover

them thanks to its analysis of the local visibility of a

given object. In particular, it is worth noticing the de-

tection and restoration of the letter x.

Another example is shown in Fig. 6, to further stress that the algorithm for detecting blotch regions is very effective as well as fast and simple, since it is based on a global contrast measure of the whole scene. Also in this case, the critical case represented by the bottommost blotch is detected by the proposed framework.

4 CONCLUSIONS

In this paper we have proposed a novel model for

detecting and restoring semi-transparent blotches on

archived documents. The model is based on the per-

ception laws regulating the human visual system. The

algorithm is very fast and completely automatic in both detection and restoration, under the assumption that text is darker than the background or vice versa. In fact, it performs very simple operations on the degraded area without requiring any user settings. This makes the model completely independent of the user and therefore usable also by non-expert operators.

Future research will be oriented to enlarging the set of case studies and to building visibility based filters (operators) able to model the different appearances of humidity degradation.

ACKNOWLEDGEMENTS

We wish to thank Digital Codex and Biblion Centro

Studi sul Libro Antico Onlus for providing some of

the pictures used in the experiments. The original

documents belong to M. Picone Archive of Istituto

per le Applicazioni del Calcolo (CNR) in Rome and

Redentoristi Library at S. Maria della Consolazione in

Venice, Italy. This work has been partially supported

by the project FIRB (RBNE039LLC).

Figure 5: Restored images in Fig. 1b and Fig. 1c using the

proposed algorithm.

REFERENCES

Brown, M. and Seales, W. (2004). Image restoration of arbitrarily warped documents. In IEEE Transactions on Pattern Analysis and Machine Intelligence, 26(10).

Bruni, V., Kokaram, A., and Vitulano, D. (2004). A fast re-

moval of line scratches in old movies. In Proc. of In-

ternational Conference on Pattern Recognition 2004.

Bruni, V. and Vitulano, D. (2004). A generalized model

for scratch detection. In IEEE Transactions on Image

Processing 14(1).

Cannon, M., Fugate, M., Hush, D., and Scovel, C. (2003). Selecting a restoration technique to minimize OCR error. In IEEE Transactions on Neural Networks, 14(3).

Easton, R., Knox, K., and Christens-Barry, W. (2004). Multispectral imaging of the Archimedes palimpsest. In Proc. of IEEE 32nd Conference on Applied Imagery Pattern Recognition 2003.

Foley, J. (1994). Human luminance pattern-vision mecha-

nism: Masking experiments require a new model. In

Journal of the Optical Society of America 11(6).

Gonzalez, R. and Woods, R. (2002). Digital Image Processing. Prentice Hall Inc., Upper Saddle River, New Jersey, 2nd edition.

Hontsch, I. and Karam, L. (2000). Locally adaptive percep-

tual image coding. In IEEE Transactions on Image

Processing 9(9).

Huggett, A. and Fitzgerald, W. (1995). Pseudo 2-

dimensional hidden markov models for document

restoration. In Proc. of IEE Colloquium on Docu-

ment Image Processing and Multimedia Environments

1995.

Hwang, W. and Chang, F. (1998). Character extraction for

documents using wavelet maxima. In Image and Vi-

sion Computing 16.

Leedham, G., Varma, S., Patankar, A., and Govindaraju, V.

(2002). Separating text and background in degraded

document images - a comparison of global threshold-

ing techniques for multi-stage thresholding. In Proc.

of International Workshop on the Frontiers of Hand-

writing Recognition 2002.

Mojsilovic, A., Hu, J., and Safranek, R. (1999). Perceptu-

ally based color texture features and metrics for image

retrieval. In Proc. of International Conference on Im-

age Processing 1999, 3.

Natarajan, B. (1995). Filtering random noise from deter-

ministic signals via data compression. In IEEE Trans-

actions On Signal Processing 43(11).

Oguro, M., Akiyama, T., and Ogura, K. (1997). Faxed document image restoration using gray level representation. In Proc. of the 4th Int. Conf. on Document Analysis and Recognition.

Ramponi, G., Stanco, F., Dello Russo, W., Pelusi, S., and

Mauro, P. (2005). Digital automated restoration of

manuscripts and antique printed books. In Proc. EVA

2005.

Sharma, G. (2001). Show-through cancellation in scans of

duplex printed documents. In IEEE Transactions on

Image Processing 10(5).

Shi, X. and Govindaraju, V. (2004). Historical document

image enhancement using background light intensity

normalization. In Proc. of International Conference

on Pattern Recognition 2004.

Solihin, Y. and Leedham, C. (1999). A new class of global thresholding techniques for handwriting images. In IEEE Transactions on Pattern Analysis and Machine Intelligence, 21.

Stanco, F., Ramponi, G., and de Polo, A. (2003). Towards

the automated restoration of old photographic prints:

a survey. In Proc. IEEE-EUROCON 2003.

Tonazzini, A., Bedini, L., and Salerno, E. (2004). Inde-

pendent component analysis for document restoration.

In International Journal on Documents Analysis and

Recognition 7.

Valverde, J. S. and Grigat, R. (2000). Optimum binariza-

tion of technical documents. In Proc. of International

Conference on Image Processing 2000.

Winkler, S. (2005). Digital Video Quality. John Wiley &

Sons, Ltd.

Yang, Y. and Yan, H. (2000). An adaptive logical method

for binarization of degraded document images. In Pat-

tern Recognition, 33.

Zheng, Q. and Kanungo, T. (2001). Morphological degrada-

tion models and their use in document image restora-

tion. In Proc. of International Conference on Image

Processing, Vol.1.

Figure 6: Top) Original image. Bottom) Detected blotches (black regions) using the algorithm in Section 2.1 and $r = 12$.
