A MULTI-AGENT BASED FRAMEWORK FOR SUPPORTING LEARNING IN ADAPTIVE AUTOMATED NEGOTIATION

Rômulo Oliveira, Herman Gomes

Federal University of Campina Grande

Av. Aprígio Veloso, 882 - Campina Grande, Paraíba, Brazil

Alan Silva, Ig Bittencourt, Evandro Costa

Federal University of Alagoas

Campus A.C. Simões - BR 104 - Km 14 - Maceió, Alagoas, Brazil

Keywords:

Trading agents, multi-agent architecture, cognitive models, machine learning.

Abstract:

We propose a multi-agent based framework for supporting adaptive bilateral automated negotiation during buyer-seller agent interactions. In this work, these interactions are viewed as a cooperative game (in the sense of two-person, nonzero-sum game theory), where the players try to reach an agreement about a certain negotiation object that is offered by one player to another. The final agreement is assumed to be satisfactory to both parties. To achieve this goal effectively, we model each player as a multi-agent system with its respective environment. In doing so, we aim to provide an effective means of collecting relevant information to help agents make good decisions, that is, to choose the “best way to play” among a set of alternatives. We then define a mechanism to model the opponent player and other mechanisms for monitoring relevant variables from the player's environment. We also maintain the context of the current game and keep the most relevant information from previous games. Finally, we integrate all of this information to refine the game strategies governing the multi-agent system.

1 INTRODUCTION

A recent trend within electronic commerce systems is to provide automated negotiation, which is one of the hottest research topics in Artificial Intelligence and Economics. Lately this topic has been receiving more attention from the scientific community, driven by the challenge of providing more realistic and feasible solutions. Following this track, we propose a multi-agent based framework for supporting adaptive bilateral automated negotiation during buyer-seller agent interactions. In this work, these interactions are viewed as a cooperative game (in the sense of two-person, nonzero-sum game theory), where the players try to reach an agreement about a certain negotiation object that is offered by one player to another. The final agreement is assumed to be satisfactory to both parties. A particular challenge for researchers in adaptive automated negotiation is how to effectively model the opponent and monitor the environment, here assumed to be dynamic.

To address these issues effectively, each player is modelled as a multi-agent system (MAS) with its respective environment. In doing so, we aim to provide an effective means of collecting relevant information to help agents make good decisions, that is, to choose the “best way to play” among a set of alternatives. A machine learning technique, a neural network, is then used to define a mechanism to model the opponent players, together with other mechanisms for monitoring relevant variables from the player's environment. The context of the current game is also maintained, and the most relevant information from previous games is kept. Finally, all of this information is integrated and used to refine the game strategies governing the multi-agent system. To this end, a Q-learning algorithm is used.

2 ENVIRONMENT DESCRIPTION

In an e-commerce negotiation system, the negotiator, rather than being seen as a single entity (Fatima et al., 2004; Narayanan and Jennings, 2005), is designed as a Multiagent System (MAS) whose members collaborate with one another (Filho and Costa, 2003; Bartolini et al., 2004; Sardinha et al., 2005). Necessary tasks such as the analysis of proposals, learning and the perception of changes in the market are thereby distributed among the members of the MAS.

The exchange of messages is an essential part of a negotiation; however, two things have to be resolved:


Oliveira R., Gomes H., Silva A., Bittencourt I. and Costa E. (2006).

A MULTI-AGENT BASED FRAMEWORK FOR SUPPORTING LEARNING IN ADAPTIVE AUTOMATED NEGOTIATION.

In Proceedings of the Eighth International Conference on Enterprise Information Systems - SAIC, pages 153-158

DOI: 10.5220/0002452601530158

Copyright © SciTePress

• Physical Communication between the agents: involves the whole interaction process between the agents, from the communication protocol to the software structure used. FIPA (Foundation for Intelligent Physical Agents) resolved part of this stage by developing a universal standard protocol for MAS. JADE (Java Agent DEvelopment Framework) implements FIPA's standards, resolving this item completely;

• Negotiation Protocol: the protocol that manages the exchange of proposals in the negotiation environment. Some works propose solutions for this stage (Filho and Costa, 2003).

In what follows, some fundamental details involved in building the framework are discussed, divided into three subsections: negotiation object, negotiation protocol and negotiator model.

2.1 The Negotiation Object

The negotiation object is represented by a vector of attributes, each of which takes values in a given range (Filho and Costa, 2003). In a negotiation, the negotiation object therefore has a subset of attributes that belongs to a predefined set of attributes of the negotiation object. In other words:

Consider $A = \{a_1, a_2, a_3, \ldots, a_m\}$, a set of possible attributes predefined for a negotiation process, and $C = \{c_1, c_2, c_3, \ldots, c_n\}$, a set of characteristics that compose a physical description of the negotiation object.

Finally, a negotiation object is defined as $o = \{C', A'\}$, where $A' \subseteq A$ and $C' \subseteq C$. Figure 1 illustrates this definition. All attributes $a \in A'$ and characteristics $c \in C'$ are decision variables with discrete or linear values, and their respective domains should be predefined. Throughout this paper, the decision variables are referred to as the set $(a_i \cup c_i)$ represented by the object $o_i$.
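As an illustration only, the definition of a negotiation object can be sketched in Python. The names `NegotiationObject` and `make_object`, and the example attribute names, are assumptions introduced for this sketch and are not part of the framework described in the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NegotiationObject:
    """Hypothetical container for o = {C', A'}."""
    characteristics: frozenset  # C', chosen from the possible set C
    attributes: frozenset       # A', chosen from the possible set A

    def decision_variables(self) -> frozenset:
        # The decision variables are the union of the chosen attributes
        # and the chosen characteristics.
        return self.attributes | self.characteristics


def make_object(possible_attrs, possible_chars, chosen_attrs, chosen_chars):
    """Build a negotiation object, enforcing A' subset of A and C' subset of C."""
    a_all, c_all = frozenset(possible_attrs), frozenset(possible_chars)
    a_sub, c_sub = frozenset(chosen_attrs), frozenset(chosen_chars)
    if not a_sub <= a_all:
        raise ValueError(f"unknown attributes: {sorted(a_sub - a_all)}")
    if not c_sub <= c_all:
        raise ValueError(f"unknown characteristics: {sorted(c_sub - c_all)}")
    return NegotiationObject(characteristics=c_sub, attributes=a_sub)


# Example with made-up names: two negotiable attributes, one characteristic.
car = make_object(
    possible_attrs={"price", "delivery_time", "payment_time"},
    possible_chars={"doors", "luggage_space"},
    chosen_attrs={"price", "delivery_time"},
    chosen_chars={"doors"},
)
```

Rejecting any chosen name outside the predefined sets keeps the subset conditions explicit instead of leaving them as an unchecked convention.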

Figure 1: Composition of a negotiation object. (Diagram labels: possible object's characteristics; possible decision variables in negotiation; negotiable object; object's characteristics chosen in negotiation; decision variables chosen in negotiation.)

2.2 Negotiation Protocol

The Negotiation Protocol is responsible for organizing, over time, the actions of the entities involved in the negotiation. According to (Filho and Costa, 2003; Debenham, 2003), the agents need a defined set of actions available during the negotiation: accept a proposal; give up the negotiation; send a proposal; suggest alternative products; suggest correlated products.

Algorithm 1 General Algorithm of Negotiation

1 - The Buyer-MAS searches for the Seller-MAS;
2 - The negotiators define the negotiation objects, with their weights and restrictions, in the pre-negotiation;
3 - One of the negotiators sends a proposal;
4 - The other negotiator analyzes its utility criteria and sends a response informing whether the proposal was accepted or not;
    IF Proposal Rejected THEN
        IF Negotiators want to continue THEN
            Go to step 3;
        ELSE
            Go to step 5;
        END IF
    END IF
5 - The negotiators evaluate the general result, which is kept in a history;

Each of these actions requires reasoning that takes into account domain knowledge and motivations, which justifies the use of AI techniques as a possible solution.

The Negotiation Protocol is divided into three steps, shown in Algorithm 1: pre-negotiation, negotiation and general evaluation of the negotiation. Each of these steps is discussed below.
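The proposal exchange at the core of Algorithm 1 (steps 3 to 5) can be sketched as a simple loop. This is a minimal sketch under stated assumptions: the function `negotiate`, its callback parameters and the `max_rounds` safety limit are hypothetical names introduced here, and the partner search and pre-negotiation (steps 1 and 2) are assumed to have already happened.

```python
from typing import Callable, List, Optional, Tuple

Proposal = dict


def negotiate(
    propose: Callable[[int], Proposal],
    respond: Callable[[Proposal], bool],
    wants_to_continue: Callable[[int], bool],
    max_rounds: int = 20,  # assumed safety limit, not part of Algorithm 1
) -> Tuple[Optional[Proposal], List[Tuple[Proposal, bool]]]:
    """Run steps 3 to 5 of Algorithm 1 for two already matched negotiators."""
    history: List[Tuple[Proposal, bool]] = []
    for round_no in range(1, max_rounds + 1):
        proposal = propose(round_no)       # step 3: send a proposal
        accepted = respond(proposal)       # step 4: evaluate and answer
        history.append((proposal, accepted))
        if accepted:
            return proposal, history
        if not wants_to_continue(round_no):
            break                          # a negotiator gives up
    return None, history                   # step 5 evaluates the returned history


# Toy run: the seller lowers the price by 10 each round and the buyer
# accepts any price of 70 or less.
deal, history = negotiate(
    propose=lambda n: {"price": 100 - 10 * n},
    respond=lambda p: p["price"] <= 70,
    wants_to_continue=lambda n: True,
)

# Second toy run: the negotiators give up after the first rejection.
no_deal, short_history = negotiate(
    propose=lambda n: {"price": 100 - 10 * n},
    respond=lambda p: p["price"] <= 70,
    wants_to_continue=lambda n: False,
)
```

Returning the full history alongside the outcome mirrors step 5, where the general result is evaluated and stored for later learning.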

2.2.1 Pre-Negotiation

According to Filho and Costa (2003), the negotiation should start with a pre-negotiation, in which the agents define which negotiation object, and which of its attributes, should be considered in the negotiation. Preferences and restrictions on the negotiation object are agreed between the negotiators, as shown in Subsection 2.1.

Each attribute $a_i$ and characteristic $c_i$ considered in the negotiation is influenced by a weight $w_i$ (where $\sum_i w_i = 1$) that represents the degree of importance of the attribute in the negotiation. In this way, a negotiator can, for instance, give more importance to one attribute, such as price, than to others, such as delivery time. If the characteristics of the negotiated object are fixed and non-negotiable (for instance, a specific book), all the weights referring to $c_i$ will be zero.

The negotiator can request some personal data from its opponent, $D = \{d_1, d_2, d_3, \ldots, d_n\}$, with the aim of treating the negotiation in a differentiated way. The negotiator may then use only a subset $D' \subseteq D$.

2.2.2 Competitive Negotiation

Here the exchange of proposals takes place, aiming at a deal between the two parties. To reach a safe and successful deal, and to measure how interesting the generated proposals are, auxiliary functions are necessary (Faratin et al., 2002; Klein et al., 2003). With this in mind, each agent has two functions of its own, which are used often during the negotiation:

- Utility Function

This function evaluates how useful a proposal is. Considering the criteria defined by each negotiator, and the weight $w_i$ assigned to each attribute involved in the negotiation, the utility function $U_T$, for convenience, returns a value in the range $[0, 1]$.

$$U_T(P) = \sum_{i=1}^{n} w_i \, u_{a_i}(P), \quad \text{where } U_T(P) \in [0, 1] \qquad (1)$$
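Equation (1) can be illustrated with a short Python sketch. The function name `utility`, the per-attribute scoring functions and the value ranges below are assumptions made for the example; the paper does not specify how each $u_{a_i}$ is computed.

```python
import math


def utility(proposal, weights, scorers):
    """U_T(P) = sum_i w_i * u_{a_i}(P), with the weights summing to 1."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must sum to 1")
    total = 0.0
    for name, w in weights.items():
        score = scorers[name](proposal[name])  # plays the role of u_{a_i}(P)
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {name!r} is outside [0, 1]")
        total += w * score
    return total


# Hypothetical buyer for whom cheaper and faster is better.
scorers = {
    "price": lambda p: (200 - p) / 100,        # price assumed in [100, 200]
    "delivery_days": lambda d: (10 - d) / 9,   # delivery assumed in [1, 10] days
}
weights = {"price": 0.5, "delivery_days": 0.5}
u = utility({"price": 150, "delivery_days": 1}, weights, scorers)
```

Because every per-attribute score lies in $[0, 1]$ and the weights sum to 1, the weighted sum is guaranteed to stay in $[0, 1]$ as well.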

- Similarity Function

This is a function based on fuzzy similarity that calculates the degree of similarity between two proposals, returning values in the range $[0, 1]$. Two proposals are similar when their contents (attributes and values) are similar. The degree of similarity is a physical measure of resemblance and is not influenced by the utility of the proposals. Two proposals can therefore have a high degree of similarity and yet different utilities, and vice versa.

Consider the following proposals, each of which is based on and composed of a single object.

$$R = \{r_{a_1}, r_{a_2}, \ldots, r_{a_n}, r_{c_1}, r_{c_2}, \ldots, r_{c_m}\}$$

$$S = \{s_{a_1}, s_{a_2}, \ldots, s_{a_n}, s_{c_1}, s_{c_2}, \ldots, s_{c_m}\}$$

The function that calculates the similarity between two attributes $r_i, s_i$ is given by $sim(r_i, s_i) = 1 - |h_i(r_i) - h_i(s_i)| \in [0, 1]$, where $h_i()$ is the similarity function for the $i$-th attribute, which returns values in the range $[0, 1]$.

Now, the function $SIM()$ that calculates the similarity between two proposals is defined by:

$$SIM(R, S) = \sum_{i} \big( w_i \cdot sim(r_i, s_i) \big) \in [0, 1] \qquad (2)$$
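The two similarity functions can be sketched as follows. This is an illustrative sketch: the function names and the particular $h_i$ functions (simple normalizations over assumed value ranges) are not taken from the paper.

```python
import math


def attribute_similarity(h, r_value, s_value):
    """sim(r_i, s_i) = 1 - |h_i(r_i) - h_i(s_i)|, with h_i mapping into [0, 1]."""
    return 1.0 - abs(h(r_value) - h(s_value))


def proposal_similarity(r, s, weights, h_funcs):
    """SIM(R, S) = sum_i w_i * sim(r_i, s_i), as in Equation (2)."""
    return sum(
        w * attribute_similarity(h_funcs[name], r[name], s[name])
        for name, w in weights.items()
    )


# Assumed normalizations: price in [0, 100], delivery in [0, 10] days.
h_funcs = {
    "price": lambda p: p / 100,
    "delivery_days": lambda d: d / 10,
}
weights = {"price": 0.5, "delivery_days": 0.5}

r = {"price": 50, "delivery_days": 2}
s = {"price": 100, "delivery_days": 2}
sim_value = proposal_similarity(r, s, weights, h_funcs)
```

In this example the prices differ by half of the assumed range and the delivery times are identical, so the weighted similarity is 0.5 × 0.5 + 0.5 × 1 = 0.75, whatever utility each party assigns to the proposals.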

When a proposal $P_1$ is received, the negotiator agent responsible for receiving it can perform any of the actions mentioned at the beginning of this section. In addition, to send another proposal $P_2$, the negotiator must have a way to measure the received proposal, and then:

• Generate proposals $P_2^i$ with utility higher than the utility of $P_1$;

• Choose, among the generated proposals $P_2^i$, the one most similar to $P_1$;

• Send the proposal;
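The three steps above can be sketched as a selection function. This is a minimal sketch under stated assumptions: `select_counter_proposal`, the toy seller utility and the toy price similarity are hypothetical, and how the candidate proposals are generated is left outside the sketch.

```python
from typing import Callable, Iterable, Optional


def select_counter_proposal(
    received: dict,
    candidates: Iterable[dict],
    utility_fn: Callable[[dict], float],
    similarity_fn: Callable[[dict, dict], float],
) -> Optional[dict]:
    """Keep candidates that beat the received proposal in utility,
    then return the one most similar to the received proposal."""
    threshold = utility_fn(received)
    better = [c for c in candidates if utility_fn(c) > threshold]
    if not better:
        return None  # nothing improves on the received proposal
    return max(better, key=lambda c: similarity_fn(c, received))


def seller_utility(p):
    # Toy seller: a higher price is better (price assumed in [0, 100]).
    return p["price"] / 100


def price_similarity(a, b):
    # Toy similarity on a single attribute.
    return 1 - abs(a["price"] - b["price"]) / 100


counter = select_counter_proposal(
    received={"price": 40},
    candidates=[{"price": 30}, {"price": 50}, {"price": 80}],
    utility_fn=seller_utility,
    similarity_fn=price_similarity,
)
```

Here the candidates priced at 50 and 80 both improve the seller's utility, and the one at 50 is returned because it is closest to what the opponent offered, which makes it the more likely of the two to be accepted.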

This process is repeated until the negotiation finishes, either with a deal or without one (Zeng and Sycara, 1997). A deal occurs in the negotiation process when a proposal is accepted by both parties (Jennings et al., 2001; Faratin et al., 2002). Several factors can influence changes in the behavior and attitudes of the negotiator agents: time, data from the negotiation environment, and the analysis of the opponent's profile (Faratin et al., 2002). An attitude can be viewed as an action of the agent that influences the trajectory of the negotiation (Zhang et al., 2004). Examples of attitudes are: sending an ultimatum, finishing the negotiation, changing the negotiation strategy, sending alternative products and showing correlated products, among others.

By grouping negotiator profiles according to their characteristics and associating with these profiles an adequate set of weights $w_i$, the chances of the proposals being accepted by the opponent negotiator improve considerably. Returning to the previous paragraph, we try to find a set of weights $w_i \in B'$ that best represents, or approximates, the set of weights $w_i \in B$.

2.2.3 General Evaluation of Negotiation

Here, an evaluation process classifies how good the negotiation was (general outcomes) and also the set of techniques used to reach these outcomes. Based on the data acquired during the various negotiations, several AI techniques are used: to tune the strategy and minimize the time spent in the process (Wong et al., 2000); data mining to propose correlated products; and Q-learning to reinforce the ideal set of weights for the elaboration of good proposals. The next section shows that this evaluation is kept as history and provided to the negotiator agent.

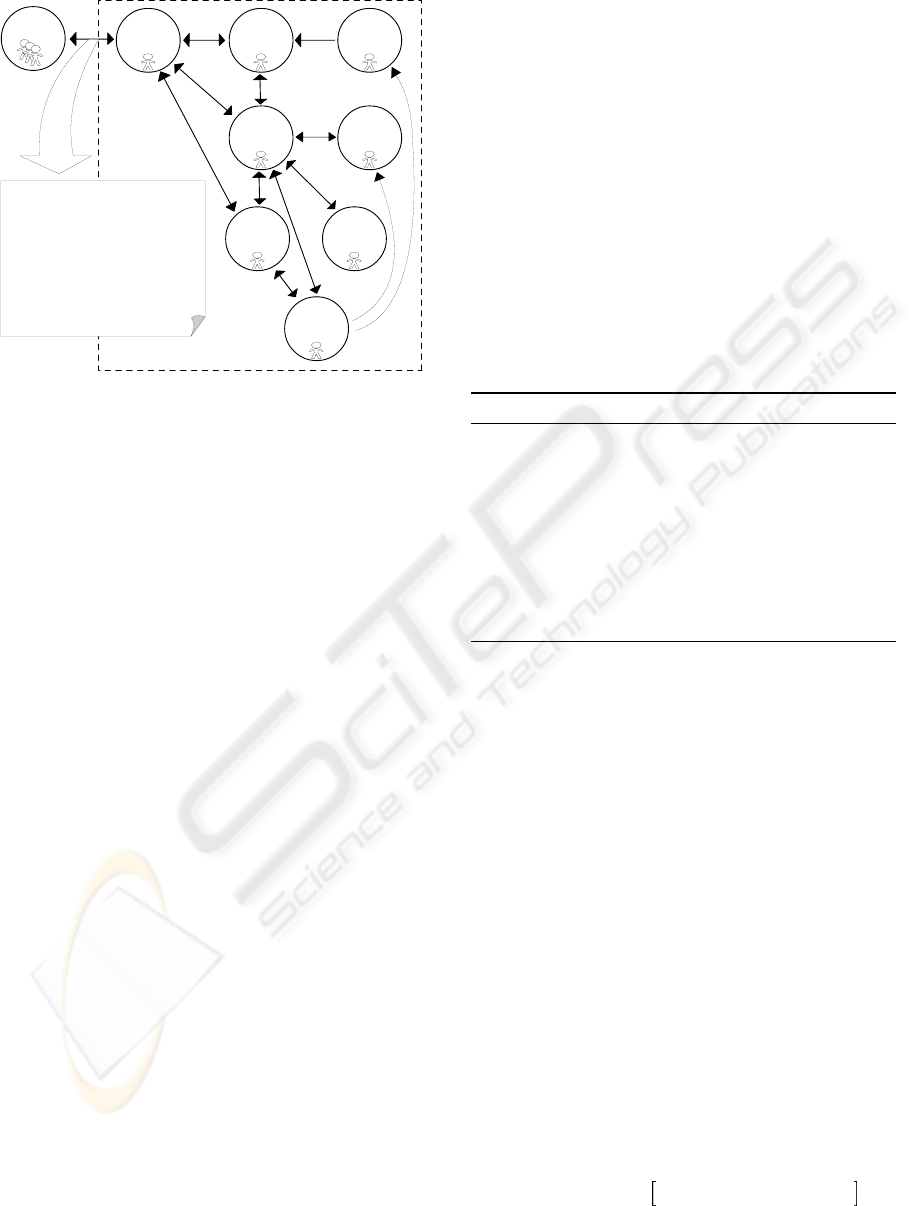

3 MAS ARCHITECTURE

This section shows how each agent behaves in an e-commerce scenario, following the protocol specifications discussed in the previous section. The agents, shown in Figure 2, are described below:

• Mediator Agent: is responsible for communicating with other agents, managing the decisions of the collaborator agents and executing the negotiation protocol specified in Subsection 2.2.

• Manager Negotiator Agent: this agent is responsible for keeping the status of each negotiation in progress. The agent also receives information about existing profiles from the Profile Cataloguer Agent (flow “b” in Figure 2), together with the credibility level.

• Manager Proposal Agent: this agent is responsible for generating a good proposal for a negotiation in progress. It asks the Manager Strategies Agent for the best strategy to use when sending a proposal to the partner MAS in question (flow “g” in Figure 2).


Figure 2: Negotiator MAS - Architecture. (Diagram components: Mediator Agent, Partner MAS, Manager Negotiation Agent, Profile Cataloguer Agent, Historical Agent, Strategies Manager Agent, Proposal Generator Agent, Evaluator Agent and Similarity Reckoner Agent, connected by internal flows labelled “a” to “l”. Numbered messages: 1 - request authentication; 2 - answer authentication; 3 - define scenario in negotiation; 4 - configure pre-negotiation; 5 - send agreement proposal; 6 - answer agreement proposal; 7 - configure cooperative negotiation; 8 - send mutual benefit proposal; 9 - answer mutual benefit proposal.)

• Evaluator Agent: this agent is responsible for determining whether a proposal is satisfactory at a given moment (flows “f” and “j” in Figure 2).

• Historical Agent: this agent is responsible for maintaining the history of each action performed by the agents. It always notifies the Manager Strategies and Profile Cataloguer agents (flows “h” and “e” in Figure 2, respectively).

• Profile Cataloguer Agent: this agent is able to identify the partners' profiles from various points of view and, at the same time, report the credibility level that can be attributed to a given client.

• Similarity Reckoner Agent: this agent is responsible for evaluating how similar two proposals are, according to certain criteria and to how relevant each of these criteria is to the evaluation (flow “i” in Figure 2).

• Manager Strategies Agent: this agent is responsible for inferring the strategy that is likely to be most effective in the negotiation.

4 LEARNING TECHNIQUES

One of the advantages of a multiagent approach is that artificial intelligence techniques can be used independently in each agent, and the system as a whole can then be optimized. In a negotiation process, learning is present in the elaboration of proposals, client classification, negotiation management and any other process associated with one of the agents of Section 3.

Profile grouping helps the Negotiator MAS identify the opponent and choose a strategy. The Profile Cataloguer Agent is responsible for grouping profiles. This agent uses the negotiation data it is sent to learn about these groups. Profile identification is performed with a Self-Organizing Map (SOM) neural network, chosen for its high generalization power. For each new business domain in which it is used, the network must be trained using the first n negotiations that occurred. In addition, the agent should retrain the network periodically so that it learns from new cases. Once trained, the network is able to quickly assign a new set of inputs to one of the generated profiles. The agent bases the classification on the client's personal data associated with features of the negotiated object. The sets of weights $w_i$ for each proposal can be used in the future; however, this requires a treatment to identify the standard variation in each negotiation. The learning technique used by the Profile Cataloguer Agent is given below.

Algorithm 2 Neural Network Algorithm

1 - Obtain the first n negotiations in the history;
2 - Define the quantity q of profiles to be learned;
3 - Build a SOM network with q output neurons and train it with the set of n negotiations in the history;
4 - Receive a new case and classify it as one of the q profiles;
5 - Use the set of strategies associated with the profile;
6 - At the end of the negotiation, insert the new case into the history;
7 - IF the number of new cases in the history justifies retraining
        THEN Go to Step 3
        ELSE Go to Step 4;
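Steps 3 and 4 of Algorithm 2 can be sketched with a very small one-dimensional SOM written in plain Python. This is only a sketch under stated assumptions: the function names, the linear decay schedules, the deterministic initialization from the training cases and the toy two-feature data are all choices made for the example, not details given in the paper.

```python
import math


def classify(weights, x):
    """Step 4: return the index of the best-matching neuron (the profile)."""
    return min(
        range(len(weights)),
        key=lambda k: sum((a - b) ** 2 for a, b in zip(weights[k], x)),
    )


def train_som(cases, q, epochs=30, lr0=0.5, sigma0=0.5):
    """Step 3: train a 1-D SOM with q output neurons on the history cases."""
    # Illustrative choice: initialise the neurons from evenly spaced cases.
    step = max(1, len(cases) // q)
    weights = [list(cases[(k * step) % len(cases)]) for k in range(q)]
    for epoch in range(epochs):
        decay = 1.0 - epoch / epochs
        lr = lr0 * decay                      # learning rate shrinks over time
        sigma = max(sigma0 * decay, 1e-3)     # neighbourhood radius shrinks too
        for x in cases:
            winner = classify(weights, x)
            for k, w in enumerate(weights):
                # Gaussian neighbourhood on the 1-D grid of neurons.
                h = math.exp(-((k - winner) ** 2) / (2 * sigma ** 2))
                for d in range(len(w)):
                    w[d] += lr * h * (x[d] - w[d])
    return weights


# Toy history: two well separated groups of normalised client features.
history_cases = [
    (0.10, 0.10), (0.12, 0.08), (0.09, 0.11),
    (0.90, 0.90), (0.88, 0.92), (0.91, 0.89),
]
som = train_som(history_cases, q=2)
profile_a = classify(som, (0.11, 0.10))
profile_b = classify(som, (0.89, 0.90))
```

Retraining (step 7) would simply call `train_som` again on the enlarged history; when that should happen is a policy decision the sketch does not model.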

The profile identification algorithm defines the profile groups; thus, before a new negotiation starts, the kind of profile to which the negotiation belongs is determined. During the negotiation, the Q-Learning approach is used with the aim of improving the negotiation process through the selection of better strategies.

The goal of the agent in a Reinforcement Learning problem is to learn an action policy that maximizes the expected long-term sum of values of the reinforcement signal, from any starting state (Bianchi et al., 2004). The Reinforcement Learning problem can be formulated as a discrete-time, finite-state, finite-action Markov Decision Process (MDP). In the present work, the problem is defined as an MDP.

The choice of better strategies can be modelled by a 4-tuple $(S, A, T, R)$, where: $S$ is a finite set of states; $A$ is a finite set of actions; $T : S \times A \to \Pi(S)$ is a state transition function, represented by a probability value, that signals the better strategy to be chosen; and $R : S \times A \to \mathbb{R}$ is a reward function.

In the Reinforcement Learning algorithm, with respect to the Markov Decision Process, the estimated reward function is updated as

$$\hat{Q}(s, a) \leftarrow \hat{Q}(s, a) + \alpha \left[ r + \gamma \max_{a'} \hat{Q}(s', a') - \hat{Q}(s, a) \right],$$

where $\hat{Q}(s, a)$ is the recursively updated estimate used to predict the best action; $\alpha = 1/(1 + visits(s, a))$, where $visits(s, a)$ is the total number of times this state-action pair has been visited up to and including the current iteration; $r$ is the reward received; and $\gamma$ is a discount factor ($0 \le \gamma < 1$).
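The update rule, with the visit-based learning rate $\alpha = 1/(1 + visits(s, a))$, can be sketched as a small tabular learner. The class name `QTable`, the zero initialization of the estimates and the string labels used for states and strategies are assumptions made for this example.

```python
import math
from collections import defaultdict


class QTable:
    """Tabular Q-learning with alpha = 1 / (1 + visits(s, a))."""

    def __init__(self, gamma=0.9):
        if not 0 <= gamma < 1:
            raise ValueError("gamma must satisfy 0 <= gamma < 1")
        self.gamma = gamma
        self.q = defaultdict(float)     # estimates start at 0 (assumed)
        self.visits = defaultdict(int)

    def update(self, s, a, r, s_next, next_actions):
        # The visit count includes the current iteration.
        self.visits[(s, a)] += 1
        alpha = 1.0 / (1 + self.visits[(s, a)])
        best_next = max((self.q[(s_next, a2)] for a2 in next_actions), default=0.0)
        td_error = r + self.gamma * best_next - self.q[(s, a)]
        self.q[(s, a)] += alpha * td_error
        return self.q[(s, a)]


table = QTable(gamma=0.9)
strategies = ["concede", "hold"]
# First visit: alpha = 1/2, so the estimate moves halfway towards r = 1.
first = table.update("s0", "concede", 1.0, "s1", strategies)
# Second visit: alpha = 1/3, applied to the remaining error of 0.5.
second = table.update("s0", "concede", 1.0, "s1", strategies)
```

With this schedule the step size shrinks as a state-action pair is visited more often, so early rewards move the estimate quickly and later ones refine it.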

In the e-commerce environment we use the approach proposed in (Bianchi et al., 2004), which includes a heuristic function in the action choice rule, that is, the rule that defines which action must be performed when the agent is in state $s_t$:

$$\pi(s_t) = \begin{cases} \arg\max_{a_t} \left[ \hat{Q}(s_t, a_t) + \xi H_t(s_t, a_t) \right] & \text{if } q \le p, \\ a_{random} & \text{otherwise,} \end{cases}$$

where:

• $H : S \times A \to \mathbb{R}$ is the heuristic function;

• $\xi$ is a real variable used to weight the influence of the heuristic function;

• $q$ is a random value drawn with uniform probability in $[0, 1]$, and $p$ ($0 \le p \le 1$) is the parameter that defines the exploration/exploitation balance;

• $a_{random}$ is a random action selected among the possible actions in state $s_t$.

The heuristic value $H_t(s_t, a_t)$ can then be defined as:

$$H(s_t, a_t) = \begin{cases} \max_{a} \hat{Q}(s_t, a) - \hat{Q}(s_t, a_t) + \eta & \text{if } a_t = \pi^H(s_t), \\ 0 & \text{otherwise.} \end{cases}$$
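The choice rule and the heuristic value can be sketched together. This is a minimal sketch under stated assumptions: the function names are hypothetical, the action suggested by the heuristic policy $\pi^H$ is passed in as the `suggested` argument, and $\eta$ is treated as a small constant because the text does not define it.

```python
import math
import random


def heuristic_value(q, state, action, actions, suggested, eta=0.1):
    """H(s, a): non-zero only for the action suggested by the heuristic policy."""
    if action != suggested:
        return 0.0
    best = max(q.get((state, a), 0.0) for a in actions)
    return best - q.get((state, action), 0.0) + eta


def choose_action(q, state, actions, suggested, xi=1.0, p=0.9, eta=0.1, rng=random):
    """Exploit argmax[Q + xi * H] when the random draw is <= p, else explore."""
    if rng.random() <= p:
        return max(
            actions,
            key=lambda a: q.get((state, a), 0.0)
            + xi * heuristic_value(q, state, a, actions, suggested, eta),
        )
    return rng.choice(actions)


q_values = {("s", "hold"): 0.5, ("s", "concede"): 0.2}
actions = ["hold", "concede"]

h_concede = heuristic_value(q_values, "s", "concede", actions, suggested="concede")
# With p = 1.0 the rule always exploits, which makes the example deterministic.
guided = choose_action(q_values, "s", actions, suggested="concede", p=1.0)
unguided = choose_action(q_values, "s", actions, suggested=None, p=1.0)
```

The heuristic lifts the suggested action just above the current best estimate (by $\eta$), so it is selected without overwriting the learned values, and learning can later overrule a poor suggestion.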

The reinforcement learning algorithm was mapped to the e-commerce domain through an MDP. Intuitively, a process is Markovian if and only if the state transitions depend only on the current state of the system and are independent of all preceding states (Narayanan and Jennings, 2005). Formally, in a Markov process, the conditional probability density function, $P$, is defined as:

$$P(x_n \mid x_1, x_2, \ldots, x_{n-1}) = P(x_n \mid x_{n-1})$$

The 4-tuple in the e-commerce environment is defined as:

• $S$: set of pairs of proposal and counter-proposal;

• $A$: finite set of strategies;

• $T : S \times A \to \Pi(S)$: state transition function, represented by a probability value, that signals the better strategy to be chosen;

• $R : S \times A \to \mathbb{R}$: reward function, described as a utility value defined by the similarity of the attributes.

5 EXPERIMENTAL ANALYSIS

Algorithm 3 The Heuristics Algorithm

Initialize $Q(s, a)$
Repeat:
    Visit the state $s$
    Select a strategy using the choice rule
    Receive the reinforcement $r(s, a)$ and observe the next state $s'$
    Update the values of $H_t(s, a)$
    Update the values of $Q_t(s, a)$ according to:
        $\hat{Q}(s, a) \leftarrow \hat{Q}(s, a) + \alpha \left[ r + \gamma \max_{a'} \hat{Q}(s', a') - \hat{Q}(s, a) \right]$
    Update the state $s \leftarrow s'$
Until some stop criterion is reached,
where $s = s_t$, $s' = s_{t+1}$, $a = a_t$ and $a' = a_{t+1}$.

Learning negotiation experiments were carried out using a vehicle sales scenario. In the experiments, only the seller has learning capabilities. The negotiation attributes A' were the product price, the delivery time and the payment time (Price = [$17000, $90000]; Delivery Time = {1 day, 2 days, 3 days, ...}; Payment Time = {1 month, 2 months, 3 months, ...}).

As characteristics C', the following attributes were defined: potency (hp), consumption, luggage space and quantity of doors (Potency = [67 hp, 400 hp]; Consumption = [8 km/liter, 16 km/liter]; Luggage Space = [180 liters, 300 liters]; Quantity of doors = {3 doors, 5 doors}).

The set D', introduced in Subsection 2.2.1, is represented by the attributes name, civil state, gender, finance, profession and age (Civil State = {Single, Married, Widowed, Divorced}; Gender = {Male, Female}; Finance = [$6000, $90000]; Profession = {Bricklayer, Programmer, Teacher, Journalist, Engineer, Architect, Doctor, Lawyer, Judge, Politician}; Age = [18, 80]).

Given this domain specification, 1000 negotiations were first simulated. For this purpose, 1000 clients were created with different characteristics, but with preferences coherent with those characteristics. For instance, for a client with characteristics D' = {Gender = Male, Civil State = Single, Age = 35, Finance = $60000, Profession = Lawyer}, the preferences are adjusted to: Weight = {Price = 0.6, Delivery Time = 0.05, Payment Time = 0.05, Potency = 0.1, Consumption = 0.1, Luggage Space = 0.05, Quantity of doors = 0.05}.
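As a quick check, the example weights can be verified against the normalization requirement of Subsection 2.2.1 (the weights must sum to 1). The dictionary keys and the helper `is_normalised` are names chosen for this sketch, and the last weight is read here as applying to the quantity of doors, which is an assumption.

```python
import math

# Example client weights from the text, keyed by hypothetical identifiers.
client_weights = {
    "price": 0.6,
    "delivery_time": 0.05,
    "payment_time": 0.05,
    "potency": 0.1,
    "consumption": 0.1,
    "luggage_space": 0.05,
    "quantity_of_doors": 0.05,  # assumed reading of the last listed weight
}


def is_normalised(weights):
    """True when every weight lies in [0, 1] and the weights sum to 1."""
    in_range = all(0.0 <= w <= 1.0 for w in weights.values())
    return in_range and math.isclose(sum(weights.values()), 1.0)


weights_ok = is_normalised(client_weights)
most_important = max(client_weights, key=client_weights.get)
```

For this client, price carries 60% of the total weight, so the seller's proposals are judged mainly on price.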

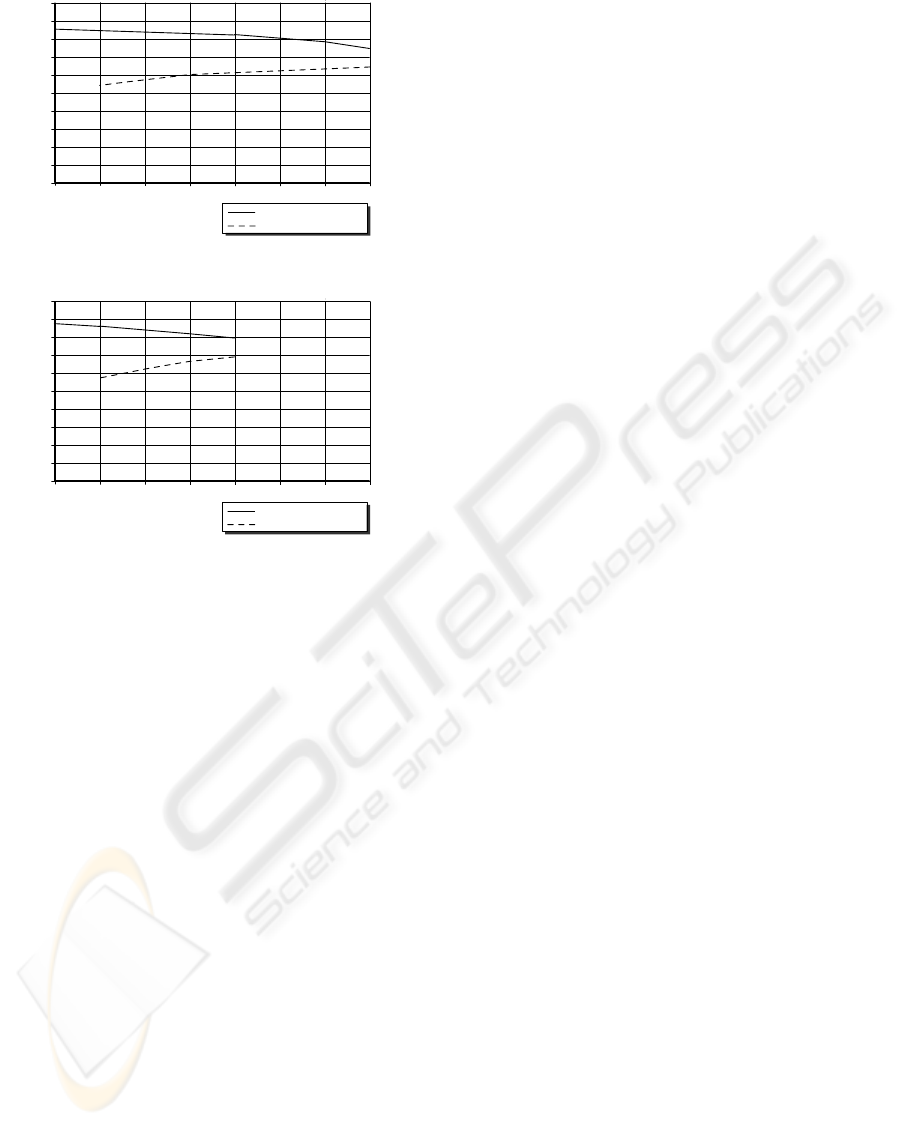

The outcomes of this first stage of the experiment are shown in Figure 3. The average time spent by the seller in a negotiation was 432 ms.

Using the techniques presented in Section 4 and the history of the 1000 negotiations of the first experiment, a second experiment was carried out with another 1000 randomly created clients. The outcomes are shown in Figure 4. In this experiment, the seller was able to decrease the average number of interactions with its clients and, consequently, to decrease the average time per negotiation to 207 ms, a reduction of about 52% relative to the 432 ms of the first experiment.


Figure 3: Before, without machine learning. (Plot of utility, from 0 to 1, against the number of interactions, from 1 to 8; series: Proposals Sent, Proposals Received and Average Utility of Seller.)

Figure 4: After, with machine learning. (Plot of utility, from 0 to 1, against the number of interactions, from 1 to 8; series: Proposals Sent, Proposals Received and Average Utility of Seller.)

6 FINAL REMARKS

This paper has presented a novel approach by proposing a software framework for supporting adaptive bilateral negotiation. The major contribution of this paper is the proposed decision-making apparatus. To model opponent players, an algorithm that uses self-organizing maps (neural networks) was proposed, together with a Q-Learning algorithm used basically to predict the next important actions. The improvements over existing approaches therefore include the combination of neural networks and Q-Learning within an appropriate software framework.

As future work, we aim to develop new components that implement other artificial intelligence techniques so that they can be compared with the existing ones; for example, case-based learning could be compared with neural networks with regard to player modelling.

REFERENCES

Bartolini, C., Preist, C., and Jennings, N. R. (2004). A Software Framework for Automated Negotiation. In Choren, R., Garcia, A., Lucena, C., and Romanovsky, A., editors, Software Engineering for Large-Scale Multi-Agent Systems, volume 3390 of Lecture Notes in Computer Science, pages 213–235. Springer-Verlag.

Bianchi, R. A. C., Ribeiro, C. H. C., and Costa, A. H. R. (2004). Heuristically accelerated q-learning: A new approach to speed up reinforcement learning. In Bazzan, A. L. C. and Labidi, S., editors, 17th Brazilian Symposium on Artificial Intelligence, volume 3171 of Lecture Notes in Computer Science, pages 245–254. Springer.

Debenham, J. K. (2003). An eNegotiation Framework. In Australian Conference on Artificial Intelligence, pages 833–846.

Faratin, P., Sierra, C., and Jennings, N. R. (2002). Using Similarity Criteria to Make Issue Trade-offs in Automated Negotiations. Artificial Intelligence, 142(2):205–237.

Fatima, S. S., Wooldridge, M., and Jennings, N. R. (2004). An Agenda Based Framework for Multi-issues Negotiation. Artificial Intelligence, 152(1):1–45.

Filho, R. R. G. N. and Costa, E. B. (2003). A Decision-Making Model to Support Negotiation in Electronic Commerce. In 3rd International Interdisciplinary Conference on Electronic Commerce (ECOM-03), pages 16–18, Gdansk, Poland.

Jennings, N. R., Faratin, P., Lomuscio, A. R., Parsons, S., Wooldridge, M., and Sierra, C. (2001). Automated Negotiation: Prospects Methods and Challenges. Group Decision and Negotiation, 10(2):199–215.

Klein, M., Faratin, P., Sayama, H., and Bar-Yam, Y. (2003). Protocols for Negotiating Complex Contracts. IEEE Intelligent Systems, 18(6):32–38.

Narayanan, V. and Jennings, N. R. (2005). An adaptive bilateral negotiation model for e-commerce settings. In Seventh IEEE International Conference on E-Commerce Technology.

Sardinha, J. A. R. P., Milidiú, R. L., Paranhos, P. M., Cunha, P. M., and de Lucena, C. J. P. (2005). An Agent Based Architecture for Highly Competitive Electronic Markets. In Russell, I. and Markov, Z., editors, FLAIRS Conference, pages 326–332. AAAI Press.

Wong, W. Y., Zhang, D. M., and Kara-Ali, M. (2000). Negotiating With Experience. In CSIRO Mathematical and Information Sciences.

Zeng, D. and Sycara, K. (1997). Benefits of Learning in Negotiation. In Proceedings of the 14th National Conference on Artificial Intelligence and 9th Innovative Applications of Artificial Intelligence Conference (AAAI-97/IAAI-97), pages 36–42, Menlo Park. AAAI Press.

Zhang, S., Ye, S., Makedon, F., and Ford, J. (2004). A Hybrid Negotiation Strategy Mechanism in an Automated Negotiation System. In Breese, J. S., Feigenbaum, J., and Seltzer, M. I., editors, EC ’04: Proceedings of the 5th ACM conference on Electronic commerce, pages 256–257, New York, NY, USA. ACM Press.

ICEIS 2006 - SOFTWARE AGENTS AND INTERNET COMPUTING
