IMAGE PREPROCESSING FOR CBIR SYSTEM

Tatiana Jaworska

Systems Research Institute Polish Academy of Sciences, Newelska 6 St, 01-447 Warsaw, Poland

Keywords: Content-based image retrieval (CBIR), image preprocessing, image segmentation, clustering, object extraction, texture extraction, discrete wavelet transformation.

Abstract: This article describes how images are prepared for a content-based image retrieval (CBIR) system. Our CBIR system is designed to support estate agents, and its database contains images of houses and bungalows. Our efforts have concentrated on extracting elements from an image and finding their characteristic features in an unsupervised way. Hence, the paper presents a segmentation algorithm based on pixel colour in the RGB colour space. Next, it presents a method of object extraction that yields separate objects ready to be entered into the database and recognized later. Finally, a novel method of texture identification based on the wavelet transformation is applied.

1 INTRODUCTION

Image processing for content-based image retrieval (CBIR) systems is a challenging task for the computer. Determining how to store images in large databases, and later how to retrieve information from them, is an active area of research in many computer science fields, including graphics, image processing, information retrieval and databases.

Although attempts have been made to perform CBIR efficiently on the basis of shape, colour, texture and spatial relations, the field has yet to attain maturity. A major problem in this area is computer perception: there remains a big gap between low-level features such as shape, colour, texture and spatial relations, and high-level features such as windows, roofs, flowers, etc.

The purpose of this paper is to investigate image processing with special attention to segmentation and the selection of separate objects from the whole image. To achieve this aim we present two new methods: a very fast algorithm for colour image segmentation, and a new approach to the description of textured objects using the discrete wavelet transformation.

2 CBIR CONCEPTION OVERVIEW

In the last 15 years, CBIR techniques have drawn much interest, and image retrieval techniques have been proposed in the context of searching for information in image databases. In the 1990s the Chabot project at UC Berkeley (Ogle, 1995) was initiated to study the storage and retrieval of a vast collection of digitized images. At the IBM Almaden Research Center, the QBIC CBIR system was developed by Flickner (Flickner, 1995) and Niblack (Niblack, 1993). This approach was further developed by Tan (Tan, 2001), Hsu (Hsu, 2000), and by F. Mokhtarian, S. Abbasi and J. Kittler (Mokhtarian, 1996) at the Department of Electronics and Electrical Engineering in the UK.

Our CBIR system is designed to support estate agents. The estate database contains images of houses, bungalows and other buildings. To be effective in presenting and choosing houses, the system has to be able to find the image of a house with defined architectural elements, for example windows, roofs, doors, etc. (Jaworska, 2005).

The first stage of our analysis is to split the original image into several meaningful clusters, each of which carries certain semantics in terms of human understanding of the image content. Then, suitable features are extracted from these clusters to represent the image content at the level of visual perception. For the sake of subsequent processes, such as object recognition, the image features should be selected carefully. At the same time, our efforts have concentrated on extracting elements from an image in an unsupervised way.

Jaworska T. (2007). IMAGE PREPROCESSING FOR CBIR SYSTEM. In Proceedings of the Fourth International Conference on Informatics in Control, Automation and Robotics, pages 375-378. DOI: 10.5220/0001644303750378. Copyright © SciTePress.

Figure 1: Example of an original image.

3 A NEW FAST ALGORITHM FOR OBJECT EXTRACTION FROM COLOUR IMAGES

We definitely prefer unsupervised techniques of image processing. Although there are many different methods of image segmentation, we began with two well-known clustering algorithms: C-means clustering (Seber, 1984), (Spath, 1985), and its later development, the fuzzy C-means clustering algorithm (FCM) (Bezdek, 1981). In our case we searched for clusters in two 3D colour spaces, RGB and HSV.
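As a point of reference, the hard C-means baseline can be sketched as below. This is a minimal, generic k-means over pixel colour vectors, assuming random initial centres and Euclidean distance; the function name `c_means` and its parameters are hypothetical, and the fuzzy variant (FCM) is not shown. It is not the author's implementation.

```python
import numpy as np

def c_means(pixels, c, iterations=50, seed=0):
    """Hard C-means (k-means) clustering of colour vectors.

    pixels: (N, 3) array of colour vectors, e.g. RGB or HSV triples.
    c: number of clusters. Returns (labels, centres).
    """
    x = np.asarray(pixels, dtype=np.float64)
    rng = np.random.default_rng(seed)
    # Random initial centres drawn from distinct pixels
    centres = x[rng.choice(len(x), size=c, replace=False)].copy()

    def assign(cs):
        # Squared Euclidean distance of every pixel to every centre, shape (N, c)
        d2 = ((x[:, None, :] - cs[None, :, :]) ** 2).sum(axis=-1)
        return d2.argmin(axis=1)

    for _ in range(iterations):
        labels = assign(centres)
        new_centres = centres.copy()
        for k in range(c):
            members = x[labels == k]
            if len(members):  # an empty cluster keeps its previous centre
                new_centres[k] = members.mean(axis=0)
        if np.allclose(new_centres, centres):
            break
        centres = new_centres
    return assign(centres), centres
```

For an (H, W, 3) image `img`, a label map would be `c_means(img.reshape(-1, 3), c=12)[0].reshape(img.shape[:2])`. The (N, c, 3) distance array keeps the sketch short but is memory-hungry for large images. When all colour points form one tight set, as reported above, such centres tend to split that set arbitrarily rather than along meaningful colours.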

Figure 2: The way of labelling the set of pixels. Regions I, II, III show pixel brightness, and the biggest value of the triple (R,G,B) determines its colour.

Unfortunately, the results were unsatisfactory. After examining the point distribution in both spaces (for all images), it turned out that the points formed one tight set. In figure 2 such a set is shown in RGB space, but the point distribution in HSV space is similar.

Figure 3: 12-cluster segmentation of fig. 1 obtained using the 'colour' algorithm.

These results forced us to work out a new algorithm that uses the colour information of a single point to a greater extent than the C-means algorithm does. To label a pixel, we choose the biggest value of the triple (R,G,B) and define it as the cluster colour. In this way we obtain three segments: red, green and blue. For a better result we divide each colour into three shades according to the darkness of the colour, shown as three regions (I, II, III) which determine point brightness. The idea of the segmentation is illustrated in figure 2. The radius of the dividing sphere is computed in the Euclidean measure:

$$ r = \frac{\sqrt{R_{max}^2 + G_{max}^2 + B_{max}^2}}{3} $$

where $R_{max} = G_{max} = B_{max} = 255$. Moreover, we added three segments, black, grey and white, for pixels for which R = G = B, according to their region (I, II, III). This gives 3 × 3 + 3 = 12 clusters in total. We call this the ‘colour’ algorithm.
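The labelling rule can be sketched as follows. This is an illustrative reading, not the author's code, and it rests on several assumptions: the three brightness regions are taken to be bounded by spheres of radius r and 2r centred at black (0, 0, 0); a tie between two dominant channels goes to the first one in (R, G, B) order, since the text does not say how ties are handled; and the function name `colour_labels` and the cluster numbering are hypothetical.

```python
import numpy as np

R_MAX = G_MAX = B_MAX = 255
# Radius of the dividing sphere: r = sqrt(Rmax^2 + Gmax^2 + Bmax^2) / 3, about 147.2
RADIUS = np.sqrt(R_MAX**2 + G_MAX**2 + B_MAX**2) / 3.0

def colour_labels(image):
    """Label each pixel of an (H, W, 3) RGB image with a cluster id in 0..11.

    Ids 0-8 encode hue * 3 + region for hue 0 = red, 1 = green, 2 = blue;
    ids 9, 10, 11 are black, grey and white (pixels with R == G == B).
    """
    pix = image.astype(np.float64)
    # Brightness region from the Euclidean distance to black (assumed boundaries):
    # 0 (I) for d < r, 1 (II) for r <= d < 2r, 2 (III) for d >= 2r
    dist = np.linalg.norm(pix, axis=-1)
    region = np.minimum((dist // RADIUS).astype(int), 2)
    # Dominant channel: index of the biggest value of (R, G, B); ties go to the first
    hue = np.argmax(pix, axis=-1)
    labels = hue * 3 + region
    # Achromatic pixels go to black / grey / white according to their region
    achromatic = (pix[..., 0] == pix[..., 1]) & (pix[..., 1] == pix[..., 2])
    labels[achromatic] = 9 + region[achromatic]
    return labels
```

Because each pixel is labelled independently with a few vectorized operations, the cost is linear in the number of pixels and no iterative optimisation is needed, which is consistent with the claim that the algorithm is very fast.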

Figure 3 presents the image shown in fig. 1 divided into 12 clusters using the above-described algorithm.

4 OBJECT EXTRACTION ON THE BASIS OF THE NEW ALGORITHM

Based on this segmentation, separate objects are obtained. By an object we mean an image of an architectural element such as a roof, chimney, door, window, etc.
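The text does not state how separate objects are cut out of the segmented image. One plausible approach, assumed here purely for illustration, is to split every colour cluster into its 4-connected regions and treat each region as a candidate object. The sketch below does this with a breadth-first flood fill; the function name `extract_objects` and the `min_size` filter for tiny specks are hypothetical.

```python
from collections import deque

import numpy as np

def extract_objects(labels, min_size=1):
    """Split a cluster-label map into 4-connected regions.

    Returns a list of (cluster_id, mask) pairs, one per connected region
    with at least min_size pixels, in raster-scan order of their first pixel.
    """
    h, w = labels.shape
    seen = np.zeros((h, w), dtype=bool)
    objects = []
    for y in range(h):
        for x in range(w):
            if seen[y, x]:
                continue
            cid = labels[y, x]
            mask = np.zeros((h, w), dtype=bool)
            queue = deque([(y, x)])
            seen[y, x] = True
            # Flood fill over 4-neighbours that carry the same cluster id
            while queue:
                cy, cx = queue.popleft()
                mask[cy, cx] = True
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and not seen[ny, nx] and labels[ny, nx] == cid:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if mask.sum() >= min_size:
                objects.append((int(cid), mask))
    return objects
```

A pure-Python loop keeps the sketch dependency-light; for full-size images a library routine for connected-component labelling would be considerably faster.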

After the objects were extracted, the following features were computed for each of them: average colour (shown in fig. 4), texture parameters, region-based shape descriptors, contour-based shape descriptors, and location in the image as a region-based representation.
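Two of the listed features, average colour and location, are simple enough to sketch directly. The block below assumes each object is given as a boolean mask over an (H, W, 3) image; the area and bounding box are added only as elementary stand-ins for region-based descriptors, not the descriptors actually used in the paper. The function name `object_features` and the dictionary keys are hypothetical.

```python
import numpy as np

def object_features(image, mask):
    """Elementary per-object features from an (H, W, 3) image and a boolean (H, W) mask."""
    ys, xs = np.nonzero(mask)
    avg_colour = image[mask].mean(axis=0)  # mean (R, G, B) over the object's pixels
    return {
        "average_colour": tuple(float(v) for v in avg_colour),
        "area": int(mask.sum()),
        # (top, left, bottom, right) in pixel coordinates
        "bbox": (int(ys.min()), int(xs.min()), int(ys.max()), int(xs.max())),
        # (row, column) of the object's centre of mass: its location in the image
        "centroid": (float(ys.mean()), float(xs.mean())),
    }
```

Painting every object's pixels with its `average_colour` reproduces the kind of rendering shown in fig. 4.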

ICINCO 2007 - International Conference on Informatics in Control, Automation and Robotics


Figure 4: Objects from fig. 2 presented in their average colours.

5 THE DETERMINATION OF TEXTURE PARAMETERS

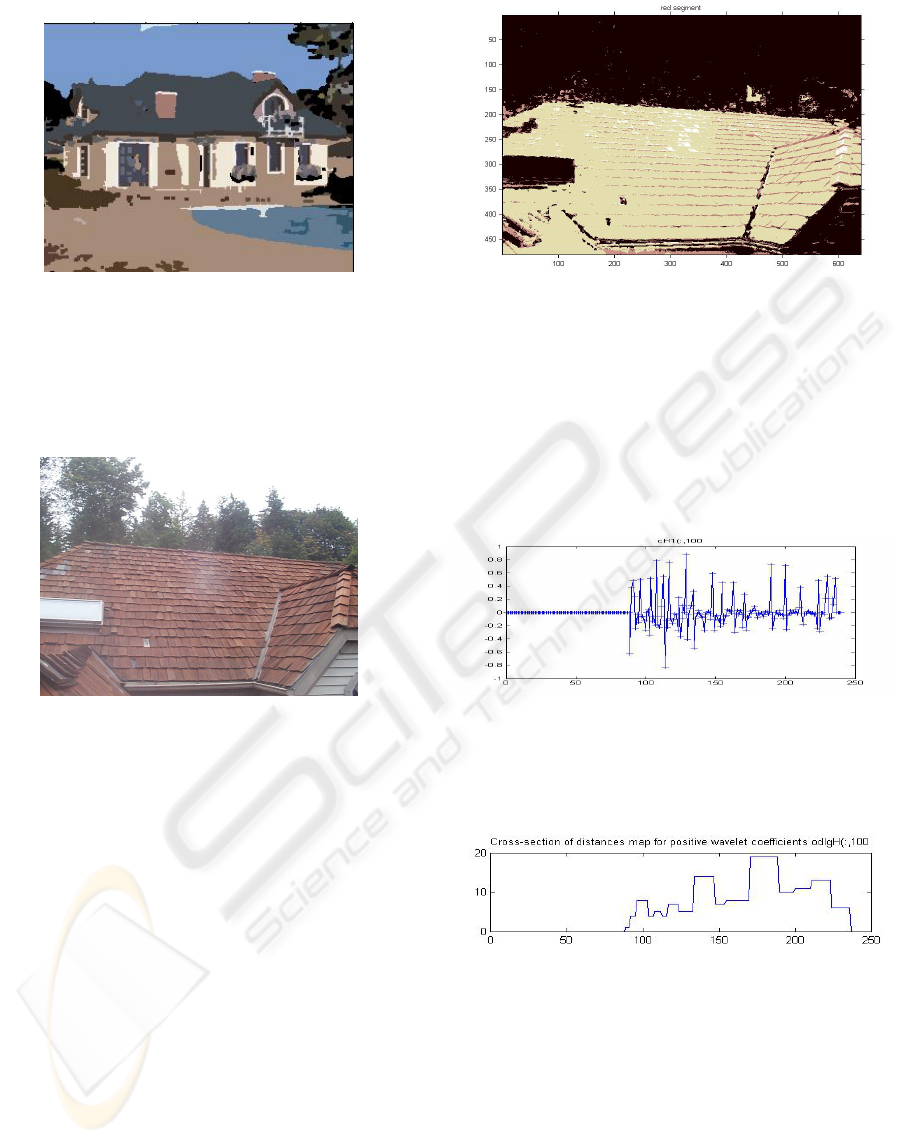

Figure 5: Example of an original image where the roof is a textured surface.

The texture information present in images is one of the most powerful additional tools available. There are many methods that can be used for texture characterization. Unfortunately, most of them are of little use for our purpose.

One of them is the two-dimensional frequency transformation. For our aims we could apply either the classical Fourier transformation or one of several spatial-domain texture-sensitive operators, for instance the 3x3 or 5x5 Laplacian, the 5x5 Gaussian, or the Hurst, Haralick, or Frei and Chen operators (Russ, 1995). Regrettably, all of them are useful only for relatively small neighbourhoods.

Another method of texture recognition for monochromatic images is histogram thresholding. Unfortunately, it can be used mainly for distinguishing 2-3 textured regions. There is also the two-dimensional histogram of pixel pairs proposed by Haralick in 1973 (Haralick, 1973).

Figure 6: The red segment (in three levels of brightness) extracted from the whole segmentation from fig. 8.

The next group comprises transform-domain approaches. In 2001 Balmelli and Mojsilović (Balmelli, 2001) proposed using the wavelet domain to describe texture and pattern with statistical features, but only for regular textures and geometrical patterns. So far only Lewis and Fauzi have managed to perform automatic texture segmentation for CBIR based on the discrete wavelet transform (DWT) (Fauzi, 2006).

Figure 7: Horizontal wavelet coefficients presented along the 100th column of the image transform (for the Haar wavelet, where j=1). Numbers of the Haar wavelets for the first level of multiresolution analysis are on the horizontal axis, and values of the coefficients cH1 are on the vertical axis.

Figure 8: Cross-section through the 100th column of the distances map for positive horizontal wavelet coefficients. Numbers of the Haar wavelets for the first level of multiresolution analysis are on the horizontal axis, and distances between the maximal wavelet coefficients are on the vertical axis.

In our work we decided to use the Fast Wavelet Transform (FWT). It is efficient enough for frequent use in our application.

One of the most important properties of the detail coefficients is their directionality. Exploiting this property, if we compute the convolution of an image consisting of regular tiles or bricks with a suitable wavelet, we obtain a 2D transform whose maximum values are located at the joints between these tiles or bricks.
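The directional behaviour can be illustrated with one level of the 2D Haar transform computed on non-overlapping 2x2 blocks, which is what one step of the fast wavelet transform does for the Haar filters. This is a minimal sketch, not the implementation used in the paper: the function name `haar2d_level1` and the synthetic "roof" are hypothetical, and the signs of the detail coefficients follow the convention written in the comments, which may differ from library implementations such as PyWavelets.

```python
import numpy as np

def haar2d_level1(image):
    """One level of the orthonormal 2D Haar transform.

    Returns (cA, cH, cV, cD), each of shape (H//2, W//2); an odd last row or
    column is dropped.
    """
    x = np.asarray(image, dtype=np.float64)
    h, w = (x.shape[0] // 2) * 2, (x.shape[1] // 2) * 2
    x = x[:h, :w]
    a = x[0::2, 0::2]  # top-left pixel of each 2x2 block
    b = x[0::2, 1::2]  # top-right
    c = x[1::2, 0::2]  # bottom-left
    d = x[1::2, 1::2]  # bottom-right
    cA = (a + b + c + d) / 2.0  # approximation
    cH = (a + b - c - d) / 2.0  # horizontal details: top rows vs bottom rows
    cV = (a - b + c - d) / 2.0  # vertical details: left columns vs right columns
    cD = (a - b - c + d) / 2.0  # diagonal details
    return cA, cH, cV, cD

if __name__ == "__main__":
    # Synthetic "roof": bright tiles with a dark horizontal joint every 8 pixel rows
    roof = np.full((64, 64), 200.0)
    roof[3::8, :] = 50.0
    cA, cH, cV, cD = haar2d_level1(roof)
    # Positive horizontal details appear only at the joints, every 4 coefficients
    print(np.nonzero(cH[:, 10] > 0)[0].tolist())  # [1, 5, 9, 13, 17, 21, 25, 29]
```

In this example the joints produce peaks only in cH, while cV and cD stay at zero, which is the directionality the text relies on. Because level-1 coefficients are subsampled by two, the spacing of 4 coefficients corresponds to the 8-pixel tile period.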

Figure 9: Distance map for positive horizontal wavelet coefficients cH1. Wavelet numbers are on both axes.

Therefore, we applied the Haar wavelet to the roof region shown in fig. 7 and obtained three matrices of details, $d_1^1$, $d_1^2$ and $d_1^3$. The cross-section through the 100th column of the horizontal details matrix $d_1^1$ (cH1) is presented in figure 8. Maxima and minima in this figure correspond to the joints between tiles in fig. 7. Having computed the horizontal details, we measured the distances between maxima in each column of this matrix (shown in fig. 8) and, likewise, the distances between minima. We placed a single threshold at 1% of the maximum value of the whole matrix and measured the distances between positive coefficients reaching that level; we did the same for negative coefficients. It turned out that these distances, which correspond to the size of the tiles, are good distinctive parameters for a textured region.
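The distance measurement can be sketched column by column as below. Some details are assumptions of this sketch rather than statements from the text: for negative coefficients the threshold is taken as 1% of the magnitude of the most negative value, and a run of consecutive coefficients above the threshold is treated as a single joint located at its strongest coefficient. The function name `column_peak_distances` and its parameters are hypothetical.

```python
import numpy as np

def column_peak_distances(coeffs, rel_threshold=0.01, positive=True):
    """Distances (in wavelet numbers) between successive peaks in each column.

    coeffs: 2D detail matrix, e.g. the horizontal details cH1.
    positive=True uses coefficients above rel_threshold * max(coeffs);
    positive=False uses those below rel_threshold * min(coeffs).
    Returns a list with one integer array of distances per column.
    """
    m = np.asarray(coeffs, dtype=np.float64)
    if not positive:
        m = -m  # treat negative coefficients as positive ones on the flipped matrix
    top = m.max()
    distances = []
    for col in m.T:
        peaks = []
        idx = np.flatnonzero(col > rel_threshold * top) if top > 0 else np.array([], dtype=int)
        if idx.size:
            # Split qualifying indices into runs of consecutive positions
            runs = np.split(idx, np.flatnonzero(np.diff(idx) > 1) + 1)
            # One peak per run, at its strongest coefficient
            peaks = [int(run[np.argmax(col[run])]) for run in runs]
        distances.append(np.diff(np.array(peaks, dtype=int)))
    return distances
```

Applied to the horizontal details of a regularly tiled region, most columns yield the same repeated distance, which is why these distances work as distinctive texture parameters.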

After computing the distances, we created two distance maps, one for all positive and one for all negative horizontal coefficients. Figure 9 presents one of these distance maps. An analogous procedure was carried out for the vertical wavelet coefficients cV1. On the basis of these distance maps we can estimate the size of the tiles.
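The text does not specify how the tile size is read off the distance maps. As an illustrative assumption only, the sketch below takes the most frequent peak-to-peak distance as the tile period and scales it by 2^j, because level-j wavelet coefficients are subsampled by a factor of 2^j relative to the pixel grid. Distances from cH1 would then estimate the tile height and distances from cV1 the tile width. The function name `estimate_tile_size` is hypothetical.

```python
from collections import Counter

def estimate_tile_size(distances_per_column, level=1):
    """Estimate the tile period in pixels from peak distances in wavelet numbers.

    distances_per_column: iterable of sequences of distances between successive
    peaks, e.g. one sequence per column of cH1. Returns None if there are none.
    """
    counts = Counter(int(d) for col in distances_per_column for d in col)
    if not counts:
        return None
    # Most frequent distance taken as the period (assumption of this sketch)
    period, _ = counts.most_common(1)[0]
    # Convert from level-j coefficient spacing to pixels
    return period * 2 ** level
```

Taking the mode rather than the mean keeps the estimate robust to occasional spurious or missed peaks, for example where a chimney or window interrupts the tiled region.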

6 CONCLUSIONS

To sum up, this paper shows how to extract elements from images in an unsupervised way and analyse the parameters of the resulting objects. We have focused on the description of texture parameters because it was the most difficult task. The results achieved indicate that it is possible to separate objects in the image, in an unsupervised way, with accuracy acceptable for further interpretation. In computational terms, objects are recognized by computing the above-mentioned features of each object, and a new object is classified into one of the previously created classes. So far, we have no interpretation of which of these objects are doors, windows, etc. At present, the database structure is being prepared. This structure will cover all elements necessary for image content analysis, namely basic object features as well as logical and spatial relations.

REFERENCES

Bezdek, J. C., 1981. Pattern Recognition with Fuzzy Objective Function Algorithms, Plenum Press, New York.

Fauzi, M., Lewis, P., 2006. Automatic texture segmentation for content-based image retrieval application, Pattern Analysis and Applications, Springer-Verlag, London (in press).

Flickner, M., Sawhney, H., et al., 1995. Query by Image and Video Content: The QBIC System, IEEE Computer, Vol. 28, No. 9, pp. 23-32.

Haralick, R. M., Shanmugam, K., Dinstein, I., 1973. Textural Features for Image Classification, IEEE Transactions on Systems, Man and Cybernetics, SMC-3, pp. 610-621.

Hsu, W., Chua, T. S., Pung, H. K., 2000. Approximation Content-based Object-Level Image Retrieval, Multimedia Tools and Applications, Vol. 12, Springer Netherlands, pp. 59-79.

Jaworska, T., Partyka, A., 2005. Research: Content-based image retrieval system [in Polish], Report RB/37/2005, Systems Research Institute, PAS.

Mokhtarian, F., Abbasi, S., Kittler, J., 1996. Robust and Efficient Shape Indexing through Curvature Scale Space, Proc. British Machine Vision Conference, pp. 53-62.

Niblack, W., Flickner, M., et al., 1993. The QBIC Project: Querying Images by Content Using Colour, Texture and Shape, SPIE, Vol. 1908, pp. 173-187.

Ogle, V., Stonebraker, M., 1995. CHABOT: Retrieval from a Relational Database of Images, IEEE Computer, Vol. 28, No. 9, pp. 40-48.

Russ, J. C., 1995. The Image Processing Handbook, CRC, London, pp. 361-385.

Seber, G., 1984. Multivariate Observations, Wiley.

Spath, H., 1985. Cluster Dissection and Analysis: Theory, FORTRAN Programs, Examples, translated by J. Goldschmidt, Halsted Press, pp. 226.

Tan, K-L., Ooi, B. Ch., Yee, Ch. Y., 2001. An Evaluation of Color-Spatial Retrieval Techniques for Large Image Databases, Multimedia Tools and Applications, Vol. 14, Springer Netherlands, pp. 55-78.
