4I (FOR EYE) MULTIMEDIA

Intelligent Semantically Enhanced and Context-aware Multimedia Browsing

Oleksiy Khriyenko

Industrial Ontologies Group, Agora Center, University of Jyväskylä, P.O. Box 35(Agora), FIN-40014 Jyväskylä, Finland

Keywords: Semantic visual interface, multimedia searching and browsing, multimedia metadata, groupware interfaces, integrated information visualization, context-aware GUI.

Abstract: The next generation of integration systems will utilize different methods and techniques to achieve the vision of ubiquitous knowledge: Semantic Web and Web Services, Agent Technologies and Mobility. Unlimited interoperability and collaboration are important for almost all areas of people's lives. Development of a Global Understanding eNvironment (GUN) (Kaykova et al., 2005), which would support interoperation between all resources and the exchange of shared information, is a potentially very profitable and challenging task. As usual, a graphical user interface is one of the important parts of carrying out such a process. Following the new technological trends, it is time to start a stage of semantic-based context-dependent multidimensional resource visualization and semantic-metadata-based browsing across resources. With the growing ubiquity of digital media content, whose management requires suitable annotation and systems able to use that annotation, the ability to combine continuous media data with its own multimedia-specific content description in one source brings the idea of a true multimedia semantic web one step closer. Thus, 4I (FOR EYE) technology (Khriyenko, 2007) is a perfect basis for the elaboration of intelligent, semantically enhanced and context-aware browsing across multimedia content.

1 INTRODUCTION

In recent years, the amount of digital multimedia information distributed over the Web has increased enormously, because everyone can now take part in producing digital multimedia content. The discovery of the “Web as platform”, termed in some quarters Web 2.0 (O’Reilly, 2005), and innovative websites like Flickr¹, Wikipedia², Google Maps³, Wikimapia⁴ and Yahoo Maps⁵ encourage social networking.

According to the work of Lyndon J.B. Nixon (Nixon, 2006), as current trends develop we can expect a future Web which will be media rich, highly interactive and user oriented. The value of this Web will lie not only in the massive amount of information stored within it, but also in the ability of Web technologies to organize, interpret and bring this information to the user. Media presentation is a key challenge for the emerging media-rich Web platforms.

¹ http://www.flickr.com/
² http://www.wikipedia.org/
³ http://maps.google.com/
⁴ http://www.wikimapia.org/
⁵ http://maps.yahoo.com/

The challenge of enabling computer systems to make better use of Web data by making that data machine-processable has been taken up by the Semantic Web effort, which proposes formal knowledge structures to represent concepts and their relations in a domain. These structures are known as ontologies and the World Wide Web Consortium (W3C)⁶ has recommended two standards, the simpler Resource Description Framework (RDF)⁷ and the more expressive Web Ontology Language (OWL)⁸.

A number of vocabularies that deal at some level with multimedia content currently exist (Geurts et al., 2005): MPEG-7, Dublin Core Element Set, VRA, Media Streams, Art and Architecture Thesaurus (AAT), MIME, CSS, Composite Capabilities/Preference Profiles (CC/PP), PREMO, Modality Theory, Web Content Accessibility Guidelines. Of course, it is very important to develop an appropriate format for semantic annotation of multimedia content. On the other hand, it is more natural to find a way to build full semantics into the digital formats of multimedia (image, video, audio) themselves. Nowadays, production houses shoot high-quality video in digital format; organizations that hold multimedia content (such as TV channels, film archives, museums, and libraries) digitize analog material and use digital formats. Maybe it is time to enrich all digital media formats with a Semantic Track, which will contain not just the content structure, but a full semantic annotation of the content, including content structure, concepts, objects, actions, etc.

⁶ http://www.w3c.org
⁷ http://www.w3.org/RDF
⁸ http://www.w3.org/TR/owl-absyn/

Khriyenko O. (2007).
4I (FOR EYE) MULTIMEDIA - Intelligent Semantically Enhanced and Context-ware Multimedia Browsing.
In Proceedings of the Second International Conference on Signal Processing and Multimedia Applications, pages 229-236
DOI: 10.5220/0002133102290236
Copyright © SciTePress

A central aspect of the discussion around multimedia is that the human is the main customer of multimedia services and the end-user of multimedia content. With multimedia content growing steadily, the human user needs new intelligent techniques for multimedia content browsing, search/retrieval and adapted representation. At the same time, the stated goal of the Semantic Web initiative is to enable machine understanding of web resources. However, it is not at all evident that such machine-readable semantic information will be clear and effective for human interpretation. Hence, in order to effectively harness the power of the semantic web, we need a “conceptual interface” (Naeve, 2005) that is more comprehensible for humans. Such a conceptual interface can improve the multimedia content retrieval process and, together with a well-elaborated Semantic Track of the multimedia resources, can provide a unique basis for semantically enhanced browsing across multimedia contents.

The paper contains two main sections. Section 2 covers aspects of the browsing process across multimedia contents. Section 3 describes a vision of the groupware collaboration approach based on 4I (FOR EYE) technology (Khriyenko, 2007), and a multimedia resource browsing case based on it.

2 SEMANTICALLY ENHANCED BROWSING ACROSS MULTIMEDIA CONTENTS

2.1 Resource Semantic Track

The sub-symbolic abstraction level covers the raw multimedia information represented in well-known formats for video, image, audio, text, metadata, etc. These are typically binary formats, optimized for compression and streaming delivery. They are not well suited for further processing that uses, for example, the internal structure or other specific features of the media stream. A structural (symbolic) layer on top of the binary media stream provides this information. The standards that operate in this middle layer for the representation of multimedia document descriptions include Dublin Core, MPEG-7, Visual Resources Association (VRA), and so on. The problem with this structural approach is that the semantics of the information encoded in the XML are only specified within each standard’s framework. MPEG-7 was not built specifically for web applications and thus does not facilitate embedding links to other resources or interoperability between them. A possible way to resolve the interoperability conflict is to add a third layer (the logical abstraction level) that provides the semantics for the middle one, in effect defining mappings between the structured information sources and the domain’s formal knowledge representation based on semantically enriched languages (RDF and OWL).
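The role of the third layer can be illustrated with a minimal sketch, assuming a structural-layer record is available as a simple dictionary. The field names, the `EX` namespace and the resource identifier are hypothetical; only the Dublin Core element namespace is a real one. The sketch maps structural fields onto ontology properties and emits RDF-style (subject, predicate, object) triples.

```python
# Dublin Core element set namespace (real) and a made-up domain ontology namespace.
DC = "http://purl.org/dc/elements/1.1/"
EX = "http://example.org/media#"  # hypothetical, for illustration only

# Logical layer: mapping from structural-layer field names to ontology properties.
FIELD_TO_PROPERTY = {
    "title": DC + "title",
    "creator": DC + "creator",
    "depicts": EX + "depicts",
}

def to_triples(resource_uri, structured_record):
    """Translate a structural-layer record into (subject, predicate, object) triples."""
    triples = []
    for field, value in sorted(structured_record.items()):
        prop = FIELD_TO_PROPERTY.get(field)
        if prop is None:
            continue  # the field has no agreed semantics in the domain ontology
        triples.append((resource_uri, prop, value))
    return triples

# "codec" belongs to the sub-symbolic/structural levels and is not mapped.
record = {"title": "Harbour at dawn", "creator": "A. Painter", "codec": "jpeg"}
triples = to_triples(EX + "image42", record)
```

Fields without a mapping are simply skipped here; a real system would more likely report them so that the mapping can be extended.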

RDF-based languages and technologies provided by the W3C community are well suited to the formal, semantic description of the terms in a multimedia document’s annotation. A combination of the existing standards seems to be the most promising path for multimedia document description in the near future. For these reasons, the W3C has started a Multimedia Annotation on the Semantic Web Task Force⁹ as part of the Semantic Web Best Practices and Deployment Working Group. The new task force operates within the framework of the W3C Semantic Web Activity group¹⁰. One goal is to provide guidelines for using Semantic Web languages and technologies to create, store, manipulate, interchange, and process image metadata. Another is to study interoperability issues between multimedia annotation standardization and RDF- and OWL-based approaches. Hopefully, this effort will provide a unified framework of good practices for constructing interoperable multimedia annotations.

⁹ http://www.w3.org/2001/sw/BestPractices/MM/
¹⁰ http://www.w3.org/2001/sw/

SIGMAP 2007 - International Conference on Signal Processing and Multimedia Applications

Research on binding multimedia content to its content description has been going on for several years. The Commonwealth Scientific and Industrial Research Organisation (CSIRO) has developed ANNODEX (Pfeiffer et al., 2003), an open source family of technologies for embedding annotations and hyperlinks directly within digital audio and video files. Such embedding allows the combined resource to become just like any web document, with content and content description bound into one. The idea of utilizing a media semantic track has also been elaborated in other research (Khriyenko, 2005), which concerns multimedia smart messaging in an environment of limited devices.
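To make the idea of a track of annotations bound to the media timeline more concrete, the following is a minimal sketch. It is not the Annodex format; the class names, fields and the example URL are assumptions chosen for illustration. Each annotation covers a time interval and may carry a hyperlink to a related resource, so a player can ask which annotations are active at the current playback position.

```python
from dataclasses import dataclass, field

@dataclass
class Annotation:
    start: float      # seconds from the beginning of the media
    end: float        # end of the interval (exclusive)
    kind: str         # e.g. "object", "action", "soundtrack"
    label: str
    link: str = ""    # optional hyperlink to a related resource

@dataclass
class SemanticTrack:
    annotations: list = field(default_factory=list)

    def add(self, annotation):
        self.annotations.append(annotation)

    def active_at(self, t):
        """Return annotations whose interval [start, end) covers time t."""
        return [a for a in self.annotations if a.start <= t < a.end]

track = SemanticTrack()
track.add(Annotation(0.0, 95.0, "soundtrack", "Opening theme",
                     "http://example.org/songs/opening-theme"))  # hypothetical URL
track.add(Annotation(12.0, 30.0, "object", "lighthouse"))
track.add(Annotation(40.0, 55.0, "action", "boat departs"))

labels_at_20s = [a.label for a in track.active_at(20.0)]
```

A linear scan is enough for a sketch; for long media with many annotations an interval index would be a natural replacement.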

Semantic annotation of multimedia content is performed using appropriate domain-specific ontologies that model the multimedia content domain. Ontologies typically represent concepts by linguistic terms. However, multimedia ontologies that assign multimedia objects to concepts can also be created. Alongside semantic content metadata, i.e. annotation of concepts such as people (artist, owner, restorer, author, producer, etc.), art objects and representations (paintings, sculptures, films, digital representations, etc.), events and activities, places, methods and techniques, etc., we should also provide a basis for presenting multimedia content features in the semantic annotation. This makes better automatic annotation of the multimedia content possible. Below we try to specify the features of multimedia content that can be detected and presented in a Semantic Track.

In (Bertini et al., 2005) the authors present a list of systems for automatic semantic annotation, most of them in the application domain of sports video. Among these is an approach in which MPEG motion vectors, playfield shape and players’ positions are used with Hidden Markov Models to detect soccer highlights. Another approach aims to detect the principal soccer highlights, such as shot on goal, placed kick, forward launch and turnover, from a few visual cues. Additionally, the ball trajectory has been used to detect main actions like touching and passing and to compute ball possession by each team; a Kalman filter is used to check whether a detected trajectory can be recognized as a ball trajectory. In all these approaches, however, the model-based event classification is not associated with any ontology-based representation of the domain. Moreover, although linguistic terms are appropriate for distinguishing event and object categories, they are inadequate for describing specific patterns of events or video entities. In this case, high-level concepts, expressed through linguistic terms, and pattern specifications, represented instead through visual concepts, can both be organized into new extended ontologies, referred to as pictorially enriched ontologies. Ontologies can likewise be extended to multimedia enriched ontologies, where concepts that cannot be expressed in linguistic terms are represented by prototypes/patterns in different media such as video, audio, etc.
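One simple way to picture a pictorially enriched ontology is as a set of concepts, each named by a linguistic term and accompanied by visual prototypes. The sketch below is an assumption-laden illustration, not the method of (Bertini et al., 2005): the two-dimensional feature vectors are invented, and nearest-prototype matching by Euclidean distance is a stand-in for whatever pattern comparison a real system would use.

```python
import math

# Each concept (linguistic term) is paired with visual prototypes.
# The feature values are invented for illustration.
ONTOLOGY = {
    "shot_on_goal": [(0.9, 0.8), (0.8, 0.9)],
    "placed_kick": [(0.1, 0.7), (0.2, 0.6)],
    "turnover": [(0.5, 0.1)],
}

def classify(features):
    """Return the concept whose nearest visual prototype is closest to `features`."""
    best_concept, best_distance = None, math.inf
    for concept, prototypes in ONTOLOGY.items():
        for prototype in prototypes:
            d = math.dist(features, prototype)  # Euclidean distance
            if d < best_distance:
                best_concept, best_distance = concept, d
    return best_concept

label = classify((0.85, 0.85))
```

The point of the structure is that the returned value is an ontology concept, so the classification result can be written directly into a Semantic Track and related to other concepts.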

Audio features are used to characterize the sound signal and classify a sample by instrument. The CUIDADO project (Peeters, 2003) provided a set of 72 audio features, and research has shown that some of the features are more important in capturing the signal characteristics: temporal shape, temporal feature, energy features, spectral shape features, harmonic features, perceptual features and MPEG-7 Low Level Audio Descriptors (spectral flatness and crest factors).
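As an illustration of the last two descriptors, the sketch below computes spectral flatness (geometric mean over arithmetic mean of the power spectrum) and spectral crest (maximum over arithmetic mean). It is a simplified whole-spectrum version under the assumption that a power spectrum with strictly positive values is already available; the standardized descriptors are computed per frequency band, which is not reproduced here.

```python
import math

def spectral_flatness(power):
    """Geometric mean divided by arithmetic mean of the power values.

    Close to 1 for noise-like (flat) spectra, close to 0 for tonal spectra.
    All values must be strictly positive.
    """
    n = len(power)
    log_mean = sum(math.log(p) for p in power) / n
    return math.exp(log_mean) / (sum(power) / n)

def spectral_crest(power):
    """Maximum power divided by the arithmetic mean; 1 for a flat spectrum."""
    return max(power) / (sum(power) / len(power))

noise_like = [1.0, 1.0, 1.0, 1.0]    # flat spectrum
tone_like = [100.0, 1.0, 1.0, 1.0]   # one dominant peak
```

Values like these could be stored in a Semantic Track as numeric properties of an audio segment, next to the higher-level concepts they help to detect.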

Now we can see how many multimedia-specific

features and properties can enrich a Semantic Track

of multimedia resources.

2.2 Across Content Browsing in a Sense of Concept based Semantic Search

According to (Marcos et al., 2005), there are a number of important criticisms that can be made of the Classical Model of information search. First, this model does not adequately distinguish between the needs of a user and what the user must specify to satisfy them. Very often, users may not know how to specify a good search query, even in natural language terms. Analyzing what is retrieved on the first attempt serves not so much to select useful results as to find out what is there to be searched over. A second important criticism of the Classical Model is that any knowledge generated while formulating a query is not used later in the sequence of search steps, either to influence the filtering and presentation of the search results or to select among them. Finally, the Classical Model provides an essentially context-free process. There is no proper way in which knowledge of the task context and situation, or the user profile, can influence the information search process.

To address these criticisms, the WIDE Model of information retrieval (Marcos et al., 2005) treats the general task of information finding as a kind of design task, and not as a pair of search specification and results selection tasks. Information retrieval is understood as a design task by first recognizing the difference between users stating needs and forming well-specified requirements, then properly supporting the incremental development of a complete and consistent set of requirements, and finally re-using the knowledge generated in this (sub)process to effectively support the subsequent steps of the process, which concludes in a useful set of search results.

Several projects aim to enhance the Classical Model of information retrieval in some way. For example, the problem of search query uncertainty has been addressed in one of the projects of the Industrial Ontologies Group (IOG): “Semantic Facilitators for Web Information Retrieval”¹¹. The main idea of the project is that a Semantic Search Assistant/Facilitator (SSA) uses ontologically defined knowledge (WordNet¹²) about the words in a Google search request and lets the user select the intended meaning of each word from the available set of meanings. Then, based on the description of the selected word meaning, the SSA uses embedded support for advanced Google search query features to construct more efficient queries from a formal textual description of the information sought (Kaykova et al., 2004).
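The two steps described above (sense selection, then query construction) can be sketched as follows. This is not the SSA implementation and does not use the WordNet API: the small `SENSES` dictionary, its glosses and its related/excluded terms are invented stand-ins, and the only search features assumed are the `OR` and minus operators commonly supported by web search engines.

```python
# Hypothetical sense inventory standing in for WordNet; not the WordNet API.
SENSES = {
    "jaguar": [
        {"gloss": "large spotted feline of tropical America",
         "related": ["cat", "panthera"], "exclude": ["car", "automobile"]},
        {"gloss": "British luxury car brand",
         "related": ["car", "automobile"], "exclude": ["cat", "panthera"]},
    ],
}

def list_senses(word):
    """Glosses the user can choose from for an ambiguous word."""
    return [sense["gloss"] for sense in SENSES.get(word, [])]

def build_query(word, sense_index):
    """Build a query string from the chosen sense using OR and minus operators."""
    sense = SENSES[word][sense_index]
    related = " OR ".join(sense["related"])
    excluded = " ".join("-" + term for term in sense["exclude"])
    return f"{word} {related} {excluded}"

# The user picks the first meaning (the animal).
query = build_query("jaguar", 0)
```

The knowledge gained during sense selection is carried into the query itself, which addresses part of the second criticism of the Classical Model discussed above.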

Thus, we can see how much work is being done to enhance the classical information retrieval model by adding new useful features. This gives us a basis for creating fully ontology-based semantic query and search mechanisms, in which a search query is created from a specification of ontological concepts. Together with a Resource Semantic Track, this gives us an opportunity to perform browsing across multimedia contents: a browsing process that includes semi-automatic creation of semantic search queries based on multimedia content, and semantic search through the Semantic Tracks of multimedia resources.

¹¹ http://www.cs.jyu.fi/ai/OntoGroup/SemanticFacilitator.htm
¹² http://wordnet.princeton.edu/

3 4I MULTIMEDIA: MULTIMEDIA BROWSING BASED ON 4I (FOR EYE) TECHNOLOGY

3.1 A New Human-centric Resource Visualization Technique - 4i (FOR EYE) Technology

Nowadays, unlimited interoperability and collaboration are important for industry, business, education and research, health and wellness, and other areas of people's lives. In an emergency planning situation, for example, different agencies have to collaborate and share data as well as information about the actions they are performing. Thus, we need an open environment that allows different heterogeneous resources (software, data, devices, humans, organizations, processes, etc.) to communicate and interoperate with each other. As usual, a graphical user interface that helps a human expert to carry out these interoperation and collaboration processes in a handy and easy way is one of the important elements in creating and performing them.

Following new technological trends, it is time to start a new stage in visual user interface development: a stage of semantic-based resource visualization. We need to visualize the resource properties (in a specific way, different from the “directed arc (vector) between objects” representation), the various relations between resources, the inter-resource communication process, etc. Even more, we need to make the visualization context dependent, so that information can be represented in a way that is handy and adequate for a certain case (context). Thus, the main focus is on resource visualization aspects, and we have the challenging task of semantic-based context-dependent multidimensional resource visualization.

According to the core characteristics of Web 2.0,

a website is no longer a static page to be viewed in

the browser, but is a dynamic platform upon which

users can generate their own experience. The

richness of this experience is powered by the

implicit threads of knowledge that can be derived

from the content supplied by users and how they

interact with the site. Another aspect of this Web as

platform is sites which provide users with access to

their data through well defined APIs and hence

encourage new uses of that data, e.g. through its

integration with other data sources.


It has now become evident that we cannot separate the visual aspects of both data representation and graphical interface from the interaction mechanisms that help a user to browse and query a data set through its visual representation. The semantic-based context-dependent multidimensional resource visualization approach presented in (Khriyenko, 2007) provides an opportunity to create an intelligent visual interface that presents relevant information in a form more suitable and personalized for the user. It can be considered a valuable extension of the text-based Semantic MediaWiki to a Context-based Visual Semantic MediaWiki with visual context-dependent information representation, browsing and editing. The context-awareness and intelligence of such an interface bring a new feature: the user gets not just raw data, but the required integrated information based on a specified context. 4i (FOR EYE) is an ensemble of an Intelligent GUI-Shell (smart middleware for the context-dependent use and combination of a variety of MetaProviders, depending on the user's needs) and MetaProviders, which are graphical interfaces that visualise filtered integrated information (see Figure 1). The GUI-Shell allows the user to switch dynamically between MetaProviders for a more suitable information representation depending on the context. In turn, each MetaProvider provides an API for specifying the information filtering context. Such a switching and filtering process is a kind of semantic browsing based on a semantic description of the context and of resource properties.
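The interplay between the GUI-Shell and the MetaProviders can be sketched as two small operations: context-dependent retrieval of suitable MetaProviders from a register, and filtering of resource properties by a filtering context. The sketch is illustrative only; the provider names, the register contents and the function names are assumptions, and the property values reuse the example labels of Figure 1 (fire, forest work, wind direction).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MetaProvider:
    name: str
    resource_types: frozenset   # kinds of resources it can visualise
    contexts: frozenset         # contexts in which it is a suitable view

# Hypothetical register of MetaProviders; names and contexts are invented.
REGISTER = [
    MetaProvider("MapView", frozenset({"house", "forest"}), frozenset({"location"})),
    MetaProvider("DamageView", frozenset({"forest"}), frozenset({"physical damage"})),
    MetaProvider("TimelineView", frozenset({"video"}), frozenset({"history"})),
]

def select_providers(resource_type, context):
    """Context-dependent retrieval of appropriate MetaProviders from the register."""
    return [p.name for p in REGISTER
            if resource_type in p.resource_types and context in p.contexts]

def filter_properties(properties, filtering_context):
    """Keep only the properties tagged as relevant to the filtering context."""
    return {name: value for name, (value, tags) in properties.items()
            if filtering_context in tags}

# Contextual properties of a resource: name -> (value, set of relevant contexts).
forest = {
    "fire": (True, {"physical damage"}),
    "forest_work": (False, {"physical damage"}),
    "wind_direction": ("N:E", {"weather condition"}),
}

providers = select_providers("forest", "physical damage")
visible = filter_properties(forest, "physical damage")
```

Switching the context argument changes both which MetaProvider is offered and which properties it shows, which is the sense in which switching and filtering together act as semantic browsing.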

3.2 Multimedia Semantic Browsing

We have to consider another developing trend on the

Web – a growth in multimedia content.

Technological progress has meant that we have

never had access to so much media content as now.

Future challenges for the Web will be the

meaningful organization of this huge amount of

online media content as well as its meaningful

delivery to the user. However, the present state of

the art of media and Web technologies prevents

richer integration.

Multimedia semantic browsing, as a sub-class of general resource browsing, is a complex process that combines a set of sub-processes. This process can be performed on the basis of the presented 4i (FOR EYE) technology. Figure 2 shows an example architecture for semantic browsing across multimedia contents. In the left center of the figure, a GUI-Shell is presented as a combination of the tools that take part in the process: a multimedia content player, a Semantic Track visualization component, a concept browser and a semantic search query builder/creator.

[Figure 1 diagram. Recoverable labels: GUN Platform; MetaProviders; Register of MetaProviders; Context-dependent retrieving of appropriate MetaProviders; GUN-Resource; Contextual properties; Intelligent GUI Shell; Search context (location); Filtering context (physical damage); Request (physical condition) / Response (fire, forest work); Request (weather condition) / Response (wind direction); example values: Wind direction - N:E, Fire - true, Forest work - false.]

Figure 1: Intelligent Interface of Integrated Information (Khriyenko, 2007).


Let us consider an example in which a user is watching an episode of a movie with a song (soundtrack) in the background. The user likes this song/melody and would like to find more songs by the same author (or, as a more complex goal, find songs similar to the initial one). To achieve this, the user browses the Semantic Track of the video instance, which contains the structure of the video file, objects, actions, soundtracks, etc., and finds a reference to the song. Then, using a concept browsing tool connected to a remote ontology, the user can specify a semantic query for the needed multimedia resource (in our case, a song). Such a query specification can be considered the creation/construction of a resource semantic pattern (a virtual nested resource with specified properties). As a result of the search process, an appropriate audio resource will be returned, and even the lyrics of the song can be displayed based on its Semantic Track.
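A resource semantic pattern of this kind can be pictured as a nested set of property constraints that is matched against the descriptions held in Semantic Tracks. The following is a minimal sketch under that assumption; the resource identifiers, property names and values are invented, and plain dictionaries stand in for RDF descriptions.

```python
def matches(description, pattern):
    """True if `description` satisfies every property constraint in `pattern`.

    A nested dict in the pattern is matched recursively, which models a
    nested resource with specified properties.
    """
    for key, expected in pattern.items():
        actual = description.get(key)
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(actual, expected):
                return False
        elif actual != expected:
            return False
    return True

# Invented Semantic Track summaries of three resources.
RESOURCES = {
    "song-1": {"type": "audio", "author": {"name": "Composer A"}, "lyrics": "la la"},
    "song-2": {"type": "audio", "author": {"name": "Composer B"}},
    "clip-1": {"type": "video", "author": {"name": "Composer A"}},
}

# Pattern built in the concept browser: "an audio resource by Composer A".
pattern = {"type": "audio", "author": {"name": "Composer A"}}
hits = [rid for rid, desc in RESOURCES.items() if matches(desc, pattern)]
```

Properties not mentioned in the pattern (such as the lyrics) are ignored during matching but remain available for display once a resource has been found.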

This was just a simple case of the semantic search/browsing process. A multimedia resource's Semantic Track usually contains only the structure of the content and descriptions of multimedia-specific content features (see Section 2.1). Because of this, we very often cannot specify a direct link between the contents of the Semantic Tracks of two different resources. The “glue” for these two semantic annotations is located in Semantic Knowledge Bases (for example, a semantically enhanced Wikipedia or different ontologies). The next example shows how this can be useful. Suppose we are looking for an image of the house of the first wife of an actor from a movie that we are watching. First, we stop the movie at a scene in which this actor appears and, based on the Semantic Track, find a link to this person. Then we browse a semantic knowledge base via the concept browser and find a link to his first wife and to her house. After the semantic search query is generated, we get the image in the browser.
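The role of the knowledge base as “glue” can be sketched as following a chain of properties through a set of triples, starting from the person found in the Semantic Track. All identifiers and property names below are invented for illustration; a real knowledge base would be queried through its own interface.

```python
# Invented triples standing in for a semantic knowledge base.
KB = [
    ("actor:J", "firstSpouse", "person:M"),
    ("person:M", "ownsHouse", "house:H1"),
    ("house:H1", "depictedIn", "image:H1-front"),
    ("actor:J", "actsIn", "movie:X"),
]

def follow(start, path):
    """Follow a sequence of properties from `start`; return all reachable nodes."""
    frontier = {start}
    for prop in path:
        frontier = {o for (s, p, o) in KB if p == prop and s in frontier}
    return sorted(frontier)

# Actor (from the Semantic Track) -> first wife -> her house -> image of the house.
images = follow("actor:J", ["firstSpouse", "ownsHouse", "depictedIn"])
```

Each step of the path corresponds to one click in the concept browser, and the final set is what the generated semantic search query would retrieve.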

At the same time, the approach of instance-based search via MetaProviders can be beneficially utilized in multimedia content searching/browsing. Let us consider a case in which we would like to see other houses located near the house of the wife mentioned above. We can use a MetaProvider, a Wikimapia-like service that provides access to registered resources by showing them on a map. If the image is registered on this service/platform, we can easily find other registered images in the same area (location), especially if the final visualization is filtered in the context that the resource sought is an image of a house.
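A map-based instance search of this kind reduces to two filters: distance from the anchor resource and the kind of resource sought. The sketch below assumes a small in-memory registry with invented identifiers and coordinates, and uses the haversine formula for great-circle distance; it does not call any real map service.

```python
import math

# Invented registry of geo-tagged resources, as a map-based MetaProvider might hold.
REGISTERED = [
    {"id": "img-1", "kind": "house", "lat": 62.2400, "lon": 25.7500},
    {"id": "img-2", "kind": "house", "lat": 62.2410, "lon": 25.7520},
    {"id": "img-3", "kind": "bridge", "lat": 62.2405, "lon": 25.7510},
    {"id": "img-4", "kind": "house", "lat": 60.1700, "lon": 24.9400},
]

def distance_km(a, b):
    """Great-circle distance between two records using the haversine formula."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a["lat"], a["lon"], b["lat"], b["lon"]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))  # mean Earth radius in km

def nearby(anchor_id, radius_km, kind):
    """Other registered resources of the given kind within radius_km of the anchor."""
    anchor = next(r for r in REGISTERED if r["id"] == anchor_id)
    return [r["id"] for r in REGISTERED
            if r["id"] != anchor_id and r["kind"] == kind
            and distance_km(anchor, r) <= radius_km]

neighbours = nearby("img-1", 1.0, "house")
```

The `kind` argument plays the role of the filtering context: the bridge image lies within the radius but is not shown when the resource sought is an image of a house.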

[Figure 2 diagram. Recoverable labels: Resource 1, Resource 2, Resource 3; Search: Concept Browser, Semantic Search Query; Player: Multimedia Player, Semantic Track; Video, Audio, Image; Semantic Knowledge Base (Wikipedia, etc.).]

Figure 2: Multimedia semantic browsing.

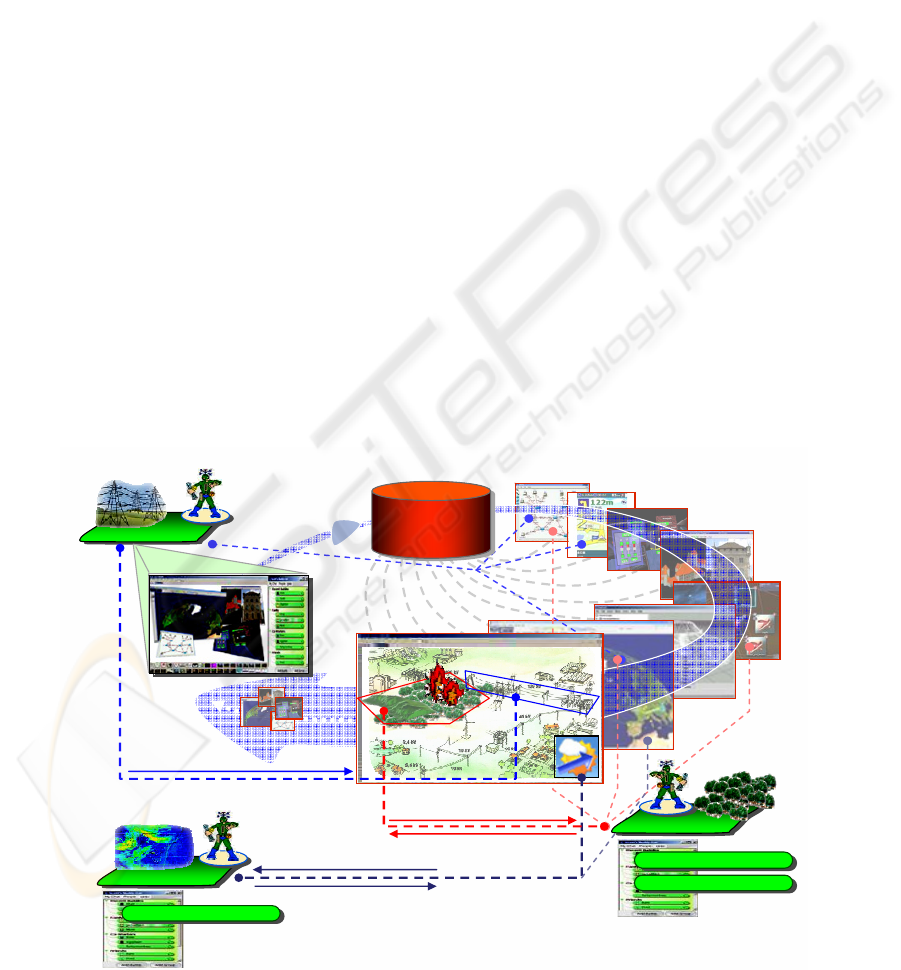

According to the GUN approach, all the parts of the searching/browsing process presented in the GUI-Shell can be developed as separate functional modules (resources) and can be chosen by the user to personalize the browsing interface. In this particular use case of the OntoEnvironment, alongside resources of the real world (people, objects, etc.) we face new semantically enhanced media-file resources. As mentioned, the Semantic Tracks of these resources contain not just internal structure, but also links to other resources. Thus, in order to be competitive in the open market of media resources and to be used frequently, resources should be self-maintained and should keep the links in their Semantic Tracks up to date at all times. Here we see the necessity of proactive resource behaviour. Supported by the agent-based GUN Platform, the behaviour of a resource can be configured so that the resource can communicate with other resources and change/update its own Semantic Track in real time (see Figure 3).
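The self-maintenance behaviour can be sketched, in a very reduced form, as a resource that reacts to notifications from other resources by updating the links in its own Semantic Track. This is only an illustration: the class, the method name and the identifiers are assumptions, and a direct method call stands in for the agent-to-agent messaging of the GUN Platform.

```python
class MediaResource:
    """A media resource that keeps the links in its own Semantic Track current."""

    def __init__(self, name, links):
        self.name = name
        self.links = dict(links)  # label -> identifier of the linked resource

    def on_notification(self, old_target, new_target):
        """React to another resource announcing that it has moved or been replaced.

        Returns the number of links that were updated.
        """
        updated = 0
        for label, target in self.links.items():
            if target == old_target:
                self.links[label] = new_target  # value update only, keys unchanged
                updated += 1
        return updated

movie = MediaResource("movie:X", {"soundtrack": "song:v1", "actor": "person:J"})
changed = movie.on_notification("song:v1", "song:v2")
```

In an agent-based setting the notification would arrive as a message, and the resource's configured behaviour would decide whether and how to apply the change.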

4 CONCLUSIONS

The presented 4i technology fits the demands of a new generation of integration systems well. It can be very useful, especially where a Human-Computer interaction process is involved. Now that the human has become a very dynamic and proactive resource of a large integration environment with a huge amount of heterogeneous data, it is necessary to provide technology and tools for easy and handy human access to and manipulation of information.


Figure 3: Semantically enhanced multimedia resource infrastructure.

The presented semantic-based context-dependent multidimensional resource visualization approach provides an opportunity to create an intelligent visual interface that presents relevant information in a form more suitable and personalized for the user. The context-awareness and intelligence of such an interface bring a new feature: the user gets not just raw data, but the required information based on a specified context.

There are already some domain-oriented software applications that try to visualize data in a domain-specific way that is suitable for humans (one of the most popular is the graphics software from SmartDraw®¹³). But it is a standalone application without any functionality for interoperability. We subscribe to the opinion of (Nixon, 2006) that bridging the gap between the emerging folksonomies of Web 2.0 and the formal semantics of Semantic Web ontologies would benefit the Semantic Web community, which would be able to leverage the content and knowledge that Web 2.0 is already generating from its users and making available over standardized APIs. This applies even more in the multimedia community, where, for example, collaborative user-contributed media annotation on a Web scale is an attractive (compromise) solution to the problem of extracting knowledge out of large multimedia data stores. In recognition of this, a Web 2.0 based scenario has been chosen for the SWeMPs¹⁴ ontology-based multimedia presentation system (one of the related works in this area).

¹³ SmartDraw® - www.smartdraw.com

With the idea of GUN we arrive at an environment in which all resources are semantically interoperable and have their own semantic description, the Resource Semantic Track. With the growing ubiquity of digital media content, the ability to combine continuous media data with its own multimedia-specific content description in one source brings the idea of a true multimedia semantic web one step closer.

Now that environments with unlimited interoperability and collaboration demand data and information sharing, we need more open semantic-based applications that are able to interoperate and collaborate with each other. The ability of a system to perform semantically enhanced resource search/browsing based on the Resource Semantic Track is a valuable benefit for today's Web and for the Web of the future with its unlimited number of resources. The proposed technology allows the creation of a Human-centric open environment for resource collaboration with enhanced semantic and context-based instance resource browsing. This is a good basis for creating models of different business, production, maintenance, healthcare and social processes, and for multimedia content management as one of the fastest growing areas of the Web.

¹⁴ http://swemps.ag-nbi.de/

[Figure 3 diagram. Recoverable labels: Video, Audio, Image; Semantic Track; Semantic Knowledge Base; Location; 3D & 2D model; GUN Platform; MetaProviders; GUI Shell.]

ACKNOWLEDGEMENTS

This research has been performed as part of the UBIWARE (“Smart Semantic Middleware for Ubiquitous Computing”) project in Agora Center (University of Jyväskylä, Finland), which is funded by TEKES and an industrial consortium. This research has also been partially funded by COMAS, as part of a doctoral study. I am very grateful to the members of the “Industrial Ontologies Group” for fruitful cooperation.

REFERENCES

Bertini M., Cucchiara R., Bimbo A., Torniai C., 2005.

Ontologies Enriched with Visual Information for

Video Annotation, In: Multimedia and the Semantic

Web workshop as part of the 2nd European Semantic

Web Conference (ESWC2005-MSW), Heraklion,

Crete, May-June, 2005.

Geurts J., Ossenbruggen J., Hardman L., 2005.

Requirements for practical multimedia annotation, In:

Multimedia and the Semantic Web workshop as part of

the 2nd European Semantic Web Conference

(ESWC2005-MSW), Heraklion, Crete, May-June,

2005.

Kaykova O., Khriyenko O., Klochko O., Kononenko O., Taranov A., Terziyan V., Zharko A., 2004. Semantic Search Facilitator: Concept and Current State of Development, In: InBCT Tekes Project Report, Chapter 3.1.3: “Industrial Ontologies and Semantic Web”, Agora Center, University of Jyväskylä, January – May 2004. URL: http://www.cs.jyu.fi/ai/OntoGroup/InBCT_May_2004.html.

Kaykova, O., Khriyenko, O., Kovtun, D., Naumenko, A., Terziyan, V., Zharko, A., 2005. General Adaption Framework: Enabling Interoperability for Industrial Web Resources, In: International Journal on Semantic Web and Information Systems, Idea Group, ISSN: 1552-6283, Vol. 1, No. 3, July-September 2005, pp. 31-63.

Khriyenko O., 2005. SemaSM: Semantically enhanced

Smart Message, In: Eastern-European Journal of

Enterprise Technologies, Vol. 1, No. 13, 2005, ISSN:

1729-3774.

Khriyenko O., 2007. 4I (FOR EYE) Technology:

Intelligent Interface for Integrated Information, In: 9th

International Conference on Enterprise Information

Systems (ICEIS-2007), Funchal, Madeira – Portugal,

12-16 June 2007.

Marcos G., Smithers T., Jiménez I., Posada J., Stork A.,

Pianciamore M., Castro R., Marca S., Mauri M.,

Selvini P., Sevilmis N., Thelen B., Zechino V., 2005.

A Semantic Web based approach to multimedia

retrieval, In: Fourth International Workshop on

Content-Based Multimedia Indexing (CBMI 2005),

Riga, Latvia, 21-23 June, 2005.

Naeve A., 2005. The Human Semantic Web: shifting from

knowledge push to knowledge pull, In: International

Conference on Computational Science (ICCS 2005),

Atlanta, USA, 22-25 May, 2005.

Nixon L., 2006. Multimedia, Web 2.0 and the Semantic

Web: a strategy for synergy, In: First International

Workshop on Semantic Web Annotations for

Multimedia (SWAMM), as a part of the 15th World

Wide Web Conference, Edinburgh, Scotland, 22-26

May, 2006.

O’Reilly T., 2005. What is Web 2.0: Design Patterns and Business Models for the Next Generation of Software. Online: http://www.oreillynet.com/pub/a/oreilly/tim/news/2005/09/30/what-is-web-20.html.

Peeters G., 2003. A large set of audio features for sound description (similarity and classification) in the CUIDADO project, In: CUIDADO project report, 2003. URL: http://www.ircam.fr/anasyn/peeters/ARTICLES/Peeters_2003_cuidadoaudiofeatures.pdf.

Pfeiffer S., Parker C., Pang A., 2003. The Annodex annotation and indexing format for time-continuous bitstreams, Version 2.0 (work in progress). http://www.ietf.org/internet-drafts/draft-pfeiffer-annodex-01.txt, December 2003.

Sinclair P., Goodall S., Lewis P.H., Martinez K., Addis

M.J., 2005. Concept browsing for multimedia retrieval

in the SCULPTEUR project, In: Multimedia and the

Semantic Web workshop as part of the 2nd European

Semantic Web Conference (ESWC2005-MSW),

Heraklion, Crete, May-June, 2005.

Stamou G., Ossenbruggen J., Pan J., Schreiber G., 2005.

Multimedia Annotations on the Semantic Web, In:

ISWC Workshop Semantic Web Case Studies and Best

Practices for eBusiness (SWCASE05), Galway,

Ireland, 6-10 November, 2005.

Yuxin, M., Zhaohui, W., Zhao, X., Huajun, C., Yumeng,

Y., 2005. Interactive Semantic-Based Visualization

Environment for Traditional Chinese Medicine

Information. In Web Technologies Research and

Development - APWeb 2005, 7th Asia-Pacific Web

Conference, Shanghai, China, March 29 - April 1,

2005, Springer, volume 3399/2005, ISBN 978-3-540-

25207-8, pp. 950-959.
