COLLABORATIVE AUGMENTED REALITY ENVIRONMENT FOR EDUCATIONAL APPLICATIONS

Claudio Kirner, Rafael Santin, Tereza G. Kirner

Methodist University of Piracicaba, Rodovia do Açúcar Km 156, 13400-911- Piracicaba, SP, Brazil

Ezequiel R. Zorzal

Federal University of Uberlândia – UFU, Av. João Naves de Ávila, 2121, 38.400-902 – Uberlândia, MG, Brazil

Keywords: Collaborative augmented reality environments; Augmented reality; Virtual reality; Educational applications.

Abstract: Face-to-face and remote collaborative learning have been used successfully in education. Technological advances now support interpersonal communication in networked computer environments through chat, audio and video conferencing, but the remote manipulation of objects remains an open problem. Virtual reality and augmented reality, however, make it possible to manipulate virtual objects in a way similar to real situations. This paper discusses these subjects and presents a solution for interaction in remote collaborative environments that combines conventional resources for interpersonal communication with augmented reality technology.

1 INTRODUCTION

Collaborative learning, an educational approach centered on students and based on group activities, has been growing over the years. The approach assumes that learning is an active, constructive process that depends on rich contexts and involves heterogeneous groups of students. Students are thus exposed to situations in which each one can evolve, work in a group, compete, cooperate and exercise responsibility.

Collaborative learning and the development of group abilities have been implemented in local (face-to-face) environments, with or without computer support, and in remote environments supported by computers and communication networks (Billinghurst, 2003).

The great advantage of purely local collaboration is the ease of interaction among people, who combine verbal communication, gestures and facial expressions with the manipulation of objects. When the application involves a computer in the local environment, some of those characteristics persist, but object manipulation changes because it goes through the computer interface and interaction devices. In remote applications, interaction among people is harder to implement, but the richness of the applications tends to outweigh those difficulties. In that case, communication and multimedia techniques involving text, voice, video and animation are used to imitate and better exploit the characteristics of local interaction. Object manipulation, however, remains a difficult problem.

To enable more natural manipulation of objects, virtual reality is used, since it allows the implementation of three-dimensional interfaces. Visualization and manipulation then resemble actions in the real world, but they demand special devices such as gloves and helmets. In virtual reality environments, the user has to enter the context of the application running on the computer, which requires the ability to navigate in that new environment and to use special devices. Despite the benefits of more natural interaction, the need for special equipment and training restricts the use of virtual reality.

A solution to that kind of problem is offered by augmented reality, which mixes the real scene with computer-generated virtual objects and produces a virtual environment within the physical environment in front of the user. Moreover, the user can use his/her hands to manipulate the real and virtual objects of the mixed scene, without the need for special equipment. Therefore, augmented reality and computer networks bring together multimedia resources that let people obtain the benefits of face-to-face interaction even when they are in remote environments.

Kirner C., Santin R., G. Kirner T. and R. Zorzal E. (2007). COLLABORATIVE AUGMENTED REALITY ENVIRONMENT FOR EDUCATIONAL APPLICATIONS. In Proceedings of the Ninth International Conference on Enterprise Information Systems - HCI, pages 257-262. DOI: 10.5220/0002352102570262. Copyright © SciTePress

These characteristics allow the development of collaborative applications that exploit the advantages of educational games, taking into account user involvement, the development of abilities and the construction of knowledge.

This paper presents the characteristics, resources and educational applications of augmented reality, and describes the implementation of an educational game in a collaborative augmented reality environment in order to support the advantages pointed out above and to emphasize its potential benefits. Section 2 presents the concepts of augmented reality, section 3 discusses collaborative environments with augmented reality, section 4 shows a multiuser educational application implemented in a distributed augmented reality environment, section 5 describes improvements based on an authoring tool, and, finally, section 6 gives the conclusions.

2 AUGMENTED REALITY

Augmented reality is a particular case of a more general concept, called mixed reality, which consists of overlapping real and virtual environments, in real time, through a technological device. One of the simplest ways to obtain an augmented reality environment is a microcomputer with a webcam running software that uses computer vision and image processing techniques to mix the real scene captured by the webcam with computer-generated virtual objects. The software also handles the positioning, occlusion and interaction of the virtual objects, giving the user the impression that the mixed scene is a single real scene.

Mixed reality takes two forms: augmented reality, when the user interface is based on the real world, and augmented virtuality, when the user interface is based on the virtual world.

Therefore, augmented reality can be defined as the overlapping of virtual objects on the real world, through a technological device, enhancing the user's vision and other senses (Azuma, 1997). It is important to point out that the virtual objects are brought into the user's space, which is familiar to him/her and where the user knows how to interact with objects without training.

However, visualizing the overlapped objects requires special software and devices. Some devices used in virtual reality environments, such as the helmet (head-mounted display), can be adapted and adjusted for augmented reality environments. The main differences between virtual reality and augmented reality devices lie in the displays and trackers.

2.1 Types of Augmented Reality Systems

Augmented reality systems can be classified according to the type of display used (Milgram, 1994), (Azuma, 2001), involving optical (see-through) vision or video-based vision, which yields four types of systems: (a) direct optical vision; (b) direct video-based vision; (c) video-based vision using a monitor; (d) optical vision using projection.

Direct optical vision systems use glasses or helmets with lenses that let the user receive the real image directly while the virtual images, properly aligned with the real scene, are projected into the user's eyes, producing the mixed scene. A common way to achieve this is to use a tilted lens that allows direct vision and reflects the computer-generated images directly into the user's eyes.

Direct video-based vision systems use helmets with a video micro camera attached, pointing in the same direction as the eyes. The real scene captured by the micro camera is mixed with the computer-generated virtual objects and presented directly to the user's eyes through the HMD displays. Figure 1a illustrates a direct video-based vision system using a helmet (HMD).

Video-based vision systems using a monitor use a webcam to capture the real scene, mix it with the computer-generated virtual objects and present the result on the monitor. The user's point of view is usually fixed and depends on the position of the webcam. Figure 1b shows a video-based vision system using a monitor.

(a) using helmet (hmd) (b) using monitor

Figure 1: Video based vision systems.

ICEIS 2007 - International Conference on Enterprise Information Systems


Optical vision systems using projection use surfaces of the real environment onto which images of the virtual objects are projected, so that the result can be seen without any auxiliary equipment. Although interesting, those systems are tightly constrained by the conditions of the real space, because they need projection surfaces.

Direct optical vision systems are appropriate for situations in which losing the image can be dangerous, such as a person walking down the street, driving a car or piloting an airplane. For closed places, where the user has control of the situation, video-based vision is suitable because, if the image is lost, the user can safely remove the helmet. The direct video-based vision system is cheaper and easier to adjust.

2.2 The ARToolKit Software

ARToolKit (Lamb, 2007) is free software intended for the development of augmented reality applications. It is video based and mixes the captured real scenes with computer-generated virtual objects. To position the virtual objects in the scene, the software uses a type of marker (a plate with a square frame and a symbol inside it), which works like a bar code (see Figure 2).

Figure 2: ARToolKit marker and virtual object.

The frame is used to calculate the position of the marker in space, based on the perspective image of the square. The marker must be registered in advance in front of the webcam. The inner symbol identifies the virtual object, which is associated with the marker in an earlier stage of system setup. When the marker enters the webcam's field of view, the software identifies its position and the associated virtual object, then generates the virtual object and positions it on the plate. When the plate moves, the associated virtual object moves with it, as if it were attached to the plate. This behavior lets the user manipulate the virtual object with his/her hands.
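The way a virtual object follows its plate can be sketched as applying the marker pose to the object's local coordinates. The sketch below is illustrative only: it assumes the tracker reports the pose as a 3x4 matrix (rotation plus translation, from marker space to camera space), and the function name and sample values are hypothetical.

```python
# Illustrative only: apply a marker pose (3x4 matrix [R | t]) to a point
# given in the marker's local coordinates. All values are made up.

def apply_pose(pose, point):
    """Return the camera-space position of a marker-space point."""
    x, y, z = point
    return tuple(
        row[0] * x + row[1] * y + row[2] * z + row[3]
        for row in pose
    )

# Marker 400 mm in front of the camera, not rotated (identity rotation).
pose = [
    [1.0, 0.0, 0.0, 10.0],
    [0.0, 1.0, 0.0, -5.0],
    [0.0, 0.0, 1.0, 400.0],
]

# A vertex 20 mm above the plate, in marker coordinates.
vertex_on_marker = (0.0, 0.0, 20.0)
vertex_in_camera = apply_pose(pose, vertex_on_marker)
```

Because every vertex of the virtual object goes through the same pose, moving or rotating the plate changes only the matrix, and the whole object follows.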

ARToolKit can be used with direct video-based vision devices, such as helmets, and with video-based vision systems using a monitor. With direct video-based vision, the user sees the real scene with the virtual objects through the video camera mounted on the helmet and pointing in the direction of the eyes, which gives the impression of real manipulation and promotes a sensation of immersion. With the monitor-based system, the user sees the mixed scene on the monitor while manipulating the plates in his/her physical space. If the webcam that captures the real scene is on top of the monitor, pointing at the user's space, the monitor works like a mirror: as the plate approaches or moves away from the webcam, the image of the virtual object grows or shrinks, respectively. If the webcam is beside the user or on his/her head, pointing at the physical space between the user and the monitor, the effect is similar to the user's direct vision: as objects are placed closer to or farther from the user, they appear larger or smaller in the mixed scene shown on the monitor.
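The change in image size with distance follows from perspective projection. A minimal sketch, assuming an ideal pinhole camera; the focal length (in pixels) and the plate size are hypothetical values chosen for illustration:

```python
def apparent_size_px(object_size_mm, distance_mm, focal_length_px=700.0):
    """Projected size in pixels under an ideal pinhole camera model."""
    if distance_mm <= 0:
        raise ValueError("distance must be positive")
    return focal_length_px * object_size_mm / distance_mm

near = apparent_size_px(80.0, 400.0)   # plate close to the webcam
far = apparent_size_px(80.0, 800.0)    # same plate twice as far away
```

Under this model, doubling the distance between the plate and the webcam halves the size of the virtual object in the image.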

3 COLLABORATIVE ENVIRONMENTS WITH AUGMENTED REALITY

Research is currently being carried out on the use of computers in collaborative activities, mainly involving remote participants. The area of Computer Supported Cooperative Work (CSCW) offers many application examples involving chat, audio and video conferencing, collaborative virtual reality systems and hybrid systems (Billinghurst, 2002), (Billinghurst, 2003).

However, to achieve computer-supported collaboration that includes the natural manipulation of objects, innovative interfaces based on augmented reality have been developed more recently. Those interfaces cover face-to-face and remote collaboration involving real and virtual objects.

Face-to-face collaboration with augmented reality (Schmalstieg, 2003) is based on sharing the physical environment mixed with virtual objects and viewed through a helmet or a monitor. The participants in the collaborative work act on the real and virtual objects placed in the same environment. Each participant has his/her own view, depending on his/her position, when using a helmet with an attached micro camera, or all participants share the same view when a monitor is used with a webcam. Both possibilities use video-based vision systems.

With the helmet, the interface is quite intuitive and oriented toward collaboration in the real world, preserving the usual social protocols of face-to-face interaction. When a monitor is used for visualization, some adaptation is needed to manipulate the objects, because the real and the displayed positions of the hands differ.

The main characteristics of that collaborative

environment with augmented reality are: virtuality,

augmentation, cooperation, independence and

individuality.

As virtual objects present characteristics similar to those of real objects (dimension and positioning), they can be manipulated with tangible augmented reality techniques, such as touching and carrying.

In face-to-face collaboration with augmented reality using video-based vision with a monitor and a webcam, the users manipulate the objects while viewing them on the monitor, without the independence and individuality characteristics. The interface is similar to the previous one, except that all users share the same point of view, shown on the monitor, and the visualization appears on the monitor instead of in the physical space where the hands are.

Remote collaboration with augmented reality is based on computational interfaces that share information and overlap the physical spaces of several users (a table, for example) over a computer network. In this way, each user can put his/her virtual objects on the shared real table, viewing and manipulating the whole set of virtual objects from all users. In applications implemented with ARToolKit, each user can put his/her plates in the webcam's field of view, seeing and manipulating both his/her own virtual objects and those of the other users that appear in the scene, thereby collaborating remotely.

The integrated use of audio and video conferencing tools with interaction in networked augmented reality environments allows the implementation of distributed collaborative environments that keep the advantages of face-to-face collaboration.

The success of the application depends on the processing power available to run the augmented reality application with ARToolKit and on the speed of the communication network, which must allow communication and interaction in real time. Current microcomputers have adequate computational power for augmented reality applications. The communication networks, however, are the bottleneck, since they need to sustain the minimum requirements of the multimedia flow (audio and video) under variable traffic. The solution involves improving the infrastructure and/or adopting procedures that minimize traffic and overcome delay and overload problems. Today, where broadband is already available, those applications work adequately, but much remains to be done to extend their use. Meanwhile, multiple solutions that cope with the heterogeneity of computers and communication networks are being developed to offer different levels of quality compatible with the available resources.

4 A COLLABORATIVE APPLICATION WITH AUGMENTED REALITY

To test a collaborative application running over a computer network, an application was developed using augmented reality, video-based vision on a monitor, a webcam, a computer network connection and user communication based on text, audio, video and tangible actions. This application, a tic-tac-toe game in a distributed environment with augmented reality, was implemented to run on two remote computers, as shown in Figure 3.

Figure 3: Environment of the Tic-Tac-Toe game.

ARToolKit was used to share the game by overlapping it on the users' physical environments, so that all instances work as a single environment. The local augmented reality applications (the game) communicate with one another through sockets, integrating the environments. User communication relies on multimedia communication tools such as chat, Skype and Messenger, or similar resources, which let the users communicate with one another by text, voice and video.
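The exchange of game state over sockets can be illustrated as follows. This is a sketch under stated assumptions, not the implemented protocol: the message layout (a marker identifier followed by X, Y and Z) and the function names are hypothetical, and a local socket pair stands in for the two remote computers.

```python
import socket
import struct

# Hypothetical wire format: marker id (unsigned int) + X, Y, Z (floats),
# in network byte order. The paper does not specify the actual format.
UPDATE = struct.Struct("!Ifff")

def send_update(sock, marker_id, position):
    """Send one fixed-size position update."""
    sock.sendall(UPDATE.pack(marker_id, *position))

def recv_update(sock):
    """Read exactly one update, tolerating partial reads."""
    data = b""
    while len(data) < UPDATE.size:
        chunk = sock.recv(UPDATE.size - len(data))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        data += chunk
    marker_id, x, y, z = UPDATE.unpack(data)
    return marker_id, (x, y, z)

# Local demonstration with a connected socket pair instead of two hosts.
local, remote = socket.socketpair()
send_update(local, 3, (1.5, -2.0, 30.25))
received = recv_update(remote)
local.close()
remote.close()
```

A fixed-size binary message keeps each update short, which matters when the network is the bottleneck.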

First, all resources are activated to support the collaboration. Placing the marker that corresponds to the game board in any of the remote environments generates a virtual board for each user, positioned slightly above the surface of the table captured by the webcam. This places all markers below the virtual board, hiding them from the users. Then each user places his/her markers with virtual game pieces (colored cones and cylinders, for example), viewing the whole game on the monitor. When one of the users manages to align his/her pieces horizontally, vertically or diagonally, he/she places the marker corresponding to a bar of the same color as his/her pieces to indicate on the board that he/she is the winner.

While carrying out these actions, the user can communicate through text messages in the chat window, talk over the voice channel and see the other user in a video window. Depending on the quality of the network and the traffic, some of those support elements are disabled so that the system keeps working. The only element that cannot be disabled is the augmented reality application, which is the game itself and potentially demands less network traffic.
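One possible policy for disabling support elements is sketched below. The paper does not state how the decision is made; the bandwidth figures, the priority order and the function name are all assumptions introduced for illustration.

```python
# Hypothetical bandwidth needs in kbit/s; the paper gives no figures.
CHANNEL_COST = {"game": 8, "chat": 2, "voice": 32, "video": 256}
# The game is mandatory; the others are added from cheapest to costliest.
OPTIONAL_BY_PRIORITY = ["chat", "voice", "video"]

def active_channels(available_kbps):
    """Keep the game and add support channels while bandwidth allows."""
    active = ["game"]  # never disabled
    budget = available_kbps - CHANNEL_COST["game"]
    for name in OPTIONAL_BY_PRIORITY:
        if CHANNEL_COST[name] <= budget:
            active.append(name)
            budget -= CHANNEL_COST[name]
    return active

broadband = active_channels(1000)
slow_link = active_channels(48)
```

With these made-up figures, a slow link keeps the game, chat and voice but drops video, while the game remains active even when nothing else fits.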

Some adjustments were made to the system to minimize the traffic related to game information. Initially, the positions of the virtual board and pieces were sent continuously to guarantee consistent positions for the remote users, which generated intense and unnecessary network traffic. To reduce this traffic, a program was developed that stores and compares the previous and current positions collected by ARToolKit. If the position (X, Y or Z) of the virtual board or of a piece differs from the stored one by less than a certain tolerance (for example, 0.5 cm), the current information does not need to be sent; otherwise, it is sent and the stored previous position is updated to the current one. In this way, information is sent only when there is a meaningful change in the position of the board or pieces. Thus, with a small volume of short messages, the network can support the augmented reality application even at low speeds.
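The tolerance test described above can be sketched as follows. The class and method names are hypothetical; only the rule itself (send when any of X, Y or Z changes by at least the tolerance, then remember the sent position) comes from the text.

```python
TOLERANCE_CM = 0.5  # example tolerance mentioned in the text

class PositionFilter:
    """Decide whether a tracked position changed enough to be sent."""

    def __init__(self, tolerance=TOLERANCE_CM):
        self.tolerance = tolerance
        self.previous = {}  # marker id -> last position that was sent

    def should_send(self, marker_id, current):
        last = self.previous.get(marker_id)
        if last is not None and all(
            abs(c - p) < self.tolerance for c, p in zip(current, last)
        ):
            return False  # every axis moved less than the tolerance
        self.previous[marker_id] = current  # previous := current
        return True

f = PositionFilter()
decisions = [
    f.should_send("board", (10.0, 5.0, 40.0)),   # first sample: send
    f.should_send("board", (10.2, 5.1, 40.0)),   # small jitter: skip
    f.should_send("board", (10.2, 5.1, 41.0)),   # Z moved 1 cm: send
]
```

Comparing against the last position that was actually sent, rather than the last one observed, prevents a slow drift from going unreported.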

5 IMPROVEMENTS

One way to make the Collaborative Augmented Reality Environment more powerful for local and remote applications is to create new functionalities that make it easier for users to handle the virtual objects. Authoring virtual environments using only the hands and some markers is a typical application that suits people with no specific virtual reality skills.

The development of such an authoring tool requires a real environment containing:

a catalog of virtual objects with markers;

a virtual scratch pad to receive the selected virtual objects;

a set of paddles (markers) with specific actions that ease the authoring activity, as well as the storage and recovery of the resulting virtual environment.

The first approach to the problem resulted in the functionalities below:

copy and transport virtual objects from the catalog to the virtual scratch pad;

delete specific objects on the scratch pad;

store the scratch pad content (a file with positions and virtual object references) to continue the activity later or to send it to other people;

recover the scratch pad content (from a file) to continue the authoring activity or to see a virtual environment authored by other people.
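The store and recover functionalities can be illustrated with a small sketch. The text only says that the file holds positions and virtual object references; the JSON layout, the model file names and the function names below are assumptions.

```python
import json
import os
import tempfile

def store_scratch_pad(path, objects):
    """Write object references and positions to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"objects": objects}, f, indent=2)

def recover_scratch_pad(path):
    """Read back the list of objects written by store_scratch_pad."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)["objects"]

# Hypothetical scene: each entry references a model and its position.
scene = [
    {"model": "tree.wrl", "position": [12.0, 3.5, 0.0]},
    {"model": "house.wrl", "position": [-4.0, 8.0, 0.0]},
]
path = os.path.join(tempfile.mkdtemp(), "scratch_pad.json")
store_scratch_pad(path, scene)
restored = recover_scratch_pad(path)
```

Because the file holds only references and positions, it stays small enough to be sent to other people, who can recover the same environment if they have the same catalog.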

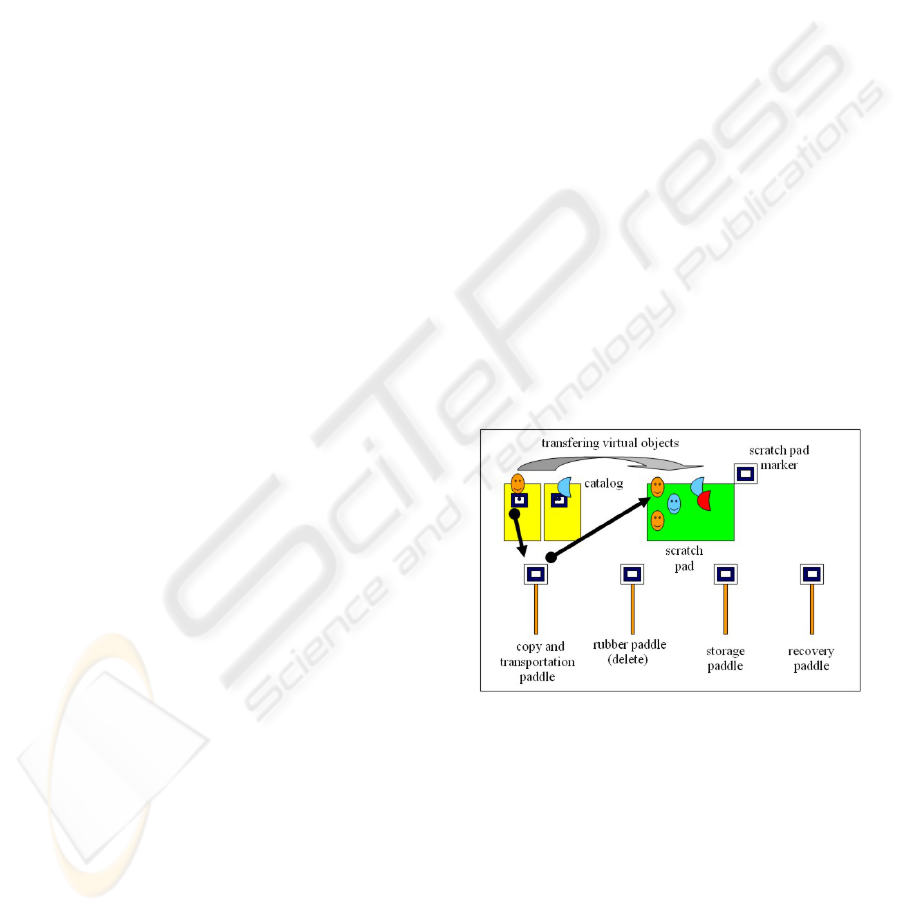

Figure 4 shows the components of the authoring tool, which uses augmented reality techniques.

Figure 4: Authoring of a virtual environment.

The activity begins when someone places the catalog with the printed markers, together with the marker for the virtual scratch pad, in front of the webcam. Using the copy/transportation paddle, the user chooses a virtual object in the catalog and captures a copy of it to place at a position on the virtual scratch pad. If the user is not satisfied with the position of a specific virtual object, he/she can use the rubber (eraser) paddle to delete it and then repeat the copy/transportation action to place the object at a new position. When the virtual environment on the scratch pad is ready, or the user wants to pause his/her work, he/she can use the storage paddle to store the authored environment. Using the recovery paddle, the user can restore the authored virtual environment onto the virtual scratch pad to continue the activity or to see what was done.

That environment allows face-to-face collaboration, with several people working with paddles in front of one computer, or remote collaboration, with participants manipulating objects on a shared virtual scratch pad.

Those activities can be used in distance education to support synchronous (real-time) collaborative applications or asynchronous collaborative activities, in which students contribute to authored environments alone and/or as part of a group, so that, at the end of the task, the result can be sent to the teachers by email, for example. Figure 5 shows the copy/transportation paddle in action.

Figure 5: Copy/transportation paddle in action.

Two ways to manipulate the virtual objects with the copy/transportation paddle were implemented:

using proximity to capture the virtual object from the catalog and paddle inclination to leave it on the virtual scratch pad;

using proximity to capture the virtual object from the catalog and paddle occlusion to leave it on the virtual scratch pad.

Inclination is easier to use than occlusion, but occlusion gives better precision when placing objects on the scratch pad.
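The proximity and inclination tests of the first variant can be sketched as follows. The thresholds, the function names and the use of surface normals to measure inclination are assumptions made for illustration; the paper does not give these details.

```python
import math

CAPTURE_DISTANCE_CM = 3.0   # hypothetical proximity threshold
RELEASE_TILT_DEG = 30.0     # hypothetical inclination threshold

def is_close(paddle_pos, object_pos, limit=CAPTURE_DISTANCE_CM):
    """True when the paddle is near enough to capture the object."""
    return math.dist(paddle_pos, object_pos) <= limit

def tilt_deg(paddle_normal, pad_normal=(0.0, 0.0, 1.0)):
    """Angle between the paddle normal and the scratch pad normal."""
    dot = sum(a * b for a, b in zip(paddle_normal, pad_normal))
    norm = math.hypot(*paddle_normal) * math.hypot(*pad_normal)
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))

def should_release(paddle_normal):
    """True when the paddle is tilted enough to drop the object."""
    return tilt_deg(paddle_normal) >= RELEASE_TILT_DEG

captured = is_close((10.0, 10.0, 2.0), (11.0, 10.0, 0.0))
flat = should_release((0.0, 0.0, 1.0))
tilted = should_release((0.0, 1.0, 1.0))  # paddle tilted by 45 degrees
```

A paddle held flat keeps the captured object, and tilting it past the threshold leaves the object on the scratch pad.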

Because changing the positions of virtual objects on the virtual scratch pad is difficult with only the rubber and copy/transportation paddles, a new paddle was implemented solely for transporting virtual objects within the scratch pad. This improvement simplified the authoring work, since the user can select and copy virtual objects from the catalog and arrange them later using the transportation paddle.

6 CONCLUSIONS

Augmented reality applied to educational environments can contribute significantly to the users' perception, interaction and motivation. This paper discussed collaborative environments with augmented reality and showed that it is possible to create them using the ARToolKit software and communication resources operating over computer networks. Such collaborative environments are very useful for stimulating learning and the development of group abilities. The work also pointed out network problems and ways to overcome the existing difficulties.

Using an application for authoring virtual environments with a tool based on augmented reality, the paper showed that this technology can be applied in a powerful way, giving users many functionalities and options for operating on virtual objects in the real world using their hands and markers.

ACKNOWLEDGEMENTS

The authors are grateful for support provided by

CNPq/Brazil to the project: “Sistema Complexo

Aprendente: um ambiente de realidade aumentada

para educação”, grant number: 481027/2004-1.

REFERENCES

Lamb, P., 2007. ARToolKit. Retrieved March 08, 2007, from http://www.hitl.washington.edu/artoolkit/.

Azuma, R.T., 1997. A Survey of Augmented Reality, Presence: Teleoperators and Virtual Environments, 6(4), 355-385.

Azuma, R.T. et al., 2001. Recent Advances in Augmented Reality, IEEE Computer Graphics and Applications, 21(6), 34-47.

Billinghurst, M., Kato, H., 2002. Collaborative augmented reality, Communications of the ACM, 45(7), 40-44.

Billinghurst, M. et al., 2003. Communication behaviors in co-located collaborative AR interfaces, International Journal of Human-Computer Interaction, 16(3), 395-423.

Milgram, P. et al., 1994. Augmented Reality: A Class of Displays on the Reality-Virtuality Continuum, Telemanipulator and Telepresence Technologies, SPIE, 2351, 282-292.

Schmalstieg, D., Reitmayr, G. & Hesina, G., 2003. Distributed applications for collaborative three-dimensional workspaces, Presence: Teleoperators and Virtual Environments, 12(1), 52-67.
