MULTI-AGENT BUILDING CONTROL IN SHARED ENVIRONMENT

Bing Qiao, Kecheng Liu and Chris Guy

School of Systems Engineering, University of Reading, Whiteknights, Reading, Berkshire, England

Keywords: Distributed artificial intelligence, intelligent agents.

Abstract: Multi-agent systems have been adopted to build intelligent environments in recent years. It has been claimed that energy efficiency and occupants’ comfort are the most important factors for evaluating the performance of a modern work environment, and that multi-agent systems present a viable solution for handling the complexity of a dynamic building environment. While previous research has made significant advances in some aspects, the proposed systems or models were often not applicable in a “shared environment”. This paper introduces an ongoing project on a multi-agent system for building control, which aims to achieve both energy efficiency and occupants’ comfort in a shared environment.

1 INTRODUCTION

Intelligent sustainable healthy buildings improve

business value because they respect environmental

and social needs and occupants’ well-being, which

improves work productivity and human

performance.

MASBO (Multi-Agent System for Building cOntrol) is an ongoing subproject of the CMIPS (Coordinated Management of Intelligent Pervasive Spaces) project. It aims to provide a set of software agents to support both online and offline applications for intelligent work environments.

2 RELATED WORK

Developing software agents for intelligent building control is an interdisciplinary task demanding expertise in such areas as agent technology, intelligent buildings, control networks, and artificial intelligence.

This section reviews previous work in this area that could help build the core component of MASBO: the multi-agent system (MAS).

2.1 Agent Technology and Building Intelligence

Research work conducted by (Davidsson and

Boman) uses a multi-agent system to control an

Intelligent Building. It is part of the ISES

(Information/Society/Energy/System) project that

aims to achieve both energy saving and customer

satisfaction via value added services. Energy saving

is realized by automatic control of lighting and

heating devices according to the presence of

occupants, while customer satisfaction is realized by

adapting light intensity and room temperature

according to occupants’ personal preferences.

While the discussed system is capable of

adjusting the heating and light level to meet personal

preferences, these preferences are predefined and

cannot be adapted or learned according to the

feedback or behaviour of the occupants. It can detect

a person’s presence and adapt the room environment

settings according to his/her preferences via an

active badge system. The badge system itself,

however, does not provide the means to distinguish

between actuations from different occupants, which

is necessary for occupants’ behaviour learning

mechanisms proposed in some other research work

(Callaghan et al., 2001, Davidsson and Boman,

2005).

In (Callaghan et al., 2001, Davidsson and Boman, 2005), a soft computing architecture is discussed, based on a combination of DAI (distributed artificial intelligence), Fuzzy-Genetic driven embedded-agents and IP internet technology for intelligent buildings.

Qiao B., Liu K. and Guy C. (2007). MULTI-AGENT BUILDING CONTROL IN SHARED ENVIRONMENT. In Proceedings of the Ninth International Conference on Enterprise Information Systems - AIDSS, pages 159-164. DOI: 10.5220/0002359301590164. Copyright © SciTePress

Besides the learning ability, this research also presents another feature that is in some cases preferable for an intelligent building environment: user interaction and feedback to the MAS. However, its use of embedded agents makes it difficult to take advantage of sophisticated agent platforms and, as claimed by the researchers, places severe constraints on the possible AI solutions.

Further research following (Callaghan et al., 2001) is the iDorm project (Hagras et al., 2004), where an intelligent dormitory is developed as a test bed for a multiuse ubiquitous computing environment. One of the improvements of iDorm over (Callaghan et al., 2001) is the introduction of the iDorm gateway server, which overcomes many of the practical problems of mixing networks. However, iDorm is still based on embedded agents, which, despite demonstrating learning and autonomous behaviours, run on nodes with very limited capacity.

The requirement of user feedback or interactions in an intelligent building environment is controversial. Some researchers claim that ambient intelligence should not be intrusive, i.e., no special devices should be used and no rules should be imposed on occupants’ behaviour. In (Rutishauser et al., 2005), a multi-agent system is discussed for intelligent building control. In contrast to the approach in (Callaghan et al., 2001, Davidsson and Boman, 2005), the MAS is equipped with an unsupervised online real-time learning algorithm that constructs a fuzzy rule-base, derived from very sparse data in a non-stationary environment. All feedback is acquired by observing occupants’ behaviours without intruding on them. While avoiding intrusiveness could be preferable in some cases, the MAS loses the ability to distinguish between actuations and preferences from different occupants, and thus the preferences learned are coupled not with the occupants but with the room they are in. As a result, it is unable to take personal preferences into account.

2.2 Summary of Related Work

After reviewing previous research on multi-agent systems for building control, a list of issues that may need further study has been summarized as follows:

- Personal preferences are often predefined and cannot be adapted or learned according to the feedback or behaviour of the occupants.
- Most systems do not provide a means to distinguish between actuations from different occupants, and thus are not able to learn individual preferences.
- To learn preferences of occupants in a shared environment, a single learning mechanism is not capable of handling a complex, dynamic building environment.
- Addressing both preferences learning and multi-occupancy in a shared environment complicates the design of the multi-agent system.
- A combination of environmental parameter values is used as personal preferences. More recent work in building construction indicates that a function of such parameters more accurately represents an occupant’s comfort or satisfaction degree.

3 OVERVIEW OF MASBO

The design of a Multi-Agent System for Building

cOntrol (MASBO) emphasizes the dynamic

configuration of building facilities to meet the

requirements of building energy efficiency and the

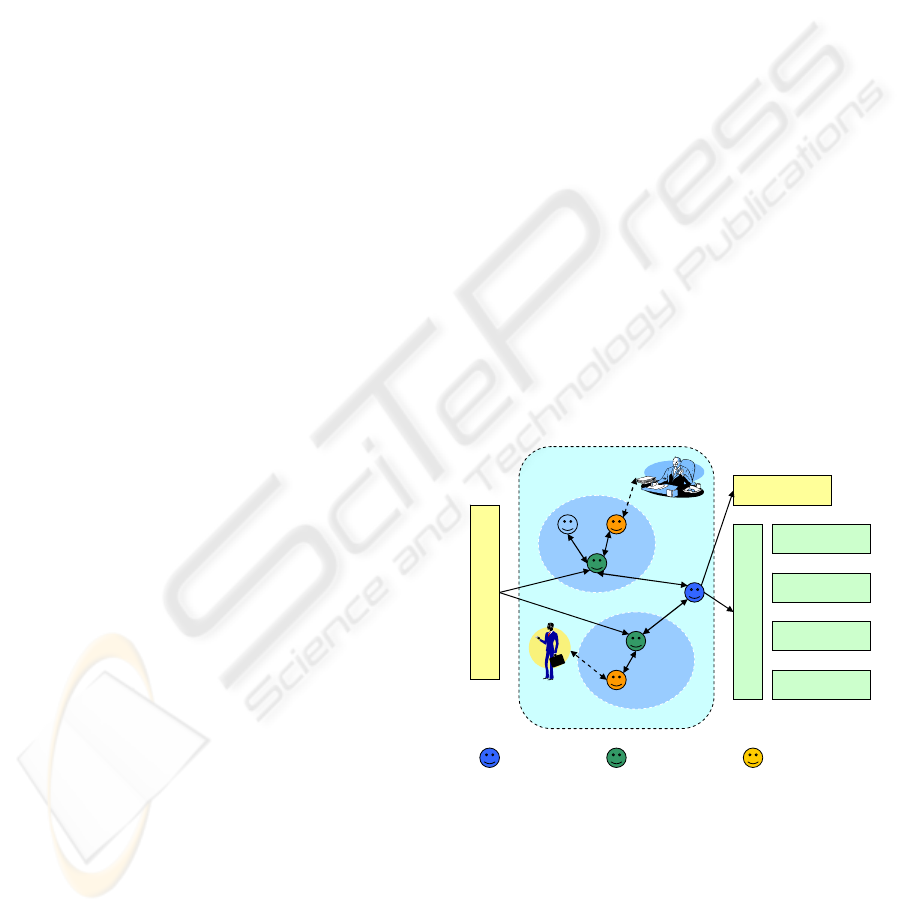

preferences of occupants. Fig. 1 shows the proposed

multi-agent system deployed in two zones. A zone

represents the basic unit of building structure under

MASBO’s control.

Figure 1: Scenario of MASBO. As the hub of CMIPS,

MASBO integrates with the other two components,

building assessment and wireless sensor network.

MASBO is designed as a system composed of a

number of software agents, capable of reaching

goals that are difficult to achieve by an individual

system (Wooldridge, 2002).

[Figure 1 labels: MASBO; Building Management System; HVAC Controller; CCTV Controller; Access Controller; Lighting Controller; Central Agent; Local Agent; Personal Agent; Building Assessment; Wireless Sensor Network]

ICEIS 2007 - International Conference on Enterprise Information Systems


Personal agents act as assistants that manage user

(occupant) specific information, observe the ambient

environment, and present feedback from other

agents to their users (occupants).

Local agents act as mediators and information providers. They reconcile contending preferences from different occupants, learn occupants’ behaviour, and provide structure information of their respective zones.

A central agent provides services that allow operators to start or stop agents, deploy or delete agents, and modify zone information for local agents. It also aggregates decisions received from local agents before converting them to BMS (Building Management System) commands. If a decision does not pass through the central agent to reach the BMS (because it has been abandoned or aggregated), the corresponding local agent will be notified.

For a detailed discussion of agents defined in

MASBO, please refer to (Qiao et al., 2006).

4 DECISION MAKING AND LEARNING

The design of decision-making and learning

components for MASBO aims to achieve such

flexibility that adapting the system to a dynamic,

shared building environment will just be a matter of

applying different static rules or data analysis

methods on dynamic rules.

4.1 Rules

The decision-making and learning processes in MASBO are built upon rules (fuzzy rules when implemented as a fuzzy logic controller). A rule in MASBO is defined as:

- Antecedents and actions
- Attributes
  - ID: the ID of the occupant who took the action.
  - Priority: “safety”, “security” and “economy” for static rules; “preference” for dynamic rules.
  - Privilege: a number, e.g. 0 ~ 9, used to decide among conflicting rules.
  - Weight: a number (the execution count), >= 1.
  - Predicted parameters Vector: a vector of parameter values depicting the environment resulting from an occupant’s or an agent’s action.
  - Predicted TCI: the TCI resulting from an occupant’s or an agent’s action. This can be calculated in a number of ways, for instance by using the current parameters just before executing the next rule.
  - Effective period: the time span during which the rule is in effect, e.g., the interval between consecutive rules.
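The rule definition above can be pictured as a small record type. The following Python sketch is illustrative only: the class name `Rule`, the field names, and the use of dictionaries for parameter vectors are assumptions made for this example, not part of the MASBO design.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Priority labels taken from the rule definition; "preference" marks dynamic rules.
PRIORITIES = ("safety", "security", "economy", "preference")


@dataclass
class Rule:
    """Hypothetical in-memory form of a MASBO rule (field names are assumptions)."""
    antecedents: Dict[str, float]          # sensed parameter values that trigger the rule
    actions: Dict[str, float]              # device settings applied when the rule fires
    occupant_id: Optional[str] = None      # ID of the occupant who took the action
    priority: str = "preference"
    privilege: int = 0                     # e.g. 0..9, used to settle conflicts
    weight: int = 1                        # execution count, must be >= 1
    predicted_vector: Optional[Dict[str, float]] = None
    predicted_tci: Optional[float] = None
    effective_period: Optional[Tuple[float, float]] = None  # (start_time, end_time)

    def __post_init__(self) -> None:
        if self.priority not in PRIORITIES:
            raise ValueError(f"unknown priority: {self.priority}")
        if self.weight < 1:
            raise ValueError("weight is an execution count and must be >= 1")

    @property
    def is_static(self) -> bool:
        # Static rules carry one of the non-"preference" priorities.
        return self.priority != "preference"
```

A dynamic rule learned from an occupant could then be created as `Rule(antecedents={"temperature": 18.0}, actions={"heating": 21.0}, occupant_id="occupant-1")`.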

Rules are categorised into two groups: static and

dynamic. Static rules are predefined by developers,

occupants, or building managers. They are static in

comparison to the dynamic rules that are generated

at runtime by the learning mechanisms of MASBO.

Static rules

- If a zone has no occupants, it must maintain some default environmental settings.
- If a zone is a corridor or belongs to any other type of common zone, the temperature is set to a default value and the light is turned on only when at least one person is in the zone.
- If only one occupant is present in a non-common zone, such as an office, the local agent must adapt the environmental parameters to his/her preferences.
- If more than one occupant is present in a non-common zone, the local agent needs to reconcile the contending preferences according to the privileges those occupants have in this zone.
- If occupants' preferences conflict with the rules applied to the zone involved, the local agent needs to reconcile the conflicts according to the priorities those rules have in this zone.
- If actions have been taken by occupants directly on the electrical equipment instead of via their personal agents, the decisions made by the local agents in MASBO can be overruled.

Dynamic rules are generated automatically by the learning process.
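As a rough illustration of the first four static rules, the sketch below selects zone settings from occupancy. It is a minimal sketch under stated assumptions: the default values, the function name `zone_settings`, and the policy that the occupant with the highest privilege wins are all hypothetical, since the paper only says that preferences are reconciled according to privileges.

```python
from typing import Dict, List, Tuple

# Hypothetical default settings; the paper does not give concrete values.
DEFAULTS: Dict[str, float] = {"temperature": 20.0, "light": 0.0}

# An occupant is modelled as (privilege, preferences) for this example only.
Occupant = Tuple[int, Dict[str, float]]


def zone_settings(is_common: bool, occupants: List[Occupant]) -> Dict[str, float]:
    """Pick settings for one zone following the first four static rules."""
    if not occupants:
        # Empty zone: keep the default environmental settings.
        return dict(DEFAULTS)
    if is_common:
        # Common zone (e.g. corridor): default temperature, light on when occupied.
        return {"temperature": DEFAULTS["temperature"], "light": 1.0}
    # Non-common zone: a single occupant gets his/her preferences; with several
    # occupants, this sketch assumes the highest privilege wins.
    _, preferences = max(occupants, key=lambda occupant: occupant[0])
    return dict(preferences)
```

Other reconciliation policies, such as a privilege-weighted average of the preferences, would fit the same interface.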

4.2 Decision Making

The input of decision making process is a

parameters Vector and occupant’s action. Local

agents will only conduct decision making and

learning activities when any of those events occur.

MULTI-AGENT BUILDING CONTROL IN SHARED ENVIRONMENT

161

Otherwise, the sampled data will be discarded, as

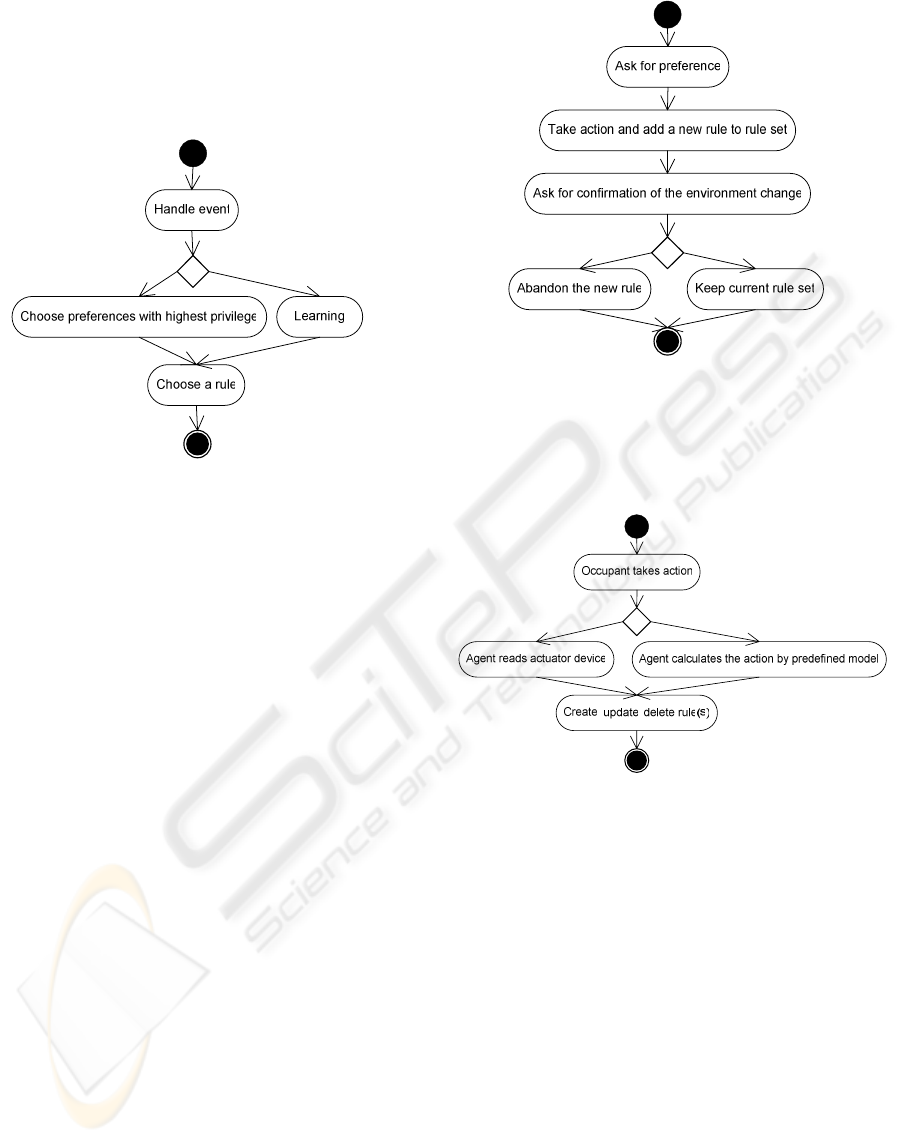

shown in Fig. 2. Three such events are defined as

follows:

“Occupants changed” events (OC)

“Parameters changed” events (PC)

“Device operated” events (DO)

Figure 2: Decision making and learning: handling events,

making decisions and learning occupant’s behaviour.
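The event filter described above could be sketched as a comparison of two consecutive samples. This is only an assumed realisation: the `Sample` structure, the `tolerance` threshold for a parameter change, and the idea that a "device operated" event shows up as a change in recorded device states are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass
class Sample:
    """One sensor sample for a zone (hypothetical structure)."""
    occupants: FrozenSet[str]          # IDs of occupants currently present
    parameters: Dict[str, float]       # e.g. {"temperature": 21.0}
    device_states: Dict[str, float]    # settings last applied on the devices


def detect_events(prev: Sample, curr: Sample, tolerance: float = 0.5) -> List[str]:
    """Return the event codes (OC, PC, DO) raised between two samples."""
    events: List[str] = []
    if curr.occupants != prev.occupants:
        events.append("OC")  # occupants changed
    changed = any(
        abs(value - prev.parameters.get(name, value)) > tolerance
        for name, value in curr.parameters.items()
    )
    if changed:
        events.append("PC")  # parameters changed beyond the assumed tolerance
    if curr.device_states != prev.device_states:
        events.append("DO")  # a device was operated
    return events
```

A sample for which `detect_events` returns an empty list would simply be discarded, which matches the behaviour described for Fig. 2.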

Decision making needs to resolve conflicts in the following scenarios:

- Preferences conflicting with a static rule. The decision will be made according to their priorities.
- Occupants with different privileges.
- Dynamic rules with the same antecedents but different actions. The decision will be made according to rule attributes such as weight, predicted Vector, TCI, and effective period.
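One possible way to combine these three conflict scenarios is a lexicographic comparison, sketched below. The ranking of priorities (safety above security above economy above preference), the tie-breaking order, and the names `Candidate` and `resolve` are assumptions for this example; the paper does not fix them.

```python
from dataclasses import dataclass
from typing import Dict, List

# Assumed ranking: the paper names these priorities but does not order them.
PRIORITY_RANK: Dict[str, int] = {"safety": 3, "security": 2, "economy": 1, "preference": 0}


@dataclass
class Candidate:
    """A rule competing for execution (hypothetical, reduced to the fields used here)."""
    actions: Dict[str, float]
    priority: str
    privilege: int = 0
    weight: int = 1


def resolve(candidates: List[Candidate]) -> Candidate:
    """Pick one rule: priority first, then occupant privilege, then rule weight."""
    if not candidates:
        raise ValueError("no candidate rules to resolve")
    return max(
        candidates,
        key=lambda c: (PRIORITY_RANK[c.priority], c.privilege, c.weight),
    )
```

Further attributes such as the predicted TCI or the effective period could be appended to the comparison key in the same manner.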

4.3 Learning

Different learning processes can be categorised into

three groups: interactive, supervised, and

reinforcement learning. MASBO adopts a

combination of those processes, aiming to reduce

intrusiveness of the multi-agent system, without

losing the capability of learning individual

preferences.

In interactive learning, the agent asks for the occupant’s preference for any given programmable setting and tries to adjust its rule set to achieve this setting. The occupant is then asked to confirm the environment change, and the answer helps the agent decide whether to abandon the new rule or keep the updated rule set. Interactive learning is shown in Fig. 3.

Supervised learning assumes that the agents know the action of the user, either through direct communication between agent and actuator or by building a model to calculate the user action indirectly, as shown in Fig. 4.

Figure 4: Supervised learning: having direct information

of the action taken by an occupant on the actuator.

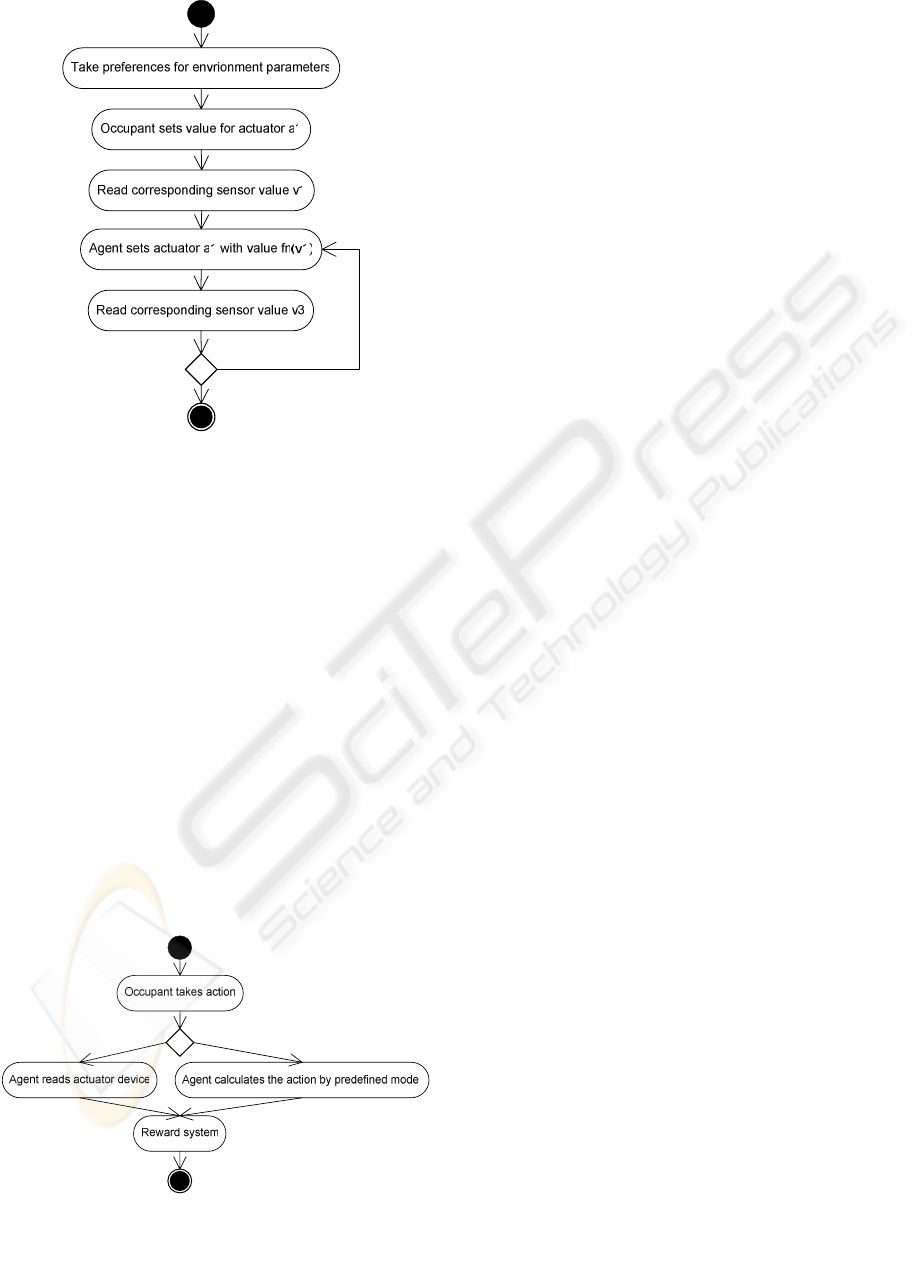

In reinforcement learning, the agent sets environment parameters according to the occupant’s preferences, as shown in Fig. 5. The agent tries to guess the value set on the actuator by minimizing the difference between the result of the user action and the result of the agent action using the guessed value. Even if direct information is available to the agent, reinforcement learning can still be useful for constructing a reward system, as shown in Fig. 6. If an agent turns on a light and the occupant turns it off, the agent receives a negative reinforcement. If the occupant does not change anything, the agent receives a positive reinforcement (reward).
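The reward system in the light example can be illustrated with a very small sketch. The signal values of +1 and -1, the running score, and the `learning_rate` parameter are assumptions chosen for this example and are not prescribed by MASBO.

```python
from typing import Optional


def reinforcement(agent_action: str, occupant_action: Optional[str]) -> int:
    """Return +1 if the occupant accepted the agent's action, -1 if it was reversed.

    occupant_action is None when the occupant did not touch the device.
    """
    if occupant_action is None or occupant_action == agent_action:
        return 1
    return -1


def update_score(score: float, signal: int, learning_rate: float = 0.1) -> float:
    """Move a rule's running score towards the latest reinforcement signal."""
    return score + learning_rate * (signal - score)


# Example: the agent turns the light on and the occupant turns it off again.
score = update_score(0.0, reinforcement("on", "off"))
```

With this update, the score stays between -1 and 1, so a rule that is repeatedly overridden drifts towards -1 and could be dropped by the agent.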

Figure 3: Interactive learning: asking confirmation from the occupant.


4.4 Learning in MASBO

An occupant’s profile includes an ID and a number

of sets of preferences related to different

environmental contexts that define such attributes as

privilege, location, and time span.

A set of preferences is stored in a parameters

Vector. An occupant’s preferences are calculated

from all the dynamic rules produced for the related

occupant.

Learning occupants' preferences is conducted by observing occupants' behaviour and identifying the person who took the actions. First, the less the occupants need to instruct the building (e.g., adjust the thermostat) to change the environment (in this case, change the temperature), the more satisfied they are. If the occupants are satisfied with the current environment, MASBO can assume that the environment is preferable to the occupants and thus calculate their preferences accordingly. Second, occupants change the environmental settings via their personal agents and may provide feedback on their satisfaction if they wish. In this way, an environmental change can be traced back to the exact person who made it, and the preference learned can be linked to this particular individual instead of all occupants in the shared space. This approach combines the studies in (Rutishauser et al., 2005, Callaghan et al., 2001). It not only reduces the intrusiveness of the MAS to occupants, but also allows for personalized space for individuals.

The direct outcome of learning is a set of rules that record the related occupant’s behaviour under certain environmental conditions. The learning process does not produce preferences. The preferences are calculated from the learned rules. As defined in section 4.1, a rule has the following contents:

- Input: (para_1, …, para_i, …, para_m)
- Output: (action_1, action_2, …, action_i, …, action_n)
- Attributes: ID, priority, privilege, weight, resulting(para_1, …, para_i, …, para_m), resulting(TCI), period(start_time, end_time).

An occupant’s behaviour, recorded as a large number of rules, can be analysed using statistics or data mining. Different strategies for analysing the rules provide different ways to update an occupant’s preferences, for instance:

- The predicted Vector of the first rule that has been effective for more than “8” hours since the last update of preferences will be used to update the current preferences.
- Rules are grouped by predicted Vector. If the sum of the weights in a group has increased by “20” within one week since the last update of preferences, the predicted Vector of that group will be used to update the current preferences.
- If the preferences of occupant A have not been changed for one week, and the sum of the effective periods of all of A’s rules during that week is less than “5” hours, update them with the preferences of another occupant that were in effect for most of that week.
- If the preferences of occupant A have not been changed for “1” week, the preferences of occupant A can be updated according to the predicted Vector of the rule that has a TCI value of “0” (comfort level in general) and the biggest weight.
- If the preferences of occupant A have not been changed for “1” week, the TCI preference of occupant A can be updated according to the predicted TCI value that occurs most often (personalised TCI value) in all related rules.

Figure 5: Reinforcement learning: calculating the exact action taken by an occupant.

Figure 6: Reinforcement learning: reward system.
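Two of the strategies listed above, the one based on the rule with TCI “0” and the biggest weight and the one based on the most frequent predicted TCI, can be sketched as follows. The `LearnedRule` structure and the function names are hypothetical, the one-week staleness check is left out, and counting each rule once (rather than by its weight) is an assumption of this sketch.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class LearnedRule:
    """Reduced view of a dynamic rule (hypothetical field names)."""
    predicted_vector: Dict[str, float]
    predicted_tci: float
    weight: int = 1


def vector_of_heaviest_neutral_rule(
    rules: List[LearnedRule], neutral_tci: float = 0.0
) -> Optional[Dict[str, float]]:
    """Predicted Vector of the rule with TCI 0 and the biggest weight, if any."""
    neutral = [rule for rule in rules if rule.predicted_tci == neutral_tci]
    if not neutral:
        return None
    return dict(max(neutral, key=lambda rule: rule.weight).predicted_vector)


def most_frequent_tci(rules: List[LearnedRule]) -> Optional[float]:
    """Predicted TCI value that occurs most often, counting each rule once."""
    if not rules:
        return None
    counts = Counter(rule.predicted_tci for rule in rules)
    return counts.most_common(1)[0][0]
```

Because each strategy is an ordinary function over the learned rules, a personal agent and a local agent could in principle agree on which one to apply without changing how rules are recorded.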

An occupant’s personal agent will negotiate with local agents over which learning mechanism is to be used for preferences learning. This design of the occupant’s preferences, the rule, and the learning process enables MASBO to search for the best preferences learning mechanisms.

5 EVALUATION

Previous similar projects (Davidsson and Boman, Rutishauser et al., 2005, Callaghan et al., Hagras et al., 2004) have been investigated to determine how to evaluate the model and, eventually, the implemented system. The evaluation methods identified are:

- Qualitative simulation.
- Energy consumption quantitative analysis that compares the energy consumption with and without MASBO.
- Satisfaction quantitative analysis that checks how well temperature or lighting history records meet specified policies.
- Satisfaction quantitative analysis that compares the learned rules with the occupant’s journal entries.
- The number of rules learned over time, which indicates how well the multi-agent system performs.
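Two of the quantitative analyses above reduce to simple ratios, sketched below. The function names, the use of kWh as the energy unit, and the representation of a policy as a closed interval are assumptions made for this example.

```python
from typing import Sequence


def energy_saving_ratio(baseline_kwh: float, masbo_kwh: float) -> float:
    """Fraction of energy saved with MASBO relative to a run without it."""
    if baseline_kwh <= 0:
        raise ValueError("baseline consumption must be positive")
    return (baseline_kwh - masbo_kwh) / baseline_kwh


def policy_compliance(readings: Sequence[float], low: float, high: float) -> float:
    """Fraction of history records that fall inside the policy band [low, high]."""
    if not readings:
        raise ValueError("no readings to evaluate")
    inside = sum(1 for value in readings if low <= value <= high)
    return inside / len(readings)
```

For instance, `policy_compliance([20.0, 21.0, 25.0, 19.0], 19.0, 22.0)` reports the share of temperature records that satisfy a hypothetical 19 to 22 degree policy.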

6 CONCLUSIONS AND FUTURE WORK

Much effort has been devoted to using multi-agent systems for intelligent building control. However, while previous work has addressed most of the important features for MAS-based intelligent building control, we claim that no research has been done to consider all the following requirements:

- Energy efficiency and occupants' comfort
- Preferences learning in shared environment
- Personalized control and feedback
- Human readable and accurate knowledge representation
- Sophisticated agent platform and techniques

We believe the above requirements are essential to a successful intelligent building environment and a complete solution should be able to tackle all of them. The first four requirements have been discussed in this paper. The fifth requirement was presented in (Qiao et al., 2006). The last requirement will be addressed in the next step of this project.

Future work will be carried out in the following steps: 1) testing different strategies for preferences learning and decision making in a simulated environment; 2) developing MASBO on an advanced agent platform; 3) system integration with the wireless sensor network and building automation system; and 4) evaluation by experiments in real-world buildings.

ACKNOWLEDGEMENTS

This paper is based on the research outcomes of the CMIPS project, sponsored by the Department of Trade and Industry, UK. The project is a collaborative work between the University of Reading, Thales PLC and Arup Group Ltd.

REFERENCES

Callaghan, V., Clarke, G., Colley, M. & Hagras, H. (2001) A Soft-Computing DAI Architecture for Intelligent Buildings. Soft Computing Agents: New Trends for Designing Autonomous Systems. Springer-Verlag.

Davidsson, P. & Boman, M. (1998) Energy Saving and Value Added Services: Controlling Intelligent Buildings Using a Multi-Agent Systems Approach. DA/DSM Europe DistribuTECH. PennWell.

Davidsson, P. & Boman, M. (2005) Distributed Monitoring and Control of Office Buildings by Embedded Agents. Information Sciences, 171, 293-307.

Hagras, H., Callaghan, V., Colley, M., Clarke, G., Pounds-Cornish, A. & Duman, H. (2004) Creating an Ambient-Intelligence Environment Using Embedded Agents. IEEE Intelligent Systems, 19, 12-20.

Qiao, B., Liu, K. & Guy, C. (2006) A Multi-Agent System for Building Control. IEEE/WIC/ACM International Conference on Intelligent Agent Technology. Hong Kong.

Rutishauser, U., Joller, J. & Douglas, R. (2005) Control and Learning of Ambience by an Intelligent Building. IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans, 35, 121-132.

Wooldridge, M. (2002) An Introduction to MultiAgent Systems, Wiley.
