DESIGNING AN APPROPRIATE INFORMATION SYSTEMS DEVELOPMENT METHODOLOGY FOR DIFFERENT SITUATIONS

David Avison

Dept. of Information Systems, ESSEC Business School, Avenue Bernard Hirsch, Cergy-Pontoise, 95021, France

Jan Pries-Heje

Dept. of Communication, Business and IT, Roskilde University, Universitetsvej 1, DK-4000, Roskilde, Denmark

Keywords: Information systems development, methodology, radar diagram, design science, action research.

Abstract: The number of information systems development methodologies has proliferated, and practitioners and researchers alike have struggled to select a ‘one best’ approach for all applications. But there is no single methodology that will work for all development situations. The question then arises: ‘when to use which methodology?’ To address this question we used the design research approach to develop a radar diagram consisting of eight dimensions. Using three action research cycles, we attempted to validate our design in three projects in a large administrative organization, and we validated it further with groups of IT project managers elsewhere. Our artefact can be used to suggest a particular one-off approach for a particular situation.

1 INTRODUCTION

Information systems development methodologies (ISDMs) concern information systems development processes and products. They lie at the core of the discipline and practice of information systems.

Systems development typically unfolds as a lifecycle consisting of a series of stages such as requirements analysis, design, coding, testing and implementation. In practice, these stages need not be carried out sequentially; they can be done as a series of iterations, sometimes more or less in parallel. Each stage often operates with a defined notation and results in a prescribed artefact, such as a requirements specification or a computer program.

An ISDM is a prescribed way of carrying out the development process. The description typically includes the activities to be performed, the artefacts resulting from the activities, and some principles for organizing the activities and allocating people to perform them. An ISDM can be aimed at a specific type of development, e.g. database-intensive applications with fewer than 10 people involved, or it can be specific to a company. However, many ISD methodologies claim to be of generic use. Avison and Fitzgerald (2006) describe 34 generic approaches, which they refer to as themes, and 25 specific methodologies, which they argue are very distinct.

Early ISDMs were based on practical experience: experienced practitioners described how they developed their applications. Others are often anchored in theory, such as Highsmith (1999), which (partly) builds on the theory of lean thinking (Womack, 1996).

However, the practicality of using ISD methodologies at all has also been questioned. A growing number of studies have suggested that the relationship of methodologies to the practice of information systems development is tenuous (Fitzgerald, 2000; Wastell, 1996; Wynekoop and Russo, 1997). It seems that the idea of an all-embracing methodology has been so dominant in our thinking about systems development that it may have become somewhat imaginary. In many situations where an ISD methodology was claimed to be used, its use was not evident to researchers (Bødker and Bansler, 1993). Indeed, Truex et al. (2000) suggest that systems development is amethodical in practice, arguing that in reality the management and orchestration of systems development is done without the predefined sequence, control, rationality, or claims to universality implied by much methodological thinking.

Avison D. and Pries-Heje J. (2007).

DESIGNING AN APPROPRIATE INFORMATION SYSTEMS DEVELOPMENT METHODOLOGY FOR DIFFERENT SITUATIONS.

In Proceedings of the Ninth International Conference on Enterprise Information Systems - ISAS, pages 63-70

DOI: 10.5220/0002360100630070

Copyright © SciTePress

The conclusion of this line of thought, however, is intolerable. If ISDMs have only academic and no practical value, then every ISD project team is left on its own without guidance. On the other hand, we agree that most methodologies are designed for situations which follow a stated or unstated ‘ideal type’. The methodology provides a step-by-step prescription for addressing this ideal type. However, situations are all different and there is no such thing as an ‘ideal type’ in reality. So the mode of thinking that suggests a methodology for all occasions is also illusory and unrealistic.

Our world-view, therefore, is that we can describe something of use for an IS development project, and that the harsh critique of IS development methodology thinking above reflects a failure to find a useful way of reducing a methodology to an approach for a particular situation. We need to guide developers as they develop an application, but this guidance is more likely to take the form of a methodology framework than a prescriptive methodology.

Our experiences and thinking therefore concur with Avison and Wood-Harper (1990), describing their Multiview framework, and with Checkland (1999), describing soft systems methodology, that we need some form of guiding framework. But even a generic approach at the level of a framework needs to be reduced to apply to a specific situation. The important words here are ‘reduced’ and ‘particular situation’, as the real challenge lies in how this reduction is done for a particular practical situation.

Avison and Wood-Harper, for example, argue that in their experience IS development is contingent on the particular situation, and that finding the right combination of methods, techniques and tools is more like an exploration than the practice of applying a methodology, because:

• The ‘fuzziness’ of some applications requires an attack on a number of fronts. This exploration may lead to an understanding of the problem area and hence to a reasonable solution.

• Tools and techniques appropriate for one set of circumstances may not be appropriate for others.

• As an information systems project develops, it takes on different perspectives or ‘views’, and any methodology adopted should incorporate these views, which may be human, political, organizational, technical, economic, and so on.

However, few writers give help on choosing which specific combination is appropriate to which specific situation. Avison and Taylor (1996) do identify five different classes of situation and appropriate approaches, as follows:

1. Well-structured problem situations with a well-defined problem and clear requirements. A traditional prescriptive approach might be regarded as appropriate in this class of situation.

2. As above but with unclear requirements. A data or process modeling approach, or a prototyping approach, is suggested as appropriate here.

3. Unstructured problem situations with unclear objectives. A soft systems approach would be appropriate in this situation.

4. High user-interaction systems. A people-focused approach would be appropriate here.

5. Very unclear situations, where a contingency approach, such as Multiview, is suggested.

However, we feel that this advice is rather too general, and we wished to design a finer-grained approach. To address this research question, we set out to develop a framework, which we tested at one specific organization, Danske Bank, over several years (2001-2005) as an action research undertaking. We now believe that we are beginning to have an answer to the question, in the form of a framework focusing on the final product, with a few well-chosen patterns through the ‘maze’ of possibilities, and some rules for choosing methodology parts.

We discuss our research method in Section 2. In Section 3 we describe a technique for documenting different types of development situation, which we refer to as ‘radar diagrams’. We also illustrate four typical patterns and suggest strategies to develop applications in these four situations. In Section 4 we show how we began to validate our approach at Danske Bank using three action research cycles, and later with groups of project managers at a number of companies. Finally, in Section 5 we conclude and suggest future research.

2 RESEARCH QUESTION AND METHOD

Several books and consultants have claimed to have found the methodology for all (or most) applications in all (or most) situations, but it seems that there is no single method that will ever work for (nearly) all development situations. The question then arises: when to use what?

ICEIS 2007 - International Conference on Enterprise Information Systems

We used two research approaches to address this question. In the first part we used design science to formulate a way of characterizing particular situations. In the second part we used action research to apply our design, to see whether it could be demonstrated in practice, and to improve the basic design using this practical experience. Cole et al. (2005) discuss cross-fertilization between the two approaches of design research and action research.

Knowledge

flows

Process

steps

Knowledge

Flows

Awareness

of problem

Suggestion

Development

Evaluation

Conclusion

Circumscription

Operation

and goal

knowledge

Abduction

Deduction

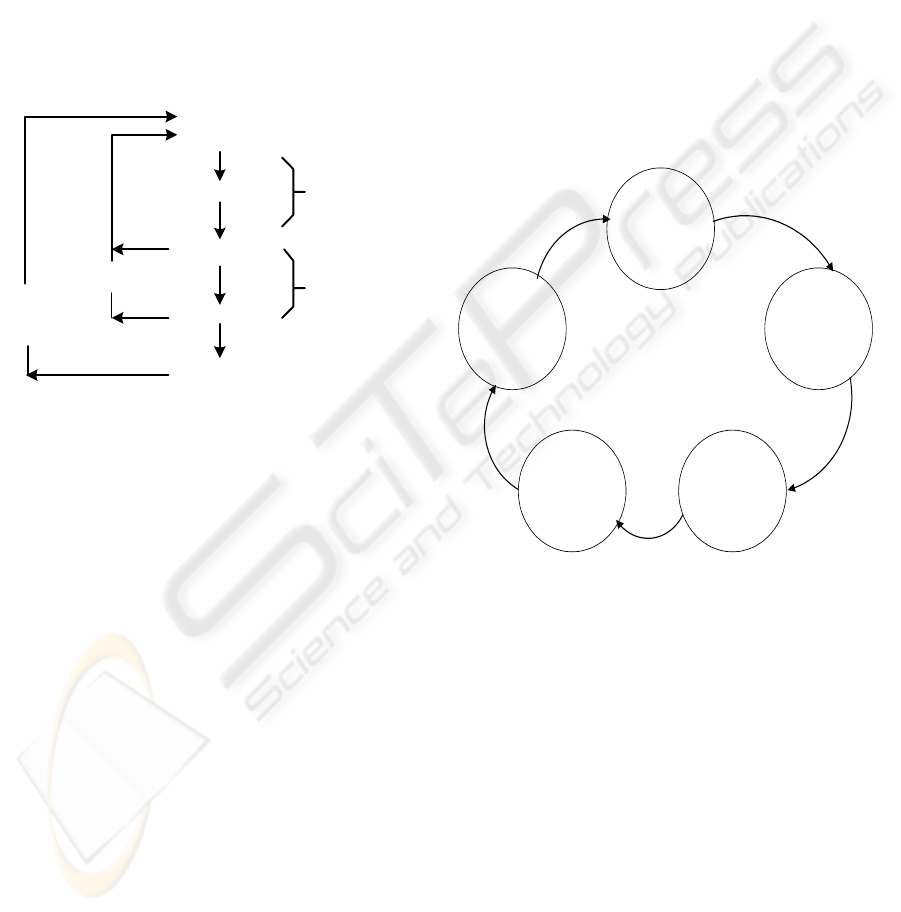

Figure 1: Design research (from Takeda, et al., 1990).

Design science ‘seeks to extend the boundaries of human and organizational capabilities by creating new and innovative artifacts’ (Hevner et al., 2004). In the model shown in Figure 1 (see also ISWorld, 2006), awareness of the problem leads to either a formal or an informal proposal for a new research effort. In our research we proposed the idea of a ‘radar’ that could be used as a basis for understanding the type of situation. A tentative design is produced in the suggestion phase. As we shall see, our tentative design consisted of a ‘radar’ of eight dimensions, and we developed this artifact. We then tested it in an action research project consisting of three cases that took place in a Danish bank. Each specific act of construction, that is, each action research case, helped us to understand the design-in-action further and hence to evaluate it, modify it as necessary, and draw conclusions from this evidence and procedure.

Action research fits well into the design research cycle. It can be used to cover the deduction part of the design cycle, which in our research means developing or modifying the ‘radar’ design and testing it in a real-life situation. This in turn may be followed by further evaluation and circumscription, which may lead to a modification and retest, or simply a retest. Again, this may itself be followed by a further cycle of evaluation and circumscription, which may lead to another modification and retest, or simply yet another retest. In this way the artifact is tuned further.

Action research also implies a synergy between researchers and practitioners. Researchers (like us) test and refine principles, tools, techniques and methodologies to address real-world problems, while practitioners as well as researchers may participate in the analysis, design and implementation processes and contribute to any decision making.

A fuller description of the action research cycle is given in Susman and Evered (1978), as shown in Figure 2. (A recent text edited by Ned Kock (2006) provides a very comprehensive information systems view of action research.)

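Figure 2: The Action Research Cycle (from Susman and Evered, 1978). [Figure: a five-phase cycle. 1. Diagnosing: identifying or defining a problem. 2. Action planning: considering alternative courses of action for solving a problem. 3. Action taking: selecting a course of action. 4. Evaluating: studying the consequences of an action. 5. Specifying learning: identifying general findings.]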

3 TAILORING PROJECTS: RADAR DIAGRAMS

The infrastructure for our project was first established in the fall of 2001. A project group was formed with five people from Danske Bank and an outside researcher (one of the authors). Three of the six had worked together prior to this project in another successful design undertaking (Pries-Heje et al., 2001). This influenced the choice of a combined design science and action research approach for this project.

Early in 2001 it was questioned inside Danske Bank whether the existing company ISDM was good and useful enough. This situation was diagnosed by means of an interview study among IS project managers within Danske Bank. The study revealed that the existing company methodology was very hard to tailor to the specific needs of particular projects. The assumption that ‘one size fits all projects’ had proved invalid!

After this diagnosis, a formal action research undertaking was established. We were asked to intervene, but we could see the advantages of doing so together with the practitioners, hence the action research approach. We saw ourselves as researchers, not consultants. Furthermore, the projects demanded more help and better tools for the tailoring process. Thus what we called the tailoring project started.

Task

Knowledge

about …

Individual &

background

Environment

Team

Calendar time

Stakeholders

Quality / Criticality

5

3

4

31

1

3

2

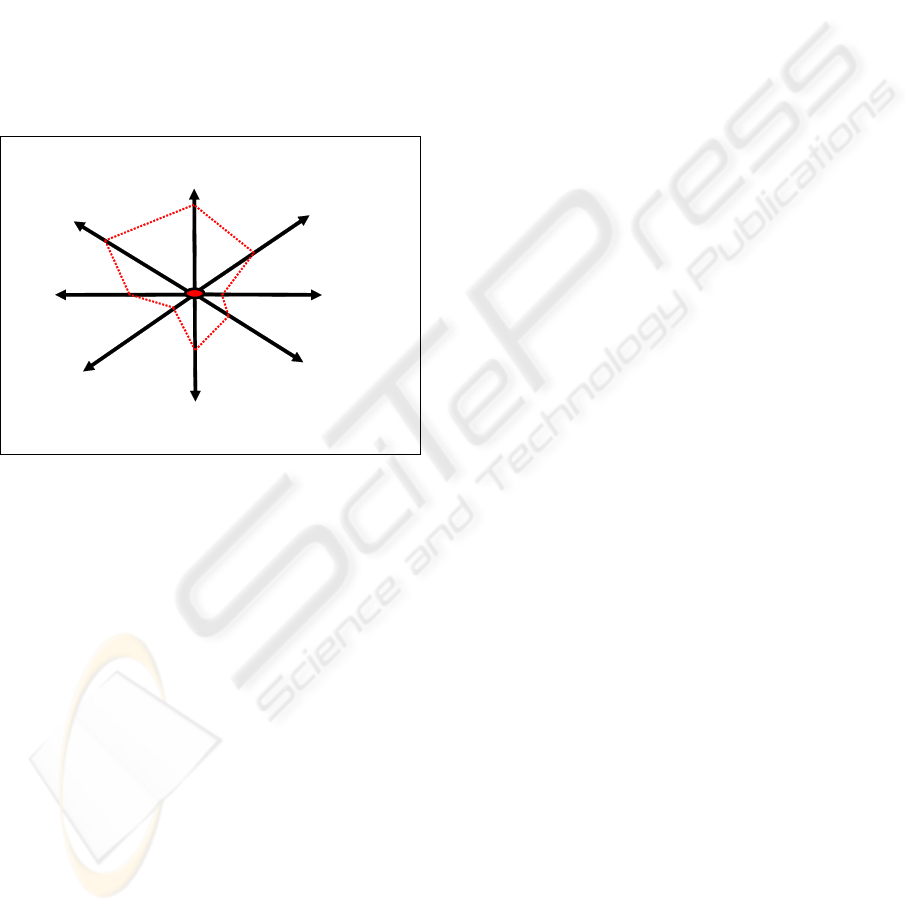

Figure 3: The ‘radar’ developed to characterize a project

along 8 dimensions.

The first idea (or theory) in the tailoring project was to find a number of characteristics of different projects, and then use these characteristics to identify a subset of methods from the company methodology. We analyzed the notes from the interview study, studied the existing literature, and identified eight dimensions that could be used to characterize a project. We decided to use a 1 to 5 scale to score each dimension. Once a concrete project had been scored, and its project profile thereby identified, we wanted a set of guidelines to help us tailor the company methodology for that specific project. In Figure 3 we show the eight dimensions with an example scoring. We called this project profile the radar.
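A radar profile can be thought of as a small record of eight scores on the 1 to 5 scale. The Python sketch below is our own illustration under stated assumptions, not a tool used in the tailoring project: the names `DIMENSIONS`, `RadarProfile` and `challenging`, and the cut-off of 4 for a ‘challenging’ score, are hypothetical. It only checks that every dimension is scored exactly once on the 1 to 5 scale and lists the dimensions that lie far from the sweet spot.

```python
from dataclasses import dataclass
from typing import Dict, List

# The eight radar dimensions of Figure 3 ("Knowledge about …" shortened here).
DIMENSIONS = (
    "Task",
    "Knowledge about",
    "Individual & background",
    "Environment",
    "Team",
    "Calendar time",
    "Stakeholders",
    "Quality / Criticality",
)


@dataclass
class RadarProfile:
    """Hypothetical record of one project's radar: a 1-5 score per dimension.

    1 marks the development-friendly 'sweet spot'; 5 the most challenging case.
    """

    scores: Dict[str, int]

    def __post_init__(self) -> None:
        # Every dimension must be scored exactly once, and nothing else.
        if set(self.scores) != set(DIMENSIONS):
            raise ValueError("expected exactly one score per radar dimension")
        for name, score in self.scores.items():
            if not 1 <= score <= 5:
                raise ValueError(f"{name}: score {score} is outside the 1-5 scale")

    def challenging(self, threshold: int = 4) -> List[str]:
        """Dimensions scoring at or above `threshold` (an assumed cut-off)."""
        return [d for d in DIMENSIONS if self.scores[d] >= threshold]


# Usage: a project that is friendly except for Task and Quality / Criticality.
scores = {d: 2 for d in DIMENSIONS}
scores["Task"] = 5
scores["Quality / Criticality"] = 4
print(RadarProfile(scores).challenging())  # ['Task', 'Quality / Criticality']
```

Keeping the dimension order fixed in a tuple means the listed dimensions always come out in the same order as on the radar, which makes profiles easy to compare across projects.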

In the middle of the radar is the ‘sweet spot’, meaning the characteristics that make the project as ‘development-friendly’ as possible. For example, on the dimension called Team, a score of ‘1’ would be given to a project carried out in a small group whose members share the same background, have worked together before, and have a perfect mix of personality types and temperaments. On the other hand, a score of ‘5’ on the Team dimension might be given for a large group with different backgrounds (education and/or experience), an unbalanced mix of personality types and temperaments, and members who do not know each other.

Figure 3 shows a case where the aspects in the lower half, including the Team dimension, were development-friendly, whereas those in the top half, for example difficulties with regard to the task to be performed and quality requirements, would prove much more challenging.

The radar gives a good insight into what kind of IS project one is facing. We were also able to establish specific advice for each of the eight dimensions in the radar. For example, an IS development project scoring 5 on the dimension ‘Individual and background’ might be characterised by:

1. Individuals having minimal or no project experience,

2. Individuals being forced into the project, and therefore possibly feeling ‘punished’ by being assigned to it, and

3. Individuals allocated part time, where the other projects they are allocated to have higher priority.

Thus a score of 5 on this dimension constitutes a very challenging situation for the project manager. Nevertheless, we were able to find mitigating remedies to recommend to the team. For example, based on McConnell (1996), we might advise development project managers to:

1. Aim at uninterrupted days or periods for the people working on the project. For example, they could agree that a 20% allocation takes the form of a full day every Tuesday, instead of an hour or so here and there.

2. Write things down: make a kind of contract with the individual.

3. Try to identify and focus on the motivation of the individual.

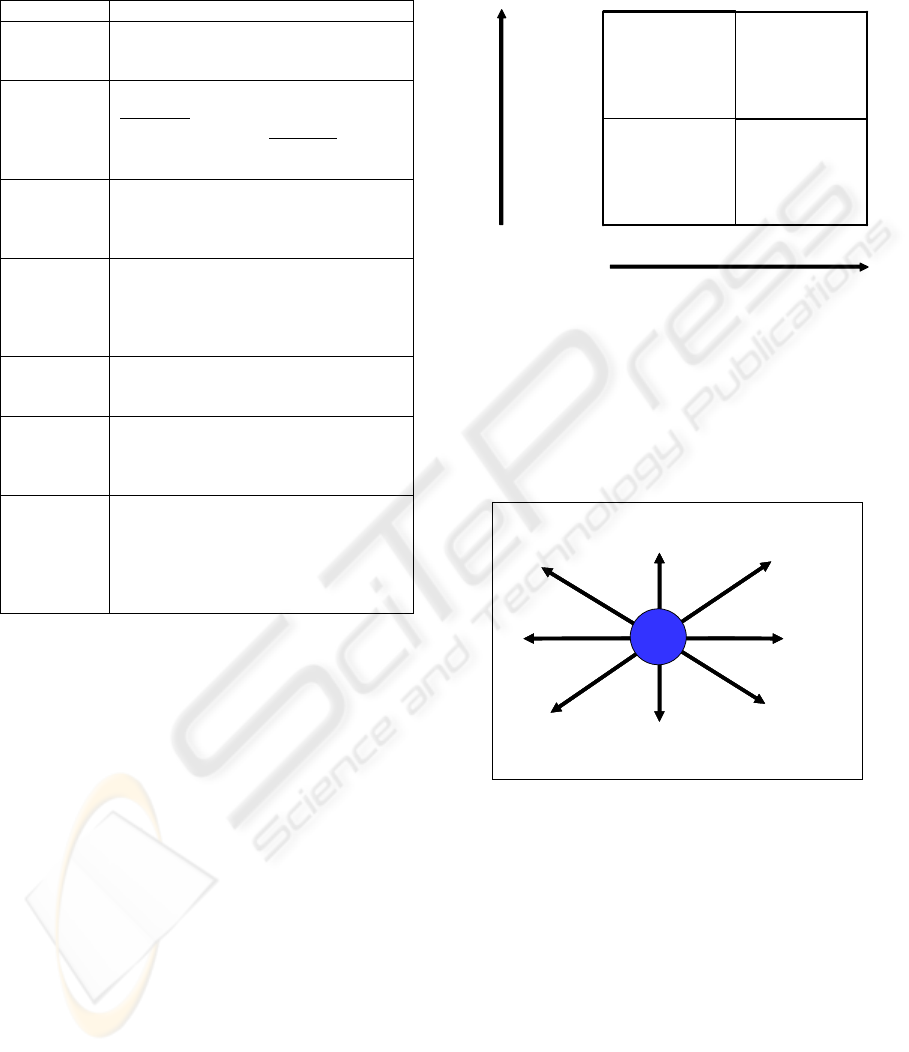

In Figure 4 we have shown what is required to score ‘5’ in the seven other dimensions.

However, in practice it was very difficult for us to establish the causal relationship between the ‘radar picture’ and recommendations for both the IS management process and the IS development process. In the literature we could find simple causal relationships, like ‘IF you have sparse calendar time AND on-time delivery is important THEN use time boxing’ (inspired by McConnell, 1996). However, we could not find complex relationships like ‘IF Team + Task is high AND Knowledge about is low THEN do this and that’.
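To make the contrast concrete, a simple rule of the first kind can be written down directly once its conditions are made explicit. The sketch below is a hypothetical encoding of the time-boxing rule quoted above; the function name, the reading of ‘sparse calendar time’ as a Calendar time score of 4 or 5, and the boolean flag for on-time delivery are our own assumptions, not definitions taken from McConnell (1996) or from the tailoring project.

```python
def recommend_time_boxing(calendar_time_score: int, on_time_delivery_important: bool) -> bool:
    """Hypothetical encoding of: IF sparse calendar time AND on-time delivery
    is important THEN use time boxing.

    Assumption: 'sparse calendar time' means a Calendar time score of 4 or 5
    on the radar's 1-5 scale.
    """
    if not 1 <= calendar_time_score <= 5:
        raise ValueError("score must be on the 1-5 scale")
    sparse_calendar_time = calendar_time_score >= 4
    return sparse_calendar_time and on_time_delivery_important


print(recommend_time_boxing(5, True))  # True
print(recommend_time_boxing(2, True))  # False
```

A complex rule such as ‘IF Team + Task is high AND Knowledge about is low’ would additionally need agreed thresholds for combinations of dimensions, and it was precisely such combined relationships that we could not find in the literature.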

Figure 4: What characterises scoring ‘5’ in the radar.

Thus we looked for a simpler way to characterize projects. The framework developed by Mathiassen and Stage (1990) has two dimensions, complexity and uncertainty. The degree of complexity represents the amount of relevant information that is available in a given situation. In contrast, the degree of uncertainty represents the availability and reliability of information that is relevant in a given situation. Complexity can be measured on a 2-point scale from simple to complex. Likewise, uncertainty can be measured on a 2-point scale from stable to dynamic.

Using the resulting 2-by-2 matrix, we succeeded in establishing the relationship between project characteristics and recommendations (Mathiassen and Stage, 1990). We show this in Figure 5.
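The 2-by-2 matrix amounts to a lookup from two coarse judgments to a set of recommendations. The Python sketch below records our reading of the four cells of Figure 5; the placement of the recommendations in the cells is inferred from the figure layout and from the pattern advice given later in this section, and the names `Complexity`, `Uncertainty`, `RECOMMENDATIONS` and `recommend` are illustrative assumptions.

```python
from enum import Enum
from typing import Dict, List, Tuple


class Complexity(Enum):
    SIMPLE = "simple"
    HIGH = "high"


class Uncertainty(Enum):
    STABLE = "stable"
    DYNAMIC = "dynamic"


# Our reading of the four cells of Figure 5 (after Mathiassen and Stage, 1990).
RECOMMENDATIONS: Dict[Tuple[Complexity, Uncertainty], List[str]] = {
    (Complexity.SIMPLE, Uncertainty.STABLE): [
        "Keep it simple",
        "Use traditional waterfall-like approach",
        "Use long-term detailed planning",
    ],
    (Complexity.HIGH, Uncertainty.STABLE): [
        "Create overview and structure first",
        "Split task into small (3-month) deliverables",
        "Prioritize requirements hard",
        "Follow up on plans",
    ],
    (Complexity.SIMPLE, Uncertainty.DYNAMIC): [
        "Make experiments, e.g. prototypes, to reduce uncertainty",
        "Only short-term planning",
    ],
    (Complexity.HIGH, Uncertainty.DYNAMIC): [
        "Combine experimentation with systematic progress planning, execution and follow-up",
    ],
}


def recommend(complexity: Complexity, uncertainty: Uncertainty) -> List[str]:
    """Return the recommendations for one cell of the 2-by-2 matrix."""
    return RECOMMENDATIONS[(complexity, uncertainty)]


for advice in recommend(Complexity.HIGH, Uncertainty.STABLE):
    print("-", advice)
```

Using enums for the two axes means a misspelled judgment fails immediately instead of silently falling outside the matrix.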

Now we had eight dimensions that the literature and practice in Danske Bank told us were important. We also had the simple 2-by-2 matrix with recommendations (Figure 5), which looks closely at the task dimension. We then examined our data carefully and succeeded in identifying four patterns in the scoring of the eight dimensions. We called the four patterns ‘Sun’, ‘Ant’, ‘Amoeba’, and ‘Bomb’.

Figure 5: The simple 2-by-2 matrix we used for tailoring.

The ‘Sun’ is characterised by low scores on all eight dimensions. In this case the project at hand is development-friendly and we may simply use a traditional waterfall approach. We show an example of the Sun in Figure 6.

Task

Knowledge

about …

Individual &

background

Environment

Team

Calendar time

Stakeholders

Quality / Criticality

Task

Knowledge

about …

Individual &

background

Environment

Team

Calendar time

Stakeholders

Quality / Criticality

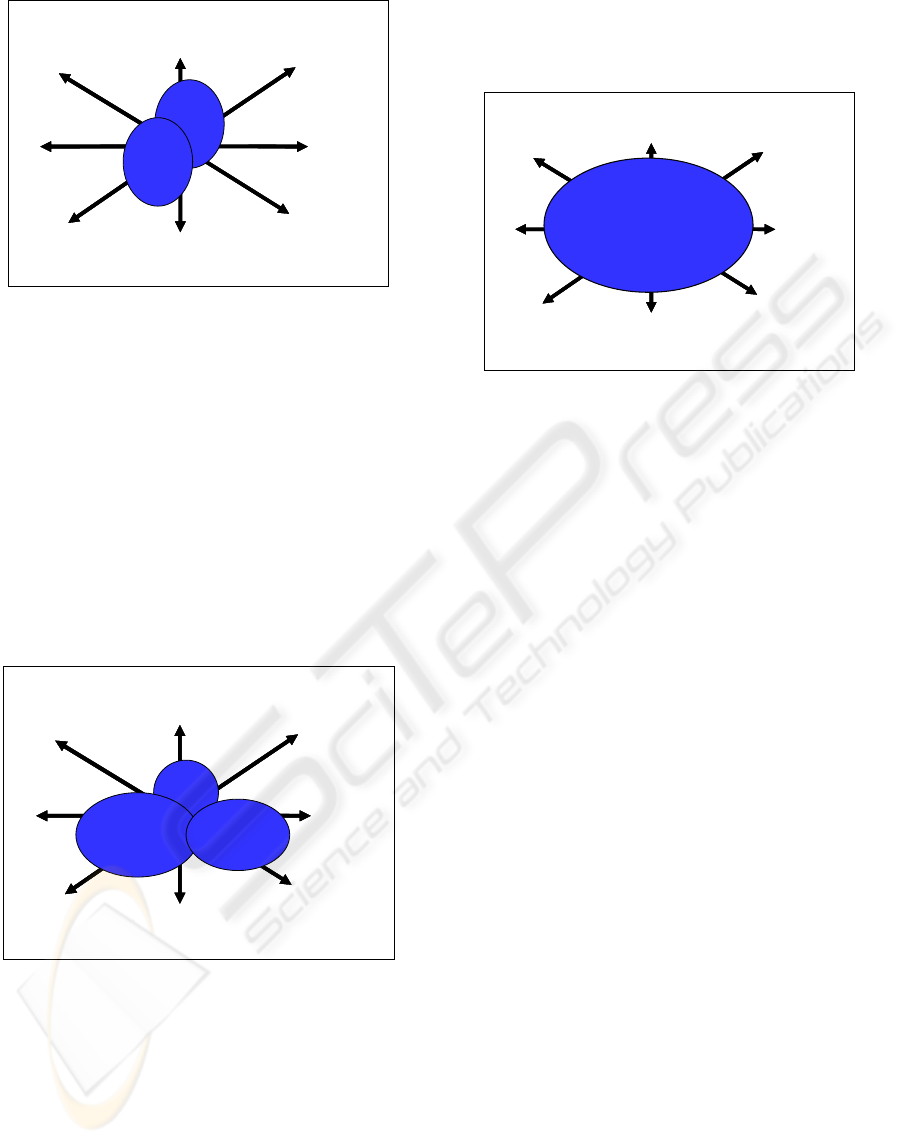

Figure 6: An example of the ‘Sun’ pattern.

The ‘Ant’ is characterised by Task scoring high and one or two other characteristics scoring high as well (but the others scoring low). In this case the recommendation to the project team is to use a phased model: focus first on getting an overview of and structure to the work, and then split the complex task into smaller deliverables, for example one every third month. This splitting can also be made by prioritizing requirements. Figure 7 shows an example of the ‘Ant’ pattern.

Figure 4 (content):

| Dimension | Scoring 5 is characterised by |
|---|---|
| Task | Large task. Unclear goal. Complex = hard to understand or use. |
| Knowledge about … | Limited domain knowledge on the application of the research project. Limited knowledge for developing the project results. Limited technical and tool knowledge. |
| Environment | You are sitting far from each other (different buildings/countries). Impossible to avoid interruptions. Imperfect spatial conditions. |
| Team | Large group, different backgrounds (education and/or experience). Unbalanced mix of personality types and temperaments. Don’t know each other. |
| Calendar time | Short time. Time critical: has to be delivered by a specific date. |
| Stakeholders | Conflict and disagreement. Many stakeholders. Limited attention from management. Long and unclear decision making. |
| Quality and criticality | Critical: defects will threaten life. Very specific process requirements, i.e. things have to be done in a specific way. Target group needs unknown and hard to elicit. Many and difficult product requirements. |

Figure 5 (content): complexity ranges from simple to high, and uncertainty from stable to dynamic.

| Uncertainty \ Complexity | Simple | High |
|---|---|---|
| Stable | Keep it simple. Use traditional waterfall-like approach. Use long-term detailed planning. | Create overview and structure first. Split task into small (3-month) deliverables. Prioritize requirements hard. Follow up on plans. |
| Dynamic | Make experiments, e.g. prototypes, to reduce uncertainty. Only short-term planning. | Combine experimentation with systematic progress planning, execution and follow-up. |


Figure 7: An example of the ‘Ant’ pattern.

The ‘Amoeba’ is the converse of the ‘Ant’, in that Task scores low but at least three other dimensions score high. This creates considerable uncertainty for the development project. The advice on methodology in this case is to use two or three development iterations to reduce uncertainty, and to do this as early as possible. This can be achieved by making usability prototypes, hole-through testing, and scenarios to illuminate problems. We also advise against promising an end date for the whole project. It is best to make only short-term detailed plans, one to two months ahead. In Figure 8 we show an example of an ‘Amoeba’.

Figure 8: An example of the ‘Amoeba’ pattern.

Finally, the ‘Bomb’ (as the name suggests) is characterised by high scores on all dimensions. The advice on methodology that we derived here was to use a development model that includes many experiments, preferably risk-driven (for example, the spiral model of Boehm, 1986). We also recommend experimenting early and having many iteration cycles. Further, we suggest starting the project by creating an overview, trying to establish some structure, and planning carefully, while at the same time having many milestones and small deliverables. In Figure 9 we show an example of the ‘Bomb’ pattern.
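The four patterns can be read as rules over a radar profile. The Python sketch below is one possible formalization, not the procedure used at Danske Bank, and it rests on assumptions the descriptions above leave open: a score of 4 or 5 counts as ‘high’, anything lower counts as ‘low’, and profiles that match none of the four descriptions are reported as unclassified (`None`) rather than forced into a pattern. The names `classify_pattern` and `ADVICE` are hypothetical; the advice strings summarise the recommendations given in the text.

```python
from typing import Dict, Optional

# Same eight dimensions as in Figure 3 ("Knowledge about …" shortened here).
DIMENSIONS = (
    "Task", "Knowledge about", "Individual & background", "Environment",
    "Team", "Calendar time", "Stakeholders", "Quality / Criticality",
)

# Methodology advice per pattern, summarised from the text above.
ADVICE = {
    "Sun": "traditional waterfall approach",
    "Ant": "phased model: overview and structure first, then small deliverables",
    "Amoeba": "2-3 early iterations, short-term (1-2 month) plans, no overall end date",
    "Bomb": "risk-driven, experiment-heavy model (e.g. spiral) with many small deliverables",
}


def classify_pattern(scores: Dict[str, int], high_threshold: int = 4) -> Optional[str]:
    """Map a radar profile to 'Sun', 'Ant', 'Amoeba' or 'Bomb'.

    Assumptions (not fixed in the paper): a score >= high_threshold is 'high',
    anything lower counts as 'low'. Returns None if no pattern matches.
    """
    if set(scores) != set(DIMENSIONS):
        raise ValueError("expected exactly one score per radar dimension")
    high = {d for d in DIMENSIONS if scores[d] >= high_threshold}
    task_high = "Task" in high
    others_high = len(high - {"Task"})

    if len(high) == len(DIMENSIONS):
        return "Bomb"    # high on all dimensions
    if not high:
        return "Sun"     # low on all dimensions
    if task_high and 1 <= others_high <= 2:
        return "Ant"     # Task high plus one or two other dimensions
    if not task_high and others_high >= 3:
        return "Amoeba"  # Task low, at least three other dimensions high
    return None          # e.g. only Task high, or Task low with one or two others high


profile = {d: 2 for d in DIMENSIONS}
profile.update({"Task": 5, "Team": 4})
pattern = classify_pattern(profile)
print(pattern, "->", ADVICE[pattern])
```

Returning `None` keeps the gaps between the four descriptions visible; a profile such as Task high with four other dimensions high is arguably better discussed in a workshop than mapped automatically to a methodology.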

Figure 9: An example of the ‘Bomb’ pattern.

4 TESTING THE DESIGN AT DANSKE BANK

The Danske Bank Group is a financial institution that provides all types of financial services, such as banking, mortgaging and insurance, in northern Europe. Danske Bank has 17,000 employees and more than 3 million private customers in Scandinavia. As part of the Danske Bank Group there is an IT department with 1,700 employees located at four development centres.

IS development projects at Danske Bank vary widely in size; most are small and short-term, but there are also some major projects that have strategic implications for the entire Danske Bank Group. Project teams of three to five people typically handle the smaller projects, which usually take from 6 to 12 months. Large projects, such as the Year 2000 compliance project, typically involve up to 150 people and last from 6 months to 3 years.

The four development centres are headed by a senior vice president. Each individual division is led by a vice president and organized into departments, typically with 20 to 50 people divided among five or so projects. Project managers oversee regular projects, while the vice president manages high-profile projects. IS developers in Danske Bank typically have a bachelor’s degree in either an IT-related field or banking, insurance or real estate.

After designing our concept model, we validated it in action research cycles with projects in Danske Bank. In total we conducted three action research cycles.

The first action taking in a project lasted a full day and was carried out as a kind of facilitated workshop (with one of the authors of this paper as facilitator). The action taking was in a project called ‘online signup’, and seven people from the project participated. The project scored high on three dimensions, and we used most of the time to discuss how the team could cope with these three specific dimensions. The evaluation of the first action taking suggested that the eight dimensions we used to characterise a project worked well. However, the learning that we elicited after evaluating was that a number of the detailed questions used to determine the scoring on the dimensions needed further development. In particular, we realized that the ‘Task’ and the ‘Knowledge about …’ dimensions were very weakly developed.

Following further study of the literature, we developed an improved concept (a better radar). For example, we improved the questions for deciding the scoring on the eight dimensions, and we brought in the 2-by-2 model shown as Figure 5 to help us identify the ‘Task’ dimension. Further, we considerably improved the ‘Knowledge about …’ dimension by developing a concept called knowledge maps (Pries-Heje, 2004). As part of this action taking we considered many alternatives other than the ones chosen.

Our second round of action taking took place in a project called ‘new pay-out system’, where four people from the project participated in the action. It took place three months after the first round. With five people working approximately half time on average (5 × 0.5 × 3 months = 7.5), more than 7.5 person-months were invested in one action research cycle.

Our evaluation of the second round of action taking suggested that our improved questions for each of the eight dimensions worked well, but that a workshop aimed at helping the project should take place earlier than was the case here: the analysis phase for the project had more or less finished. For each of the eight dimensions we had come up with recommendations for the project. However, the participants were somewhat confused by the long list of recommendations. Evaluating this observation, we realized that we needed to give some structure to the recommendations. As part of our third round of action planning, we decided to place all the recommended activities that we identified on a timeline as part of the workshop.

The third action taking was done in a project called ‘credit secured by mortgage on real property’. This occurred in phase 2 of the project, and phase 1 had already delivered some results. When evaluating the outcome we found that the eight dimensions, and the recommendations related to scoring high on each dimension, were working well. The uncertainty-complexity dimension also worked well, but it was difficult to explain to the participants why one of the eight dimensions received so much more attention than the seven others. Based on this we specified our learning in the form of the ‘Sun’, ‘Ant’, ‘Amoeba’ and ‘Bomb’ patterns reported earlier in this paper.

After each action taking in the three action research cycles we asked the participants to evaluate their subjective satisfaction. On a scale from 1 to 5, with 5 being the best score, the average was 4.11. So the three project teams felt that the tailoring concept as we have presented it above was valuable and useful.

Further, all three projects followed the outcome of the action taking, in the form of recommended ‘methodology pieces’. Participants were satisfied with the selection that resulted from the workshop.

All three projects were finished and delivered within their scope. Did they go better as a result of our action taking? It is impossible to know. We can never compare reality with a ‘what-if’ reality, other than by asking the participants whether they thought they were better off. In that sense, the evaluation was quite positive.

However, we can also ask whether the action research reported here had a lasting influence on the organization. Here the answer is no: even though the projects were successful and the practitioners satisfied, we could not convince top management in the organization that the tailoring concept was useful. At the same time, Danske Bank implemented an organizational restructuring and the tailoring project was stopped (see Pries-Heje, 2006).

However, as one of the authors was teaching courses for professional IT practitioners (project managers), it was natural to validate the concept further. The first round of validation took place in the spring semester of 2004. These practitioners were divided into groups, and each group had to identify a project in a real setting. They were asked to use the radar and show the resulting patterns. A second and a third validation took place with project managers in the spring semester of 2005 and in 2006. In all projects, the project managers found the eight dimensions and the four patterns valuable for gaining an overview and making informed decisions on how to tailor a methodology for a particular project.


5 CONCLUSIONS

We argue that we have designed an artefact that can be used to reduce a methodology to a particular one-off approach for a particular situation. We have validated the concept in one company, and at the overview level in approximately 20 other companies and projects. However, the artefact needs to be tested in many more situations using action research, both to be really convincing and to refine it further. We believe the concept is ready for further diffusion outside a Danish context and the classroom.

ACKNOWLEDGEMENTS

The authors are grateful to Danske Bank for allowing us to carry out this study. Special thanks go to Helle Svane, Ann-Dorte Fladkjær-Nielsen, Lisbeth Nørgaard, and Lana Westhi, who all participated in the action research cycles at Danske Bank.

REFERENCES

Avison, D. E. and Fitzgerald, G. (2006). Information Systems Development: Methodologies, Techniques and Tools, 4th edition. McGraw-Hill, Maidenhead.

Avison, D. E. and Taylor, V. (1996). Information systems development methodologies: A classification according to problem situations. Journal of Information Technology, 11.

Avison, D. E. and Wood-Harper, A. T. (1990). Multiview: An Exploration in Information Systems Development. McGraw-Hill, Maidenhead.

Boehm, B. (1986). A spiral model of software development and enhancement. ACM SIGSOFT Software Engineering Notes, 11(4), 14-24.

Bødker, K. and Bansler, J. (1993). A reappraisal of structured analysis: Design in an organizational context. ACM Transactions on Information Systems (TOIS), 11(2).

Checkland, P. (1999). Soft systems methodology: A 30-year retrospective. In Systems Thinking, Systems Practice. John Wiley, Chichester.

Cole, R., Purao, S., Rossi, M. and Sein, M. K. (2005). Being proactive: Where action research meets design research. In Proceedings of the 26th International Conference on Information Systems (ICIS).

Fitzgerald, B. (2000). Systems development methodologies: The problem of tenses. Information Technology and People, 13(2), 13-22.

Hevner, A. R., March, S. T., Park, J. and Ram, S. (2004). Design Science in Information Systems Research. MIS Quarterly, 28(1), 75-105.

Highsmith, J. (1999). Adaptive Software Development. Dorset House, New York.

ISWorld (2006). http://www.isworld.org/Researchdesign/drisISworld.htm, accessed November 21, 2006.

Kock, N. (2006). Information Systems Action Research: An Applied View of Emerging Concepts and Methods. Springer, New York.

Mathiassen, L. and Stage, J. (1990). Complexity and uncertainty in software design. In CompEuro '90: Proceedings of the 1990 IEEE International Conference on Computer Systems and Software Engineering, Tel Aviv, May 1990, 482-489.

McConnell, S. (1996). Rapid Development. Microsoft Press, New York.

Papazoglou, M. P. and Georgakopoulos, D. (2003). Service Oriented Computing: Introduction. Communications of the ACM, 46(10), 25-28.

Pries-Heje, J., Tryde, S. and Nielsen, A. F. (2001). Framework for organizational implementation of SPI. Chapter 15 in Mathiassen, L., Pries-Heje, J. and Ngwenyama, O. (eds.), Improving Software Organizations: From Principles to Practice. Addison-Wesley.

Pries-Heje, J. (2004). Managing Knowledge in IT Projects. ICFAI Journal of Knowledge Management, II(4), 49-62.

Pries-Heje, J. (2006). When to use what? Selecting systems development method in a bank. Australian Conference on Information Systems, Adelaide, December 2006.

Susman, G. and Evered, R. (1978). An assessment of the scientific merits of action research. Administrative Science Quarterly, 23(4), 582-603.

Takeda, H., Veerkamp, P., Tomiyama, T. and Yoshikawa, H. (1990). Modeling Design Processes. AI Magazine, Winter, 37-48.

Truex, D., Baskerville, R. and Travis, J. (2000). Amethodical Systems Development: The Deferred Meaning of Systems Development Methods. Accounting, Management and Information Technology, 10, 53-79.

Wastell, D. (1996). The fetish of technique: Methodology as a social defence. Information Systems Journal, 6(1).

Womack, J. P. (1996). Lean Thinking. Rawson, New York.

Wynekoop, J. L. and Russo, N. L. (1997). Studying system development methodologies: An examination of research methods. Information Systems Journal, 7(1), 47-65.
