GESTURE THERAPY

A Low-Cost Vision-Based System for Rehabilitation after Stroke

L. Enrique Sucar (1), Ron S. Leder (2), David Reinkensmeyer (3), Jorge Hernández (4), Gidardo Azcárate (5), Nallely Casteñeda (6) and Pedro Saucedo (7)

(1) Departamento de Computación, INAOE, Tonantzintla, Puebla, México

(2) División de Ingeniería Eléctrica, UNAM, México D.F., México

(3) Department of Mechanical and Aerospace Engineering, UC Irvine, USA

(4) Unidad de Rehabilitación, INNN, Mexico D.F., Mexico

(5) ITESM Campus Cuernavaca, Morelos, México

(6) Unidad de Rehabilitación, INNN, Mexico D.F., Mexico

(7) Universidad Anáhuac del Sur, Mexico D.F., Mexico

Keywords: Rehabilitation, stroke, therapeutic technology.

Abstract: An important goal for rehabilitation engineering is to develop technology that allows individuals with stroke

to practice intensive movement training without the expense of an always-present therapist. We have

developed a low-cost, computer vision system that allows individuals with stroke to practice arm movement

exercises at home or at the clinic, with periodic interactions with a therapist. The system integrates a web-based

system for facilitating repetitive movement training with state-of-the-art computer vision algorithms

that track the hand of a patient and obtain its 3-D coordinates, using two inexpensive cameras and a

conventional personal computer. An initial prototype of the system has been evaluated in a pilot clinical

study with positive results.

1 INTRODUCTION

Each year in the U.S. alone over 600,000 people

survive a stroke (ASA 2004), and similar figures

exist in other countries. Approximately 80% of

acute stroke survivors lose arm and hand movement

skills. Movement impairments after stroke are

typically treated with intensive, hands-on physical

and occupational therapy for several weeks after the

initial injury. Unfortunately, due to economic

pressures on health care providers, stroke patients

are receiving less therapy and going home sooner.

The ensuing home rehabilitation is often self-

directed with little professional or quantitative

feedback. Even as formal therapy declines, a

growing body of evidence suggests that both acute

and chronic stroke survivors can improve movement

ability with intensive, supervised training. Thus, an

important goal for rehabilitation engineering is to

develop technology that allows individuals with

stroke to practice intensive movement training

without the expense of an always-present therapist.

We have developed a prototype of a low-cost,

computer vision system that allows individuals with

stroke to practice arm movement exercises at home

or at the clinic, with periodic interactions with a

therapist. The system makes use of our previous

work on a low-cost, highly accessible, web-based

system for facilitating repetitive movement training,

called “Java Therapy”, which has evolved into T-

WREX (Fig. 1) (Reinkensmeyer 2002 and Sanchez

2006). T-WREX provides simulation activities

relevant to daily life. The initial version of Java

Therapy allowed users to log into a Web site,

perform a customized program of therapeutic

activities using a mouse or a joystick, and receive

Enrique Sucar L., S. Leder R., Reinkensmeyer D., Hernández J., Azcárate G., Casteñeda N. and Saucedo P. (2008).

GESTURE THERAPY - A Low-Cost Vision-Based System for Rehabilitation after Stroke.

In Proceedings of the First International Conference on Health Informatics, pages 107-111.

Copyright © SciTePress

quantitative feedback of their progress. In

preliminary studies of the system, we found that

stroke subjects responded enthusiastically to the

quantitative feedback provided by the system. However,

the use of a standard mouse or joystick as the input

device limited the functional relevance of the

system. We have developed an improved input

device that consists of an instrumented, anti-gravity

orthosis that allows assisted arm movement across a

large workspace. However, this orthosis costs about

$4000 to manufacture, limiting its accessibility.

With computer vision as the input device, the system becomes

much more accessible because it can be implemented

at low cost (i.e., using an inexpensive camera and a

conventional computer).

For “Gesture Therapy” we combine T-WREX

with state-of-the-art computer vision algorithms that

track the hand of a patient and obtain its 3-D

coordinates, using two inexpensive cameras (web

cams) and a conventional personal computer (Fig.

2). The vision algorithms locate and track the hand

of the patient using color and motion information,

and the views obtained from the two cameras are

combined to estimate the position of the hand in 3-D

space. The coordinates of the hand (X, Y, Z) are sent

to T-WREX so that the patient interacts with a

virtual environment by moving his/her impaired

arm, performing different tasks designed to mimic

real life situations and thus oriented for

rehabilitation. In this way we have a low-cost system

which increases the motivation of stroke subjects to

follow their rehabilitation program, and with which

they can continue their arm exercises at home.

Figure 1: Screen shot of T-WREX. Arm and hand

movements act as a mouse pointer to activate an

object in the simulation. In this case a hand interacts with

a basketball. The upper left inset shows the camera views,

frontal and side, of the patient’s hand tracked by the

system.

Figure 2: Set up for the Gesture Therapy system. The

patient is seated in front of a table that serves as a support

for the impaired arm, and its movements are followed by

two cameras. On a monitor, the patient watches the

simulated environment and his/her control of the

simulated actuator.

A prototype of this system has been installed at

the rehabilitation unit at the National Institute of

Neurology and Neurosurgery (INNN) in Mexico

City, and a pilot study was conducted with a patient

diagnosed with ischemic stroke and left hemiparesis,

4 years after onset. After 6 sessions

with Gesture Therapy, the results based on the

therapist and patient opinions are positive, although

a more extensive controlled clinical trial is required

to evaluate the impact of the system in stroke

rehabilitation. In this paper we describe the Gesture

Therapy system and present the results of the pilot

clinical study.

2 METHODOLOGY

Gesture Therapy integrates a simulated environment

for rehabilitation (Java Therapy) with gesture

tracking software in a low-cost system for

rehabilitation after stroke. Next we describe each of

these components.

Figure 3: Reference pattern used for obtaining the intrinsic

parameters of each camera (camera 1 and 2).

HEALTHINF 2008 - International Conference on Health Informatics

2.1 Java Therapy/T-WREX

The Java Therapy/T-WREX web-based user

interface has three key elements: therapy activities

that guide movement exercise and measure

movement recovery, progress charts that inform

users of their rehabilitation progress, and a therapist

page that allows rehabilitation programs to be

prescribed and monitored.

The therapy activities are presented in the

software as game-like simulations, and the system

configuration allows therapists to customize the

software to enhance the therapeutic benefits for each

patient by selecting specific therapy activities

from those available in the system.

The therapy activities were designed to be

intuitive to understand, even for patients with minimal cognitive or

perceptual problems. These activities

provide repetitive, task-specific practice of daily tasks and

were selected for their functional relevance and

inherent motivation, such as grocery shopping, car

driving, playing basketball, and self-feeding.

Additionally, the system gives objective visual

feedback on the patient's task performance, and the

therapist can easily illustrate the patient's progress with

a simple statistical chart. The visual feedback

enhances motivation and endurance throughout

the rehabilitation process by making patients aware of

their progress.

2.2 Gesture Tracking

Using two cameras (stereo system) and a computer,

the hand of the user is detected and tracked in a

sequence of images to obtain its 3-D coordinates in

each frame, which are sent to the T-WREX

environment. This process involves several stages:

Calibration,

Segmentation,

Tracking,

3-D reconstruction.

Next we describe each stage.

2.2.1 Calibration

To have a precise estimation of the 3-D position in

space of the hand, the camera system has to be

calibrated. Calibration consists of obtaining the

intrinsic (focal length, pixel size) and extrinsic

(position and orientation) parameters of the cameras.

The intrinsic parameters are obtained via a reference

pattern (checkerboard) that is placed in front of each

camera, as shown in figure 3.

The extrinsic parameters are obtained by giving

the system the position and orientation of each

camera in space with respect to a reference point,

see figure 2. The reference point could be the lens of

one of the cameras, or an external point such as a

corner of the table. The colors on the checkerboard

pattern and the status bar shown in figure 3

indicate the progress of the calibration process.

Note that the calibration procedure is done only

once and stored in the system, so in subsequent

sessions this procedure does not need to be repeated,

unless the cameras are moved or replaced with other

models.
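
To illustrate how the intrinsic and extrinsic parameters are used once they are known, the following minimal sketch projects a 3-D point into an image with the standard pinhole camera model. It is not the calibration code of the system: the function names, the lens and pixel values, and the choice of the camera lens as reference point are assumptions made only for this example.

```python
import numpy as np

def intrinsic_matrix(focal_mm, pixel_mm, cx, cy):
    """Build the intrinsic matrix K from focal length and (square) pixel size.

    (cx, cy) is the principal point in pixels (assumed here to be the image centre).
    """
    f_px = focal_mm / pixel_mm  # focal length expressed in pixels
    return np.array([[f_px, 0.0, cx],
                     [0.0, f_px, cy],
                     [0.0, 0.0, 1.0]])

def project(K, R, t, point_world):
    """Project a 3-D point given in the reference frame to pixel coordinates."""
    # Extrinsic parameters (R, t) move the point into the camera frame.
    p_cam = R @ np.asarray(point_world, dtype=float) + t
    # Intrinsic parameters map the camera-frame point to the image plane.
    u, v, w = K @ p_cam
    return np.array([u / w, v / w])

# Hypothetical web cam: 4 mm lens, 0.005 mm pixels, 640x480 image.
K = intrinsic_matrix(4.0, 0.005, 320.0, 240.0)
R = np.eye(3)      # camera axes aligned with the reference frame
t = np.zeros(3)    # the camera lens is taken as the reference point
pixel = project(K, R, t, [0.1, 0.05, 1.0])  # point 1 m in front of the camera
print(pixel)
```

Calibration solves the inverse problem: from images of the checkerboard, whose geometry is known, it estimates K, and the extrinsic parameters R and t are then supplied relative to the chosen reference point.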

2.2.2 Segmentation

The hand of the patient is localized and segmented

in the initial image by combining color and motion

information. Skin color is a good cue for locating

candidate regions that may contain a person's hand or

face. We trained a Bayesian classifier with

thousands of samples of skin pixels in HSV (hue,

saturation, value) color space, which is used to detect skin pixels

in the image. Additionally, we use motion

information based on image subtraction to detect

moving objects in the images, assuming that the

patient will be moving his/her impaired arm. Regions

that satisfy both criteria, skin color and motion, are

extracted by an intersection operation, and the resulting

region is taken to correspond to the hand of the person. This

region is used as the initial position of the hand

for tracking in the image sequence, as described in

the next section. This procedure is applied to both

images, as illustrated in figure 4.
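
The segmentation step described above can be sketched as follows. This is a simplified illustration, not the classifier used in the system: it assumes that hue and saturation have already been quantised into histogram bins, that the skin and non-skin histograms were learned beforehand, and that a fixed threshold is applied to the image difference. All function names, thresholds, and the toy data are hypothetical.

```python
import numpy as np

def skin_posterior(hs_bins, skin_hist, nonskin_hist, prior_skin=0.5):
    """Bayes rule per pixel: P(skin | colour bin) from two normalised histograms."""
    p_c_skin = skin_hist[hs_bins[..., 0], hs_bins[..., 1]]
    p_c_non = nonskin_hist[hs_bins[..., 0], hs_bins[..., 1]]
    num = p_c_skin * prior_skin
    den = num + p_c_non * (1.0 - prior_skin)
    return np.where(den > 0, num / np.maximum(den, 1e-12), 0.0)

def motion_mask(prev_gray, curr_gray, threshold=25):
    """Image subtraction: pixels whose intensity changed by more than threshold."""
    diff = np.abs(curr_gray.astype(np.int16) - prev_gray.astype(np.int16))
    return diff > threshold

def hand_region(skin_prob, moving, skin_threshold=0.5):
    """Intersect the skin and motion masks; return the mask and its bounding box."""
    mask = (skin_prob > skin_threshold) & moving
    if not mask.any():
        return mask, None
    rows, cols = np.nonzero(mask)
    box = (int(rows.min()), int(cols.min()), int(rows.max()), int(cols.max()))
    return mask, box

# Toy 6x6 scene with 4x4 (hue, saturation) bins; bin (1, 1) is "skin-like".
skin_hist = np.zeros((4, 4))
skin_hist[1, 1], skin_hist[0, 0] = 0.9, 0.1
nonskin_hist = np.zeros((4, 4))
nonskin_hist[0, 0], nonskin_hist[1, 1] = 0.9, 0.1

hs_bins = np.zeros((6, 6, 2), dtype=int)
hs_bins[2:4, 1:3] = 1       # the hand: skin-coloured
hs_bins[0, 5] = 1           # a static skin-coloured object (e.g., wood)
prev = np.zeros((6, 6), dtype=np.uint8)
curr = prev.copy()
curr[2:4, 1:3] = 100        # the hand moved
curr[5, 0] = 100            # a moving object that is not skin-coloured

mask, box = hand_region(skin_posterior(hs_bins, skin_hist, nonskin_hist),
                        motion_mask(prev, curr))
print(box)
```

In the toy scene only the hand pixels pass both tests: the static skin-coloured object is rejected by the motion mask, and the moving object is rejected by the colour classifier.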

Figure 4: Hand detection and segmentation in both

images. The approximate hand region is shown as a rectangle,

whose center point is highlighted; this point is used later for

finding the 3-D coordinates.

The system can be confused by objects that

have a color similar to human skin (e.g., wood), so we

assume that no such objects are in view. To this end, it is

recommended that the patient wear long sleeves and

that the table and back wall be covered with a uniform cloth

in a distinctive color (such as black or blue). It is also

recommended that the system be used indoors with

white artificial lighting. Under these conditions

the system can localize and track the hand quite

robustly in real time.

2.2.3 Tracking

Hand tracking is based on the Camshift algorithm

(Bradski, 1998). This algorithm uses only color

information to track an object in an image sequence.

Based on an initial object window, obtained in the

previous stage, Camshift builds a color histogram of

the object of interest, in this case the hand. Using a

search window (defined heuristically according to the

size of the initial hand region) and the histogram,

Camshift computes, for each pixel in the

search region, the probability that it belongs to the object; the center

of the region is the “mean” of this distribution. The

distribution is updated in each image, so the

algorithm can tolerate small variations in illumination

conditions.

In this way, the 2-D position of the hand in

each image in the video sequence is obtained, which

corresponds to the center point of the color

distribution obtained with Camshift. The 3-D

coordinates are obtained by combining both views,

as described in the next section.
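
The core of this tracking step can be sketched with a plain mean-shift iteration over the per-pixel probability image. The sketch is a simplification made for illustration: it keeps the window size fixed, whereas Camshift also adapts the window size, and it works on colour-bin indices rather than on a real HSV image. The function names and the toy data are hypothetical.

```python
import numpy as np

def back_project(bins, hist):
    """Look up each pixel's colour bin in the object histogram (scaled to [0, 1])."""
    return hist[bins] / hist.max()

def mean_shift(prob, window, max_iter=20):
    """Move window (row, col, height, width) to the centroid of prob until it stops."""
    r, c, h, w = window
    for _ in range(max_iter):
        patch = prob[r:r + h, c:c + w]
        total = patch.sum()
        if total == 0:
            break  # nothing object-like inside the window
        rows, cols = np.mgrid[r:r + patch.shape[0], c:c + patch.shape[1]]
        cr = (rows * patch).sum() / total   # "mean" of the distribution (row)
        cc = (cols * patch).sum() / total   # "mean" of the distribution (column)
        new_r = int(round(cr - (h - 1) / 2))
        new_c = int(round(cc - (w - 1) / 2))
        new_r = min(max(new_r, 0), prob.shape[0] - h)  # keep window inside image
        new_c = min(max(new_c, 0), prob.shape[1] - w)
        if (new_r, new_c) == (r, c):
            break  # converged
        r, c = new_r, new_c
    center = (r + (h - 1) / 2, c + (w - 1) / 2)
    return (r, c, h, w), center

# Toy frame: colour bin 1 marks the hand, bin 0 the background.
bins = np.zeros((20, 20), dtype=int)
bins[10:14, 12:16] = 1
hist = np.array([0.0, 8.0])          # object histogram built from the hand region
prob = back_project(bins, hist)

# Start from the previous frame's window, which only partly overlaps the hand.
window, center = mean_shift(prob, (7, 9, 4, 4))
print(window, center)
```

Starting from a window that only partly overlaps the hand, the iteration moves the window over the hand region in a few steps, and the window center gives the 2-D position used for reconstruction.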

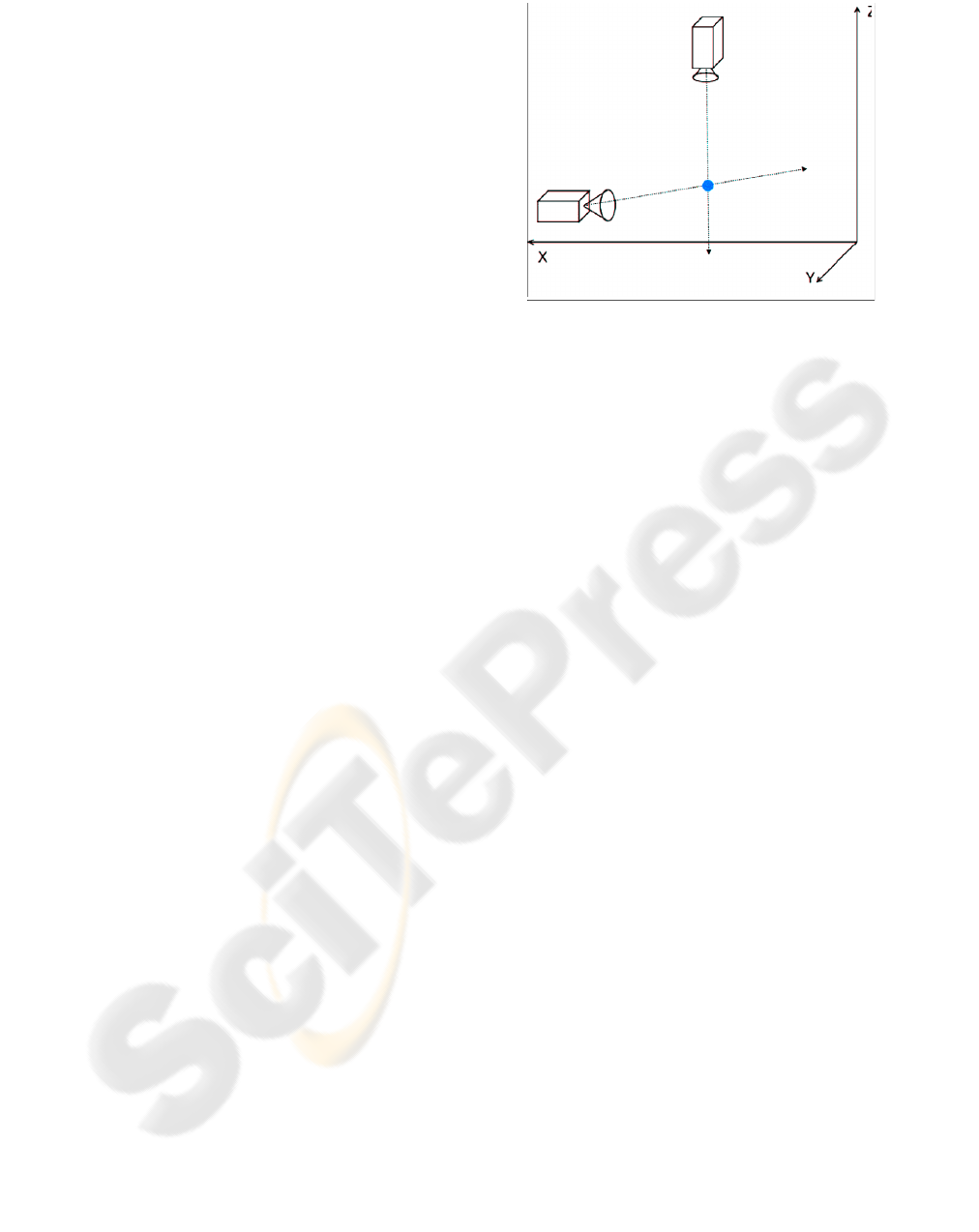

2.2.4 3-D Reconstruction

Based on the 2-D coordinates of the center point of

the image region in each image, the 3-D coordinates

are obtained in the following way. For each image, a

line in 3-D space is constructed by connecting the

center of the hand region and the center of the

camera lens, based on the camera parameters. This is

depicted in figure 5. Once the two projection lines

are obtained, their intersection provides the

coordinates in 3-D (X, Y, Z).
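
With noisy image measurements the two projection lines generally do not meet exactly, so a common substitute for the exact intersection is the midpoint of the shortest segment joining them. The sketch below uses that substitute; it is an assumption of this example and not necessarily the computation used in the system. The function name, the camera positions, and the hand position are hypothetical.

```python
import numpy as np

def triangulate_midpoint(c1, d1, c2, d2):
    """Return the point midway between the closest points of two 3-D lines.

    Each line is c + s * d, with c the camera lens centre and d the direction
    through the hand centre in that view. Also returns the gap between the lines.
    """
    c1, d1, c2, d2 = (np.asarray(v, dtype=float) for v in (c1, d1, c2, d2))
    # Normal equations for minimising |(c1 + s*d1) - (c2 + t*d2)|^2 over s, t.
    a = np.array([[d1 @ d1, -(d1 @ d2)],
                  [d1 @ d2, -(d2 @ d2)]])
    b = np.array([(c2 - c1) @ d1, (c2 - c1) @ d2])
    s, t = np.linalg.solve(a, b)  # raises LinAlgError if the lines are parallel
    p1 = c1 + s * d1
    p2 = c2 + t * d2
    return (p1 + p2) / 2.0, float(np.linalg.norm(p1 - p2))

# Hypothetical set-up: frontal camera at the origin, side camera 1 m to the right.
hand = np.array([0.2, 0.1, 0.5])
c_front = np.array([0.0, 0.0, 0.0])
c_side = np.array([1.0, 0.0, 0.5])
point, gap = triangulate_midpoint(c_front, hand - c_front, c_side, hand - c_side)
print(point, gap)
```

When the two lines do intersect, as in this noise-free example, the midpoint coincides with the intersection and the gap is zero; a large gap can serve as a warning that the two views are not tracking the same point.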

Thus, we obtain the 3-D position of the hand for

each processed image pair (about 15 frames per

second on a standard PC), which is sent to T-WREX

so that the patient can interact with the

virtual environments.

3 PILOT STUDY

We performed a pilot study with one patient using

"Gesture Therapy" at the National Institute for

Neurology and Neurosurgery (INNN) in Mexico

City. The purpose of this pilot study was to improve

the protocol for a larger clinical trial with Gesture

Therapy, anticipating potential problems and gaining

experience using the technology in the hospital

setting.

Figure 5: Estimation of the 3-D position of the hand by

intersecting the projection lines obtained from the images.

The patient was diagnosed with ischemic stroke and

left hemiparesis, 4 years after onset.

An evaluation with the Fugl-Meyer (Fugl-Meyer,

1975) scale was performed at the start and end of the

study.

The patient used Gesture Therapy for 6 sessions

of 20 to 45 minutes each. The main

objective of the exercises was the control of the

distal portion of the upper extremity and hand. The

patient performed preparatory exercises for stretching,

relaxation, and contraction of the fingers and wrist

flexors and extensors. The patient performed several

of the simulated exercises in the virtual

environment, increasing in difficulty as the sessions

progressed (clean stove, clean windows, basketball,

paint room, car race).

After the 6 sessions the patient increased his

capacity to voluntarily extend and flex the wrist

through relaxation of the extensor muscles. He also

tried to do bimanual activities (such as taking and

throwing a basketball) even though he kept the

affected left hand closed, and he increased his use of the

affected extremity to close doors.

In the therapist’s opinion: “The GT system

favours the movement of the upper extremity by the

patient. It makes the patient maintain the control of

his extremity even if he does not perceive it. GT

maintains the motivation of the patient as he tries to

perform the activity better each time (more control

in positioning the extremity, more speed to do the

task, more precision). This particular patient gained

some degree of range of movement of his wrist.

There are still many problems with the fingers flexor

synergy, but he feels well and motivated with his

achievements. It is also important to note the

motivation effect the system has on patient

endurance to complete the treatment until the last

day by increasing the enthusiasm of the patient in

executing the variety of rehabilitation exercises.”

In the patient's opinion: “At the beginning I felt

that my arm was too "heavy", and at the shoulder I

felt as if there was something cutting me, now I feel

it less heavy and the cutting sensation has also been

reduced.”

A visual analogue scale in the range 1-10

(very bad, ..., excellent) was used to ask the

patient about the treatment based on GT; he gave it a

10. Asked whether he would like to continue using GT,

he answered “YES”.

The Fugl-Meyer scale (3-point increase) was not

sufficiently sensitive to detect the clear clinical and

subjective improvement in the patient.

4 CONCLUSIONS AND FUTURE WORK

This single case shows the importance of motivation

in rehabilitation. Involving the patient in simulated

daily activities helps the psychological rehabilitation

component as well. The potential ease of use,

motivation-promoting characteristics, and potential for objective

quantitative measurement are clear advantages of this

system. The patient can work independently with

reduced therapist interaction. With current

technology the system can be adapted to a portable

low-cost device for the home including

communications for remote interaction with a

therapist and medical team.

It is possible to extend the system to full arm

tracking, including wrist, hand and fingers, for more

accurate movements. Movement trajectories can be

compared and used to add a new metric of patient

progress. To make the system easier to use, a GUI

tool is planned for configuring system parameters,

including those of the camera. Future work includes more

games to increase the variety of therapy solutions

and adaptability to patient abilities, so that a

therapist or patient can match the amount of

challenge necessary to keep the rehabilitation

advancing.

In the current low-cost, vision-based system the

table top serves as an arm support for 2D movement

until the patients are strong enough to lift their arms

into 3D. Extending the system to wrist, hand, and

finger movement is planned to create a full upper-extremity

rehabilitation system.

Wrist accelerometers can be used to increase the

objectivity of clinical studies, in addition to

subjective reports of patients and caregivers,

especially when the patient spends less time in the

clinic (Uswatte 2006). fMRI studies of patients’ brains, pre

and post training, are planned to increase our

understanding of the biological basis for

rehabilitation (Johansen-Berg 2002).

ACKNOWLEDGEMENTS

This work was supported in part by a grant from

UC-MEXUS/CONACYT.

REFERENCES

American Stroke Association, 2004. Retrieved July 10,

2007 from http://www.strokeassociation.org.

G.R. Bradski, 1998. Computer vision face tracking as a

component of a perceptual user interface. In Workshop

on Applications of Computer Vision, pp. 214-219.

A. R. Fugl-Meyer, L. Jaasko, I. Leyman, S. Olsson, and S.

Steglind, 1975. The post-stroke hemiplegic patient: a

method for evaluation of physical performance. In

Scand J Rehabil Med, vol. 7, pp. 13-31.

D. Reinkensmeyer, C. Pang, J. Nessler, and C. Painter,

2002. Web-based telerehabilitation for the upper-

extremity after stroke. In IEEE Transactions on

Neural Systems and Rehabilitation Engineering, vol.

10, pp. 1-7.

R. J. Sanchez, J. Liu, S. Rao, P. Shah, R. Smith, T.

Rahman, S. C. Cramer, J. E. Bobrow, and D.

Reinkensmeyer, 2006. Automating arm movement

training following severe stroke: Functional exercise

with quantitative feedback in a gravity-reduced

environment. In IEEE Trans. Neural Syst. Rehabil.

Eng., vol. 14(3), pp. 378-389.

D. Reinkensmeyer, S. Housman, Vu Le, T. Rahman and R. Sanchez, 2007.

Arm-Training with T-WREX After Chronic Stroke:

Preliminary Results of a Randomized Controlled Trial.

In ICORR 2007, 10th International Conference on

Rehabilitation Robotics, Noordwijk.

D. Reinkensmeyer and S. Housman, 2007. If I can’t do it

once, why do it a hundred times?: Connecting volition

to movement success in a virtual environment

motivates people to exercise the arm after stroke. In

IWVR 2007.

G. Uswatte, C. Giuliani, C. Winstein, A. Zeringue, L.

Hobbs, SL Wolf, 2006. Validity of accelerometry for

monitoring real-world arm activity in patients with

subacute stroke: evidence from the extremity

constraint-induced therapy evaluation trial. Arch Med

Rehabil: 86:1340-1345.

H. Johansen-Berg, H. Dawes, C. Guy, S.M. Smith, D.T.

Wade and P.M. Matthews, 2002. Correlation between

motor improvements and altered fMRI activity after

rehabilitative therapy. Brain Journal: 125, 2731-2742.
